1. Overview

Installed version: 14.4.210816098 Premium

Deployment is simple, and there is no activation to worry about.

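2. Deployment

Install Docker first. The commands differ between operating systems, so follow the instructions for yours.

Pull the image:

docker pull secfa/docker-awvs

Start the container. The -p option publishes the container's port 3443 on host port 13443:

docker run -it -d -p 13443:3443 secfa/docker-awvs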
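The web interface may not accept connections the moment the container starts. As a minimal sketch (the function name wait_for_port and its defaults are illustrative, not part of the image or of Docker), the following waits until the published port answers at the TCP level:

```python
import socket
import time


def wait_for_port(host, port, timeout=60.0, interval=2.0):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError while nothing is listening yet
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, wait_for_port("127.0.0.1", 13443) checks the port published above, assuming the script runs on the Docker host itself. A successful TCP connection only shows that something is listening; it does not prove the application has finished starting.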

3. Usage

Open https://YOUR_IP:13443/ in a browser.

Username: admin@admin.com

Password: Admin123
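The same address can be checked from Python before logging in. This is a sketch under one assumption: that the container serves a certificate the client cannot verify, which is what the verify=False calls in the batch script later in this article suggest. The helper names build_ui_check and ui_is_up are hypothetical.

```python
import ssl
import urllib.request


def build_ui_check(host, port=13443):
    """Return the UI URL and an SSL context that skips certificate checks."""
    url = f"https://{host}:{port}/"
    ctx = ssl.create_default_context()
    # Assumption: the certificate is not trusted by the client, so
    # verification is turned off. Do this only for hosts you control.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    return url, ctx


def ui_is_up(host, port=13443, timeout=10):
    """Return True if the login page answers with HTTP 200."""
    url, ctx = build_ui_check(host, port)
    try:
        with urllib.request.urlopen(url, timeout=timeout, context=ctx) as resp:
            return resp.status == 200
    except OSError:
        # URLError, HTTPError and ssl.SSLError are all OSError subclasses
        return False
```

Call ui_is_up("YOUR_IP") with the real address in place of YOUR_IP. Disabling verification is acceptable for a local test instance but removes protection against interception on an untrusted network.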

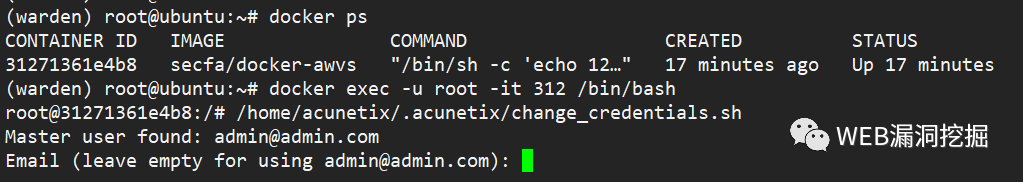

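4. Changing the password

Open a root shell in the container (replace $docker_names with the container's name or ID):

docker exec -u root -it $docker_names /bin/bash

Run the credentials script and enter the new password:

/home/acunetix/.acunetix/change_credentials.sh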

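5. Batch scanning

Here is a batch-scanning script. I pull all the test subdomains from a database and cap the number of concurrent scan tasks myself: AWVS seems to default to 20 tasks, and running that many at once is too much for my machine. The script is simple, so adapt it to your needs.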
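The throttling idea in this paragraph (do not start a new scan while too many are already running) can be isolated from the HTTP and database calls. The following is a minimal sketch, separate from the author's script: run_batch and its parameters are hypothetical names, and get_running and start_scan stand in for whatever functions query the running count and start a scan.

```python
import time


def run_batch(targets, get_running, start_scan, max_running=3,
              poll_interval=15, sleep=time.sleep):
    """Start one scan per target, never starting one while
    max_running or more scans are already running."""
    started = []
    for target in targets:
        # Wait until a slot is free
        while get_running() >= max_running:
            sleep(poll_interval)
        start_scan(target)
        started.append(target)
        # Pause so the server has time to count the new scan
        sleep(poll_interval)
    return started
```

Passing sleep as a parameter keeps the loop testable without real delays. The loop ends once every target has been submitted, so it cannot run past the end of the list.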

import json
import time

import pymysql
import requests
import urllib3
from loguru import logger

# Suppress urllib3's warnings about unverified HTTPS requests (verify=False below)
urllib3.disable_warnings()

AWVS_HOST = 'https://127.0.0.1:13443/'
# Replace with your own AWVS API key
AWVS_API_KEY = '1986ad8c0a5b3df4d7028d5f3c06e936c3765f2f4a2f54908a90f20fa01c9e3cc'
AWVS_API_HEADER = {"X-Auth": AWVS_API_KEY, "content-type": "application/json"}

# Scan profile IDs, used as profile_id when creating a scan
awvs_scan_rule = {
    "full": "11111111-1111-1111-1111-111111111111",
    "highrisk": "11111111-1111-1111-1111-111111111112",
    "XSS": "11111111-1111-1111-1111-111111111116",
    "SQL": "11111111-1111-1111-1111-111111111113",
    "Weakpass": "11111111-1111-1111-1111-111111111115",
    "crawlonly": "11111111-1111-1111-1111-111111111117",
}

logger.info('start')

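def start(subdomain):
    """Add subdomain as a target and start scanning it."""
    target_id = add_task(subdomain)
    if target_id is None:
        # Adding the target failed, so there is nothing to scan
        return
    scan_task(subdomain, target_id)
    # result = get_scan_info(target_id)
    # get_reports(result, subdomain)
    # print(subdomain)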

def add_task(url):
    """Register url as a scan target and return its target_id (None on failure)."""
    data = {"address": url, "description": url, "criticality": "10"}
    try:
        response = requests.post(AWVS_HOST + "api/v1/targets", data=json.dumps(data),
                                 headers=AWVS_API_HEADER, timeout=30, verify=False)
        result = json.loads(response.content.decode('utf-8'))
        # logger.info(url + ' added')
        return result['target_id']
    except Exception as e:
        logger.critical('* %s' % e)
        return None

def scan_task(url, target_id):
    """Start a scan of target_id using the 'highrisk' profile."""
    data = {'target_id': target_id, 'profile_id': awvs_scan_rule['highrisk'],
            'schedule': {'disable': False, 'start_date': None, 'time_sensitive': False}}
    try:
        response = requests.post(url=AWVS_HOST + 'api/v1/scans', timeout=10, verify=False,
                                 headers=AWVS_API_HEADER, data=json.dumps(data))
        if response.status_code == 201:
            logger.info('%s: scan started' % url)
    except Exception as e:
        logger.critical(e)

def get_scan_info(target_id):
    """Return the scan entry for target_id, or None if not found or on error."""
    try:
        response = requests.get(AWVS_HOST + "api/v1/scans", headers=AWVS_API_HEADER,
                                timeout=30, verify=False)
        results = json.loads(response.content.decode('utf-8'))
        logger.info(results['scans'])
        for result in results['scans']:
            if result['target_id'] == target_id:
                logger.info(target_id)
                return result
    except Exception as e:
        logger.critical('* %s' % e)
    return None

def get_status(result):
    """Return the status of the scan in result, or 'aborted' if the request fails."""
    try:
        logger.info(result)
        scan_id = result['scan_id']
        response = requests.get(AWVS_HOST + "api/v1/scans/" + str(scan_id),
                                headers=AWVS_API_HEADER, timeout=5, verify=False)
        result = json.loads(response.content.decode('utf-8'))
        # "completed" means the scan has finished and a report can be generated
        return result['current_session']['status']
    except Exception as e:
        logger.critical('* %s' % e)
        return 'aborted'

def get_reports(result, subdomain):
    """Poll every 15 seconds until the scan is completed or aborted."""
    while True:
        time.sleep(15)
        res = get_status(result)
        if res == 'completed':
            logger.info(subdomain + ', scan completed')
            break
        if res == 'aborted':
            break

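def get_scans_running_count():
    """Return the number of running scans, or 5 if the request fails."""
    try:
        # AWVS_HOST already ends with '/', so the path must not start with one
        response = requests.get(AWVS_HOST + "api/v1/me/stats", headers=AWVS_API_HEADER,
                                timeout=30, verify=False)
        results = json.loads(response.content.decode('utf-8'))
        count = results['scans_running_count']
        # logger.info('Scans currently running: ' + str(count))
        return count
    except Exception as e:
        logger.critical('* %s' % e)
        # 5 is above the limit used below, so no new scan starts after an error
        return 5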

sql = "select subdomain, waf, awvs_scanned from webapps_webapp where status_code=200 order by id desc"
subdomains = []
try:
    # Fill in host, password and database. 3306 is MySQL's default port;
    # change it if your server listens elsewhere.
    db = pymysql.connect(host="", port=3306, user="root", password="", database="")
    cursor = db.cursor()
    cursor.execute(sql)
    results = cursor.fetchall()
    for row in results:
        if row[1]:
            # Skip rows with a waf value set
            continue
        if row[2] == 'yes':
            # Skip subdomains already marked as scanned by AWVS
            continue
        subdomain = row[0]
        subdomains.append(subdomain)
    db.close()
except Exception as e:
    logger.critical(e)

jobs = len(subdomains)
i = 0
# Start a new scan only while fewer than 3 are running,
# and stop once every subdomain has been submitted
while i < jobs:
    count = get_scans_running_count()
    if count < 3:
        subdomain = subdomains[i]
        start(subdomain)
        i += 1
    time.sleep(15)
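The get_reports loop in the script stops only when the status is completed or aborted, so any other final status would keep it polling indefinitely. A hedged variant with a deadline is sketched below. wait_for_scan and its parameters are hypothetical names, and get_status here is any zero-argument callable that returns the current status string.

```python
import time


def wait_for_scan(get_status, terminal=("completed", "aborted"),
                  timeout=3600, interval=15,
                  sleep=time.sleep, clock=time.monotonic):
    """Poll get_status() until it returns a terminal status or timeout seconds pass.

    Returns the terminal status, or "timeout" if the deadline passes first.
    """
    deadline = clock() + timeout
    while True:
        status = get_status()
        if status in terminal:
            return status
        if clock() >= deadline:
            return "timeout"
        sleep(interval)
```

With the script's own function this could be called as wait_for_scan(lambda: get_status(result)). The one-hour default timeout is an arbitrary assumption and should be tuned to how long scans take in practice.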

This article is reposted from WEB漏洞挖掘.
