Kubernetes has three main container interfaces: CRI (Container Runtime Interface), CNI (Container Network Interface), and CSI (Container Storage Interface).

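CNI (Container Network Interface) is a CNCF project made up of a specification and libraries for writing plugins that configure network interfaces in Linux containers. CNI is concerned only with container network connectivity and with releasing the allocated network resources when a container is deleted.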

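Any caller that follows the CNI specification can obtain from a CNI plugin the network parameters a container needs for connectivity (IP address, gateway, routes, DNS, and so on) and use them to configure the container instance.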
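To make that calling convention concrete, here is a toy plugin in shell. Under the CNI spec the runtime passes the operation in the `CNI_COMMAND` environment variable (alongside others such as `CNI_CONTAINERID`, `CNI_NETNS` and `CNI_IFNAME`), sends the network configuration as JSON on stdin, and reads the result JSON from stdout. This sketch is hypothetical and configures nothing: `toy_cni_plugin` and every address in it are made up, and it only returns fixed parameters in the 0.4.0 result format to show what a caller gets back.

```shell
#!/bin/sh
# Hypothetical toy CNI plugin: follows the calling convention (operation in
# CNI_COMMAND, network config JSON on stdin, result JSON on stdout) but creates
# no interface; it just hands back fixed, made-up network parameters.
toy_cni_plugin() {
  config=$(cat)   # network configuration sent by the runtime; ignored by this toy
  : "$config"
  case "$CNI_COMMAND" in
    ADD)
      # Result: IP address, gateway, routes and DNS for the container.
      printf '%s\n' '{"cniVersion": "0.4.0",' \
        ' "ips": [{"version": "4", "address": "10.22.0.5/16", "gateway": "10.22.0.1"}],' \
        ' "routes": [{"dst": "0.0.0.0/0"}],' \
        ' "dns": {"nameservers": ["10.96.0.10"]}}'
      ;;
    DEL|CHECK)
      ;;          # nothing was allocated, so nothing to release or verify
    VERSION)
      echo '{"cniVersion": "0.4.0", "supportedVersions": ["0.3.0", "0.3.1", "0.4.0"]}'
      ;;
    *)
      echo "toy_cni_plugin: unsupported CNI_COMMAND '$CNI_COMMAND'" >&2
      return 1
      ;;
  esac
}
```

A caller would invoke it as `echo '{"cniVersion": "0.4.0", "name": "demo"}' | CNI_COMMAND=ADD toy_cni_plugin`. A real plugin such as Calico's would, in the ADD branch, allocate an address from its IPAM and wire up the container's network namespace before printing the result.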

01

Many CNI plugins support Kubernetes, including Flannel, Calico, Canal, and Weave. By network model, they fall into two groups: encapsulated and unencapsulated.

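An example of the encapsulated model is Virtual Extensible LAN (VXLAN), represented by Flannel, one of the most common choices for Kubernetes (Kubernetes itself does not ship a default CNI plugin).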

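An example of the unencapsulated model is routing with the Border Gateway Protocol (BGP), represented by Calico, another popular networking choice in the Kubernetes ecosystem. Calico can also encapsulate traffic with IP-in-IP; the `tunl0` routes later in this article come from that mode.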

02

The Kubernetes cluster is installed but Calico is not, so every node is still NotReady:

```
[root@kube-master01 ~]# kubectl get nodes -o wide
NAME            STATUS     ROLES    AGE   VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION   CONTAINER-RUNTIME
kube-master01   NotReady   master   26h   v1.18.0   172.31.209.239   <none>        CentOS Linux 8 (Core)   5.4.28           cri-o://1.18.0-dev
kube-worker01   NotReady   node     26h   v1.18.0   172.31.209.240   <none>        CentOS Linux 8 (Core)   5.4.28           cri-o://1.18.0-dev
kube-worker02   NotReady   node     26h   v1.18.0   172.31.209.241   <none>        CentOS Linux 8 (Core)   5.4.28           cri-o://1.18.0-dev
[root@kube-master01 ~]#
```

Calico supports both the Kubernetes API datastore (kdd) and an etcd datastore; this setup uses etcd:

```
curl https://docs.projectcalico.org/manifests/calico-etcd.yaml -o calico-etcd.yaml
```

The pod CIDR defaults to 192.168.0.0/16 and can be changed through the CALICO_IPV4POOL_CIDR environment variable of the calico-node container:

```yaml
- name: CALICO_IPV4POOL_CIDR
  value: "192.168.0.0/16"
```
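To script the CIDR change instead of editing the manifest by hand, a sed call like the one below works, assuming the two lines are present and uncommented exactly as in the excerpt above (if your copy of the manifest ships them commented out, this does not uncomment them). `set_pool_cidr` is a hypothetical helper name and `10.244.0.0/16` is only an example value.

```shell
#!/bin/sh
# Replace the quoted value on the line that follows "- name: CALICO_IPV4POOL_CIDR".
# Usage: set_pool_cidr <manifest> <cidr>; the original is kept as <manifest>.bak.
# '#' is used as the s/// delimiter because the CIDR contains '/'.
set_pool_cidr() {
  sed -i.bak "/name: CALICO_IPV4POOL_CIDR/{n;s#value: \".*\"#value: \"$2\"#;}" "$1"
}
```

Usage: `set_pool_cidr calico-etcd.yaml 10.244.0.0/16`. The `n` command moves to the next line before substituting, so only the `value:` that belongs to CALICO_IPV4POOL_CIDR changes; other `value:` lines in the file are left alone.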

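Configure the etcd connection: the certificates go into the Secret, and the endpoints and certificate paths into the ConfigMap: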

```
[root@kube-master01 ~]# vi calico-etcd.yaml
```

```yaml
# Secret calico-etcd-secrets: each value is the one-line base64 encoding of a file,
# i.e. the output of: cat <file> | base64 | tr -d '\n'
etcd-key: <base64 of the etcd client key>
etcd-cert: <base64 of the etcd client certificate>
etcd-ca: <base64 of the etcd CA certificate>
---
# ConfigMap calico-config
etcd_endpoints: "https://172.19.0.11:2379,https://172.19.0.13:2379,https://172.19.0.2:2379"
etcd_ca: "/calico-secrets/etcd-ca"
etcd_cert: "/calico-secrets/etcd-cert"
etcd_key: "/calico-secrets/etcd-key"
```
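The Secret values above are single-line base64 encodings of the etcd certificate files. A minimal helper for producing and checking them, assuming GNU coreutils `base64` (which wraps long output at 76 columns, hence the `tr`); the helper names and the file path in the usage line are hypothetical.

```shell
#!/bin/sh
# Print a file's contents as a single line of base64, ready to paste as a Secret value.
# GNU base64 wraps long output, so all newlines are stripped with tr.
b64_oneline() {
  base64 < "$1" | tr -d '\n'
}

# Decode a value back to confirm it round-trips to the original bytes.
b64_check() {
  printf '%s' "$1" | base64 -d
}
```

Usage: `echo "etcd-ca: $(b64_oneline /path/to/etcd-ca.pem)"`. Stripping the newlines matters because a wrapped value pasted into the YAML would break the key/value line.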

On CentOS Linux 8, iptables uses the nftables backend, so add the FELIX_IPTABLESBACKEND setting to calico-node:

```yaml
- name: FELIX_IPTABLESBACKEND
  value: "NFT"
```
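Which backend a host uses can be read from `iptables --version`, which on iptables 1.8 and later names the backend in parentheses (for example `iptables v1.8.4 (nf_tables)`). A sketch mapping that string to a FELIX_IPTABLESBACKEND value (`NFT` or `Legacy`); `felix_backend_for` is a hypothetical helper name.

```shell
#!/bin/sh
# Map the text printed by `iptables --version` to a FELIX_IPTABLESBACKEND value.
felix_backend_for() {
  case "$1" in
    *nf_tables*) echo NFT ;;
    *legacy*)    echo Legacy ;;
    *)           echo unknown ;;   # older iptables versions do not print a backend
  esac
}
```

Usage on each node: `felix_backend_for "$(iptables --version)"`. Taking the version string as an argument instead of calling iptables inside the function keeps the mapping testable on machines without iptables.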

Make sure kubelet runs with `--network-plugin=cni`, then run `kubectl apply -f calico-etcd.yaml`.

Install and configure the calicoctl command-line tool:

```
[root@kube-master01 ~]# wget https://github.com/projectcalico/calicoctl/releases/download/v3.13.0/calicoctl-linux-amd64
[root@kube-master01 ~]# chmod +x calicoctl-linux-amd64
[root@kube-master01 ~]# mv calicoctl-linux-amd64 /usr/local/bin/calicoctl
[root@kube-master01 ~]# mkdir -p /etc/calico/
[root@kube-master01 ~]# cat >/etc/calico/calicoctl.cfg <<EOF
apiVersion: projectcalico.org/v3
kind: CalicoAPIConfig
metadata:
spec:
  datastoreType: "etcdv3"
  etcdEndpoints: "https://172.19.0.11:2379,https://172.19.0.13:2379,https://172.19.0.2:2379"
  etcdCACertFile: "<path to etcd CA certificate>"
  etcdKeyFile: "<path to etcd client key>"
  etcdCertFile: "<path to etcd client certificate>"
EOF
```

```
[root@kube-master01 ~]# calicoctl get nodes --out=wide
NAME            ASN       IPV4                IPV6
kube-master01   (64512)   172.31.209.239/20
kube-worker01   (64512)   172.31.209.240/20
kube-worker02   (64512)   172.31.209.241/20
[root@kube-master01 ~]#
```
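calicoctl can also take its etcd settings from environment variables, which is handy on a machine without `/etc/calico/calicoctl.cfg`. A sketch reusing the endpoints from the config above; the certificate paths are hypothetical placeholders to replace with your etcd client certificates.

```shell
#!/bin/sh
# Environment-variable equivalents of the calicoctl.cfg fields above.
# The certificate paths are placeholders, not real files.
export DATASTORE_TYPE=etcdv3
export ETCD_ENDPOINTS="https://172.19.0.11:2379,https://172.19.0.13:2379,https://172.19.0.2:2379"
export ETCD_CA_CERT_FILE=/path/to/etcd-ca.pem
export ETCD_CERT_FILE=/path/to/etcd-cert.pem
export ETCD_KEY_FILE=/path/to/etcd-key.pem
```

After sourcing this in a shell, `calicoctl get nodes -o wide` should produce the same listing as above.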

03

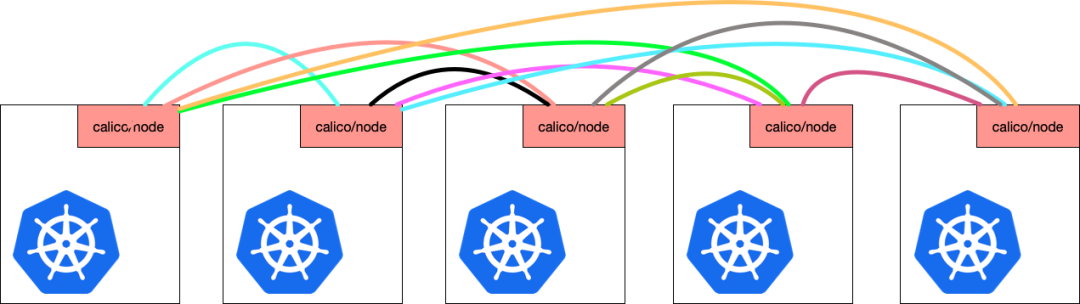

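By default Calico runs in BGP full-mesh mode (node-to-node mesh): every node acts as a BGP speaker and establishes a session with every other node in the cluster to exchange routes.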

```
[root@kube-master01 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+----------------+-------------------+-------+----------+-------------+
|  PEER ADDRESS  |     PEER TYPE     | STATE |  SINCE   |    INFO     |
+----------------+-------------------+-------+----------+-------------+
| 172.31.209.240 | node-to-node mesh | up    | 07:30:06 | Established |
| 172.31.209.241 | node-to-node mesh | up    | 07:22:30 | Established |
+----------------+-------------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@kube-master01 ~]#
```

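BGP runs over TCP and relies on TCP's reliable delivery (retransmission, ordering) to exchange routing information reliably; it listens on port 179 by default. The output below shows two established BGP sessions, matching the node status above, and the other two nodes look the same.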

```
[root@kube-master01 ~]# ss -nat | grep 179
LISTEN   0   8   0.0.0.0:179          0.0.0.0:*
ESTAB    0   0   172.31.209.239:179   172.31.209.241:49745
ESTAB    0   0   172.31.209.239:179   172.31.209.240:50581
ESTAB    0   0   172.31.209.239:6443  172.31.209.239:12179
```
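`grep 179` matches any line containing those digits: the last line above is an API server connection (local port 6443) that only matches because its peer port is 12179. A stricter count that matches port 179 exactly, sketched in awk and assuming the default `ss -nat` column order (State, Recv-Q, Send-Q, local address:port, peer address:port); `count_bgp_sessions` is a hypothetical helper name.

```shell
#!/bin/sh
# Count established TCP sessions whose local or peer port is exactly 179 (BGP).
# Reads `ss -nat` output on stdin; fields 4 and 5 are local and peer address:port.
# Checking both fields covers sessions this node accepted and ones it initiated.
count_bgp_sessions() {
  awk '$1 == "ESTAB" && ($4 ~ /:179$/ || $5 ~ /:179$/) { n++ } END { print n + 0 }'
}
```

Usage: `ss -nat | count_bgp_sessions`. On the listing above it reports 2, matching the two peers in `calicoctl node status`.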

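This mode suits smaller clusters. Once a cluster grows to hundreds of nodes the mesh becomes very large: the number of sessions grows with the square of the node count, which turns into a performance bottleneck.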
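The growth is easy to quantify: in a full mesh each of the n nodes peers with the other n-1, so the cluster carries n(n-1)/2 BGP sessions. A small helper (`mesh_sessions` is our name, not a Calico tool):

```shell
#!/bin/sh
# Number of BGP sessions in a full node-to-node mesh of n nodes: n(n-1)/2.
mesh_sessions() {
  n=$1
  echo $(( n * (n - 1) / 2 ))
}

for nodes in 3 10 100 500; do
  echo "$nodes nodes: $(mesh_sessions "$nodes") sessions, $((nodes - 1)) per node"
done
```

For the three-node cluster above this gives 3 sessions and 2 per node, which is what `calicoctl node status` and `ss` show; at 100 nodes it is already 4950 sessions.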

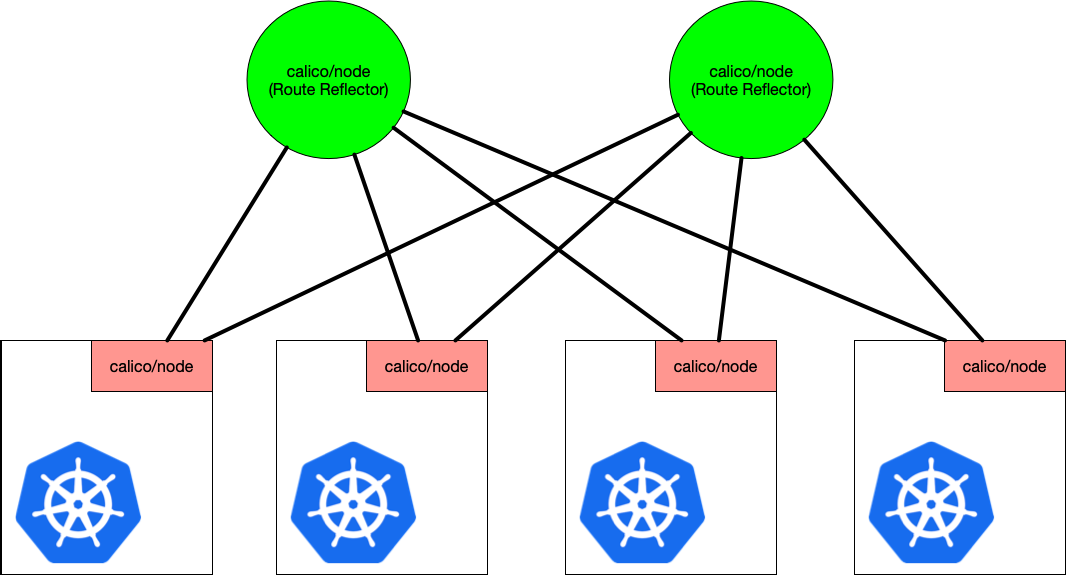

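Route reflectors solve this. Two of the Calico nodes act as route reflectors; every other node only needs to peer with those two, and the reflectors pass the routes they receive on to the other nodes, so routes are still exchanged across the whole cluster.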
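With route reflectors the session count grows roughly linearly. Assuming every non-reflector node peers with every reflector and the reflectors also peer with each other, r reflectors among n nodes give r(n-r) + r(r-1)/2 sessions. A sketch of that arithmetic (`rr_sessions` is a hypothetical helper name):

```shell
#!/bin/sh
# BGP sessions with r route reflectors among n nodes, assuming each of the n-r
# clients peers with every reflector (r*(n-r)) and the reflectors form a full
# mesh among themselves (r*(r-1)/2).
rr_sessions() {
  n=$1 r=$2
  echo $(( r * (n - r) + r * (r - 1) / 2 ))
}

echo "100 nodes, 2 reflectors: $(rr_sessions 100 2) sessions"   # vs 4950 in a full mesh
```

Each client now holds only r sessions regardless of cluster size; the cost moves to the reflectors, which is why they are usually kept to a small redundant set.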

04

There is no BGPConfiguration resource by default, so create one and use it to turn off the full mesh:

```
[root@kube-master01 ~]# vi bgp-config.yaml
```

```yaml
apiVersion: projectcalico.org/v3
kind: BGPConfiguration
metadata:
  name: default
spec:
  logSeverityScreen: Info
  nodeToNodeMeshEnabled: false
  asNumber: 63400
```

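`BGPConfiguration`: the BGP configuration resource; the instance named `default` applies cluster-wide.

`nodeToNodeMeshEnabled`: whether the full mesh is enabled.

`asNumber`: the autonomous system number (ASN) the nodes use. The default is 64512.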
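RFC 6996 reserves AS numbers 64512 to 65534 (16-bit) and 4200000000 to 4294967294 (32-bit) for private use; Calico's default 64512 is the first private 16-bit ASN. The sketch below classifies an ASN against those ranges (`asn_kind` is a hypothetical helper name). By that measure the 63400 used in this article is not a private ASN, which matters only if the nodes will peer with BGP routers outside the cluster.

```shell
#!/bin/sh
# Classify an AS number against the RFC 6996 private-use ranges.
asn_kind() {
  asn=$1
  case "$asn" in ''|*[!0-9]*) echo invalid; return 0 ;; esac   # digits only
  if [ "$asn" -ge 64512 ] && [ "$asn" -le 65534 ]; then
    echo private            # 16-bit private range
  elif [ "$asn" -ge 4200000000 ] && [ "$asn" -le 4294967294 ]; then
    echo private            # 32-bit private range
  elif [ "$asn" -ge 1 ] && [ "$asn" -le 4294967295 ]; then
    echo not-private
  else
    echo invalid
  fi
}
```

Usage: `asn_kind 64512` prints `private`, `asn_kind 63400` prints `not-private`.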

```
[root@kube-master01 ~]# calicoctl get nodes -o wide
NAME            ASN       IPV4                IPV6
kube-master01   (64512)   172.31.209.239/20
kube-worker01   (64512)   172.31.209.240/20
kube-worker02   (64512)   172.31.209.241/20
```

Note: disabling the full mesh without any other BGP peering in place cuts off pod networking between nodes!

```
[root@kube-master01 ~]# ip route
default via 172.31.223.253 dev eth0 proto dhcp metric 100
172.31.208.0/20 dev eth0 proto kernel scope link src 172.31.209.239 metric 100
192.168.132.0/26 via 172.31.209.240 dev tunl0 proto bird onlink
blackhole 192.168.237.64/26 proto bird
192.168.237.67 dev calia1ac7c24b53 scope link
192.168.237.68 dev cali9b83f35c440 scope link
192.168.247.192/26 via 172.31.209.241 dev tunl0 proto bird onlink
[root@kube-master01 ~]# calicoctl apply -f bgp-config.yaml
Successfully applied 1 'BGPConfiguration' resource(s)
```

Once the mesh is off, the BGP sessions drop, the learned routes are withdrawn, and cross-node networking stops:

```
[root@kube-master01 ~]# ip route
default via 172.31.223.253 dev eth0 proto dhcp metric 100
172.31.208.0/20 dev eth0 proto kernel scope link src 172.31.209.239 metric 100
blackhole 192.168.237.64/26 proto bird
192.168.237.67 dev calia1ac7c24b53 scope link
192.168.237.68 dev cali9b83f35c440 scope link
[root@kube-master01 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
No IPv4 peers found.

IPv6 BGP status
No IPv6 peers found.

[root@kube-master01 ~]# kubectl get pod -n kube-system -o wide | grep kibana-logging
kibana-logging-5b578cd4fb-jk52g   1/1   Running   1   24h   192.168.132.20   kube-worker01   <none>   <none>
[root@kube-master01 ~]# ping 192.168.132.20
PING 192.168.132.20 (192.168.132.20) 56(84) bytes of data.
```
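Comparing the two `ip route` listings shows exactly what disappeared: the `proto bird` routes to the other nodes' pod blocks over `tunl0`. A small filter that prints just those routes (pod block and next-hop node), assuming the route format shown above; `tunnel_routes` is a hypothetical helper name, and `ip route show proto bird` gives a similar view directly (including the local blackhole route).

```shell
#!/bin/sh
# Print "<pod block> <next hop>" for each route to another node's pods over the
# IPIP tunnel. Reads `ip route` output on stdin.
tunnel_routes() {
  awk '/ dev tunl0 / && $2 == "via" { print $1, $3 }'
}
```

Usage: `ip route | tunnel_routes`. Before the mesh was disabled it would list `192.168.132.0/26 172.31.209.240` and `192.168.247.192/26 172.31.209.241`; afterwards it prints nothing, which is why the ping to the pod on kube-worker01 gets no reply.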

Note: setting nodeToNodeMeshEnabled back to true re-enables the full mesh and the network recovers.
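Instead of editing `bgp-config.yaml` and re-applying it, the flag can also be flipped with `calicoctl patch`, which takes the change as a JSON body via `-p`. A small sketch that builds that body (`mesh_patch` is a hypothetical helper name):

```shell
#!/bin/sh
# Build the -p body for: calicoctl patch bgpconfiguration default -p "<body>"
# $1 must be true or false.
mesh_patch() {
  case "$1" in
    true|false) printf '{"spec": {"nodeToNodeMeshEnabled": %s}}\n' "$1" ;;
    *) echo "usage: mesh_patch true|false" >&2; return 1 ;;
  esac
}

# Usage on a node with calicoctl configured (not run here):
#   calicoctl patch bgpconfiguration default -p "$(mesh_patch true)"
```

Restricting the argument to `true`/`false` keeps a typo from producing a body with an invalid boolean.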

05

Configure BGP peers

https://docs.projectcalico.org/networking/bgp

Check the node information; the nodes now use AS 63400:

```
[root@kube-master01 ~]# calicoctl get nodes -o wide
NAME            ASN       IPV4                IPV6
kube-master01   (63400)   172.31.209.239/20
kube-worker01   (63400)   172.31.209.240/20
kube-worker02   (63400)   172.31.209.241/20
```

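The peer resource is called BGPPeer. Here kube-worker02 is made a global peer, meaning every node peers with it. kube-worker02 and kube-master01 are also labeled rack=rack-3; the label is used later to select the route reflectors: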

```
[root@kube-master01 ~]# kubectl label node kube-worker02 rack=rack-3
node/kube-worker02 labeled
[root@kube-master01 ~]# kubectl label node kube-master01 rack=rack-3
node/kube-master01 labeled
[root@kube-master01 ~]# vi my-global-bgppeer.yaml
```

```yaml
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: my-global-bgppeer
spec:
  peerIP: 172.31.209.241
  asNumber: 63400
```

```
[root@kube-master01 ~]# calicoctl create -f my-global-bgppeer.yaml
Successfully created 1 'BGPPeer' resource(s)
```

kube-master01:

```
[root@kube-master01 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+----------------+-----------+-------+----------+-------------+
|  PEER ADDRESS  | PEER TYPE | STATE |  SINCE   |    INFO     |
+----------------+-----------+-------+----------+-------------+
| 172.31.209.241 | global    | up    | 18:33:06 | Established |
+----------------+-----------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@kube-master01 ~]# ip route
default via 172.31.223.253 dev eth0 proto dhcp metric 100
172.31.208.0/20 dev eth0 proto kernel scope link src 172.31.209.239 metric 100
blackhole 192.168.237.64/26 proto bird
192.168.237.67 dev calia1ac7c24b53 scope link
192.168.237.68 dev cali9b83f35c440 scope link
192.168.247.192 via 172.31.209.241 dev tunl0 proto bird onlink
[root@kube-master01 ~]#
```

kube-worker01:

```
[root@kube-worker01 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+----------------+-----------+-------+----------+-------------+
|  PEER ADDRESS  | PEER TYPE | STATE |  SINCE   |    INFO     |
+----------------+-----------+-------+----------+-------------+
| 172.31.209.241 | global    | up    | 18:33:04 | Established |
+----------------+-----------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@kube-worker01 ~]# ip route
default via 172.31.223.253 dev eth0 proto dhcp metric 100
172.31.208.0/20 dev eth0 proto kernel scope link src 172.31.209.240 metric 100
blackhole 192.168.132.0/26 proto bird
192.168.132.16 dev cali1bb89227365 scope link
192.168.132.17 dev calia6ecaf1e34b scope link
192.168.132.18 dev cali12d4a061371 scope link
192.168.132.19 dev caliac62eeb3c40 scope link
192.168.132.20 dev calid4356ebc5d1 scope link
192.168.237.64/26 via 172.31.209.239 dev tunl0 proto bird onlink
192.168.247.192 via 172.31.209.241 dev tunl0 proto bird onlink
```

kube-worker02:

```
[root@kube-worker02 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+----------------+---------------+-------+----------+-------------+
|  PEER ADDRESS  |   PEER TYPE   | STATE |  SINCE   |    INFO     |
+----------------+---------------+-------+----------+-------------+
| 172.31.209.239 | node specific | up    | 18:33:06 | Established |
| 172.31.209.240 | node specific | up    | 18:33:04 | Established |
+----------------+---------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@kube-worker02 ~]# ip route
default via 172.31.223.253 dev eth0 proto dhcp metric 100
172.31.208.0/20 dev eth0 proto kernel scope link src 172.31.209.241 metric 100
192.168.132.0/26 via 172.31.209.240 dev tunl0 proto bird onlink
192.168.237.64/26 via 172.31.209.239 dev tunl0 proto bird onlink
[root@kube-worker02 ~]#
```

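kube-worker02 now peers with both other nodes, but under the iBGP split-horizon rule a BGP speaker does not re-advertise routes learned from one internal peer to another internal peer. kube-worker02 therefore does not pass the two nodes' routes on to each other; kube-master01, for example, has no route to kube-worker01's pod block 192.168.132.0/26. This is what route reflectors are for.

Create the route reflectors

Use kube-worker02 as the route reflector: export its Calico node configuration and add routeReflectorClusterID: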

```
[root@kube-master01 ~]# calicoctl get node kube-worker02 -o yaml > kube-worker02.yaml
[root@kube-master01 ~]# vi kube-worker02.yaml
```

```yaml
apiVersion: projectcalico.org/v3
kind: Node
metadata:
  annotations:
    projectcalico.org/kube-labels: '{"beta.kubernetes.io/arch":"amd64","beta.kubernetes.io/os":"linux","kubernetes.io/arch":"amd64","kubernetes.io/hostname":"kube-worker02","kubernetes.io/os":"linux","node-role.kubernetes.io/node":"kube-worker02","rack":"rack-3"}'
  creationTimestamp: "2020-03-28T13:04:13Z"
  labels:
    beta.kubernetes.io/arch: amd64
    beta.kubernetes.io/os: linux
    kubernetes.io/arch: amd64
    kubernetes.io/hostname: kube-worker02
    kubernetes.io/os: linux
    node-role.kubernetes.io/node: kube-worker02
    rack: rack-3
  name: kube-worker02
  resourceVersion: "263323"
  uid: fc093557-4cb8-4c93-b345-2ff635c58152
spec:
  bgp:
    ipv4Address: 172.31.209.241/20
    ipv4IPIPTunnelAddr: 192.168.247.192
    routeReflectorClusterID: 244.0.0.1   # added
  orchRefs:
  - nodeName: kube-worker02
    orchestrator: k8s
```

```
[root@kube-master01 ~]# calicoctl apply -f kube-worker02.yaml
Successfully applied 1 'Node' resource(s)
[root@kube-master01 ~]#
```

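Make the route reflectors highly available; otherwise a single failure takes down the whole cluster network. Add kube-master01 as a second route reflector the same way: export its node configuration and add routeReflectorClusterID.

Then make the route reflectors the BGP peers of all nodes: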
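Exporting and editing the YAML works, but the same field can also be set with `calicoctl patch node`. A sketch that builds the patch body for a given cluster ID (`rr_patch` is a hypothetical helper name; 244.0.0.1 is the value used for kube-worker02 above, and reflectors in one redundant set typically share the same ID):

```shell
#!/bin/sh
# Build the -p body that sets spec.bgp.routeReflectorClusterID on a Calico Node.
# $1: cluster ID in dotted-quad form, e.g. 244.0.0.1.
rr_patch() {
  case "$1" in
    ''|*[!0-9.]*) echo "usage: rr_patch <cluster-id, e.g. 244.0.0.1>" >&2; return 1 ;;
  esac
  printf '{"spec": {"bgp": {"routeReflectorClusterID": "%s"}}}\n' "$1"
}

# Usage on a node with calicoctl configured (not run here):
#   calicoctl patch node kube-master01 -p "$(rr_patch 244.0.0.1)"
```

Patching only touches the one field, so there is no risk of re-applying a stale `resourceVersion` from an exported file.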

```
[root@kube-master01 ~]# vi peer-with-route-reflectors.yaml
```

```yaml
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: peer-with-route-reflectors
spec:
  nodeSelector: all()
  peerSelector: rack == "rack-3"
```

```
[root@kube-master01 ~]# calicoctl apply -f peer-with-route-reflectors.yaml
Successfully applied 1 'BGPPeer' resource(s)
```

kube-master01:

```
[root@kube-master01 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+----------------+---------------+-------+----------+-------------+
|  PEER ADDRESS  |   PEER TYPE   | STATE |  SINCE   |    INFO     |
+----------------+---------------+-------+----------+-------------+
| 172.31.209.241 | global        | up    | 18:33:05 | Established |
| 172.31.209.240 | node specific | up    | 18:49:37 | Established |
+----------------+---------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@kube-master01 ~]# ip route
default via 172.31.223.253 dev eth0 proto dhcp metric 100
172.31.208.0/20 dev eth0 proto kernel scope link src 172.31.209.239 metric 100
192.168.132.0/26 via 172.31.209.240 dev tunl0 proto bird onlink
blackhole 192.168.237.64/26 proto bird
192.168.237.67 dev calia1ac7c24b53 scope link
192.168.237.68 dev cali9b83f35c440 scope link
192.168.247.192 via 172.31.209.241 dev tunl0 proto bird onlink
[root@kube-master01 ~]#
```

kube-worker01:

```
[root@kube-worker01 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+----------------+---------------+-------+----------+-------------+
|  PEER ADDRESS  |   PEER TYPE   | STATE |  SINCE   |    INFO     |
+----------------+---------------+-------+----------+-------------+
| 172.31.209.241 | global        | up    | 18:33:03 | Established |
| 172.31.209.239 | node specific | up    | 18:49:37 | Established |
+----------------+---------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@kube-worker01 ~]# ip route
default via 172.31.223.253 dev eth0 proto dhcp metric 100
172.31.208.0/20 dev eth0 proto kernel scope link src 172.31.209.240 metric 100
blackhole 192.168.132.0/26 proto bird
192.168.132.16 dev cali1bb89227365 scope link
192.168.132.17 dev calia6ecaf1e34b scope link
192.168.132.18 dev cali12d4a061371 scope link
192.168.132.19 dev caliac62eeb3c40 scope link
192.168.132.20 dev calid4356ebc5d1 scope link
192.168.237.64/26 via 172.31.209.239 dev tunl0 proto bird onlink
192.168.247.192 via 172.31.209.241 dev tunl0 proto bird onlink
[root@kube-worker01 ~]#
```

kube-worker02:

```
[root@kube-worker02 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+----------------+---------------+-------+----------+-------------+
|  PEER ADDRESS  |   PEER TYPE   | STATE |  SINCE   |    INFO     |
+----------------+---------------+-------+----------+-------------+
| 172.31.209.239 | node specific | up    | 18:33:05 | Established |
| 172.31.209.240 | node specific | up    | 18:33:03 | Established |
+----------------+---------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@kube-worker02 ~]# ip route
default via 172.31.223.253 dev eth0 proto dhcp metric 100
172.31.208.0/20 dev eth0 proto kernel scope link src 172.31.209.241 metric 100
192.168.132.0/26 via 172.31.209.240 dev tunl0 proto bird onlink
192.168.237.64/26 via 172.31.209.239 dev tunl0 proto bird onlink
[root@kube-worker02 ~]#
```

06

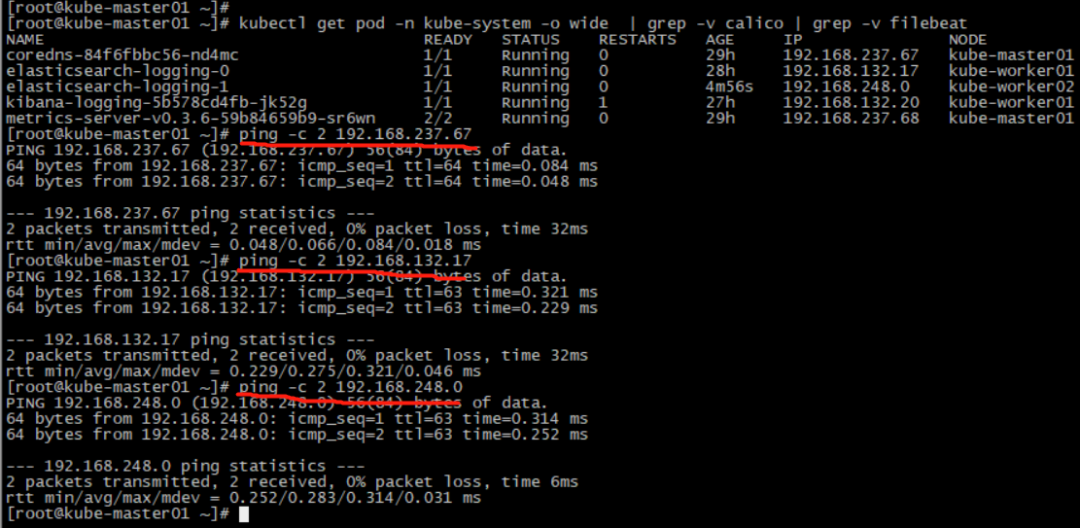

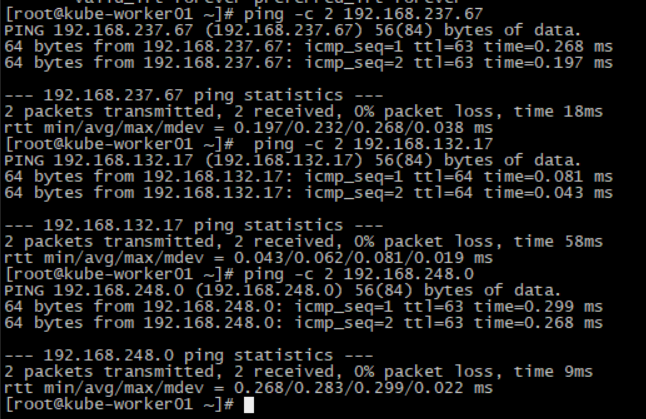

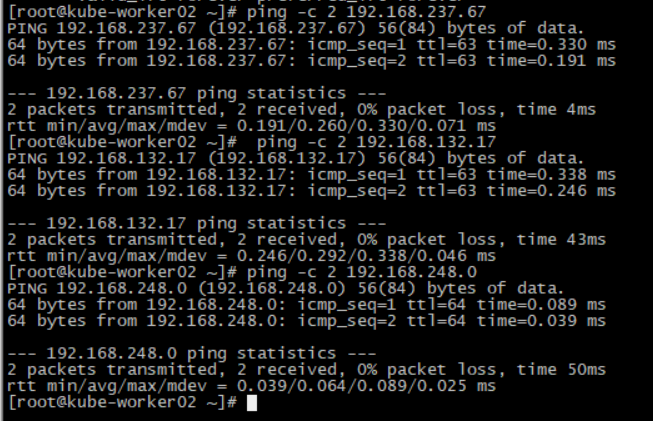

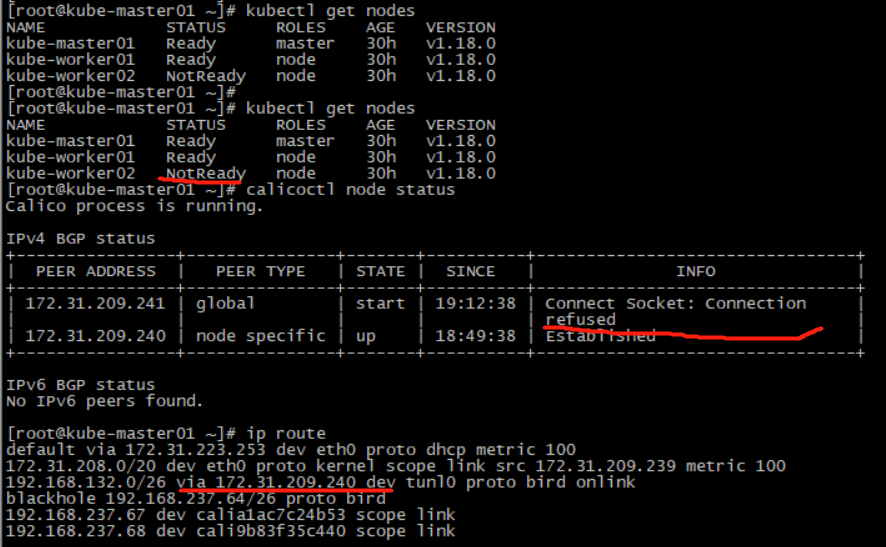

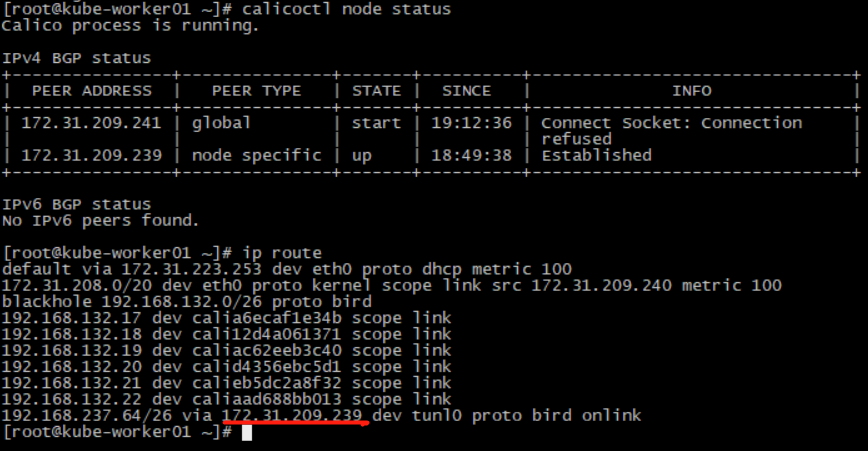

Verification

Simulate a failure by shutting down kube-worker02:

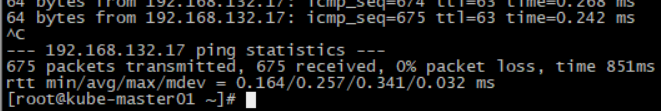

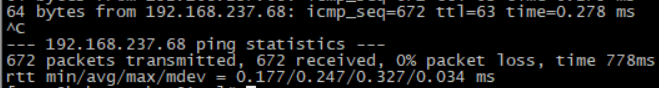

While kube-worker02 was shutting down, the other two nodes kept pinging each other without interruption.

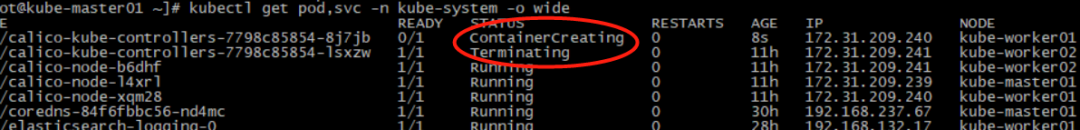

Kubernetes failed over as expected.

More verification to come...