
Click "icloud布道师" above to star or pin the official account.

Moving forward through adversity: the only one who can help you is yourself.

************************************

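Kubernetes 03

Binary deployment, part 1

This article took about 10 hours to put together and takes about 40 minutes to read.

It ran over the word limit, so the rest will follow next week... I doubt many people have spent a whole week chasing errors the way I did...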

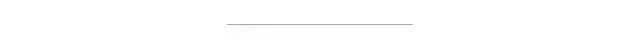

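Background

After quickly building a cluster with kubeadm, I wanted to run some tests with Prometheus + Grafana. I was warned that this setup could run into a series of problems, so I uninstalled the kubeadm-installed version and switched to a binary installation. The starting point is the environment as it stood at the end of the previous deployment.

If you are just getting started, I don't recommend a binary installation: it will quickly shatter your confidence!

This lab builds on the previous kubeadm installation, so the basic environment setup is not repeated here.

Previous posts: Kubernetes: Concepts, Kubernetes: Deployment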

Uninstalling the K8s cluster

Uninstall the k8s version installed by kubeadm

```bash
$ kubectl delete node --all            # delete all nodes
node "wqdcsrv032.cn.infra" deleted
node "wqdcsrv033" deleted
node "wqdcsrv033.cn.infra" deleted
$ kubeadm reset -f
$ modprobe -r ipip
$ lsmod
$ yum remove -y kubelet kubeadm kubectl    # uninstall the packages above
Loaded plugins: langpacks, ulninfo
Resolving Dependencies
--> Running transaction check
---> Package kubeadm.x86_64 0:1.15.1-0 will be erased
---> Package kubectl.x86_64 0:1.15.1-0 will be erased
---> Package kubelet.x86_64 0:1.15.1-0 will be erased
---- (some output omitted)
Removed:
  kubeadm.x86_64 0:1.15.1-0   kubectl.x86_64 0:1.15.1-0   kubelet.x86_64 0:1.15.1-0
$ rm -rf ~/.kube/                      # remove the files left behind by all components
$ rm -rf /etc/kubernetes/
$ rm -rf /etc/systemd/system/kubelet.service.d
$ rm -rf /etc/systemd/system/kubelet.service
$ rm -rf /usr/bin/kube*
$ rm -rf /etc/cni
$ rm -rf /opt/cni
$ rm -rf /var/lib/etcd
$ rm -rf /var/etcd
```
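A cleanup path that is missing its leading slash, such as etc/cni, is resolved relative to the current directory. In that case rm -rf may delete the wrong files without any warning.

The sketch below, assuming bash, guards against this by refusing to delete anything unless every argument is an absolute path. safe_rm is a hypothetical helper and is not part of kubeadm or any package.

```bash
#!/usr/bin/env bash
# Sketch: refuse to delete anything unless every path is absolute.
# safe_rm is a hypothetical helper name used only for illustration.
safe_rm() {
  local p
  # Check all arguments first, so nothing is removed if any path is bad
  for p in "$@"; do
    if [[ "$p" != /* ]]; then
      echo "refusing non-absolute path: '$p'" >&2
      return 1
    fi
  done
  rm -rf -- "$@"
}

# Usage on the cleanup list above:
# safe_rm ~/.kube /etc/kubernetes /etc/cni /opt/cni /var/lib/etcd /var/etcd
```

Because the check runs over all arguments before rm is called, one bad path aborts the whole call instead of leaving a half-cleaned system.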

二进制安装准备阶段

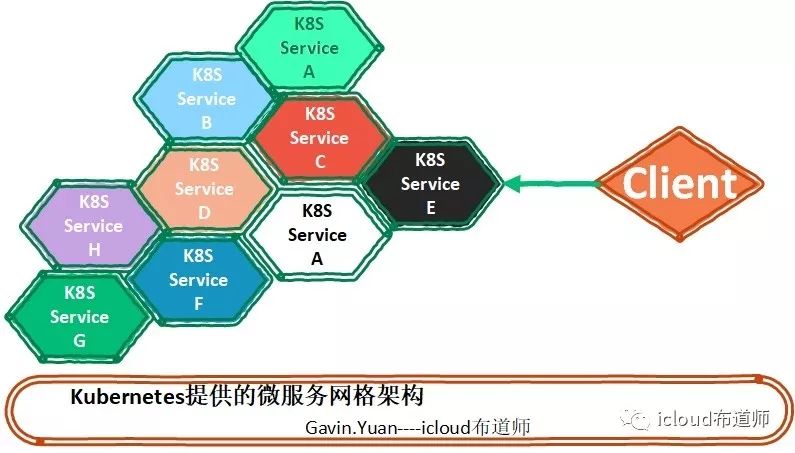

下载二进制包

从官网https://github.com/kubernetes/kubernetes/releases 找到对应的版本号,单击changelog,找到已经编译好的二进制文件的下载页面;如下图所示:

在压缩包kubernetes.tar.gz内包含了kubernetes的服务程序文件、文档和示例,在压缩包kubernetes.src.tar.gz中则包含了全部的代码,也可以直接下载server binaries中的kubernetes-server-linux-amd64.tar.gz文件,其中包含了kubernetes需要运行的全部服务程序文件。

分别下载对应的 Client Binaries、Server Binaries、Node Binaries 版本;

从github官网(https://github.com/coreos/etcd/releases)下载etcd二进制文件

最后得到如下list:

# lltotal 548584-rw-r--r-- 1 root root 10423953 Jul 30 17:25 etcd-v3.3.13-linux-amd64.tar.gz-rw-r--r-- 1 root root 13337585 Jul 30 17:18 kubernetes-client-linux-amd64.tar.gz-rw-r--r-- 1 root root 94223529 Jul 30 17:18 kubernetes-node-linux-amd64.tar.gz-rw-r--r-- 1 root root 443761836 Jul 30 17:24 kubernetes-server-linux-amd64.tar.gz

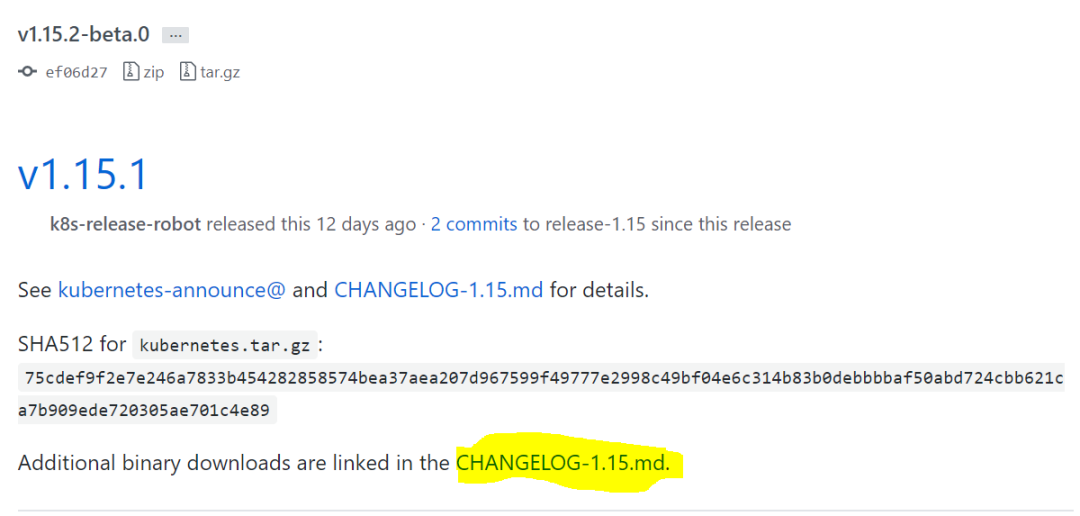

Environment overview

This lab installs version 1.15.1.

The main service binaries are:

| File | Description |
|---|---|
| kube-apiserver | Main program of kube-apiserver |
| kube-apiserver.docker_tag | Tag of the kube-apiserver docker image |
| kube-controller-manager | Main program of kube-controller-manager |
| kube-controller-manager.docker_tag | Tag of the kube-controller-manager docker image |
| kube-controller-manager.tar | kube-controller-manager docker image file |
| kube-scheduler | Main program of kube-scheduler |
| kube-scheduler.docker_tag | Tag of the kube-scheduler docker image |
| kube-scheduler.tar | kube-scheduler docker image file |
| kubelet | Main program of kubelet |
| kube-proxy | Main program of kube-proxy |
| kube-proxy.tar | kube-proxy docker image file |
| kubectl | Command-line client |
| kubeadm | Command-line tool for installing a Kubernetes cluster |
| hyperkube | All-in-one binary containing every service; can start any of them |
| cloud-controller-manager | Provides controllers that integrate with various cloud providers |
| apiextensions-apiserver | Extension API server that implements custom resource objects |

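All of the main Kubernetes services can be run directly from their binaries plus startup flags.

The Kubernetes master runs the etcd, kube-apiserver, kube-controller-manager and kube-scheduler processes.

Each worker node runs the docker, kubelet and kube-proxy processes.

Copy the Kubernetes binaries to /usr/bin, then create a systemd unit file for each service under /usr/lib/systemd/system (covered later). For Kubernetes to work properly, each service's startup flags must be configured in detail. The rest of this article focuses on the most important flags.

The figure below shows the architecture used in this lab.

Log management

Configure rsyslogd and systemd-journald

systemd's journald is the default logging tool on CentOS 7. It records the logs of the whole system, the kernel, and every service unit.

Compared with rsyslog, journald has the following advantages:

It can log to memory or to the file system (by default it logs to memory, under /run/log/journal);

It can cap the disk space it uses and guarantee a minimum amount of free disk space;

It can limit the size of log files and how long they are kept.

By default journald forwards logs to rsyslog, so every log is written more than once. /var/log/messages then fills up with unrelated entries that make later troubleshooting harder, and system performance also suffers.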

```bash
mkdir -p /var/log/journal                 # directory for persisted logs
mkdir -p /etc/systemd/journald.conf.d
cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF
[Journal]
# Persist logs to disk
Storage=persistent

# Compress archived logs
Compress=yes

SyncIntervalSec=5m
RateLimitInterval=30s
RateLimitBurst=1000

# Use at most 10G of disk space
SystemMaxUse=10G

# Maximum size of a single journal file: 200M
SystemMaxFileSize=200M

# Keep logs for 2 weeks
MaxRetentionSec=2week

# Do not forward logs to syslog
ForwardToSyslog=no
EOF
systemctl restart systemd-journald
```

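Environment variables

Distribute this script to every node and adjust it to your own environment.

```bash
vim environment.sh
```

```bash
#!/usr/bin/bash

# Encryption key used to generate the EncryptionConfig
# (a new random key is generated every time this file is sourced)
export ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)

# Array of the cluster machines' IPs
export NODE_IPS=(10.67.194.43 10.67.194.42 10.67.194.41)

# Hostnames corresponding to the IPs above
export NODE_NAMES=(master.kube node1.kube node2.kube)

# etcd cluster client endpoints (every entry needs the :2379 port)
export ETCD_ENDPOINTS="https://10.67.194.43:2379,https://10.67.194.42:2379,https://10.67.194.41:2379"

# IPs and ports used for communication between etcd members
export ETCD_NODES="master.kube=https://10.67.194.43:2380,node1.kube=https://10.67.194.42:2380,node2.kube=https://10.67.194.41:2380"

# Address and port of the kube-apiserver reverse proxy (kube-nginx)
export KUBE_APISERVER="https://127.0.0.1:8443"

# Name of the network interface used between nodes
export IFACE="eth0"

# etcd data directory
export ETCD_DATA_DIR="/data/k8s/etcd/data"

# etcd WAL directory; ideally an SSD partition, or at least a different partition from ETCD_DATA_DIR
export ETCD_WAL_DIR="/data/k8s/etcd/wal"

# Data directory for the k8s components
export K8S_DIR="/data/k8s/k8s"

# docker data directory
export DOCKER_DIR="/data/k8s/docker"

## The parameters below usually do not need to be changed

# Token used for TLS bootstrapping; generate one with: head -c 16 /dev/urandom | od -An -t x | tr -d ' '
export BOOTSTRAP_TOKEN="41f7e4ba8b7be874fcff18bf5cf41a7c"

# Preferably use currently unused ranges for the Service and Pod CIDRs

# Service CIDR: not routable before deployment, routable inside the cluster afterwards (ensured by kube-proxy)
export SERVICE_CIDR="10.254.0.0/16"

# Pod CIDR, a /16 is recommended: not routable before deployment, routable inside the cluster afterwards (ensured by flanneld)
export CLUSTER_CIDR="172.30.0.0/16"

# Service port range (NodePort Range)
export NODE_PORT_RANGE="30000-32767"

# etcd key prefix for the flanneld network configuration
export FLANNEL_ETCD_PREFIX="/kubernetes/network"

# kubernetes Service IP (usually the first IP in SERVICE_CIDR)
export CLUSTER_KUBERNETES_SVC_IP="10.254.0.1"

# Cluster DNS Service IP (pre-allocated from SERVICE_CIDR)
export CLUSTER_DNS_SVC_IP="10.254.0.2"

# Cluster DNS domain (no trailing dot)
export CLUSTER_DNS_DOMAIN="cluster.local"

# Add the binary directory /opt/k8s/bin to PATH
# export PATH=/opt/k8s/bin:$PATH
```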
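Every later step sources this file, so a typo here propagates everywhere. For example, an etcd endpoint without its :2379 port later shows up as a "context deadline exceeded" endpoint-status failure.

The sketch below is a small sanity check to run right after sourcing the file. check_env is a hypothetical bash helper; the original setup does not include it.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check the variables defined by environment.sh.
# check_env is a hypothetical helper; run it after sourcing the file.
check_env() {
  local status=0 ep
  local re='^https://[^:/]+:[0-9]+$'
  local -a eps
  # NODE_IPS[i] and NODE_NAMES[i] are used together, so lengths must match
  if [ "${#NODE_IPS[@]}" -ne "${#NODE_NAMES[@]}" ]; then
    echo "NODE_IPS and NODE_NAMES have different lengths" >&2
    status=1
  fi
  # Every etcd client endpoint needs a scheme, a host and an explicit port
  IFS=',' read -r -a eps <<< "$ETCD_ENDPOINTS"
  for ep in "${eps[@]}"; do
    if [[ ! $ep =~ $re ]]; then
      echo "etcd endpoint without host:port: $ep" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage:
# source /opt/k8s/bin/environment.sh && check_env && echo "environment.sh looks OK"
```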

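Setting up CA authentication

In a secure internal network, the Kubernetes components can reach the master through kube-apiserver's insecure port, http://<kube-apiserver-ip>:8080. HTTPS is the safer choice in two cases: when the API Server must serve external clients, or when containers in the cluster need to query the API Server for cluster information.

Kubernetes supports two kinds of authentication: CA-signed mutual (two-way) certificate authentication, and simpler HTTP Basic or token authentication. CA certificates are the most secure of these, and we use them for this cluster. This means setting up CA-signed mutual certificate authentication for kube-apiserver, kube-controller-manager and kube-scheduler on the master, and for kubelet and kube-proxy on the nodes.

This article uses CloudFlare's PKI toolkit, cfssl, to create all certificates.

CA-based authentication works as follows:

Generate a certificate for kube-apiserver and have it signed by the CA.

Configure kube-apiserver's certificate-related startup flags: the CA certificate (used to verify that client certificates were really signed by the CA), plus its own CA-signed certificate and private key.

Generate a certificate, also signed by the CA, for every client process that accesses the Kubernetes API Server (such as kube-controller-manager). Then add the CA certificate, the client's own certificate and the related flags to that program's startup flags.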

1. Install the cfssl toolset

```bash
mkdir -p /opt/k8s/cert && cd /opt/k8s/
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
ll
total 567386
drwxr-xr-x 2 root root     1024 Jul 31 17:15 cert
-rw-r--r-- 1 root root  6595195 Jul 31 16:57 cfssl-certinfo_linux-amd64
-rw-r--r-- 1 root root  2277873 Jul 31 16:57 cfssljson_linux-amd64
-rw-r--r-- 1 root root 10376657 Jul 31 16:57 cfssl_linux-amd64
mkdir /opt/k8s/bin/
mv cfssl_linux-amd64 /opt/k8s/bin/cfssl
mv cfssljson_linux-amd64 /opt/k8s/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /opt/k8s/bin/cfssl-certinfo
chmod +x /opt/k8s/bin/*
export PATH=/opt/k8s/bin:$PATH
```
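Each download name is just the tool name plus _linux-amd64, so the three mv commands and the chmod can be collapsed into one loop.

The sketch below assumes bash and coreutils install(1). install_cfssl_tools and its two directory parameters are hypothetical; the parameters let the loop be tried outside /opt/k8s. Unlike mv, install copies the file, so the downloads stay where they are.

```bash
#!/usr/bin/env bash
# Sketch: copy cfssl_linux-amd64 -> cfssl (and so on) into a bin directory,
# setting the executable bit in the same step.
# install_cfssl_tools is a hypothetical helper name.
install_cfssl_tools() {
  local src_dir=$1 bin_dir=$2 tool
  mkdir -p "$bin_dir"
  for tool in cfssl cfssljson cfssl-certinfo; do
    install -m 0755 "$src_dir/${tool}_linux-amd64" "$bin_dir/$tool" || return 1
  done
}

# Usage, after the three wget downloads in /opt/k8s:
# install_cfssl_tools /opt/k8s /opt/k8s/bin && export PATH=/opt/k8s/bin:$PATH
```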

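2. Create the root certificate (CA)

The CA certificate is shared by all nodes in the cluster. Only one CA certificate needs to be created, and it signs every certificate created afterwards.

Create the configuration file

The CA configuration file defines the usage scenarios (profiles) for the root certificate and their parameters: usages, expiry, server auth, client auth, encryption, and so on. When signing other certificates later, you specify which profile to use.

```bash
mkdir -p /opt/k8s/work
cd /opt/k8s/work
vim ca-config.json
```

```json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
```

- signing: the certificate can be used to sign other certificates; the generated ca.pem contains CA=TRUE;
- server auth: a client can use this certificate to verify the certificate presented by a server;
- client auth: a server can use this certificate to verify the certificate presented by a client.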

Create the certificate signing request (CSR) file

```bash
cd /opt/k8s/work
cat > ca-csr.json <<EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "4Paradigm"
    }
  ],
  "ca": {
    "expiry": "876000h"
  }
}
EOF
```

- CN: Common Name. kube-apiserver extracts this field as the request's user name (User Name); browsers use it to check whether a site is legitimate;
- O: Organization. kube-apiserver extracts this field as the group (Group) the requesting user belongs to;
- kube-apiserver uses the extracted User and Group as the user identity for RBAC authorization.
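The two expiry values differ by a factor of ten. The kubernetes profile in ca-config.json signs certificates for 87600h, while the "ca" block in ca-csr.json gives the root CA itself 876000h.

A quick bash conversion to years follows. It ignores leap days, and hours_to_years is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: convert a cfssl-style expiry such as "87600h" into whole years.
# Uses 365-day years (ignores leap days); hours_to_years is hypothetical.
hours_to_years() {
  local hours=${1%h}          # strip the trailing "h"
  echo $(( hours / (24 * 365) ))
}

hours_to_years 87600h    # certificates signed with the kubernetes profile: 10
hours_to_years 876000h   # the root CA itself: 100
```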

Generate the CA certificate and private key

```bash
cd /opt/k8s/work
cfssl gencert -initca ca-csr.json | cfssljson -bare ca
2019/07/31 17:34:06 [INFO] generating a new CA key and certificate from CSR
2019/07/31 17:34:06 [INFO] generate received request
2019/07/31 17:34:06 [INFO] received CSR
2019/07/31 17:34:06 [INFO] generating key: rsa-2048
2019/07/31 17:34:06 [INFO] encoded CSR
2019/07/31 17:34:06 [INFO] signed certificate with serial number 586104341755097112569226212982884644575651160362
ll ca*
-rw-r--r-- 1 root root  292 Jul 31 17:27 ca-config.json
-rw-r--r-- 1 root root 1005 Jul 31 17:34 ca.csr
-rw-r--r-- 1 root root  249 Jul 31 17:29 ca-csr.json
-rw------- 1 root root 1675 Jul 31 17:34 ca-key.pem
-rw-r--r-- 1 root root 1371 Jul 31 17:34 ca.pem
```

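3. Distribute the certificate files

Copy the generated CA certificate, private key and configuration file to the /etc/kubernetes/cert directory on every node:

```bash
# On master, node1 and node2: create the directory
mkdir -p /etc/kubernetes/cert
# Copy the files over from the master (scp does not expand host patterns, so loop over the nodes)
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  scp /opt/k8s/work/ca*.pem /opt/k8s/work/ca-config.json root@${node_ip}:/etc/kubernetes/cert/
done
# I have to go through a jump host... so I used lrzsz, recorded here as well
sz ca*
rz -e        # five times... x2
# In the end, all the files go into /etc/kubernetes/cert/
```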
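Copying to several nodes needs a loop, because a host pattern such as root@10.67.194.4* is not expanded into multiple hosts. This distribution step recurs throughout the deployment, so a reusable helper can save repetition.

The sketch below assumes bash, the NODE_IPS array from environment.sh, and direct root SSH access to each node, not the jump-host setup used here. distribute and run are hypothetical names; setting DRY_RUN=1 only prints the commands.

```bash
#!/usr/bin/env bash
# Sketch: copy files into the same directory on every node in NODE_IPS.
# distribute/run are hypothetical helpers; set DRY_RUN=1 to print only.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

distribute() {
  local dest=$1 node_ip
  shift
  for node_ip in "${NODE_IPS[@]}"; do
    run ssh "root@${node_ip}" "mkdir -p ${dest}" || return 1
    run scp "$@" "root@${node_ip}:${dest}/" || return 1
  done
}

# Usage:
# source /opt/k8s/bin/environment.sh
# distribute /etc/kubernetes/cert /opt/k8s/work/ca.pem /opt/k8s/work/ca-key.pem /opt/k8s/work/ca-config.json
```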

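The environment is now ready, so the actual deployment starts here.

Installing kubectl

By default kubectl reads the kube-apiserver address and authentication information from ~/.kube/config. If that file is not configured, kubectl commands may fail:

```
$ kubectl get pods
The connection to the server localhost:8080 was refused - did you specify the right host or port?
```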

Download and distribute kubectl

```bash
cd /opt/k8s/work
wget https://dl.k8s.io/v1.15.1/kubernetes-client-linux-amd64.tar.gz
tar -xzvf kubernetes-client-linux-amd64.tar.gz
# Distribute to /opt/k8s/bin/ on every node that uses kubectl
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  scp kubernetes/client/bin/kubectl root@${node_ip}:/opt/k8s/bin/
done
chmod +x /opt/k8s/bin/*
export PATH=/opt/k8s/bin:$PATH
```

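Create the admin certificate and private key

kubectl talks to the apiserver over its HTTPS secure port, and the apiserver authenticates and authorizes the certificate kubectl presents.

As the cluster's management tool, kubectl needs the highest privileges, so we create an admin certificate with full permissions.

Create the certificate signing request:

```bash
cd /opt/k8s/work
cat > admin-csr.json <<EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:masters",
      "OU": "4Paradigm"
    }
  ]
}
EOF
```

- O is system:masters: when kube-apiserver receives this certificate, it sets the request's Group to system:masters;
- The predefined ClusterRoleBinding cluster-admin binds Group system:masters to the ClusterRole cluster-admin, which grants access to all APIs;
- This certificate is only used by kubectl as a client certificate, so the hosts field is empty.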

Generate the certificate and private key:

```bash
cd /opt/k8s/work
cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes admin-csr.json | cfssljson -bare admin
ls admin*
```

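Create the kubeconfig

kubeconfig is kubectl's configuration file. It contains everything needed to reach the apiserver, such as the apiserver address, the CA certificate and the client's own certificate.

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh    # provides KUBE_APISERVER

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kubectl.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials admin \
  --client-certificate=/opt/k8s/work/admin.pem \
  --client-key=/opt/k8s/work/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=kubectl.kubeconfig

# Set the context parameters
kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=admin \
  --kubeconfig=kubectl.kubeconfig

# Set the default context
kubectl config use-context kubernetes --kubeconfig=kubectl.kubeconfig
```

- --certificate-authority: the root certificate used to verify the kube-apiserver certificate;
- --client-certificate, --client-key: the admin certificate and private key just generated, used when connecting to kube-apiserver;
- --embed-certs=true: embeds the contents of ca.pem and admin.pem in the generated kubectl.kubeconfig. Without it, only the certificate file paths are written, so copying the kubeconfig to another machine would also require copying the certificate files separately, which is inconvenient.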

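Distribute the kubeconfig

Distribute the kubeconfig file to every node.

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  # Create ~/.kube on every node
  ssh root@${node_ip} "mkdir -p ~/.kube"
  # Then copy the file into ~/.kube
  scp kubectl.kubeconfig root@${node_ip}:~/.kube/config
done
```

Note: the file must be saved as ~/.kube/config.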

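Deploying etcd

etcd is the primary database of a Kubernetes cluster, so it must be installed and started before any of the Kubernetes services.

etcd is a Raft-based distributed key-value store developed by CoreOS. It is commonly used for service discovery, shared configuration and concurrency control (such as leader election and distributed locks). Kubernetes stores all of its runtime data in etcd.

Steps to deploy a highly available three-node etcd cluster:

Download and distribute the etcd binaries;

Create x509 certificates for each etcd member, used to encrypt traffic between clients (such as etcdctl) and the etcd cluster, and between etcd members;

Create the etcd systemd unit file and configure the service flags;

Check that the cluster is working.

Download and distribute the etcd binaries

Download the etcd binaries from GitHub (https://github.com/coreos/etcd/releases). Copy etcd and etcdctl into /opt/k8s/bin, which was added to PATH earlier, so both commands can be used from any directory.

```bash
cd /opt/k8s/work
wget https://github.com/etcd-io/etcd/releases/download/v3.3.13/etcd-v3.3.13-linux-amd64.tar.gz
ll
-rw-r--r-- 1 root root 10423953 Jul 29 17:49 etcd-v3.3.13-linux-amd64.tar.gz
tar -zxvf etcd-v3.3.13-linux-amd64.tar.gz
cd etcd-v3.3.13-linux-amd64/
ll
total 29776
drwxr-xr-x 10 1000 1000     4096 May  3 01:55 Documentation
-rwxr-xr-x  1 1000 1000 16927136 May  3 01:55 etcd
-rwxr-xr-x  1 1000 1000 13498880 May  3 01:55 etcdctl
-rw-r--r--  1 1000 1000    38864 May  3 01:55 README-etcdctl.md
-rw-r--r--  1 1000 1000     7262 May  3 01:55 README.md
-rw-r--r--  1 1000 1000     7855 May  3 01:55 READMEv2-etcdctl.md
cp etcd* /opt/k8s/bin/
# Don't forget to copy them to every node; I used lrzsz...
# On the master:
sz etcd*
# On every node (the etcd unit file runs /opt/k8s/bin/etcd):
mkdir -p /opt/k8s/bin/
cd /opt/k8s/bin/ && rz -e
chmod -R +x /opt/k8s/bin/
```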

Create the etcd certificate and private key

Create the certificate signing request:

```bash
cd /opt/k8s/work
cat > etcd-csr.json <<EOF
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "10.67.194.43",
    "10.67.194.42",
    "10.67.194.41"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "4Paradigm"
    }
  ]
}
EOF
```

- The hosts field lists the IPs or domain names of the etcd members authorized to use this certificate; all three etcd node IPs must be included.

Generate the certificate and private key:

```bash
cd /opt/k8s/work
cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \
  -ca-key=/etc/kubernetes/cert/ca-key.pem \
  -config=/etc/kubernetes/cert/ca-config.json \
  -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
2019/07/31 18:12:55 [INFO] generate received request
2019/07/31 18:12:55 [INFO] received CSR
2019/07/31 18:12:55 [INFO] generating key: rsa-2048
2019/07/31 18:12:56 [INFO] encoded CSR
2019/07/31 18:12:56 [INFO] signed certificate with serial number 279848959295734072541807189313815280743075425460
2019/07/31 18:12:56 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
websites. For more information see the Baseline Requirements for the Issuance and Management
of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
specifically, section 10.2.3 ("Information Requirements").
ll etcd*pem
-rw------- 1 root root 1675 Jul 31 18:12 etcd-key.pem
-rw-r--r-- 1 root root 1444 Jul 31 18:12 etcd.pem
```

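Distribute the certificate and private key

Distribute the generated certificate and private key to every etcd node:

```bash
# On every node (master included): create the directory
mkdir -p /etc/etcd/cert
# On the master (in /opt/k8s/work): send the etcd certificate and key
sz etcd.pem etcd-key.pem
# On every node: receive them into /etc/etcd/cert/
cd /etc/etcd/cert/ && rz -e
```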

Set up the configuration file

Create the systemd unit file for etcd. It is distributed below to /etc/systemd/system/etcd.service on each node:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > etcd.service.template <<EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=${ETCD_DATA_DIR}
ExecStart=/opt/k8s/bin/etcd \\
  --data-dir=${ETCD_DATA_DIR} \\
  --wal-dir=${ETCD_WAL_DIR} \\
  --name=##NODE_NAME## \\
  --cert-file=/etc/etcd/cert/etcd.pem \\
  --key-file=/etc/etcd/cert/etcd-key.pem \\
  --trusted-ca-file=/etc/kubernetes/cert/ca.pem \\
  --peer-cert-file=/etc/etcd/cert/etcd.pem \\
  --peer-key-file=/etc/etcd/cert/etcd-key.pem \\
  --peer-trusted-ca-file=/etc/kubernetes/cert/ca.pem \\
  --peer-client-cert-auth \\
  --client-cert-auth \\
  --listen-peer-urls=https://##NODE_IP##:2380 \\
  --initial-advertise-peer-urls=https://##NODE_IP##:2380 \\
  --listen-client-urls=https://##NODE_IP##:2379,http://127.0.0.1:2379 \\
  --advertise-client-urls=https://##NODE_IP##:2379 \\
  --initial-cluster-token=etcd-cluster-0 \\
  --initial-cluster=${ETCD_NODES} \\
  --initial-cluster-state=new \\
  --auto-compaction-mode=periodic \\
  --auto-compaction-retention=1 \\
  --max-request-bytes=33554432 \\
  --quota-backend-bytes=6442450944 \\
  --heartbeat-interval=250 \\
  --election-timeout=2000
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

- WorkingDirectory, --data-dir: set the working directory and data directory to ${ETCD_DATA_DIR}; this directory must be created before the service starts;
- --wal-dir: the WAL directory; for better performance, use an SSD or a different disk from --data-dir;
- --name: the member name; when --initial-cluster-state is new, the value of --name must appear in the --initial-cluster list;
- --cert-file, --key-file: the certificate and private key etcd uses when talking to clients;
- --trusted-ca-file: the CA certificate that signed the client certificates, used to verify them;
- --peer-cert-file, --peer-key-file: the certificate and private key etcd uses when talking to peers;
- --peer-trusted-ca-file: the CA certificate that signed the peer certificates, used to verify them.

WorkingDirectory is where the etcd database is stored, and it must be created before the etcd service starts! Otherwise the service fails to start with "Failed at step CHDIR spawning /opt/k8s/bin/etcd: No such file or directory".

Distribute the configuration file to each node

Replace the variables in the template to create a systemd unit file for each node:

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for (( i=0; i < 3; i++ ))
do
  sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" etcd.service.template > etcd-${NODE_IPS[i]}.service
done
ls *.service
```

- NODE_NAMES and NODE_IPS are bash arrays of the same length, holding the node names and their corresponding IPs.
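The loop above hard-codes three nodes and uses sed without the g flag. That is fine for this template, because each line holds at most one placeholder.

The bash sketch below is a slightly more defensive variant. It iterates over however many entries NODE_IPS has, replaces every occurrence, and stops if any ##NODE_...## placeholder is left in the output. render_units is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: render one etcd unit file per node from etcd.service.template.
# render_units is a hypothetical helper; it relies on the NODE_NAMES and
# NODE_IPS arrays from environment.sh.
render_units() {
  local template=$1 out_dir=$2 i out
  if [ "${#NODE_NAMES[@]}" -ne "${#NODE_IPS[@]}" ]; then
    echo "NODE_NAMES and NODE_IPS have different lengths" >&2
    return 1
  fi
  for i in "${!NODE_IPS[@]}"; do
    out="$out_dir/etcd-${NODE_IPS[i]}.service"
    # The g flag replaces every occurrence on a line, not only the first
    sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/g" \
        -e "s/##NODE_IP##/${NODE_IPS[i]}/g" "$template" > "$out" || return 1
    if grep -q '##NODE_' "$out"; then
      echo "unreplaced placeholder left in $out" >&2
      return 1
    fi
  done
}

# Usage:
# cd /opt/k8s/work && source /opt/k8s/bin/environment.sh
# render_units etcd.service.template . && ls etcd-*.service
```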

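Distribute the generated systemd unit files:

```bash
# Each file must be renamed to etcd.service on its target node!
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  scp etcd-${node_ip}.service root@${node_ip}:/etc/systemd/system/etcd.service
done
# There are many ways to do this; use whichever works for you
```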

Once everything is configured, start etcd with systemctl start and add it to the boot sequence with systemctl enable:

```bash
source /opt/k8s/bin/environment.sh
mkdir -p ${ETCD_DATA_DIR} ${ETCD_WAL_DIR}
chmod 755 /opt/k8s/bin/* -R
chmod +x -R /etc/etcd/cert/
chmod -R +x /etc/kubernetes/cert
systemctl daemon-reload
systemctl enable etcd.service
systemctl start etcd.service
```

Run etcdctl cluster-health to check whether etcd started correctly:

```bash
etcdctl cluster-health
failed to check the health of member 512545bc7e1e6eed on https://10.67.194.42:2379: Get https://10.67.194.42:2379/health: x509: certificate signed by unknown authority
member 512545bc7e1e6eed is unreachable: [https://10.67.194.42:2379] are all unreachable
failed to check the health of member d7069edb0fde59b2 on https://10.67.194.41:2379: Get https://10.67.194.41:2379/health: x509: certificate signed by unknown authority
member d7069edb0fde59b2 is unreachable: [https://10.67.194.41:2379] are all unreachable
failed to check the health of member efdef5ee8872f5ac on https://10.67.194.43:2379: Get https://10.67.194.43:2379/health: x509: certificate signed by unknown authority
member efdef5ee8872f5ac is unreachable: [https://10.67.194.43:2379] are all unreachable
cluster is unavailable
```

This error is a certificate problem. Running the check without certificates produces exactly this error, and the check passes once the certificates are supplied:

```bash
# Check with the certificates specified
etcdctl --ca-file=/etc/kubernetes/cert/ca.pem --cert-file=/etc/etcd/cert/etcd.pem --key-file=/etc/etcd/cert/etcd-key.pem --endpoints=https://10.67.194.43:2379,https://10.67.194.42:2379,https://10.67.194.41:2379 cluster-health
member 512545bc7e1e6eed is healthy: got healthy result from https://10.67.194.42:2379
member d7069edb0fde59b2 is healthy: got healthy result from https://10.67.194.41:2379
member efdef5ee8872f5ac is healthy: got healthy result from https://10.67.194.43:2379
cluster is healthy

# Check the service status
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  ETCDCTL_API=3 /opt/k8s/bin/etcdctl \
    --endpoints=https://${node_ip}:2379 \
    --cacert=/etc/kubernetes/cert/ca.pem \
    --cert=/etc/etcd/cert/etcd.pem \
    --key=/etc/etcd/cert/etcd-key.pem endpoint health
done
>>> 10.67.194.43
https://10.67.194.43:2379 is healthy: successfully committed proposal: took = 1.459379ms
>>> 10.67.194.42
https://10.67.194.42:2379 is healthy: successfully committed proposal: took = 1.69417ms
>>> 10.67.194.41
https://10.67.194.41:2379 is healthy: successfully committed proposal: took = 1.42945ms

# Check the cluster status
ETCDCTL_API=3 /opt/k8s/bin/etcdctl \
  -w table --cacert=/etc/kubernetes/cert/ca.pem \
  --cert=/etc/etcd/cert/etcd.pem \
  --key=/etc/etcd/cert/etcd-key.pem \
  --endpoints=${ETCD_ENDPOINTS} endpoint status
Failed to get the status of endpoint https://10.67.194.42 (context deadline exceeded)
+---------------------------+------------------+---------+---------+-----------+-----------+------------+
|         ENDPOINT          |        ID        | VERSION | DB SIZE | IS LEADER | RAFT TERM | RAFT INDEX |
+---------------------------+------------------+---------+---------+-----------+-----------+------------+
| https://10.67.194.43:2379 | efdef5ee8872f5ac |  3.3.13 |   20 kB |      true |      1697 |         28 |
| https://10.67.194.41:2379 | d7069edb0fde59b2 |  3.3.13 |   20 kB |     false |      1697 |         28 |
+---------------------------+------------------+---------+---------+-----------+-----------+------------+
```

The endpoint https://10.67.194.42 failed because it was listed in ETCD_ENDPOINTS without its :2379 port. With the port included, all three endpoints should report their status.

```bash
# Find the leader
etcdctl --ca-file=/etc/kubernetes/cert/ca.pem --cert-file=/etc/etcd/cert/etcd.pem --key-file=/etc/etcd/cert/etcd-key.pem --endpoints=https://10.67.194.43:2379,https://10.67.194.42:2379,https://10.67.194.41:2379 member list
512545bc7e1e6eed: name=node1.kube peerURLs=https://10.67.194.42:2380 clientURLs=https://10.67.194.42:2379 isLeader=false
d7069edb0fde59b2: name=node2.kube peerURLs=https://10.67.194.41:2380 clientURLs=https://10.67.194.41:2379 isLeader=false
efdef5ee8872f5ac: name=master.kube peerURLs=https://10.67.194.43:2380 clientURLs=https://10.67.194.43:2379 isLeader=true

# Test data: create it on the master
etcdctl --ca-file=/etc/kubernetes/cert/ca.pem --cert-file=/etc/etcd/cert/etcd.pem --key-file=/etc/etcd/cert/etcd-key.pem mkdir test
etcdctl --ca-file=/etc/kubernetes/cert/ca.pem --cert-file=/etc/etcd/cert/etcd.pem --key-file=/etc/etcd/cert/etcd-key.pem mkdir ls
etcdctl --ca-file=/etc/kubernetes/cert/ca.pem --cert-file=/etc/etcd/cert/etcd.pem --key-file=/etc/etcd/cert/etcd-key.pem ls
/ls
/test
# On the other nodes, the data has been replicated
```

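Deploying the flannel network

Kubernetes requires every node in the cluster, including the master, to reach every other node over the Pod network. flannel uses VXLAN to create an interconnected Pod network across the nodes, on UDP port 8472. This port must be opened, for example on public clouds such as AWS.

When flanneld starts for the first time, it reads the configured Pod network from etcd and allocates an unused subnet to the node. It then creates the flannel.1 network interface (which may have another name, such as flannel1).

flannel writes the Pod subnet allocated to the node into the /run/flannel/docker file. docker later uses the environment variables in this file to configure the docker0 bridge, and so assigns IPs from this subnet to all Pod containers on the node.

Download and distribute flannel

Download the binary package

```bash
cd /opt/k8s/work
mkdir flannel
wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz
tar -xzvf flannel-v0.11.0-linux-amd64.tar.gz -C flannel
```

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  scp flannel/{flanneld,mk-docker-opts.sh} root@${node_ip}:/opt/k8s/bin/
  ssh root@${node_ip} "chmod +x /opt/k8s/bin/*"
done
chmod +x /opt/k8s/bin/*
```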

Create the flannel certificate and private key

flanneld reads and writes subnet allocation data in the etcd cluster. etcd has mutual x509 certificate authentication enabled, so flanneld needs its own certificate and private key.

```bash
cd /opt/k8s/work
cat > flanneld-csr.json <<EOF
{
  "CN": "flanneld",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "4Paradigm"
    }
  ]
}
EOF
```

- This certificate is only used by flanneld as a client certificate, so the hosts field is empty.

```bash
cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld
ll flanneld*pem
```

```bash
mkdir -p /etc/flanneld/cert
# Distribute the flanneld certificate and private key to /etc/flanneld/cert on all three nodes
chmod +x *
```

Write the Pod network configuration into etcd (only needs to be run on the master)

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
etcdctl \
  --endpoints=${ETCD_ENDPOINTS} \
  --ca-file=/opt/k8s/work/ca.pem \
  --cert-file=/opt/k8s/work/flanneld.pem \
  --key-file=/opt/k8s/work/flanneld-key.pem \
  mk ${FLANNEL_ETCD_PREFIX}/config '{"Network":"'${CLUSTER_CIDR}'", "SubnetLen": 21, "Backend": {"Type": "vxlan"}}'
# Output:
{"Network":"172.30.0.0/16", "SubnetLen": 21, "Backend": {"Type": "vxlan"}}
```

- The current flanneld release (v0.11.0) does not support the etcd v3 API, so the config key and network data are written with the etcd v2 API;
- The prefix length of the Pod CIDR ${CLUSTER_CIDR} (e.g. /16) must be smaller than SubnetLen, and the CIDR must match the value of kube-controller-manager's --cluster-cidr flag.
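With Network 172.30.0.0/16 and SubnetLen 21, flannel carves the Pod network into /21 blocks, one per node.

The bash sketch below checks that arithmetic for other values. flannel_blocks is a hypothetical helper, and it computes only the raw split; flannel's own SubnetMin/SubnetMax defaults may leave some blocks unused.

```bash
#!/usr/bin/env bash
# Sketch: how many per-node blocks of size /SubnetLen fit into a Pod CIDR,
# and how many addresses each block holds. Raw arithmetic only.
flannel_blocks() {
  local cidr=$1 subnet_len=$2
  local prefix=${cidr#*/}
  if [ "$prefix" -ge "$subnet_len" ]; then
    echo "prefix /$prefix must be smaller than SubnetLen $subnet_len" >&2
    return 1
  fi
  echo "blocks=$(( 1 << (subnet_len - prefix) )) addresses_per_block=$(( 1 << (32 - subnet_len) ))"
}

flannel_blocks 172.30.0.0/16 21   # blocks=32 addresses_per_block=2048
```

The 32 blocks leave plenty of headroom for a three-node lab. A smaller SubnetLen gives each node more Pod addresses but fewer nodes in total.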

Create the flannel systemd unit file

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > flanneld.service << EOF
[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
After=network-online.target
Wants=network-online.target
After=etcd.service
Before=docker.service

[Service]
Type=notify
ExecStart=/opt/k8s/bin/flanneld \\
  -etcd-cafile=/etc/kubernetes/cert/ca.pem \\
  -etcd-certfile=/etc/flanneld/cert/flanneld.pem \\
  -etcd-keyfile=/etc/flanneld/cert/flanneld-key.pem \\
  -etcd-endpoints=${ETCD_ENDPOINTS} \\
  -etcd-prefix=${FLANNEL_ETCD_PREFIX} \\
  -iface=${IFACE} \\
  -ip-masq
ExecStartPost=/opt/k8s/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
Restart=always
RestartSec=5
StartLimitInterval=0

[Install]
WantedBy=multi-user.target
RequiredBy=docker.service
EOF
```

- mk-docker-opts.sh writes the Pod subnet allocated to flanneld into /run/flannel/docker; when docker starts later, it uses the environment variables in this file to configure the docker0 bridge;
- flanneld talks to other nodes over the interface that holds the system default route; on nodes with several interfaces (for example internal and public), use the -iface flag to choose the interface;
- flanneld needs root privileges to run;
- -ip-masq: flanneld sets up SNAT rules for traffic leaving the Pod network. It also sets the --ip-masq variable passed to Docker (in /run/flannel/docker) to false, so that Docker no longer creates its own SNAT rules. When Docker's --ip-masq is true, its SNAT rule is rather heavy-handed: it SNATs every request from this node's Pods to anything other than the docker0 interface. Requests to Pods on other nodes then carry the flannel.1 interface IP as their source, and the destination Pod cannot see the real source Pod IP. The SNAT rules flanneld creates are gentler and only apply to requests whose destination is outside the Pod network.

Distribute the flannel systemd unit file

```bash
# Copy flanneld.service to every node
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  scp flanneld.service root@${node_ip}:/etc/systemd/system/
done
```

Start flannel (on all three nodes)

```bash
systemctl daemon-reload
systemctl enable flanneld
systemctl restart flanneld
systemctl status flanneld
```

```
INFRA [root@wqdcsrv034 system]# systemctl restart flanneld
INFRA [root@wqdcsrv034 system]# systemctl status flanneld
● flanneld.service - Flanneld overlay address etcd agent
   Loaded: loaded (/etc/systemd/system/flanneld.service; enabled; vendor preset: disabled)
   Active: active (running) since Fri 2019-08-02 10:42:37 CST; 21s ago
  Process: 10399 ExecStartPost=/opt/k8s/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker (code=exited, status=0/SUCCESS)
 Main PID: 10371 (flanneld)
   Memory: 11.4M
   CGroup: /system.slice/flanneld.service
           └─10371 /opt/k8s/bin/flanneld -etcd-cafile=/etc/kubernetes/cert/ca.pem -etcd-certfile=/etc/flanneld/cer...

Aug 02 10:42:37 wqdcsrv034.cn.infra flanneld[10371]: I0802 10:42:37.274645   10371 iptables.go:155] Adding ipt...TURN
Aug 02 10:42:37 wqdcsrv034.cn.infra flanneld[10371]: I0802 10:42:37.276682   10371 iptables.go:155] Adding ipt...RADE
Aug 02 10:42:37 wqdcsrv034.cn.infra systemd[1]: Started Flanneld overlay address etcd agent.
Hint: Some lines were ellipsized, use -l to show in full.
```

Check the allocated subnets

```bash
source /opt/k8s/bin/environment.sh
etcdctl \
  --endpoints=${ETCD_ENDPOINTS} \
  --ca-file=/etc/kubernetes/cert/ca.pem \
  --cert-file=/etc/flanneld/cert/flanneld.pem \
  --key-file=/etc/flanneld/cert/flanneld-key.pem \
  get ${FLANNEL_ETCD_PREFIX}/config
# Output:
{"Network":"172.30.0.0/16", "SubnetLen": 21, "Backend": {"Type": "vxlan"}}
```

```bash
etcdctl \
  --endpoints=${ETCD_ENDPOINTS} \
  --ca-file=/etc/kubernetes/cert/ca.pem \
  --cert-file=/etc/flanneld/cert/flanneld.pem \
  --key-file=/etc/flanneld/cert/flanneld-key.pem \
  ls ${FLANNEL_ETCD_PREFIX}/subnets
# Output:
/kubernetes/network/subnets/172.30.120.0-21
/kubernetes/network/subnets/172.30.136.0-21
/kubernetes/network/subnets/172.30.176.0-21
```

```bash
etcdctl \
  --endpoints=${ETCD_ENDPOINTS} \
  --ca-file=/etc/kubernetes/cert/ca.pem \
  --cert-file=/etc/flanneld/cert/flanneld.pem \
  --key-file=/etc/flanneld/cert/flanneld-key.pem \
  get ${FLANNEL_ETCD_PREFIX}/subnets/172.30.120.0-21
# Output:
{"PublicIP":"10.67.194.43","BackendType":"vxlan","BackendData":{"VtepMAC":"e6:7f:4b:16:c5:04"}}
```

- 172.30.120.0/21 is allocated to node master.kube (10.67.194.43);
- VtepMAC is the MAC address of the flannel.1 interface on master.kube.
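Each key under ${FLANNEL_ETCD_PREFIX}/subnets encodes a node's subnet as <network>-<prefix>. As the ip addr output in the next step shows, the node's flannel.1 interface takes that subnet's network address.

The bash sketch below converts the keys, so the targets of the ping check in the next section could be derived from etcd instead of typed by hand. subnet_key_to_cidr and subnet_keys_to_gateways are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: /kubernetes/network/subnets/172.30.120.0-21 -> 172.30.120.0/21
subnet_key_to_cidr() {
  local base=${1##*/}                          # 172.30.120.0-21
  printf '%s/%s\n' "${base%-*}" "${base##*-}"
}

# Reads subnet keys on stdin and prints each subnet's network address,
# i.e. the flannel.1 address of the node that owns the subnet.
subnet_keys_to_gateways() {
  local key base
  while read -r key; do
    [ -n "$key" ] || continue
    base=${key##*/}
    printf '%s\n' "${base%-*}"
  done
}

# Usage:
# etcdctl ... ls ${FLANNEL_ETCD_PREFIX}/subnets | subnet_keys_to_gateways
```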

Check flannel's state on a node

```
ip addr show
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:50:56:ae:2d:cf brd ff:ff:ff:ff:ff:ff
    inet 10.67.194.42/20 brd 10.67.207.255 scope global eth0
       valid_lft forever preferred_lft forever
21: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
    link/ether 5a:c5:72:1c:f7:89 brd ff:ff:ff:ff:ff:ff
    inet 172.30.136.0/32 scope global flannel.1
       valid_lft forever preferred_lft forever
```

- The flannel.1 interface address is the first IP (.0) of the allocated Pod subnet, with a /32 prefix.

```
ip route show | grep flannel.1
172.30.120.0/21 via 172.30.120.0 dev flannel.1 onlink
172.30.176.0/21 via 172.30.176.0 dev flannel.1 onlink
```

- Requests to other nodes' Pod subnets are all forwarded to the flannel.1 interface;
- flanneld uses the subnet entries in etcd, such as ${FLANNEL_ETCD_PREFIX}/subnets/172.30.120.0-21, to decide which node's interconnect IP each request is sent to.

Verify that nodes can communicate over the Pod network

After deploying flannel on every node, check that a flannel interface was created (it may be named flannel0, flannel.0, flannel.1, etc.):

```bash
source /opt/k8s/bin/environment.sh
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  ssh ${node_ip} "/usr/sbin/ip addr show flannel.1 | grep -w inet"
done
# Output:
>>> 10.67.194.43
The authenticity of host '10.67.194.43 (10.67.194.43)' can't be established.
ECDSA key fingerprint is SHA256:RYPS0lG5lc7p1joMPJI9rRrxPBoHWtrWIFBHsCY+1w
ECDSA key fingerprint is MD5:54:de:f4:1b:53:95:ad:d8:ab:50:ad:9e:4a:2f:03:
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '10.67.194.43' (ECDSA) to the list of known hosts.
root@10.67.194.43's password:
```

```bash
for node_ip in ${NODE_IPS[@]}
do
  echo ">>> ${node_ip}"
  ssh ${node_ip} "ping -c 1 172.30.120.0"
  ssh ${node_ip} "ping -c 1 172.30.136.0"
  ssh ${node_ip} "ping -c 1 172.30.176.0"
done
```

Deploying the master node

The Kubernetes master node runs the following components:

kube-apiserver

kube-scheduler

kube-controller-manager

The kube-apiserver service

The steps are the same as for etcd:

Copy the kube-apiserver, kube-controller-manager and kube-scheduler binaries to /opt/k8s/bin/

Create the working directory

Create the systemd unit file

Download the binaries

```bash
wget https://dl.k8s.io/v1.15.1/kubernetes-server-linux-amd64.tar.gz
ll
total 535
-rw-r--r-- 1 root root 443761836 Jul 30 10:43 kubernetes-server-linux-amd64.tar.gz
tar -zxvf kubernetes-server-linux-amd64.tar.gz -C /opt/k8s/work
cd /opt/k8s/work/kubernetes/
ll
total 27179
drwxr-xr-x 2 root root     1024 Aug  2 11:22 addons
drwxr-xr-x 3 root root     1024 Jul 18 17:53 client
-rw-r--r-- 1 root root 26620736 Aug  2 11:22 kubernetes-src.tar.gz
-rw-r--r-- 1 root root  1205293 Aug  2 11:22 LICENSES
drwxr-xr-x 3 root root     1024 Aug  2 11:22 server
tar -xzvf kubernetes-src.tar.gz
cd /opt/k8s/work
cp kubernetes/server/bin/{apiextensions-apiserver,cloud-controller-manager,kube-apiserver,kube-controller-manager,kube-proxy,kube-scheduler,kubeadm,kubectl,kubelet,mounter} /opt/k8s/bin/
chmod +x /opt/k8s/bin/*
```

Create the certificate signing request

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kubernetes-csr.json <<EOF
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "10.67.194.43",
    "10.67.194.42",
    "10.67.194.41",
    "${CLUSTER_KUBERNETES_SVC_IP}",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local."
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "4Paradigm"
    }
  ]
}
EOF
```

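The hosts field lists the IPs and domain names authorized to use this certificate. Here it includes the node IPs plus the IP and domain names of the kubernetes Service.

The kubernetes Service IP is created automatically by the apiserver. It is usually the first IP of the range given by the --service-cluster-ip-range flag.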
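Both CLUSTER_KUBERNETES_SVC_IP (10.254.0.1) and CLUSTER_DNS_SVC_IP (10.254.0.2) in environment.sh follow from SERVICE_CIDR.

The bash sketch below derives the Nth address of a CIDR, which helps double-check those values if you pick a different service range. nth_ip_in_cidr is a hypothetical helper, and it does not check that N stays inside the range.

```bash
#!/usr/bin/env bash
# Sketch: print the Nth address after the network address of an IPv4 CIDR.
# nth_ip_in_cidr is a hypothetical helper; no range checking on N.
nth_ip_in_cidr() {
  local cidr=$1 n=$2
  local ip=${cidr%/*} prefix=${cidr#*/}
  local a b c d
  IFS=. read -r a b c d <<< "$ip"
  # Pack the address into one integer and clear the host bits
  local addr=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  addr=$(( (addr & mask) + n ))
  echo "$(( (addr >> 24) & 255 )).$(( (addr >> 16) & 255 )).$(( (addr >> 8) & 255 )).$(( addr & 255 ))"
}

nth_ip_in_cidr 10.254.0.0/16 1   # 10.254.0.1, the kubernetes Service IP
nth_ip_in_cidr 10.254.0.0/16 2   # 10.254.0.2, the cluster DNS Service IP
```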

Generate the certificate and private key

```bash
cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem \
  -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes
ls kubernetes*pem
```

Copy the generated certificate and private key into place

```bash
mkdir -p /etc/kubernetes/cert
cp kubernetes*.pem /etc/kubernetes/cert
```

Create the encryption configuration file

```bash
cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > encryption-config.yaml <<EOF
kind: EncryptionConfig
apiVersion: v1
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: ${ENCRYPTION_KEY}
      - identity: {}
EOF
```

Copy it to the /etc/kubernetes directory:

```bash
cp encryption-config.yaml /etc/kubernetes/
```
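environment.sh computes ENCRYPTION_KEY with $(head -c 32 /dev/urandom | base64), so every source of the file produces a new random key. That is harmless as long as encryption-config.yaml is generated once and then only copied. Regenerating the file later, for example when re-running this step or adding another master, would produce a key that cannot decrypt Secrets already written.

One way to guard against this is sketched below in bash with coreutils base64: keep the key in a file and check that it decodes to 32 bytes. The key-file path and both helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: create the encryption key once and reuse it on later runs.
# load_or_create_key and key_bytes are hypothetical helpers.
load_or_create_key() {
  local key_file=$1
  if [ ! -s "$key_file" ]; then
    # umask in a subshell so the key file is readable by its owner only
    ( umask 077; head -c 32 /dev/urandom | base64 > "$key_file" )
  fi
  cat "$key_file"
}

# Number of raw bytes the base64 key decodes to (32, as generated above)
key_bytes() {
  printf '%s' "$1" | base64 -d | wc -c
}

# Usage (hypothetical path):
# export ENCRYPTION_KEY=$(load_or_create_key /opt/k8s/work/encryption.key)
# [ "$(key_bytes "$ENCRYPTION_KEY")" -eq 32 ] || echo "unexpected key size" >&2
```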

创建审计策略文件

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > audit-policy.yaml <<EOF
apiVersion: audit.k8s.io/v1beta1
kind: Policy
rules:
  # The following requests were manually identified as high-volume and low-risk, so drop them.
  - level: None
    resources:
      - group: ""
        resources:
          - endpoints
          - services
          - services/status
    users:
      - 'system:kube-proxy'
    verbs:
      - watch

  - level: None
    resources:
      - group: ""
        resources:
          - nodes
          - nodes/status
    userGroups:
      - 'system:nodes'
    verbs:
      - get

  - level: None
    namespaces:
      - kube-system
    resources:
      - group: ""
        resources:
          - endpoints
    users:
      - 'system:kube-controller-manager'
      - 'system:kube-scheduler'
      - 'system:serviceaccount:kube-system:endpoint-controller'
    verbs:
      - get
      - update

  - level: None
    resources:
      - group: ""
        resources:
          - namespaces
          - namespaces/status
          - namespaces/finalize
    users:
      - 'system:apiserver'
    verbs:
      - get

  # Don't log HPA fetching metrics.
  - level: None
    resources:
      - group: metrics.k8s.io
    users:
      - 'system:kube-controller-manager'
    verbs:
      - get
      - list

  # Don't log these read-only URLs.
  - level: None
    nonResourceURLs:
      - '/healthz*'
      - /version
      - '/swagger*'

  # Don't log events requests.
  - level: None
    resources:
      - group: ""
        resources:
          - events

  # node and pod status calls from nodes are high-volume and can be large, don't log responses for expected updates from nodes
  - level: Request
    omitStages:
      - RequestReceived
    resources:
      - group: ""
        resources:
          - nodes/status
          - pods/status
    users:
      - kubelet
      - 'system:node-problem-detector'
      - 'system:serviceaccount:kube-system:node-problem-detector'
    verbs:
      - update
      - patch

  - level: Request
    omitStages:
      - RequestReceived
    resources:
      - group: ""
        resources:
          - nodes/status
          - pods/status
    userGroups:
      - 'system:nodes'
    verbs:
      - update
      - patch

  # deletecollection calls can be large, don't log responses for expected namespace deletions
  - level: Request
    omitStages:
      - RequestReceived
    users:
      - 'system:serviceaccount:kube-system:namespace-controller'
    verbs:
      - deletecollection

  # Secrets, ConfigMaps, and TokenReviews can contain sensitive & binary data,
  # so only log at the Metadata level.
  - level: Metadata
    omitStages:
      - RequestReceived
    resources:
      - group: ""
        resources:
          - secrets
          - configmaps
      - group: authentication.k8s.io
        resources:
          - tokenreviews

  # Get responses can be large; skip them.
  - level: Request
    omitStages:
      - RequestReceived
    resources:
      - group: ""
      - group: admissionregistration.k8s.io
      - group: apiextensions.k8s.io
      - group: apiregistration.k8s.io
      - group: apps
      - group: authentication.k8s.io
      - group: authorization.k8s.io
      - group: autoscaling
      - group: batch
      - group: certificates.k8s.io
      - group: extensions
      - group: metrics.k8s.io
      - group: networking.k8s.io
      - group: policy
      - group: rbac.authorization.k8s.io
      - group: scheduling.k8s.io
      - group: settings.k8s.io
      - group: storage.k8s.io
    verbs:
      - get
      - list
      - watch

  # Default level for known APIs
  - level: RequestResponse
    omitStages:
      - RequestReceived
    resources:
      - group: ""
      - group: admissionregistration.k8s.io
      - group: apiextensions.k8s.io
      - group: apiregistration.k8s.io
      - group: apps
      - group: authentication.k8s.io
      - group: authorization.k8s.io
      - group: autoscaling
      - group: batch
      - group: certificates.k8s.io
      - group: extensions
      - group: metrics.k8s.io
      - group: networking.k8s.io
      - group: policy
      - group: rbac.authorization.k8s.io
      - group: scheduling.k8s.io
      - group: settings.k8s.io
      - group: storage.k8s.io

  # Default level for all other requests.
  - level: Metadata
    omitStages:
      - RequestReceived
EOF
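kube-apiserver checks the rules of an audit Policy from top to bottom, and the first rule that matches a request decides its audit level. That is why the narrow "None" rules come first and the catch-all "Metadata" rule comes last. The sketch below models that first-match logic in bash for a handful of the rules above. audit_level is a hypothetical helper, not part of Kubernetes, and it matches only on user, verb and resource (API groups, namespaces and userGroups are ignored), so treat it as an illustration rather than a real policy evaluator.

```bash
#!/usr/bin/env bash
# Hypothetical, simplified model of audit-level selection: rules are tried
# top to bottom and the FIRST match wins. Only a few rules from
# audit-policy.yaml are modelled, matching on user, verb and resource only.
audit_level() {
  local user="$1" verb="$2" resource="$3"

  # High-volume, low-risk: kube-proxy watching endpoints/services -> None
  if [[ "$user" == "system:kube-proxy" && "$verb" == "watch" && "$resource" =~ ^(endpoints|services|services/status)$ ]]; then
    echo None; return
  fi

  # "Don't log events requests" -> None (any user, any verb)
  if [[ "$resource" == "events" ]]; then
    echo None; return
  fi

  # Secrets, ConfigMaps, TokenReviews -> Metadata only (no request/response bodies)
  if [[ "$resource" =~ ^(secrets|configmaps|tokenreviews)$ ]]; then
    echo Metadata; return
  fi

  # Read-only verbs on known APIs -> Request (large responses are skipped)
  if [[ "$verb" =~ ^(get|list|watch)$ ]]; then
    echo Request; return
  fi

  # Default level for known APIs
  echo RequestResponse
}
```

For example, audit_level admin get secrets prints Metadata even though "get" would also match the later Request rule: the secrets rule is reached first, so it wins.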

Copy the audit policy file into place

cp audit-policy.yaml /etc/kubernetes/audit-policy.yaml

Create the certificate used later to access metrics-server

Create the certificate signing request

cat > proxy-client-csr.json <<EOF
{
  "CN": "aggregator",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "4Paradigm"
    }
  ]
}
EOF

The CN must be listed in kube-apiserver's --requestheader-allowed-names flag; otherwise, later requests for metrics will fail with a permission error.

Generate the private key and certificate

cfssl gencert -ca=/etc/kubernetes/cert/ca.pem \
  -ca-key=/etc/kubernetes/cert/ca-key.pem \
  -config=/etc/kubernetes/cert/ca-config.json \
  -profile=kubernetes proxy-client-csr.json | cfssljson -bare proxy-client
ls proxy-client*.pem
proxy-client-key.pem  proxy-client.pem

Copy the private key and certificate

cp proxy-client*.pem /etc/kubernetes/cert/

Create the systemd unit template for kube-apiserver.service

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
cat > kube-apiserver.service.template <<EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=${K8S_DIR}/kube-apiserver
ExecStart=/opt/k8s/bin/kube-apiserver \\
  --advertise-address=##NODE_IP## \\
  --default-not-ready-toleration-seconds=360 \\
  --default-unreachable-toleration-seconds=360 \\
  --feature-gates=DynamicAuditing=true \\
  --max-mutating-requests-inflight=2000 \\
  --max-requests-inflight=4000 \\
  --default-watch-cache-size=200 \\
  --delete-collection-workers=2 \\
  --encryption-provider-config=/etc/kubernetes/encryption-config.yaml \\
  --etcd-cafile=/etc/kubernetes/cert/ca.pem \\
  --etcd-certfile=/etc/kubernetes/cert/kubernetes.pem \\
  --etcd-keyfile=/etc/kubernetes/cert/kubernetes-key.pem \\
  --etcd-servers=${ETCD_ENDPOINTS} \\
  --bind-address=##NODE_IP## \\
  --secure-port=6443 \\
  --tls-cert-file=/etc/kubernetes/cert/kubernetes.pem \\
  --tls-private-key-file=/etc/kubernetes/cert/kubernetes-key.pem \\
  --insecure-port=0 \\
  --audit-dynamic-configuration \\
  --audit-log-maxage=15 \\
  --audit-log-maxbackup=3 \\
  --audit-log-maxsize=100 \\
  --audit-log-truncate-enabled \\
  --audit-log-path=${K8S_DIR}/kube-apiserver/audit.log \\
  --audit-policy-file=/etc/kubernetes/audit-policy.yaml \\
  --profiling \\
  --anonymous-auth=false \\
  --client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --enable-bootstrap-token-auth \\
  --requestheader-allowed-names="aggregator" \\
  --requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \\
  --requestheader-extra-headers-prefix="X-Remote-Extra-" \\
  --requestheader-group-headers=X-Remote-Group \\
  --requestheader-username-headers=X-Remote-User \\
  --service-account-key-file=/etc/kubernetes/cert/ca.pem \\
  --authorization-mode=Node,RBAC \\
  --runtime-config=api/all=true \\
  --enable-admission-plugins=NodeRestriction \\
  --enable-aggregator-routing=true \\
  --allow-privileged=true \\
  --apiserver-count=3 \\
  --event-ttl=168h \\
  --kubelet-certificate-authority=/etc/kubernetes/cert/ca.pem \\
  --kubelet-client-certificate=/etc/kubernetes/cert/kubernetes.pem \\
  --kubelet-client-key=/etc/kubernetes/cert/kubernetes-key.pem \\
  --kubelet-https=true \\
  --kubelet-timeout=10s \\
  --proxy-client-cert-file=/etc/kubernetes/cert/proxy-client.pem \\
  --proxy-client-key-file=/etc/kubernetes/cert/proxy-client-key.pem \\
  --service-cluster-ip-range=${SERVICE_CIDR} \\
  --service-node-port-range=${NODE_PORT_RANGE} \\
  --logtostderr=true \\
  --v=2
Restart=on-failure
RestartSec=10
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
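The --audit-log-* flags in this template bound how much disk the audit log can use: --audit-log-maxsize is the size in megabytes at which the log is rotated, --audit-log-maxbackup is how many rotated files are kept, and --audit-log-maxage (15 days here) removes older ones. A rough worst case is therefore (3 + 1) * 100 MB = 400 MB under ${K8S_DIR}/kube-apiserver. The sketch below computes the same bound from a unit file or template; flag_value and audit_disk_budget_mb are hypothetical helpers, and the bound assumes the active file is rotated exactly at maxsize.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the integer value of --FLAG=N (first occurrence)
# from a unit file or template.
flag_value() {
  local file="$1" flag="$2"
  sed -n -E "s/.*--${flag}=([0-9]+).*/\1/p" "$file" | head -n 1
}

# Rough worst-case audit log disk usage in MB: the active log plus
# --audit-log-maxbackup rotated files, each up to --audit-log-maxsize MB.
# --audit-log-maxage may delete backups earlier; it is ignored here.
audit_disk_budget_mb() {
  local file="$1" size backups
  size=$(flag_value "$file" audit-log-maxsize)
  backups=$(flag_value "$file" audit-log-maxbackup)
  echo $(( (backups + 1) * size ))
}
```

Usage: audit_disk_budget_mb kube-apiserver.service.template, which for the values above should print 400.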

The startup flags are explained below:

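- --advertise-address: the IP the apiserver advertises to the cluster (the backend node IP of the kubernetes Service).
- --default-*-toleration-seconds: the default tolerationSeconds for the not-ready and unreachable taints, i.e. how long pods stay on a failed node before being evicted.
- --max-*-requests-inflight: upper limits on the number of requests processed concurrently.
- --etcd-*: the certificates used to access etcd and the etcd server addresses.
- --encryption-provider-config: the configuration used to encrypt secrets stored in etcd.
- --bind-address: the IP on which HTTPS is served. It must not be 127.0.0.1, otherwise the secure port 6443 cannot be reached from outside.
- --secure-port: the HTTPS listening port.
- --insecure-port=0: disables the insecure HTTP port (8080).
- --tls-*-file: the certificate and private key the apiserver serves with.
- --audit-*: the audit policy and the audit log file settings.
- --client-ca-file: the CA used to verify the certificates presented by clients (kube-controller-manager, kube-scheduler, kubelet, kube-proxy, etc.).
- --enable-bootstrap-token-auth: enables token authentication for kubelet bootstrap.
- --requestheader-*: settings for the kube-apiserver aggregator layer, needed by proxy-client and HPA.
- --requestheader-client-ca-file: the CA that signed the certificate given by --proxy-client-cert-file and --proxy-client-key-file; used when the metrics aggregator is enabled.
- --requestheader-allowed-names: must not be empty; a comma-separated list of the CNs allowed for the --proxy-client-cert-file certificate, set to "aggregator" here.
- --service-account-key-file: the public key used to verify ServiceAccount tokens. It pairs with the private key that kube-controller-manager signs them with, given by its --service-account-private-key-file flag.
- --runtime-config=api/all=true: enables all API versions, e.g. autoscaling/v2alpha1.
- --authorization-mode=Node,RBAC and --anonymous-auth=false: enable the Node and RBAC authorization modes and reject anonymous and unauthorized requests.
- --enable-admission-plugins: enables admission plugins that are off by default.
- --allow-privileged: allows running containers with privileged permissions.
- --apiserver-count=3: the number of apiserver instances.
- --event-ttl: how long events are retained.
- --kubelet-*: if set, the apiserver accesses the kubelet APIs over HTTPS. RBAC rules must be defined for the user of the client certificate (the user of the kubernetes*.pem certificate above is kubernetes), otherwise calls to the kubelet API are rejected as unauthorized.
- --proxy-client-*: the certificate the apiserver uses to access metrics-server.
- --service-cluster-ip-range: the Service cluster IP range.
- --service-node-port-range: the NodePort port range.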
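The first address of --service-cluster-ip-range is allocated to the kubernetes Service in the default namespace, which is why that address usually has to appear in the hosts list of the apiserver certificate. The actual SERVICE_CIDR comes from environment.sh and is not shown in this section; 10.254.0.0/16 below is only an assumed example. A minimal pure-bash sketch (first_service_ip is a hypothetical helper that expects a valid IPv4 CIDR) that derives that address:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the first usable address of an IPv4 CIDR,
# i.e. network address + 1 (e.g. 10.254.0.0/16 -> 10.254.0.1).
first_service_ip() {
  local cidr="$1" ip prefix a b c d n mask
  ip="${cidr%/*}"
  prefix="${cidr#*/}"
  IFS=. read -r a b c d <<< "$ip"

  # Pack the four octets into one 32-bit integer.
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # Build the netmask from the prefix length.
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  # Network address + 1.
  n=$(( (n & mask) + 1 ))

  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}
```

Usage: first_service_ip "${SERVICE_CIDR}" after sourcing environment.sh.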

If kube-proxy is not running on the kube-apiserver machines, you also need to add the --enable-aggregator-routing=true flag (the template above already sets it).

For more on the --requestheader-* flags, see:

https://github.com/kubernetes-incubator/apiserver-builder/blob/master/docs/concepts/auth.md

https://docs.bitnami.com/kubernetes/how-to/configure-autoscaling-custom-metrics/

Notes:

The CA certificate specified by --requestheader-client-ca-file must be valid for both client auth and server auth.

If --requestheader-allowed-names is empty, or the CN of the --proxy-client-cert-file certificate is not in the allowed names, viewing node or pod metrics later will fail with:

kubectl top nodes
Error from server (Forbidden): nodes.metrics.k8s.io is forbidden: User "aggregator" cannot list resource "nodes" in API group "metrics.k8s.io" at the cluster scope
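Because this misconfiguration only surfaces later, when kubectl top is run, it can be checked up front by comparing the CSR with the unit template. The sketch below is a hypothetical pre-flight check using only sed and bash: csr_cn reads the CN from a cfssl CSR JSON file, allowed_names reads the value of --requestheader-allowed-names from the unit template, and cn_allowed tests membership in the comma-separated list. It assumes the flag is written as --requestheader-allowed-names=value or ="value", as in this article; it does not inspect the signed certificate itself.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; pure text parsing, no cfssl/openssl involved.

# Print the "CN" value of a cfssl CSR JSON file.
csr_cn() {
  sed -n -E 's/.*"CN"[[:space:]]*:[[:space:]]*"([^"]*)".*/\1/p' "$1" | head -n 1
}

# Print the value of --requestheader-allowed-names (surrounding quotes dropped).
allowed_names() {
  sed -n -E 's/.*--requestheader-allowed-names="?([^" ]*).*/\1/p' "$1" | head -n 1
}

# Succeed if CN ($1) appears in the comma-separated list ($2).
# An empty list never matches, consistent with the note above.
cn_allowed() {
  local cn="$1" list="$2" name
  local IFS=','
  for name in $list; do
    [[ "$name" == "$cn" ]] && return 0
  done
  return 1
}
```

Usage: cn_allowed "$(csr_cn proxy-client-csr.json)" "$(allowed_names kube-apiserver.service.template)" && echo "CN allowed"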

Create the kube-apiserver systemd unit files

Substitute the variables in the template to generate a systemd unit file for each node:

cd /opt/k8s/work
source /opt/k8s/bin/environment.sh
for (( i=0; i < 3; i++ ))
do
    sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" kube-apiserver.service.template > kube-apiserver-${NODE_IPS[i]}.service
done
ls kube-apiserver*.service

This generates unit files for three masters; you only need the ones for your own nodes.
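With a hard-coded loop count, a missing NODE_IPS entry silently renders an empty address, and a typo in a sed expression leaves a ##PLACEHOLDER## behind. A minimal sketch of a sanity check for the rendered files; check_rendered is a hypothetical helper that only greps for those two symptoms and does not validate the unit file otherwise.

```bash
#!/usr/bin/env bash
# Hypothetical helper: return non-zero if any rendered unit file still has
# a ##PLACEHOLDER## or an empty --advertise-address/--bind-address value.
check_rendered() {
  local f bad=0
  for f in "$@"; do
    # A leftover ##NAME## means the sed expression did not match.
    if grep -q '##[A-Z_]*##' "$f"; then
      echo "unreplaced placeholder in $f" >&2
      bad=1
    fi
    # "=" followed by whitespace, a backslash or end of line means the
    # corresponding NODE_IPS entry was empty.
    if grep -Eq -- '--(advertise|bind)-address=([[:space:]]|\\|$)' "$f"; then
      echo "empty node IP in $f" >&2
      bad=1
    fi
  done
  return "$bad"
}
```

Usage: check_rendered kube-apiserver-*.service && echo "unit files look complete"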

Copy this node's unit file into place as kube-apiserver.service:

cp kube-apiserver-10.67.194.43.service /etc/systemd/system/kube-apiserver.service

Start kube-apiserver and check its status:

mkdir -p ${K8S_DIR}/kube-apiserver
systemctl daemon-reload
systemctl enable kube-apiserver
systemctl restart kube-apiserver
systemctl status kube-apiserver.service
● kube-apiserver.service - Kubernetes API Server
Loaded: loaded (/etc/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)
Active: active (running) since Fri 2019-08-02 12:17:48 CST; 9s ago
Docs: https://github.com/GoogleCloudPlatform/kubernetes
Main PID: 8742 (kube-apiserver)
Memory: 208.6M
CGroup: /system.slice/kube-apiserver.service
└─8742 /opt/k8s/bin/kube-apiserver --advertise-address=10.67.194.43 --default-not-ready-toleration-seco...

Aug 02 12:17:48 wqdcsrv034.cn.infra kube-apiserver[8742]: [+]poststarthook/generic-apiserver-start-informers ok
Aug 02 12:17:48 wqdcsrv034.cn.infra kube-apiserver[8742]: [+]poststarthook/start-apiextensions-informers ok
Aug 02 12:17:48 wqdcsrv034.cn.infra kube-apiserver[8742]: [+]poststarthook/start-apiextensions-controllers ok
Aug 02 12:17:48 wqdcsrv034.cn.infra kube-apiserver[8742]: [-]poststarthook/crd-informer-synced failed: reason w...eld
Aug 02 12:17:48 wqdcsrv034.cn.infra kube-apiserver[8742]: [+]poststarthook/bootstrap-controller ok
Aug 02 12:17:48 wqdcsrv034.cn.infra kube-apiserver[8742]: [-]poststarthook/rbac/bootstrap-roles failed: reason ...eld
Aug 02 12:17:48 wqdcsrv034.cn.infra kube-apiserver[8742]: [-]poststarthook/scheduling/bootstrap-system-priority...eld
Aug 02 12:17:48 wqdcsrv034.cn.infra kube-apiserver[8742]: [-]poststarthook/ca-registration failed: reason withheld
Aug 02 12:17:48 wqdcsrv034.cn.infra kube-apiserver[8742]: [+]poststarthook/start-kube-apiserver-admission-initi... ok
Aug 02 12:17:48 wqdcsrv034.cn.infra kube-apiserver[8742]: [+]poststarthook/start-kube-aggregator-

Print the keys kube-apiserver has written to etcd

ETCDCTL_API=3 etcdctl \
    --endpoints=${ETCD_ENDPOINTS} \
    --cacert=/opt/k8s/work/ca.pem \
    --cert=/opt/k8s/work/etcd.pem \
    --key=/opt/k8s/work/etcd-key.pem \
    get /registry/ --prefix --keys-only
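The keys-only listing grows long quickly. As a sketch, the hypothetical helper count_registry_keys below reads that listing on stdin and counts keys by the path segment right after /registry/, giving a quick overview of which resource types kube-apiserver has written. etcdctl may print a blank line after each key; the helper skips any line that is not a /registry/ key. The sample keys in the test are illustrative, not output captured from this cluster.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count etcd keys per /registry/<type>/ segment.
# Input: output of "etcdctl get /registry/ --prefix --keys-only" on stdin.
count_registry_keys() {
  # Splitting "/registry/namespaces/default" on "/" gives
  # $1="", $2="registry", $3="namespaces", $4="default".
  awk -F/ 'NF >= 3 && $2 == "registry" { n[$3]++ }
           END { for (t in n) printf "%s %d\n", t, n[t] }' | sort
}
```

Usage: pipe the etcdctl command above into count_registry_keys.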

Check the cluster information

7.0.0.1:8443 was refused - did you specify the right host or port?
INFRA [root@wqdcsrv034 .kube]# netstat -lnpt|grep kube
tcp 0 0 10.67.194.43:6443 0.0.0.0:* LISTEN 30710/kube-apiserve
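The output above shows kubectl being refused on port 8443, while netstat shows kube-apiserver listening only on 10.67.194.43:6443. A small sketch that makes this comparison explicit: the hypothetical helpers below read netstat -lnpt output on stdin, print the addresses kube-apiserver listens on (netstat truncates the process name to kube-apiserve), and test whether a given host:port, for example the server address kubectl is configured to use, is one of them.

```bash
#!/usr/bin/env bash
# Hypothetical helpers working on "netstat -lnpt" output read from stdin.

# Print the local address column ($4) of kube-apiserver's listening sockets.
# Column 7 is "PID/program", with the program name truncated by netstat.
apiserver_listen_addrs() {
  awk '$1 ~ /^tcp/ && $6 == "LISTEN" && $7 ~ /\/kube-apiserve/ { print $4 }'
}

# Succeed if the given host:port exactly matches one of those addresses.
apiserver_listens_on() {
  local want="$1"
  apiserver_listen_addrs | grep -qxF -- "$want"
}
```

Usage: netstat -lnpt | apiserver_listens_on 10.67.194.43:6443 && echo "kube-apiserver is listening there"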

Word limit reached...

To be continued >>>