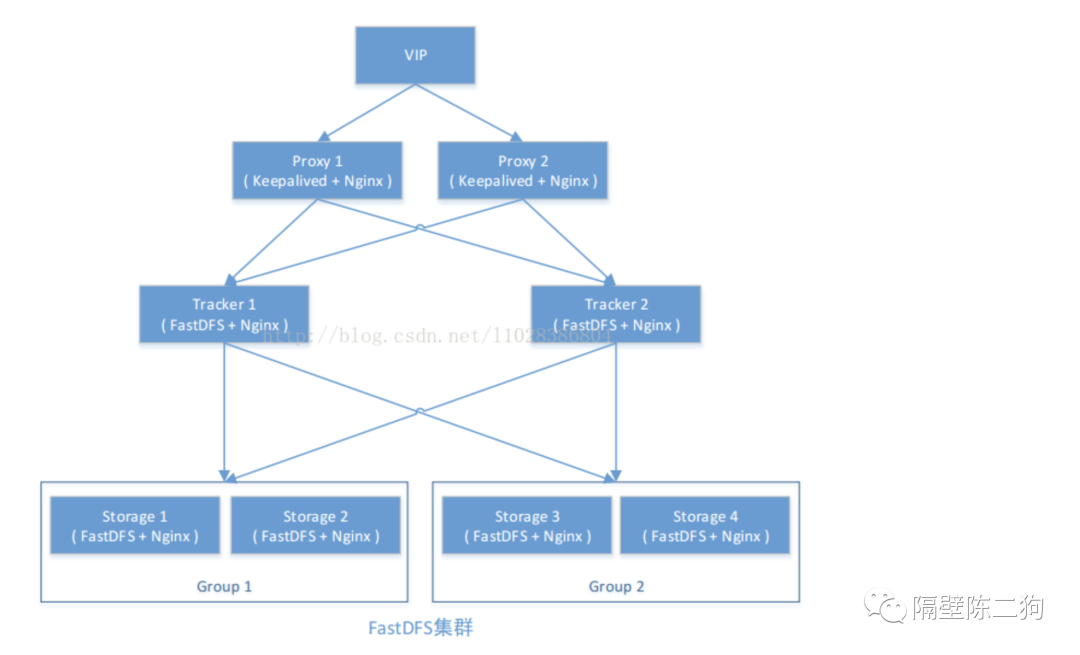

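Cluster architecture diagram

A quick walk through the diagram. At the front is a high-availability load-balancing pair of two nginx + keepalived machines, which balance traffic across the two tracker servers behind them. At the back is a distributed storage pool built from FastDFS storage servers. The trackers keep track of that pool and coordinate it (file scheduling and so on).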

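Environment

- Front end: nginx + keepalived (two machines, one master and one backup: 192.168.3.21/22)
- Tracker servers: nginx + FastDFS tracker (two peer machines: 192.168.3.19/20)
- Storage servers: nginx + FastDFS storage + the FastDFS nginx module (two machines forming two peer groups: 192.168.3.23/24)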

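High-availability load balancing with keepalived + nginx

The front end only does simple load balancing and needs no extra modules, so a plain install is enough. In production, though, it is better to compile and install everything the same way on every machine to keep them consistent.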

1) Install nginx

Not covered here. Search for a guide if you need one.

2) Install keepalived

```bash
cd /usr/local/src && wget http://www.keepalived.org/software/keepalived-1.2.18.tar.gz
tar zxf keepalived-1.2.18.tar.gz && cd keepalived-1.2.18
./configure --prefix=/usr/local/keepalived && make && make install
```

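3) Copy the files to their default locations

```bash
mkdir /etc/keepalived
cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/
cp /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/
cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/
# With --prefix=/usr/local/keepalived the binary is /usr/local/keepalived/sbin/keepalived
# (there is no /usr/local/sbin/keepalived). On systems where /sbin is a symlink to
# /usr/sbin, one link is enough.
ln -s /usr/local/keepalived/sbin/keepalived /sbin/
```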

4) Register keepalived as a system service and enable it at boot

```
# cat /lib/systemd/system/keepalived.service
[Unit]
Description=Keepalived
After=syslog.target network.target remote-fs.target nss-lookup.target

[Service]
Type=forking
PIDFile=/var/run/keepalived.pid
ExecStart=/usr/local/keepalived/sbin/keepalived -D
ExecReload=/bin/kill -s HUP $MAINPID
ExecStop=/bin/kill -s QUIT $MAINPID
PrivateTmp=true

[Install]
WantedBy=multi-user.target
```

```bash
systemctl daemon-reload
systemctl enable keepalived
systemctl start keepalived
```

Note:

Up to this point, perform exactly the same steps on both machines: install nginx and keepalived, then register the services.

5) Edit the configuration files on the master and the backup

Master (192.168.3.21):

```
# cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    router_id master
}

vrrp_script chk_nginx {
    script "/etc/keepalived/nginx_check.sh"
    interval 2
    weight -20
}

vrrp_instance VI_1 {
    state MASTER
    interface enp0s3
    virtual_router_id 33
    mcast_src_ip 192.168.3.21
    priority 100
    # keepalived only honors nopreempt when the initial state is BACKUP
    nopreempt
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {
        chk_nginx
    }
    virtual_ipaddress {
        192.168.3.100
    }
}
```

Backup (192.168.3.22):

```
# cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    router_id backup
}

vrrp_script chk_nginx {
    script "/etc/keepalived/nginx_check.sh"
    interval 2
    weight -20
}

vrrp_instance VI_1 {
    state BACKUP
    interface enp0s3
    virtual_router_id 33
    mcast_src_ip 192.168.3.22
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {
        chk_nginx
    }
    virtual_ipaddress {
        192.168.3.100
    }
}
```
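With `weight -20`, keepalived lowers a node's priority by 20 while `chk_nginx` is failing. The arithmetic can be sketched in bash as follows. This assumes keepalived's documented rule that a negative weight is subtracted while the script exits non-zero and a positive weight is added while it exits 0. `effective_priority` is a hypothetical helper for illustration, not part of keepalived.

```bash
#!/usr/bin/env bash
# Sketch of how a vrrp_script "weight" changes a node's effective VRRP priority.
# Assumption: negative weight is subtracted while the check script fails,
# positive weight is added while it succeeds (keepalived.conf(5) semantics).

# effective_priority BASE WEIGHT SCRIPT_OK
#   BASE       configured priority (100 on the master, 90 on the backup here)
#   WEIGHT     vrrp_script weight (-20 here)
#   SCRIPT_OK  1 if the check script exits 0, 0 if it exits non-zero
effective_priority() {
  local base=$1 weight=$2 ok=$3
  if (( weight < 0 && ok == 0 )) || (( weight > 0 && ok == 1 )); then
    echo $(( base + weight ))
  else
    echo "$base"
  fi
}

# Scenario: the master's check fails, the backup's check passes
master=$(effective_priority 100 -20 0)
backup=$(effective_priority 90 -20 1)
echo "master=$master backup=$backup"
```

A failing check on the master gives 100 - 20 = 80, which is below the backup's 90, so the backup would take over the VIP. As written, nginx_check.sh normally exits 0 and handles a dead nginx by killing keepalived instead. The weight therefore only comes into play if the script is changed to exit non-zero on failure.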

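6) Write the nginx health-check script

```bash
# cat /etc/keepalived/nginx_check.sh
#!/bin/bash
# Count running nginx processes (--no-header: do not count the ps header line)
A=$(ps -C nginx --no-header | wc -l)
if [ "$A" -eq 0 ]; then
    # nginx is down: try to start it once
    /usr/local/nginx/sbin/nginx
    sleep 2
    # still down: stop keepalived so the VIP moves to the backup
    if [ "$(ps -C nginx --no-header | wc -l)" -eq 0 ]; then
        killall keepalived
    fi
fi
```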

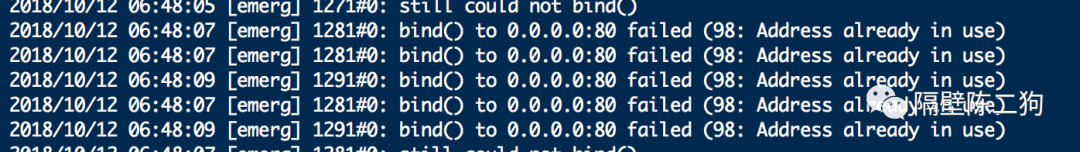

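Note: as originally published, this script had a problem. The option was typed with an en dash (`–no-header`) instead of two ASCII hyphens. ps rejects it and prints nothing on stdout, so the count is always 0 and the script keeps trying to start a second nginx. That start fails with "address already in use", which is the error shown above. Deleting the option makes the error go away, but it also breaks the check: the ps header line is then always counted, so the count never reaches 0. Type the option as `--no-header` with ASCII hyphens, and make the script executable with `chmod +x /etc/keepalived/nginx_check.sh`.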

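7) Testing

The general idea is as follows. First make the two machines distinguishable when accessed, for example by giving them different default pages. Then stop keepalived and nginx on the master and open the VIP in a browser to see whether it still responds. On the backup, check that the VIP has floated over, and read the keepalived VIP state messages in the system log.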
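To see which node holds the VIP during this test, you can look for 192.168.3.100 in the output of `ip -4 addr show dev enp0s3` on each machine. Below is a small bash helper, assuming the interface and VIP from the keepalived configs above. `has_vip` is a hypothetical name used only for illustration.

```bash
#!/usr/bin/env bash
# Sketch: decide whether a node currently holds the keepalived VIP by
# inspecting `ip -4 addr show` output (assumed interface: enp0s3).

# has_vip IP_ADDR_OUTPUT VIP -> exit status 0 if "inet VIP/<prefix>" is present
has_vip() {
  local addr_output=$1 vip=$2
  # -F: match the VIP literally (dots are not regex wildcards);
  # the trailing "/" stops 192.168.3.10 from matching 192.168.3.100
  grep -qF "inet ${vip}/" <<<"$addr_output"
}

# Usage on each node, before and after stopping keepalived on the master:
#   has_vip "$(ip -4 addr show dev enp0s3)" 192.168.3.100 && echo "VIP is on this node"
```

On the node that takes over, `journalctl -u keepalived` (or /var/log/messages) should also show the instance entering MASTER state.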

Set up the FastDFS tracker servers

Omitted.

The nginx on the tracker machines does not need the FastDFS nginx module (fastdfs-ngx-mod).

Set up the FastDFS storage servers

Omitted.

Edit the configuration files

1) Connect the storage servers to the trackers

On the tracker servers, make the following changes:

```
# egrep -v "^#|^$" tracker.conf
disabled=false
bind_addr=
port=22122
connect_timeout=30
network_timeout=60
base_path=/data/tracker
max_connections=256
accept_threads=1
work_threads=4
min_buff_size = 8KB
max_buff_size = 128KB
store_lookup=2
store_group=group2
store_server=0
store_path=0
download_server=0
reserved_storage_space = 10%
log_level=info
run_by_group=
run_by_user=
allow_hosts=*
sync_log_buff_interval = 10
check_active_interval = 120
thread_stack_size = 64KB
storage_ip_changed_auto_adjust = true
storage_sync_file_max_delay = 86400
storage_sync_file_max_time = 300
use_trunk_file = false
slot_min_size = 256
slot_max_size = 16MB
trunk_file_size = 64MB
trunk_create_file_advance = false
trunk_create_file_time_base = 02:00
trunk_create_file_interval = 86400
trunk_create_file_space_threshold = 20G
trunk_init_check_occupying = false
trunk_init_reload_from_binlog = false
trunk_compress_binlog_min_interval = 0
use_storage_id = false
storage_ids_filename = storage_ids.conf
id_type_in_filename = ip
store_slave_file_use_link = false
rotate_error_log = false
error_log_rotate_time=00:00
rotate_error_log_size = 0
log_file_keep_days = 0
use_connection_pool = false
connection_pool_max_idle_time = 3600
http.server_port=8000
http.check_alive_interval=30
http.check_alive_type=tcp
http.check_alive_uri=/status.html
```

```
# egrep -v "^#|^$" client.conf
connect_timeout=30
network_timeout=60
base_path=/data/tracker
tracker_server=192.168.3.20:22122
tracker_server=192.168.3.19:22122
log_level=info
use_connection_pool = false
connection_pool_max_idle_time = 3600
load_fdfs_parameters_from_tracker=false
use_storage_id = false
storage_ids_filename = storage_ids.conf
http.tracker_server_port=8000
```

Edit the nginx configuration so that it reverse-proxies requests to the back-end storage servers:

```nginx
# egrep -v "^#|^$" /usr/local/nginx/conf/nginx.conf
user root;
worker_processes 1;

events {
    worker_connections 1024;
    use epoll;
}

http {
    include mime.types;
    default_type application/octet-stream;
    #log_format main '$remote_addr - $remote_user [$time_local] "$request" '
    #                '$status $body_bytes_sent "$http_referer" '
    #                '"$http_user_agent" "$http_x_forwarded_for"';
    #access_log logs/access.log main;
    sendfile on;
    tcp_nopush on;
    #keepalive_timeout 0;
    keepalive_timeout 65;
    #gzip on;

    # header, request-body and proxy buffer settings
    server_names_hash_bucket_size 128;
    client_header_buffer_size 32k;
    large_client_header_buffers 4 32k;
    client_max_body_size 300m;
    proxy_redirect off;
    proxy_set_header Host $http_host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_connect_timeout 90;
    proxy_send_timeout 90;
    proxy_read_timeout 90;
    proxy_buffer_size 16k;
    proxy_buffers 4 64k;
    proxy_busy_buffers_size 128k;
    proxy_temp_file_write_size 128k;

    # cache path, directory levels, shared-memory zone size, max disk size, expiry of unused entries
    proxy_cache_path /data/cache/nginx/proxy_cache levels=1:2
                     keys_zone=http-cache:200m max_size=1g inactive=30d;
    proxy_temp_path /data/cache/nginx/proxy_cache/tmp;

    # group1 storage servers
    upstream fdfs_group1 {
        #server 10.0.3.89:8888 weight=1 max_fails=2 fail_timeout=30s;
        server 192.168.3.23:8888 weight=1 max_fails=2 fail_timeout=30s;
    }

    # group2 storage servers
    upstream fdfs_group2 {
        #server 10.0.3.88:8888 weight=1 max_fails=2 fail_timeout=30s;
        server 192.168.3.24:8888 weight=1 max_fails=2 fail_timeout=30s;
    }

    server {
        listen 8000;
        server_name localhost;

        # per-group load-balancing settings
        location /group1/M00 {
            proxy_next_upstream http_502 http_504 error timeout invalid_header;
            proxy_cache http-cache;
            proxy_cache_valid 200 304 12h;
            proxy_cache_key $uri$is_args$args;
            proxy_pass http://fdfs_group1;
            expires 30d;
        }

        location /group2/M00 {
            proxy_next_upstream http_502 http_504 error timeout invalid_header;
            proxy_cache http-cache;
            proxy_cache_valid 200 304 12h;
            proxy_cache_key $uri$is_args$args;
            proxy_pass http://fdfs_group2;
            expires 30d;
        }

        #error_page 404 /404.html;
        # redirect server error pages to the static page /50x.html
        #
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }
}
```

Use the same configuration on both tracker servers.
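The routing that the tracker-side nginx performs can be sketched in bash. The two prefix locations pick an upstream by group, and on the storage node `M00` stands for `store_path0` (here /data/storage), under whose `data/` directory the file lives. This is an illustration only. `upstream_for_uri` and `disk_path_for_uri` are hypothetical helpers, and `abc.jpg` in the examples is a made-up file name.

```bash
#!/usr/bin/env bash
# Sketch of how a FastDFS file URI is resolved in this setup (illustration only).
# Mirrors the tracker nginx prefix locations /group1/M00 and /group2/M00, and
# assumes store_path0=/data/storage on the storage side (M00 = store_path0).

# upstream_for_uri URI -> upstream the tracker nginx proxies the request to
upstream_for_uri() {
  case "$1" in
    /group1/M00*) echo fdfs_group1 ;;   # 192.168.3.23:8888
    /group2/M00*) echo fdfs_group2 ;;   # 192.168.3.24:8888
    *)            echo none ;;          # outside both locations
  esac
}

# disk_path_for_uri URI -> where the file is stored on the storage node
disk_path_for_uri() {
  case "$1" in
    /group[0-9]/M00/*) echo "/data/storage/data/${1#/group[0-9]/M00/}" ;;
    *) return 1 ;;
  esac
}

# Example with a hypothetical file ID:
#   upstream_for_uri  /group1/M00/00/00/abc.jpg   # fdfs_group1
#   disk_path_for_uri /group1/M00/00/00/abc.jpg   # /data/storage/data/00/00/abc.jpg
```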

2) Configure the storage servers

```
# egrep -v "^$|^#" storage.conf
disabled=false
group_name=group1
bind_addr=
client_bind=true
port=23000
connect_timeout=30
network_timeout=60
heart_beat_interval=30
stat_report_interval=60
base_path=/data/storage
max_connections=256
buff_size = 256KB
accept_threads=1
work_threads=4
disk_rw_separated = true
disk_reader_threads = 1
disk_writer_threads = 1
sync_wait_msec=50
sync_interval=0
sync_start_time=00:00
sync_end_time=23:59
write_mark_file_freq=500
store_path_count=1
store_path0=/data/storage
subdir_count_per_path=256
tracker_server=192.168.3.19:22122
tracker_server=192.168.3.20:22122
log_level=info
run_by_group=
run_by_user=
allow_hosts=*
file_distribute_path_mode=0
file_distribute_rotate_count=100
fsync_after_written_bytes=0
sync_log_buff_interval=10
sync_binlog_buff_interval=10
sync_stat_file_interval=300
thread_stack_size=512KB
upload_priority=10
if_alias_prefix=
check_file_duplicate=0
file_signature_method=hash
key_namespace=FastDFS
keep_alive=0
use_access_log = false
rotate_access_log = false
access_log_rotate_time=00:00
rotate_error_log = false
error_log_rotate_time=00:00
rotate_access_log_size = 0
rotate_error_log_size = 0
log_file_keep_days = 0
file_sync_skip_invalid_record=false
use_connection_pool = false
connection_pool_max_idle_time = 3600
http.domain_name=
http.server_port=8888
```

```
# egrep -v "^$|^#" mod_fastdfs.conf
connect_timeout=100
network_timeout=300
base_path=/tmp
load_fdfs_parameters_from_tracker=true
storage_sync_file_max_delay = 86400
use_storage_id = false
storage_ids_filename = storage_ids.conf
tracker_server=192.168.3.19:22122
tracker_server=192.168.3.20:22122
storage_server_port=23000
group_name=group1
url_have_group_name = true
store_path_count=1
store_path0=/data/storage
log_level=info
log_filename=
response_mode=proxy
if_alias_prefix=
flv_support = true
flv_extension = flv
group_count = 2

[group1]
group_name=group1
storage_server_port=23000
store_path_count=1
store_path0=/data/storage

[group2]
group_name=group2
storage_server_port=23000
store_path_count=1
store_path0=/data/storage
```
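The same pair of tracker_server entries appears in client.conf, storage.conf and mod_fastdfs.conf, sometimes in a different order. Below is a hedged bash sketch that compares those lists while ignoring order and commented-out lines. `tracker_servers` and `same_trackers` are hypothetical helpers, and the /etc/fdfs paths in the usage comment are assumptions.

```bash
#!/usr/bin/env bash
# Sketch: check that several FastDFS config files list the same tracker_server set.

# tracker_servers FILE -> sorted, de-duplicated tracker_server values
# (lines starting with "#" are ignored; spaces around "=" are allowed)
tracker_servers() {
  grep -E '^[[:space:]]*tracker_server[[:space:]]*=' "$1" \
    | sed -E 's/^[^=]*=[[:space:]]*//; s/[[:space:]]+$//' \
    | sort -u
}

# same_trackers FILE... -> exit 0 if every file lists the same trackers as the first
same_trackers() {
  local first=$1 f
  shift
  for f in "$@"; do
    if [ "$(tracker_servers "$first")" != "$(tracker_servers "$f")" ]; then
      echo "tracker_server mismatch: $first vs $f" >&2
      return 1
    fi
  done
}

# Usage (paths are assumptions):
#   same_trackers /etc/fdfs/client.conf /etc/fdfs/storage.conf /etc/fdfs/mod_fastdfs.conf \
#     && echo "tracker lists are consistent"
```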

Edit the nginx configuration:

```nginx
# egrep -v "^$|^#" /usr/local/nginx/conf/conf.d/storage.conf
server {
    listen 8888;
    server_name localhost;

    location ~ /group([0-9])/M00 {
        #alias /fastdfs/storage/data;
        ngx_fastdfs_module;
    }

    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
        root html;
    }
}
```

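Configure both storage servers the same way, except for the node's own group_name in storage.conf and mod_fastdfs.conf. The tracker's upstream blocks send group1 to 192.168.3.23 and group2 to 192.168.3.24, so 192.168.3.24 should use group_name=group2.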
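On 192.168.3.24, only the global `group_name` line must change. The `group_name` lines inside the `[group1]`/`[group2]` sections of mod_fastdfs.conf must stay as they are, which a blanket `sed 's/^group_name=.*/.../'` would not respect. Below is a hedged bash sketch that edits only the part before the first `[section]`. `set_group_name` is a hypothetical helper, and the /etc/fdfs paths in the usage comment are assumptions.

```bash
#!/usr/bin/env bash
# Sketch: set the node's own group_name in a FastDFS config file, leaving
# group_name lines inside [groupN] sections untouched.
# Assumes no spaces around "=", as in the storage.conf/mod_fastdfs.conf above.

# set_group_name FILE GROUP
set_group_name() {
  local file=$1 group=$2 tmp
  tmp=$(mktemp) || return 1
  awk -v g="$group" '
    /^\[/ { in_section = 1 }                    # first [section] ends the global part
    !in_section && /^group_name=/ { print "group_name=" g; next }
    { print }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  cat "$tmp" > "$file"    # write back in place, keeping the file's owner and mode
  rm -f "$tmp"
}

# Usage on 192.168.3.24 (paths are assumptions):
#   set_group_name /etc/fdfs/storage.conf group2
#   set_group_name /etc/fdfs/mod_fastdfs.conf group2
```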

3) Configure the load balancers

```nginx
# egrep -v "^$|^#" nginx.conf
user root;
worker_processes 1;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    default_type application/octet-stream;
    #log_format main '$remote_addr - $remote_user [$time_local] "$request" '
    #                '$status $body_bytes_sent "$http_referer" '
    #                '"$http_user_agent" "$http_x_forwarded_for"';
    #access_log logs/access.log main;
    sendfile on;
    #tcp_nopush on;
    #keepalive_timeout 0;
    keepalive_timeout 65;
    proxy_read_timeout 150;
    #gzip on;

    ## FastDFS Tracker Proxy
    upstream fastdfs_tracker {
        #server 10.0.3.90:8000 weight=1 max_fails=2 fail_timeout=30s;
        #server 10.0.3.90:8000 weight=1 max_fails=2 fail_timeout=30s;
        server 192.168.3.19:8000 weight=1 max_fails=2 fail_timeout=30s;
        server 192.168.3.20:8000 weight=1 max_fails=2 fail_timeout=30s;
    }

    server {
        listen 80;
        server_name localhost;
        #charset koi8-r;
        #access_log logs/host.access.log main;

        location / {
            root html;
            index index.html index.htm;
        }

        #error_page 404 /404.html;
        # redirect server error pages to the static page /50x.html
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }

        ## FastDFS Proxy
        # Trailing slash on both the location and proxy_pass: /dfs/group1/M00/...
        # is forwarded to the trackers as /group1/M00/... (with "location /dfs"
        # it would be forwarded as //group1/M00/...).
        location /dfs/ {
            root html;
            index index.html index.htm;
            proxy_pass http://fastdfs_tracker/;
            proxy_set_header Host $http_host;
            proxy_set_header Cookie $http_cookie;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
            client_max_body_size 300m;
        }
    }
}
```

All that is left is testing.

1) Test FastDFS first

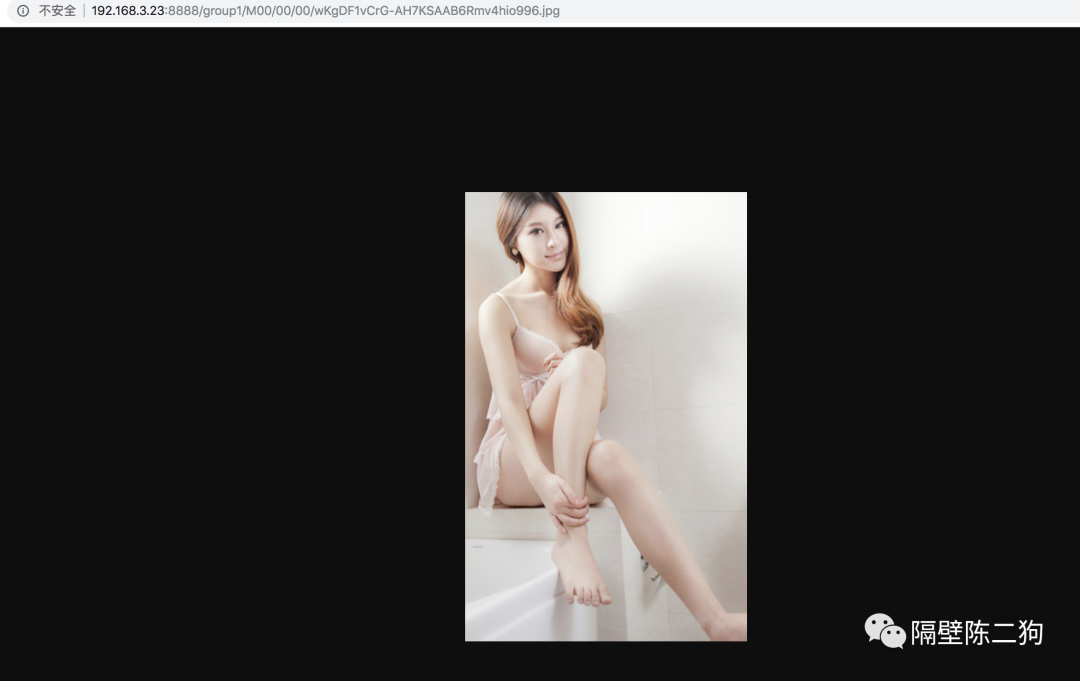

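On a tracker server, upload an image, then access it on the storage server:

```bash
/usr/bin/fdfs_upload_file /etc/fdfs/client.conf image.jpg
```

Then access the same file through a tracker server to check that it reverse-proxies the request to the storage back end.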

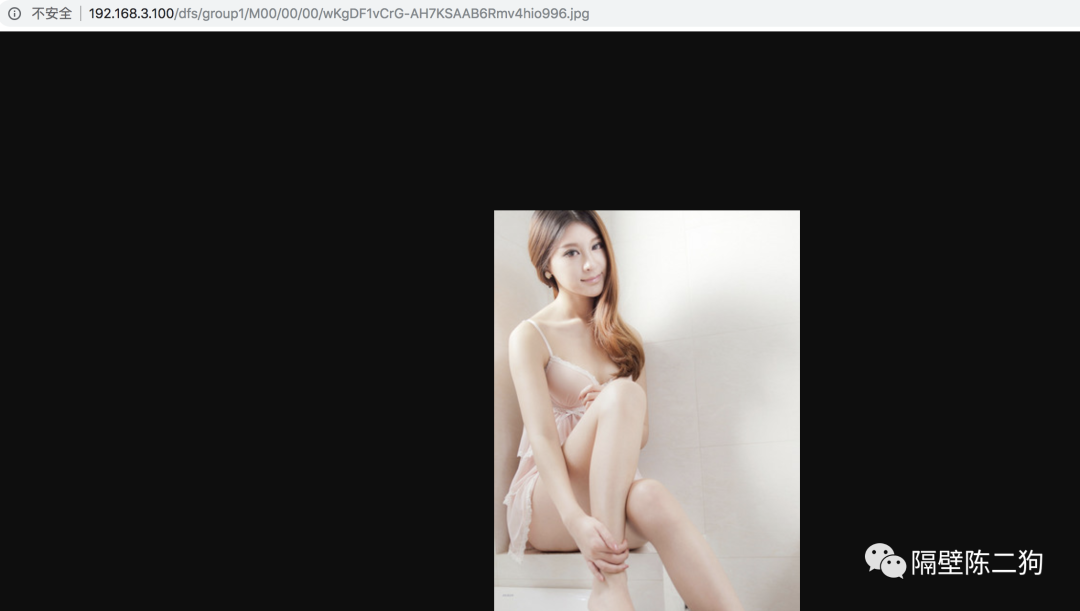

2) Test the load balancers

Access the file through the load balancers' VIP to check that it is reachable.
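All three checks can be run from one place by deriving the URL for each layer from the file ID that `fdfs_upload_file` prints (for example `group1/M00/00/00/...`). Below is a hedged bash sketch using the addresses and ports from the configs in this article. It assumes group1 → 192.168.3.23 and group2 → 192.168.3.24, as in the tracker's upstreams. `fdfs_urls` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: print the URL of one uploaded file at each layer of the cluster.
# Addresses and ports are taken from this article's configs (assumption).

VIP=192.168.3.100      # keepalived VIP; load-balancer nginx on port 80, location /dfs/
TRACKER=192.168.3.19   # either tracker; tracker nginx on port 8000
declare -A STORAGE=([group1]=192.168.3.23 [group2]=192.168.3.24)  # storage nginx on 8888

# fdfs_urls FILE_ID -> "layer url" lines; FILE_ID as printed by fdfs_upload_file
fdfs_urls() {
  local file_id=${1#/}          # tolerate a leading slash
  local group=${file_id%%/*}    # "group1" from "group1/M00/..."
  local host=${STORAGE[$group]:-}
  if [[ -z $host ]]; then
    echo "unknown group: $group" >&2
    return 1
  fi
  echo "storage http://${host}:8888/${file_id}"
  echo "tracker http://${TRACKER}:8000/${file_id}"
  echo "vip http://${VIP}/dfs/${file_id}"
}

# Usage: upload a file, then print the HTTP status code returned by each layer
#   fdfs_urls "$(/usr/bin/fdfs_upload_file /etc/fdfs/client.conf image.jpg)" |
#     while read -r layer url; do
#       curl -s -o /dev/null -w "$layer %{http_code}\n" "$url"
#     done
```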

At this point, a simple, highly available, load-balanced FastDFS cluster is in place. We will do some optimization later.