通过oracle导出csv再在heavydb用copy方式导入csv,注意生成的csv必须是utf8格式,否则中文乱码。

上一篇heavydb的安装文档还是在一年以前的了,参见https://www.modb.pro/db/400781

1.利用sqluldr2从oracle导出csv文件

可参考https://www.modb.pro/db/1696201950291382272

[oracle]$ /home/oracle/sqluldr2 test/test@192.168.207.58:1521/lndb query="select * from addressinfo" head=yes file=addressinfo.csv text=CSV Charset=utf8

0 rows exported at 2023-09-18 12:47:55, size 0 MB.

1000000 rows exported at 2023-09-18 12:48:05, size 388 MB.

2000000 rows exported at 2023-09-18 12:48:16, size 815 MB.

3000000 rows exported at 2023-09-18 12:48:30, size 1239 MB.

4000000 rows exported at 2023-09-18 12:48:43, size 1662 MB.

5000000 rows exported at 2023-09-18 12:48:55, size 2098 MB.

6000000 rows exported at 2023-09-18 12:49:10, size 2554 MB.

7000000 rows exported at 2023-09-18 12:49:27, size 2985 MB.

8000000 rows exported at 2023-09-18 12:49:41, size 3369 MB.

9000000 rows exported at 2023-09-18 12:49:56, size 3773 MB.

10000000 rows exported at 2023-09-18 12:50:10, size 4176 MB.

11000000 rows exported at 2023-09-18 12:50:26, size 4584 MB.

12000000 rows exported at 2023-09-18 12:50:39, size 5003 MB.

13000000 rows exported at 2023-09-18 12:50:50, size 5435 MB.

14000000 rows exported at 2023-09-18 12:51:04, size 5843 MB.

15000000 rows exported at 2023-09-18 12:51:20, size 6282 MB.

16000000 rows exported at 2023-09-18 12:51:33, size 6682 MB.

17000000 rows exported at 2023-09-18 12:51:45, size 7113 MB.

18000000 rows exported at 2023-09-18 12:51:56, size 7553 MB.

19000000 rows exported at 2023-09-18 12:52:08, size 8005 MB.

20000000 rows exported at 2023-09-18 12:52:22, size 8380 MB.

21000000 rows exported at 2023-09-18 12:52:34, size 8784 MB.

22000000 rows exported at 2023-09-18 12:52:48, size 9219 MB.

23000000 rows exported at 2023-09-18 12:52:59, size 9671 MB.

24000000 rows exported at 2023-09-18 12:53:10, size 10107 MB.

25000000 rows exported at 2023-09-18 12:53:25, size 10514 MB.

26000000 rows exported at 2023-09-18 12:53:36, size 10926 MB.

27000000 rows exported at 2023-09-18 12:53:52, size 11349 MB.

28000000 rows exported at 2023-09-18 12:54:07, size 11761 MB.

29000000 rows exported at 2023-09-18 12:54:20, size 12181 MB.

30000000 rows exported at 2023-09-18 12:54:34, size 12612 MB.

30748154 rows exported at 2023-09-18 12:54:42, size 12956 MB.

output file addressinfo.csv closed at 30748154 rows, size 12956 MB.

另外也可以使用存储过程方法,不过速度太慢,无法用工具时可应急:

SQL> CREATE OR REPLACE PROCEDURE SQL_TO_CSV

(

P_QUERY IN VARCHAR2, -- PLSQL文

P_DIR IN VARCHAR2, -- 导出的文件放置目录

P_FILENAME IN VARCHAR2 -- CSV名

)

IS

L_OUTPUT UTL_FILE.FILE_TYPE;

L_THECURSOR INTEGER DEFAULT DBMS_SQL.OPEN_CURSOR;

L_COLUMNVALUE VARCHAR2(4000);

L_STATUS INTEGER;

L_COLCNT NUMBER := 0;

L_SEPARATOR VARCHAR2(1);

L_DESCTBL DBMS_SQL.DESC_TAB;

P_MAX_LINESIZE NUMBER := 32000;

BEGIN

--OPEN FILE

L_OUTPUT := UTL_FILE.FOPEN(P_DIR, P_FILENAME, 'W', P_MAX_LINESIZE);

--DEFINE DATE FORMAT

EXECUTE IMMEDIATE 'ALTER SESSION SET NLS_DATE_FORMAT=''YYYY-MM-DD HH24:MI:SS''';

--OPEN CURSOR

DBMS_SQL.PARSE(L_THECURSOR, P_QUERY, DBMS_SQL.NATIVE);

DBMS_SQL.DESCRIBE_COLUMNS(L_THECURSOR, L_COLCNT, L_DESCTBL);

--DUMP TABLE COLUMN NAME

FOR I IN 1 .. L_COLCNT LOOP

UTL_FILE.PUT(L_OUTPUT,L_SEPARATOR || '"' || L_DESCTBL(I).COL_NAME || '"'); --输出表字段

DBMS_SQL.DEFINE_COLUMN(L_THECURSOR, I, L_COLUMNVALUE, 4000);

L_SEPARATOR := ',';

END LOOP;

UTL_FILE.NEW_LINE(L_OUTPUT); --输出表字段

--EXECUTE THE QUERY STATEMENT

L_STATUS := DBMS_SQL.EXECUTE(L_THECURSOR);

--DUMP TABLE COLUMN VALUE

WHILE (DBMS_SQL.FETCH_ROWS(L_THECURSOR) > 0) LOOP

L_SEPARATOR := '';

FOR I IN 1 .. L_COLCNT LOOP

DBMS_SQL.COLUMN_VALUE(L_THECURSOR, I, L_COLUMNVALUE);

UTL_FILE.PUT(L_OUTPUT,

L_SEPARATOR || '"' ||

TRIM(BOTH ' ' FROM REPLACE(L_COLUMNVALUE, '"', '""')) || '"');

L_SEPARATOR := ',';

END LOOP;

UTL_FILE.NEW_LINE(L_OUTPUT);

END LOOP;

--CLOSE CURSOR

DBMS_SQL.CLOSE_CURSOR(L_THECURSOR);

--CLOSE FILE

UTL_FILE.FCLOSE(L_OUTPUT);

EXCEPTION

WHEN OTHERS THEN

RAISE;

END;

/

Procedure created.

SQL> create directory ORADMP as '/oracle/dmp';

SQL> grant read,write on directory oradmp to public;

SQL> set lines 200 pages 200

SQL> col owner for a30

SQL> col directory_name for a30

SQL> col directory_path for a85

SQL> select * from dba_directories ;

OWNER DIRECTORY_NAME DIRECTORY_PATH

------------------------------ ------------------------------ -------------------------------------------------------------------------------------

SYS ORACLE_OCM_CONFIG_DIR2 /oracle/app/product/11.2.0/db_1/ccr/state

SYS DATA_PUMP_DIR /oracle/app/admin/lndb/dpdump/

SYS ORACLE_OCM_CONFIG_DIR /oracle/app/product/11.2.0/db_1/ccr/hosts/lnkf/state

SYS XMLDIR /oracle/app/product/11.2.0/db_1/rdbms/xml

SYS ORADMP /oracle/dmp

6 rows selected.

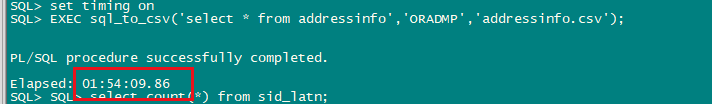

SQL> set timing on

SQL> EXEC sql_to_csv('select * from addressinfo','ORADMP','addressinfo.csv');

2.提取oracle中表结构

通过pl/sql工具或其它命令获取即可

-- Create table

create table ADDRESSINFO

(

id VARCHAR2(16) not null,

ods_id VARCHAR2(64),

code VARCHAR2(2000),

province VARCHAR2(100),

city VARCHAR2(100),

regionid VARCHAR2(20),

region_name VARCHAR2(256),

street_id VARCHAR2(50),

street_name VARCHAR2(512),

road_id VARCHAR2(50),

road_name VARCHAR2(512),

number_plate_id VARCHAR2(50),

number_plate_name VARCHAR2(512),

cpn_id VARCHAR2(50),

cpn_code VARCHAR2(50),

community_name VARCHAR2(255),

premises_network VARCHAR2(32),

building_code VARCHAR2(50),

building VARCHAR2(255),

unit_id VARCHAR2(50),

unit VARCHAR2(128),

layer_id VARCHAR2(50),

layer VARCHAR2(128),

room_id VARCHAR2(50),

room VARCHAR2(128),

rms_code VARCHAR2(255),

substation_id VARCHAR2(16),

simple_mark VARCHAR2(4000),

inner_symbol_id VARCHAR2(16),

if_inner VARCHAR2(2),

switch_no VARCHAR2(64),

type NUMBER(2) default 0,

queryindex VARCHAR2(255),

remark VARCHAR2(255),

alias_name VARCHAR2(255),

if_relate VARCHAR2(1) default 0,

addresstype VARCHAR2(10),

source VARCHAR2(32),

create_time DATE,

fldtag VARCHAR2(64) default 49,

original_company VARCHAR2(1) default 0,

useremark VARCHAR2(16),

village_code VARCHAR2(50),

update_time DATE,

create_op VARCHAR2(32)

);

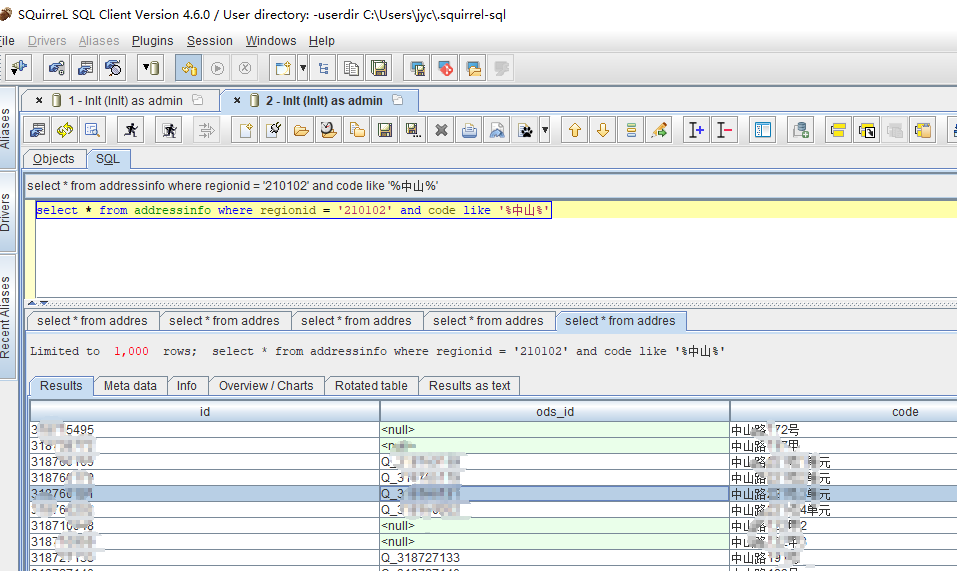

3.在heavydb中创建表结构

注意字段类型修改参考:DATE改成timestamp,varchar2改成TEXT,NUMBER(2)可改成DECIMAL(2,0)

参考:https://docs.heavy.ai/sql/data-definition-ddl/datatypes-and-fixed-encoding

heavysql> create database lnlt;

heavysql> \c lnlt admin XXX;

heavysql> create table ADDRESSINFO

(

id TEXT ENCODING NONE,--内容重复性低的类型

ods_id TEXT,

code TEXT ENCODING NONE,--内容重复性低的类型

province TEXT,

city TEXT,

regionid TEXT,

region_name TEXT,

street_id TEXT,

street_name TEXT,

road_id TEXT,

road_name TEXT,

number_plate_id TEXT,

number_plate_name TEXT,

cpn_id TEXT,

cpn_code TEXT,

community_name TEXT,

premises_network TEXT,

building_code TEXT,

building TEXT,

unit_id TEXT,

unit TEXT,

layer_id TEXT,

layer TEXT,

room_id TEXT,

room TEXT,

rms_code TEXT,

substation_id TEXT,

simple_mark TEXT,

inner_symbol_id TEXT,

if_inner TEXT,

switch_no TEXT,

type INTEGER,

queryindex TEXT,

remark TEXT,

alias_name TEXT,

if_relate TEXT,

addresstype TEXT,

source TEXT,

create_time timestamp,

fldtag TEXT,

original_company TEXT,

useremark TEXT,

village_code TEXT,

uptimestamp_time timestamp,

create_op TEXT

);

4.scp到heavydb服务器

heavyai@node13:/var/lib/heavyai/storage/import/sample_datasets$ scp root@192.168.205.58:/oracle/addressinfo.csv ./

root@192.168.205.58's password:

addressinfo.csv 100% 13GB 8.6MB/s 24:59

heavyai@node13:/var/lib/heavyai/storage/import/sample_datasets$ ll

total 127487244

drwxr-xr-x 4 heavyai heavyai 4096 9 18 12:49 ./

drwxr-xr-x 3 root root 4096 9 7 15:54 ../

-rw-r--r-- 1 heavyai heavyai 13585817776 9 18 13:14 addressinfo.csv

5.导入到heavydb

heavysql> COPY addressinfo FROM '/var/lib/heavyai/storage/import/sample_datasets/addressinfo.csv';

Result

Loaded: 30748154 recs, Rejected: 0 recs in 57.354000 secs

1 rows returned.

Execution time: 57716 ms, Total time: 57718 ms

heavysql> select count(*) from addressinfo;

EXPR$0

30748154

1 rows returned.

Execution time: 14 ms, Total time: 15 ms

非交互式操作:

cat test.sql | heavysql -p admin --db lnlt

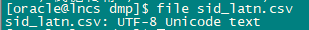

可能遇到显示乱码问题?:

如果dbeaver查询乱码,需转换csv格式再copy导入。

centos/rehdat命令检查:

file addressinfo.csv

如下是ubuntu下检查和转换方法:

转换命令参考:https://zhuanlan.zhihu.com/p/27142605

heavyai@node13:/var/lib/heavyai/storage/import/sample_datasets$ enca -L zh_CN sid_latn1.csv

Simplified Chinese National Standard; GB2312

heavyai@node13:/var/lib/heavyai/storage/import/sample_datasets$ enca -L zh_CN -x UTF-8 < sid_latn1.csv > sid_latn2.csv

heavyai@node13:/var/lib/heavyai/storage/import/sample_datasets$ enca -L zh_CN sid_latn2.csv

Universal transformation format 8 bits; UTF-8

常用命令:

修改密码:ALTER USER admin (password = 'admin');

非交互式执行sql:

[heavyai@test heavyai]$ ./bin/heavysql heavyai -u admin -p admin -s 192.168.207.170 < /var/lib/heavyai/storage/import/sample_datasets/nyc_trees_2015_683k/nyc_trees_2015_683k.sql

User admin connected to database heavyai

User admin disconnected from database heavyai

非交互式导入数据:

[heavyai@test heavyai]$ echo "copy nyc_trees_2015_683k from '/var/lib/heavyai/storage/import/sample_datasets/nyc_trees_2015_683k/nyc_trees_2015_683k.csv' with (quoted='true');" | ./bin/heavysql heavyai -u admin -p admin -s 192.168.207.170

User admin connected to database heavyai

Result

Loaded: 683788 recs, Rejected: 0 recs in 1.570000 secs

User admin disconnected from database heavyai

配置文件参考:

heavyai@node13:/opt/heavyai/bin$ cat /var/lib/heavyai/heavy.conf

port = 6274

http-port = 6278

calcite-port = 6279

data = "/var/lib/heavyai/storage"

null-div-by-zero = true

#cpu-only=true

#rendering=false

allowed-export-paths=["/var/lib/heavyai6.4","/var/lib/heavyai"]

allowed-import-paths=["/var/lib/heavyai6.4","/var/lib/heavyai"]

[web]

port = 6273

frontend = "/opt/heavyai/frontend"

最后修改时间:2023-11-14 14:41:07

「喜欢这篇文章,您的关注和赞赏是给作者最好的鼓励」

关注作者

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文章的来源(墨天轮),文章链接,文章作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。