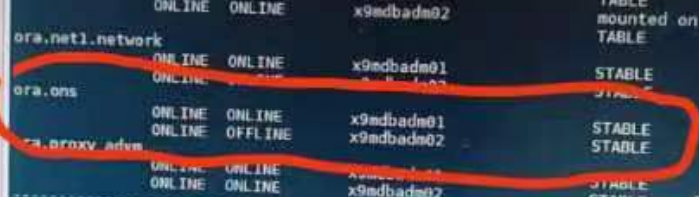

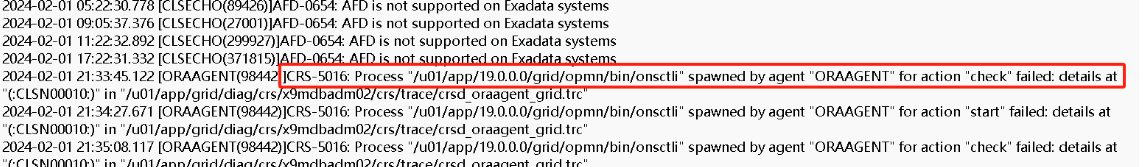

Recently, on an X9M engineered system running Oracle 19.21 RAC, the ora.ons resource was found to go OFFLINE from time to time.

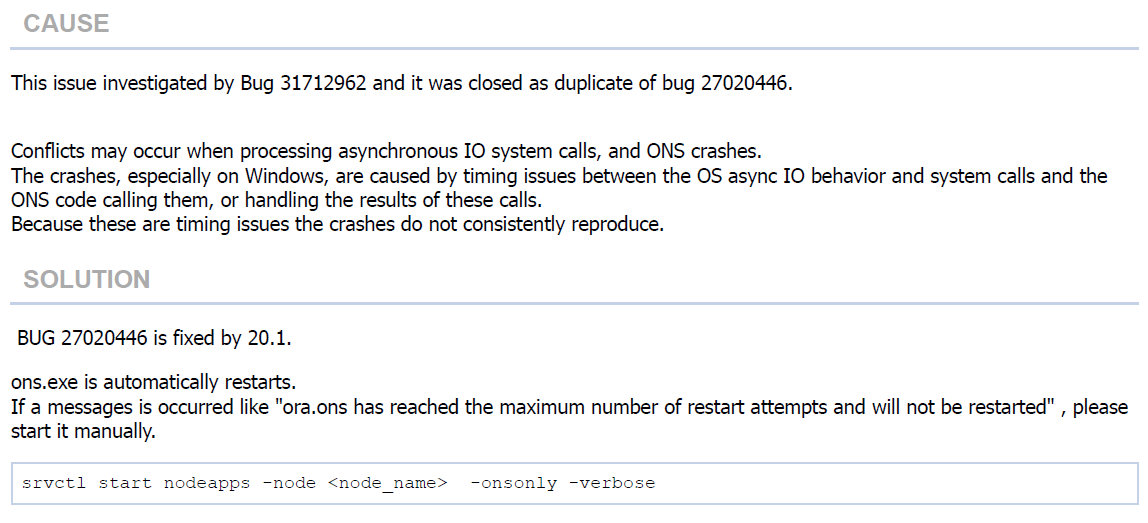

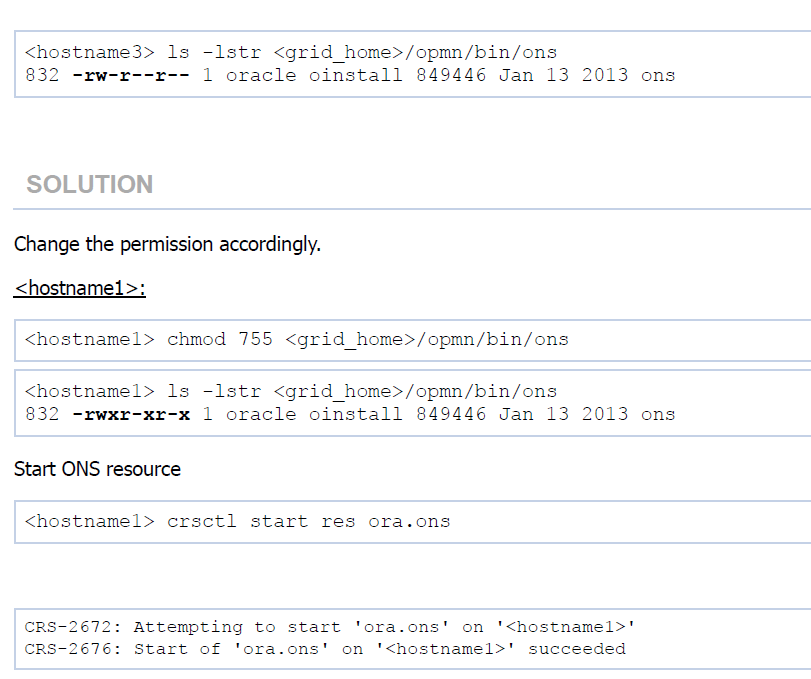

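A search of Metalink (My Oracle Support) turned up two notes on this: the cause may be incorrect ONS permissions, or it may be a bug.

To start ONS manually: onsctl start

The two notes are: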

The ora.ons Resource Occasionally Goes Offline (Doc ID 2875604.1)

ORA.ONS Resource is Showing OFFLINE (Doc ID 2232270.1)

Other references:

https://www.cnblogs.com/yutingliuyl/p/6921092.html

For background on ora.ons, see this note:

The ONS Daemon Explained In Oracle Clusterware/RAC Environment (Doc ID 759895.1)

Gen 1 Exadata Cloud at Customer (Oracle Exadata Database Cloud Machine) - Version N/A and later

Oracle Database - Enterprise Edition - Version 10.2.0.1 and later

Oracle Cloud Infrastructure - Database Service - Version N/A and later

Oracle Database Cloud Exadata Service - Version N/A and later

Oracle Database Exadata Express Cloud Service - Version N/A and later

Information in this document applies to any platform.

PURPOSE

This note explains the purpose of the ONS daemon, how it is configured and what needs to be checked when troubleshooting an ONS-related problem in an Oracle Clusterware installation.

SCOPE

DBA and Oracle Clusterware installers

DETAILS

1. purpose of the ons daemon

The Oracle Notification Service daemon is a daemon started by the Oracle Clusterware as part of the nodeapps. There is one ons daemon started per clustered node.

The Oracle Notification Service daemon receives a subset of the published clusterware events via the local evmd and racgimon clusterware daemons and forwards those events to application subscribers and to the local listeners, in order to facilitate:

a. the FAN or Fast Application Notification feature, which allows applications to respond to database state changes. Fast Connection Failover (FCF) is the client mechanism that uses the FAN feature to achieve this. FCF clients/subscribers are JDBC, OCI, and ODP.NET in 10gR2.

b. the Load Balancing Advisory (the RLB feature), which permits load balancing across the RAC nodes depending on the load on each node. The rdbms MMON creates an advisory for the distribution of work every 30 seconds and forwards it via racgimon and ONS to listeners and applications.

2. launching the ons daemon

The ons daemon is started as part of the nodeapps in the crs home environment as user oracle and is encapsulated as a resource, whose settings can be seen via:

crs_stat -p ora.<hostname>.ons or crsctl stat res ora.ons

crs_getperm ora.<hostname>.ons or crs_getperm ora.ons, e.g.

Name: ora.hostname.ons

owner:oracle:rwx,pgrp:dba:r-x,other::r--,
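Since incorrect ONS permissions are one of the suspected causes, it can help to check the permission string programmatically. The following is a minimal Python sketch, assuming the permission line has exactly the `kind:name:mode` layout shown above; the function name `parse_crs_perm` is hypothetical and not part of any Oracle tool.

```python
def parse_crs_perm(line: str) -> dict:
    """Parse a line such as 'owner:oracle:rwx,pgrp:dba:r-x,other::r--,'."""
    perms = {}
    for entry in line.strip().split(","):
        if not entry:
            continue  # the trailing comma leaves an empty entry
        kind, name, mode = entry.split(":")
        perms[kind] = {"name": name, "mode": mode}
    return perms


acl = parse_crs_perm("owner:oracle:rwx,pgrp:dba:r-x,other::r--,")
print(acl["owner"])  # {'name': 'oracle', 'mode': 'rwx'}
```

Comparing the parsed owner and group across nodes is one simple way to spot a node whose resource permissions differ.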

The commands used by the clusterware to start, stop and ping the ons are 'onsctl start', 'onsctl stop' and 'onsctl ping'.

For debugging purposes, the ons daemon can be started or stopped on one node via the clusterware commands below, so that the other nodeapps (vip, listener, gsd) don't need to be stopped as they would be with srvctl stop/start nodeapps:

crs_start ora.<hostname>.ons or crsctl start res ora.ons

crs_stop ora.<hostname>.ons or crsctl stop res ora.ons

3. configuration of the ons daemon prior to 11gR2

The configuration resides in the <crs_home>/opmn/conf/ons.config file on all nodes or in the OCR. The ons.config parameters are:

a. the tcp listening port parameters, e.g.

localport=6101

remoteport=6200

The localport is used to communicate with local clients, e.g. the listeners on the server itself. The remoteport is used to communicate with remote ONS daemons, e.g. the ONS daemons running on the other node(s) of the cluster, or with ons clients (e.g. application or listeners).

b. the "useocr" parameter, i.e. useocr=on

When useocr=on is set, the ons configuration in the ocr is read to determine which servers the ons daemon will contact so that they receive ons events from it.

It is set via the ONS configuration assistant launched during the initial clusterware installation, via a root command, e.g.

racgons add_config hostname1:6200 hostname2:6200

The hostname used needs to match the name returned by the OS command "hostname" (see note:744849.1). The port needs to match the remoteport setting of point a.

The remote ONS daemons are normally the Oracle RAC ons daemons running on all nodes of the cluster, together with any other servers that have ons daemons running on them (e.g. a remote iAS installation).

"racgons remove_config hostname1" deletes the ocr configuration (or replaces it when used together with the "racgons add_config hostname1:port1" command).

The command "onsctl debug" shows the ocr configuration, e.g.

Number of onsconfiguration retrieved, numcfg = 2

onscfg[0]

{node = hostname1, port = 6200}

Adding remote host hostname1:6200

onscfg[1]

{node = hostname2, port = 6200}

Adding remote host hostname2:6200
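To compare the configured remote hosts across nodes, the `{node = ..., port = ...}` lines can be pulled out of saved 'onsctl debug' output. This is a minimal Python sketch that assumes the output looks exactly like the sample above; `parse_ons_remote_nodes` is a hypothetical helper name.

```python
import re

# Matches lines such as: {node = hostname1, port = 6200}
NODE_RE = re.compile(r"\{node = (?P<node>[^,]+), port = (?P<port>\d+)\}")


def parse_ons_remote_nodes(text: str) -> list:
    """Return (node, port) tuples found in 'onsctl debug' output."""
    return [(m["node"], int(m["port"])) for m in NODE_RE.finditer(text)]


sample = """\
Number of onsconfiguration retrieved, numcfg = 2
onscfg[0]
{node = hostname1, port = 6200}
Adding remote host hostname1:6200
onscfg[1]
{node = hostname2, port = 6200}
Adding remote host hostname2:6200
"""
nodes = parse_ons_remote_nodes(sample)
print(nodes)  # [('hostname1', 6200), ('hostname2', 6200)]
```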

All remote server connections are listed in the server connections part of the 'onsctl debug' output, e.g. on a two-node rac cluster the remote node appears as:

Server connections:

ID IP PORT FLAGS SENDQ WORKER BUSY SUBS

---------- --------------- ----- -------- ---------- -------- ------ -----

6 140.087.216.062 6200 00010025 0 1 0

c. "loglevel" and "logfile", e.g.

loglevel=3

logfile=<fullpath_filename>

loglevel specifies the level of messages that ons should log. loglevel=3 is the default level, loglevel=6 is intermediate, and loglevel=9 is the most verbose.

logfile specifies the location of the ons log (default is <crs_home>/opmn/logs/ons.log)

d. optional parameter "usesharedinstall" to permit ons to start when a shared $CRS_HOME is used on all nodes of the Oracle Clusterware. The ons then appends the OS hostname to different files, such as ons.log.<hostname> and .formfactor.<hostname>

usesharedinstall=true

e. optional parameter "allowgroup" to permit installations done with oracle users other than the crs installing user to communicate with the Oracle Clusterware ons, e.g. when the rdbms installation is done with the orardbms user and the crs installation with the oracrs user, the "allowgroup" parameter needs to be set to true to permit the orardbms listener to communicate with the oracrs ons daemon

allowgroup=true

f. optional parameter "walletfile", used to set up ssl to secure the ONS communication via a wallet file.

4. configuration of the ons daemon in 11gR2

The ons configuration became dynamic in 11gR2: the clusterware ons agent enforces some parameters by dynamically changing the ons.config file, and a new command-line interface, <grid_home>/opmn/bin/onsctli, is provided to debug the ons daemon. srvctl is further enhanced to set the port values in the ocr instead of racgons (the useocr parameter is deprecated and ons works as if useocr were set to true). The clusterware ONS agent therefore creates the ons config file based on the current clusterware membership and adds host and port information to it following the configuration stored in the ocr. This way, when a node joins the cluster, the ONS daemon on the new node can find the ONS servers already running and join the ONS network. The ones already running also find out about the new ones by virtue of the new ones contacting them.

See the command line help available via

srvctl modify nodeapps -h

<grid home>/opmn/bin/onsctli help

<grid home>/opmn/bin/onsctli usage

The following ons config parameters are fixed by the clusterware ons agent to the values below:

localport=6201 # line added by Agent

allowgroup=true # line added by Agent

usesharedinstall=true # line added by Agent

remoteport=6202 # line added by Agent

nodes=<host1>:6202,<host2>:6202 # line added by Agent
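When checking what the agent actually wrote, it can be convenient to load ons.config into a dictionary. The following is a minimal Python sketch, assuming the simple `key=value # comment` layout shown above; the host names `host1` and `host2` are placeholders and `parse_ons_config` is a hypothetical helper.

```python
def parse_ons_config(text: str) -> dict:
    """Parse ons.config style 'key=value # comment' lines into a dict."""
    config = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if not line or "=" not in line:
            continue
        key, value = line.split("=", 1)
        config[key.strip()] = value.strip()
    if "nodes" in config:
        # Turn 'host1:6202,host2:6202' into [('host1', 6202), ('host2', 6202)]
        pairs = []
        for item in config["nodes"].split(","):
            host, _, port = item.rpartition(":")
            pairs.append((host, int(port)))
        config["nodes"] = pairs
    return config


sample = """\
localport=6201 # line added by Agent
allowgroup=true # line added by Agent
usesharedinstall=true # line added by Agent
remoteport=6202 # line added by Agent
nodes=host1:6202,host2:6202 # line added by Agent
"""
config = parse_ons_config(sample)
print(config["nodes"])  # [('host1', 6202), ('host2', 6202)]
```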

The parameters logfile and walletfile can still be set as in previous releases, e.g. logfile can be used to change the default logfile location in $GRID_HOME/opmn/logs. The loglevel parameter is obsolete, like useocr. When obsolete parameters are detected, they are removed automatically.

4.1 Setting the localport and remoteport via srvctl in 11gR2

In 11gR2, the OCR contains both the remoteport and the localport, together with the EM port, and is maintained via commands like:

srvctl config nodeapps -s

ONS exists: Local port 6201, remote port 6202, EM port 2016

srvctl modify nodeapps -l 6201

srvctl modify nodeapps -r 6202

srvctl modify nodeapps -e 2017

srvctl config nodeapps -s

ONS exists: Local port 6201, remote port 6202, EM port 2017

Changes only become active after a restart of the ons daemons, e.g. via 'onsctli reload'.
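To verify that every node reports the same ports, the 'srvctl config nodeapps -s' line can be parsed. This is a minimal Python sketch, assuming the output line has exactly the wording shown above; `parse_ons_ports` is a hypothetical helper name.

```python
import re

PORTS_RE = re.compile(
    r"ONS exists: Local port (\d+), remote port (\d+), EM port (\d+)"
)


def parse_ons_ports(line: str):
    """Return the three ports as a dict, or None if the line does not match."""
    match = PORTS_RE.search(line)
    if match is None:
        return None
    local, remote, em = (int(value) for value in match.groups())
    return {"local": local, "remote": remote, "em": em}


ports = parse_ons_ports(
    "ONS exists: Local port 6201, remote port 6202, EM port 2016"
)
print(ports)  # {'local': 6201, 'remote': 6202, 'em': 2016}
```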

4.2 to set the debug level (see onsctli usage for a detailed description) in 11gR2

onsctli can start and shut down the ons daemon. When in the <grid_home>,

$ ./opmn/bin/onsctli shutdown

onsctl shutdown: shutting down ons daemon ...

$ ./opmn/bin/onsctli start

onsctl start: ons started

The default settings are "internal;ons" for target=log, so normally onsctli set is always used with target=debug. Only the last onsctli set command is memorized (no cumulative execs).

$ ./opmn/bin/onsctli query target=log

internal;ons

$ ./opmn/bin/onsctli query target=debug

$ ./opmn/bin/onsctli set target=debug comp=ons

$ ./opmn/bin/onsctli query target=debug

ons

$

To remove the dynamic parameters and go back to the default values, the only option is to restart the ons daemon, e.g.

$ ./opmn/bin/onsctli reload

$ ./opmn/bin/onsctli query target=debug

$

E.g. to set specific components, you normally need to script the commands, because the ! sign is reserved in interactive bash (it triggers history expansion, hence the "event not found" error below). So create a change.sh that starts with #?/bin/bash instead of the usual #!/bin/bash, e.g.

$ ./opmn/bin/onsctl set target=debug comp="ons[all,!workers,!servers]"

-bash: !workers,!servers]": event not found

$ ./opmn/bin/onsctli query target=debug

$ more change.sh

#?/bin/bash

./opmn/bin/onsctl set target=debug comp="ons[all,!workers,!servers]"

$ ./change.sh

$ ./opmn/bin/onsctli query target=debug

ons[all,!workers,!servers]
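As an alternative to the wrapper script (not taken from the note above, and not verified against onsctl itself), bash does not perform history expansion inside single quotes, so single-quoting the argument should avoid the "event not found" error. A minimal Python sketch using the standard `shlex.quote` function to build such a command line:

```python
import shlex

# The component filter that interactive bash trips over inside double quotes
comp = "ons[all,!workers,!servers]"
args = ["./opmn/bin/onsctl", "set", "target=debug", "comp=" + comp]

# shlex.quote wraps any argument with shell-special characters in single quotes
command_line = " ".join(shlex.quote(arg) for arg in args)
print(command_line)
# ./opmn/bin/onsctl set target=debug 'comp=ons[all,!workers,!servers]'
```

When the command is launched from Python with `subprocess.run(args)` and no shell, no quoting is needed at all, because no shell interprets the `!` character.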

5. ons clients/subscribers

Clients or subscribers connected to the ons are visible via the SUBS column of the client connections output of 'onsctl debug', e.g.

Client connections:

ID IP PORT FLAGS SENDQ WORKER BUSY SUBS

---------- --------------- ----- -------- ---------- -------- ------ -----

2 127.000.000.001 6101 0001001a 0 1 0

5 127.000.000.001 6101 0001001a 0 1 1
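The connection rows of 'onsctl debug' can be parsed to list who is connected. The following is a minimal Python sketch, assuming whitespace-separated rows as shown above. Because blank columns are not distinguishable once the layout is flattened, only the first four columns (ID, IP, PORT, FLAGS) are named and the remaining values are kept as a raw list. `parse_connection_row` is a hypothetical helper name.

```python
def parse_connection_row(row: str) -> dict:
    """Parse one row of the server/client connections table."""
    conn_id, ip, port, flags, *rest = row.split()
    return {
        "id": int(conn_id),
        # onsctl prints zero-padded octets such as 127.000.000.001
        "ip": ".".join(str(int(octet)) for octet in ip.split(".")),
        "port": int(port),
        "flags": flags,
        "rest": rest,  # SENDQ/WORKER/BUSY/SUBS values, left unnamed
    }


rows = [
    "2 127.000.000.001 6101 0001001a 0 1 0",
    "5 127.000.000.001 6101 0001001a 0 1 1",
]
parsed = [parse_connection_row(row) for row in rows]
print(parsed[0]["ip"], parsed[0]["port"])  # 127.0.0.1 6101
```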

5.1 the listeners are subscribers for the ons daemon

When the ons is started, the listeners register with the ons as client subscribers to all FAN and RLB events.

The parameter SUBSCRIBE_FOR_NODE_DOWN_EVENT_<listener_name>=ON needs to be set in the listener.ora files. When that parameter is set and TRACE_LEVEL_<listener_name>=16 is set, a problem subscribing to the locally running ONS shows up in the listener.log via messages like

WARNING: Subscription for node down event still pending

It is normally due to note:284602.1 and bug:4417761. When you start the listener using lsnrctl, the environment variable ORACLE_CONFIG_HOME = {Oracle Clusterware HOME} needs to be set prior to 10.2.0.4 (settable in the $ORACLE_HOME/bin/racgwrap scripts).

5.2 application clients/subscribers

With Oracle Database 10g Release 1, JDBC clients (both thick and thin driver) are integrated with FAN by providing FCF. With Oracle Database 10g Release 2, ODP.NET and OCI clients have been added. note:433827.1 can be used to set up an FCF client.

6. the FAN and RLB events

There are two types of events that ONS handles. The FAN events (or HA events) are meant for FAN processing. The RLB events are meant for workload management. When loglevel is set to 9, the events can be checked in the <crs home>/opmn/logs/ons.log files.

6.1 the FAN events

The FAN events (event type=database/event/service) are forwarded to the ons daemon by the racgimon (for pre-11gR2 databases) or by the 11gR2 agent and evmd clusterware processes. The main fixes needed in this area are unpublished bug:13879428 (see note:1489751.1) and unpublished bug:6760284 RACGEVTF SOMTIMES DOES NOT SEND ONS EVENT.

6.1.1 FAN events forwarded to the ons daemon by 11gR2 agent or pre-11gR2 racgimon

The clusterware forwards instance and service up/down events to the ons daemon.

e.g. ../opmn/logs> grep -E "body|VERSION" ons.log (loglevel=9)

09/01/07 13:56:24 [8] Connection 2,127.0.0.1,6101 body:

VERSION=1.0 service= instance=ASM1 database= host=hostname1 status=up reason=boot

09/01/07 13:56:45 [8] Connection 4,127.0.0.1,6101 body:

VERSION=1.0 service=racdb instance=racdb1 database=racdb host=hostname1 status=up reason=boot

09/01/07 13:56:46 [8] Connection 4,127.0.0.1,6101 body:

VERSION=1.0 service=ALL instance=racdb1 database=racdb host=hostname1 status=up card=1 reason=boot

...

09/01/07 14:20:26 [8] Connection 5,140.x.x.64,6200 body:

VERSION=1.0 service=racdb instance=racdb2 database=racdb host=hostname2 status=down reason=user

09/01/07 14:20:26 [8] Connection 5,140.x.x.64,6200 body:

VERSION=1.0 service=ALL instance=racdb2 database=racdb host=hostname2 status=down reason=failure

09/01/07 14:20:27 [8] Connection 5,140.x.x.64,6200 body:

VERSION=1.0 service=ALL instance=racdb2 database=racdb host=hostname2 status=not_restarting reason=UNKNOWN
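The FAN event bodies above are plain `key=value` pairs, so they are easy to filter once extracted from ons.log. This is a minimal Python sketch, assuming values never contain spaces (true for the samples shown); `parse_fan_body` is a hypothetical helper name.

```python
import re

# A key, '=', then a possibly empty value without whitespace (e.g. 'service=')
PAIR_RE = re.compile(r"(\w+)=(\S*)")


def parse_fan_body(body: str) -> dict:
    """Turn a FAN event body line into a dict of its key=value pairs."""
    return dict(PAIR_RE.findall(body))


up_event = parse_fan_body(
    "VERSION=1.0 service= instance=ASM1 database= host=hostname1 "
    "status=up reason=boot"
)
down_event = parse_fan_body(
    "VERSION=1.0 service=ALL instance=racdb2 database=racdb host=hostname2 "
    "status=not_restarting reason=UNKNOWN"
)
print(up_event["instance"], up_event["status"])      # ASM1 up
print(down_event["instance"], down_event["status"])  # racdb2 not_restarting
```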

6.1.2 FAN events forwarded from the evmd daemon to the ons daemon

This concerns the node down and public network down events. The main fixes needed in this area are unpublished bug:6083726 (see note:6083726.8), Bug:9538932 REBOOTED SERVER NODE ONS DOES NOT SEND EVENTS TO CLIENT ONS and unpublished bug:6760284 RACGEVTF SOMTIMES DOES NOT SEND ONS EVENT.

When there is a node down event or a public vip network down event, the evmd posts an event to the ONS, i.e. when the vip is stopped on a preferred node, a public network down event originates from the failing node. This evm event is received by the evmd on all other surviving nodes via the interconnect. The evmd on the remote nodes then publishes the event to the local ONS daemon.

e.g. ons.log with level=9 showing

VERSION=1.0 host=hostname incarn=100 status=nodedown reason=member_leave

The FAN events are a subset of the EVM events (logged in the $CRS_HOME/evm/log/<hostname>_evmlog.<date> files). All evm events can be viewed via:

evmshow -t "@timestamp @@" <hostname>_evmlog.<date>

6.2 the RLB events

The RLB events (event type=database/event/servicemetrics/<service_name>) are sent by the racgimon at the request of the MMON background process,

e.g. Notification Type "database/event/servicemetrics/ALL" set via

exec DBMS_SERVICE.MODIFY_SERVICE (service_name => 'ALL', goal => DBMS_SERVICE.GOAL_THROUGHPUT, clb_goal => DBMS_SERVICE.CLB_GOAL_SHORT)

Querying sys$service_metrics_tab shows the MMON events logged every 30 seconds, e.g.

SELECT user_data from SYS.SYS$SERVICE_METRICS_TAB order by 1 ;

USER_DATA(SRV, PAYLOAD)

--------------------------------------------------------------------------------

SYS$RLBTYP('ALL', 'VERSION=1.0 database=racdb service=ALL { {instance=racdb1 percent=100 flag=UNKNOWN} } timestamp=2009-01-07 21:39:18')

grep -E 'body|percent' ons.log (with loglevel=9) shows the same events

VERSION=1.0 database=racdb { {instance=racdb1 percent=100 flag=UNKNOWN} } timestamp=2009-01-07 21:39:48

09/01/07 21:39:48 [9] Worker Thread 2 sending body [20:1073838448]: connection 5,140.xxx.xxx.64,6200
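To see how the advisory distributes work across instances, the per-instance entries can be extracted from an RLB payload. The following is a minimal Python sketch, assuming each entry has the `{instance=... percent=... flag=...}` form shown above; `parse_rlb_payload` is a hypothetical helper name.

```python
import re

# One advisory entry, e.g. {instance=racdb1 percent=100 flag=UNKNOWN}
INSTANCE_RE = re.compile(r"\{instance=(\S+) percent=(\d+) flag=(\w+)\}")


def parse_rlb_payload(payload: str) -> list:
    """Return one dict per instance entry found in an RLB event payload."""
    return [
        {"instance": instance, "percent": int(percent), "flag": flag}
        for instance, percent, flag in INSTANCE_RE.findall(payload)
    ]


payload = (
    "VERSION=1.0 database=racdb service=ALL "
    "{ {instance=racdb1 percent=100 flag=UNKNOWN} } "
    "timestamp=2009-01-07 21:39:18"
)
entries = parse_rlb_payload(payload)
print(entries)
# [{'instance': 'racdb1', 'percent': 100, 'flag': 'UNKNOWN'}]
```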

REFERENCES

NOTE:752595.1 - Questions about how ONS and FCF work with JDBC

NOTE:754619.1 - CRS-0215 / ONS Failed to Start. Pingwait Exited With Exit Status 2

NOTE:1489751.1 - 10.2/11.1 service does not failover at instance crash in 11.2 GI env

NOTE:433827.1 - How to Verify and Test Fast Connection Failover (FCF) Setup from a JDBC Thin 10g Client Against a 10.2.x RAC Cluster

NOTE:5749953.8 - Bug 5749953 - Solaris: CRS crashes after applying 10.2.0.3 Patch Set

NOTE:6083726.8 - Bug 6083726 - ONS does not receive NODEDOWN event

NOTE:284602.1 - 10g Listener: High CPU Utilization - Listener May Hang

NOTE:372959.1 - Non-RAC or Standalone Only: 'WARNING: Subscription for node down event still pending' in Listener Log

NOTE:744849.1 - After CRS Installation, ONS Cannot Start on 1 Node

NOTE:731370.1 - ONS consumes high CPU and/or Memory

NOTE:566573.1 - Fast Connection Failover (FCF) Test Client Using the JDBC Driver version 11.1 and a RAC Cluster version 11.1
