Oracle Linux 7 + 11gR2 RAC Build Manual

I. Preparation

1. Software used to build the environment

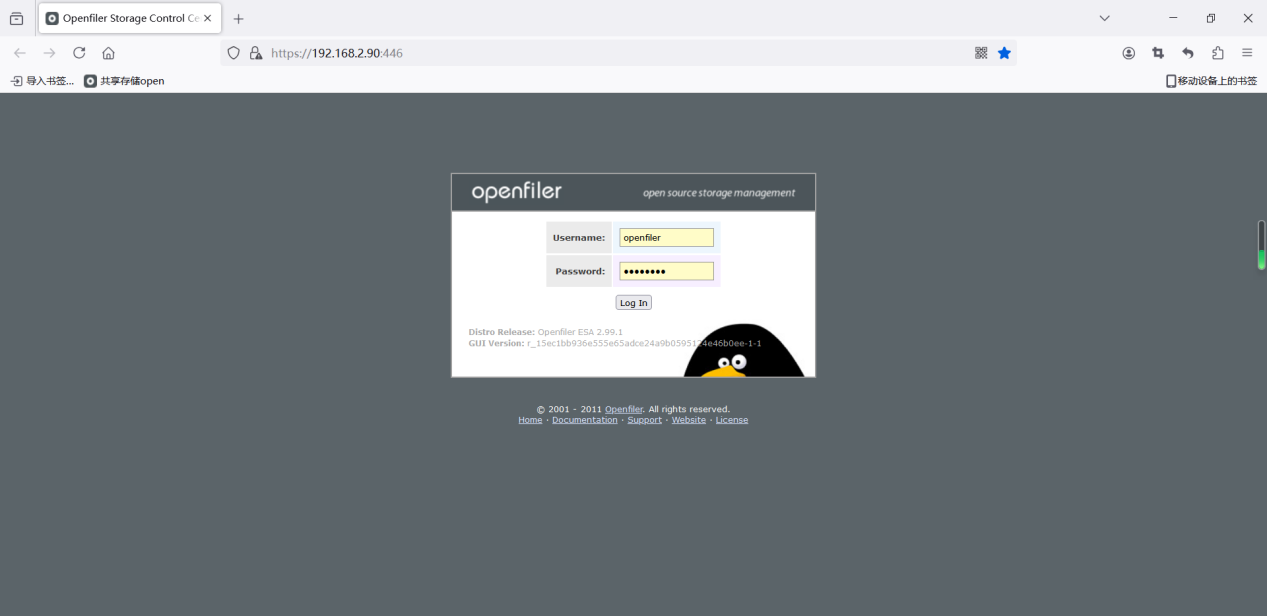

Virtual machine: VMware Workstation 16.2.3

Shared storage emulation software: openfileresa-2.99.1_X86

Operating system: OracleLinux-R7-U9-Server-x86_64

Oracle Database + Grid Infrastructure

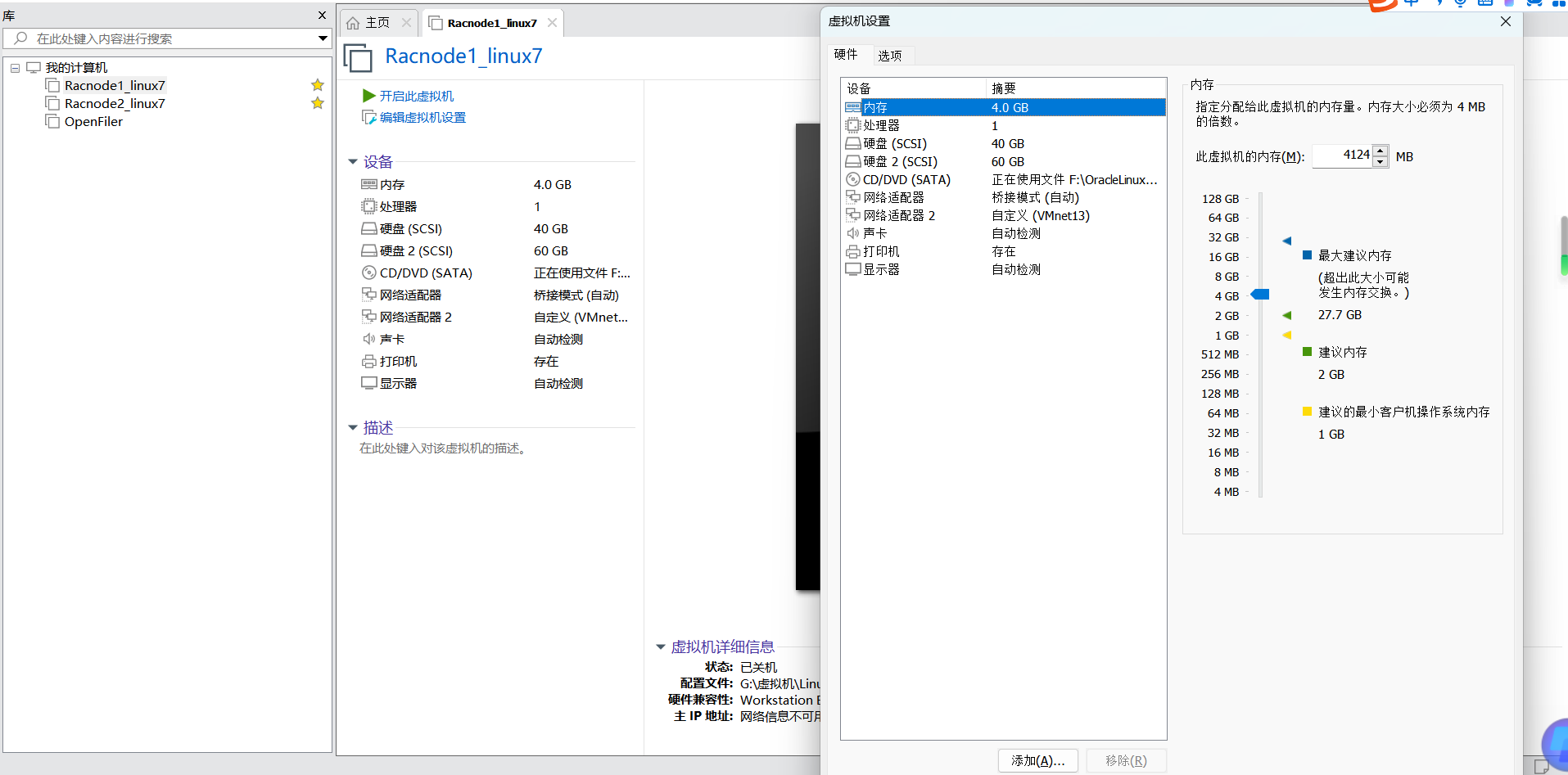

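2. Create the database server virtual machines

2.1 Create one virtual machine first, then clone it to produce the second.

2.2 Operating system tuning (run on both node1 and node2)

Stop the firewall, stop chrony time synchronization, disable SELinux, and change the sshd remote port. The commands for the first three are: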

systemctl stop firewalld.service

systemctl disable firewalld.service

systemctl stop chronyd.service

systemctl disable chronyd.service

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

After completing the steps above, run reboot to restart the operating system.
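The sed command above only matches a file that currently says SELINUX=enforcing; if the file says permissive, nothing changes. A minimal sketch of a variant that rewrites the line whatever its current value is shown here. The function name `disable_selinux_in` is hypothetical and not part of the original steps, and GNU sed is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original steps): set SELINUX=disabled
# in a config file regardless of the value it currently holds.
disable_selinux_in() {
    local cfg="${1:-/etc/selinux/config}"
    # Replace the whole SELINUX= line. SELINUXTYPE= is left alone because the
    # pattern requires "=" immediately after "SELINUX".
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
    # Print the resulting line so the change can be confirmed.
    grep '^SELINUX=' "$cfg"
}
```

On a node it would be called as root with no argument, which falls back to /etc/selinux/config; the change still only takes effect after the reboot.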

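2.3 Configure the NTP time synchronization service (the RAC installer checks whether NTP is configured)

(Configure on both node1 and node2)

Rename ntp.conf and create a new one:

mv /etc/ntp.conf /etc/ntp.conf_bak

vi /etc/ntp.conf

Add the NTP configuration to the new file.

Stop the ntpd service, run an initial time synchronization, then start the ntpd service:

systemctl stop ntpd

ntpdate -u 120.25.115.20

## A success message from ntpdate confirms that the time was synchronized.

Enable the ntpd service at boot and start it:

systemctl enable ntpd

systemctl start ntpd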
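The contents of the new ntp.conf are not reproduced in this manual. The following is not the original file, only a minimal sketch of what such a file could contain, using standard ntp.conf directives. The function name `write_ntp_conf` and the choice of directives are assumptions; the server address is the one used with ntpdate above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal ntp.conf to the given path.
# The directive set is an assumption, not the configuration used by the author.
write_ntp_conf() {
    local path="$1" server="$2"
    cat > "$path" <<EOF
driftfile /var/lib/ntp/drift
restrict default nomodify notrap nopeer noquery
restrict 127.0.0.1
restrict ::1
server ${server} iburst
EOF
}
```

For example, `write_ntp_conf /etc/ntp.conf 120.25.115.20` run as root would create the file before ntpd is started.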

2.4 Virtual machine IP plan

| Host | IP address | Interface name | Network type |
| --- | --- | --- | --- |
| node1 | 192.168.2.80 | node1 | Public |
| node1 | 192.168.2.82 | node1-vip | Virtual |
| node1 | 1.1.1.80 | node1-priv | Private |
| node2 | 192.168.2.81 | node2 | Public |
| node2 | 192.168.2.83 | node2-vip | Virtual |
| node2 | 1.1.1.81 | node2-priv | Private |
| Load balancing | 192.168.2.84 | node-scan | SCAN |

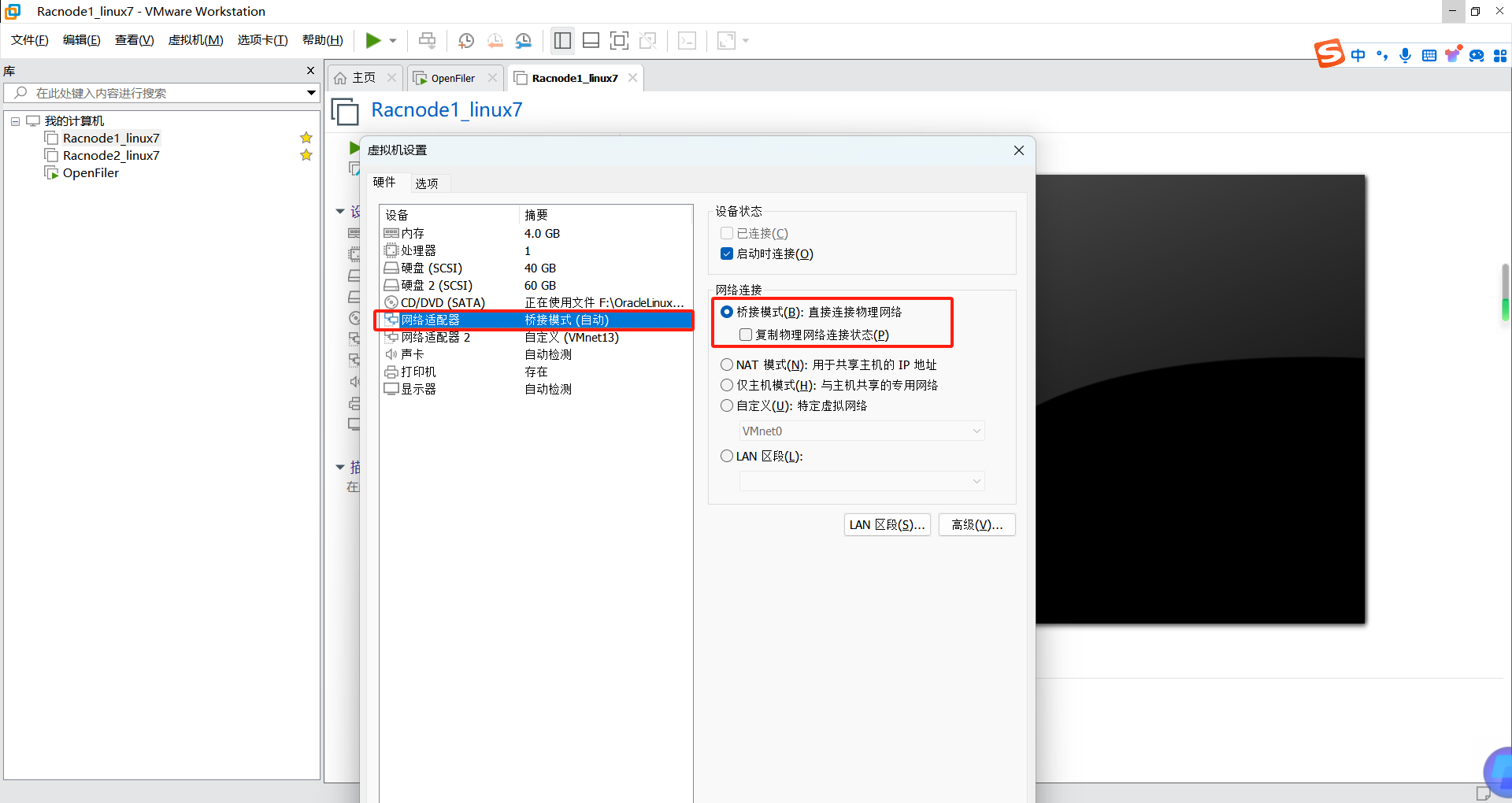

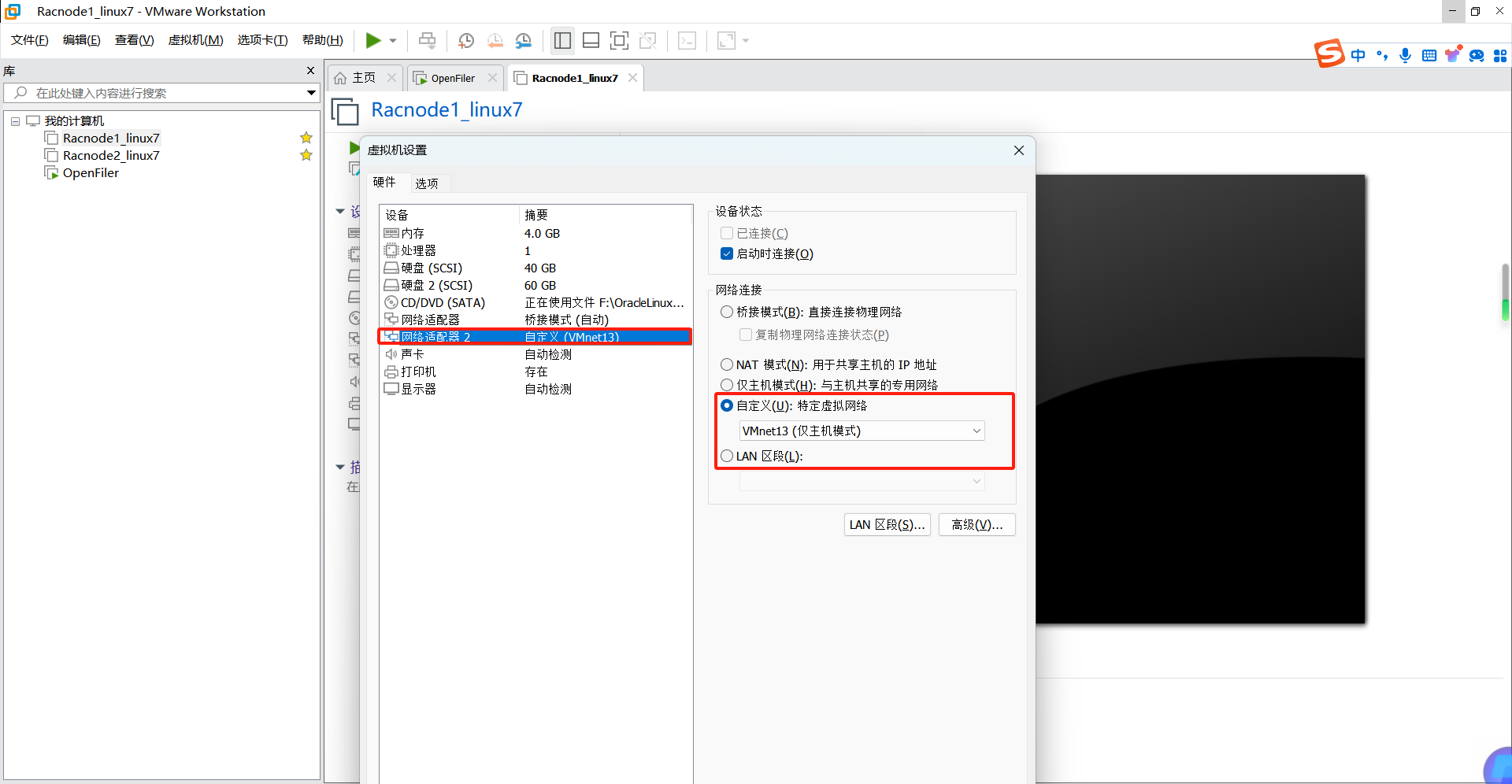

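2.5 Network interface configuration for the database server virtual machines

An Oracle RAC installation requires at least two network interfaces. Add two NICs to each virtual machine: one for public IP traffic and one for private IP traffic. The private IP must be on its own subnet.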

Public NIC configuration (identical on node1 and node2 except for the IP address)

[root@node1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE="Ethernet"

PROXY_METHOD="none"

BROWSER_ONLY="no"

BOOTPROTO="none"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="yes"

IPV6INIT="yes"

IPV6_AUTOCONF="yes"

IPV6_DEFROUTE="yes"

IPV6_FAILURE_FATAL="no"

IPV6_ADDR_GEN_MODE="stable-privacy"

NAME="ens33"

UUID="09a8e3d2-b5be-48fa-80cc-a752eefd5c67"

DEVICE="ens33"

ONBOOT="yes"

IPADDR="192.168.2.80"

PREFIX="24"

GATEWAY="192.168.2.1"

IPV6_PRIVACY="no"

Virtual NIC configuration (identical on node1 and node2 except for the IP address)

[root@node1 ~]# cd /etc/sysconfig/network-scripts/

[root@node1 network-scripts]# cp ifcfg-ens33 ifcfg-ens33:1

[root@node1 network-scripts]# cat ifcfg-ens33:1

TYPE="Ethernet"

PROXY_METHOD="none"

BROWSER_ONLY="no"

BOOTPROTO="none"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="yes"

IPV6INIT="no"

IPV6_AUTOCONF="yes"

IPV6_DEFROUTE="yes"

IPV6_FAILURE_FATAL="no"

IPV6_ADDR_GEN_MODE="stable-privacy"

#NAME="ens33"

NAME="ens33:1"

UUID="09a8e3d2-b5be-48fa-80cc-a752eefd5c67"

DEVICE="ens33:1"

ONBOOT="yes"

IPADDR="192.168.2.82"

PREFIX="24"

GATEWAY="192.168.2.1"

IPV6_PRIVACY="no"

Private NIC configuration (identical on node1 and node2 except for the IP address)

[root@node1 ~]# cd /etc/sysconfig/network-scripts

[root@node1 network-scripts]# more ifcfg-ens38

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=no

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens38

UUID=ed5ebd6e-2d01-4402-a7f9-82874cb64023

DEVICE=ens38

ONBOOT=yes

IPADDR=1.1.1.80

PREFIX=24

GATEWAY=1.1.1.0

2.6 Configure the hosts file (identical on node1 and node2)

[root@node1 ~]# cat /etc/hosts

#127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

#::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#Public IP

192.168.2.80 node1

192.168.2.81 node2

#Virtual IP

192.168.2.82 node1-vip

192.168.2.83 node2-vip

#Private IP

1.1.1.80 node1-priv

1.1.1.81 node2-priv

#Scan IP

192.168.2.84 node-scan
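Because both nodes must carry the same seven entries, a quick check for missing names can catch copy mistakes before the installer does. The following is a minimal sketch; the function name `missing_rac_hosts` is hypothetical, and the name list is taken from the IP plan in 2.4.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every RAC host name from the IP plan that is
# missing from a hosts file (default /etc/hosts). No output means all present.
missing_rac_hosts() {
    local hosts_file="${1:-/etc/hosts}" name
    for name in node1 node2 node1-vip node2-vip node1-priv node2-priv node-scan; do
        # Skip comment lines and match the name as a whole field after the IP.
        if ! awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$hosts_file"; then
            echo "$name"
        fi
    done
}
```

Running `missing_rac_hosts` with no argument on each node checks /etc/hosts directly.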

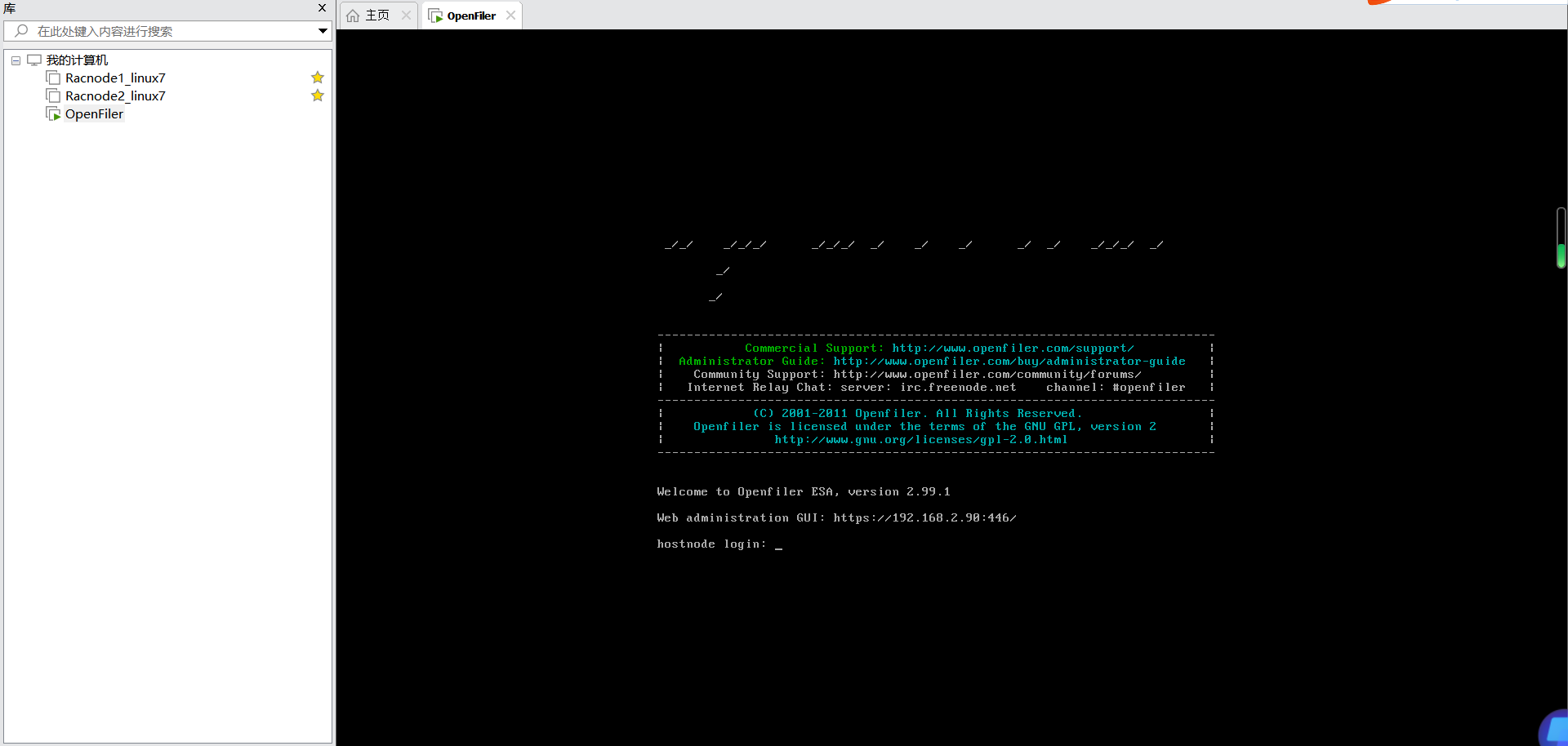

3. Install the shared storage software

For installing the OpenFiler shared storage software, see the following link:

https://www.cnblogs.com/lhrbest/p/6345157.html

II. Configure shared storage and present it to both database server nodes (shared by node1 and node2), using multipathd + udev

1. Shared disk group plan

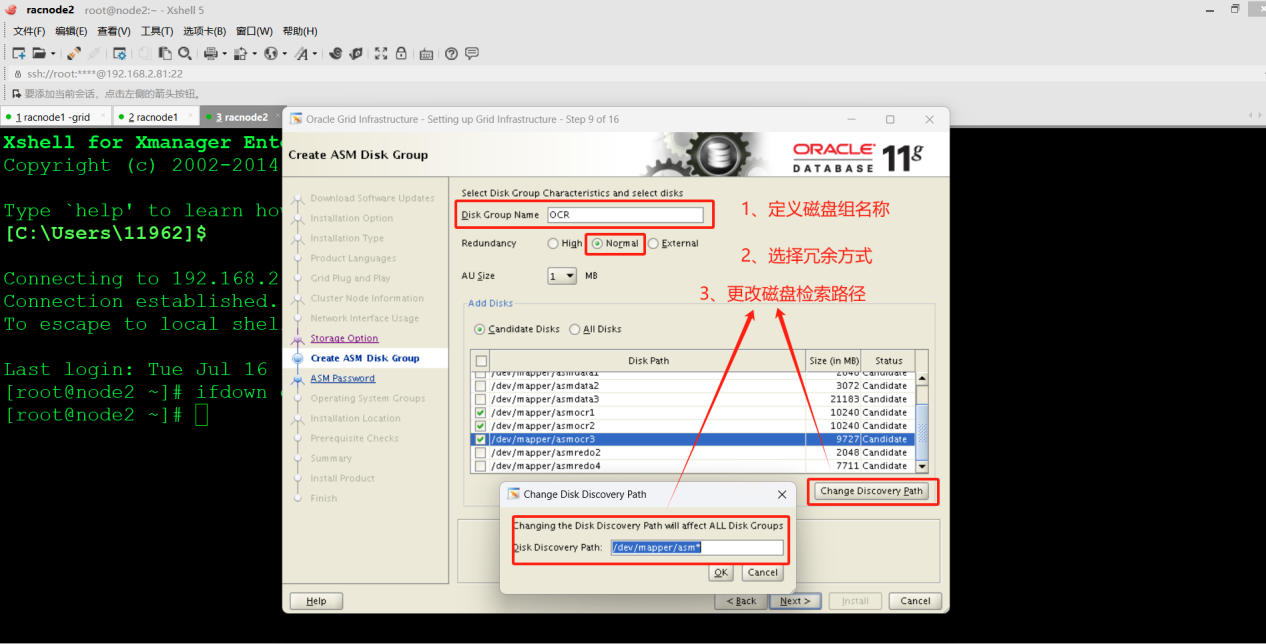

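| Disk path | Disk group | Size | Purpose | Redundancy |
| --- | --- | --- | --- | --- |
| /dev/sdc1 | DATA | 10G | Data storage | External |
| /dev/sdc2 | DATA | 10G | Data storage | External |
| /dev/sdc3 | DATA | 10G | Data storage | External |
| /dev/sdd1 | OCR | 10G | Voting disk file storage | Normal |
| /dev/sdd2 | OCR | 10G | Voting disk file storage | Normal |
| /dev/sdd3 | OCR | 10G | Voting disk file storage | Normal |
| /dev/sdf2 | REDO | 5G | Redo logs + system parameter file storage | External |
| /dev/sdf4 | REDO | 5G | Redo logs + system parameter file storage | External |
| /dev/sde1 | ARCH | 5G | Archived logs | External |
| /dev/sde2 | ARCH | 5G | Archived logs | External |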
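The redundancy column determines how much of each group's raw size is usable: external redundancy keeps one copy of the data, normal redundancy mirrors it two ways, and high redundancy three ways. A rough sketch of that arithmetic follows. The function name `asm_usable_gb` is hypothetical, and the result ignores ASM metadata and the free space ASM wants to keep in reserve for rebalancing, so real figures will be somewhat lower.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rough usable capacity of an ASM disk group in GB.
# Args: redundancy (external|normal|high), disk count, size per disk in GB.
asm_usable_gb() {
    local redundancy="$1" disks="$2" size_gb="$3" copies
    case "$redundancy" in
        external) copies=1 ;;   # no ASM mirroring
        normal)   copies=2 ;;   # two-way mirroring
        high)     copies=3 ;;   # three-way mirroring
        *) echo "unknown redundancy: $redundancy" >&2; return 1 ;;
    esac
    # Integer division: raw capacity divided by the number of copies kept.
    echo $(( disks * size_gb / copies ))
}
```

For the plan above, `asm_usable_gb external 3 10` gives the DATA estimate and `asm_usable_gb normal 3 10` gives the OCR estimate.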

2. Configure multipath (configure on node1; copy the configuration file to node2 and reuse it)

2.1 Install the multipath software

[root@ypwtest ~]# yum -y install *multipath*

2.2 Enable the service at boot

[root@ypwtest ~]# systemctl enable multipathd.service

2.3 Check whether the kernel module is loaded; if it is not, load it

[root@ypwtest ~]# lsmod | grep dm_multipath

Load the kernel module:

[root@ypwtest ~]# modprobe dm_round-robin

[root@ypwtest ~]# lsmod | grep dm_multipath

2.4 Generate the multipath configuration file and start multipathd

[root@ypwtest ~]# mpathconf --enable

[root@ypwtest ~]# cat /etc/multipath.conf

[root@ypwtest ~]# systemctl start multipathd.service

2.5 Look up the disk WWIDs

[root@node1 ~]# /usr/lib/udev/scsi_id -g -u -d /dev/sdc

14f504e46494c45526832554559422d44507a642d3059754d

[root@node1 ~]# /usr/lib/udev/scsi_id -g -u -d /dev/sdd

14f504e46494c4552654b634431432d6b744f392d52505031

[root@node1 ~]# /usr/lib/udev/scsi_id -g -u -d /dev/sde

14f504e46494c4552394a555679452d644a33522d7172727a

[root@node1 ~]# /usr/lib/udev/scsi_id -g -u -d /dev/sdf

14f504e46494c455230723361424c2d6e4a39532d6b5a3239

2.6 Add the disk WWIDs to the /etc/multipath/wwids file

[root@node1 ~]# multipath -a /dev/sdc

wwid '14f504e46494c45526832554559422d44507a642d3059754d'

[root@node1 ~]# multipath -a /dev/sdd

wwid '14f504e46494c4552654b634431432d6b744f392d52505031'

[root@node1 ~]# multipath -a /dev/sde

wwid '14f504e46494c4552394a555679452d644a33522d7172727a'

[root@node1 ~]# multipath -a /dev/sdf

wwid '14f504e46494c455230723361424c2d6e4a39532d6b5a3239'

2.7 Edit the /etc/multipath.conf configuration file

Set the blacklist, bind the disk WWIDs, and define custom aliases. The following is for reference:

defaults {

user_friendly_names yes

find_multipaths yes

}

blacklist {

wwid 36000c2916d86d3eb931c2e98de8db0e0

#devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"

devnode "^sda"

devnode "^sdb"

}

# alias: custom alias for the device

# wwid: disk ID, assigned according to the disk group plan

# multibus: all paths are used at the same time

# round-robin 0: load-balancing mode

# manual: failback is switched manually

# no_path_retry: number of times the system retries a failed path

multipaths {

multipath {

wwid 14f504e46494c4552654b634431432d6b744f392d52505031

alias asmocr

path_grouping_policy multibus

path_selector "round-robin 0"

failback manual

rr_weight priorities

no_path_retry 5

}

multipath {

wwid 14f504e46494c45526832554559422d44507a642d3059754d

alias asmdata

path_grouping_policy multibus

path_selector "round-robin 0"

failback manual

rr_weight priorities

no_path_retry 5

}

multipath {

wwid 14f504e46494c4552394a555679452d644a33522d7172727a

alias asmarch

path_grouping_policy multibus

path_selector "round-robin 0"

failback manual

rr_weight priorities

no_path_retry 5

}

multipath {

wwid 14f504e46494c455230723361424c2d6e4a39532d6b5a3239

alias asmredo

path_grouping_policy multibus

path_selector "round-robin 0"

failback manual

rr_weight priorities

no_path_retry 5

}

}
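The four multipath stanzas in the reference configuration differ only in WWID and alias, so they can be generated rather than typed by hand. The following is a minimal sketch under that assumption; the function name `multipath_stanza` is hypothetical, and the per-device settings are copied from the reference configuration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one multipath { } stanza for a WWID/alias pair,
# using the same per-device settings as the reference configuration.
multipath_stanza() {
    local wwid="$1" alias_name="$2"
    cat <<EOF
    multipath {
        wwid                 ${wwid}
        alias                ${alias_name}
        path_grouping_policy multibus
        path_selector        "round-robin 0"
        failback             manual
        rr_weight            priorities
        no_path_retry        5
    }
EOF
}
```

For example, `multipath_stanza 14f504e46494c4552654b634431432d6b744f392d52505031 asmocr` prints the stanza for the OCR disk; the output still has to be placed inside the multipaths { } section by hand.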

Multipath -F --激活多路径

Multipath -v2

Multipath -v3 --生成多路径

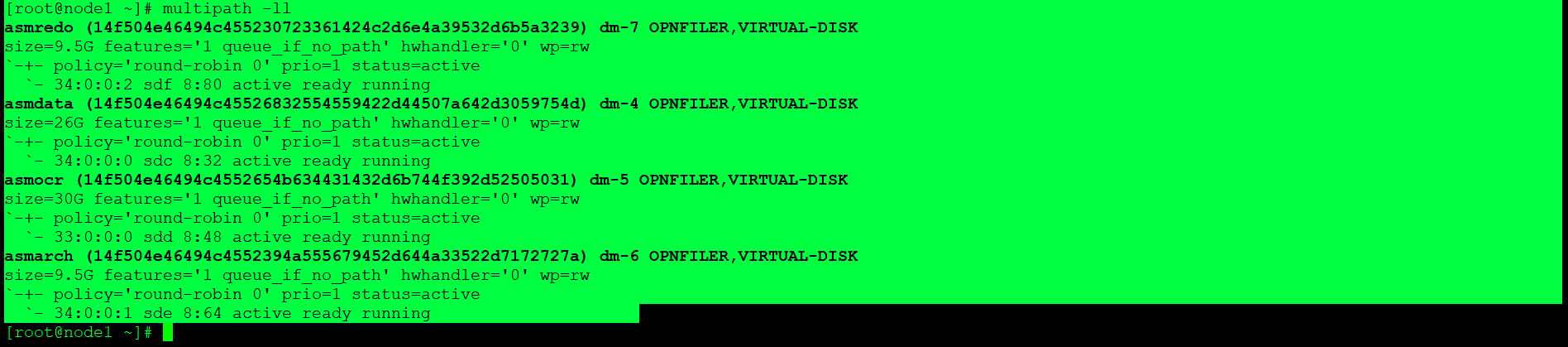

查看多路径状态

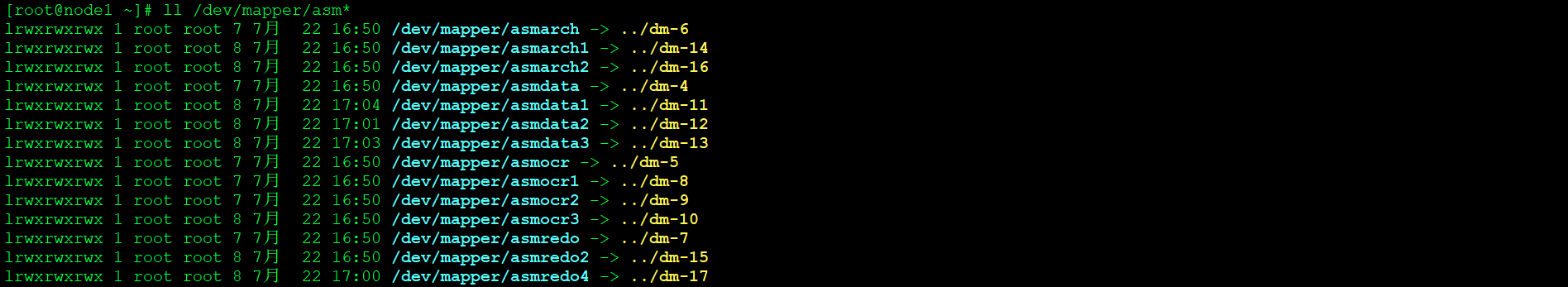

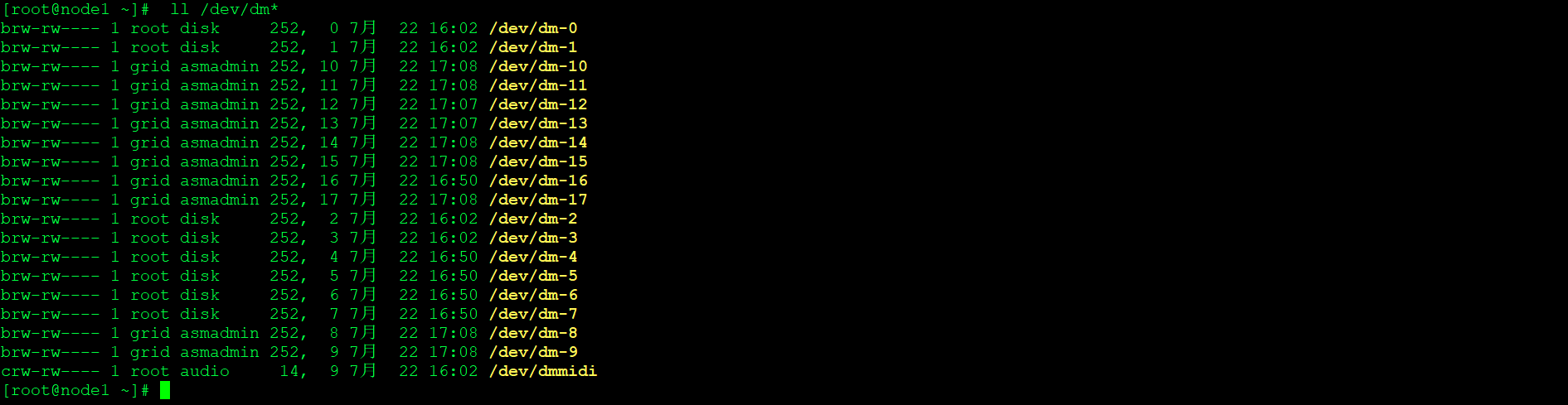

3. Configure udev to set the owner and permissions of the disk group devices (configure on node1; copy the rules file to node2 and reuse it)

[root@node2 ~]# cat /etc/udev/rules.d/99-dm.rules

ENV{DM_NAME}=="asmocr1",OWNER:="grid",GROUP:="asmadmin",MODE:="660"

ENV{DM_NAME}=="asmocr2",OWNER:="grid",GROUP:="asmadmin",MODE:="660"

ENV{DM_NAME}=="asmocr3",OWNER:="grid",GROUP:="asmadmin",MODE:="660"

ENV{DM_NAME}=="asmdata1",OWNER:="grid",GROUP:="asmadmin",MODE:="660"

ENV{DM_NAME}=="asmdata2",OWNER:="grid",GROUP:="asmadmin",MODE:="660"

ENV{DM_NAME}=="asmdata3",OWNER:="grid",GROUP:="asmadmin",MODE:="660"

ENV{DM_NAME}=="asmarch1",OWNER:="grid",GROUP:="asmadmin",MODE:="660"

ENV{DM_NAME}=="asmarch2",OWNER:="grid",GROUP:="asmadmin",MODE:="660"

ENV{DM_NAME}=="asmredo2",OWNER:="grid",GROUP:="asmadmin",MODE:="660"

ENV{DM_NAME}=="asmredo4",OWNER:="grid",GROUP:="asmadmin",MODE:="660"

Reload the udev rules so that they take effect:

udevadm control --reload-rules

udevadm trigger --type=devices --action=change
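The ten rules in 99-dm.rules are identical apart from the device-mapper name, so the file can be produced from a list of names. The following is a minimal sketch; the function name `asm_udev_rules` is hypothetical, while the owner, group and mode are the ones used in the rules above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one udev rule per device-mapper partition name,
# with the same owner, group and mode as the rules file shown above.
asm_udev_rules() {
    local name
    for name in "$@"; do
        printf 'ENV{DM_NAME}=="%s",OWNER:="grid",GROUP:="asmadmin",MODE:="660"\n' "$name"
    done
}
```

Redirecting the output, for example `asm_udev_rules asmocr1 asmocr2 asmocr3 > /etc/udev/rules.d/99-dm.rules`, would create the file; the reload and trigger commands are still needed afterwards.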

III. Configure SSH mutual trust (grid and oracle users)

1. su - grid (run separately on node1 and node2)

[grid@node1 ~]$ ssh-keygen -t rsa

[grid@node1 ~]$ ssh-keygen -t dsa

su - oracle (run separately on node1 and node2)

[oracle@node1 ~]$ ssh-keygen -t rsa

[oracle@node1 ~]$ ssh-keygen -t dsa

2. su - grid (run separately on node1 and node2)

[grid@node1 ~]$ cat ~/.ssh/*.pub >>~/.ssh/authorized_keys

[grid@node1 ~]$ ssh grid@node2 cat ~/.ssh/*.pub >>~/.ssh/authorized_keys

[grid@node2 ~]$ cat ~/.ssh/*.pub >>~/.ssh/authorized_keys

[grid@node2 ~]$ ssh grid@node1 cat ~/.ssh/*.pub >>~/.ssh/authorized_keys

3. su - oracle (run separately on node1 and node2)

[oracle@node1 ~]$ cat ~/.ssh/*.pub >>~/.ssh/authorized_keys

[oracle@node1 ~]$ ssh oracle@node2 cat ~/.ssh/*.pub >>~/.ssh/authorized_keys

[oracle@node1 ~]$ ssh oracle@node1 cat ~/.ssh/*.pub >>~/.ssh/authorized_keys

[oracle@node2 ~]$ cat ~/.ssh/*.pub >>~/.ssh/authorized_keys

[oracle@node2 ~]$ ssh oracle@node2 cat ~/.ssh/*.pub >>~/.ssh/authorized_keys

[oracle@node2 ~]$ ssh oracle@node1 cat ~/.ssh/*.pub >>~/.ssh/authorized_keys

4. Establish equivalence between the nodes

Run on both nodes (node1 and node2 separately):

[root@node1 ~]# ssh node1 date

[root@node1 ~]# ssh node2 date

[root@node1 ~]# ssh node1-vip date

[root@node1 ~]# ssh node2-vip date
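The date checks above can be wrapped in a loop that fails instead of prompting when a host still asks for a password. The following is a minimal sketch; the function name `check_ssh_equivalence` and the `SSH_CMD` override variable are hypothetical, while `BatchMode` and `ConnectTimeout` are standard OpenSSH client options.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm password-less SSH to every listed host.
# SSH_CMD can be overridden (for example with a stub) when testing.
check_ssh_equivalence() {
    local ssh_cmd="${SSH_CMD:-ssh}" host failed=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of prompting for a password.
        if "$ssh_cmd" -o BatchMode=yes -o ConnectTimeout=5 "$host" date >/dev/null 2>&1; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    # Non-zero when at least one host could not be reached without a password.
    return "$failed"
}
```

It would be run as the grid user and again as the oracle user on each node, for example `check_ssh_equivalence node1 node2 node1-vip node2-vip`. The first connection to a new host still needs its host key accepted once.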

IV. Create the users and groups (run on both node1 and node2)

/usr/sbin/groupadd -g 501 oinstall

/usr/sbin/groupadd -g 502 dba

groupadd oper

groupadd backupdba

groupadd dgdba

groupadd kmdba

groupadd asmdba

groupadd asmoper

groupadd asmadmin

/usr/sbin/useradd -m -u 501 -g oinstall -G dba oracle

useradd -g oinstall -G asmadmin,asmdba,dba grid

usermod -g oinstall -G dba,backupdba,dgdba,kmdba,asmdba,asmoper oracle

passwd oracle --set the oracle user's password

passwd grid --set the grid user's password
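Since group membership has to match on both nodes, it helps to compare what `id -Gn` reports against the groups assigned above. The following is a minimal sketch; the function name `missing_groups` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the expected groups missing from a membership list.
# Arg 1: space-separated groups the user belongs to (for example from `id -Gn`).
# Remaining args: the groups the user is expected to have.
missing_groups() {
    local have=" $1 " want
    shift
    for want in "$@"; do
        case "$have" in
            *" $want "*) ;;        # present
            *) echo "$want" ;;     # missing
        esac
    done
}
```

For example, `missing_groups "$(id -Gn grid)" oinstall asmadmin asmdba dba` prints nothing when the grid user was created as shown, and the same call with the oracle user's group list checks the usermod step.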

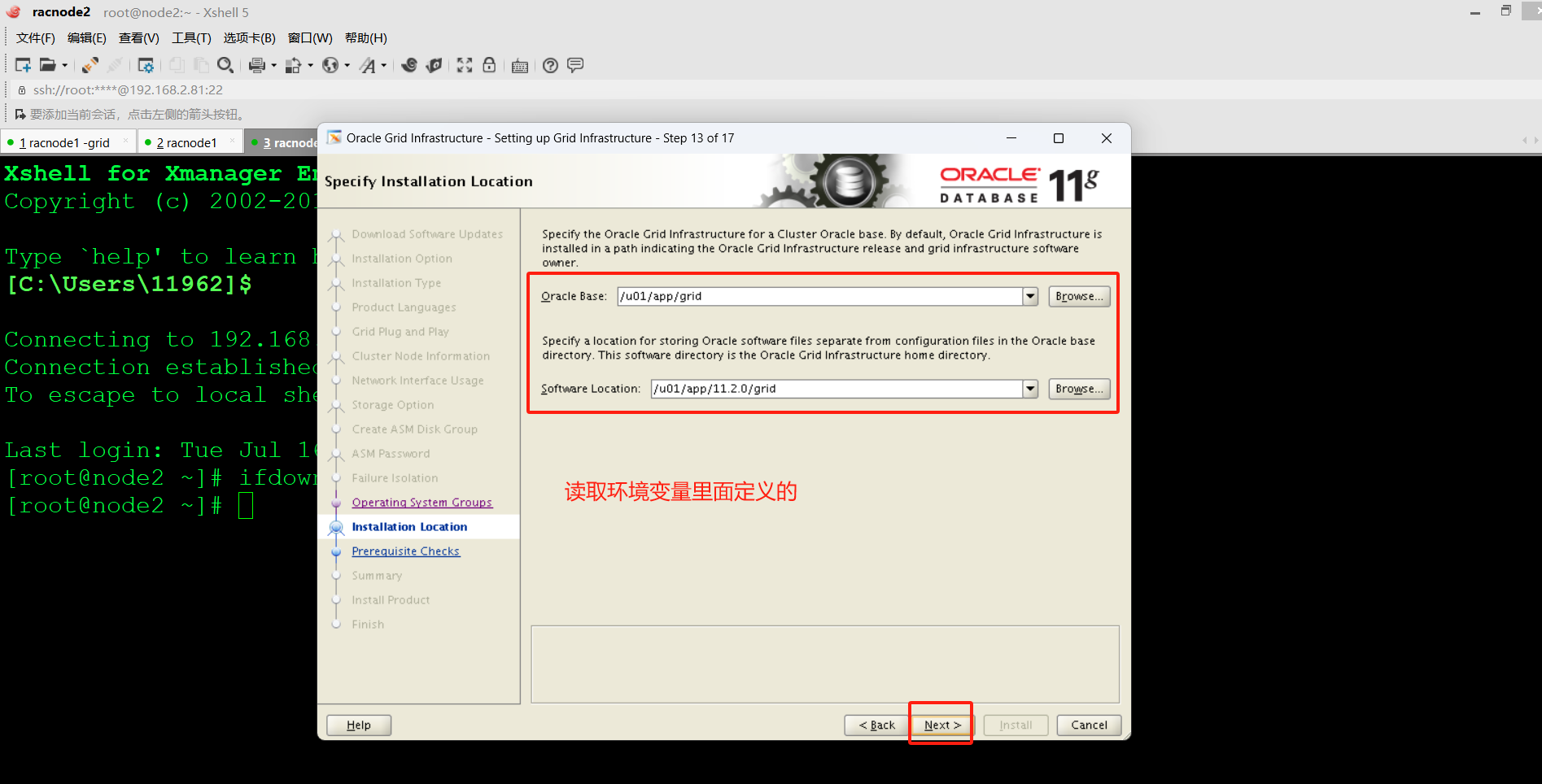

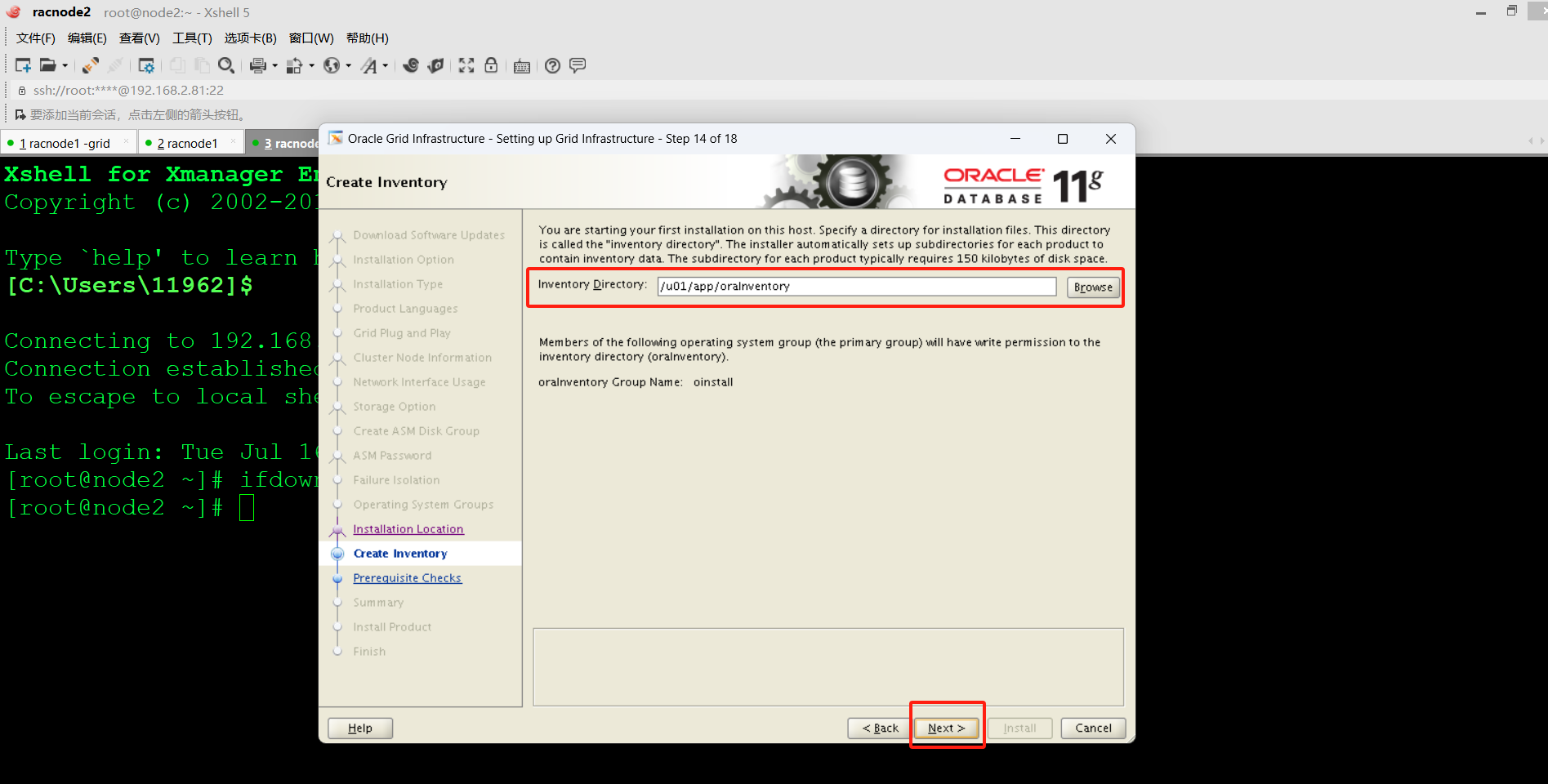

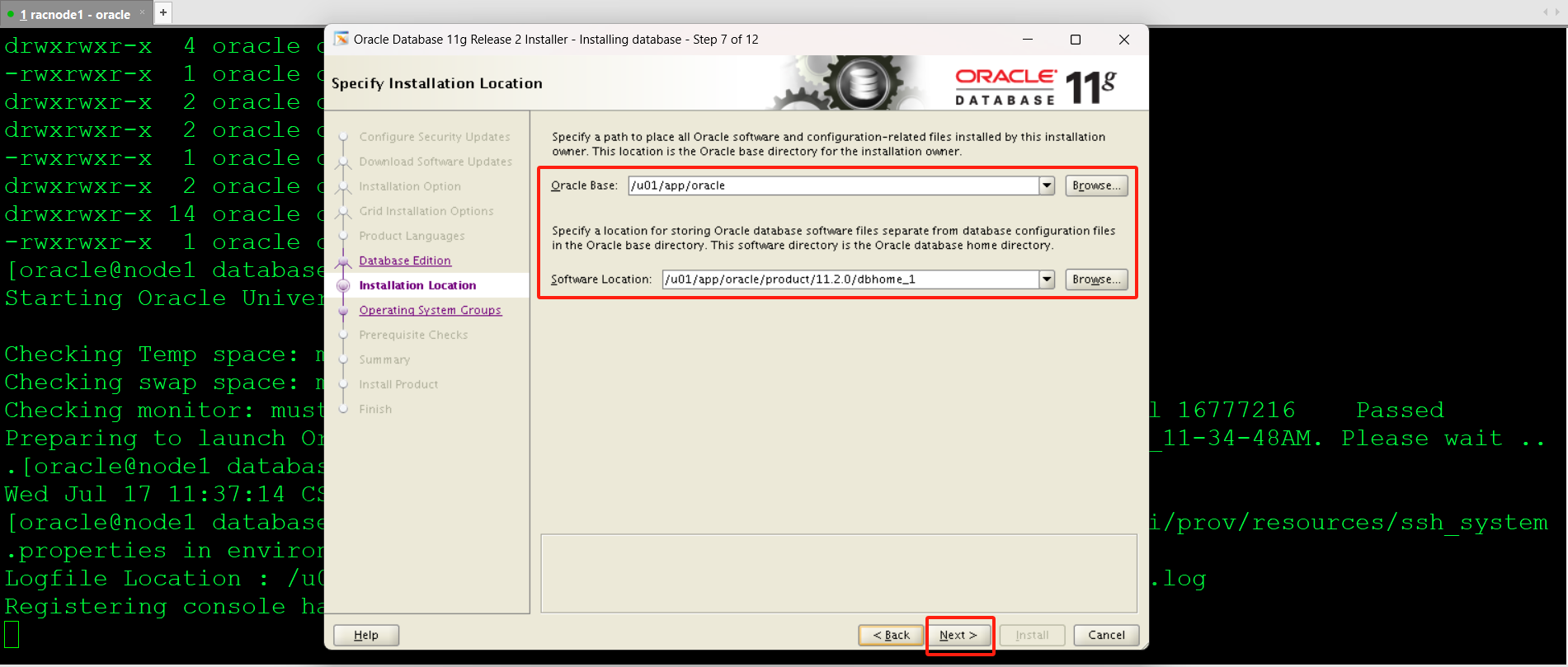

V. Create the installation directories (run on both node1 and node2)

mkdir -p /u01/app/grid

mkdir -p /u01/app/oracle

mkdir -p /u01/app/11.2.0/grid

mkdir -p /u01/app/oracle/product/11.2.0/dbhome_1

mkdir -p /u01/app/oraInventory

chown -R oracle:oinstall /u01/app/oracle/product/11.2.0/dbhome_1/

chown -R grid:oinstall /u01/app/grid

chown -R grid:oinstall /u01/app/11.2.0

chown oracle:oinstall /u01/app/oracle

chmod -R 775 /u01

chown -R grid:oinstall /u01/app/oraInventory

chmod -R 775 /u01/app/oraInventory

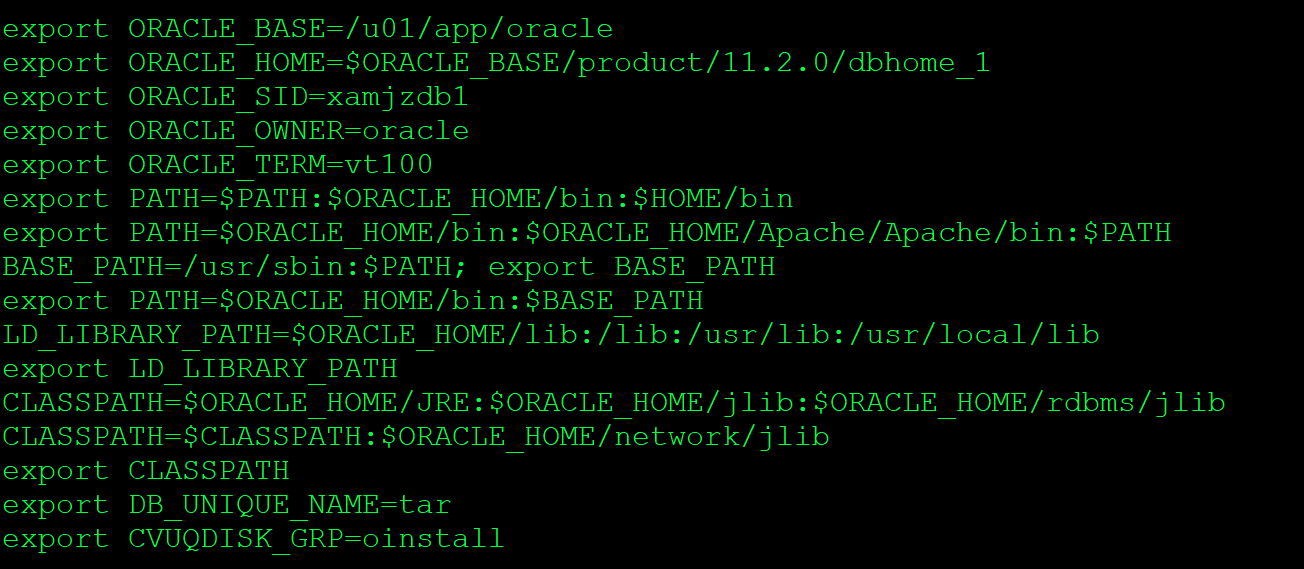

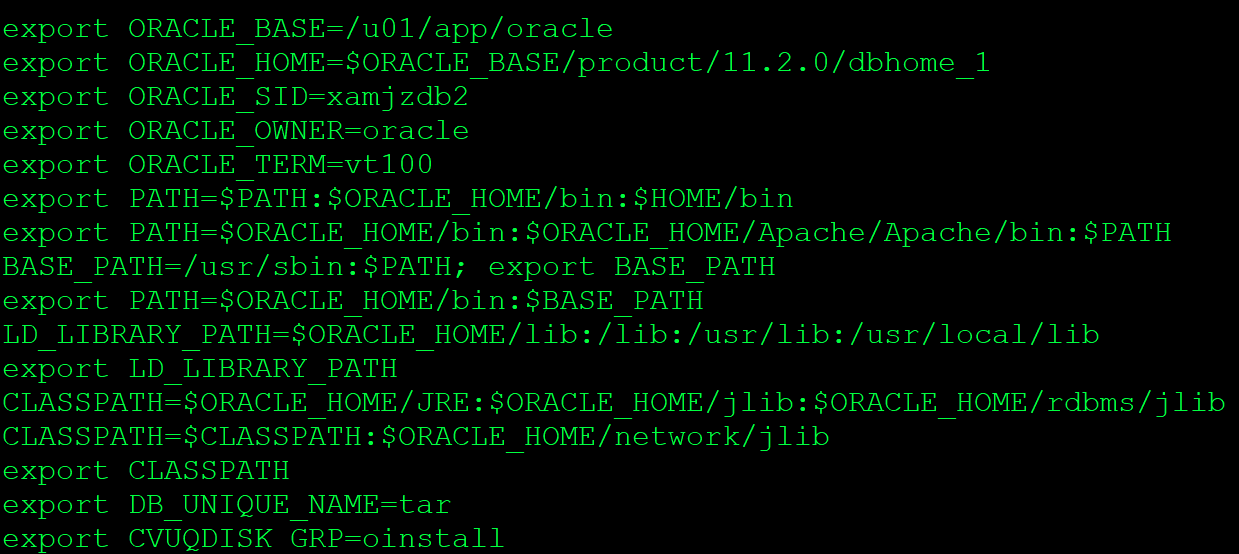

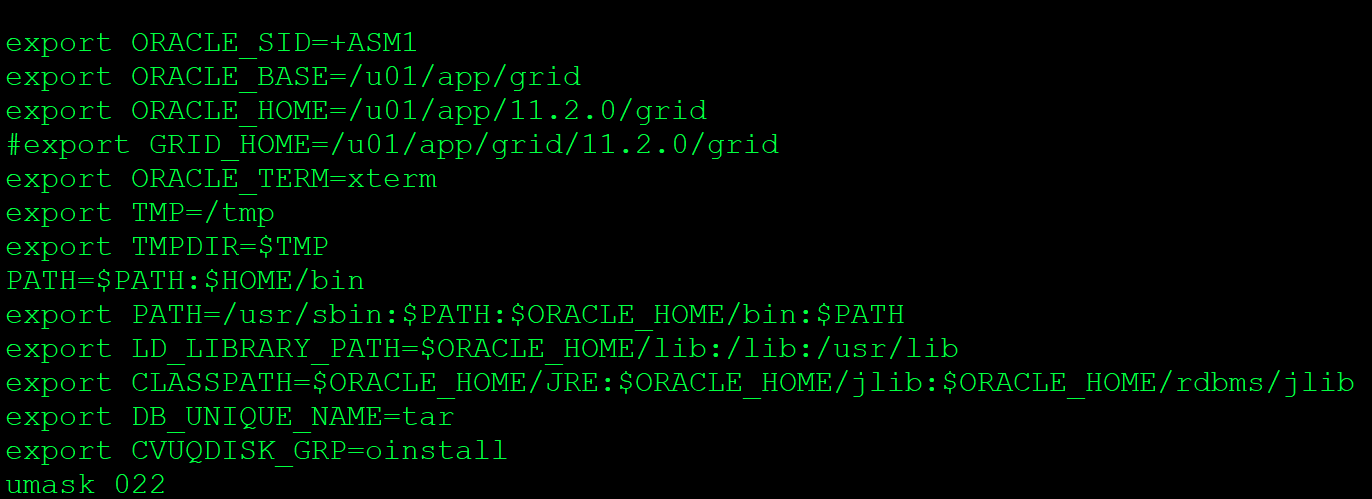

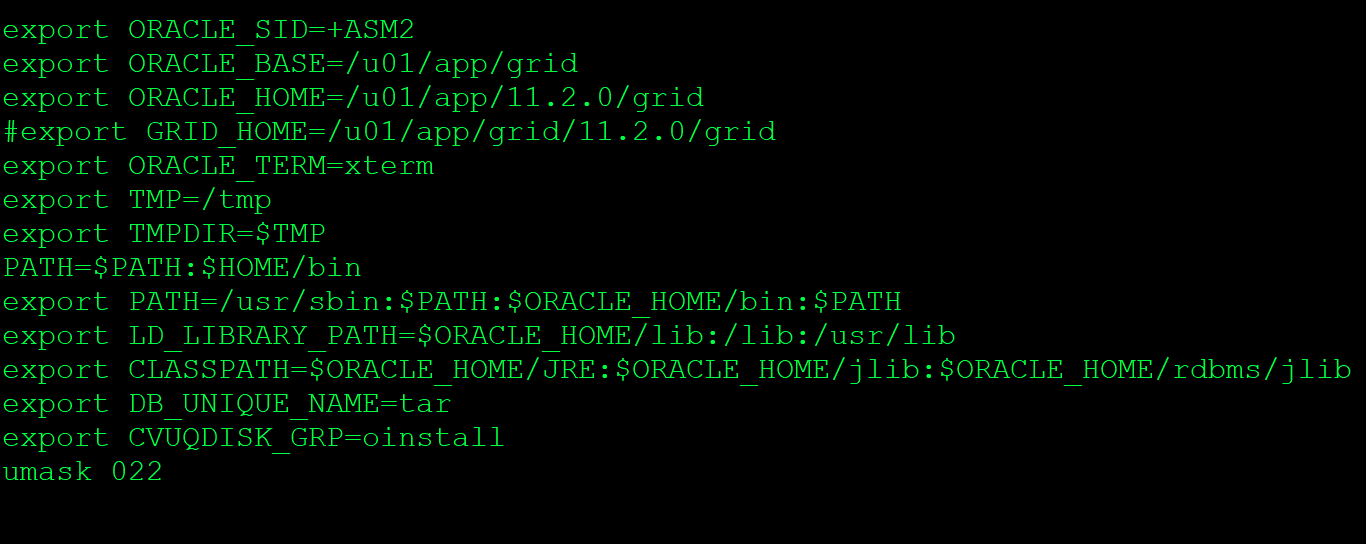

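VI. Configure the oracle and grid environment variables (run on both node1 and node2)

********** Oracle environment variable configuration **********

The Oracle instance name (ORACLE_SID) must be xamjzdb1 on node1 and xamjzdb2 on node2.

********** grid environment variable configuration **********

The ASM instance name (ORACLE_SID) must be +ASM1 on node1 and +ASM2 on node2.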
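The profile contents themselves are not listed in this manual. The following is a sketch of what the key lines might look like, assuming the directories created in section V are used as ORACLE_BASE and ORACLE_HOME. The function name `rac_profile_lines` is hypothetical, and any further variables the author may have set are unknown.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print profile lines for a user (oracle|grid) and a
# node number (1|2). Paths are assumed from the directories created earlier.
rac_profile_lines() {
    local user="$1" node_no="$2"
    case "$user" in
        oracle)
            echo "export ORACLE_BASE=/u01/app/oracle"
            echo "export ORACLE_HOME=/u01/app/oracle/product/11.2.0/dbhome_1"
            echo "export ORACLE_SID=xamjzdb${node_no}"
            ;;
        grid)
            echo "export ORACLE_BASE=/u01/app/grid"
            echo "export ORACLE_HOME=/u01/app/11.2.0/grid"
            echo "export ORACLE_SID=+ASM${node_no}"
            ;;
        *) echo "unknown user: $user" >&2; return 1 ;;
    esac
    # Single quotes keep the variables unexpanded until the profile is sourced.
    echo 'export PATH=$ORACLE_HOME/bin:$PATH'
}
```

For example, `rac_profile_lines grid 2 >> /home/grid/.bash_profile` would append the grid settings on node2.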

VII. Install the dependency packages (run on both node1 and node2)

yum -y install gtk-vnc*

yum -y install libvncserver*

yum -y install tigervnc*

yum -y install autoconf*

--yum install automake*

yum -y install binutils-*

yum -y install compat*

--yum install compat-db*

--yum install elfutils-libelf-0.*.i386.rpm

yum -y install elfutils-libelf-devel*

yum -y install elfutils-libs*

--yum install elfutils-0.*

yum -y install glibc*

yum -y install gcc*

--yum install gnome-screensaver*

--yum install ksh*

yum -y install libXp*

--yum install libXt*

--yum install libXt*i686.rpm

yum -y install libstdc++-*

yum -y install libaio*

--yum install make*

yum -y install openmotif*

yum -y install rpm-*

yum -y install sysstat*

--yum -y install *gnome*

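VIII. Modify operating system parameters (run on both node1 and node2)

1. Modify the user login settings

Open /etc/pam.d/login with vi and append the following line to the end of the file:

session required pam_limits.so

2. Set operating-system resource limits for the grid and oracle users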

cp /etc/security/limits.conf /etc/security/limits.conf.`date +%Y%m%d`

echo "grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240

grid hard stack 32768

grid soft nproc 2047

grid hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft stack 10240

oracle hard stack 32768

oracle soft nproc 2047

oracle hard nproc 16384

root soft nproc 2047 " >> /etc/security/limits.conf

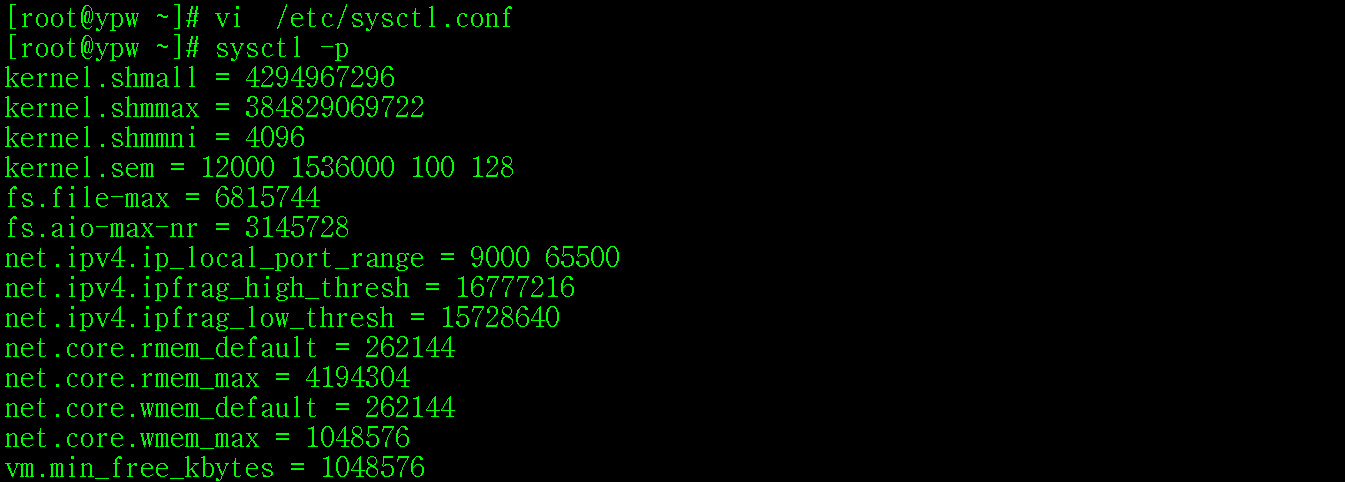

3. Configure the Linux kernel parameters

[root@ypw ~]# vi /etc/sysctl.conf

#ORACLE SETTING

kernel.shmall = 4294967296

kernel.shmmax = 384829069722

kernel.shmmni = 4096

kernel.sem = 12000 1536000 100 128

fs.file-max = 6815744

fs.aio-max-nr = 3145728

net.ipv4.ip_local_port_range = 9000 65500

net.ipv4.ipfrag_high_thresh = 16777216

net.ipv4.ipfrag_low_thresh = 15728640

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

vm.min_free_kbytes= 1048576

After that, run sysctl -p to apply the settings.
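To confirm that the running kernel picked up what was written to the file, the file value can be read back and compared with `sysctl -n`. The following is a minimal sketch of the file-reading half; the function name `sysctl_file_value` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value assigned to a key in a
# sysctl.conf-style file. If the key appears more than once, the last wins.
sysctl_file_value() {
    local file="$1" key="$2"
    awk -F= -v k="$key" '
        /^[[:space:]]*#/ { next }                 # skip comment lines
        {
            name = $1
            gsub(/^[[:space:]]+|[[:space:]]+$/, "", name)
            if (name == k) {
                val = $2
                gsub(/^[[:space:]]+|[[:space:]]+$/, "", val)
                found = val
            }
        }
        END { if (found != "") print found }
    ' "$file"
}
```

For example, comparing `sysctl_file_value /etc/sysctl.conf fs.file-max` with `sysctl -n fs.file-max` shows whether the setting is live.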

4. Modify /etc/profile

[root@ypw ~]# vi /etc/profile

if [ "$USER" = "oracle" ] || [ "$USER" = "grid" ]; then

if [ "$SHELL" = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

After that, run source /etc/profile to apply the changes.

IX. Compatibility package that must be installed separately (run on both node1 and node2)

[root@node1 ~]# cd /home/soft/

[root@node1 soft]# rpm -i --force --nodeps pdksh-5.2.14-30.x86_64.rpm

X. Install the Grid software

1. As root, set CVUQDISK_GRP to the group that will own the cvuqdisk package, then install the package

export CVUQDISK_GRP=oinstall (run on both node1 and node2)

[root@node1 ~]# cd /home/soft/grid/rpm/

[root@node1 rpm]# export CVUQDISK_GRP=oinstall

[root@node1 rpm]# rpm -ivh cvuqdisk-1.0.9-1.rpm

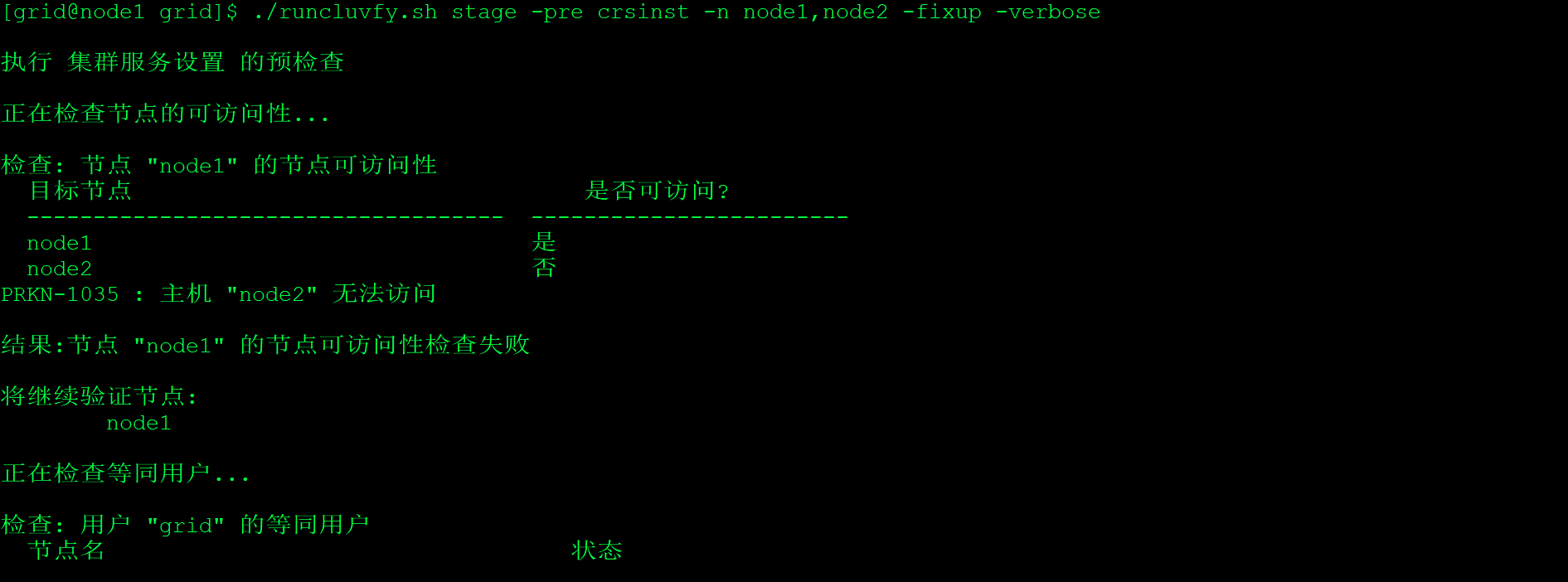

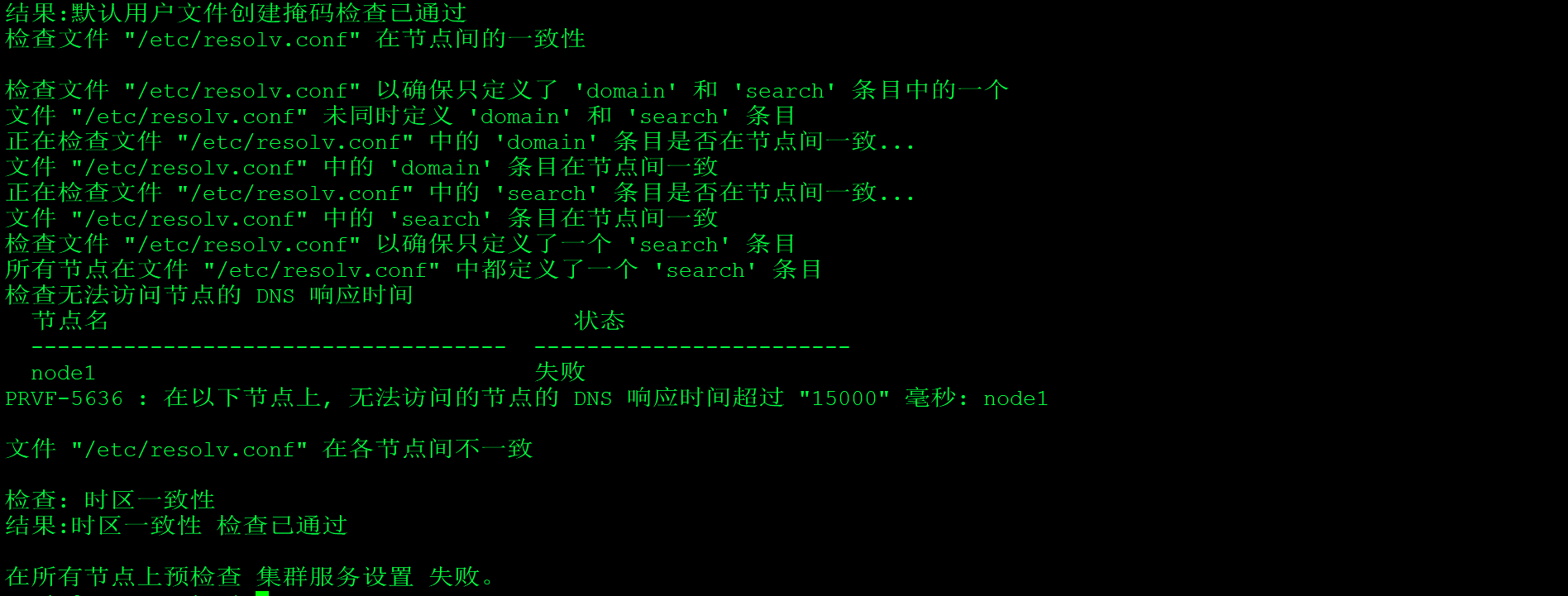

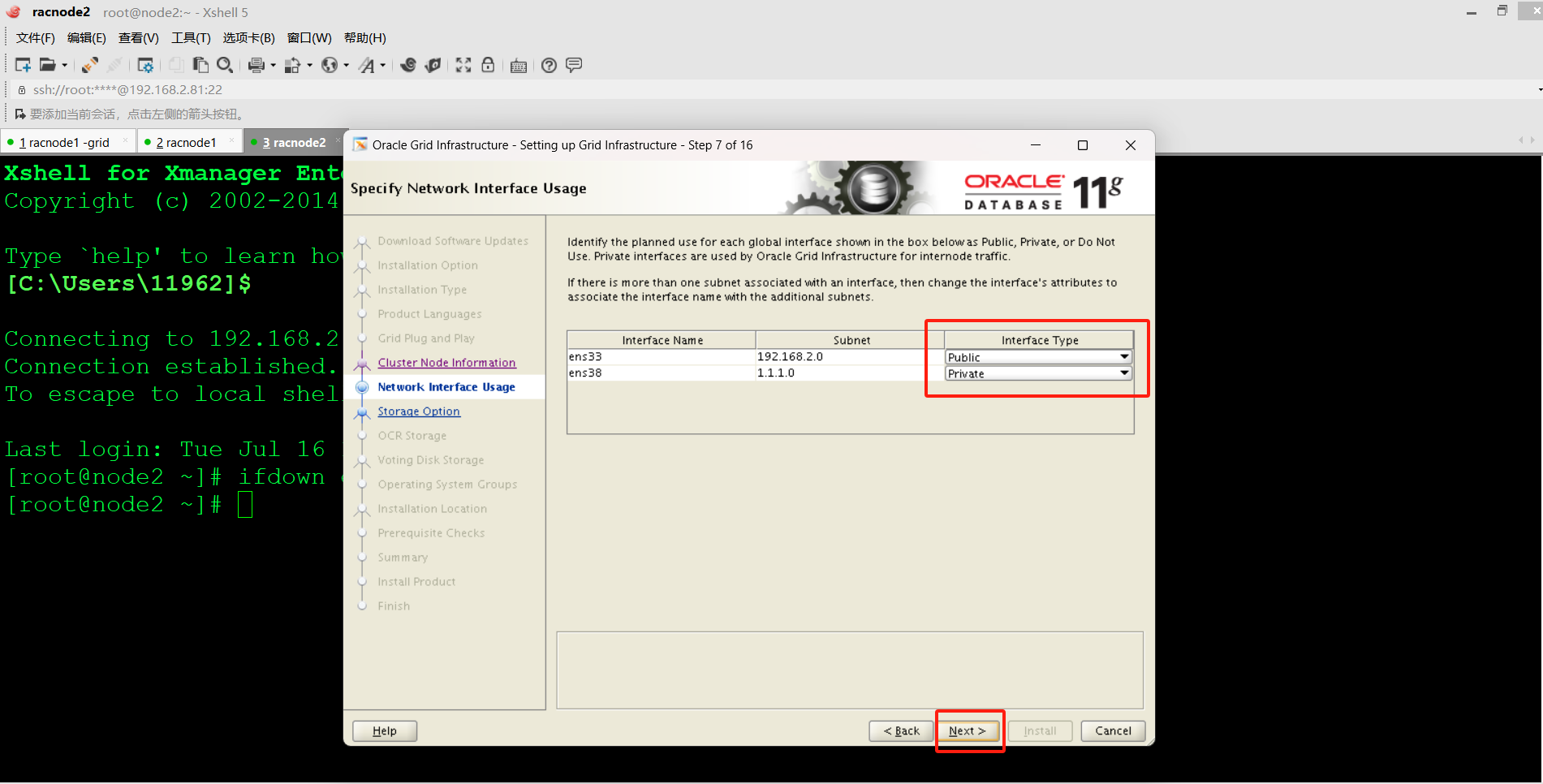

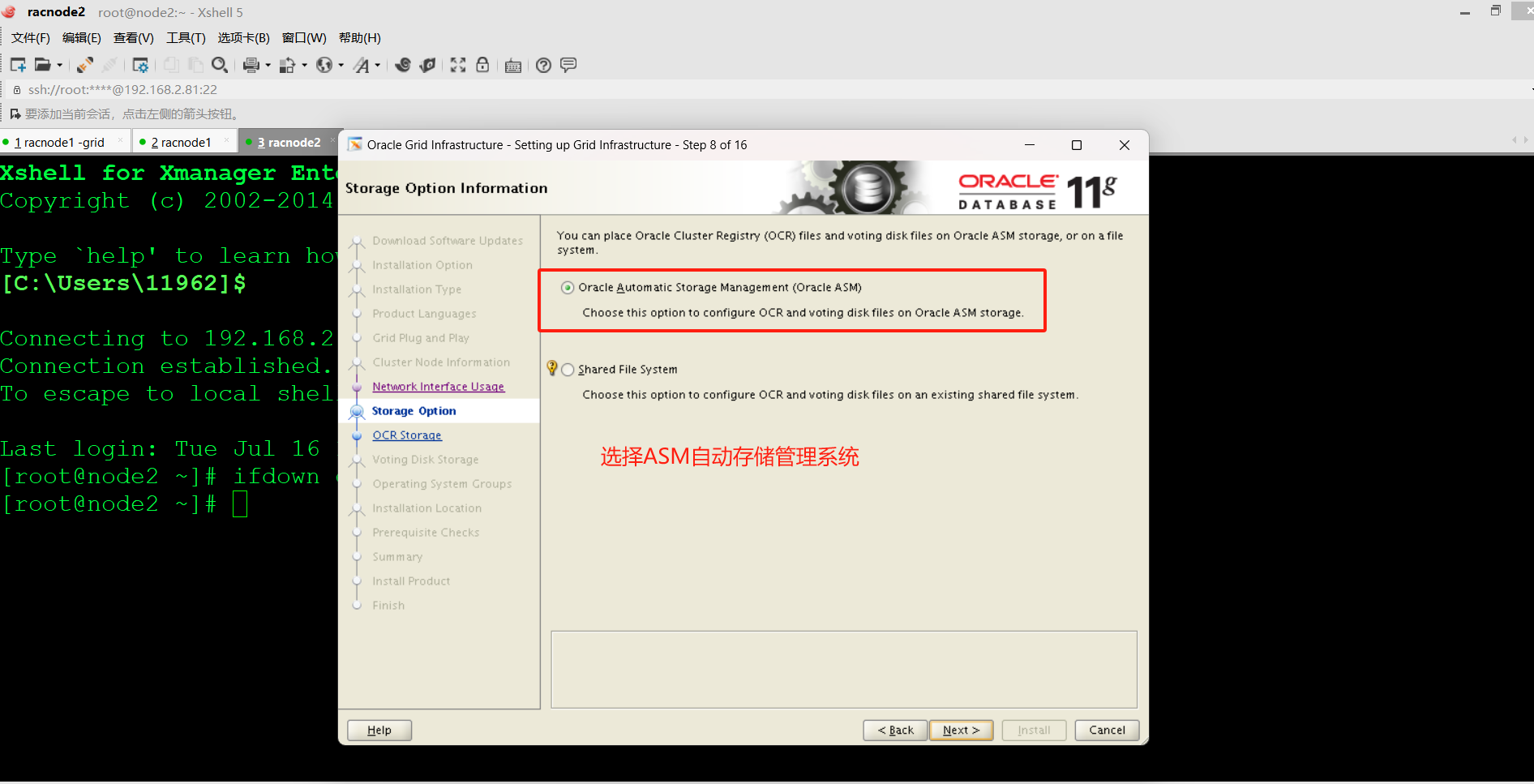

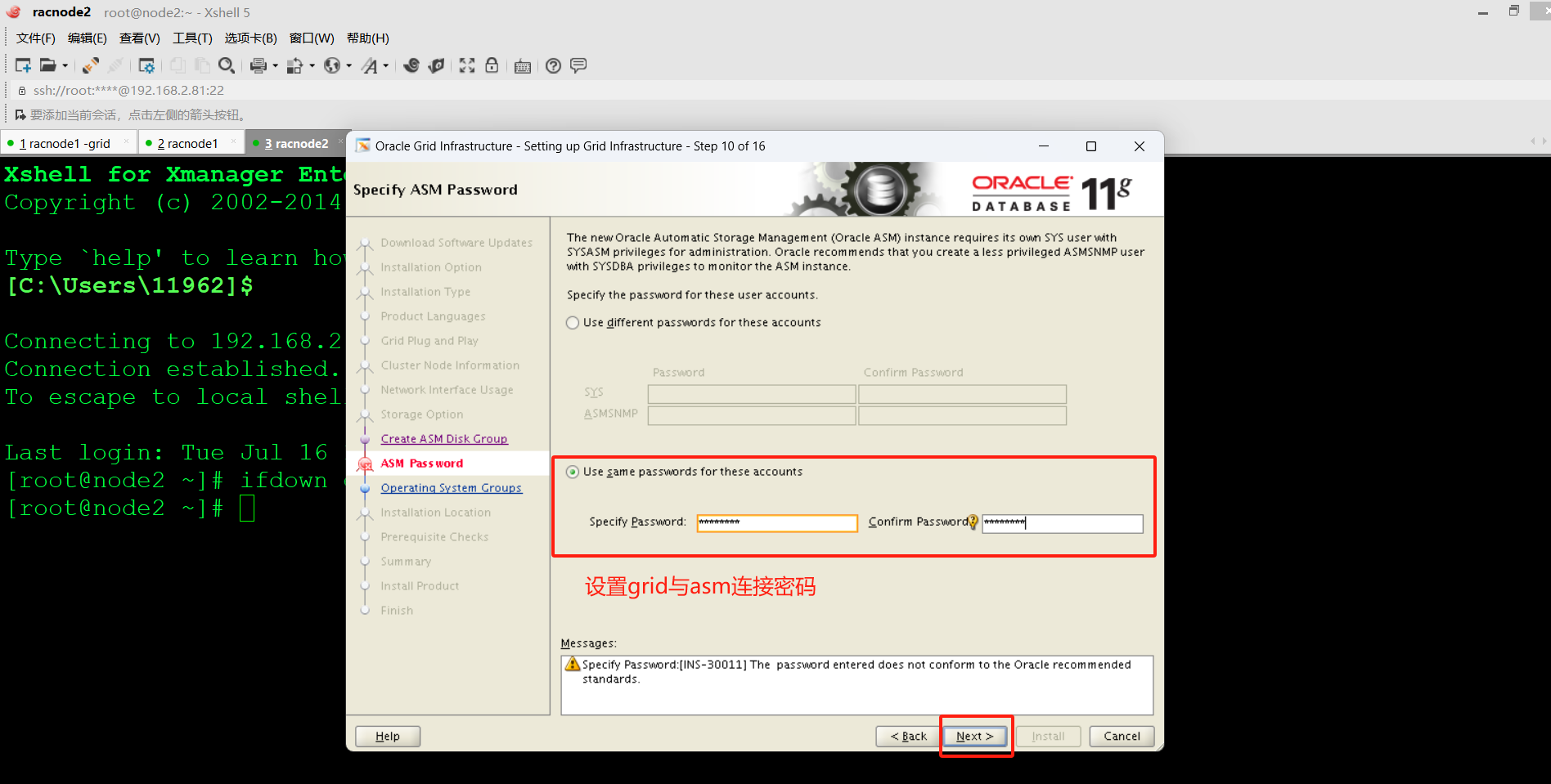

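XI. Pre-installation configuration check

As the grid user, run the following script on both node1 and node2 to verify that connectivity between the two nodes passes the checks: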

./runcluvfy.sh stage -pre crsinst -n node1,node2 -fixup -verbose

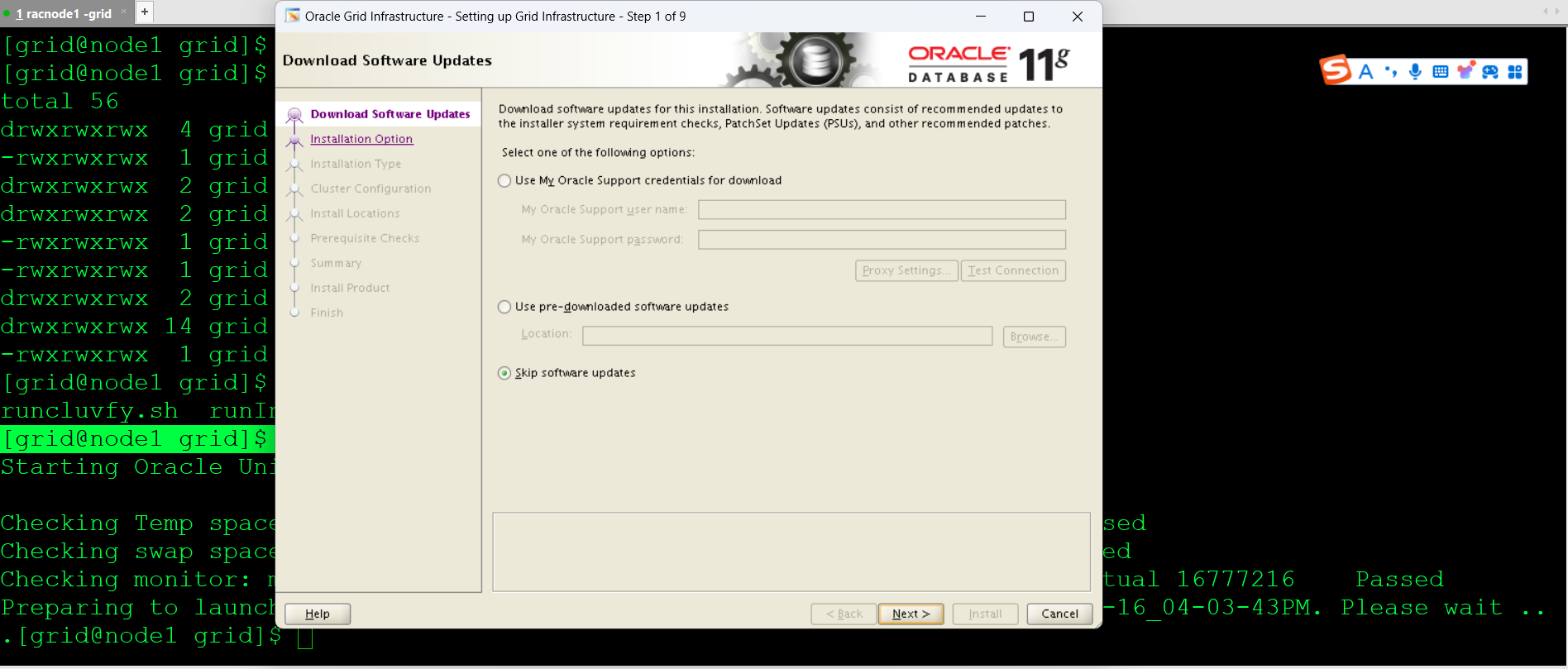

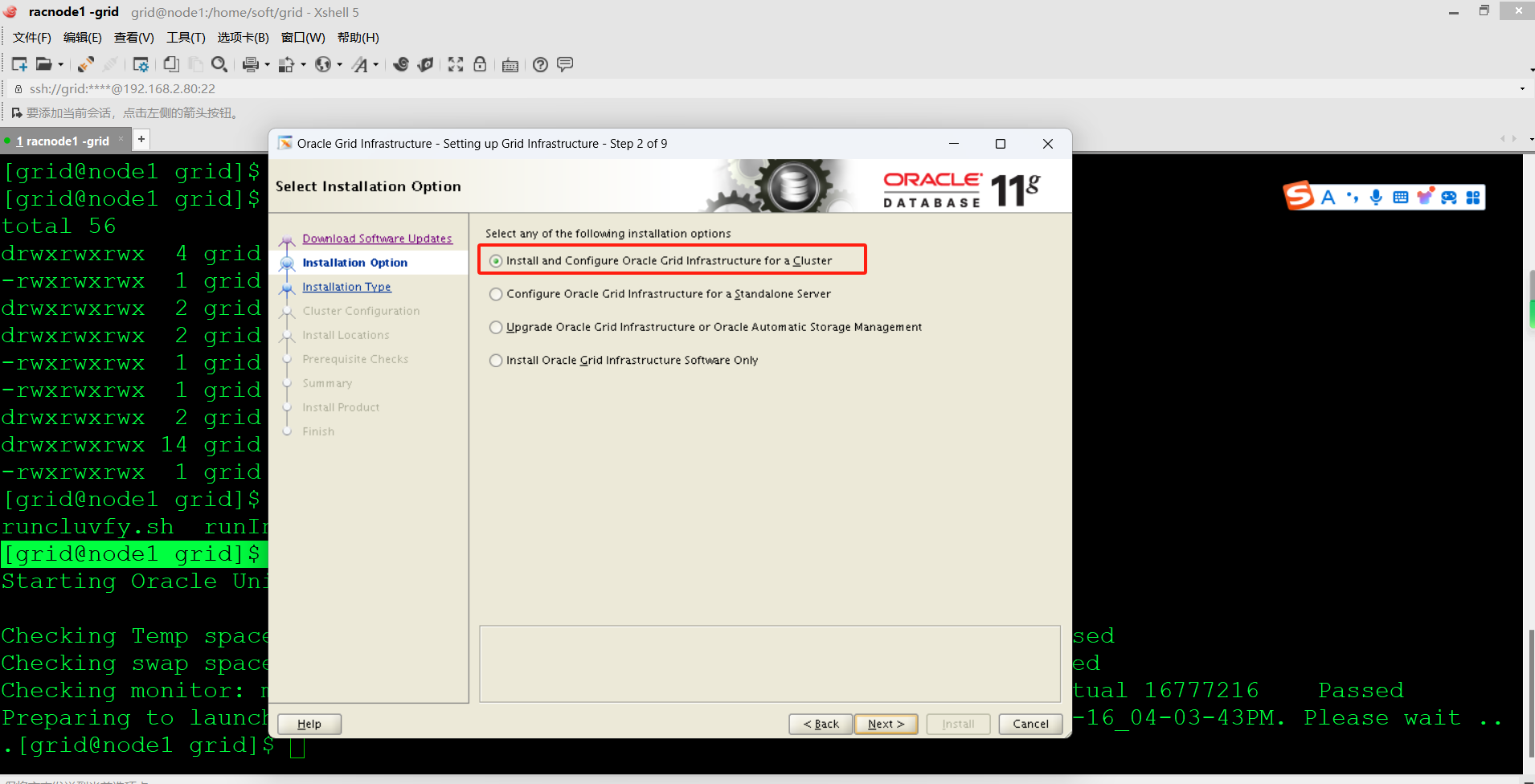

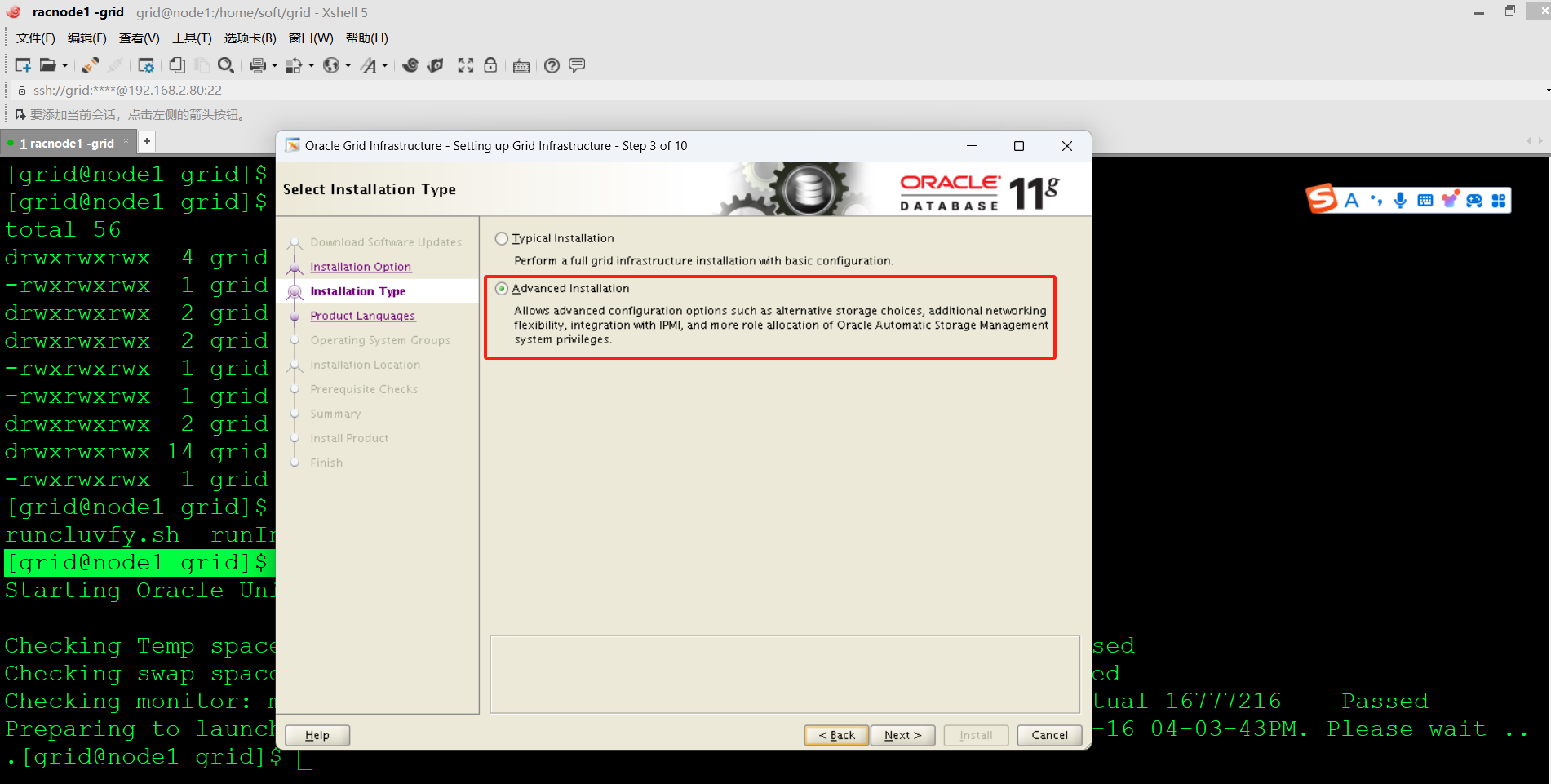

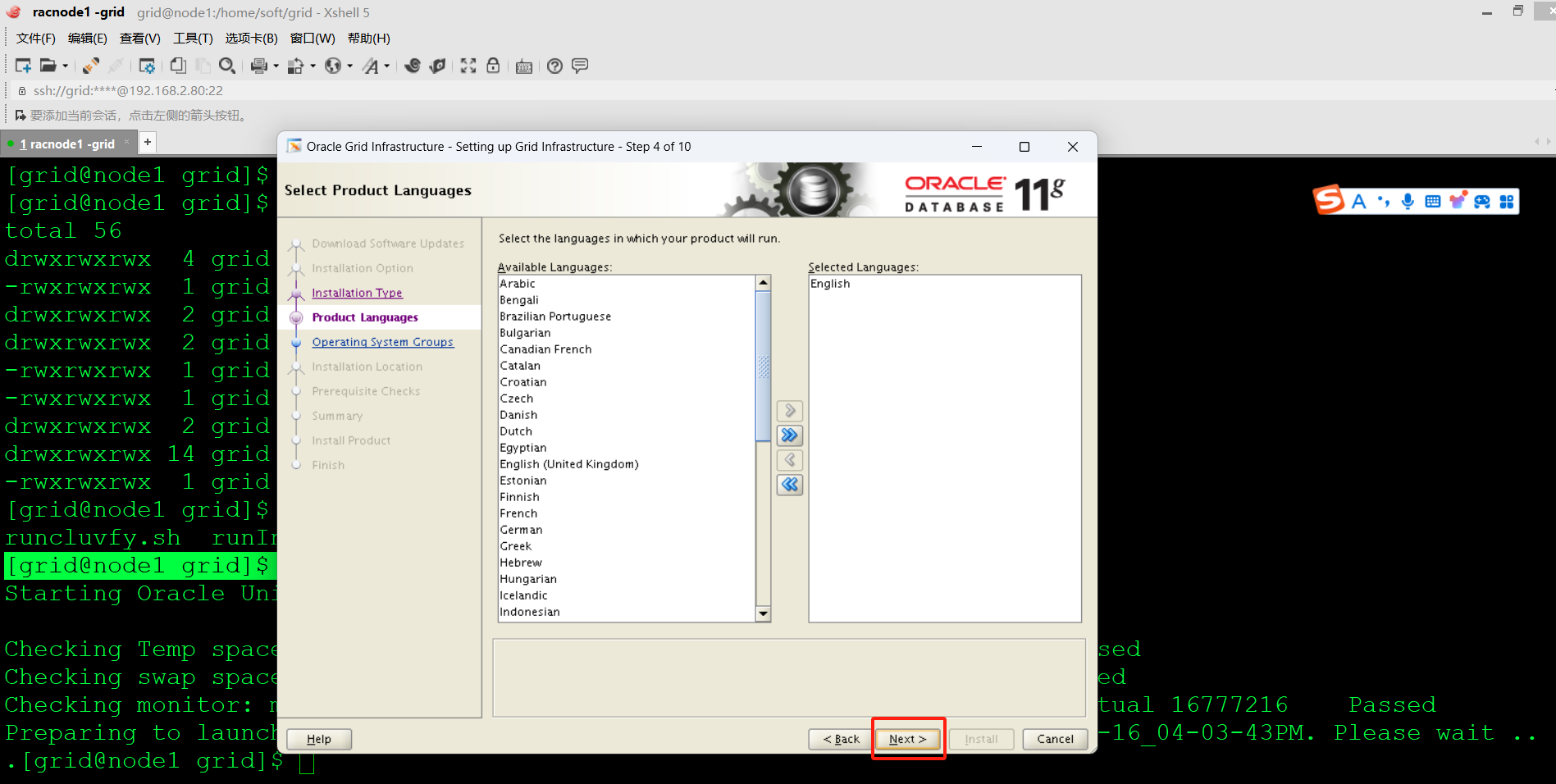

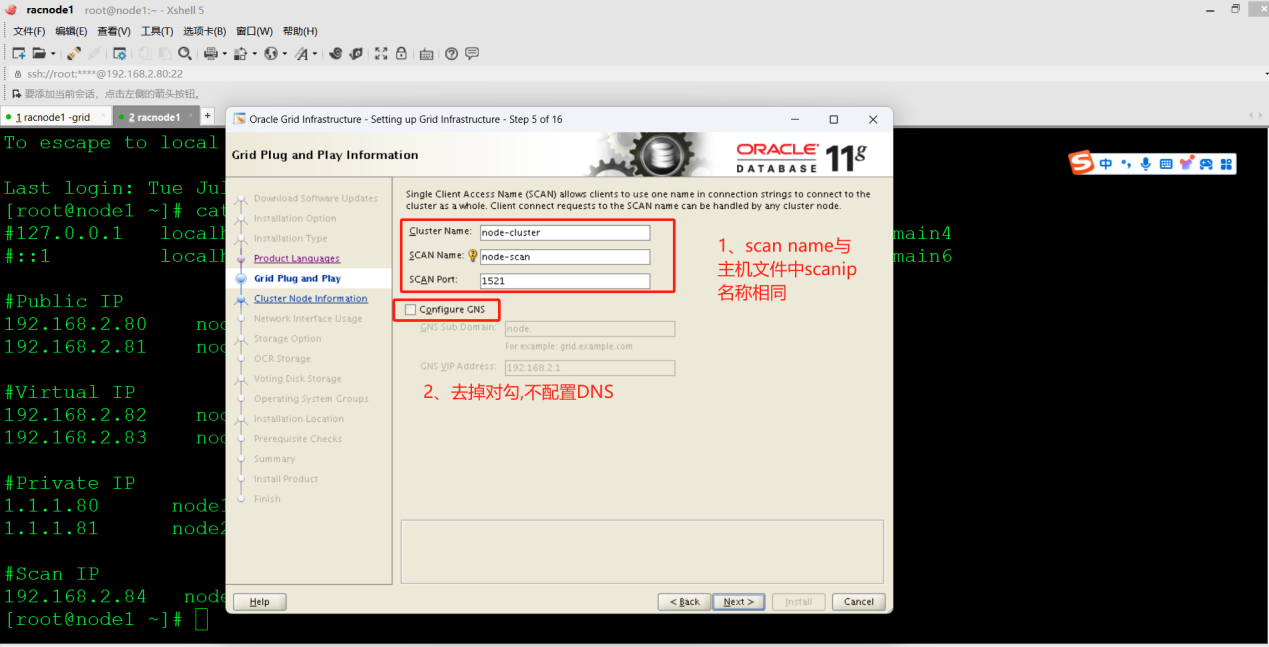

Start the Grid software installation:

[grid@node1 ~]$ cd /home/soft/grid/

[grid@node1 grid]$ export LANG=en_US.UTF-8

[grid@node1 grid]$ ./runInstaller

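Verify SSH equivalence:

1) If SSH equivalence was not set up earlier: select SSH Connectivity, enter the OS password of the grid user, click Setup, and wait. Once it succeeds, continue to the next step.

2) If SSH equivalence was already set up: click "Test", or go straight to Next.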

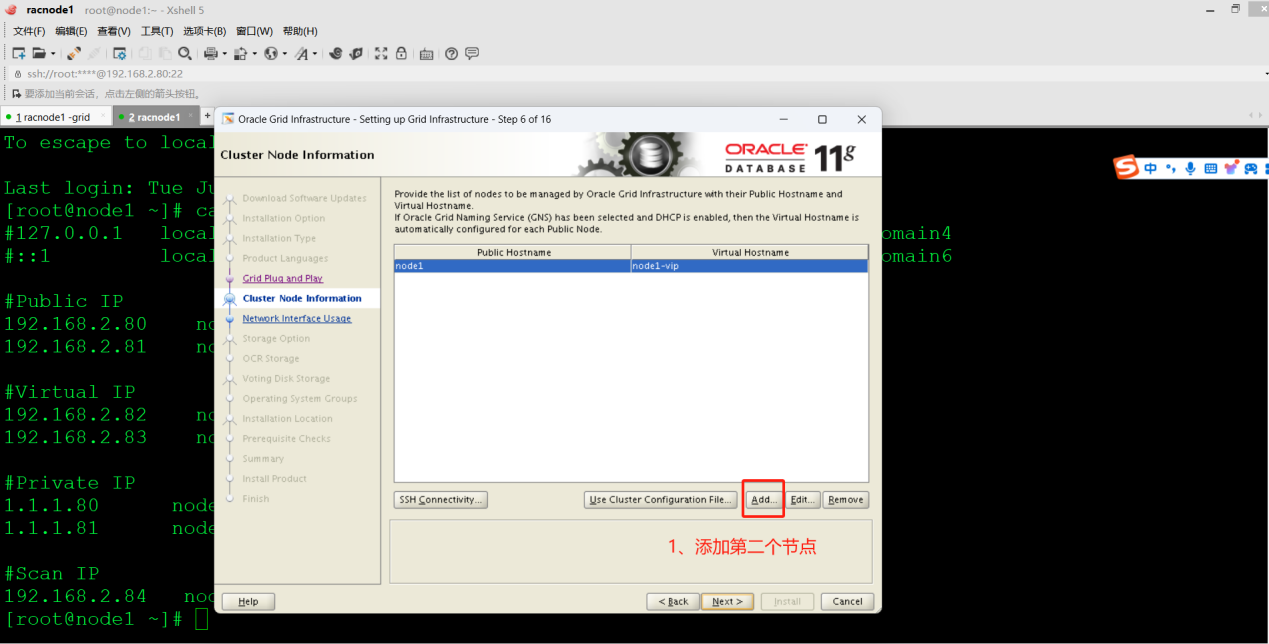

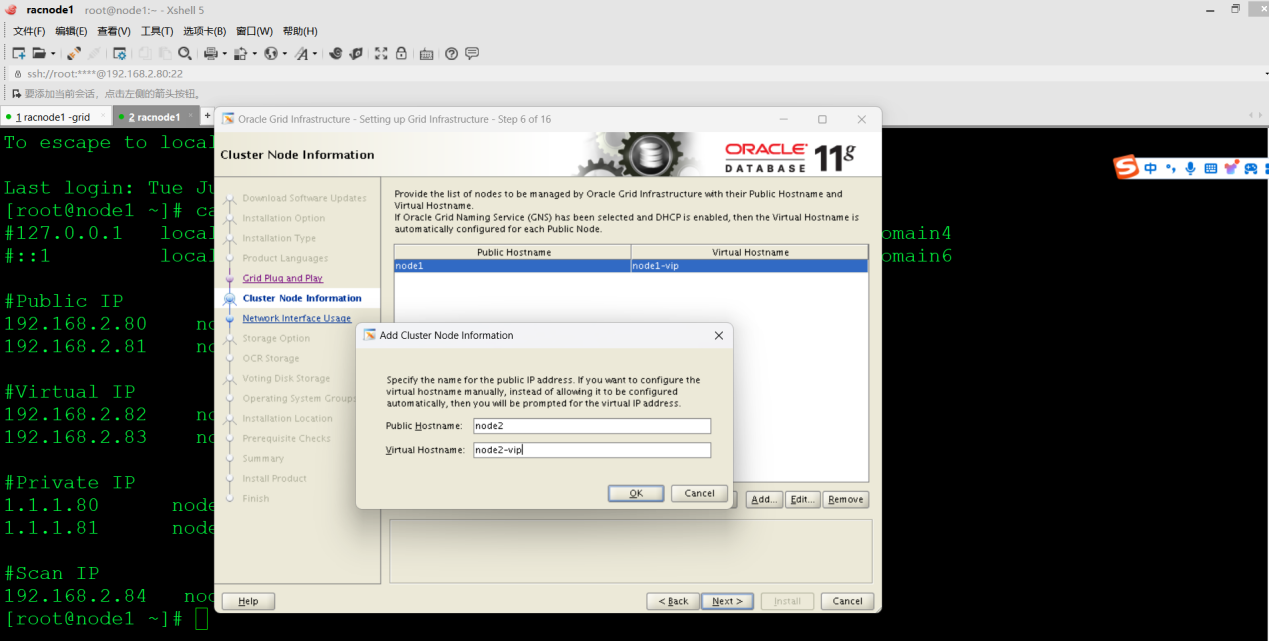

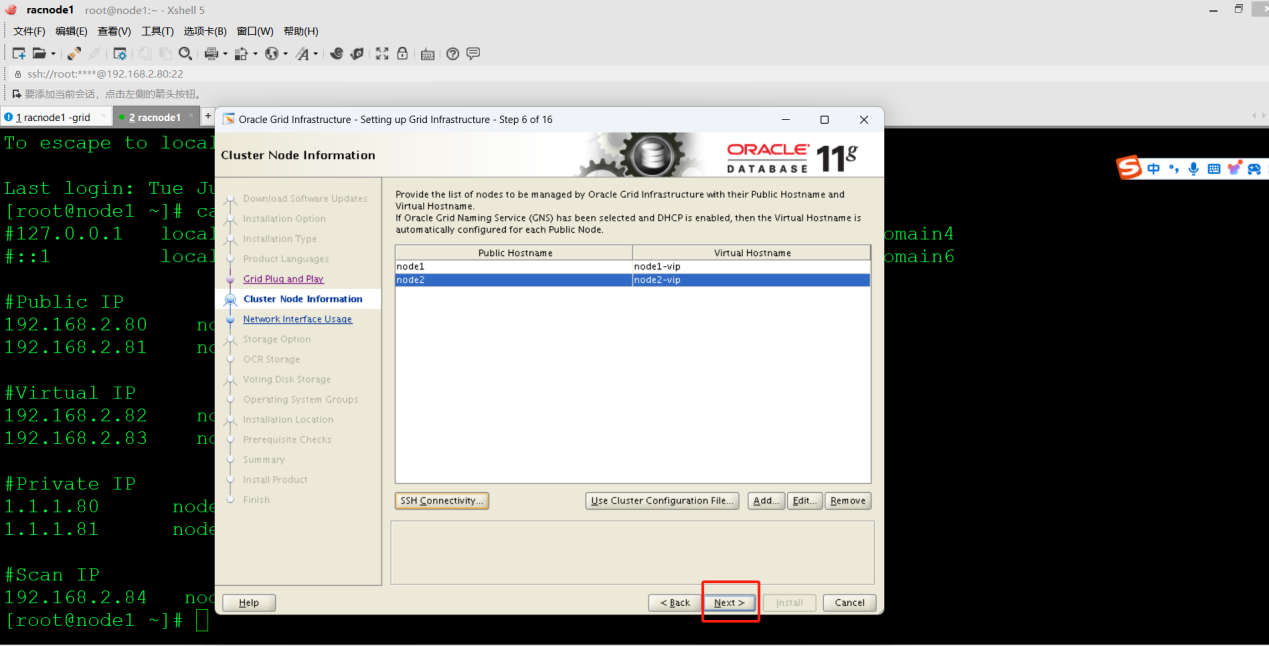

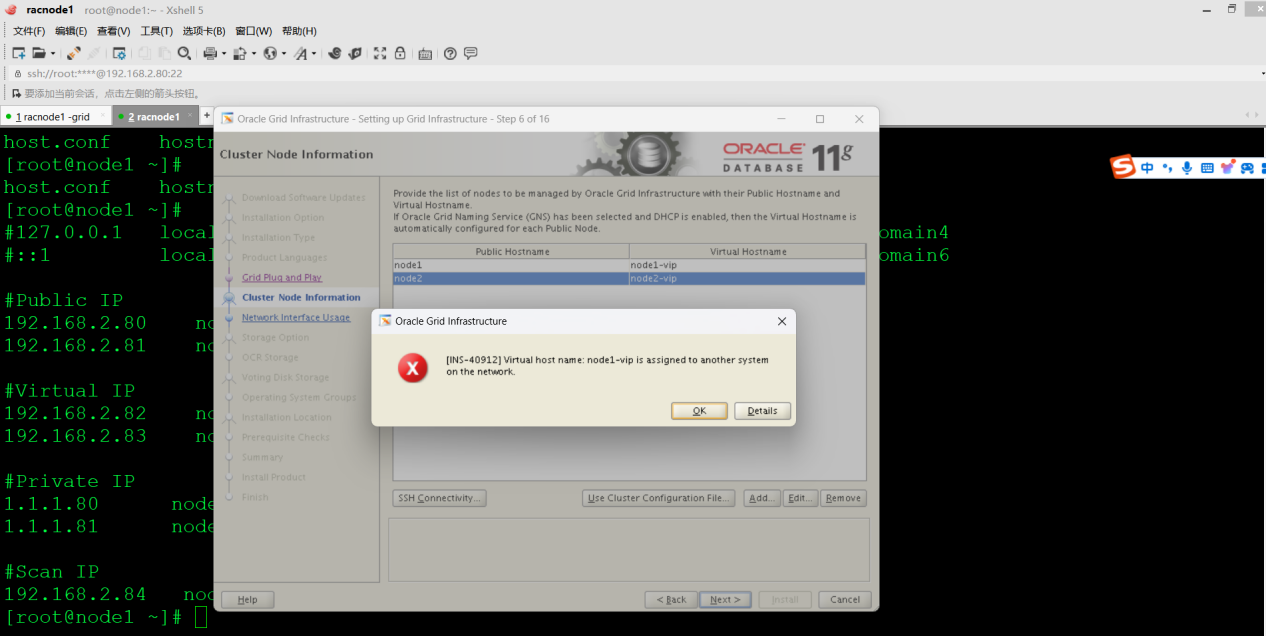

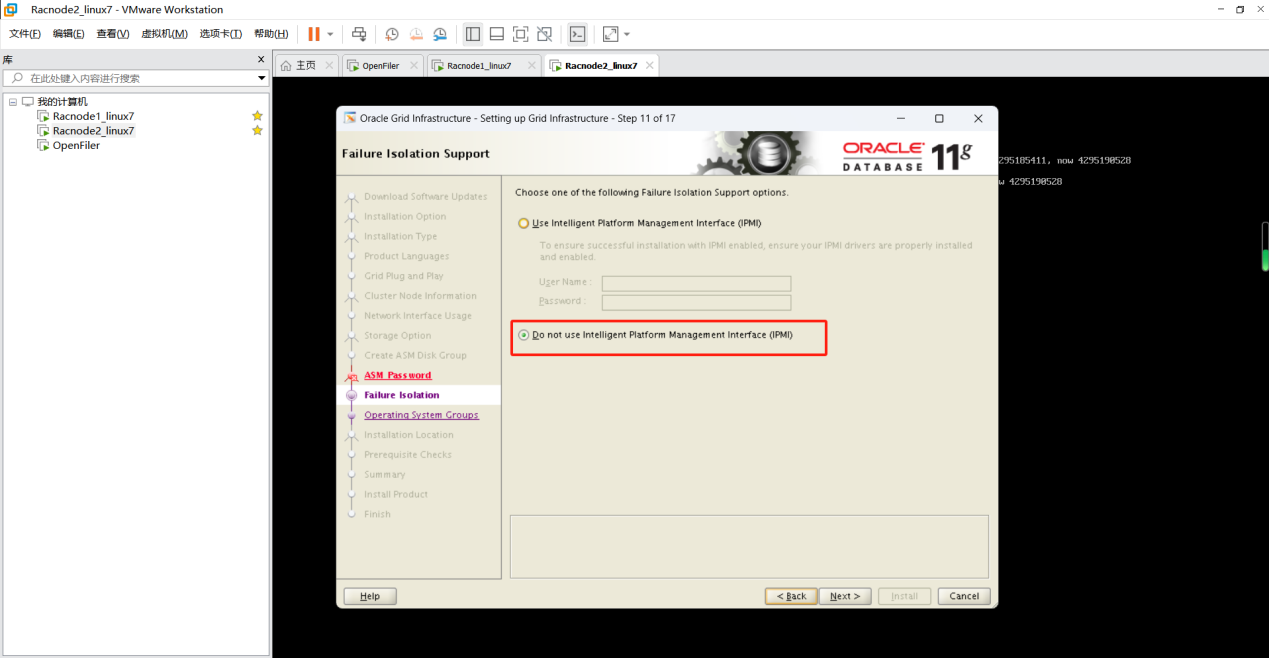

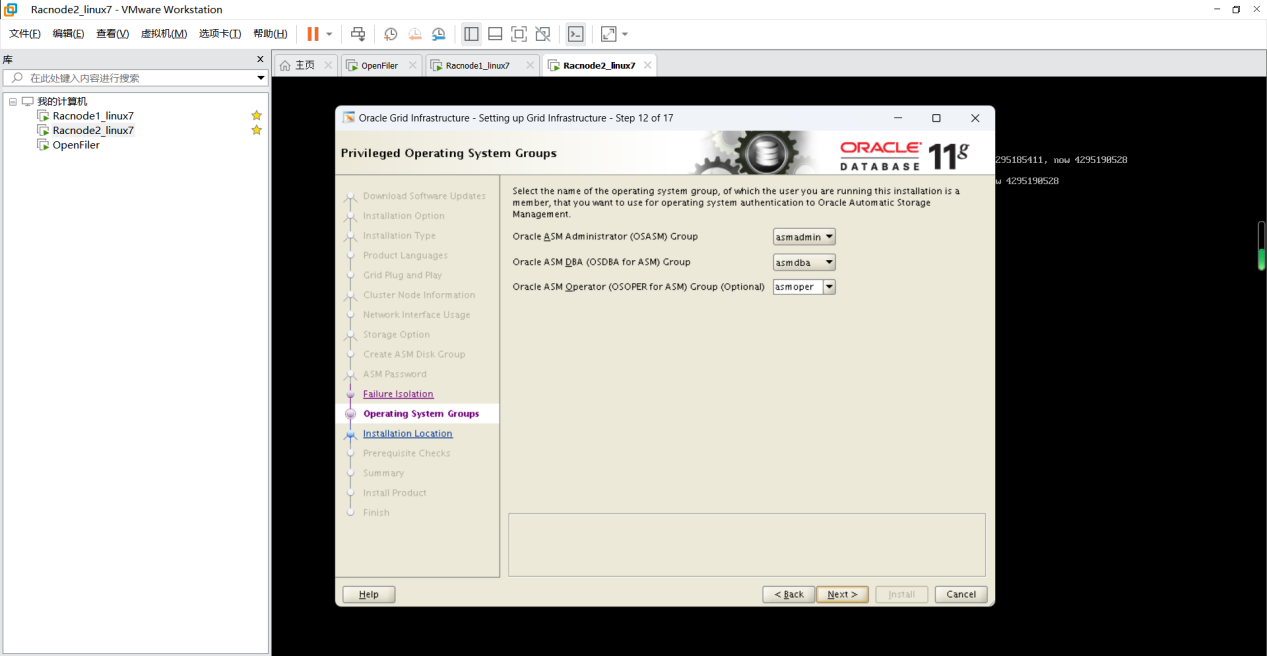

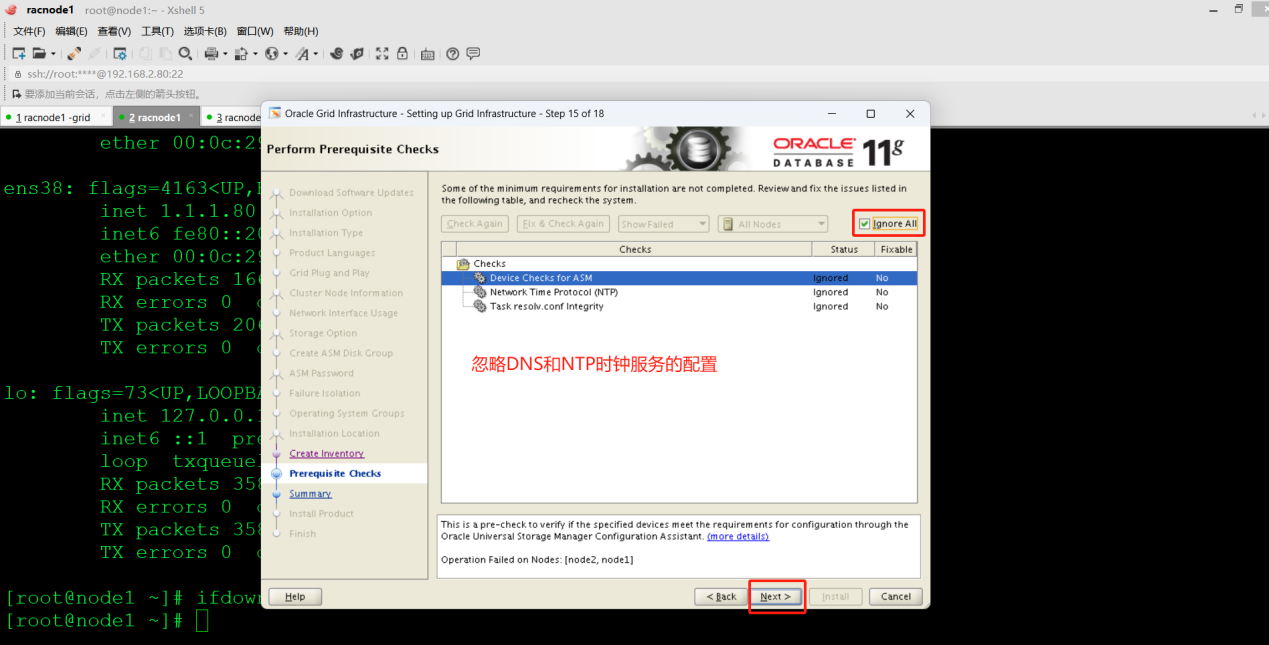

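Clicking Next produces error [INS-40912].

[Explanation] The address in question is a virtual IP (VIP), which should not respond to ping at this stage. Nothing has been installed yet, so the VIP should not be bound to any NIC; here it is already in use because the ens33:1 alias configured in 2.5 has brought it up.

The VIP should be bound to a NIC on one of the two machines only after RAC has been installed and started successfully.

If one machine fails, the VIP automatically fails over to the other.

[Solution] Run ifconfig ens33:1 down or ifdown ens33:1, then click "Next".

[root@node1 ~]# ifdown ens33:1 (run on both node1 and node2)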

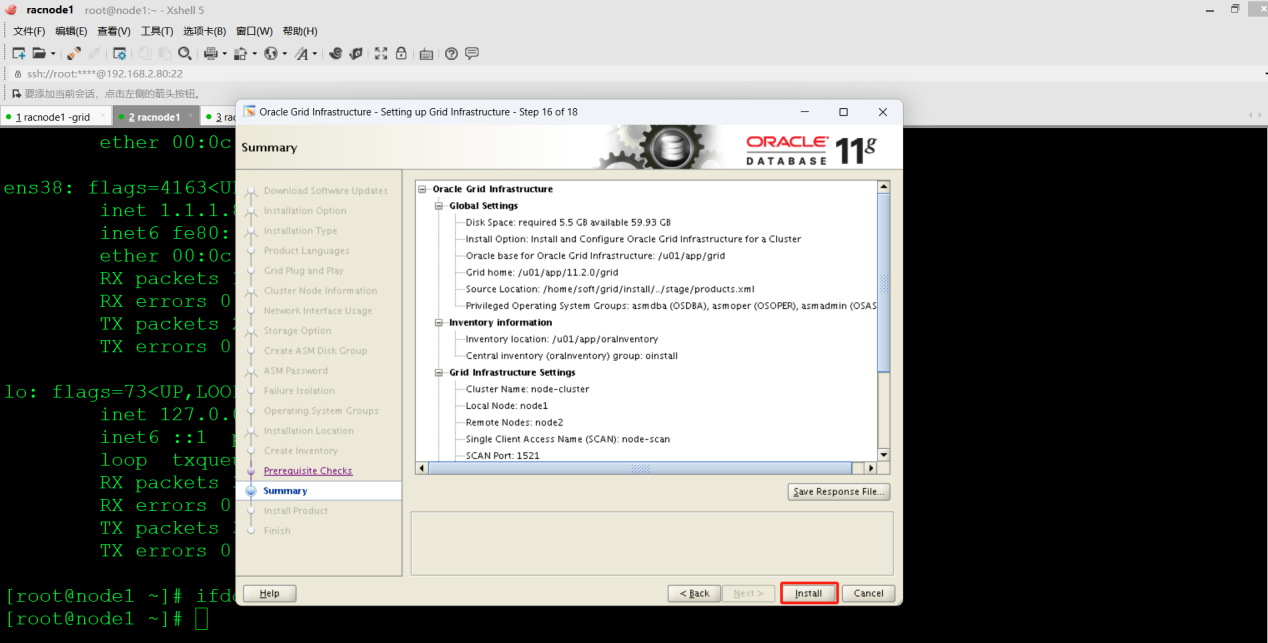

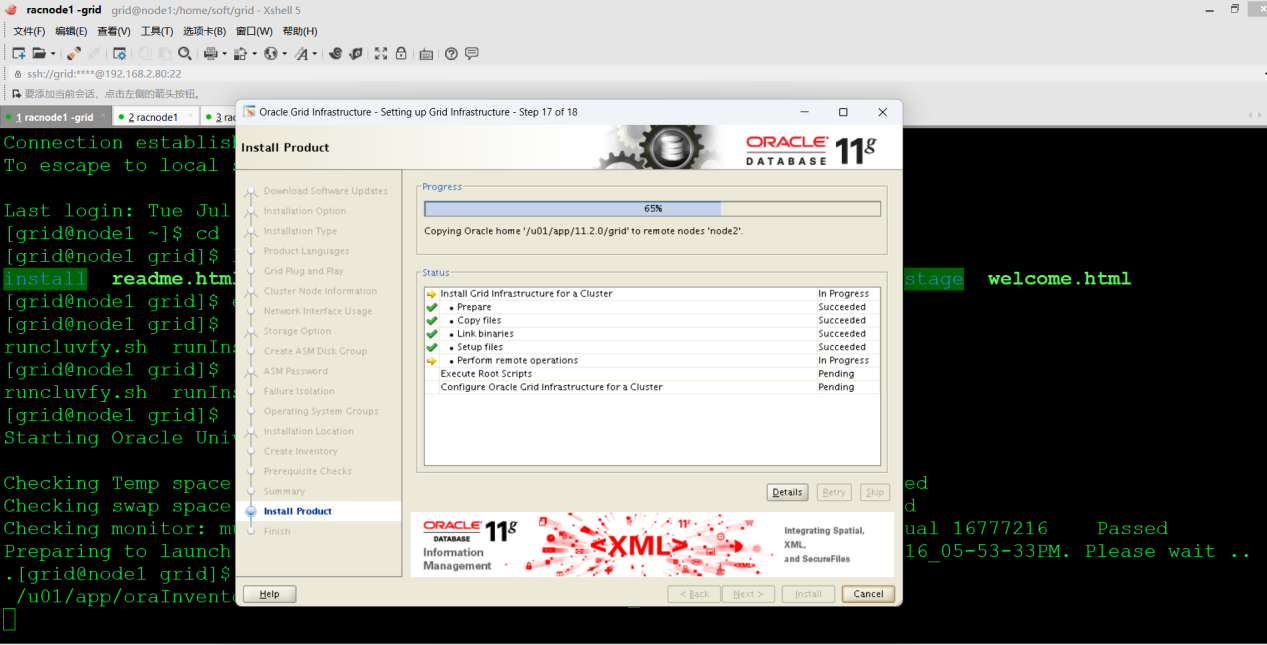

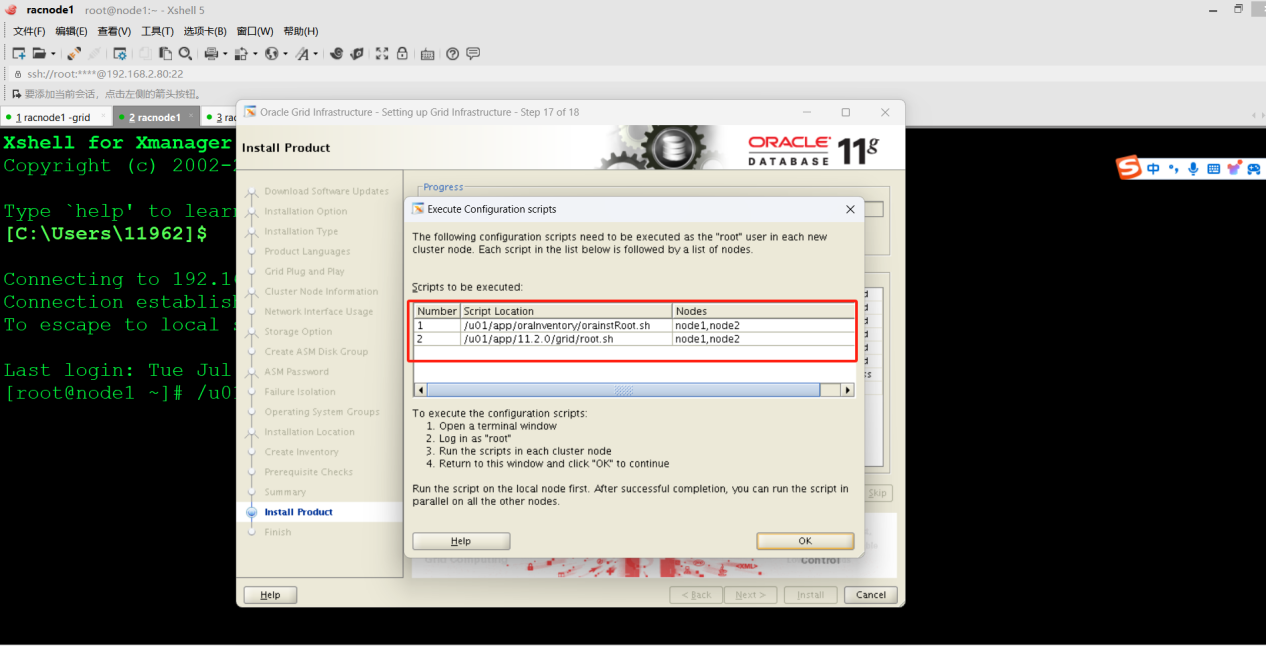

Run the scripts as prompted, in a separate shell window.

They must be run as root, and must not be run on both nodes at the same time.

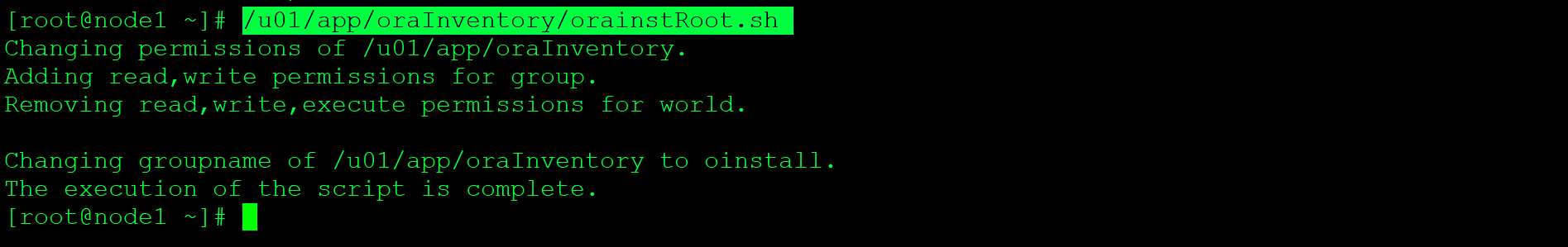

First run /u01/app/oraInventory/orainstRoot.sh on node1,

then run /u01/app/oraInventory/orainstRoot.sh on node2.

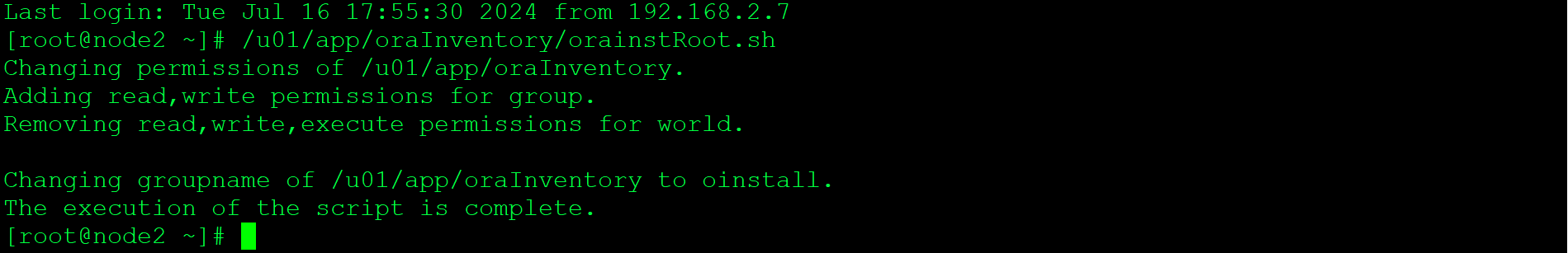

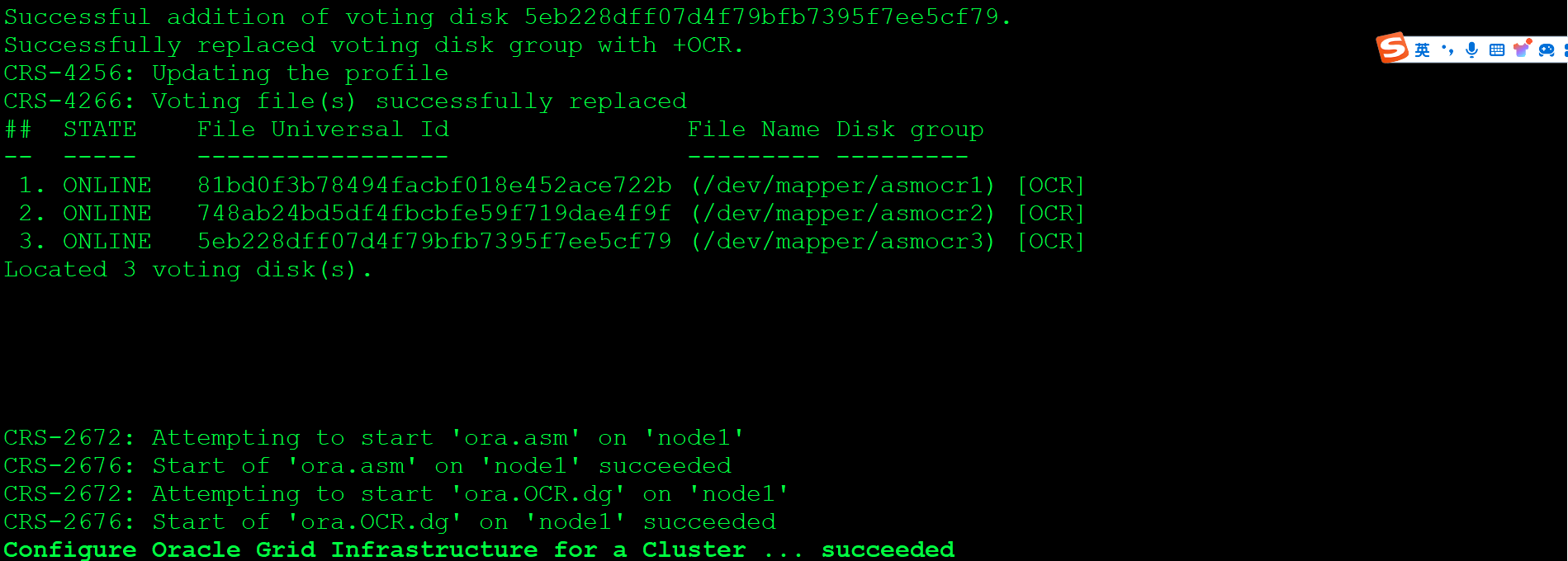

Then run /u01/app/11.2.0/grid/root.sh on node1 first,

and then run /u01/app/11.2.0/grid/root.sh on node2.

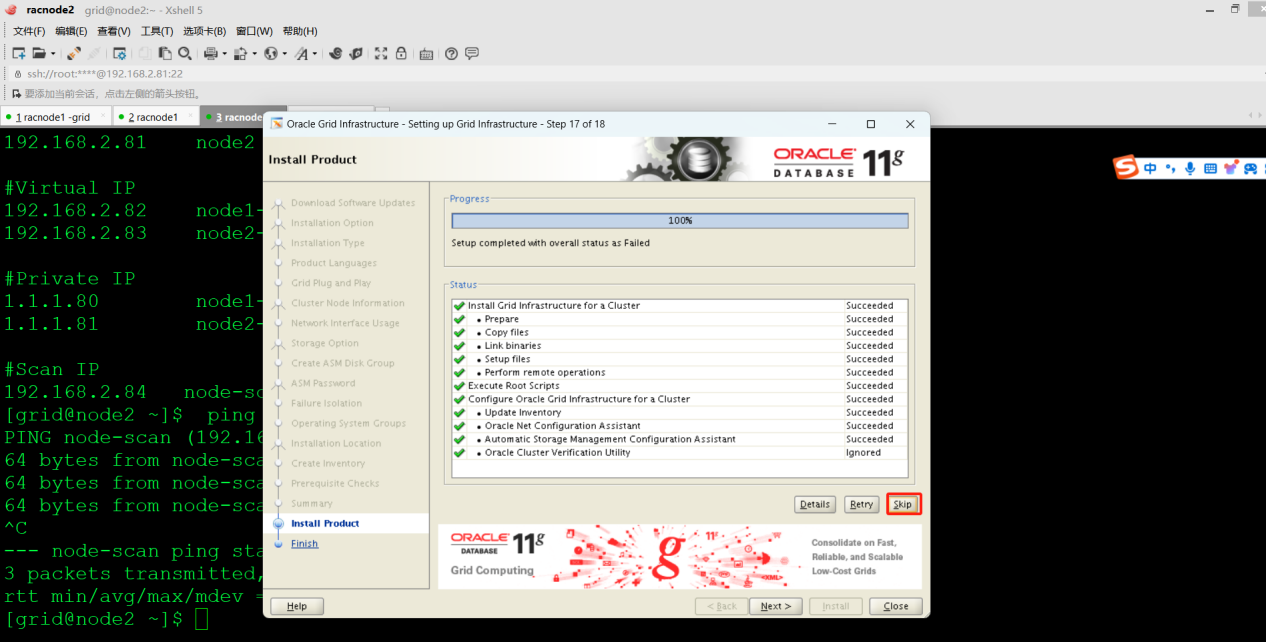

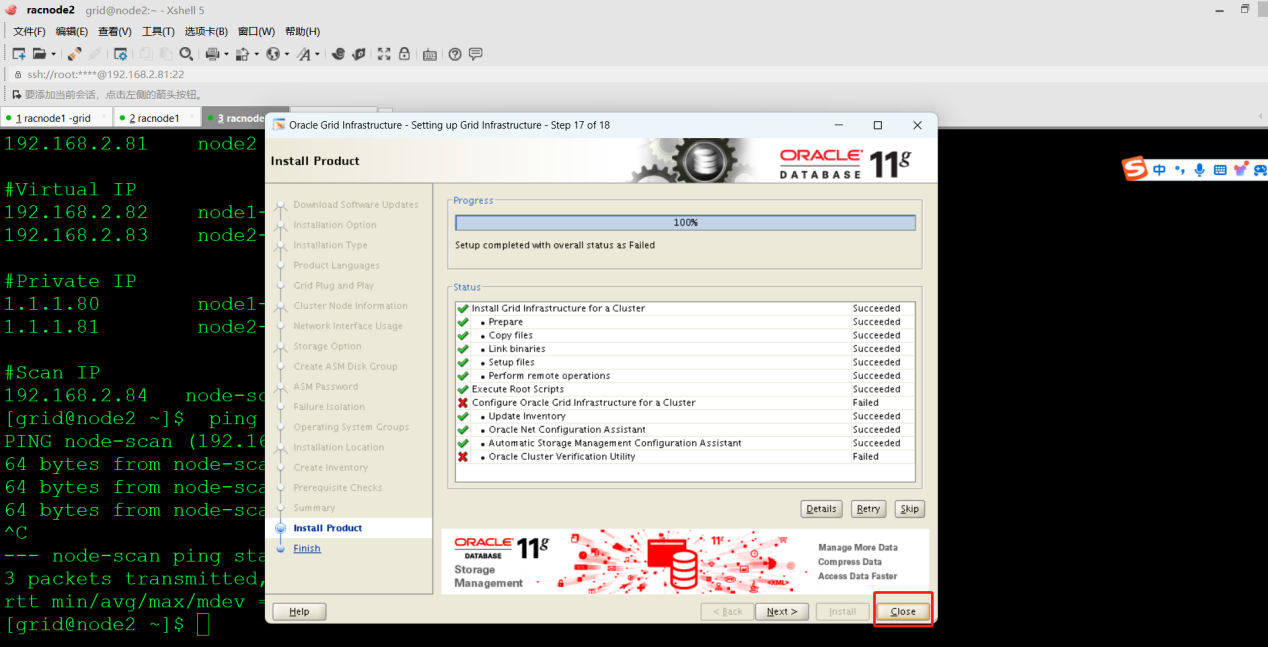

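After the second script (root.sh) is started, the following message appears:

Adding Clusterware entries to inittab

Immediately run the following command as root in another shell window. Once it has run, the cluster services install successfully, as shown in the figure: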

dd if=/var/tmp/.oracle/npohasd of=/dev/null bs=1024 count=1
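Timing matters for the dd command, because the npohasd file may not exist yet when the message first appears. One way to avoid racing it is to poll for the file and run dd as soon as it shows up. The following is a minimal sketch; the function name `wait_for_path` and the timeout value are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until a path exists, for at most the given number
# of seconds (default 300). Returns 0 once it exists, 1 on timeout.
wait_for_path() {
    local path="$1" timeout="${2:-300}" waited=0
    while [ ! -e "$path" ]; do
        [ "$waited" -ge "$timeout" ] && return 1
        sleep 1
        waited=$(( waited + 1 ))
    done
    return 0
}

# Intended use as root, in a second shell, while root.sh is running:
#   wait_for_path /var/tmp/.oracle/npohasd 600 &&
#       dd if=/var/tmp/.oracle/npohasd of=/dev/null bs=1024 count=1
```

The usage lines are left as comments so that defining the function has no side effects.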

Click Skip, then click Close. The Grid software installation is now complete. Next, verify the cluster resource status.

The output from node1 is shown as an example; the node2 output is not repeated here.

[grid@node1 ~]$ crsctl stat res -t -init

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.asm 1 ONLINE ONLINE node1 Started

ora.cluster_interconnect.haip 1 ONLINE ONLINE node1

ora.crf 1 ONLINE ONLINE node1

ora.crsd 1 ONLINE ONLINE node1

ora.cssd 1 ONLINE ONLINE node1

ora.cssdmonitor 1 ONLINE ONLINE node1

ora.ctssd 1 ONLINE ONLINE node1 OBSERVER

ora.diskmon 1 OFFLINE OFFLINE

ora.evmd 1 ONLINE ONLINE node1

ora.gipcd 1 ONLINE ONLINE node1

ora.gpnpd 1 ONLINE ONLINE node1

ora.mdnsd 1 ONLINE ONLINE node1

Startup status of the ASM instances on node1 and node2:

[grid@node1 ~]$ sqlplus / as sysasm

SQL*Plus: Release 11.2.0.4.0 Production on Tue Jul 16 19:04:29 2024

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Real Application Clusters and Automatic Storage Management options

SQL> select status , instance_name from gv$instance ;

STATUS INSTANCE_NAME

------------ ----------------

STARTED +ASM1

STARTED +ASM2

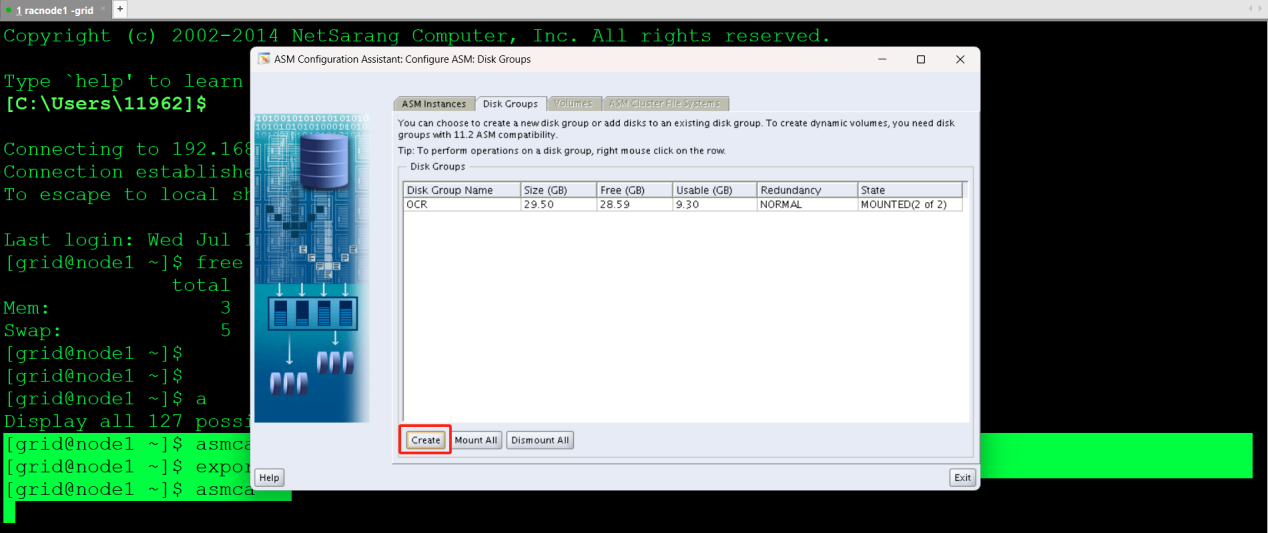

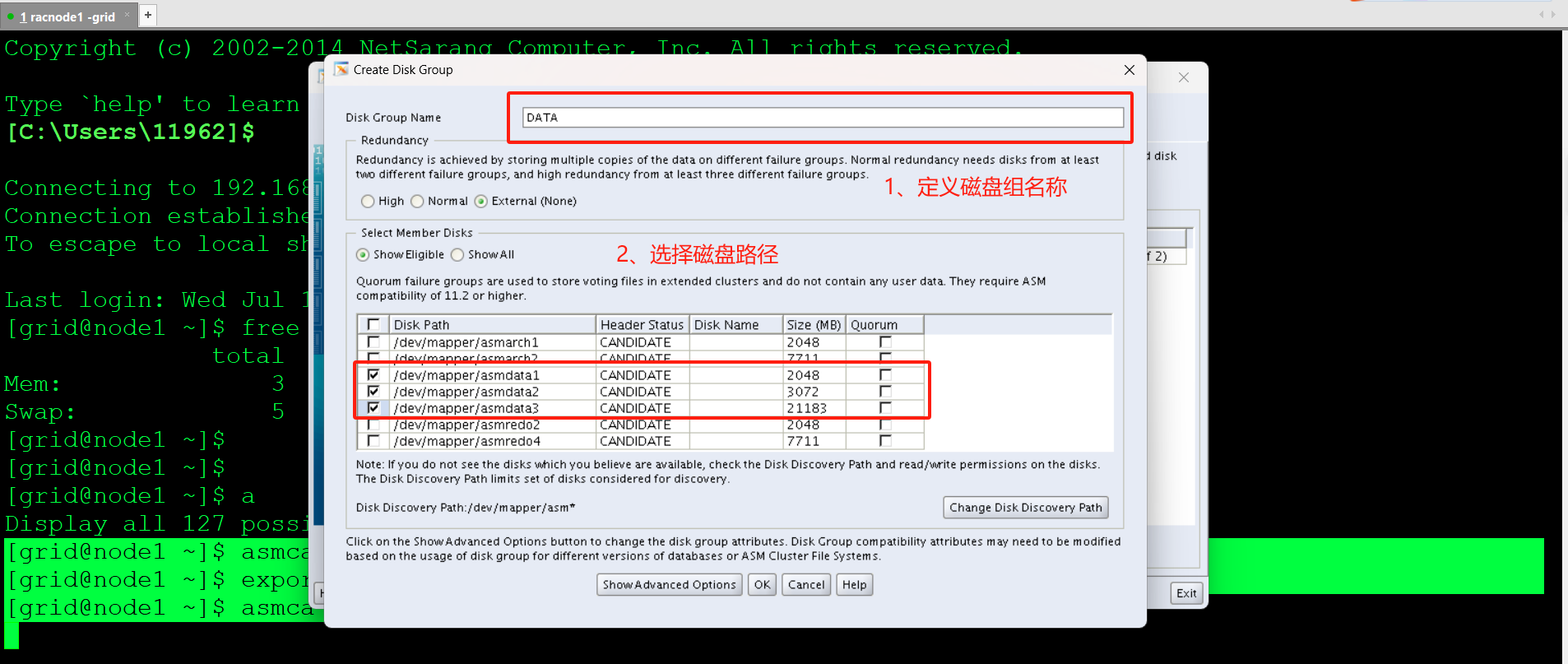

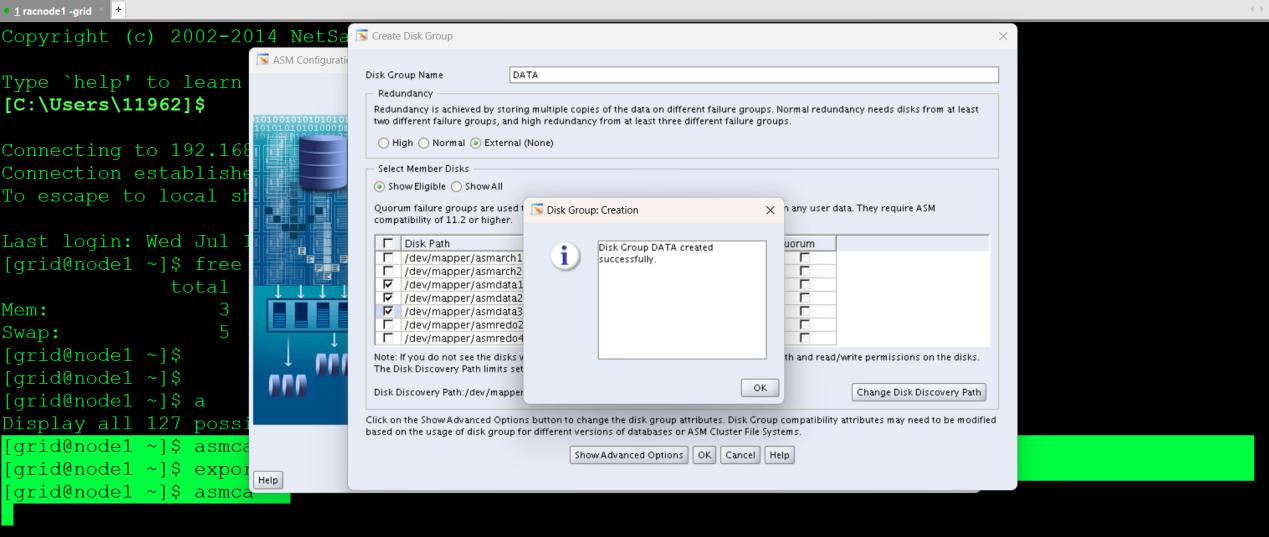

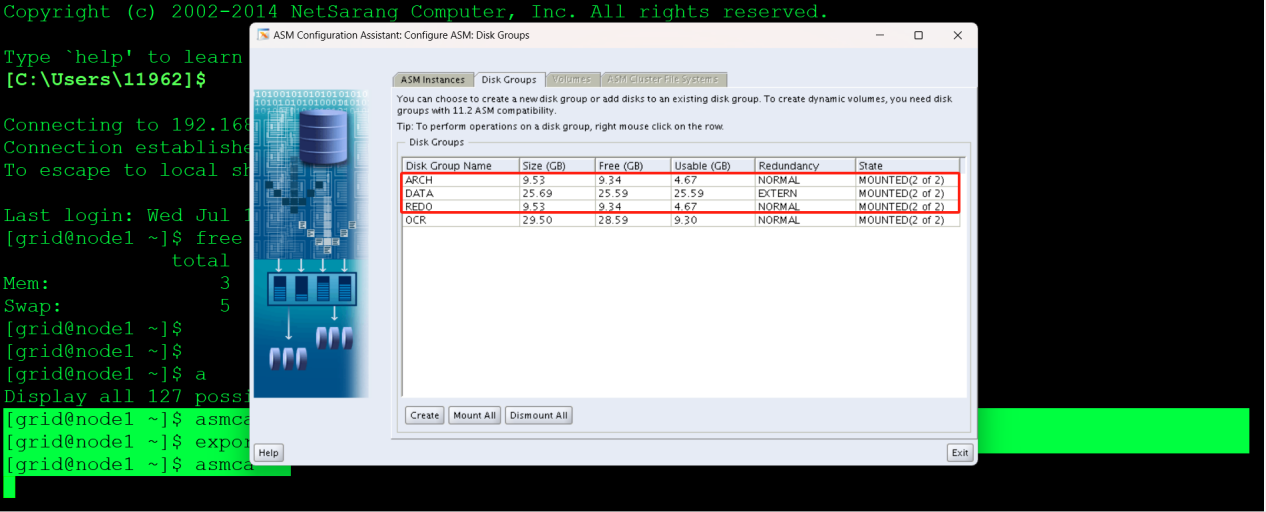

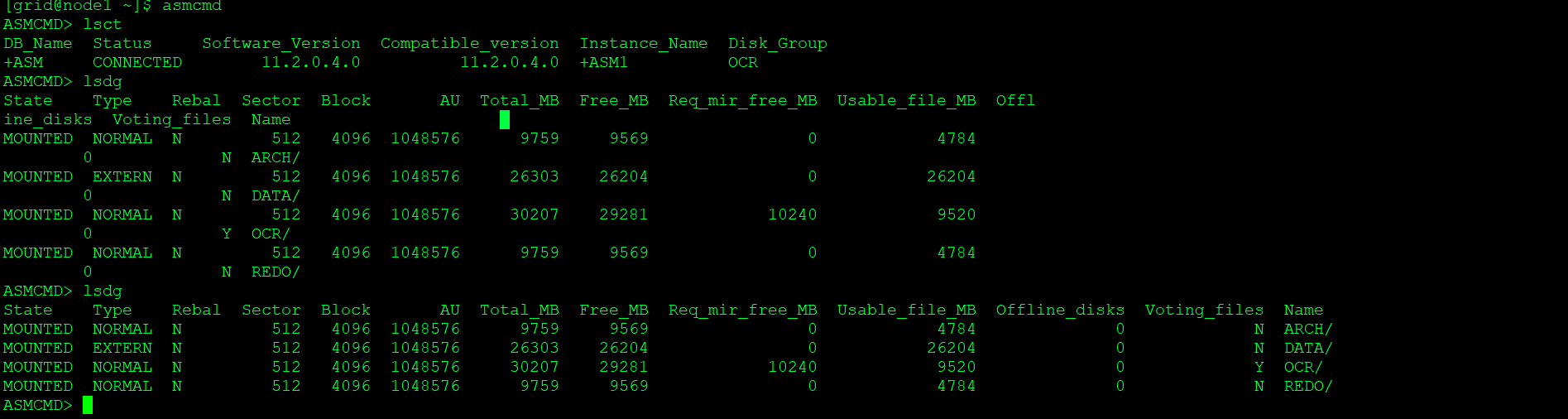

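XII. Configure the ASM disks

Create the disk groups using the graphical interface.

| Disk group | Purpose |
| --- | --- |
| OCR | Cluster resource file storage |
| REDO | Redo log file storage |
| DATA | Data file storage |
| ARCH | Archived log storage |

[grid@node1 ~]$ export LANG=en_US.UTF-8

[grid@node1 ~]$ asmca

Define the disk group name, select the disk paths, and click OK to start creating the group.

Review the disk groups that have been created: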

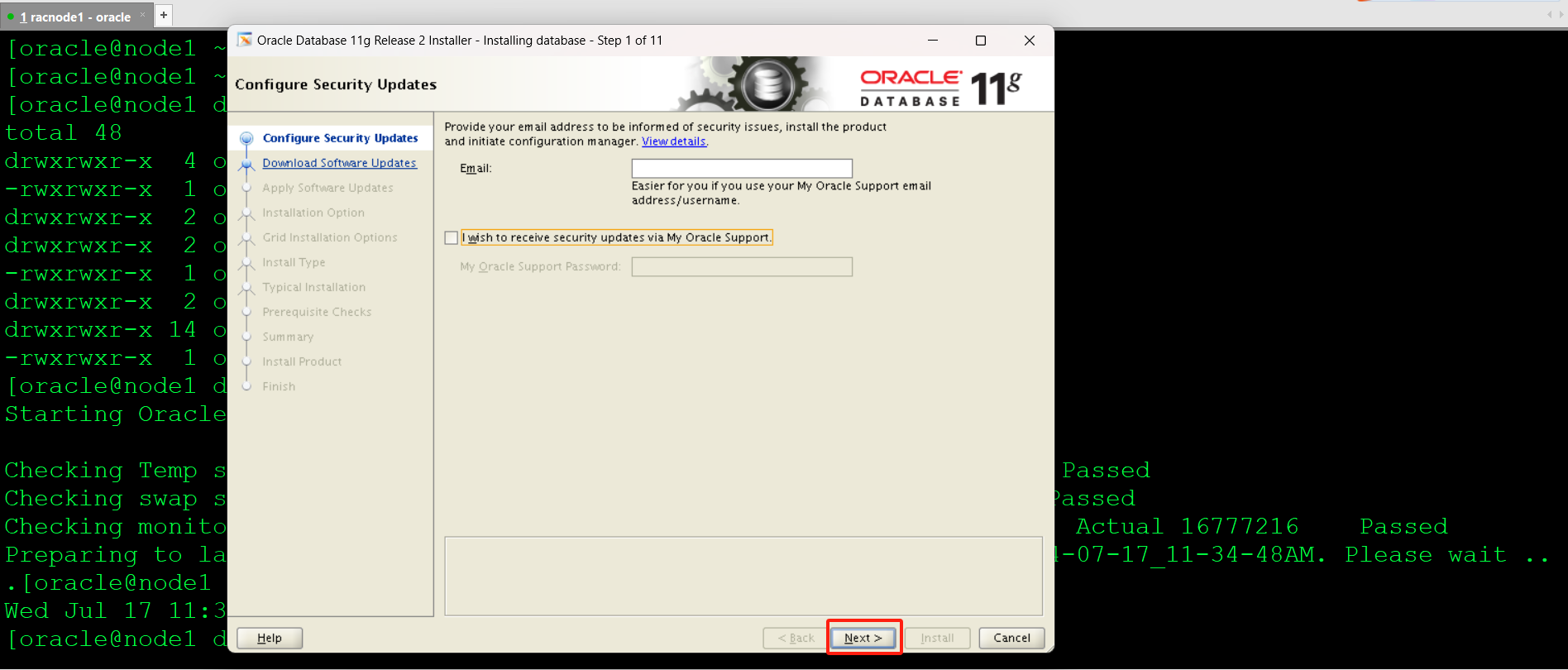

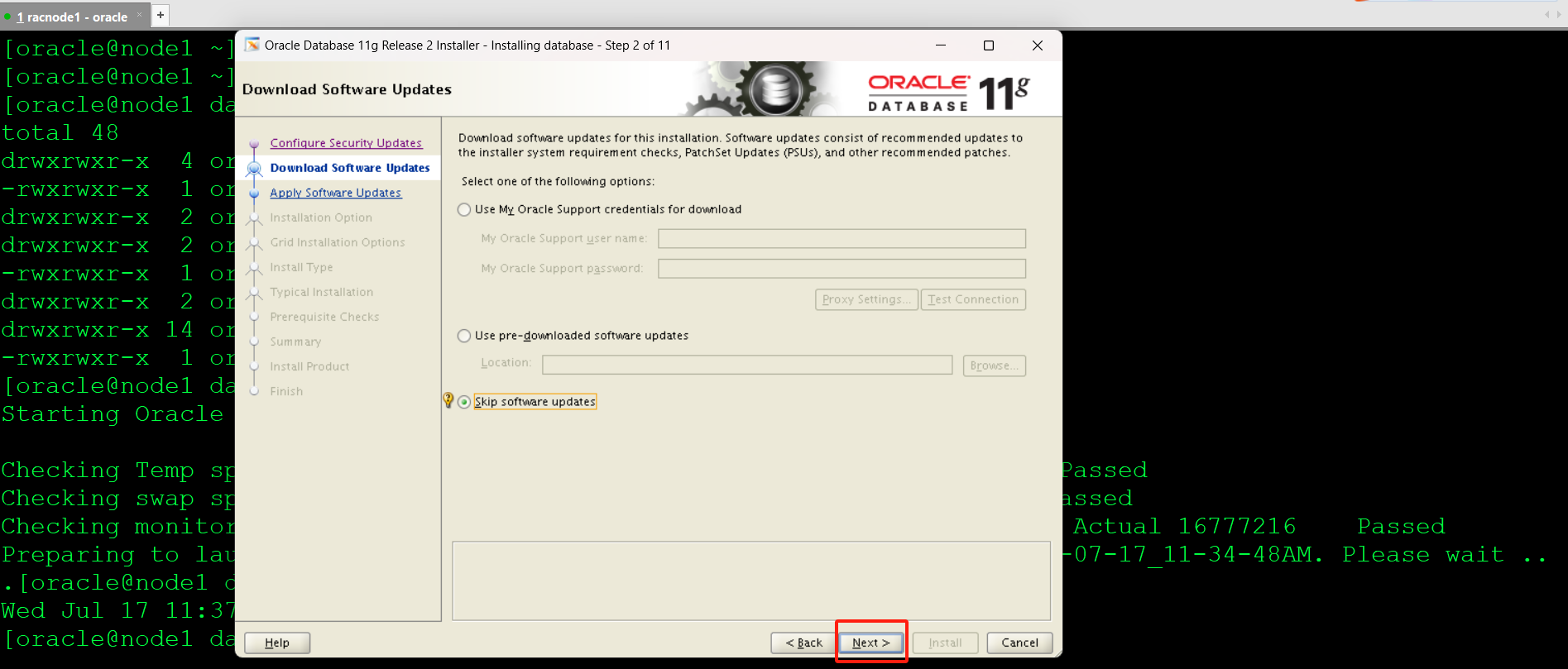

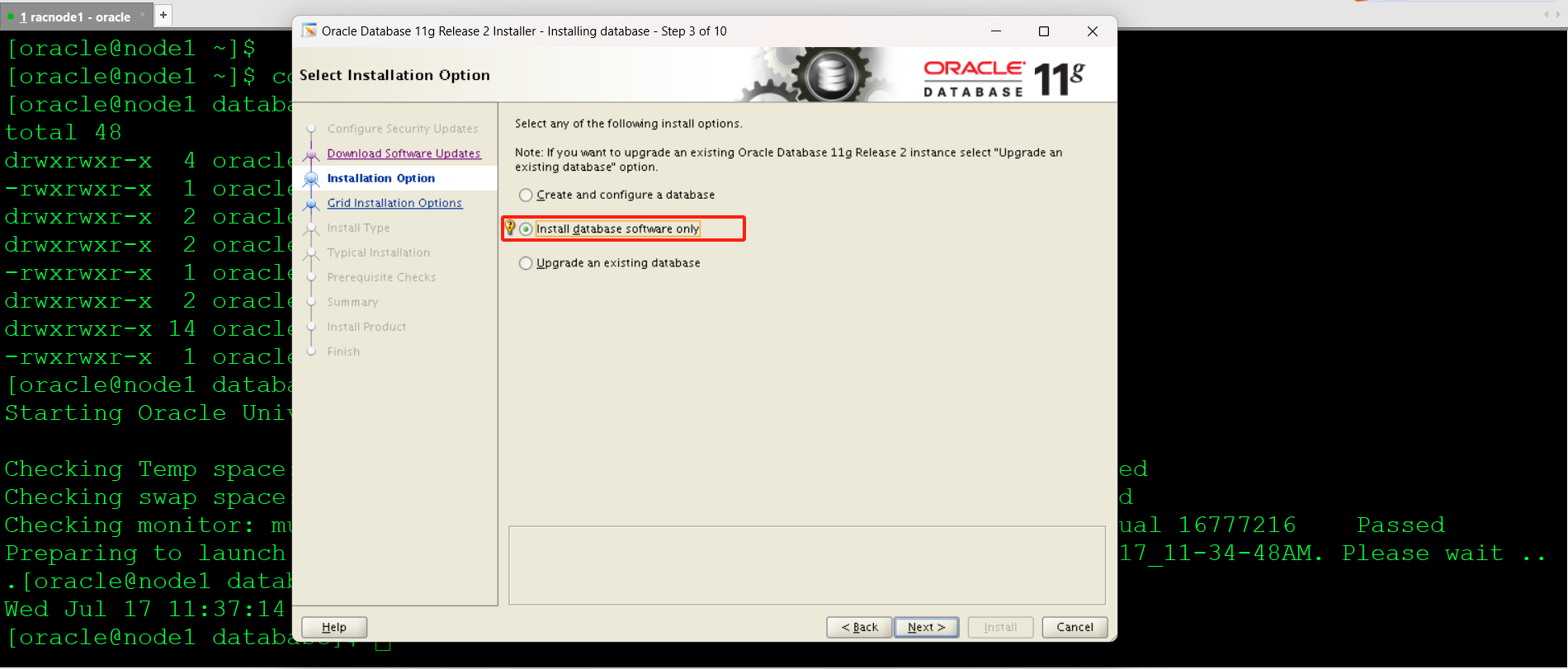

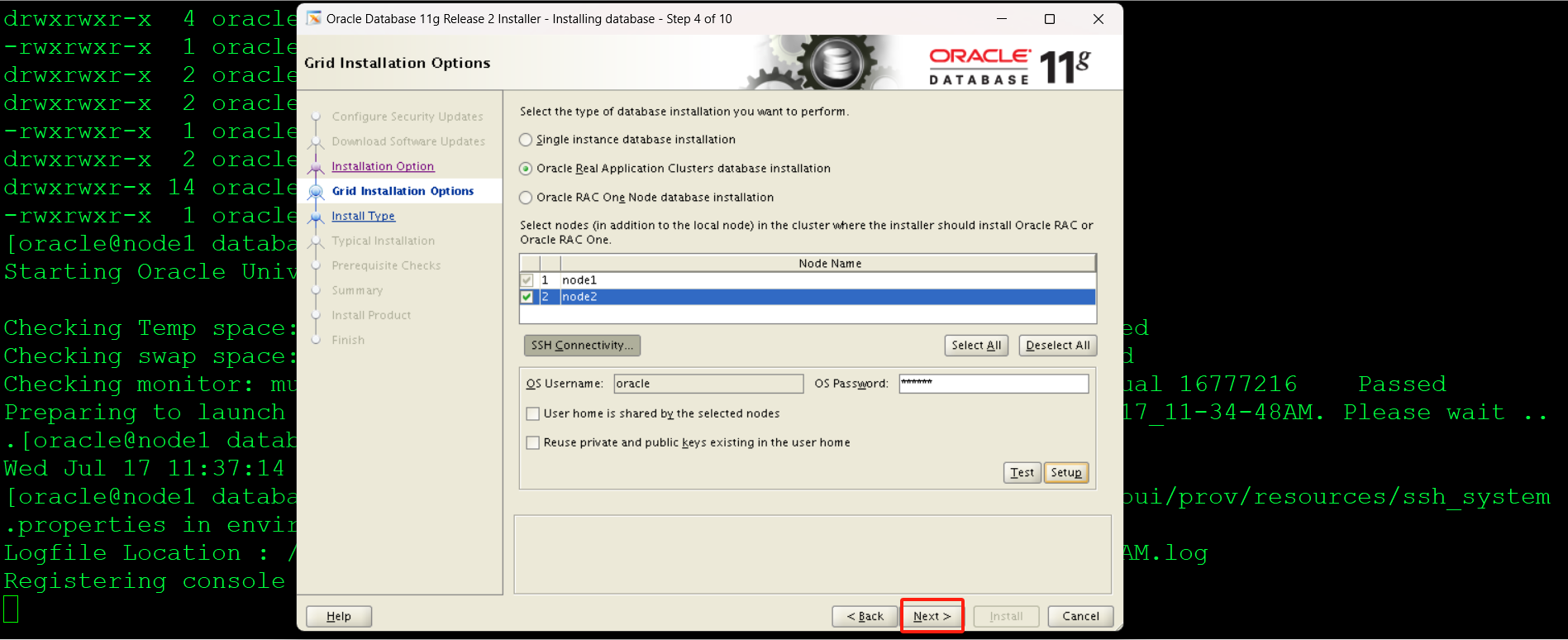

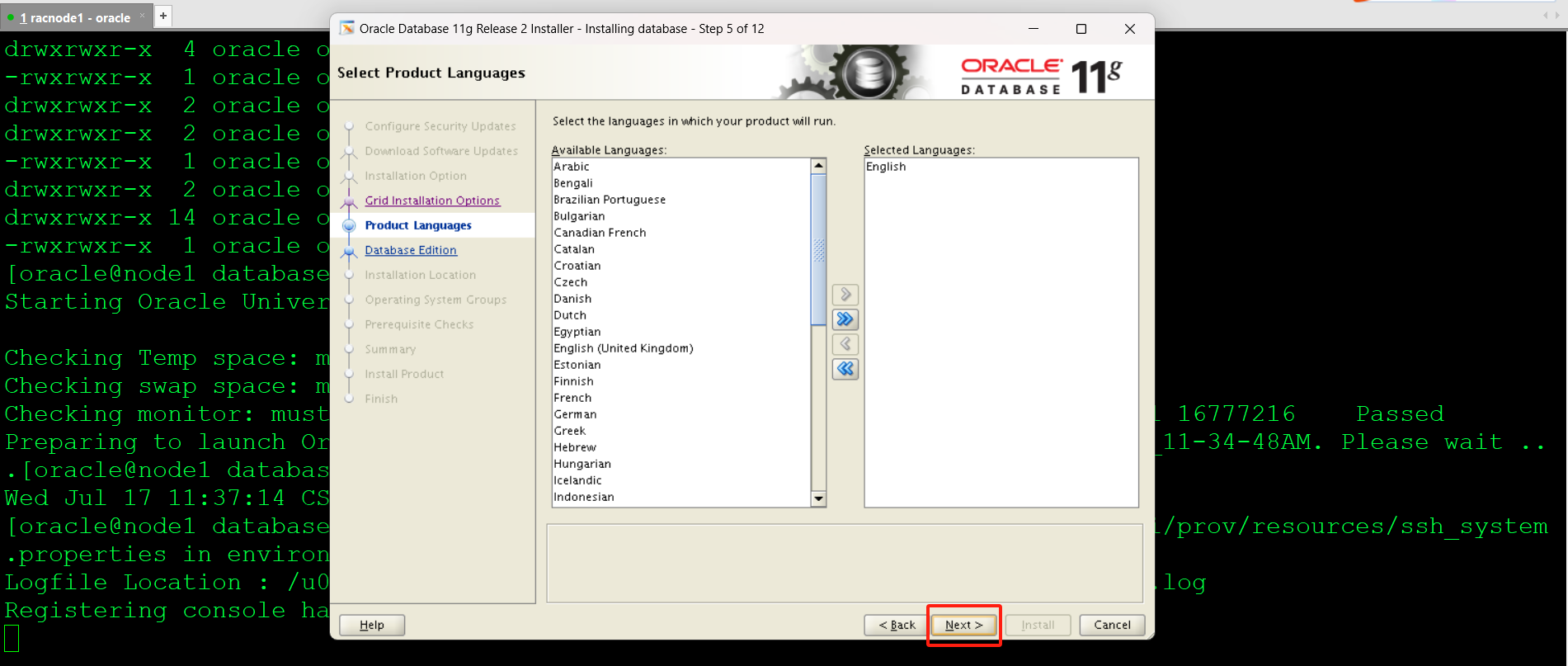

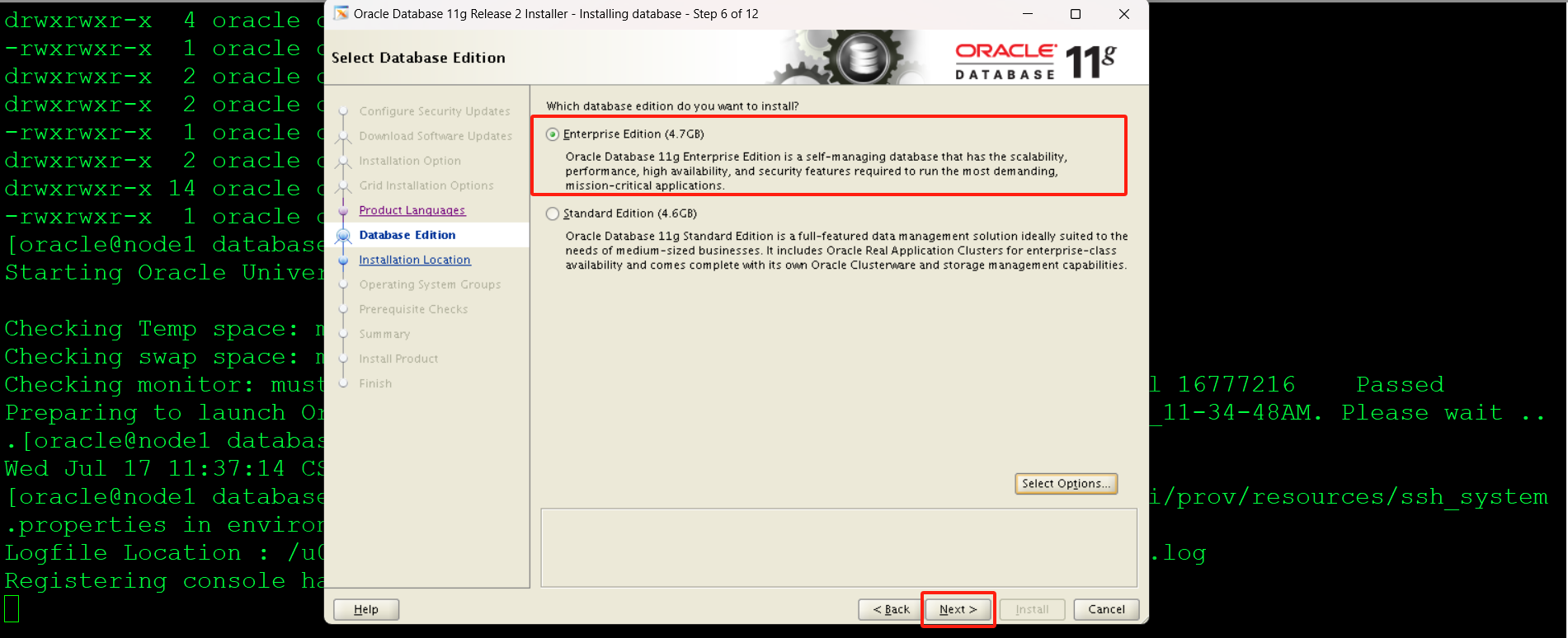

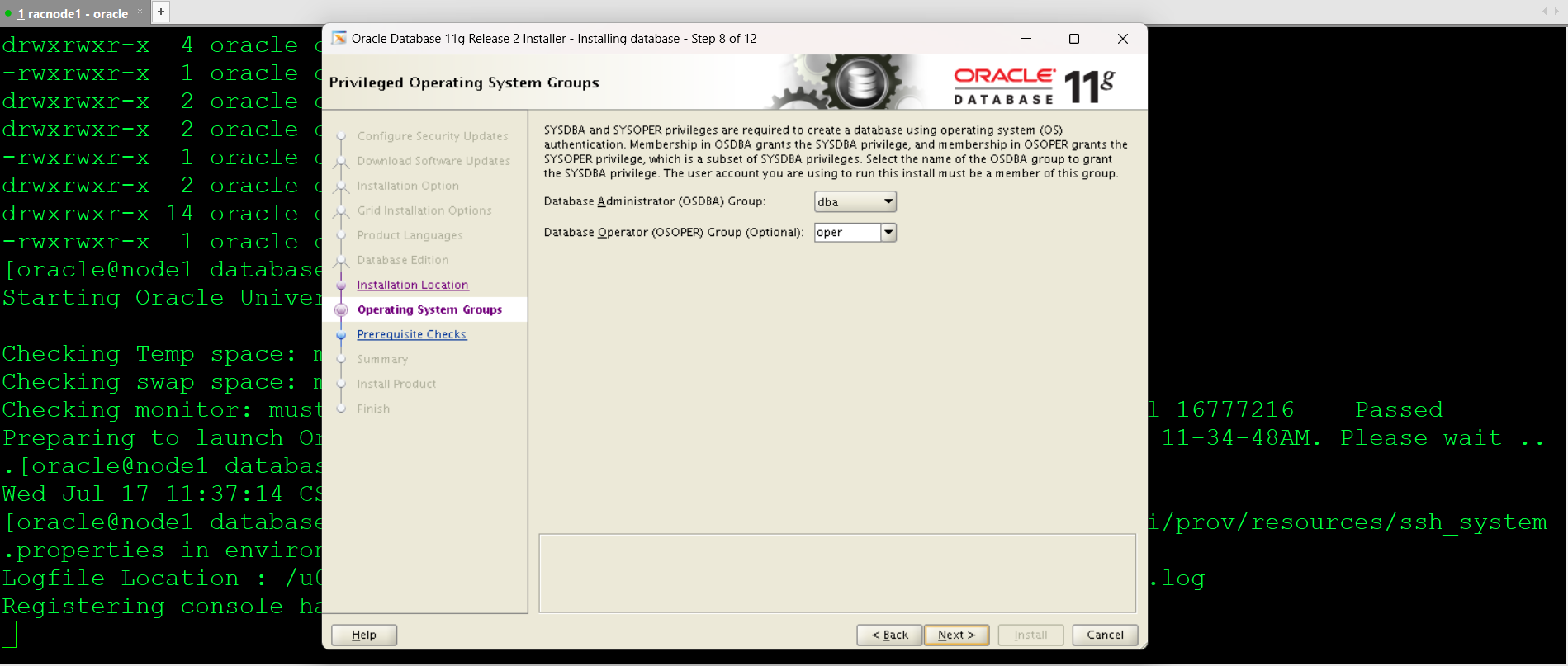

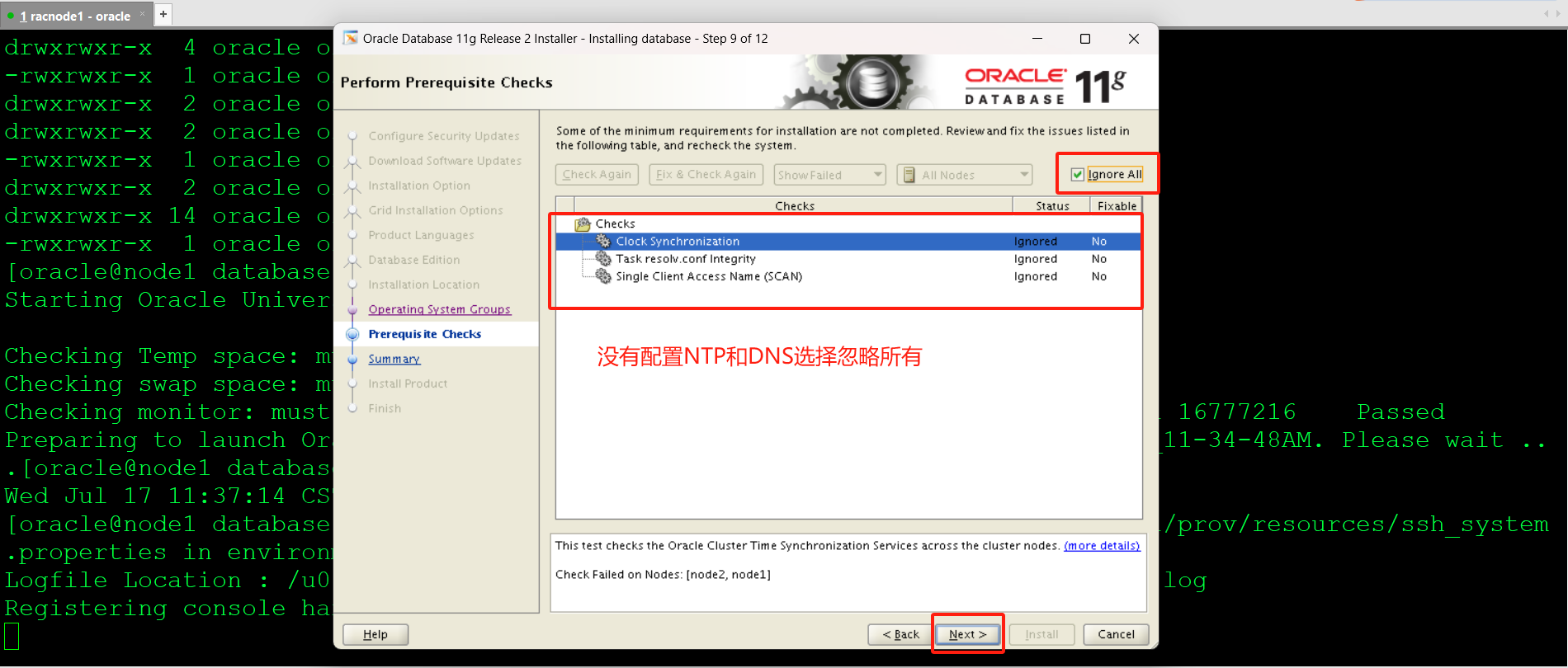

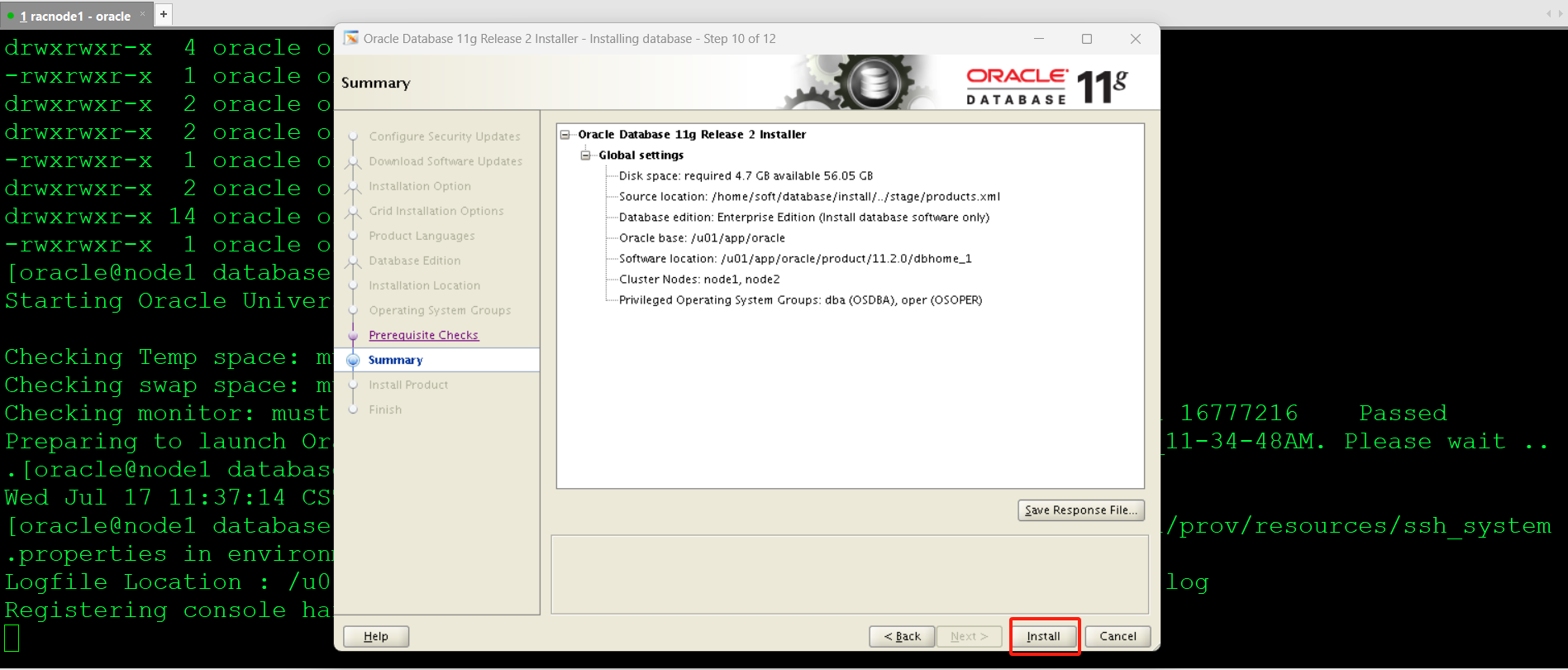

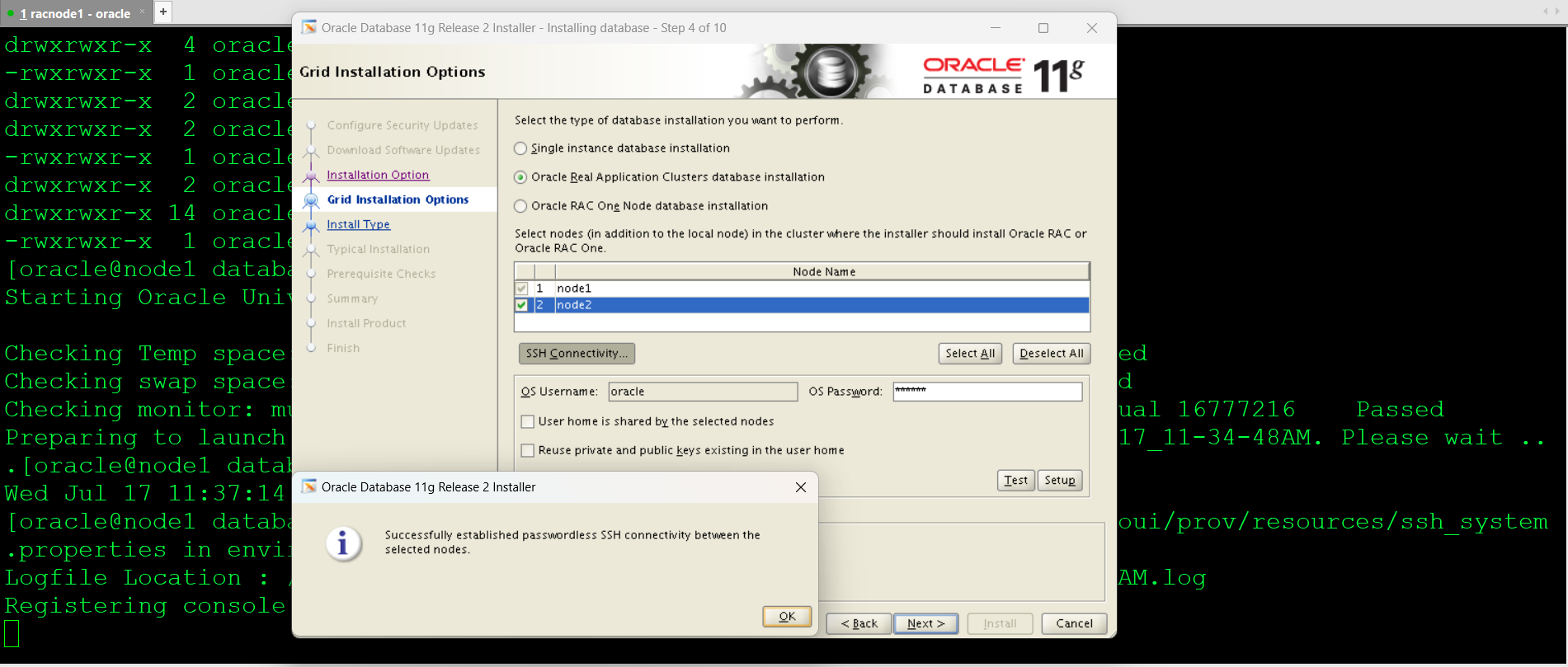

XIII. Install the Oracle software

[oracle@node1 ~]$ cd /home/soft/database/

[oracle@node1 database]$ export LANG=en_US.UTF-8

[oracle@node1 database]$ ./runInstaller

Click Select All, then click Setup to test SSH mutual trust. When the test passes, the following prompt appears:

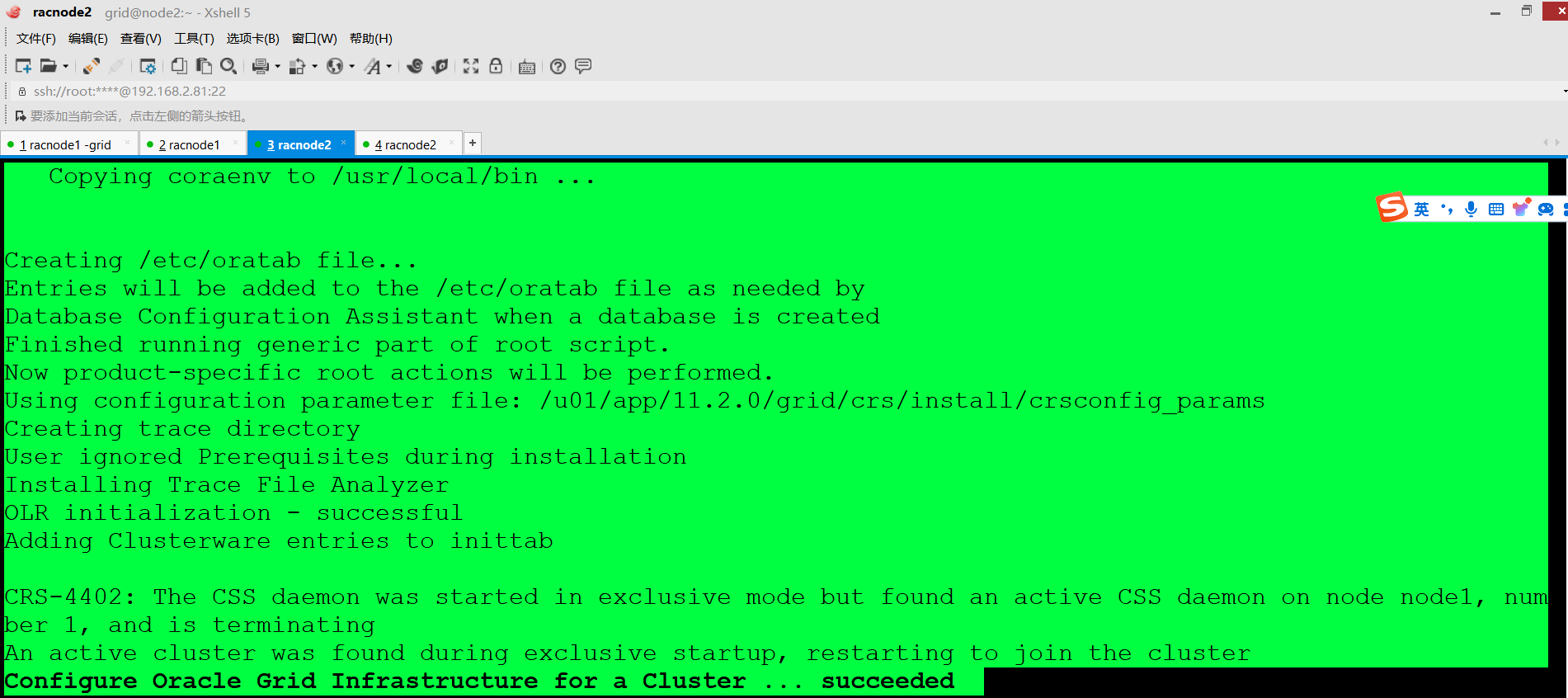

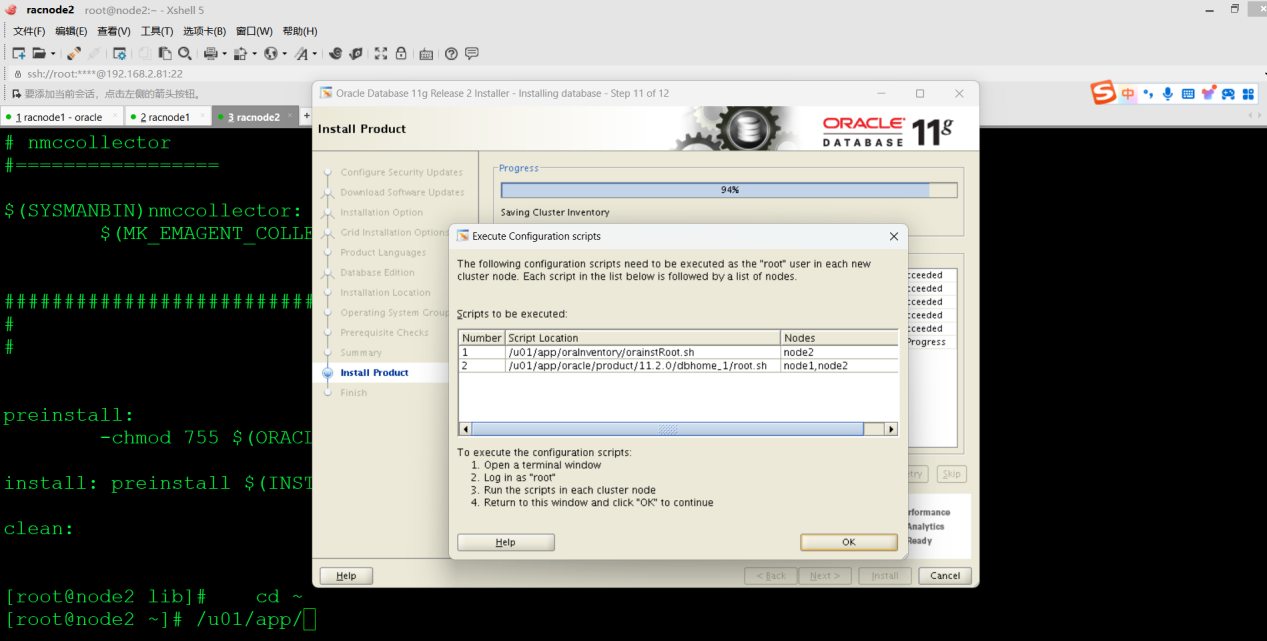

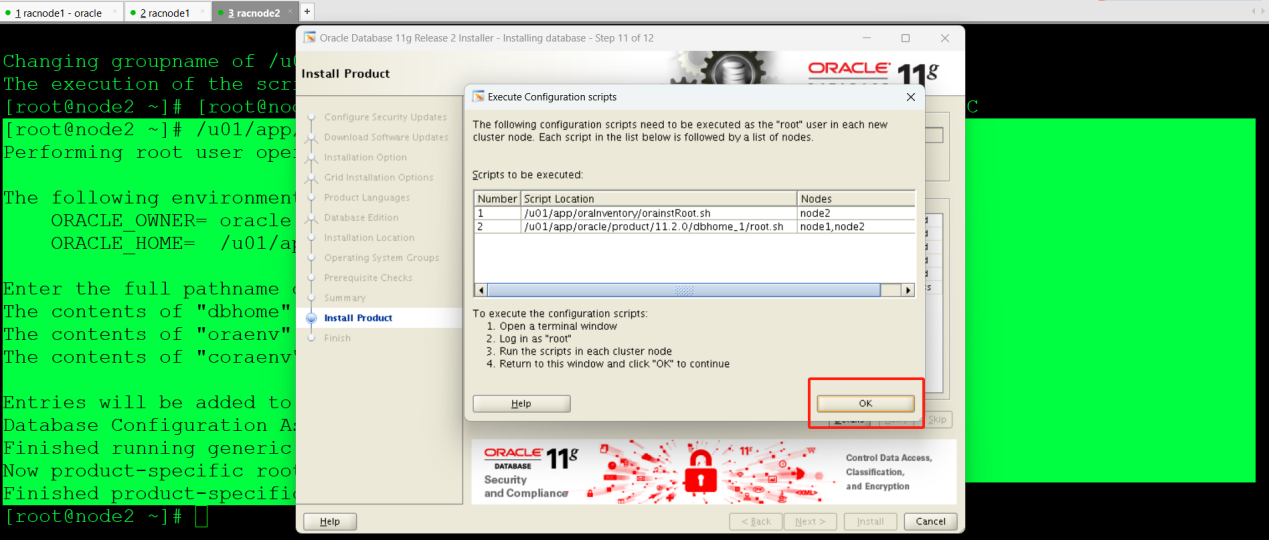

Run the root.sh script on one node at a time: first on node2, then on node1.

Order: node2 first, then node1.

The output looks like the following:

[root@node1 ~]# /u01/app/oracle/product/11.2.0/dbhome_1/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/11.2.0/dbhome_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Finished product-specific root actions.

[root@node2 ~]# /u01/app/oracle/product/11.2.0/dbhome_1/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/11.2.0/dbhome_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Finished product-specific root actions.

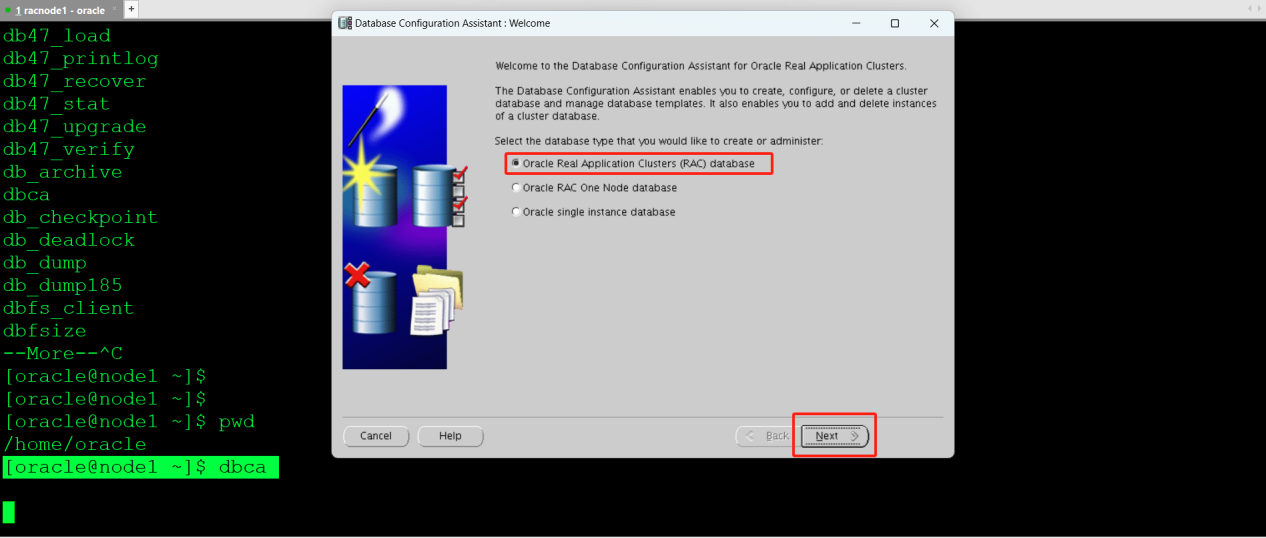

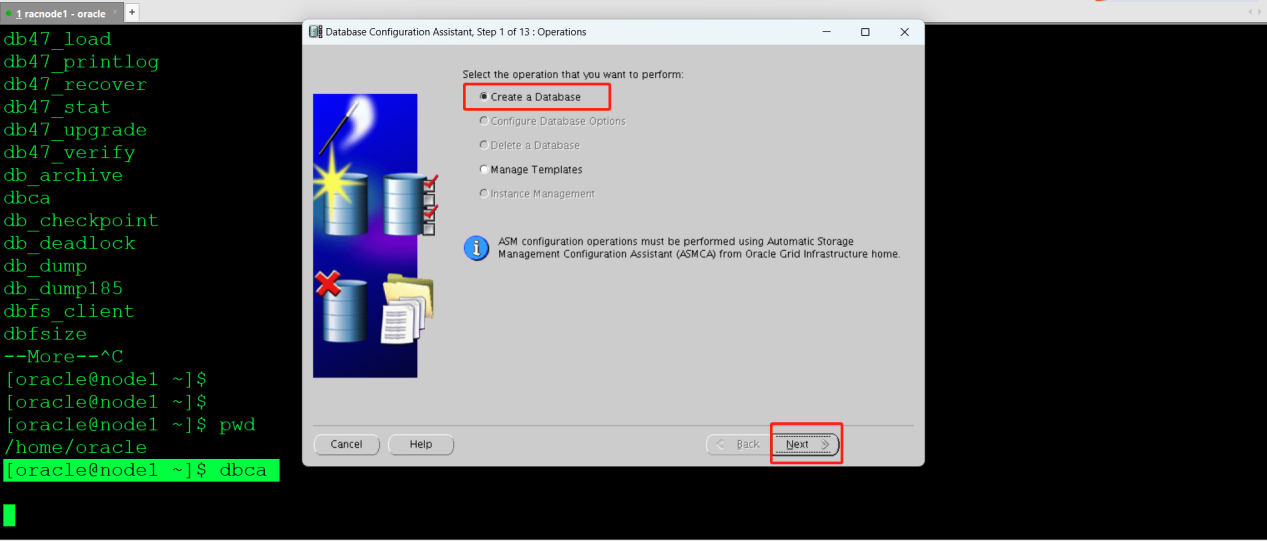

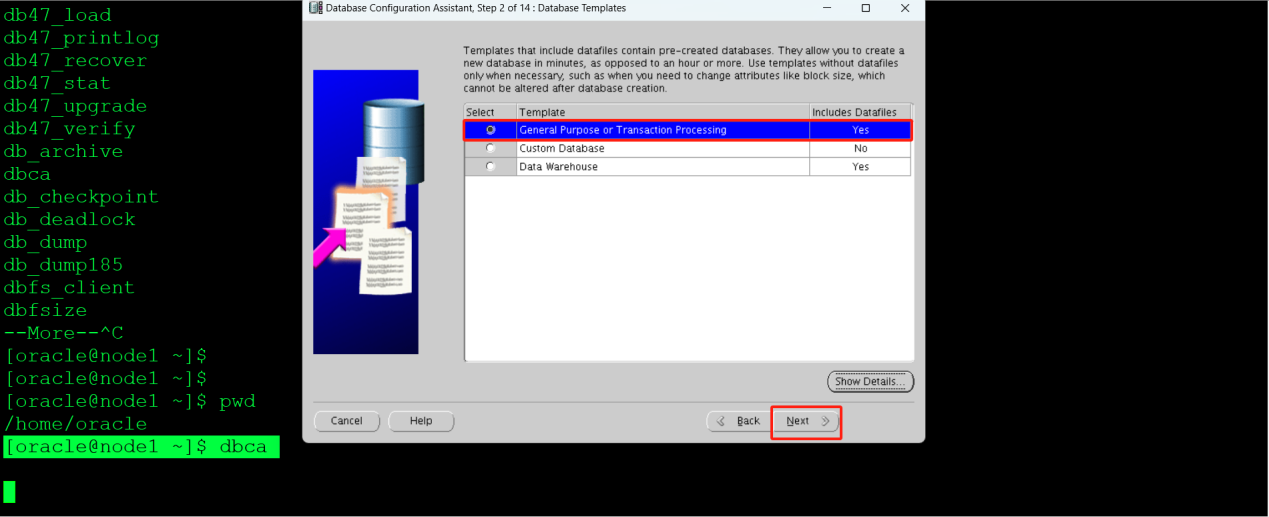

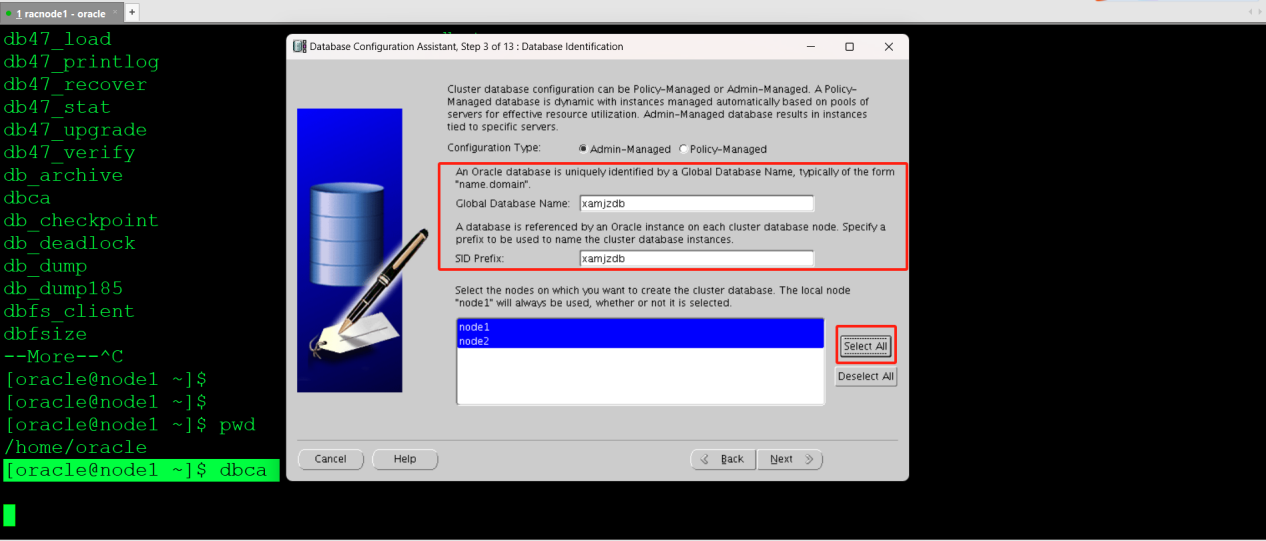

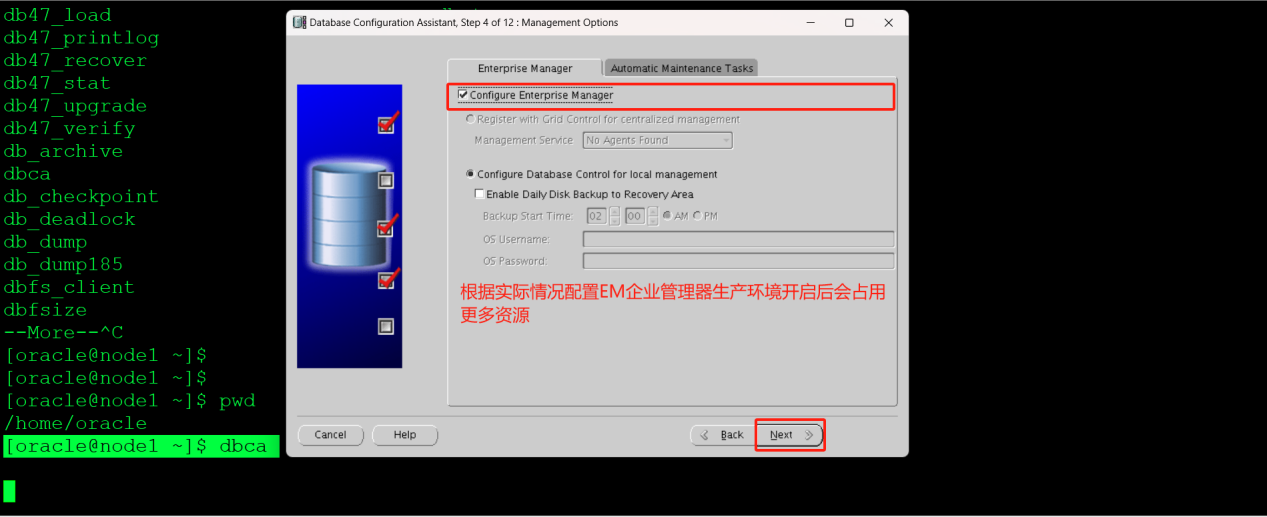

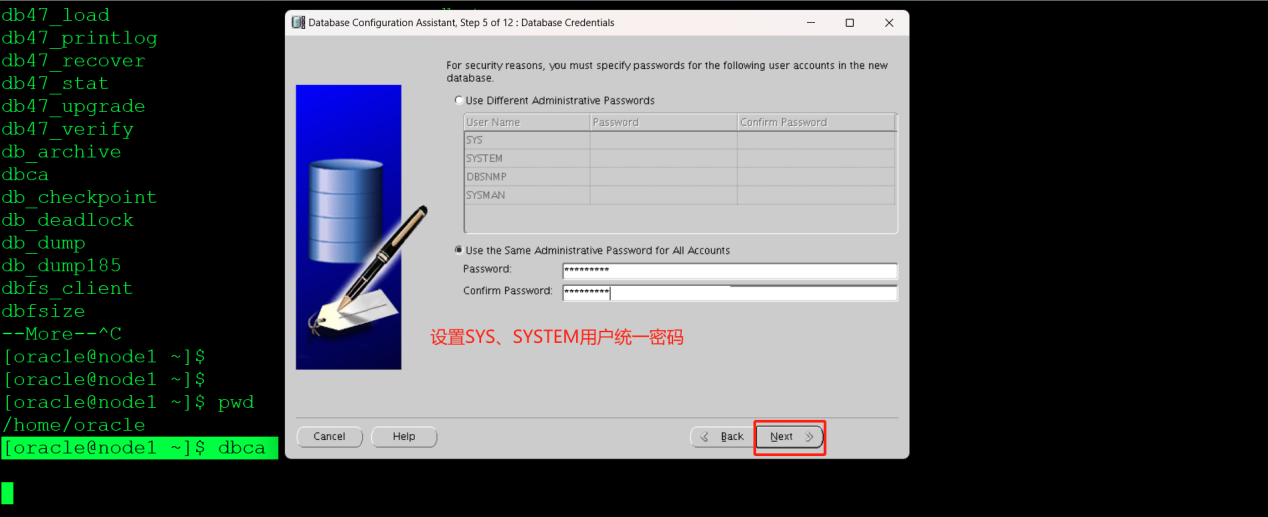

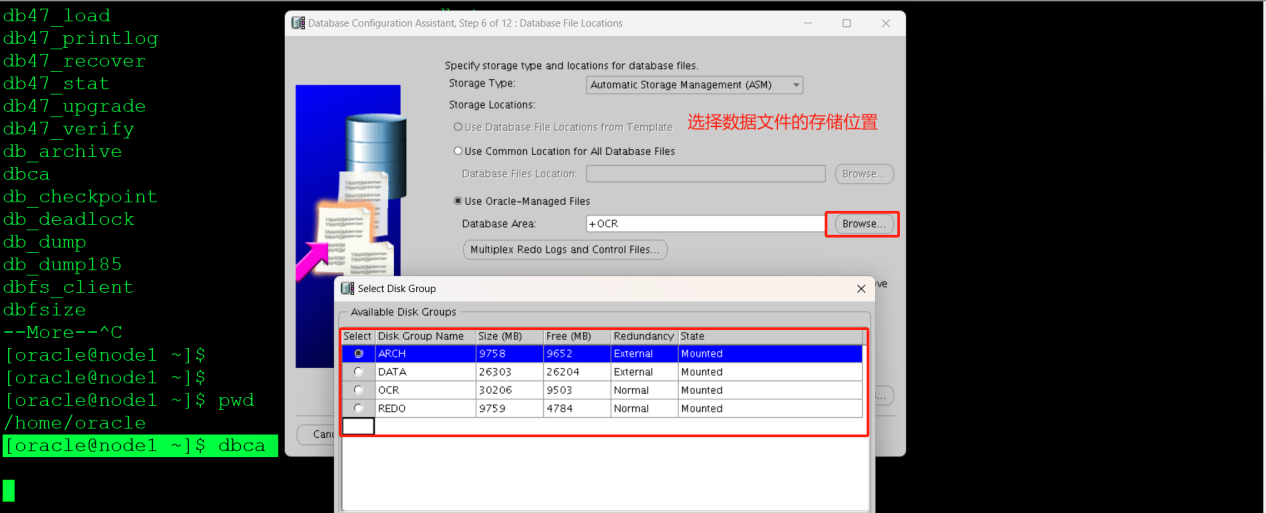

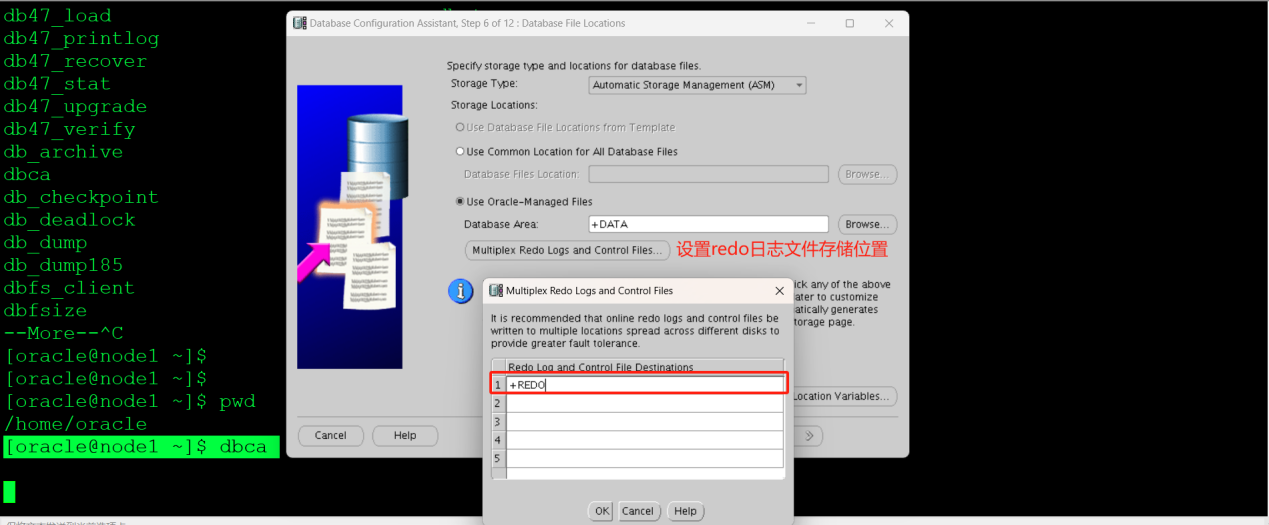

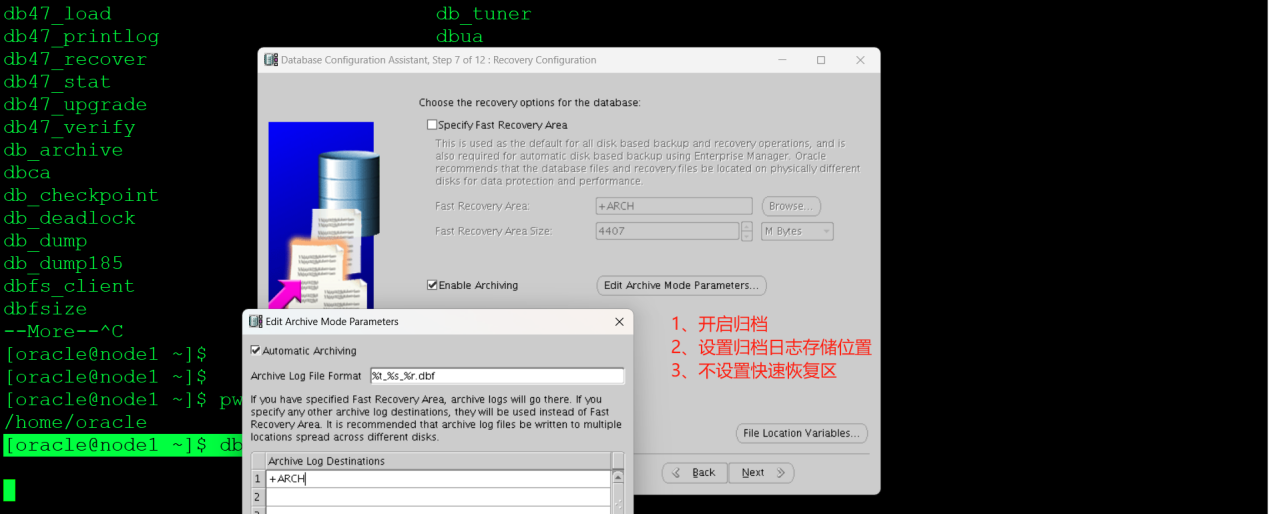

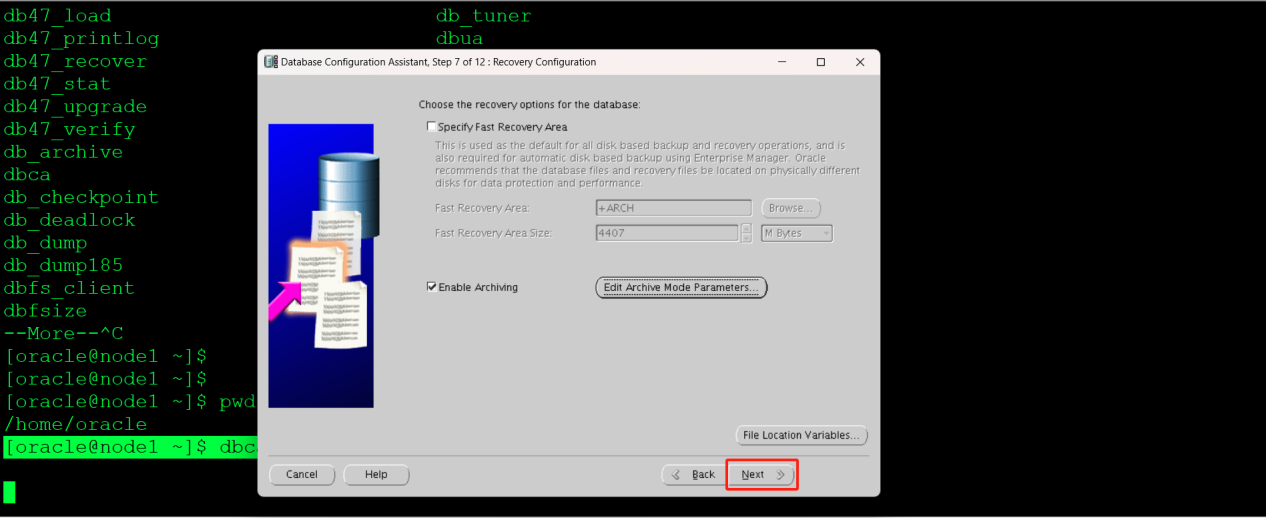

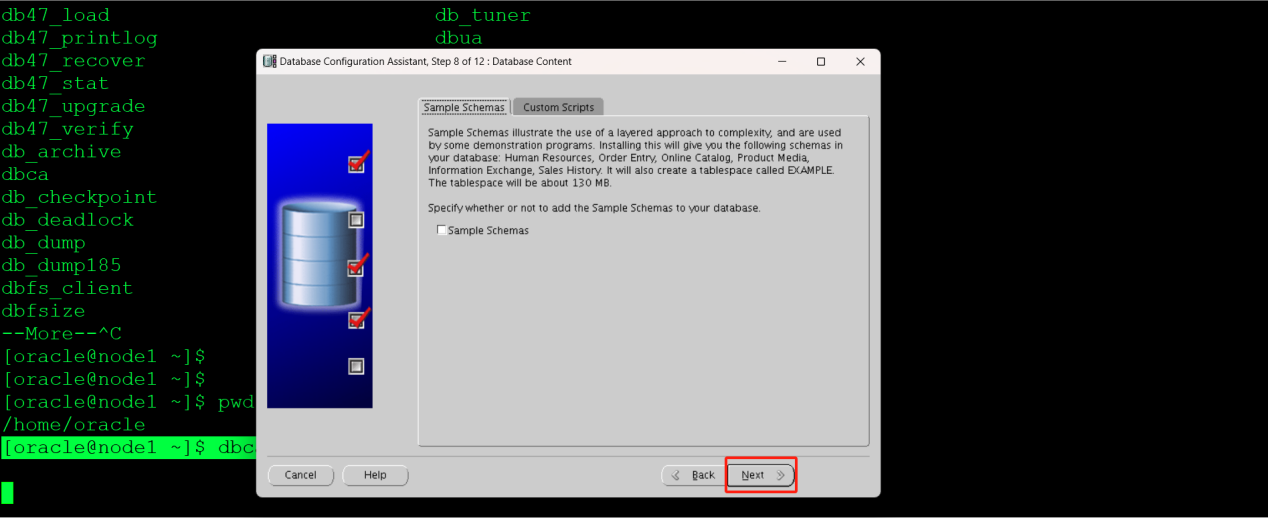

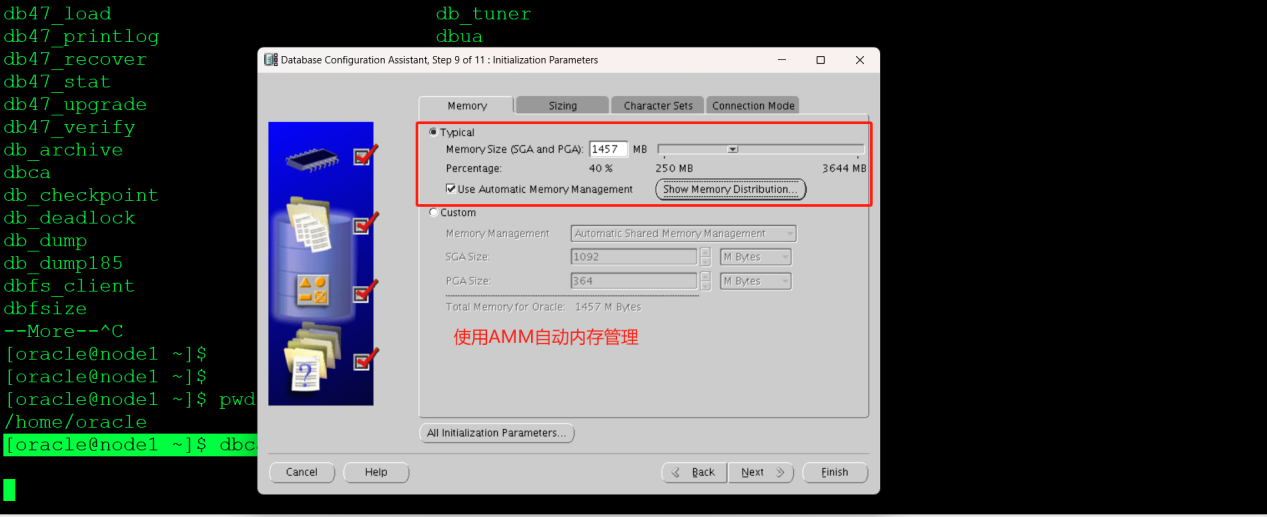

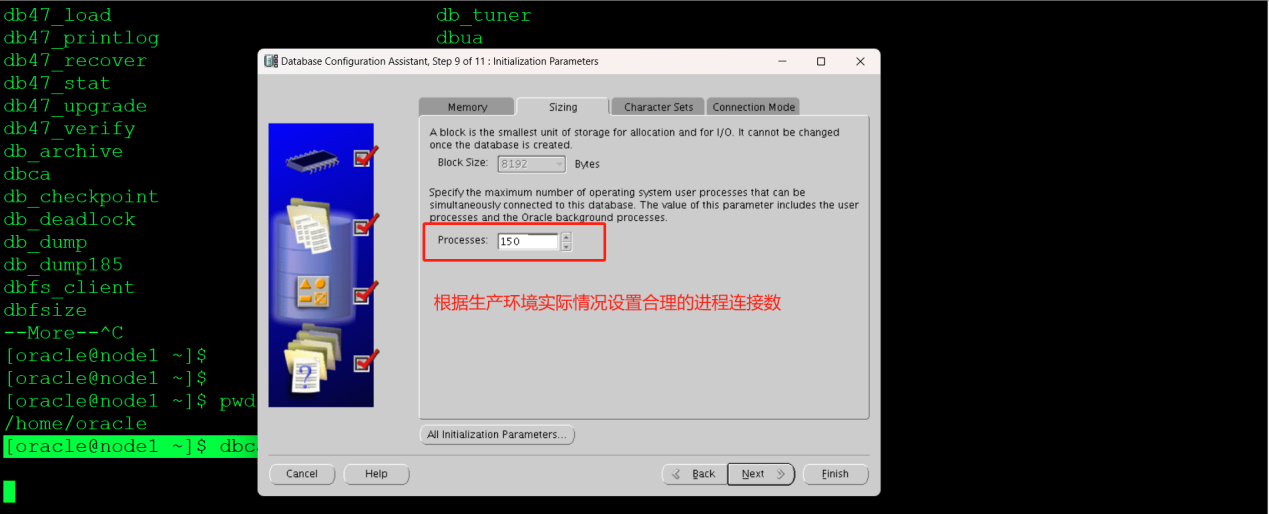

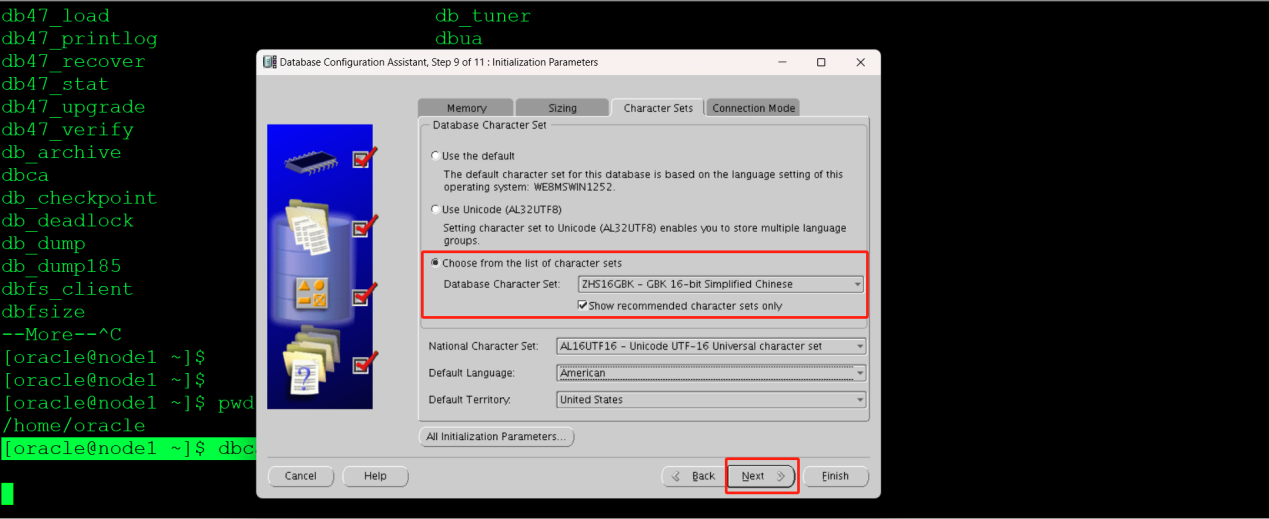

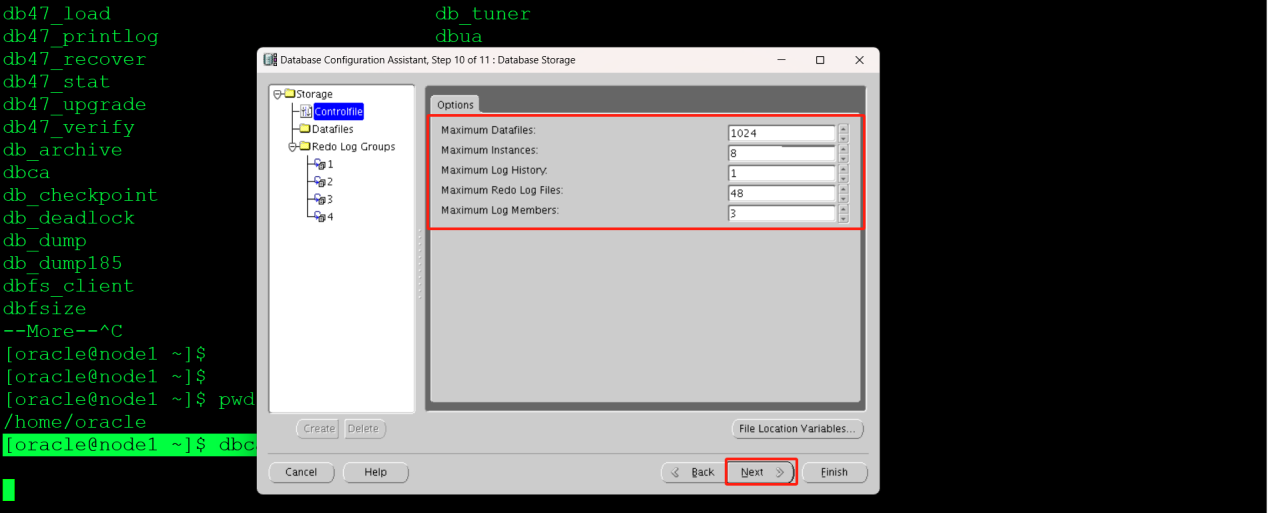

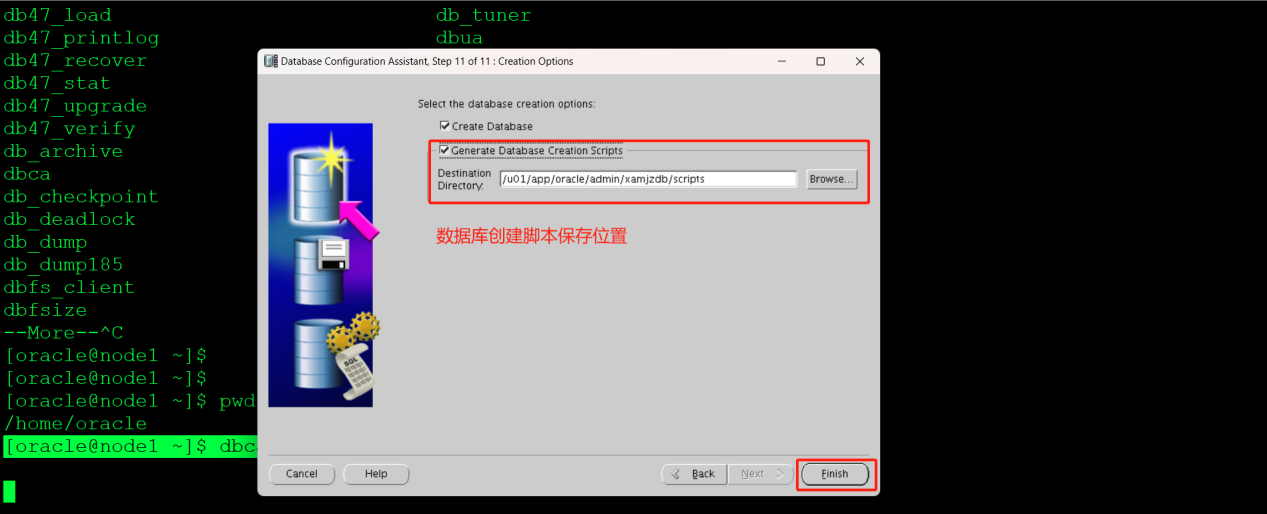

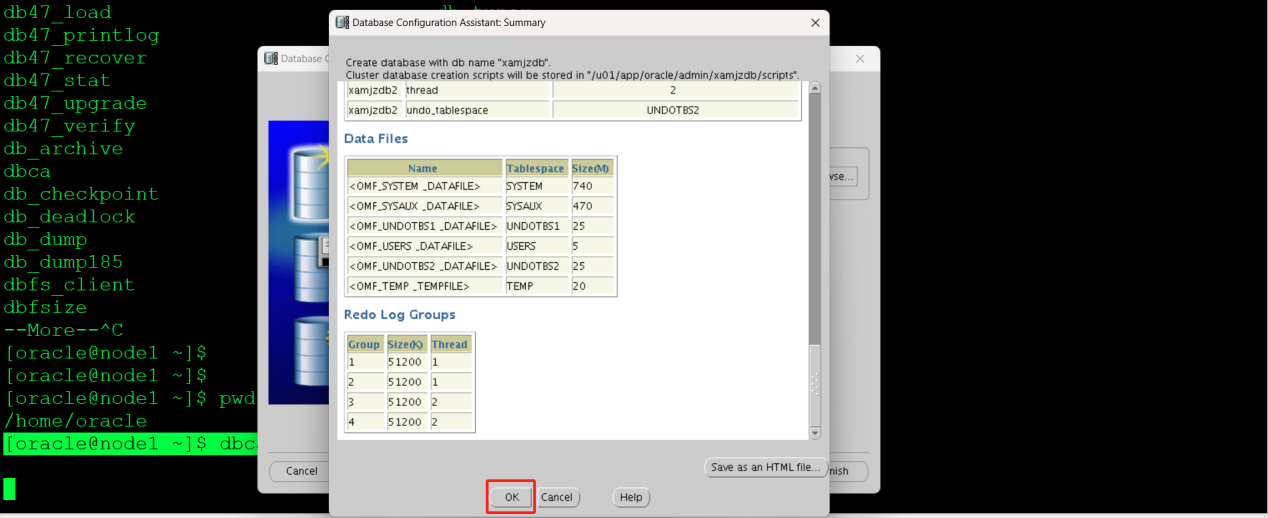

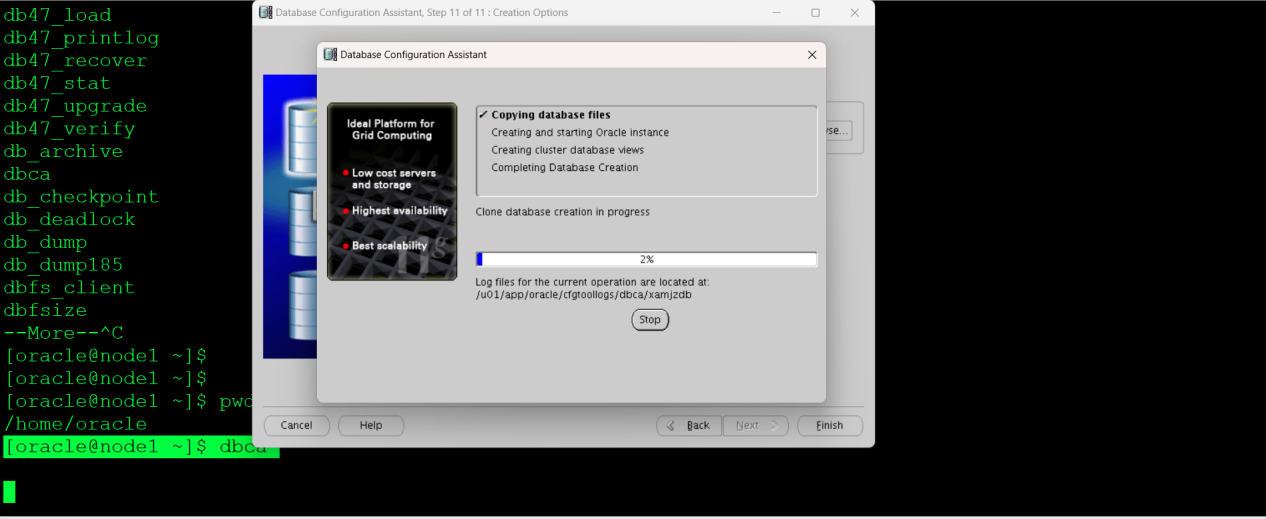

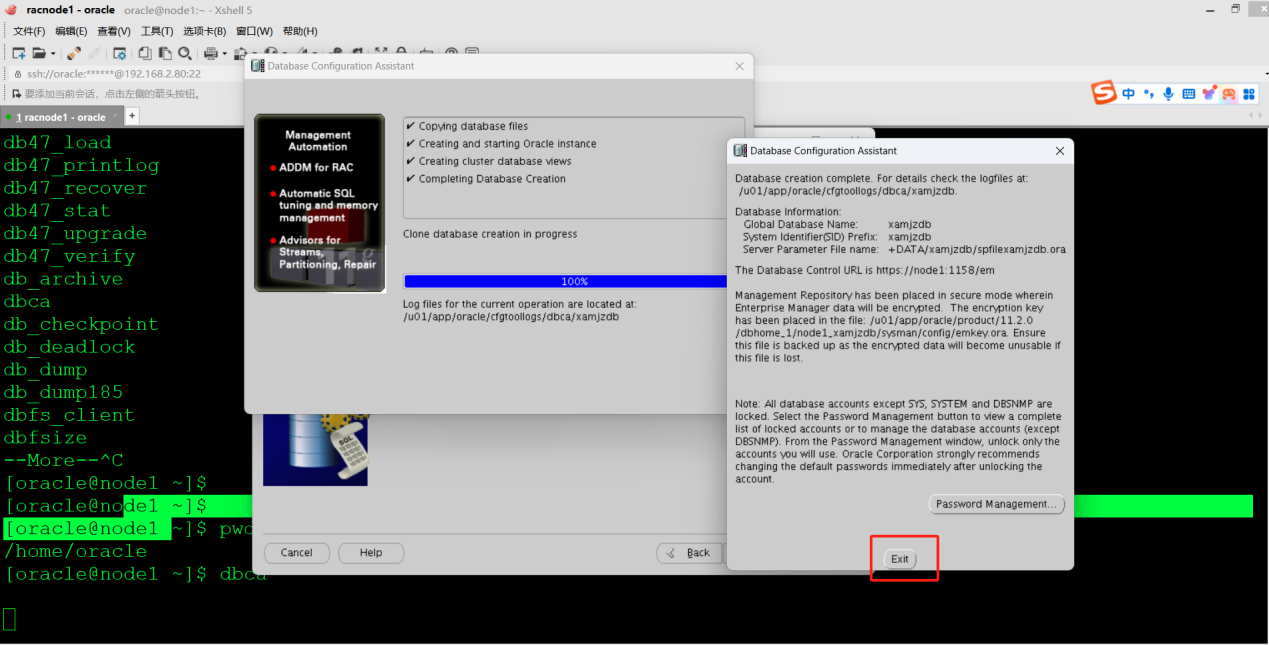

XIV. Create and configure the Oracle RAC database

[oracle@node1 ~]$ pwd

/home/oracle

[oracle@node1 ~]$ dbca

Enter the database service name and select both nodes.

The database is created successfully.

Check the Oracle instance status:

[oracle@node1 ~]$ sqlplus / as sysdba

SQL> select status , instance_name from gv$instance ;

STATUS INSTANCE_NAME

------------ ----------------

OPEN xamjzdb1

OPEN xamjzdb2

XV. Shutting down and starting the cluster database

- Shut down the cluster database

Stop all Oracle instances running on the nodes (run on node1):

[root@node1 ~]# su - grid

[grid@node1 ~]$ srvctl stop database -d xamjzdb -o immediate

[grid@node1 ~]$ srvctl status database -d xamjzdb

Instance xamjzdb1 is not running on node node1

Instance xamjzdb2 is not running on node node2

- Stop the HAS service

[root@node1 ~]# /u01/app/11.2.0/grid/bin/crsctl stop has

[root@node2 ~]# /u01/app/11.2.0/grid/bin/crsctl stop has

- Start the HAS service

[root@node1 ~]# /u01/app/11.2.0/grid/bin/crsctl start has

[root@node2 ~]# /u01/app/11.2.0/grid/bin/crsctl start has

- Start all Oracle instances on the nodes (run on node1)

[grid@node1 ~]$ srvctl start database -d xamjzdb

[grid@node1 ~]$ srvctl status database -d xamjzdb

Instance xamjzdb1 is running on node node1

Instance xamjzdb2 is running on node node2
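When the start is scripted, the status output can be checked automatically instead of read by eye. The following is a minimal sketch that counts running instances. It assumes srvctl is run with an English locale, so that each running instance is reported in the form "Instance <name> is running on node <node>". The function name `count_running_instances` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count the instances reported as running in
# `srvctl status database` output read from standard input.
# Assumes English-locale messages ("... is running on node ...").
count_running_instances() {
    # grep -c exits non-zero when the count is 0; "|| true" keeps the
    # function's exit status at 0 so the printed count is the only result.
    grep -c ' is running on node ' || true
}
```

For example, `srvctl status database -d xamjzdb | count_running_instances` should print 2 once both instances are up.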

- Check the status of the ASM instances and listeners

[grid@node1 ~]$ srvctl status asm

ASM is running on node2,node1

[grid@node1 ~]$ srvctl status listener

Listener LISTENER is enabled

Listener LISTENER is running on node(s): node2,node1

- Stop ASM and the listeners

[grid@node1 ~]$ srvctl stop asm

[grid@node1 ~]$ srvctl stop listener

--To stop the listener on a single node:

[grid@node1 ~]$ srvctl stop listener -n node1

[grid@node2 ~]$ srvctl stop listener -n node2