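Installing the Ceph Distributed Storage System

1. Introduction to Ceph:

Ceph provides extraordinary data storage scalability: thousands of client hosts or KVM virtual machines can access petabytes to exabytes of data. It provides object storage, block storage and a file system to applications within one unified storage cluster. Ceph is open source and free to use, and it can be deployed on inexpensive commodity servers.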

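The Ceph distributed storage system supports three interfaces:

- Object: a native API, plus compatibility with the Swift and S3 APIs

- Block: supports thin provisioning, snapshots and clones

- File: a POSIX interface with snapshot support

Key characteristics of Ceph:

- High scalability: runs on ordinary x86 servers, supports from 10 to 1000 servers, and scales from terabytes to petabytes.

- High reliability: no single point of failure, multiple data replicas, automatic management and automatic repair.

- High performance: data is evenly distributed and highly parallelized. Object storage and block storage do not need a metadata server.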

Ceph分布式存储系统的基础概念:

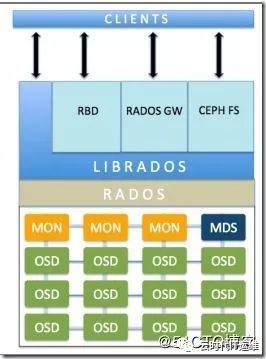

OSD:Object Storage Device,主要用于存储数据,处理数据,,恢复,平衡数据,并提供数据给monitor。

Monitor:Ceph监视器,主要用于集群健康状态维护,提供策略,包含Monitor Map ,OSD Map,PG ma和CRUSH MAP

MSD:Cpeh Metadata Server,主要保存ceph文件系统的元数据,快存储,对象存储不需要MSD。

RADOS:具备自我修复的特性,提供一个可靠,自动,智能的分布式存储

LIBRADOS:库文件,支持应用程序直接访问

RADOSGW:基于当前流行的RESTful协议的网关,并且兼容S3和Swift

RDB:通过Linux内核客户端和qemu-kvm驱动,来提供一个完全分布式的块设备

Ceph FS:兼容POSIX的文件系统

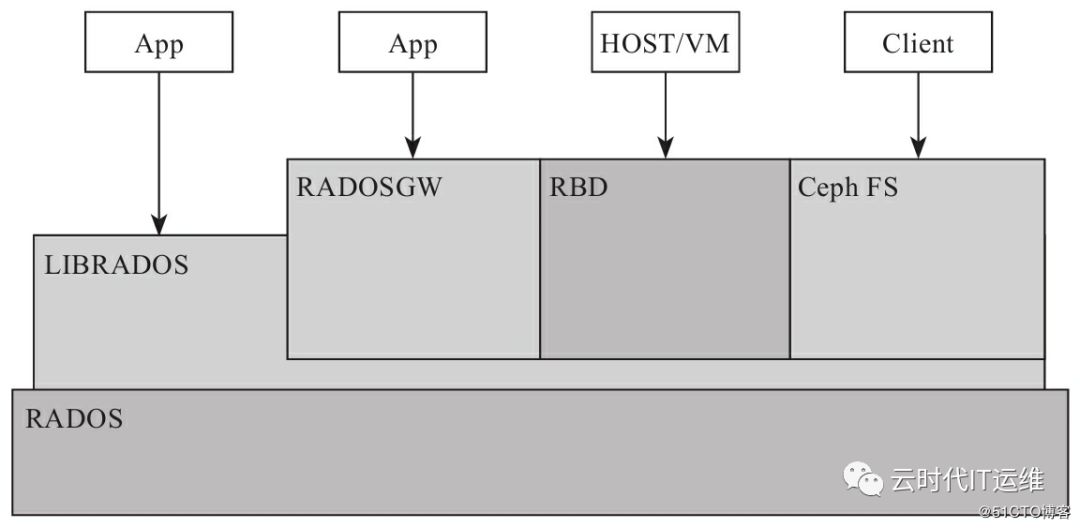

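Logically, Ceph storage consists of roughly four parts: the RADOS base storage system, CephFS built on RADOS, the LIBRADOS application interface built on RADOS, and application interfaces such as RBD built on LIBRADOS.

- Base storage system RADOS:

RADOS is itself a complete object storage system, and user data is ultimately stored by this layer. Physically, it is made up of the nodes of the cluster.

- Base library LIBRADOS:

This layer abstracts and wraps the functionality of RADOS and exposes an API to the layers above. Physically, an application built on LIBRADOS runs on the same machine as the LIBRADOS library: the application calls the local API, and the API talks to the RADOS nodes over sockets to carry out each operation.

- Upper-layer application interfaces:

These include RADOSGW, RBD and CephFS. Except for CephFS, they are further abstractions built on top of the LIBRADOS library.

Application layer: the applications that use these interfaces.

RADOS consists mainly of OSDs and Monitors.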

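(1). Monitor: monitors the state of the whole cluster, including node status and cluster configuration, such as the monitor map, OSD map, MDS map and CRUSH map.

(a). Monitor Map: records information about each monitor node, including the cluster ID and the monitor host names, IP addresses and ports.

View the monitor map: # ceph mon dump

(b). OSD Map: records the cluster ID, the epoch in which the OSD map was created and last modified, pool information (pool name, ID, type, replica count, etc.), and the number, weights and states of the OSDs.

View the OSD map: # ceph osd dump

(c). PG Map: records the PG map version, timestamp, latest OSD map epoch, full ratios and space usage, as well as each PG's ID, object count, state, etc.

View the PG map: # ceph pg dump

(d). CRUSH Map: contains the list of storage devices in the cluster, the failure-domain hierarchy, and the rules that define how data is placed across failure domains when it is stored.

View the CRUSH map: # ceph osd crush dump

(e). MDS Map: stores the current MDS map epoch, when the map was created and last modified, the IDs of the data and metadata pools, the number of MDS daemons in the cluster and their states.

View the MDS map: # ceph mds dump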

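Note: monitor nodes need enough disk space to store the cluster's logs.

A monitor cluster should have an odd number of nodes. One of them is elected leader; when the leader becomes unavailable, any of the remaining monitors can become the new leader. The cluster remains available only as long as a majority of the N monitors (more than N/2, i.e. floor(N/2)+1) are up and able to form a quorum.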
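The majority rule can be checked with a little arithmetic. The following is a minimal sketch, not a Ceph command: `mon_quorum` is a hypothetical helper that, for N monitors, prints the quorum size floor(N/2)+1 and how many monitors may be lost while a quorum can still form.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Ceph): quorum arithmetic for a
# majority-based monitor cluster.
# Usage: mon_quorum N   -> prints "<quorum size> <tolerated failures>"
mon_quorum() {
    local n=$1
    if ! [[ $n =~ ^[1-9][0-9]*$ ]]; then
        echo "usage: mon_quorum <positive integer>" >&2
        return 1
    fi
    local quorum=$(( n / 2 + 1 ))      # strict majority of n
    local tolerated=$(( n - quorum ))  # monitors that may be down
    echo "$quorum $tolerated"
}
```

For example, `mon_quorum 3` prints `2 1` and `mon_quorum 4` prints `3 1`: a fourth monitor raises the quorum size without tolerating any extra failure, which is why an odd number of monitors is recommended.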

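(2). OSD: OSDs store data as objects on the physical disks of the nodes in the cluster.

After a client obtains the cluster map from a monitor, it communicates directly with the OSDs to read and write data.

Each object stored on the OSDs has one primary copy and several replica copies. Any OSD can be the primary OSD for some objects and a secondary (replica) OSD for others. Secondary OSDs are controlled by the primary OSD; when a primary OSD fails, a secondary OSD becomes the primary and new replicas are generated.

File systems supported by Ceph for the OSD backing store: BTRFS, XFS, EXT4

The journal helps absorb bursts of write load: when Ceph writes data, it first writes it to the journal and then to the backing file system.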

2. Installing Ceph:

| Node | Services | Cluster network |
|---|---|---|
| k8sdemo-ceph1 (admin node) | osd.0, mon, mds, ceph-1 | eth0: 10.83.32.0/24 |
| k8sdemo-ceph2 | osd.1, mon, mds, ceph-1 | eth0: 10.83.32.0/24 |
| k8sdemo-ceph3 | osd.2, mon, mds, ceph-1 | eth0: 10.83.32.0/24 |

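A Ceph cluster needs at least three nodes, and each server should have at least one raw disk with no operating system on it to use as an OSD disk. In this lab we could not give each server two disks (one for the operating system and one for the OSD), so a separate partition, /dev/sda4, is used as the OSD disk instead.

The cluster runs 3 OSD daemons, 3 monitor daemons and 3 MDS daemons. The admin node, which runs the ceph-deploy commands, is k8sdemo-ceph1.

2.1. Preparing for the Ceph installation: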

1. First set the IP address, hostname and hosts file on every node so that the hosts can reach one another by name:

```shell
[root@k8sdemo-ceph1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0
TYPE=Ethernet
PROXY_METHOD=none
BROWSER_ONLY=no
BOOTPROTO=none
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
IPV6INIT=yes
IPV6_AUTOCONF=yes
IPV6_DEFROUTE=yes
IPV6_FAILURE_FATAL=no
IPV6_ADDR_GEN_MODE=stable-privacy
NAME=eth0
UUID=95183a09-c29b-46d3-92f2-ecb8dee346e0
DEVICE=eth0
ONBOOT=yes
IPADDR=10.83.32.224
PREFIX=24
GATEWAY=10.83.32.1
IPV6_PRIVACY=no

# Set the hostname (on each node, use that node's own name)
[root@k8sdemo-ceph1 ~]# hostnamectl set-hostname k8sdemo-ceph1

# Append the cluster hosts to /etc/hosts
echo -e "10.83.32.226 master-01\n10.83.32.227 master-02\n10.83.32.228 master-03\n10.83.32.229 node-01\n10.83.32.231 node-02\n10.83.32.232 node-03\n10.83.32.233 node-04\n10.83.32.223 node-05\n10.83.32.224 k8sdemo-ceph1\n10.83.32.225 k8sdemo-ceph2\n10.83.32.234 k8sdemo-ceph3" >> /etc/hosts

[root@k8sdemo-ceph2 ~]# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
10.83.32.226 master-01
10.83.32.227 master-02
10.83.32.228 master-03
10.83.32.229 node-01
10.83.32.231 node-02
10.83.32.232 node-03
10.83.32.233 node-04
10.83.32.223 node-05
10.83.32.224 k8sdemo-ceph1
10.83.32.225 k8sdemo-ceph2
10.83.32.234 k8sdemo-ceph3
```
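Running the `echo -e ... >> /etc/hosts` command twice leaves duplicate entries. Below is a minimal sketch of an idempotent alternative, assuming bash and awk; `add_host_entry` and the `HOSTS_FILE` override are hypothetical names introduced here for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" to a hosts file only if no
# non-comment line already lists HOSTNAME, so re-running setup is harmless.
# HOSTS_FILE defaults to /etc/hosts and can be overridden (e.g. for testing).
add_host_entry() {
    local ip=$1 name=$2
    local file=${HOSTS_FILE:-/etc/hosts}
    if [ -z "$ip" ] || [ -z "$name" ]; then
        echo "usage: add_host_entry <ip> <hostname>" >&2
        return 1
    fi
    # Fields 2..NF of a hosts line are host names/aliases; skip comment lines
    if [ -f "$file" ] && awk -v h="$name" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
        END { exit !found }' "$file"; then
        return 0    # already mapped, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, `add_host_entry 10.83.32.225 k8sdemo-ceph2` appends the line only if `k8sdemo-ceph2` is not already mapped. The check looks at the host name alone, so an existing entry with a wrong IP still has to be corrected by hand.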

2. Generate an SSH key on each Ceph host and set up mutual trust (passwordless SSH login):

```shell
[root@k8sdemo-ceph1 ~]# ssh-keygen -t rsa -P "" -f /root/.ssh/id_rsa
Generating public/private rsa key pair.
Created directory '/root/.ssh'.
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:BzaNyJRmiqDq/1AGGylMBhw5Awm3LDeDmiO5OBU8+zE root@k8sdemo-ceph1
The key's randomart image is:
+---[RSA 2048]----+
|O=+ .. |
|*X ..o+. o |
|++%= +o = . |
|o=o*= . o |
|B o.Eo S . |
|++ .oo . |
|= .. |
| o . |
| .... |
+----[SHA256]-----+

# Copy the public key to the other hosts to enable passwordless login
[root@k8sdemo-ceph1 ~]# ssh-copy-id root@k8sdemo-ceph2
[root@k8sdemo-ceph1 ~]# ssh-copy-id root@k8sdemo-ceph3

# The steps above must be carried out on every host.
# Same steps on the second Ceph host:
[root@k8sdemo-ceph2 ~]# ssh-keygen -t rsa -P "" -f /root/.ssh/id_rsa
[root@k8sdemo-ceph2 ~]# ssh-copy-id root@k8sdemo-ceph1
[root@k8sdemo-ceph2 ~]# ssh-copy-id root@k8sdemo-ceph3

# Same steps on the third Ceph host:
[root@k8sdemo-ceph3 ~]# ssh-keygen -t rsa -P "" -f /root/.ssh/id_rsa
[root@k8sdemo-ceph3 ~]# ssh-copy-id root@k8sdemo-ceph1
[root@k8sdemo-ceph3 ~]# ssh-copy-id root@k8sdemo-ceph2

# From each Ceph host, ssh to the other two and check that no password is requested, e.g. on k8sdemo-ceph1:
ssh k8sdemo-ceph2
ssh k8sdemo-ceph3
```
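Instead of typing the `ssh-keygen`/`ssh-copy-id` sequence by hand on each host, it can be wrapped in a function and run once per node. This is a sketch under assumptions: it runs as root, each node's short hostname (`hostname -s`) matches an entry in `CEPH_HOSTS`, and `ssh-copy-id` asks for each peer's password once. `CEPH_HOSTS`, `peers_of` and `setup_ssh_trust` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical host list, using the names from this article
CEPH_HOSTS=(k8sdemo-ceph1 k8sdemo-ceph2 k8sdemo-ceph3)

# Print every host given after $1, except $1 itself
peers_of() {
    local self=$1 h
    shift
    for h in "$@"; do
        [ "$h" != "$self" ] && echo "$h"
    done
    return 0
}

# Create a key if none exists, copy it to every peer, then verify the login.
# BatchMode=yes makes ssh fail instead of prompting for a password.
setup_ssh_trust() {
    local self peer
    self=$(hostname -s)
    [ -f /root/.ssh/id_rsa ] || ssh-keygen -t rsa -P "" -f /root/.ssh/id_rsa
    for peer in $(peers_of "$self" "${CEPH_HOSTS[@]}"); do
        ssh-copy-id "root@$peer"
        ssh -o BatchMode=yes "root@$peer" true && echo "$peer: OK"
    done
}
```

Run `setup_ssh_trust` on each of the three nodes. A line ending in `OK` means passwordless login to that peer really works, because the check cannot fall back to a password prompt.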

3. Disable the firewall and SELinux:

```shell
[root@k8sdemo-ceph1 ~]# systemctl disable firewalld
Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.
Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
[root@k8sdemo-ceph1 ~]# systemctl stop firewalld
[root@k8sdemo-ceph1 ~]# iptables -L -n
Chain INPUT (policy ACCEPT)
target     prot opt source               destination

Chain FORWARD (policy ACCEPT)
target     prot opt source               destination

Chain OUTPUT (policy ACCEPT)
target     prot opt source               destination
# On CentOS 7 the firewall software is firewalld: stop it and keep it from starting at boot.

# Disable SELinux. On CentOS 7, /etc/sysconfig/selinux is a symlink to /etc/selinux/config,
# and `sed -i` on the symlink would replace it with a regular file, so edit the real file only.
sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config
# Switch to permissive mode immediately; the config-file change only takes effect after a reboot.
setenforce 0
[root@k8sdemo-ceph1 ~]# getenforce
Disabled
# getenforce reports "Permissive" until the reboot and "Disabled" afterwards.
```
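The `sed` pattern above only matches `SELINUX=enforcing`, so a host already set to `permissive` is left unchanged. A minimal sketch that sets the value regardless of its current state; `disable_selinux_config` and `selinux_runtime_permissive` are hypothetical helper names, and the default path assumes CentOS 7's `/etc/selinux/config`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: force SELINUX=disabled in the given config file
# (default /etc/selinux/config), whatever the current value is.
# ^SELINUX= does not match the separate SELINUXTYPE= line.
disable_selinux_config() {
    local cfg=${1:-/etc/selinux/config}
    if grep -q '^SELINUX=' "$cfg"; then
        sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
    else
        echo 'SELINUX=disabled' >> "$cfg"
    fi
}

# Hypothetical helper: switch a running, enforcing system to permissive now;
# the "disabled" setting itself only applies after a reboot.
selinux_runtime_permissive() {
    if command -v getenforce >/dev/null && [ "$(getenforce)" = "Enforcing" ]; then
        setenforce 0
    fi
}
```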

4. Configure the yum repositories and time synchronization:

```shell
# Back up the default CentOS yum repos, then create Aliyun mirror repos for CentOS base, EPEL and Ceph
cd /etc/yum.repos.d && mkdir -p repobak && mv *.repo repobak

[root@k8sdemo-ceph1 yum.repos.d]# cat CentOS-Base.repo
# CentOS-Base.repo
#
# The mirror system uses the connecting IP address of the client and the
# update status of each mirror to pick mirrors that are updated to and
# geographically close to the client.  You should use this for CentOS updates
# unless you are manually picking other mirrors.
#
# If the mirrorlist= does not work for you, as a fall back you can try the
# remarked out baseurl= line instead.
#
#

[base]
name=CentOS-$releasever - Base - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/$releasever/os/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/os/$basearch/
#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=os
gpgcheck=1
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#released updates
[updates]
name=CentOS-$releasever - Updates - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/updates/$basearch/
#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=updates
gpgcheck=1
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that may be useful
[extras]
name=CentOS-$releasever - Extras - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/
#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=extras
gpgcheck=1
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that extend functionality of existing packages
[centosplus]
name=CentOS-$releasever - Plus - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/$releasever/centosplus/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/centosplus/$basearch/
#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=centosplus
gpgcheck=1
enabled=0
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#contrib - packages by Centos Users
[contrib]
name=CentOS-$releasever - Contrib - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/$releasever/contrib/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/contrib/$basearch/
#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=contrib
gpgcheck=1
enabled=0
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

[root@k8sdemo-ceph1 yum.repos.d]# cat epel.repo
[epel]
name=Extra Packages for Enterprise Linux 7 - $basearch
baseurl=http://mirrors.aliyun.com/epel/7/$basearch
        http://mirrors.aliyuncs.com/epel/7/$basearch
#mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=epel-7&arch=$basearch
failovermethod=priority
enabled=1
gpgcheck=0
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

[epel-debuginfo]
name=Extra Packages for Enterprise Linux 7 - $basearch - Debug
baseurl=http://mirrors.aliyun.com/epel/7/$basearch/debug
        http://mirrors.aliyuncs.com/epel/7/$basearch/debug
#mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=epel-debug-7&arch=$basearch
failovermethod=priority
enabled=0
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7
gpgcheck=0

[epel-source]
name=Extra Packages for Enterprise Linux 7 - $basearch - Source
baseurl=http://mirrors.aliyun.com/epel/7/SRPMS
        http://mirrors.aliyuncs.com/epel/7/SRPMS
#mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=epel-source-7&arch=$basearch
failovermethod=priority
enabled=0
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7
gpgcheck=0

[root@k8sdemo-ceph1 yum.repos.d]# cat ceph.repo
[ceph]
name=Ceph
baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/x86_64/
enabled=1
priority=1
gpgcheck=1
type=rpm-md
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

[ceph-noarch]
name=Ceph noarch packages
baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/noarch/
enabled=1
priority=1
gpgcheck=1
type=rpm-md
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

[ceph-source]
name=Ceph source packages
baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/SRPMS/
enabled=0
priority=1
gpgcheck=1
type=rpm-md
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
# priority sets the priority of a yum repo. If other repos also carry Ceph packages,
# make sure ceph.repo has the highest priority (a smaller value means a higher priority).
# Repo priorities require the following plugin:
# yum -y install yum-plugin-priorities

# Copy the repo files to the other two nodes
[root@k8sdemo-ceph1 yum.repos.d]# scp -r *.repo root@k8sdemo-ceph2:/etc/yum.repos.d/
[root@k8sdemo-ceph1 yum.repos.d]# scp -r *.repo root@k8sdemo-ceph3:/etc/yum.repos.d/

# Check the time zone (Asia/Shanghai)
[root@k8sdemo-ceph1 yum.repos.d]# timedatectl status
      Local time: Fri 2019-04-05 09:58:04 CST
  Universal time: Fri 2019-04-05 01:58:04 UTC
        RTC time: Fri 2019-04-05 01:58:04
       Time zone: Asia/Shanghai (CST, +0800)
     NTP enabled: n/a
NTP synchronized: no
 RTC in local TZ: no
      DST active: n/a

# On k8sdemo-ceph1, set up the chrony time server. Reference: https://blog.51cto.com/12643266/2349098
systemctl stop ntpd.service
systemctl disable ntpd.service
yum install chrony
vim /etc/chrony.conf
# Lines to add to /etc/chrony.conf:
#   server ntp1.aliyun.com iburst
#   server ntp2.aliyun.com iburst
#   server ntp3.aliyun.com iburst
#   server ntp4.aliyun.com iburst
#   allow 10.83.32.0/24
systemctl start chronyd.service
systemctl enable chronyd.service

# On the other two nodes, install ntp and sync against k8sdemo-ceph1 (10.83.32.224) from cron
yum install ntp -y
[root@k8sdemo-ceph1 yum.repos.d]# crontab -l
0 1 * * * /usr/sbin/ntpdate 10.83.32.224 > /dev/null 2>&1
```
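Ceph monitors are sensitive to clock differences: the `ceph -s` output in section 2.3 reports `clock skew detected`, and a cron job that runs `ntpdate` only once a day may let clocks drift between runs. The tolerance is set by the monitor option `mon_clock_drift_allowed`, which we believe defaults to 0.05 s; treat that value as an assumption to verify for your release. The hypothetical helper below compares a measured offset (for example the `System time` value from `chronyc tracking`, or the offset printed by `ntpdate -q 10.83.32.224`) with that threshold.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit 0) when the absolute clock offset, in
# seconds, exceeds the allowed drift; fail (exit 1) when it is within limits.
# The 0.05 s default mirrors what we understand to be Ceph's default
# mon_clock_drift_allowed; check the value for your own release.
clock_skew_exceeded() {
    local offset=$1
    local allowed=${2:-0.05}
    # awk does the floating-point comparison on the absolute value
    awk -v o="$offset" -v a="$allowed" \
        'BEGIN { if (o < 0) o = -o; exit !(o > a) }'
}
```

For example, `clock_skew_exceeded 0.12 && echo "offset too large for the monitors"` prints the warning, while an offset of `0.01` passes silently.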

5. System tuning:

```shell
# Raise the max user processes limit
sed -i 's/4096/102400/' /etc/security/limits.d/20-nproc.conf
# Raise the open-files limit at boot
grep -q "ulimit -SHn 102400" /etc/rc.local || echo "ulimit -SHn 102400" >> /etc/rc.local

# Check how many disks the Ceph server has; if there are several (sdb, sdc, ...), apply the tuning below to each of them
[root@k8sdemo-ceph1 yum.repos.d]# lsblk
NAME            MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sda               8:0    0  1.8T  0 disk
├─sda1            8:1    0  200M  0 part /boot/efi
├─sda2            8:2    0    1G  0 part /boot
└─sda3            8:3    0  1.8T  0 part
  ├─centos-root 253:0    0   50G  0 lvm  /
  ├─centos-swap 253:1    0  7.8G  0 lvm  [SWAP]
  └─centos-home 253:2    0  1.8T  0 lvm  /home

# read_ahead improves disk read performance by prefetching data into memory. Check the default value:
cat /sys/block/sda/queue/read_ahead_kb
128
# According to several public talks on Ceph, 8192 is a good value:
echo "8192" > /sys/block/sda/queue/read_ahead_kb
# This setting is lost on reboot, so also add it to /etc/rc.d/rc.local and make that file executable:
chmod +x /etc/rc.d/rc.local
[root@k8sdemo-ceph3 ~]# cat /etc/rc.d/rc.local
#!/bin/bash
# THIS FILE IS ADDED FOR COMPATIBILITY PURPOSES
#
# It is highly advisable to create own systemd services or udev rules
# to run scripts during boot instead of using this file.
#
# In contrast to previous versions due to parallel execution during boot
# this script will NOT be run after all other services.
#
# Please note that you must run 'chmod +x /etc/rc.d/rc.local' to ensure
# that this script will be executed during boot.

touch /var/lock/subsys/local
echo "8192" > /sys/block/sda/queue/read_ahead_kb
ulimit -SHn 102400

# swappiness controls how readily the system uses swap. This tuning first appeared in documents
# published by UnitedStack, presumably because swapping hurts performance.
# Note: vm.swappiness = 0 makes the kernel avoid swapping; it does not remove the swap partition.
echo "vm.swappiness = 0" | tee -a /etc/sysctl.conf

# ceph-osd can spawn a large number of threads. If needed, raise the kernel's maximum PID/thread
# count (more PIDs also reduces PID wrap-around problems). Default value:
more /proc/sys/kernel/pid_max
49152
# Raise it to 4194303:
echo 4194303 > /proc/sys/kernel/pid_max
# Make it permanent:
echo "kernel.pid_max= 4194303" | tee -a /etc/sysctl.conf
# Load the /etc/sysctl.conf settings now instead of waiting for a reboot
sysctl -p

# Change the I/O scheduler. There is plenty of material online about I/O scheduler tuning;
# in short, use noop for SSDs and deadline for SATA/SAS disks.
[root@k8sdemo-ceph1 yum.repos.d]# echo "deadline" > /sys/block/sda/queue/scheduler
[root@k8sdemo-ceph1 yum.repos.d]# cat /sys/block/sda/queue/scheduler
noop [deadline] cfq
```
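The tuning above is applied to `sda` only, although every disk (sdb, sdc, ...) should get the same treatment. Below is a sketch of a loop that does this and picks the scheduler from the kernel's `rotational` flag (0 for SSDs, 1 for spinning disks), following the rule of noop for SSDs and deadline for SATA/SAS. Assumptions: disks are named `sd*`, the kernel offers `noop` and `deadline` as in the CentOS 7 output above, and `tune_disks` and `SYSFS_ROOT` are hypothetical names. Like the `echo` commands above, the settings do not survive a reboot, so the call would also go into `/etc/rc.d/rc.local`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set read_ahead_kb and the I/O scheduler on every sd*
# disk. SYSFS_ROOT defaults to /sys and can point at a fake tree for testing.
# Usage: tune_disks [read_ahead_kb]   (default 8192)
tune_disks() {
    local root=${SYSFS_ROOT:-/sys}
    local read_ahead=${1:-8192}
    local q dev sched
    for q in "$root"/block/sd*/queue; do
        [ -d "$q" ] || continue          # no sd* disks: glob left unexpanded
        dev=${q%/queue}
        dev=${dev##*/}                   # e.g. "sda"
        echo "$read_ahead" > "$q/read_ahead_kb"
        # rotational=0 -> SSD -> noop; anything else (SATA/SAS HDD) -> deadline
        if [ "$(cat "$q/rotational" 2>/dev/null)" = "0" ]; then
            sched=noop
        else
            sched=deadline
        fi
        echo "$sched" > "$q/scheduler"
        echo "$dev: read_ahead_kb=$read_ahead scheduler=$sched"
    done
}
```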

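2.2. Installing the deployment tool (ceph-deploy):

ceph-deploy is the official Ceph deployment tool. It logs in to each of the other nodes over SSH and runs commands there to carry out the deployment. It can be installed on any server; for convenience we install it on k8sdemo-ceph1.

We use the /cluster directory on k8sdemo-ceph1 as the ceph-deploy working directory. The configuration files, keyrings and logs generated during deployment are all placed in this directory, so all subsequent deployment commands should be run from it.

By default, ceph-deploy logs in to each Ceph node over SSH as root to run commands, and passwordless SSH from the admin node to every node has already been set up. If ceph-deploy logs in as a regular user instead, that user must be able to use sudo without a password.

```shell
[root@k8sdemo-ceph1 yum.repos.d]# mkdir -p /workspace/ceph
[root@k8sdemo-ceph1 yum.repos.d]# cd /workspace/ceph/
[root@k8sdemo-ceph1 ceph]# vim cephlist.txt
[root@k8sdemo-ceph1 ceph]# cat cephlist.txt
k8sdemo-ceph1
k8sdemo-ceph2
k8sdemo-ceph3
# The host list is created

# Create the /etc/ceph directory on every Ceph host
for ip in $(cat /workspace/ceph/cephlist.txt); do
    echo "---$ip---"
    ssh root@"$ip" mkdir -p /etc/ceph
    ssh root@"$ip" ls -l /etc/ceph
done

# Install ceph-deploy on the first host
yum -y install ceph-deploy

# From the first Ceph host, install the packages on all three hosts (k8sdemo-ceph1, k8sdemo-ceph2, k8sdemo-ceph3):
# epel-release, yum-plugin-priorities, ceph-release, ceph, ceph-radosgw, etc.
ceph-deploy install k8sdemo-ceph1 k8sdemo-ceph2 k8sdemo-ceph3
# The packages have been pushed and installed
```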
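The `for ip in ...` loop over `cephlist.txt` splits the file on any whitespace and does not skip blank or comment lines. A minimal sketch of a more robust version; `read_host_list` and `prepare_etc_ceph` are hypothetical helpers, and the host file is assumed to hold one host name per line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first field of each line of a host list,
# skipping blank lines and lines starting with "#". The "|| [ -n ... ]"
# also handles a last line without a trailing newline.
read_host_list() {
    local file=$1 host _
    while read -r host _ || [ -n "$host" ]; do
        case $host in
            ''|'#'*) continue ;;
        esac
        echo "$host"
    done < "$file"
}

# Hypothetical helper: create /etc/ceph on every listed host.
# ssh -n stops ssh from reading the loop's stdin (the rest of the host list).
prepare_etc_ceph() {
    local host
    read_host_list "$1" | while read -r host; do
        echo "---$host---"
        ssh -n "root@$host" 'mkdir -p /etc/ceph && ls -ld /etc/ceph'
    done
}
```

For example, `prepare_etc_ceph /workspace/ceph/cephlist.txt`. The `-n` flag matters: without it, `ssh` would consume the remaining host names from the loop's standard input and only the first host would be processed.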

2.3. Configuring the Ceph cluster:

```shell
# Create the /cluster directory and enter it; ls shows that it is still empty
mkdir -p /cluster
cd /cluster
ls

# Initialize the cluster by telling ceph-deploy which nodes are the initial monitors.
# On success, ceph.conf, the ceph-deploy log and ceph.mon.keyring are created in the current (/cluster) directory;
# ceph.conf contains three parameters: fsid, mon_initial_members and mon_host.
# The cluster name defaults to "ceph".
ceph-deploy new k8sdemo-ceph1 k8sdemo-ceph3

# Deploy the initial monitors and gather the keys.
# Monitors communicate on port 6789 by default and OSDs use ports in the 6800-7300 range;
# when running several clusters, make sure the ports do not conflict.
ceph-deploy mon create-initial

# The /cluster directory now contains the generated keyrings and configuration:
[root@k8sdemo-ceph1 cluster]# ls
ceph.bootstrap-mds.keyring  ceph.bootstrap-rgw.keyring  ceph-deploy-ceph.log
ceph.bootstrap-mgr.keyring  ceph.client.admin.keyring   ceph.mon.keyring
ceph.bootstrap-osd.keyring  ceph.conf                   ceph.mon.keyring-20190405113026

# Check the cluster status: there are no OSDs yet
[root@k8sdemo-ceph1 cluster]# ceph -s
    cluster 119b3a1c-17ad-43c8-9378-a625b8dd19d9
     health HEALTH_ERR
            clock skew detected on mon.k8sdemo-ceph3
            no osds
            Monitor clock skew detected
     monmap e1: 2 mons at {k8sdemo-ceph1=10.83.32.224:6789/0,k8sdemo-ceph3=10.83.32.234:6789/0}
            election epoch 6, quorum 0,1 k8sdemo-ceph1,k8sdemo-ceph3
     osdmap e1: 0 osds: 0 up, 0 in
            flags sortbitwise,require_jewel_osds
      pgmap v2: 64 pgs, 1 pools, 0 bytes data, 0 objects
            0 kB used, 0 kB / 0 kB avail
                  64 creating

# Deploy the MDS (metadata server) daemons; they are only needed for the CephFS file system
ceph-deploy --overwrite-conf mds create k8sdemo-ceph1 k8sdemo-ceph3

# List the disks on the Ceph storage nodes to find partitions usable for OSDs
ceph-deploy disk list k8sdemo-ceph1 k8sdemo-ceph2 k8sdemo-ceph3

# Prepare the OSDs
ceph-deploy osd prepare k8sdemo-ceph1:/dev/sda4 k8sdemo-ceph2:/dev/sda4 k8sdemo-ceph3:/dev/sda4
# Activate the OSDs
ceph-deploy osd activate k8sdemo-ceph1:/dev/sda4 k8sdemo-ceph2:/dev/sda4 k8sdemo-ceph3:/dev/sda4

# View the OSD tree
[root@k8sdemo-ceph1 cluster]# ceph osd tree
ID WEIGHT  TYPE NAME              UP/DOWN REWEIGHT PRIMARY-AFFINITY
-1 3.71986 root default
-2 1.52179     host k8sdemo-ceph1
 0 1.52179         osd.0               up  1.00000          1.00000
-3 1.52179     host k8sdemo-ceph2
 1 1.52179         osd.1               up  1.00000          1.00000
-4 0.67628     host k8sdemo-ceph3
 2 0.67628         osd.2               up  1.00000          1.00000

# View the cluster status
[root@k8sdemo-ceph1 cluster]# ceph -s
    cluster 119b3a1c-17ad-43c8-9378-a625b8dd19d9
     health HEALTH_WARN
            clock skew detected on mon.k8sdemo-ceph3
            Monitor clock skew detected
     monmap e1: 2 mons at {k8sdemo-ceph1=10.83.32.224:6789/0,k8sdemo-ceph3=10.83.32.234:6789/0}
            election epoch 6, quorum 0,1 k8sdemo-ceph1,k8sdemo-ceph3
     osdmap e16: 3 osds: 3 up, 3 in
            flags sortbitwise,require_jewel_osds
      pgmap v28: 64 pgs, 1 pools, 0 bytes data, 0 objects
            15683 MB used, 3793 GB / 3809 GB avail
                  64 active+clean

# Sync the configuration generated on the ceph-deploy node to the other nodes so that every node has the same Ceph configuration
ceph-deploy --overwrite-conf config push k8sdemo-ceph2   # push the (modified) config file to the host
ceph-deploy admin k8sdemo-ceph2                          # allow the host to run Ceph commands with admin privileges
ceph-deploy --overwrite-conf config push k8sdemo-ceph3
ceph-deploy admin k8sdemo-ceph3

# View storage usage, including the default rbd pool
[root@k8sdemo-ceph1 cluster]# ceph df
GLOBAL:
    SIZE      AVAIL     RAW USED     %RAW USED
    3809G     3793G       15683M          0.40
POOLS:
    NAME     ID     USED     %USED     MAX AVAIL     OBJECTS
    rbd      0         0         0         1196G           0

# Add a monitor: there were only two monitors so far, so add a third
ceph-deploy mon create k8sdemo-ceph2
[k8sdemo-ceph2][INFO  ] Running command: systemctl start ceph-mon@k8sdemo-ceph2
[k8sdemo-ceph2][INFO  ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.k8sdemo-ceph2.asok mon_status
[k8sdemo-ceph2][ERROR ] admin_socket: exception getting command descriptions: [Errno 2] No such file or directory
[k8sdemo-ceph2][WARNIN] monitor: mon.k8sdemo-ceph2, might not be running yet
[k8sdemo-ceph2][INFO  ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.k8sdemo-ceph2.asok mon_status
[k8sdemo-ceph2][ERROR ] admin_socket: exception getting command descriptions: [Errno 2] No such file or directory
[k8sdemo-ceph2][WARNIN] k8sdemo-ceph2 is not defined in `mon initial members`
[k8sdemo-ceph2][WARNIN] monitor k8sdemo-ceph2 does not exist in monmap
[k8sdemo-ceph2][WARNIN] neither `public_addr` nor `public_network` keys are defined for monitors
```

The command reported errors; following documentation found online, they were fixed as follows:
```shell
# When adding a monitor on a host that was not defined by `ceph-deploy new`, public_network
# must be added to ceph.conf. Append this line to the end of /cluster/ceph.conf:
#   public_network = 10.83.32.0/24
vim ceph.conf

ceph-deploy --overwrite-conf config push k8sdemo-ceph2   # push the config file to the second node again
ceph-deploy admin k8sdemo-ceph2                          # push the client.admin key
ceph-deploy --overwrite-conf mon add k8sdemo-ceph2       # this time the monitor is added successfully

[root@k8sdemo-ceph1 cluster]# ceph -s
    cluster 119b3a1c-17ad-43c8-9378-a625b8dd19d9
     health HEALTH_WARN
            clock skew detected on mon.k8sdemo-ceph2, mon.k8sdemo-ceph3
            Monitor clock skew detected
     monmap e2: 3 mons at {k8sdemo-ceph1=10.83.32.224:6789/0,k8sdemo-ceph2=10.83.32.225:6789/0,k8sdemo-ceph3=10.83.32.234:6789/0}
            election epoch 8, quorum 0,1,2 k8sdemo-ceph1,k8sdemo-ceph2,k8sdemo-ceph3
     osdmap e16: 3 osds: 3 up, 3 in
            flags sortbitwise,require_jewel_osds
      pgmap v28: 64 pgs, 1 pools, 0 bytes data, 0 objects
            15683 MB used, 3793 GB / 3809 GB avail
                  64 active+clean
# The cluster now has 3 monitors, all in quorum

# Add another MDS daemon
ceph-deploy --overwrite-conf mds create k8sdemo-ceph2

# Check the MDS and OSD status
[root@k8sdemo-ceph1 cluster]# ceph mds stat
e4:, 3 up:standby
[root@k8sdemo-ceph1 cluster]# ceph osd stat
     osdmap e16: 3 osds: 3 up, 3 in
            flags sortbitwise,require_jewel_osds

# Create a pool with 128 placement groups and check it
[root@k8sdemo-ceph1 cluster]# ceph osd pool create k8sdemo 128
pool 'k8sdemo' created
[root@k8sdemo-ceph1 cluster]# ceph df
GLOBAL:
    SIZE      AVAIL     RAW USED     %RAW USED
    3809G     3793G       15683M          0.40
POOLS:
    NAME        ID     USED     %USED     MAX AVAIL     OBJECTS
    rbd         0         0         0         1196G           0
    k8sdemo     1         0         0         1196G           0
```
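The `k8sdemo` pool was created with 128 placement groups. A commonly cited rule of thumb from the Ceph documentation is: total PGs ≈ (number of OSDs × 100) / replica count, rounded up to the next power of two. Assuming 3 OSDs and 3 replicas (the replica count is an assumption here; `ceph osd pool get k8sdemo size` shows the real value), this gives 100, rounded up to 128, which matches the value used. The hypothetical helper below only does that arithmetic; treat its result as a starting point rather than a hard rule.

```shell
#!/usr/bin/env bash
# Hypothetical helper: suggested pg_num =
#   ceil(osds * pgs_per_osd / replicas), rounded up to a power of two.
# pgs_per_osd defaults to the rule-of-thumb value of 100.
suggest_pg_num() {
    local osds=$1 replicas=$2 per_osd=${3:-100}
    if ! [[ $osds =~ ^[1-9][0-9]*$ && $replicas =~ ^[1-9][0-9]*$ && $per_osd =~ ^[1-9][0-9]*$ ]]; then
        echo "usage: suggest_pg_num <osds> <replicas> [pgs_per_osd]" >&2
        return 1
    fi
    local raw=$(( (osds * per_osd + replicas - 1) / replicas ))  # ceiling division
    local pg=1
    while [ "$pg" -lt "$raw" ]; do
        pg=$(( pg * 2 ))                                        # next power of two
    done
    echo "$pg"
}
```

For example, `suggest_pg_num 3 3` prints `128`, while `suggest_pg_num 10 3` prints `512`. Keep in mind that pg_num in releases of this era could be increased later but not decreased, so it is safer to start near the suggested value than far above it.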

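You are welcome to follow my personal WeChat official account "云时代IT运维" (Cloud-Era IT Ops), which regularly publishes up-to-date technical articles on application operations, covering virtualization and container technology, CI/CD, automated operations and other emerging operations technologies and trends.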