大家好,这里是公众号 DBA学习之路,分享一些学习国产数据库路上的知识和经验。

社群交流

为了给大家提供一些技术交流的平台,目前已成立的技术交流群:

- Oracle 数据库交流群

- 国产数据库交流群

- Linux 技术交流群

- ChatGPT 4o 免费体验群

需要进群(均已超过 200 人,需要邀请)可以添加号主微信:Lucifer-0622,备注对应的群名即可。

目录

- 社群交流

- 前言

- 介绍

- 安装前准备

- 系统要求

- 检查操作系统(所有节点)

- 关闭防火墙(所有节点)

- 禁用 selinux(所有节点)

- 配置软件源(所有节点)

- 安装依赖包(所有节点)

- 创建用户(所有节点)

- 创建目录(所有节点)

- 配置免密互信(所有节点)

- 源码下载(所有节点)

- 配置环境变量(所有节点)

- 集群部署

- 拓展

- 写在最后

- 往期精彩文章

前言

昨天看到一个 OpenTenBase创新应用挑战赛 活动,非常感兴趣,打算学习了解一下 OpenTenBase 开源数据库。

本文简单介绍 OpenTenBase 以及如何安装部署。

介绍

OpenTenBase 是一个提供写可靠性,多主节点数据同步的关系数据库集群平台。你可以将 OpenTenBase 配置一台或者多台主机上, OpenTenBase 数据存储在多台物理主机上面。数据表的存储有两种方式, 分别是 distributed 或者 replicated ,当向 OpenTenBase 发送查询 SQL 时, OpenTenBase 会自动向数据节点发出查询语句并获取最终结果。

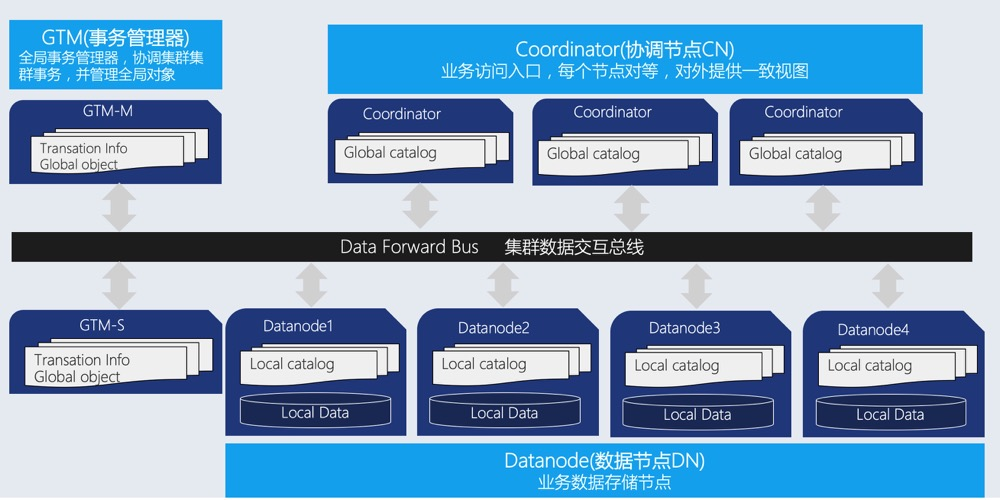

OpenTenBase 采用分布式集群架构(如下图), 该架构分布式为无共享(share nothing)模式,节点之间相应独立,各自处理自己的数据,处理后的结果可能向上层汇总或在节点间流转,各处理单元之间通过网络协议进行通信,并行处理和扩展能力更好,这也意味着只需要简单的 x86 服务器就可以部署 OpenTenBase 数据库集群。

OpenTenBase 架构图:

简单解读一下 OpenTenBase 的三大模块:

- Coordinator 协调节点(简称 CN):业务访问入口,负责数据的分发和查询规划,多个节点位置对等,每个节点都提供相同的数据库视图;在功能上 CN 上只存储系统的全局元数据,并不存储实际的业务数据。

- Datanode 数据节点(简称 DN):每个节点还存储业务数据的分片在功能上,DN 节点负责完成执行协调节点分发的执行请求。

- GTM 全局事务管理器(Global Transaction Manager):负责管理集群事务信息,同时管理集群的全局对象,比如序列等。

接下来,让我们来看看如何从源码开始,完成到 OpenTenBase 集群环境的搭建。

安装前准备

本文使用两台服务器上搭建 1GTM 主,1GTM 备,2CN 主(CN 主之间对等,因此无需备 CN),2DN 主,2DN 备的集群,该集群为具备容灾能力的最小配置。

系统要求

| 主机名 | IP | 版本 | CPU | 内存 | 硬盘 |

|---|---|---|---|---|---|

| otb01 | 192.168.6.87 | TencentOS 3.3 | x86 | 8G | 100G |

| otb02 | 192.168.6.88 | TencentOS 3.3 | x86 | 8G | 100G |

OpenTenBase 安装最低要求:

- 内存:4G RAM

- 操作系统:TencentOS 2/3、OpenCloudOS、CentOS 7/8、Ubuntu

集群规划如下:

| 节点名称 | IP | 数据目录 |

|---|---|---|

| GTM master | 192.168.6.87 | /opentenbase/data/gtm |

| GTM slave | 192.168.6.88 | /opentenbase/data/gtm |

| CN1 | 192.168.6.87 | /opentenbase/data/coord |

| CN2 | 192.168.6.88 | /opentenbase/data/coord |

| DN1 master | 192.168.6.87 | /opentenbase/data/dn001 |

| DN1 slave | 192.168.6.88 | /opentenbase/data/dn001 |

| DN2 master | 192.168.6.88 | /opentenbase/data/dn002 |

| DN2 slave | 192.168.6.87 | /opentenbase/data/dn002 |

检查操作系统(所有节点)

检查操作系统版本信息:

## 以节点一为例

[root@oct01 ~]# cat /etc/os-release

NAME="TencentOS Server"

VERSION="3.3 (Final)"

ID="tencentos"

ID_LIKE="rhel fedora centos"

VERSION_ID="3.3"

PLATFORM_ID="platform:el8"

PRETTY_NAME="TencentOS Server 3.3 (Final)"

ANSI_COLOR="0;31"

CPE_NAME="cpe:/o:tencentos:tencentos:3"

HOME_URL="https://cloud.tencent.com/product/ts"

TENCENT_SUPPORT_PRODUCT="tencentos"

TENCENT_SUPPORT_PRODUCT_VERSION="3"

NAME_ORIG="TencentOS Server"

复制关闭防火墙(所有节点)

数据库安装均建议关闭防火墙:

## 以节点一为例

[root@otc01 ~]# systemctl stop firewalld

[root@otc01 ~]# systemctl disable firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

复制禁用 selinux(所有节点)

所有节点建议关闭 SELNUX,防止造成一些不必要的问题:

## 以节点一为例

## 这里使用 setenforce 0 临时生效

[root@oct01 ~]# /usr/sbin/setenforce 0

[root@oct01 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@oct01 ~]# sestatus

SELinux status: enabled

SELinuxfs mount: /sys/fs/selinux

SELinux root directory: /etc/selinux

Loaded policy name: targeted

Current mode: permissive

Mode from config file: disabled

Policy MLS status: enabled

Policy deny_unknown status: allowed

Memory protection checking: actual (secure)

Max kernel policy version: 31

复制将 SELINUX 参数设置为 disabled,即 SELINUX=disabled 保存退出后,需要重新启动才能生效。

配置软件源(所有节点)

挂载 iso 镜像:

[root@oct01 ~]# mount /dev/sr0 /mnt

mount: /mnt: WARNING: device write-protected, mounted read-only.

复制一键配置本地软件源:

## 备份系统初始配置文件

mkdir -p /etc/yum.repos.d/bak

mv /etc/yum.repos.d/* /etc/yum.repos.d/bak

## 一键配置软件源,默认 ISO 安装镜像挂载在 /mnt 目录下

cat <<-EOF > /etc/yum.repos.d/local.repo

[BaseOS]

name=BaseOS

baseurl=file:///mnt/BaseOS

enabled=1

gpgcheck=0

[AppStream]

name=AppStream

baseurl=file:///mnt/AppStream

enabled=1

gpgcheck=0

EOF

## 查看配置好的软件源

cat /etc/yum.repos.d/local.repo

复制安装依赖包(所有节点)

OpenTenBase 对部署的环境有一些依赖包要求:

## TencentOS 2、TencentOS 3、OpenCloudOS、CentOS 7、CentOS 8

[root@oct01 ~]# dnf install -y gcc make readline-devel zlib-devel openssl-devel uuid uuid-devel bison flex --skip-broken

复制安装完成后检查是否安装成功:

[root@oct01 ~]# rpm -q gcc make readline-devel zlib-devel openssl-devel uuid uuid-devel bison flex

gcc-8.5.0-22.tl3.1.x86_64

make-4.2.1-11.tl3.x86_64

readline-devel-7.0-10.tl3.x86_64

zlib-devel-1.2.11-25.tl3.x86_64

openssl-devel-1.1.1k-12.tl3.1.x86_64

未安装软件包 uuid-devel

bison-3.0.4-10.tl3.x86_64

flex-2.6.1-9.tl3.x86_64

复制📢 注意:在 TencentOS 3 的本地软件源中默认没有 uuid-devel 包,而是在 PowerTools 中,所以需要自行下载安装,否则 OpenTenBase 源码编译的时候会报错:

checking for uuid_export in -luuid... no

configure: error: library 'ossp-uuid' or 'uuid' is required for OSSP UUID

复制uuid-devel-1.6.2-43.tl3.x86_64.rpm 下载链接 🔗: https://mirrors.tencent.com/tlinux/3.3/PowerTools/x86_64/os/Packages/uuid-devel-1.6.2-43.tl3.x86_64.rpm

下载好之后上传安装即可:

## 以节点一为例

[root@oct01 ~]# rpm -ivh uuid-devel-1.6.2-43.tl3.x86_64.rpm

警告:uuid-devel-1.6.2-43.tl3.x86_64.rpm: 头V4 RSA/SHA256 Signature, 密钥 ID 6ca3b8cc: NOKEY

Verifying... ################################# [100%]

准备中... ################################# [100%]

正在升级/安装...

1:uuid-devel-1.6.2-43.tl3 ################################# [100%]

复制安装后再次检查依赖包是否安装:

[root@oct01 ~]# rpm -q gcc make readline-devel zlib-devel openssl-devel uuid uuid-devel bison flex

gcc-8.5.0-22.tl3.1.x86_64

make-4.2.1-11.tl3.x86_64

readline-devel-7.0-10.tl3.x86_64

zlib-devel-1.2.11-25.tl3.x86_64

openssl-devel-1.1.1k-12.tl3.1.x86_64

uuid-1.6.2-43.tl3.x86_64

uuid-devel-1.6.2-43.tl3.x86_64

bison-3.0.4-10.tl3.x86_64

flex-2.6.1-9.tl3.x86_64

复制依赖包全部安装完成。

创建用户(所有节点)

## 以节点一为例

[root@oct01 ~]# useradd -d /home/opentenbase -m opentenbase

## 设置用户密码为 opentenbase

[root@oct01 ~]# echo "opentenbase:opentenbase" | chpasswd

复制创建目录(所有节点)

创建安装所需目录并且授权:

## 以节点一为例

[root@oct01 ~]# mkdir -p /soft

[root@oct01 ~]# mkdir -p /opentenbase/{pgxc_ctl,install,global}

[root@oct01 ~]# mkdir -p /opentenbase/data/{gtm,coord,coord_archlog,dn001,dn002,datanode_archlog}

[root@oct01 ~]# chown -R opentenbase:opentenbase {/soft,/opentenbase}

[root@oct01 ~]# chmod -R 775 {/soft,/opentenbase}

复制配置免密互信(所有节点)

建议将所有节点之间的 SSH 配置免密登录,然后 deployment 和 init 就会 SSH 到各个节点的机器上,不需要输入密码了:

## 以节点一为例

## 切换到 opentenbase 用户

[root@oct01 ~]# su - opentenbase

## 生成 ssh 密钥,一路回车即可

[opentenbase@oct01 ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/opentenbase/.ssh/id_rsa):

Created directory '/home/opentenbase/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/opentenbase/.ssh/id_rsa.

Your public key has been saved in /home/opentenbase/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:kCmuNwPhMiIue6ruEDC+ubrJvLL56WA7IA0Vb+QWeNc opentenbase@oct01

The key's randomart image is:

+---[RSA 3072]----+

| .oo . |

| o+...oE |

|o...*.+ |

|+o = . . |

|*+o . S |

|*++o |

|*=. + |

|B*+o o |

|//* |

+----[SHA256]-----+

## 将公钥拷贝到所有节点(包括自己),中间需要输入一次密码

[opentenbase@oct01 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub opentenbase@192.168.6.87

[opentenbase@oct01 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub opentenbase@192.168.6.88

## 测试免密互信是否成功

[opentenbase@oct01 ~]$ ssh 192.168.6.87

[opentenbase@oct01 ~]$ ssh 192.168.6.88

复制其他节点同样操作。

源码下载(所有节点)

建议在自己电脑获取 OpenTenBase 源码:

╭─lucifer@Lucifer-7 /Volumes/DBA/Github

╰─$ git clone https://github.com/OpenTenBase/OpenTenBase

正克隆到 'OpenTenBase'...

remote: Enumerating objects: 18129, done.

remote: Counting objects: 100% (4633/4633), done.

remote: Compressing objects: 100% (810/810), done.

remote: Total 18129 (delta 4102), reused 3863 (delta 3822), pack-reused 13496 (from 1)

接收对象中: 100% (18129/18129), 31.03 MiB | 20.64 MiB/s, 完成.

处理 delta 中: 100% (11304/11304), 完成.

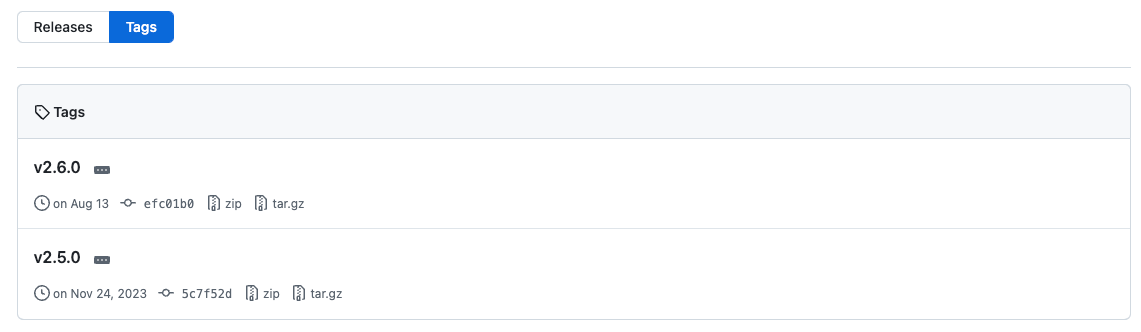

复制当然也可以直接去下载源码包:https://github.com/OpenTenBase/OpenTenBase/tags

下载后上传到数据库主机并解压(主节点):

[root@oct01 ~]# cd /soft/

[root@oct01 soft]# chown opentenbase:opentenbase OpenTenBase-2.6.0.tar.gz

[root@oct01 soft]# ll

总用量 24800

-rwxr-xr-x. 1 opentenbase opentenbase 25394783 11月 28 14:18 OpenTenBase-2.6.0.tar.gz

## 解压源码包

[root@oct01 soft]# su - opentenbase

[opentenbase@oct01 ~]$ cd /soft/

[opentenbase@oct01 soft]$ tar -xf OpenTenBase-2.6.0.tar.gz

[opentenbase@oct01 soft]$ ls

OpenTenBase-2.6.0 OpenTenBase-2.6.0.tar.gz

复制配置环境变量(所有节点)

使用 opentenbase 用户一键配置环境变量:

## 以节点一为例

[opentenbase@oct01 ~]$ cat<<-\EOF>>/home/opentenbase/.bash_profile

export OPENTENBASE_HOME=/opentenbase/install/opentenbase_bin_v2.6

export PATH=$OPENTENBASE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$OPENTENBASE_HOME/lib:${LD_LIBRARY_PATH}

export LC_ALL=C

EOF

复制至此,准备工作就完成了。

集群部署

主节点源码编译

主节点需要先编译安装源码包,用于后续集群发布部署:

## 进入源码目录

[opentenbase@oct01 ~]$ cd /soft/OpenTenBase-2.6.0

## 授予脚本执行权限

[opentenbase@oct01 OpenTenBase-2.6.0]$ chmod +x configure*

## 配置编译选项

[opentenbase@oct01 OpenTenBase-2.6.0]$ ./configure --prefix=/opentenbase/install/opentenbase_bin_v2.6 --enable-user-switch --with-openssl --with-ossp-uuid CFLAGS=-g

## 编译安装软件

[opentenbase@oct01 OpenTenBase-2.6.0]$ make clean && make -sj && make install

## 编译 contruib 工具

[opentenbase@oct01 OpenTenBase-2.6.0]$ chmod +x contrib/pgxc_ctl/make_signature

[opentenbase@oct01 OpenTenBase-2.6.0]$ cd contrib

[opentenbase@oct01 OpenTenBase-2.6.0]$ make -sj && make install

复制至此,主节点源码编译安装完成。

初始化 pgxc_ctl.conf 文件

接下来可以进入到集群初始化阶段,为了方便用户,OpenTenBase 提供了专用的配置和操作工具:pgxc_ctl 来协助用户快速搭建并管理集群:

## 查看 pgxc_ctl 命令帮助文档

[opentenbase@oct01 ~]$ pgxc_ctl --help

/usr/bin/bash

pgxc_ctl [option ...] [command]

option:

-c or --configuration conf_file: Specify configruration file.

-v or --verbose: Specify verbose output.

-V or --version: Print version and exit.

-l or --logdir log_directory: specifies what directory to write logs.

-L or --logfile log_file: Specifies log file.

--home home_direcotry: Specifies pgxc_ctl work director.

-i or --infile input_file: Specifies inptut file.

-o or --outfile output_file: Specifies output file.

-h or --help: Prints this message and exits.

For more deatils, refer to pgxc_ctl reference manual included in

postgres-xc reference manual.

复制首先需要将前文所述的节点的 ip,端口,目录写入到配置文件 pgxc_ctl.conf 中:

[opentenbase@oct01 ~]$ cd /opentenbase/pgxc_ctl/

[opentenbase@oct01 pgxc_ctl]$ vi pgxc_ctl.conf

复制配置文件内容如下:

#!/bin/bash

# Double Node Config

IP_1=192.168.6.87

IP_2=192.168.6.88

pgxcInstallDir=/opentenbase/install/opentenbase_bin_v2.6

pgxcOwner=opentenbase

defaultDatabase=postgres

pgxcUser=$pgxcOwner

tmpDir=/tmp

localTmpDir=$tmpDir

configBackup=n

configBackupHost=pgxc-linker

configBackupDir=$HOME/pgxc

configBackupFile=pgxc_ctl.bak

#---- GTM ----------

gtmName=gtm

gtmMasterServer=$IP_1

gtmMasterPort=50001

gtmMasterDir=/opentenbase/data/gtm

gtmExtraConfig=none

gtmMasterSpecificExtraConfig=none

gtmSlave=y

gtmSlaveServer=$IP_2

gtmSlavePort=50001

gtmSlaveDir=/opentenbase/data/gtm

gtmSlaveSpecificExtraConfig=none

#---- Coordinators -------

coordMasterDir=/opentenbase/data/coord

coordArchLogDir=/opentenbase/data/coord_archlog

coordNames=(cn001 cn002 )

coordPorts=(30004 30004 )

poolerPorts=(31110 31110 )

coordPgHbaEntries=(0.0.0.0/0)

coordMasterServers=($IP_1 $IP_2)

coordMasterDirs=($coordMasterDir $coordMasterDir)

coordMaxWALsernder=2

coordMaxWALSenders=($coordMaxWALsernder $coordMaxWALsernder )

coordSlave=n

coordSlaveSync=n

coordArchLogDirs=($coordArchLogDir $coordArchLogDir)

coordExtraConfig=coordExtraConfig

cat > $coordExtraConfig <<EOF

#================================================

# Added to all the coordinator postgresql.conf

# Original: $coordExtraConfig

include_if_exists = '/opentenbase/global/global_opentenbase.conf'

wal_level = replica

wal_keep_segments = 256

max_wal_senders = 4

archive_mode = on

archive_timeout = 1800

archive_command = 'echo 0'

log_truncate_on_rotation = on

log_filename = 'postgresql-%M.log'

log_rotation_age = 4h

log_rotation_size = 100MB

hot_standby = on

wal_sender_timeout = 30min

wal_receiver_timeout = 30min

shared_buffers = 1024MB

max_pool_size = 2000

log_statement = 'ddl'

log_destination = 'csvlog'

logging_collector = on

log_directory = 'pg_log'

listen_addresses = '*'

max_connections = 2000

EOF

coordSpecificExtraConfig=(none none)

coordExtraPgHba=coordExtraPgHba

cat > $coordExtraPgHba <<EOF

local all all trust

host all all 0.0.0.0/0 trust

host replication all 0.0.0.0/0 trust

host all all ::1/128 trust

host replication all ::1/128 trust

EOF

coordSpecificExtraPgHba=(none none)

coordAdditionalSlaves=n

cad1_Sync=n

#---- Datanodes ---------------------

dn1MstrDir=/opentenbase/data/dn001

dn2MstrDir=/opentenbase/data/dn002

dn1SlvDir=/opentenbase/data/dn001

dn2SlvDir=/opentenbase/data/dn002

dn1ALDir=/opentenbase/data/datanode_archlog

dn2ALDir=/opentenbase/data/datanode_archlog

primaryDatanode=dn001

datanodeNames=(dn001 dn002)

datanodePorts=(40004 40004)

datanodePoolerPorts=(41110 41110)

datanodePgHbaEntries=(0.0.0.0/0)

datanodeMasterServers=($IP_1 $IP_2)

datanodeMasterDirs=($dn1MstrDir $dn2MstrDir)

dnWALSndr=4

datanodeMaxWALSenders=($dnWALSndr $dnWALSndr)

datanodeSlave=y

datanodeSlaveServers=($IP_2 $IP_1)

datanodeSlavePorts=(50004 54004)

datanodeSlavePoolerPorts=(51110 51110)

datanodeSlaveSync=n

datanodeSlaveDirs=($dn1SlvDir $dn2SlvDir)

datanodeArchLogDirs=($dn1ALDir/dn001 $dn2ALDir/dn002)

datanodeExtraConfig=datanodeExtraConfig

cat > $datanodeExtraConfig <<EOF

#================================================

# Added to all the coordinator postgresql.conf

# Original: $datanodeExtraConfig

include_if_exists = '/opentenbase/global/global_opentenbase.conf'

listen_addresses = '*'

wal_level = replica

wal_keep_segments = 256

max_wal_senders = 4

archive_mode = on

archive_timeout = 1800

archive_command = 'echo 0'

log_directory = 'pg_log'

logging_collector = on

log_truncate_on_rotation = on

log_filename = 'postgresql-%M.log'

log_rotation_age = 4h

log_rotation_size = 100MB

hot_standby = on

wal_sender_timeout = 30min

wal_receiver_timeout = 30min

shared_buffers = 1024MB

max_connections = 4000

max_pool_size = 4000

log_statement = 'ddl'

log_destination = 'csvlog'

wal_buffers = 1GB

EOF

datanodeSpecificExtraConfig=(none none)

datanodeExtraPgHba=datanodeExtraPgHba

cat > $datanodeExtraPgHba <<EOF

local all all trust

host all all 0.0.0.0/0 trust

host replication all 0.0.0.0/0 trust

host all all ::1/128 trust

host replication all ::1/128 trust

EOF

datanodeSpecificExtraPgHba=(none none)

datanodeAdditionalSlaves=n

walArchive=n

复制如上是结合本文演示环境的 IP,端口,数据库目录,二进制目录等规划来写的 pgxc_ctl.conf 文件。具体实践中只需按照自己的实际情况配置好即可。

分发二进制包

在主节点配置好 pgxc_ctl.conf 文件后,使用 pgxc_ctl 工具将二进制包部署到所有节点:

[opentenbase@oct01 pgxc_ctl]$ pgxc_ctl -c /opentenbase/pgxc_ctl/pgxc_ctl.conf /usr/bin/bash Installing pgxc_ctl_bash script as /home/opentenbase/pgxc_ctl/pgxc_ctl_bash. Installing pgxc_ctl_bash script as /home/opentenbase/pgxc_ctl/pgxc_ctl_bash. Reading configuration using /home/opentenbase/pgxc_ctl/pgxc_ctl_bash --home /home/opentenbase/pgxc_ctl --configuration /opentenbase/pgxc_ctl/pgxc_ctl.conf Finished reading configuration. ******** PGXC_CTL START *************** Current directory: /home/opentenbase/pgxc_ctl PGXC复制

这里输入 deploy all 命令来完成分发:

## PGXC 后面需要输入指令 deploy all 回车继续

PGXC deploy all

Deploying Postgres-XL components to all the target servers.

Prepare tarball to deploy ...

Deploying to the server 192.168.6.87.

Deploying to the server 192.168.6.88.

Deployment done.

## 输入 exit 退出 PGXC 工具

PGXC exit

复制登录到所有节点,检查二进制包是否分发完成:

[root@otc02 ~]# su - opentenbase

[opentenbase@otc02 ~]$ cd /opentenbase/install/opentenbase_bin_v2.6/

[opentenbase@otc02 opentenbase_bin_v2.6]$ ll

total 12

drwxrwxr-x. 2 opentenbase opentenbase 4096 Nov 28 16:52 bin

drwxrwxr-x. 4 opentenbase opentenbase 4096 Nov 28 16:51 include

drwxrwxr-x. 4 opentenbase opentenbase 4096 Nov 28 16:51 lib

drwxrwxr-x. 4 opentenbase opentenbase 35 Nov 28 16:52 share

复制至此,二进制包分发完成。

初始化集群

这里需要先配置一下 /etc/environment 文件,否则初始化会报错很多命令找不到:

## 报错如下,很多命令找不到

PGXC init all

Initialize GTM master

bash: initgtm: command not found

bash: gtm: command not found

bash: gtm_ctl: command not found

Done.

Start GTM master

bash: gtm_ctl: command not found

复制配置 /etc/environment 文件(所有节点均需配置):

## 首先查看 opentenbase 用户的 PATH 变量值

[opentenbase@oct01 ~]$ echo $PATH

/opentenbase/install/opentenbase_bin_v2.6/bin:/home/opentenbase/.local/bin:/home/opentenbase/bin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin

## 切换到 root 用户,写入 PATH 变量值

[root@oct01 ~]# cat<<-EOF>>/etc/environment

PATH=/opentenbase/install/opentenbase_bin_v2.6/bin:/home/opentenbase/.local/bin:/home/opentenbase/bin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin

EOF

## 尝试切换到 opentenbase 用户,未报错则代表配置正常

[root@oct01 ~]# su - opentenbase

[opentenbase@oct01 ~]$

复制所有节点均配置完成后,参照上一步分发二进制包,继续使用 pgxc_ctl 工具初始化集群,

[opentenbase@oct01 ~]$ pgxc_ctl -c /opentenbase/pgxc_ctl/pgxc_ctl.conf /usr/bin/bash Installing pgxc_ctl_bash script as /home/opentenbase/pgxc_ctl/pgxc_ctl_bash. Installing pgxc_ctl_bash script as /home/opentenbase/pgxc_ctl/pgxc_ctl_bash. Reading configuration using /home/opentenbase/pgxc_ctl/pgxc_ctl_bash --home /home/opentenbase/pgxc_ctl --configuration /opentenbase/pgxc_ctl/pgxc_ctl.conf Finished reading configuration. ******** PGXC_CTL START *************** Current directory: /home/opentenbase/pgxc_ctl PGXC复制

执行 init all 命令:

## PGXC 后面需要输入指令 init all 回车继续

PGXC init all

Initialize GTM master

The files belonging to this GTM system will be owned by user "opentenbase".

This user must also own the server process.

fixing permissions on existing directory /opentenbase/data/gtm ... ok

creating configuration files ... ok

creating xlog dir ... ok

Success.

3076450112:2024-11-29 09:36:17.425 CST -LOG: listening on IPv4 address "0.0.0.0", port 50001

LOCATION: StreamServerPort, pqcomm.c:397

3076450112:2024-11-29 09:36:17.426 CST -LOG: listening on IPv6 address "::", port 50001

LOCATION: StreamServerPort, pqcomm.c:397

3076450112:2024-11-29 09:36:17.427 CST -LOG: listening on Unix socket "/tmp/.s.GTM.50001"

LOCATION: StreamServerPort, pqcomm.c:391

3076450112:2024-11-29 09:36:17.429 CST -LOG: ControlData context:

LOCATION: GTM_PrintControlData, gtm_xlog.c:2013

3076450112:2024-11-29 09:36:17.429 CST -LOG: ControlData->gtm_control_version = 20180716

LOCATION: GTM_PrintControlData, gtm_xlog.c:2014

3076450112:2024-11-29 09:36:17.429 CST -LOG: ControlData->xlog_seg_size = 2097152

LOCATION: GTM_PrintControlData, gtm_xlog.c:2015

3076450112:2024-11-29 09:36:17.429 CST -LOG: ControlData->xlog_blcksz = 4096

LOCATION: GTM_PrintControlData, gtm_xlog.c:2016

3076450112:2024-11-29 09:36:17.429 CST -LOG: ControlData->state = 1

LOCATION: GTM_PrintControlData, gtm_xlog.c:2017

3076450112:2024-11-29 09:36:17.429 CST -LOG: ControlData->CurrBytePos = 4080

LOCATION: GTM_PrintControlData, gtm_xlog.c:2018

3076450112:2024-11-29 09:36:17.429 CST -LOG: ControlData->PrevBytePos = 4080

LOCATION: GTM_PrintControlData, gtm_xlog.c:2019

3076450112:2024-11-29 09:36:17.429 CST -LOG: ControlData->thisTimeLineID = 1

LOCATION: GTM_PrintControlData, gtm_xlog.c:2020

3076450112:2024-11-29 09:36:17.429 CST -LOG: ControlData->prevCheckPoint = 0/0

LOCATION: GTM_PrintControlData, gtm_xlog.c:2021

3076450112:2024-11-29 09:36:17.429 CST -LOG: ControlData->checkPoint = 0/1000

LOCATION: GTM_PrintControlData, gtm_xlog.c:2022

3076450112:2024-11-29 09:36:17.429 CST -LOG: ControlData->gts = 1000000000

LOCATION: GTM_PrintControlData, gtm_xlog.c:2023

3076450112:2024-11-29 09:36:17.429 CST -LOG: ControlData->time = 1732844177

LOCATION: GTM_PrintControlData, gtm_xlog.c:2024

waiting for server to shut down....Received signal 15

done

server stopped

Done.

## ....

## 中间部分省略

## ....

2024-11-29 09:36:56.519 CST [120196,coord(0.0)] HINT: Future log output will appear in directory "pg_log".

Done.

ALTER NODE cn001 WITH (HOST='192.168.6.87', PORT=30004);

ALTER NODE

CREATE NODE cn002 WITH (TYPE='coordinator', HOST='192.168.6.88', PORT=30004);

CREATE NODE

CREATE NODE dn001 WITH (TYPE='datanode', HOST='192.168.6.87', PORT=40004, PRIMARY, PREFERRED);

CREATE NODE

CREATE NODE dn002 WITH (TYPE='datanode', HOST='192.168.6.88', PORT=40004);

CREATE NODE

SELECT pgxc_pool_reload();

pgxc_pool_reload

------------------

t

(1 row)

CREATE NODE cn001 WITH (TYPE='coordinator', HOST='192.168.6.87', PORT=30004);

CREATE NODE

ALTER NODE cn002 WITH (HOST='192.168.6.88', PORT=30004);

ALTER NODE

CREATE NODE dn001 WITH (TYPE='datanode', HOST='192.168.6.87', PORT=40004, PRIMARY);

CREATE NODE

CREATE NODE dn002 WITH (TYPE='datanode', HOST='192.168.6.88', PORT=40004, PREFERRED);

CREATE NODE

SELECT pgxc_pool_reload();

pgxc_pool_reload

------------------

t

(1 row)

Done.

EXECUTE DIRECT ON (dn001) 'CREATE NODE cn001 WITH (TYPE=''coordinator'', HOST=''192.168.6.87'', PORT=30004)';

EXECUTE DIRECT

EXECUTE DIRECT ON (dn001) 'CREATE NODE cn002 WITH (TYPE=''coordinator'', HOST=''192.168.6.88'', PORT=30004)';

EXECUTE DIRECT

EXECUTE DIRECT ON (dn001) 'ALTER NODE dn001 WITH (TYPE=''datanode'', HOST=''192.168.6.87'', PORT=40004, PRIMARY, PREFERRED)';

EXECUTE DIRECT

EXECUTE DIRECT ON (dn001) 'CREATE NODE dn002 WITH (TYPE=''datanode'', HOST=''192.168.6.88'', PORT=40004, PREFERRED)';

EXECUTE DIRECT

EXECUTE DIRECT ON (dn001) 'SELECT pgxc_pool_reload()';

pgxc_pool_reload

------------------

t

(1 row)

EXECUTE DIRECT ON (dn002) 'CREATE NODE cn001 WITH (TYPE=''coordinator'', HOST=''192.168.6.87'', PORT=30004)';

EXECUTE DIRECT

EXECUTE DIRECT ON (dn002) 'CREATE NODE cn002 WITH (TYPE=''coordinator'', HOST=''192.168.6.88'', PORT=30004)';

EXECUTE DIRECT

EXECUTE DIRECT ON (dn002) 'CREATE NODE dn001 WITH (TYPE=''datanode'', HOST=''192.168.6.87'', PORT=40004, PRIMARY, PREFERRED)';

EXECUTE DIRECT

EXECUTE DIRECT ON (dn002) 'ALTER NODE dn002 WITH (TYPE=''datanode'', HOST=''192.168.6.88'', PORT=40004, PREFERRED)';

EXECUTE DIRECT

EXECUTE DIRECT ON (dn002) 'SELECT pgxc_pool_reload()';

pgxc_pool_reload

------------------

t

(1 row)

Done.

复制这里初始化日志太长,篇幅原因,只展示部分日志。

我在 init 集群这一步遇到了很多坑,很容易报错,终端会打印出错误日志,通过查看错误原因,更改配置即可。也可以通过查看错误日志来排查:

## 日志路径是 $HOME/pgxc_ctl/pgxc_log

[opentenbase@oct01 pgxc_log]$ pwd

/home/opentenbase/pgxc_ctl/pgxc_log

## 所有的日志都记录在这里

[opentenbase@oct01 pgxc_log]$ ls

115286_pgxc_ctl.log 123051_pgxc_ctl.log 123061_pgxc_ctl.log 123071_pgxc_ctl.log 123083_pgxc_ctl.log 125018_pgxc_ctl.log 125060_pgxc_ctl.log

复制重新初始化集群之前需要先执行 clean all 命令删除已经初始化的文件:

PGXC clean all复制

清理之后可以重新执行 init all 命令重新发起初始化。

检查集群状态

通过 pgxc_ctl 工具的 monitor all 命令可以查看集群状态:

## 输入命令 monitor all 查看集群状态

PGXC monitor all

Running: gtm master

Running: gtm slave

Running: coordinator master cn001

Running: coordinator master cn002

Running: datanode master dn001

Running: datanode slave dn001

Running: datanode master dn002

Running: datanode slave dn002

复制停止集群

通过 pgxc_ctl 工具的 stop all 命令来停止集群,stop all 后面可以加上参数 -m fast 或者是 -m immediate 来决定如何停止各个节点:

PGXC help stop

stop [ -m smart | fast | immediate ] all

stop gtm [ master | slave | all ]

stop gtm_proxy [ all | nodename ... ]

stop [ -m smart | fast | immediate ] coordinator nodename ...

stop [ -m smart | fast | immediate ] coordinator [ master | slave ] [ all | nodename ... ]

stop [ -m smart | fast | immediate ] datanode nodename ...

stop [ -m smart | fast | immediate ] datanode [ master | slave ] [ all | nodename ... ]

Stops specified node

For more details, please see the pgxc_ctl documentation

复制使用 stop all -m fast 命令关闭集群:

PGXC stop all -m fast

Stopping all the coordinator masters.

Stopping coordinator master cn001.

Stopping coordinator master cn002.

Done.

Stopping all the datanode slaves.

Stopping datanode slave dn001.

Stopping datanode slave dn002.

Stopping all the datanode masters.

Stopping datanode master dn001.

Stopping datanode master dn002.

Done.

Stop GTM slave

waiting for server to shut down..... done

server stopped

Stop GTM master

waiting for server to shut down.... done

server stopped

复制集群关闭成功。

启动集群

通过 pgxc_ctl 工具的 start all 命令来启动集群:

PGXC help start

start all

start nodename ...

start gtm [ master | slave | all ]

start gtm_proxy [ all | nodename ... ]

start coordinator nodename ...

start coordinator [ master | slave ] [ all | nodename ... ]

start datanode nodename ...

start datanode [ master | slave ] [ all | nodename ... ]

Starts specified node

For more details, please see the pgxc_ctl documentation

复制使用 start all 命令启动集群:

PGXC start all

Start GTM master

waiting for server to shut down.... done

server stopped

server starting

228882240:2024-11-29 09:58:17.525 CST -LOG: listening on IPv4 address "0.0.0.0", port 50001

LOCATION: StreamServerPort, pqcomm.c:397

228882240:2024-11-29 09:58:17.525 CST -LOG: listening on IPv6 address "::", port 50001

LOCATION: StreamServerPort, pqcomm.c:397

228882240:2024-11-29 09:58:17.525 CST -LOG: listening on Unix socket "/tmp/.s.GTM.50001"

LOCATION: StreamServerPort, pqcomm.c:391

228882240:2024-11-29 09:58:17.525 CST -LOG: ControlData context:

LOCATION: GTM_PrintControlData, gtm_xlog.c:2013

228882240:2024-11-29 09:58:17.526 CST -LOG: ControlData->gtm_control_version = 20180716

LOCATION: GTM_PrintControlData, gtm_xlog.c:2014

228882240:2024-11-29 09:58:17.526 CST -LOG: ControlData->xlog_seg_size = 2097152

LOCATION: GTM_PrintControlData, gtm_xlog.c:2015

228882240:2024-11-29 09:58:17.526 CST -LOG: ControlData->xlog_blcksz = 4096

LOCATION: GTM_PrintControlData, gtm_xlog.c:2016

228882240:2024-11-29 09:58:17.526 CST -LOG: ControlData->state = 1

LOCATION: GTM_PrintControlData, gtm_xlog.c:2017

228882240:2024-11-29 09:58:17.526 CST -LOG: ControlData->CurrBytePos = 8355920

LOCATION: GTM_PrintControlData, gtm_xlog.c:2018

228882240:2024-11-29 09:58:17.526 CST -LOG: ControlData->PrevBytePos = 8355840

LOCATION: GTM_PrintControlData, gtm_xlog.c:2019

228882240:2024-11-29 09:58:17.526 CST -LOG: ControlData->thisTimeLineID = 1

LOCATION: GTM_PrintControlData, gtm_xlog.c:2020

228882240:2024-11-29 09:58:17.526 CST -LOG: ControlData->prevCheckPoint = 0/600000

LOCATION: GTM_PrintControlData, gtm_xlog.c:2021

228882240:2024-11-29 09:58:17.526 CST -LOG: ControlData->checkPoint = 0/800000

LOCATION: GTM_PrintControlData, gtm_xlog.c:2022

228882240:2024-11-29 09:58:17.526 CST -LOG: ControlData->gts = 3138279454

LOCATION: GTM_PrintControlData, gtm_xlog.c:2023

228882240:2024-11-29 09:58:17.526 CST -LOG: ControlData->time = 1732845496

LOCATION: GTM_PrintControlData, gtm_xlog.c:2024

Start GTM slavewaiting for server to shut down..... done

server stopped

server starting

3723429696:2024-11-29 09:58:21.856 CST -LOG: listening on IPv4 address "0.0.0.0", port 50001

LOCATION: StreamServerPort, pqcomm.c:397

3723429696:2024-11-29 09:58:21.856 CST -LOG: listening on IPv6 address "::", port 50001

LOCATION: StreamServerPort, pqcomm.c:397

3723429696:2024-11-29 09:58:21.856 CST -LOG: listening on Unix socket "/tmp/.s.GTM.50001"

LOCATION: StreamServerPort, pqcomm.c:391

3723429696:2024-11-29 09:58:21.857 CST -LOG: ControlData context:

LOCATION: GTM_PrintControlData, gtm_xlog.c:2013

3723429696:2024-11-29 09:58:21.857 CST -LOG: ControlData->gtm_control_version = 20180716

LOCATION: GTM_PrintControlData, gtm_xlog.c:2014

3723429696:2024-11-29 09:58:21.857 CST -LOG: ControlData->xlog_seg_size = 2097152

LOCATION: GTM_PrintControlData, gtm_xlog.c:2015

3723429696:2024-11-29 09:58:21.857 CST -LOG: ControlData->xlog_blcksz = 4096

LOCATION: GTM_PrintControlData, gtm_xlog.c:2016

3723429696:2024-11-29 09:58:21.857 CST -LOG: ControlData->state = 1

LOCATION: GTM_PrintControlData, gtm_xlog.c:2017

3723429696:2024-11-29 09:58:21.857 CST -LOG: ControlData->CurrBytePos = 8356052

LOCATION: GTM_PrintControlData, gtm_xlog.c:2018

3723429696:2024-11-29 09:58:21.857 CST -LOG: ControlData->PrevBytePos = 8355920

LOCATION: GTM_PrintControlData, gtm_xlog.c:2019

3723429696:2024-11-29 09:58:21.857 CST -LOG: ControlData->thisTimeLineID = 1

LOCATION: GTM_PrintControlData, gtm_xlog.c:2020

3723429696:2024-11-29 09:58:21.857 CST -LOG: ControlData->prevCheckPoint = 0/6000E4

LOCATION: GTM_PrintControlData, gtm_xlog.c:2021

3723429696:2024-11-29 09:58:21.857 CST -LOG: ControlData->checkPoint = 0/800000

LOCATION: GTM_PrintControlData, gtm_xlog.c:2022

3723429696:2024-11-29 09:58:21.857 CST -LOG: ControlData->gts = 3738437793

LOCATION: GTM_PrintControlData, gtm_xlog.c:2023

3723429696:2024-11-29 09:58:21.857 CST -LOG: ControlData->time = 1732845500

LOCATION: GTM_PrintControlData, gtm_xlog.c:2024

Done.

Starting coordinator master.

Starting coordinator master cn001

ERROR: target coordinator master cn001 is already running now. Skip initialization.

Starting coordinator master cn002

ERROR: target coordinator master cn002 is already running now. Skip initialization.

Done.

Starting all the datanode masters.

Starting datanode master dn001.

WARNING: datanode master dn001 is running now. Skipping.

Starting datanode master dn002.

WARNING: datanode master dn002 is running now. Skipping.

Done.

Starting all the datanode slaves.

Starting datanode slave dn001.

Starting datanode slave dn002.

pg_ctl: another server might be running; trying to start server anyway

2024-11-29 01:58:23.665 GMT [103473,coord(0.0)] LOG: skipping missing configuration file "/opentenbase/global/global_opentenbase.conf"

2024-11-29 01:58:23.665 GMT [103473,coord(0.0)] LOG: skipping missing configuration file "/opentenbase/global/global_opentenbase.conf"

2024-11-29 09:58:23.703 CST [103473,coord(0.0)] LOG: other_pid is datanode, result:openten+ 102251 1 0 09:50 ? 00:00:02 /opentenbase/install/opentenbase_bin_v2.6/bin/postgres --datanode -D /opentenbase/data/dn001

2024-11-29 09:58:23.704 CST [103473,coord(0.0)] FATAL: lock file "postmaster.pid" already exists

2024-11-29 09:58:23.704 CST [103473,coord(0.0)] HINT: Is another postmaster (PID 102251) running in data directory "/opentenbase/data/dn001"?

pg_ctl: could not start server

Examine the log output.

pg_ctl: another server might be running; trying to start server anyway

2024-11-29 01:58:22.954 GMT [125626,coord(0.0)] LOG: skipping missing configuration file "/opentenbase/global/global_opentenbase.conf"

2024-11-29 01:58:22.955 GMT [125626,coord(0.0)] LOG: skipping missing configuration file "/opentenbase/global/global_opentenbase.conf"

2024-11-29 09:58:22.979 CST [125626,coord(0.0)] LOG: other_pid is datanode, result:openten+ 124233 1 0 09:50 ? 00:00:02 /opentenbase/install/opentenbase_bin_v2.6/bin/postgres --datanode -D /opentenbase/data/dn002

2024-11-29 09:58:22.980 CST [125626,coord(0.0)] FATAL: lock file "postmaster.pid" already exists

2024-11-29 09:58:22.980 CST [125626,coord(0.0)] HINT: Is another postmaster (PID 124233) running in data directory "/opentenbase/data/dn002"?

pg_ctl: could not start server

Examine the log output.

Done.

复制集群启动成功。

访问集群

访问 OpenTenBase 集群和访问单机的 PostgreSQL 基本上无差别,我们可以通过任意一个 CN 访问数据库集群:例如通过连接 CN 节点 select pgxc_node 表即可查看集群的拓扑结构(当前的配置下备机不会展示在 pgxc_node 中),在 Linux 命令行下通过 psql 访问的具体示例如下:

[opentenbase@oct01 ~]$ psql -h 192.168.6.87 -p 30004 -d postgres -U opentenbase

psql (PostgreSQL 10.0 OpenTenBase V2)

Type "help" for help.

postgres=# \d

Did not find any relations.

postgres=# select * from pgxc_node;

node_name | node_type | node_port | node_host | nodeis_primary | nodeis_preferred | node_id | node_cluster_name

-----------+-----------+-----------+--------------+----------------+------------------+------------+---------------------

gtm | G | 50001 | 192.168.6.87 | t | f | 428125959 | opentenbase_cluster

cn001 | C | 30004 | 192.168.6.87 | f | f | -264077367 | opentenbase_cluster

cn002 | C | 30004 | 192.168.6.88 | f | f | -674870440 | opentenbase_cluster

dn001 | D | 40004 | 192.168.6.87 | t | t | 2142761564 | opentenbase_cluster

dn002 | D | 40004 | 192.168.6.88 | f | f | -17499968 | opentenbase_cluster

(5 rows)

复制创建 default group 和 sharding 表

OpenTenBase 使用 datanode group 来增加节点的管理灵活度,要求有一个 default group 才能使用,因此需要预先创建。一般情况下,会将节点的所有 datanode 节点加入到 default group 里:

postgres=# create default node group default_group with (dn001,dn002);

CREATE NODE GROUP

复制另外一方面,OpenTenBase 的数据分布为了增加灵活度,加了中间逻辑层来维护数据记录到物理节点的映射,我们叫 sharding,所以需要预先创建 sharding 表:

postgres=# create sharding group to group default_group;

CREATE SHARDING GROUP

复制连接使用

创建数据库,用户,创建表,增删查改:

postgres=# create database lucifer;

CREATE DATABASE

postgres=# create user lucifer with password 'lucifer';

CREATE ROLE

postgres=# alter database lucifer owner to lucifer;

ALTER DATABASE

postgres=# \c lucifer lucifer

You are now connected to database "lucifer" as user "lucifer".

lucifer=> create table test(id bigint, str text) distribute by shard(id);

CREATE TABLE

lucifer=> insert into test values(1, 'tencent'), (2, 'shenzhen');

COPY 2

lucifer=> select * from test;

id | str

----+----------

1 | tencent

2 | shenzhen

(2 rows)

复制至此,就可以跟使用单机数据库一样来访问 OpenTenBase 数据库集群了。

拓展

TencentOS 3 在 PowerTools 中支持了 OpenTenBase 的 RPM 安装,可以直接安装单机版。

如果是联网情况,只需要开启 PowerTools 源即可实现一键安装 OpenTenBase:

[PowerTools]

name=TencentOS Server $releasever - PowerTools

baseurl=http://mirrors.tencent.com/tlinux/$releasever/PowerTools/$basearch/os/

http://mirrors.tencentyun.com/tlinux/$releasever/PowerTools/$basearch/os/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-TencentOS-3

复制一键安装单机 OpenTenBase:

yum install -y OpenTenBase-2.6.0-1.tl3.x86_64.rpm复制

如果是离线环境,则可以下载 OpenTenBase-2.6.0-1.tl3.x86_64.rpm:

上传主机后手动进行安装即可。

写在最后

本文主要介绍如何从源码开始,一步一步搭建一个完整的 OpenTenBase 集群,网上相关的文档比较少,摸索过程中遇到了不少坑,好在最终都解决!

如果有遗漏或者不足的地方,欢迎评论区补充或者投稿,感谢阅读!

往期精彩文章

达梦数据库安装最详细教程

一招教你学会达梦数据库的免密登录

一文讲透达梦数据库的大小写敏感

效率翻倍!达梦数据库 disql 使用技巧全攻略

达梦数据库参数配置与一键优化指南

达梦 AWR 报告快速上手指南

金仓数据库 KingbaseES V9 单机安装指南

KingbaseES KSQL 免密登录的几种方式

KingbaseES 控制文件冗余与恢复秘籍

金仓 KingbaseES RAC 入门指南

GBase 8a MPP 集群部署最佳实践

GBase 8a GDCA 认证课后练习题大全(题库)

南大通用 GBASE 8s V8.8 数据库最全安装指南

GBase 8s GDCA 认证课后练习题大全(题库)

GBase 8s 数据库巡检报告及一键巡检脚本

YashanDB 一键生成 AWR 报告

YashanDB 数据库安装部署

YashanDB 共享集群(YAC)入门指南与技术详解