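1. Introduction to the Partnership and Status Table (PST)

The Partnership and Status Table, PST for short, contains information about every disk in a disk group: the disk number, disk status, partner disk numbers, heartbeat information and, in 11g and later, failgroup information.

Put simply, the PST records the relationships between the disks of a disk group, together with each disk's status and similar information.

The PST is critical to ASM: it is checked before any other ASM metadata is read. When a disk group is mounted, the GMON process reads all disks in the disk group to find and validate the PST. The PST then tells ASM which disks are ONLINE and usable and which are OFFLINE.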

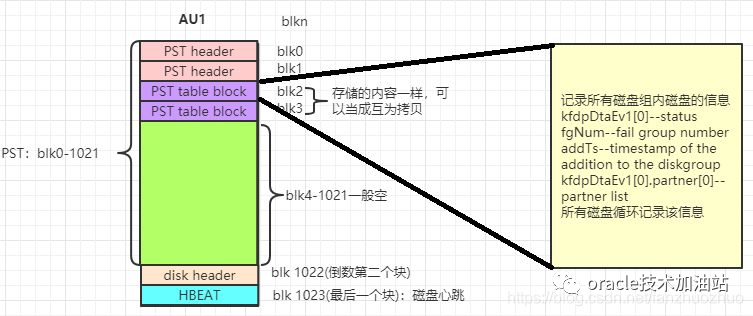

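AU 1 of every disk is reserved for the PST, but not every disk actually holds PST information.

Number of PST copies

An external-redundancy disk group keeps the PST on only 1 disk. A normal-redundancy disk group keeps up to 3 copies, and a high-redundancy disk group up to 5 copies, one per failgroup. The count is stored in kfdpHdrPairBv1.first.super.copyCnt.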
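The copy-count rule can be written as a small function. This sketch models the behaviour observed in this article and is not Oracle code. The helper name `pst_copy_count` is hypothetical. It assumes one PST copy per failgroup, up to the per-redundancy limit. That assumption matches the alert logs in section 2: 1 copy for WX_EXT, 2 for the two-failgroup WX_NOR and 3 for WX_HIGH.

```python
def pst_copy_count(redundancy, failgroups):
    """Hypothetical model: number of PST copies for a disk group.

    Assumes one copy per failgroup, capped at 1 (external), 3 (normal)
    or 5 (high), as described in the text.
    """
    limits = {"external": 1, "normal": 3, "high": 5}
    key = redundancy.lower()
    if key not in limits:
        raise ValueError(f"unknown redundancy: {redundancy!r}")
    if failgroups < 1:
        raise ValueError("a disk group needs at least one failgroup")
    return min(limits[key], failgroups)


# Cases taken from the alert logs in this article
print(pst_copy_count("external", 2))  # WX_EXT, 2 disks       -> 1
print(pst_copy_count("normal", 2))    # WX_NOR, 2 failgroups  -> 2
print(pst_copy_count("high", 3))      # WX_HIGH, 3 failgroups -> 3
print(pst_copy_count("normal", 4))    # WX_NOR, 4 failgroups  -> 3
```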

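ASM implements redundancy by writing mirror copies of each extent to disks in different failgroups of the same disk group. A disk holding the mirror copies of another disk's extents is called its partner disk.

Disk relationships

An external-redundancy disk group has no mirroring. Failgroups play no part in redundancy, and there are no partner relationships between disks.

In a normal-redundancy disk group with 2 disks, the two disks are partners: every extent on disk 0 has a mirror copy on disk 1.

In a normal-redundancy disk group with 3 disks and no manually specified failgroups, each disk has 2 partners. Disk 0 partners with disks 1 and 2, disk 1 with disks 0 and 2, and disk 2 with disks 0 and 1.

Note: disk partnership exists only in normal- and high-redundancy disk groups. It is a "symmetric" relationship between two disks: if disk A is a partner of disk B, then B is a partner of A. External redundancy has no disk partnership.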
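The symmetry property can be checked mechanically on a partner map. This is a minimal sketch. `is_symmetric` is a hypothetical helper, and its input is a plain dictionary you would fill in by hand from the kfed partner dumps in section 3.

```python
def is_symmetric(partners):
    """Return True if every link a -> b in the partner map has a matching b -> a.

    partners: dict mapping disk number -> list of partner disk numbers.
    """
    for disk, plist in partners.items():
        for p in plist:
            if disk not in partners.get(p, []):
                return False
    return True


# Partner lists of WX_HIGH as read from kfdpDtaEv1[n].partner[] in section 3
wx_high = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
print(is_symmetric(wx_high))          # True
print(is_symmetric({0: [1], 1: []}))  # False: 0 -> 1 without 1 -> 0
```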

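PST availability

The PST is checked before any other ASM metadata is read.

When an ASM instance is asked to mount a disk group, the GMON process reads all disks in the disk group to find and validate the available PST copies. Once it confirms that enough PST copies are available, it mounts the disk group.

From then on, the PST is cached in the ASM cache and in the PGA of GMON. It is protected by the PT.n.0 enqueue, held in exclusive mode.

Other ASM instances in the same cluster, once started, cache the PST in their own GMON PGA and hold the PT.n.0 enqueue in shared mode.

Only the GMON process holding the PT enqueue in exclusive mode (CKPT in 10gR1) can update the PST on disk.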

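2. How the number of PST copies appears in the ASM alert log

Consider the following examples. Note that each disk group is created with a different number of disks:

1) Create an external-redundancy disk group (at least 1 disk)

create diskgroup wx_ext external redundancy disk '/dev/asmdisk5' disk '/dev/asmdata3' attribute 'au_size'='1M';

// Check the alert log: /u01/app/grid/diag/asm/+asm/+ASM1/trace/alert_+ASM1.log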

SQL> create diskgroup wx_ext external redundancy disk '/dev/asmdisk5' disk '/dev/asmdata3' attribute 'au_size'='1M'

NOTE: Assigning number (4,0) to disk (/dev/asmdisk5)

NOTE: Assigning number (4,1) to disk (/dev/asmdata3)

NOTE: initializing header on grp 4 disk WX_EXT_0000

NOTE: initializing header on grp 4 disk WX_EXT_0001

NOTE: initiating PST update: grp = 4

Wed May 24 15:02:05 2023

GMON updating group 4 at 44 for pid 29, osid 9927

NOTE: group WX_EXT: initial PST location: disk 0000 (PST copy 0) -- the alert log shows that ASM created only one PST copy, on disk 0000 (/dev/asmdisk5)

NOTE: PST update grp = 4 completed successfully

NOTE: cache registered group WX_EXT number=4 incarn=0xfe096f1a

NOTE: cache began mount (first) of group WX_EXT number=4 incarn=0xfe096f1a

NOTE: cache opening disk 0 of grp 4: WX_EXT_0000 path:/dev/asmdisk5

NOTE: cache opening disk 1 of grp 4: WX_EXT_0001 path:/dev/asmdata3

2) Create a normal-redundancy disk group (at least 2 disks)

create diskgroup wx_nor normal redundancy failgroup fg1 disk '/dev/asmdata6' failgroup fg2 disk '/dev/asmdata7'

attribute 'au_size'='1M','compatible.asm'='11.2','compatible.rdbms'='11.2';

// Check the alert log: /u01/app/grid/diag/asm/+asm/+ASM1/trace/alert_+ASM1.log

SQL> create diskgroup wx_nor normal redundancy failgroup fg1 disk '/dev/asmdata6' failgroup fg2 disk '/dev/asmdata7'

attribute 'au_size'='1M','compatible.asm'='11.2','compatible.rdbms'='11.2'

NOTE: Assigning number (5,0) to disk (/dev/asmdata6)

NOTE: Assigning number (5,1) to disk (/dev/asmdata7)

NOTE: initializing header on grp 5 disk WX_NOR_0000

NOTE: initializing header on grp 5 disk WX_NOR_0001

Wed May 24 15:38:37 2023

GMON updating for reconfiguration, group 5 at 68 for pid 29, osid 9927

NOTE: group 5 PST updated.

NOTE: initiating PST update: grp = 5

GMON updating group 5 at 69 for pid 29, osid 9927

NOTE: group WX_NOR: initial PST location: disk 0000 (PST copy 0) -- the alert log shows that ASM created only 2 PST copies, on disks 0000 and 0001 (/dev/asmdata6 and /dev/asmdata7)

NOTE: group WX_NOR: initial PST location: disk 0001 (PST copy 1)

NOTE: group WX_NOR: updated PST location: disk 0000 (PST copy 0)

NOTE: group WX_NOR: updated PST location: disk 0001 (PST copy 1)

NOTE: PST update grp = 5 completed successfully

3) Create a high-redundancy disk group (at least 3 disks)

create diskgroup wx_high high redundancy failgroup fg1 disk '/dev/asmdata9' failgroup fg2 disk '/dev/asmdata10' failgroup fg3 disk '/dev/asmdata11'

attribute 'au_size'='1M','compatible.asm'='11.2','compatible.rdbms'='11.2';

// Check the alert log: /u01/app/grid/diag/asm/+asm/+ASM1/trace/alert_+ASM1.log

SQL> create diskgroup wx_high high redundancy failgroup fg1 disk '/dev/asmdata9' failgroup fg2 disk '/dev/asmdata10' failgroup fg3 disk '/dev/asmdata11'

attribute 'au_size'='1M','compatible.asm'='11.2','compatible.rdbms'='11.2'

NOTE: Assigning number (6,0) to disk (/dev/asmdata9)

NOTE: Assigning number (6,1) to disk (/dev/asmdata10)

NOTE: Assigning number (6,2) to disk (/dev/asmdata11)

NOTE: initializing header on grp 6 disk WX_HIGH_0000

NOTE: initializing header on grp 6 disk WX_HIGH_0001

NOTE: initializing header on grp 6 disk WX_HIGH_0002

Wed May 24 15:14:05 2023

GMON updating for reconfiguration, group 6 at 59 for pid 29, osid 9927

NOTE: group 6 PST updated.

NOTE: initiating PST update: grp = 6

GMON updating group 6 at 60 for pid 29, osid 9927

NOTE: group WX_HIGH: initial PST location: disk 0000 (PST copy 0) -- the alert log shows that ASM created only 3 PST copies, on disks 0000 to 0002 (/dev/asmdata9 to /dev/asmdata11)

NOTE: group WX_HIGH: initial PST location: disk 0001 (PST copy 1)

NOTE: group WX_HIGH: initial PST location: disk 0002 (PST copy 2)

NOTE: group WX_HIGH: updated PST location: disk 0000 (PST copy 0)

NOTE: group WX_HIGH: updated PST location: disk 0001 (PST copy 1)

NOTE: group WX_HIGH: updated PST location: disk 0002 (PST copy 2)

NOTE: PST update grp = 6 completed successfully

NOTE: cache registered group WX_HIGH number=6 incarn=0x95e96f24

NOTE: cache began mount (first) of group WX_HIGH number=6 incarn=0x95e96f24

NOTE: cache opening disk 0 of grp 6: WX_HIGH_0000 path:/dev/asmdata9

NOTE: cache opening disk 1 of grp 6: WX_HIGH_0001 path:/dev/asmdata10

NOTE: cache opening disk 2 of grp 6: WX_HIGH_0002 path:/dev/asmdata11
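A long alert log is tedious to read by eye. The `initial PST location` and `updated PST location` lines can instead be matched with a regular expression to pull out the PST placement. This sketch assumes the exact line format shown above. `pst_locations` is a hypothetical helper name.

```python
import re

# Matches lines such as:
#   NOTE: group WX_NOR: initial PST location: disk 0000 (PST copy 0)
PST_LOC = re.compile(
    r"NOTE: group (?P<group>\w+): (?P<kind>initial|updated) PST location: "
    r"disk (?P<disk>\d+) \(PST copy (?P<copy>\d+)\)"
)


def pst_locations(log_text, kind="initial"):
    """Map group name -> {PST copy number: disk number} for one kind of line."""
    result = {}
    for m in PST_LOC.finditer(log_text):
        if m.group("kind") != kind:
            continue
        copies = result.setdefault(m.group("group"), {})
        copies[int(m.group("copy"))] = int(m.group("disk"))
    return result


sample = """\
NOTE: group WX_NOR: initial PST location: disk 0000 (PST copy 0)
NOTE: group WX_NOR: initial PST location: disk 0001 (PST copy 1)
NOTE: group WX_HIGH: initial PST location: disk 0000 (PST copy 0)
NOTE: group WX_HIGH: initial PST location: disk 0001 (PST copy 1)
NOTE: group WX_HIGH: initial PST location: disk 0002 (PST copy 2)
"""
print(pst_locations(sample))
# {'WX_NOR': {0: 0, 1: 1}, 'WX_HIGH': {0: 0, 1: 1, 2: 2}}
```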

3. Where the PST is stored

From the earlier sections, the PST lives in AU 1 of the disk's physical metadata. Scanning the corresponding disks with the statements below confirms this:

set line 200

col diskgroup_name for a15

col disk_name for a30

col path for a40

select a.name diskgroup_name,a.type,a.allocation_unit_size/1024/1024 unit_mb,a.voting_files,b.name disk_name,b.path from v$asm_diskgroup a,v$asm_disk b where a.group_number=b.group_number;

DISKGROUP_NAME TYPE UNIT_MB VO DISK_NAME PATH

--------------- ------------ ---------- -- ------------------------------ ----------------------------------------

DATA EXTERN 1 N DATA_0001 /dev/asmdata2

DATA EXTERN 1 N DATA_0000 /dev/asmdata1

FRA EXTERN 1 N FRA_0001 /dev/asmdisk4

FRA EXTERN 1 N FRA_0000 /dev/asmdisk6

OCR NORMAL 4 Y OCR_0001 /dev/asmdisk2

OCR NORMAL 4 Y OCR_0000 /dev/asmdisk1

OCR NORMAL 4 Y OCR_0002 /dev/asmdisk3

WX_EXT EXTERN 1 N WX_EXT_0001 /dev/asmdata3

WX_EXT EXTERN 1 N WX_EXT_0000 /dev/asmdisk5

WX_NOR NORMAL 1 N WX_NOR_0002 /dev/asmdata8

WX_NOR NORMAL 1 N WX_NOR_0001 /dev/asmdata7

WX_NOR NORMAL 1 N WX_NOR_0000 /dev/asmdata6

WX_HIGH HIGH 1 N WX_HIGH_0002 /dev/asmdata11

WX_HIGH HIGH 1 N WX_HIGH_0001 /dev/asmdata10

WX_HIGH HIGH 1 N WX_HIGH_0000 /dev/asmdata9

for i in /dev/asmdata6 /dev/asmdata7

do

ausize=$(kfed read "$i" | grep ausize | tr -s ' ' | cut -d ' ' -f2)

blksize=$(kfed read "$i" | grep blksize | tr -s ' ' | cut -d ' ' -f2)

if [ -n "$ausize" ] && [ -n "$blksize" ]; then

n=$((ausize / blksize))

echo "Disk $i, blocks per AU: $n"

# AUs to scan: only AU 1 here; for a range, change to {0..2}

for k in 1; do

for ((j=0; j<n; j++)); do

echo -n "Disk $i AU $k block $j type > "

#kfed read $i aun=$k blkn=$j aus=$ausize|grep -i 'kfbh.type'|tr -d ' '|awk -F":" '{print $NF}'

blk_type=$(kfed read "$i" aun=$k blkn=$j aus=$ausize | grep -Ei 'kfbh.type|kfbh.block.blk' | tr -d ' ' | awk -F":" '{print $NF}' | paste -d ";" - -)

echo "$blk_type"

done

done

fi

done
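The `grep | tr | awk | paste` pipeline in the script extracts the block type and block number from each kfed dump. Below is the same extraction in Python. This is a sketch. It assumes kfed prints every field as `name: value ; offset: decoded`, the layout seen in the dumps in this article. The function names are hypothetical.

```python
def parse_kfed_line(line):
    """Split 'name: value ; offset: decoded' into its four stripped parts."""
    name, rest = line.split(":", 1)
    value, tail = rest.split(";", 1)
    offset, decoded = tail.split(":", 1)
    return name.strip(), value.strip(), offset.strip(), decoded.strip()


def block_type(dump):
    """Return (decoded kfbh.type, decoded kfbh.block.blk) from one kfed dump."""
    fields = {}
    for line in dump.splitlines():
        # Skip elision lines such as '...' and anything not in field format
        if ";" in line and line.count(":") >= 2:
            name, _value, _offset, decoded = parse_kfed_line(line)
            fields[name] = decoded
    return fields["kfbh.type"], fields["kfbh.block.blk"]


dump = """\
kfbh.endian: 1 ; 0x000: 0x01
kfbh.type: 17 ; 0x002: KFBTYP_PST_META
kfbh.block.blk: 256 ; 0x004: blk=256
"""
print(";".join(block_type(dump)))  # KFBTYP_PST_META;blk=256
```

The `;`-joined output has the same shape as the script's `paste -d ";" - -` line.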

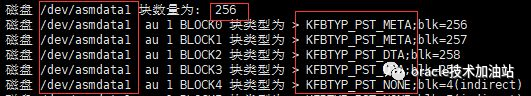

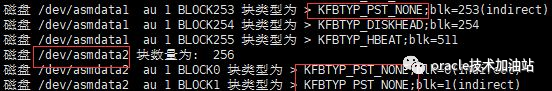

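-- Scan every block in AU 1 of the DATA disk group's disks (asmdata1/asmdata2) to see the block types, with the loop's disk list set to /dev/asmdata1 /dev/asmdata2

-- External disk group: there is only 1 PST copy, stored on 1 disk, and the disks have no relationship to one another.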

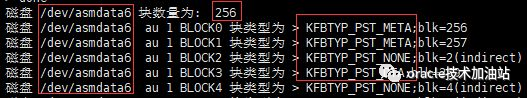

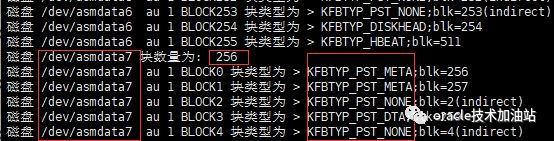

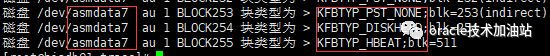

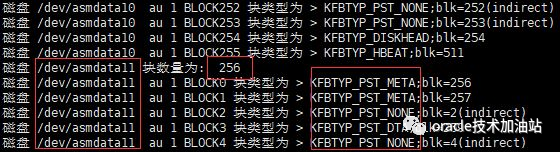

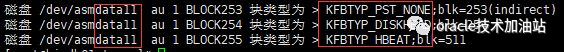

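-- Scan every block in AU 1 of the WX_NOR disk group's disks (asmdata6/asmdata7) to see the block types, with the disk list set to /dev/asmdata6 /dev/asmdata7

-- Normal disk group: at most 3 PST copies. With 2 disks here, each disk holds one PST copy, and the two disks are partners.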

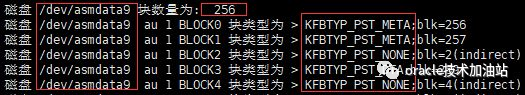

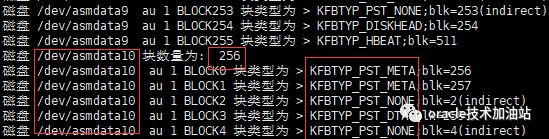

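-- Scan every block in AU 1 of the WX_HIGH disk group's disks (asmdata9/asmdata10/asmdata11) to see the block types, with the disk list set to /dev/asmdata9 /dev/asmdata10 /dev/asmdata11

-- High disk group: at most 5 PST copies. With 3 disks here, each disk holds one PST copy, and every pair of disks are partners. Each disk has 2 partners: disk 0 partners with disks 1 and 2, disk 1 with disks 0 and 2, and disk 2 with disks 0 and 1.

The output above shows that the first 2 blocks of AU 1 (blocks 0 and 1) hold the PST metadata, while blocks 2 and 3 hold the PST data. We start with the PST metadata: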

//pst header

1) external disk

[root@hisdb01 ~]# kfed read /dev/asmdata1 aun=1 blkn=0 aus=1048576|more

kfbh.endian: 1 ; 0x000: 0x01 -- 0 = big endian, 1 = little endian

kfbh.hard: 130 ; 0x001: 0x82 -- HARD magic number

kfbh.type: 17 ; 0x002: KFBTYP_PST_META -- block type

kfbh.datfmt: 2 ; 0x003: 0x02 -- metadata block format

kfbh.block.blk: 256 ; 0x004: blk=256 -- block number, counted from the start of the disk (AU 1 begins at block 256 with a 1 MB AU and 4 KB blocks)

kfbh.block.obj: 2147483648 ; 0x008: disk=0

kfbh.check: 2642746608 ; 0x00c: 0x9d851cf0 -- checksum, used to verify block consistency

kfbh.fcn.base: 0 ; 0x010: 0x00000000

kfbh.fcn.wrap: 0 ; 0x014: 0x00000000

kfbh.spare1: 0 ; 0x018: 0x00000000

kfbh.spare2: 0 ; 0x01c: 0x00000000

kfdpHdrPairBv1.first.super.time.hi:33150705 ; 0x000: HOUR=0x11 DAYS=0x17 MNTH=0x5 YEAR=0x7e7 -- time of the last PST metadata update, high word

kfdpHdrPairBv1.first.super.time.lo:340740096 ; 0x004: USEC=0x0 MSEC=0x3d2 SECS=0x4 MINS=0x5 -- time of the last PST metadata update, low word

kfdpHdrPairBv1.first.super.last: 3 ; 0x008: 0x00000003 -- latest version number

kfdpHdrPairBv1.first.super.next: 3 ; 0x00c: 0x00000003 -- next version number

kfdpHdrPairBv1.first.super.copyCnt: 1 ; 0x010: 0x01 -- number of PST copies: 1 means an external disk group; normal has at least 2 and at most 3, high at least 3 and at most 5

kfdpHdrPairBv1.first.super.version: 1 ; 0x011: 0x01

kfdpHdrPairBv1.first.super.ub2spare: 0 ; 0x012: 0x0000

kfdpHdrPairBv1.first.super.incarn: 1 ; 0x014: 0x00000001

kfdpHdrPairBv1.first.super.copy[0]: 0 ; 0x018: 0x0000

kfdpHdrPairBv1.first.super.copy[1]: 0 ; 0x01a: 0x0000

kfdpHdrPairBv1.first.super.copy[2]: 0 ; 0x01c: 0x0000

kfdpHdrPairBv1.first.super.copy[3]: 0 ; 0x01e: 0x0000

kfdpHdrPairBv1.first.super.copy[4]: 0 ; 0x020: 0x0000

kfdpHdrPairBv1.first.super.dtaSz: 2 ; 0x022: 0x0002 -- number of disks in the disk group, as recorded in the PST metadata

kfdpHdrPairBv1.first.asmCompat:186646528 ; 0x024: 0x0b200000

kfdpHdrPairBv1.first.newCopy[0]: 0 ; 0x028: 0x0000

kfdpHdrPairBv1.first.newCopy[1]: 0 ; 0x02a: 0x0000

kfdpHdrPairBv1.first.newCopy[2]: 0 ; 0x02c: 0x0000

kfdpHdrPairBv1.first.newCopy[3]: 0 ; 0x02e: 0x0000

kfdpHdrPairBv1.first.newCopy[4]: 0 ; 0x030: 0x0000

kfdpHdrPairBv1.first.newCopyCnt: 0 ; 0x032: 0x00

kfdpHdrPairBv1.first.contType: 1 ; 0x033: 0x01

kfdpHdrPairBv1.first.spare0: 0 ; 0x034: 0x00000000

kfdpHdrPairBv1.first.ppat[0]: 0 ; 0x038: 0x0000

kfdpHdrPairBv1.first.ppat[1]: 0 ; 0x03a: 0x0000

kfdpHdrPairBv1.first.ppat[2]: 0 ; 0x03c: 0x0000

kfdpHdrPairBv1.first.ppat[3]: 0 ; 0x03e: 0x0000

kfdpHdrPairBv1.first.ppatsz: 0 ; 0x040: 0x00

kfdpHdrPairBv1.first.spare1: 0 ; 0x041: 0x00

kfdpHdrPairBv1.first.spare2: 0 ; 0x042: 0x0000

kfdpHdrPairBv1.first.spares[0]: 0 ; 0x044: 0x00000000

...

kfdpHdrPairBv1.first.spares[14]: 0 ; 0x07c: 0x00000000

kfdpHdrPairBv1.first.spares[15]: 0 ; 0x080: 0x00000000

...

ub1[0]: 0 ; 0x108: 0x00

...

ub1[3799]: 0 ; 0xfdf: 0x00
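kfed decodes `time.hi` into HOUR/DAYS/MNTH/YEAR and `time.lo` into USEC/MSEC/SECS/MINS. Working backwards from the values in these dumps, the fields appear to be packed as shown below. The bit layout is inferred from this article's samples, not taken from Oracle documentation. `pst_timestamp` is a hypothetical helper.

```python
def decode_time_hi(hi):
    # Inferred layout: YEAR in bits 14 and up, MNTH bits 10-13, DAYS bits 5-9, HOUR bits 0-4
    return hi >> 14, (hi >> 10) & 0xF, (hi >> 5) & 0x1F, hi & 0x1F


def decode_time_lo(lo):
    # Inferred layout: MINS bits 26-31, SECS bits 20-25, MSEC bits 10-19, USEC bits 0-9
    return lo >> 26, (lo >> 20) & 0x3F, (lo >> 10) & 0x3FF, lo & 0x3FF


def pst_timestamp(hi, lo):
    """Format a time.hi/time.lo pair as YYYY-MM-DD HH:MM:SS.mmm."""
    year, month, day, hour = decode_time_hi(hi)
    mins, secs, msec, _usec = decode_time_lo(lo)
    return f"{year:04d}-{month:02d}-{day:02d} {hour:02d}:{mins:02d}:{secs:02d}.{msec:03d}"


print(pst_timestamp(33150705, 340740096))  # external disk asmdata1: 2023-05-23 17:05:04.978
print(pst_timestamp(33150762, 838457344))  # normal disk asmdata6:   2023-05-25 10:12:31.630
```

The decoded fields agree with kfed's own rendering (for example DAYS=0x17 is 23, MSEC=0x3d2 is 978).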

2) normal disk

drop diskgroup wx_nor;

create diskgroup wx_nor normal redundancy failgroup fg1 disk '/dev/asmdata6' failgroup fg2 disk '/dev/asmdata7' failgroup fg3 disk '/dev/asmdata8'

attribute 'au_size'='1M','compatible.asm'='11.2','compatible.rdbms'='11.2';

[root@hisdb01 ~]# kfed read /dev/asmdata6 aun=1 blkn=0 aus=1048576|more

kfbh.endian: 1 ; 0x000: 0x01

kfbh.hard: 130 ; 0x001: 0x82

kfbh.type: 17 ; 0x002: KFBTYP_PST_META

kfbh.datfmt: 2 ; 0x003: 0x02

kfbh.block.blk: 256 ; 0x004: blk=256

kfbh.block.obj: 2147483648 ; 0x008: disk=0

kfbh.check: 3090386217 ; 0x00c: 0xb8338d29

kfbh.fcn.base: 0 ; 0x010: 0x00000000

kfbh.fcn.wrap: 0 ; 0x014: 0x00000000

kfbh.spare1: 0 ; 0x018: 0x00000000

kfbh.spare2: 0 ; 0x01c: 0x00000000

kfdpHdrPairBv1.first.super.time.hi:33150762 ; 0x000: HOUR=0xa DAYS=0x19 MNTH=0x5 YEAR=0x7e7

kfdpHdrPairBv1.first.super.time.lo:838457344 ; 0x004: USEC=0x0 MSEC=0x276 SECS=0x1f MINS=0xc

kfdpHdrPairBv1.first.super.last: 2 ; 0x008: 0x00000002

kfdpHdrPairBv1.first.super.next: 2 ; 0x00c: 0x00000002

kfdpHdrPairBv1.first.super.copyCnt: 3 ; 0x010: 0x03 -- the PST has three copies, on disks 0, 1 and 2

kfdpHdrPairBv1.first.super.version: 1 ; 0x011: 0x01

kfdpHdrPairBv1.first.super.ub2spare: 0 ; 0x012: 0x0000

kfdpHdrPairBv1.first.super.incarn: 1 ; 0x014: 0x00000001

kfdpHdrPairBv1.first.super.copy[0]: 0 ; 0x018: 0x0000 -- disk 0

kfdpHdrPairBv1.first.super.copy[1]: 1 ; 0x01a: 0x0001 -- disk 1

kfdpHdrPairBv1.first.super.copy[2]: 2 ; 0x01c: 0x0002 -- disk 2

kfdpHdrPairBv1.first.super.copy[3]: 0 ; 0x01e: 0x0000

kfdpHdrPairBv1.first.super.copy[4]: 0 ; 0x020: 0x0000

kfdpHdrPairBv1.first.super.dtaSz: 3 ; 0x022: 0x0003

kfdpHdrPairBv1.first.asmCompat:186646528 ; 0x024: 0x0b200000

kfdpHdrPairBv1.first.newCopy[0]: 0 ; 0x028: 0x0000

...

ub1[0]: 2 ; 0x108: 0x02

备注:external 磁盘kfed读取 au 1 blkn 0(kfdpHdrPairBv1.first.super.copyCnt),为1

备注:normal 磁盘kfed读取两块盘 au 1 blkn 0(kfdpHdrPairBv1.first.super.copyCnt),均为2,如果失败组超过了3个,那么最大pst副本为3

备注:high 磁盘kfed读取两块盘 au 1 blkn 0(kfdpHdrPairBv1.first.super.copyCnt),均为3,如果失败组超过了5个,那么最大pst副本为5

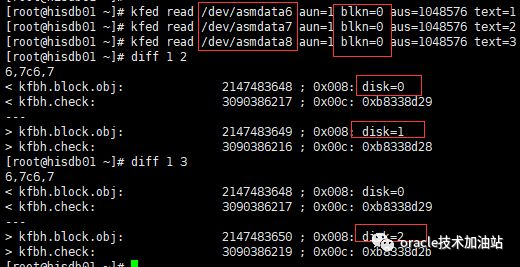

//对比normal 3个磁盘 1号au的相同块【blkn=0 blkn=1】,发现1处不一样,其余均相同;也就是说如果这部分数据出现问题,可以通过这些块重构恢复;

//相同磁盘,pst header block 0,1 {KFBTYP_PST_META} 对比

--由于块0和块1都是相同类型,我这里通过命令把两个块dump出来,然后通过diff 分析下内容区别:

[root@hisdb01 ~]# kfed read dev/asmdata1 aun=1 blkn=0 aus=1048576 text=pst0

[root@hisdb01 ~]# kfed read dev/asmdata1 aun=1 blkn=1 aus=1048576 text=pst1

[root@hisdb01 ~]# diff pst0 pst1

5c5

< kfbh.block.blk: 256 ; 0x004: blk=256

---

> kfbh.block.blk: 257 ; 0x004: blk=257

7c7

< kfbh.check: 2642746608 ; 0x00c: 0x9d851cf0

---

> kfbh.check: 2408841459 ; 0x00c: 0x8f9400f3

13,14c13,14

< kfdpHdrPairBv1.first.super.time.lo:340740096 ; 0x004: USEC=0x0 MSEC=0x3d2 SECS=0x4 MINS=0x5

< kfdpHdrPairBv1.first.super.last: 3 ; 0x008: 0x00000003

---

> kfdpHdrPairBv1.first.super.time.lo:106845184 ; 0x004: USEC=0x0 MSEC=0x395 SECS=0x25 MINS=0x1

> kfdpHdrPairBv1.first.super.last: 2 ; 0x008: 0x00000002

104c104

< ub1[0]: 0 ; 0x108: 0x00

---

> ub1[0]: 3 ; 0x108: 0x03

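-- Comparing AU 1 blocks 0 and 1 {KFBTYP_PST_META} on the same disk shows four differences, but only two matter: the block number and ub1[0]. On the other disks, ub1[0] is likewise always either 0 or 3, as these commands show:

kfed read /dev/asmdata8 aun=1 blkn=0 aus=1048576|grep "ub1\[0\]"

kfed read /dev/asmdata8 aun=1 blkn=1 aus=1048576|grep "ub1\[0\]"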
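The kfbh.check difference between block 0 and block 1 can be accounted for exactly by the fields that differ. This is consistent with kfbh.check being a 32-bit XOR over the block's words. It is an inference from the values in the diff above, not documented behaviour. The sketch assumes little-endian words (kfbh.endian = 1) and that ub1[0] is the low byte of a 4-byte-aligned word.

```python
from functools import reduce
from operator import xor

# 32-bit words that differ between AU 1 block 0 and block 1 (values from the diff above)
block0 = {"kfbh.block.blk": 256, "time.lo": 340740096, "last": 3, "word with ub1[0]": 0x00}
block1 = {"kfbh.block.blk": 257, "time.lo": 106845184, "last": 2, "word with ub1[0]": 0x03}
check0, check1 = 0x9D851CF0, 0x8F9400F3

# Under a word-wise XOR checksum, check0 ^ check1 must equal the XOR of all changed words
field_delta = reduce(xor, (block0[k] ^ block1[k] for k in block0))
print(hex(field_delta), hex(check0 ^ check1))  # 0x12111c03 0x12111c03
```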

//pst table block

1) external disk

[root@hisdb01 ~]# kfed read /dev/asmdata1 aun=1 blkn=2 aus=1048576|more

kfbh.endian: 1 ; 0x000: 0x01

kfbh.hard: 130 ; 0x001: 0x82

kfbh.type: 18 ; 0x002: KFBTYP_PST_DTA

kfbh.datfmt: 2 ; 0x003: 0x02

kfbh.block.blk: 258 ; 0x004: blk=258

kfbh.block.obj: 2147483648 ; 0x008: disk=0 -- disk number

kfbh.check: 2182185731 ; 0x00c: 0x82118303

kfbh.fcn.base: 0 ; 0x010: 0x00000000

kfbh.fcn.wrap: 0 ; 0x014: 0x00000000

kfbh.spare1: 0 ; 0x018: 0x00000000

kfbh.spare2: 0 ; 0x01c: 0x00000000

kfdpDtaEv1[0].status: 127 ; 0x000: I=1 V=1 V=1 P=1 P=1 A=1 D=1 -- disk status

kfdpDtaEv1[0].fgNum: 1 ; 0x002: 0x0001 -- failgroup number

kfdpDtaEv1[0].addTs: 2641301605 ; 0x004: 0x9d6f1065 -- time the disk was added to the disk group

kfdpDtaEv1[0].partner[0]: 10000 ; 0x008: P=0 P=0 PART=0x2710 -- partner list (0x2710 here means no partner)

kfdpDtaEv1[0].partner[1]: 0 ; 0x00a: P=0 P=0 PART=0x0

kfdpDtaEv1[0].partner[2]: 0 ; 0x00c: P=0 P=0 PART=0x0

kfdpDtaEv1[0].partner[3]: 0 ; 0x00e: P=0 P=0 PART=0x0

...

kfdpDtaEv1[1].status: 127 ; 0x030: I=1 V=1 V=1 P=1 P=1 A=1 D=1

kfdpDtaEv1[1].fgNum: 2 ; 0x032: 0x0002

kfdpDtaEv1[1].addTs: 2641301605 ; 0x034: 0x9d6f1065

kfdpDtaEv1[1].partner[0]: 10000 ; 0x038: P=0 P=0 PART=0x2710

kfdpDtaEv1[1].partner[1]: 0 ; 0x03a: P=0 P=0 PART=0x0

...

kfdpDtaEv1[83].status: 0 ; 0xf90: I=0 V=0 V=0 P=0 P=0 A=0 D=0

...

2) normal disk (note: block 2 is unused here)

[root@hisdb01 ~]# kfed read /dev/asmdata6 aun=1 blkn=3 aus=1048576|more

...

kfdpDtaEv1[0].status: 127 ; 0x000: I=1 V=1 V=1 P=1 P=1 A=1 D=1

kfdpDtaEv1[0].fgNum: 1 ; 0x002: 0x0001

kfdpDtaEv1[0].addTs: 2641426853 ; 0x004: 0x9d70f9a5

kfdpDtaEv1[0].partner[0]: 49153 ; 0x008: P=1 P=1 PART=0x1 -- partnership: disk 0 -> disk 1

kfdpDtaEv1[0].partner[1]: 10000 ; 0x00a: P=0 P=0 PART=0x2710

...

kfdpDtaEv1[1].status: 127 ; 0x030: I=1 V=1 V=1 P=1 P=1 A=1 D=1

kfdpDtaEv1[1].fgNum: 2 ; 0x032: 0x0002

kfdpDtaEv1[1].addTs: 2641426853 ; 0x034: 0x9d70f9a5

kfdpDtaEv1[1].partner[0]: 49152 ; 0x038: P=1 P=1 PART=0x0

kfdpDtaEv1[1].partner[1]: 10000 ; 0x03a: P=0 P=0 PART=0x2710

...

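The output above shows that disk 0 (kfdpDtaEv1[0]) is in failgroup 1 and has 1 partner disk, PART=0x1 (disk 1). Likewise, disk 1 (kfdpDtaEv1[1]) is in failgroup 2 with partner PART=0x0.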
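kfed prints each partner[] entry as two flag bits plus a partner disk number. Look at the raw values: 49153 = 0xC001, 49152 = 0xC000, 10000 = 0x2710. They suggest that the top two bits of the 16-bit value are the P flags and the low 14 bits are PART. 0x2710 with both flags clear appears to terminate the list. Below is a sketch under those inferred assumptions. The helper names are hypothetical.

```python
END_OF_LIST = 0x2710  # 10000: apparent end-of-list marker (inferred from the dumps)


def decode_partner(value):
    """Split a 16-bit partner[] value into (P, P, PART) as kfed prints it."""
    return (value >> 15) & 1, (value >> 14) & 1, value & 0x3FFF


def partner_list(values):
    """Collect partner disk numbers up to the apparent end-of-list marker."""
    partners = []
    for value in values:
        p1, p2, part = decode_partner(value)
        if p1 == 0 and p2 == 0 and part == END_OF_LIST:
            break
        partners.append(part)
    return partners


for raw in (49153, 49152, 10000):
    p1, p2, part = decode_partner(raw)
    print(raw, f"P={p1} P={p2} PART={part:#x}")
# 49153 P=1 P=1 PART=0x1
# 49152 P=1 P=1 PART=0x0
# 10000 P=0 P=0 PART=0x2710

print(partner_list([49153, 10000, 0]))      # normal, disk 0: [1]
print(partner_list([49153, 49154, 10000]))  # high, disk 0:   [1, 2]
```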

[root@hisdb01 ~]# kfed read dev/asmdata7 aun=1 blkn=3 aus=1048576|more

...

kfdpDtaEv1[0].status: 127 ; 0x000: I=1 V=1 V=1 P=1 P=1 A=1 D=1

kfdpDtaEv1[0].fgNum: 1 ; 0x002: 0x0001

kfdpDtaEv1[0].addTs: 2641426853 ; 0x004: 0x9d70f9a5

kfdpDtaEv1[0].partner[0]: 49153 ; 0x008: P=1 P=1 PART=0x1 -- partnership: disk 0 -> disk 1

kfdpDtaEv1[0].partner[1]: 10000 ; 0x00a: P=0 P=0 PART=0x2710

kfdpDtaEv1[0].partner[2]: 0 ; 0x00c: P=0 P=0 PART=0x0

...

kfdpDtaEv1[1].status: 127 ; 0x030: I=1 V=1 V=1 P=1 P=1 A=1 D=1

kfdpDtaEv1[1].fgNum: 2 ; 0x032: 0x0002

kfdpDtaEv1[1].addTs: 2641426853 ; 0x034: 0x9d70f9a5

kfdpDtaEv1[1].partner[0]: 49152 ; 0x038: P=1 P=1 PART=0x0

kfdpDtaEv1[1].partner[1]: 10000 ; 0x03a: P=0 P=0 PART=0x2710

...

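The second disk holds the same data: disk 0 (kfdpDtaEv1[0]) is in failgroup 1 and has 1 partner disk, PART=0x1.

-- In normal redundancy, block 3 of the PST is essentially identical on both disks; only the disk number and the checksum differ:

[root@hisdb01 trace]# kfed read /dev/asmdata6 aun=1 blkn=3 aus=1048576 text=pstn1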

[root@hisdb01 trace]# kfed read /dev/asmdata7 aun=1 blkn=3 aus=1048576 text=pstn2

[root@hisdb01 trace]# diff pstn1 pstn2

6,7c6,7

< kfbh.block.obj: 2147483648 ; 0x008: disk=0

< kfbh.check: 2182185731 ; 0x00c: 0x82118303

---

> kfbh.block.obj: 2147483649 ; 0x008: disk=1

> kfbh.check: 2182185730 ; 0x00c: 0x82118302

3) high disk (note: block 2 is unused here)

[root@hisdb01 ~]# kfed read /dev/asmdata9 aun=1 blkn=3 aus=1048576|more

...

kfdpDtaEv1[0].status: 127 ; 0x000: I=1 V=1 V=1 P=1 P=1 A=1 D=1 -- disk status

kfdpDtaEv1[0].fgNum: 1 ; 0x002: 0x0001 -- failgroup number

kfdpDtaEv1[0].addTs: 2641425285 ; 0x004: 0x9d70f385

kfdpDtaEv1[0].partner[0]: 49153 ; 0x008: P=1 P=1 PART=0x1 -- partnership: disk 0 -> disk 1

kfdpDtaEv1[0].partner[1]: 49154 ; 0x00a: P=1 P=1 PART=0x2 -- partnership: disk 0 -> disk 2

kfdpDtaEv1[0].partner[2]: 10000 ; 0x00c: P=0 P=0 PART=0x2710 -- in short, disk 0 is partnered with disks 1 and 2

...

kfdpDtaEv1[1].status: 127 ; 0x030: I=1 V=1 V=1 P=1 P=1 A=1 D=1

kfdpDtaEv1[1].fgNum: 2 ; 0x032: 0x0002

kfdpDtaEv1[1].addTs: 2641425285 ; 0x034: 0x9d70f385

kfdpDtaEv1[1].partner[0]: 49152 ; 0x038: P=1 P=1 PART=0x0 -- partnership: disk 1 -> disk 0

kfdpDtaEv1[1].partner[1]: 49154 ; 0x03a: P=1 P=1 PART=0x2 -- partnership: disk 1 -> disk 2

kfdpDtaEv1[1].partner[2]: 10000 ; 0x03c: P=0 P=0 PART=0x2710 -- in short, disk 1 is partnered with disks 0 and 2

...

kfdpDtaEv1[2].status: 127 ; 0x060: I=1 V=1 V=1 P=1 P=1 A=1 D=1

kfdpDtaEv1[2].fgNum: 3 ; 0x062: 0x0003

kfdpDtaEv1[2].addTs: 2641425285 ; 0x064: 0x9d70f385

kfdpDtaEv1[2].partner[0]: 49152 ; 0x068: P=1 P=1 PART=0x0 -- partnership: disk 2 -> disk 0

kfdpDtaEv1[2].partner[1]: 49153 ; 0x06a: P=1 P=1 PART=0x1 -- partnership: disk 2 -> disk 1

kfdpDtaEv1[2].partner[2]: 10000 ; 0x06c: P=0 P=0 PART=0x2710 -- in short, disk 2 is partnered with disks 0 and 1

-- The same block on the other two disks has the same contents, so it is not repeated here:

[root@hisdb01 ~]# kfed read /dev/asmdata10 aun=1 blkn=3 aus=1048576|more

[root@hisdb01 ~]# kfed read /dev/asmdata11 aun=1 blkn=3 aus=1048576|more

-- In high redundancy, block 3 of the PST is essentially identical on all three disks; only the disk number and the checksum differ:

[root@hisdb01 trace]# kfed read /dev/asmdata9 aun=1 blkn=3 aus=1048576 text=psth1

[root@hisdb01 trace]# kfed read /dev/asmdata10 aun=1 blkn=3 aus=1048576 text=psth2

[root@hisdb01 trace]# kfed read /dev/asmdata11 aun=1 blkn=3 aus=1048576 text=psth3

[root@hisdb01 trace]# diff psth1 psth2

6,7c6,7

< kfbh.block.obj: 2147483648 ; 0x008: disk=0

< kfbh.check: 3747846121 ; 0x00c: 0xdf6397e9

---

> kfbh.block.obj: 2147483649 ; 0x008: disk=1

> kfbh.check: 3747846120 ; 0x00c: 0xdf6397e8

[root@hisdb01 trace]# diff psth1 psth3

6,7c6,7

< kfbh.block.obj: 2147483648 ; 0x008: disk=0

< kfbh.check: 3747846121 ; 0x00c: 0xdf6397e9

---

> kfbh.block.obj: 2147483650 ; 0x008: disk=2

> kfbh.check: 3747846123 ; 0x00c: 0xdf6397eb
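The diffs show kfbh.block.obj as 2147483648, 2147483649 and 2147483650 for disks 0, 1 and 2. That is 0x80000000 plus the disk number. The checksums differ by exactly the same bits as obj. The sketch below decodes obj under that inferred layout and checks the relationship for the three WX_HIGH disks. `decode_obj` is a hypothetical helper.

```python
def decode_obj(obj):
    """Disk number from kfbh.block.obj for these PST blocks (inferred: 0x80000000 | disk)."""
    if not obj & 0x80000000:
        raise ValueError(f"high bit not set in {obj:#x}")
    return obj & 0x7FFFFFFF


# (kfbh.block.obj, kfbh.check) of AU 1 block 3 on the WX_HIGH disks, from the diffs above
high = [(2147483648, 0xDF6397E9), (2147483649, 0xDF6397E8), (2147483650, 0xDF6397EB)]
base_obj, base_check = high[0]
for obj, check in high:
    # Every other field is identical, so only obj contributes to the checksum difference
    print(decode_obj(obj), hex(obj ^ base_obj), hex(check ^ base_check))
# 0 0x0 0x0
# 1 0x1 0x1
# 2 0x2 0x2
```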

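4. PST relocation

The PST is reallocated in the following cases:

● The disk holding a PST copy is unavailable (at ASM instance startup)

● A disk goes offline

● An I/O error occurs while reading or writing the PST

● A disk is dropped gracefully (manually or by a background process)

In these cases, if another disk in the same failgroup is available, the PST copy is moved to that disk. Otherwise, it is moved to another failgroup that does not yet hold a PST copy.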
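The relocation rule can be modelled as a small selection function. This is a hypothetical model of the rule as stated, not ASM's implementation. `relocate_pst` is an invented name. Picking the lowest-numbered candidate is an assumption made only to keep the result deterministic.

```python
def relocate_pst(lost, disk_fg, pst_disks, available):
    """Choose a new disk for the PST copy that lived on `lost` (hypothetical model).

    disk_fg:   dict disk number -> failgroup name
    pst_disks: set of disks currently holding a PST copy
    available: set of disks that are usable
    1. Prefer another available disk in the same failgroup.
    2. Otherwise use an available disk in a failgroup holding no PST copy.
    Returns None when no candidate exists.
    """
    remaining = pst_disks - {lost}
    candidates = sorted(d for d in available - {lost} if d not in remaining)
    same_fg = [d for d in candidates if disk_fg[d] == disk_fg[lost]]
    if same_fg:
        return same_fg[0]
    used_fgs = {disk_fg[d] for d in remaining}
    other_fg = [d for d in candidates if disk_fg[d] not in used_fgs]
    return other_fg[0] if other_fg else None


# The example below: WX_NOR with one disk per failgroup, PST on disks 0-2, disk 0 dropped
fgs = {0: "FG1", 1: "FG2", 2: "FG3", 3: "FG4"}
print(relocate_pst(0, fgs, {0, 1, 2}, {1, 2, 3}))  # 3
```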

Example:

create diskgroup wx_nor normal redundancy failgroup fg1 disk '/dev/asmdata6' failgroup fg2 disk '/dev/asmdata7' failgroup fg3 disk '/dev/asmdata8' failgroup fg4 disk '/dev/asmdata9'

attribute 'au_size'='1M','compatible.asm'='11.2','compatible.rdbms'='11.2';

// Check the alert log: /u01/app/grid/diag/asm/+asm/+ASM1/trace/alert_+ASM1.log

The alert log shows the PST copies placed on disks 0, 1 and 2:

NOTE: Assigning number (5,0) to disk (/dev/asmdata6)

NOTE: Assigning number (5,1) to disk (/dev/asmdata7)

NOTE: Assigning number (5,2) to disk (/dev/asmdata8)

NOTE: Assigning number (5,3) to disk (/dev/asmdata9)

...

NOTE: group WX_NOR: initial PST location: disk 0000 (PST copy 0)

NOTE: group WX_NOR: initial PST location: disk 0001 (PST copy 1)

NOTE: group WX_NOR: initial PST location: disk 0002 (PST copy 2)

NOTE: PST update grp = 5 completed successfully

Drop disk 0:

alter diskgroup wx_nor drop disk wx_nor_0000;

The alert log shows the PST copy moving from disk 0 to disk 3; the copies now live on disks 1, 2 and 3:

NOTE: initiating PST update: grp = 5, dsk = 0/0xe9699ecf, mask = 0x6a, op = clear

GMON updating disk modes for group 5 at 106 for pid 34, osid 117868

NOTE: group WX_NOR: updated PST location: disk 0001 (PST copy 0)

NOTE: group WX_NOR: updated PST location: disk 0002 (PST copy 1)

NOTE: group WX_NOR: updated PST location: disk 0003 (PST copy 2)

NOTE: PST update grp = 5 completed successfully

NOTE: initiating PST update: grp = 5, dsk = 0/0xe9699ecf, mask = 0x7e, op = clear

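5. Tracing the PST through GMON

Every time a disk group mount is attempted, GMON writes the PST information to its trace file. Note that I said attempted, not completed: GMON records this information whether or not the mount succeeds. This can make it valuable to Oracle Support when diagnosing disk group mount failures.

Below is a typical GMON trace record of a disk group mount. First, locate the GMON process and its trace file: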

[root@hisdb01 trace]# ps -ef|grep gmon

grid 11594 1 0 May22 ? 00:01:41 asm_gmon_+ASM1

[root@hisdb01 trace]# ll /proc/11594/fd|grep trc

l-wx------ 1 grid oinstall 64 May 24 14:53 26 -> /u01/app/grid/diag/asm/+asm/+ASM1/trace/+ASM1_gmon_11594.trc

=============== PST ==================== -- this section shows the disk group number (grpNum) and its state

grpNum: 5

state: 1

callCnt: 112

(lockvalue) valid=0 ver=1.1 ndisks=0 flags=0x2 from inst=1 (I am 1) last=7

--------------- HDR -------------------- -- this section shows the number of PST copies (pst count) and the disks that hold them

next: 7

last: 7

pst count: 3 -- number of PST copies

pst locations: 1 2 3 -- on disks 1, 2 and 3

incarn: 4

dta size: 4 -- 4 disk entries

version: 1

ASM version: 186646528 = 11.2.0.0.0

contenttype: 1

partnering pattern: [ ]

--------------- LOC MAP ----------------

0: dirty 0 cur_loc: 0 stable_loc: 0

1: dirty 0 cur_loc: 0 stable_loc: 0

--------------- DTA -------------------- -- this section shows the status of the disks holding the PST

1: sts v v(rw) p(rw) a(x) d(x) fg# = 2 addTs = 2641431428 parts: 2 (amp) 3 (amp) -- all online, i.e. status 127; an offline disk would show v v(--) p(--) a(--) d(--)

2: sts v v(rw) p(rw) a(x) d(x) fg# = 3 addTs = 2641431428 parts: 1 (amp) 3 (amp)

3: sts v v(rw) p(rw) a(x) d(x) fg# = 4 addTs = 2641431428 parts: 1 (amp) 2 (amp)

--------------- HBEAT ------------------

kfdpHbeat_dump: state=3, inst=1, ts=33150737.622478336,

rnd=1802800170.600557930.3201202811.400069086.

kfk io-queue: 0x7f5478e41040

kfdpHbeatCB_dump: at 0x7f5478e413c0 with ts=05/24/2023 17:09:16 iop=0x7f5478e413d0, grp=5, disk=1/3916013273, isWrite=1 Hbeat #56 state=2 iostate=4

kfdpHbeatCB_dump: at 0x7f5478e411f8 with ts=05/24/2023 17:09:16 iop=0x7f5478e41208, grp=5, disk=2/3916013272, isWrite=1 Hbeat #56 state=2 iostate=4

kfdpHbeatCB_dump: at 0x7f5478e41030 with ts=05/24/2023 17:09:16 iop=0x7f5478e41040, grp=5, disk=3/3916013271, isWrite=1 Hbeat #56 state=2 iostate=4

--------------- KFGRP ------------------

kfgrp: WX_NOR number: 5/1436118858 type: 2 compat: 11.2.0.0.0 dbcompat:1.0.0.0.1

timestamp: 447636825 state: 4 flags: 10 gpnlist: 9ef826a0 9ef826a0

KFGPN at 9ef825e0 in dependent chain

kfdsk:0x9ef739f8

disk: WX_NOR_0001 num: 1/7084547265882857177 grp: 5/7084547263402962762 compat: 11.2.0.0.0 dbcompat:11.2.0.0.0

fg: FG2 path: /dev/asmdata7

mnt: O hdr: M mode: v v(rw) p(rw) a(x) d(x) sta: N flg: 1001

slot 65535 ddeslot 65535 numslots 65535 dtype 0 enc 0 part 0 flags 0

kfts: 2023/05/24 16:46:04.091000

kfts: 2023/05/24 16:46:12.306000

pcnt: 2 (2 3)

kfkid: 0x9efa19d8, kfknm: , status: IDENTIFIED

fob: (KSFD)a0805ae0, magic: bebe ausize: 1048576

kfdds: dn=1 inc=3916013273 dsk=0x9ef739f8 usrp=(nil)

kfkds 0x7f5478f87040, kfkid 0x9efa19d8, magic abbe, libnum 0, bpau 2048, fob 0xa08066d8

kfdsk:0x9ef732e0

disk: WX_NOR_0002 num: 2/7084547265882857176 grp: 5/7084547263402962762 compat: 11.2.0.0.0 dbcompat:11.2.0.0.0

fg: FG3 path: /dev/asmdata8

mnt: O hdr: M mode: v v(rw) p(rw) a(x) d(x) sta: N flg: 1001

slot 65535 ddeslot 65535 numslots 65535 dtype 0 enc 0 part 0 flags 0

kfts: 2023/05/24 16:46:04.091000

kfts: 2023/05/24 16:46:12.306000

pcnt: 2 (1 3)

kfkid: 0x9efa11f0, kfknm: , status: IDENTIFIED

fob: (KSFD)a0803f48, magic: bebe ausize: 1048576

kfdds: dn=2 inc=3916013272 dsk=0x9ef732e0 usrp=(nil)

kfkds 0x7f5478f86f98, kfkid 0x9efa11f0, magic abbe, libnum 0, bpau 2048, fob 0xa0806808

kfdsk:0x9ef72f60

disk: WX_NOR_0003 num: 3/7084547265882857175 grp: 5/7084547263402962762 compat: 11.2.0.0.0 dbcompat:11.2.0.0.0

fg: FG4 path: /dev/asmdata9

mnt: O hdr: M mode: v v(rw) p(rw) a(x) d(x) sta: N flg: 1001

slot 65535 ddeslot 65535 numslots 65535 dtype 0 enc 0 part 0 flags 0

kfts: 2023/05/24 16:46:04.091000

kfts: 2023/05/24 16:46:12.306000

pcnt: 2 (1 2)

kfkid: 0x9efa0e08, kfknm: , status: IDENTIFIED

fob: (KSFD)a0803e18, magic: bebe ausize: 1048576

kfdds: dn=3 inc=3916013271 dsk=0x9ef72f60 usrp=(nil)

kfkds 0x7f5478f86cf8, kfkid 0x9efa0e08, magic abbe, libnum 0, bpau 2048, fob 0xa0806938

*** 2023-05-24 17:09:23.687

GMON querying group 5 at 114 for pid 29, osid 9927
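When comparing several GMON traces, it can help to turn the HDR and DTA sections into data. This sketch assumes the line formats shown in the trace above (`pst locations: ...` and `n: sts ... fg# = ... parts: ...`). The function names are hypothetical, and other versions may format these lines differently.

```python
import re

# DTA line, e.g. "1: sts v v(rw) p(rw) a(x) d(x) fg# = 2 addTs = 2641431428 parts: 2 (amp) 3 (amp)"
DTA_LINE = re.compile(r"^\s*(\d+): sts .*?fg# = (\d+) addTs = \d+ parts:(.*)$")


def parse_pst_locations(text):
    """Disk numbers listed on the HDR 'pst locations:' line."""
    m = re.search(r"pst locations:([\d ]+)", text)
    return [int(d) for d in m.group(1).split()] if m else []


def parse_dta(text):
    """Map disk number -> (failgroup number, partner disk numbers)."""
    disks = {}
    for line in text.splitlines():
        m = DTA_LINE.match(line)
        if m:
            partners = [int(p) for p in re.findall(r"(\d+) \(", m.group(3))]
            disks[int(m.group(1))] = (int(m.group(2)), partners)
    return disks


trace = """\
pst count: 3
pst locations: 1 2 3
1: sts v v(rw) p(rw) a(x) d(x) fg# = 2 addTs = 2641431428 parts: 2 (amp) 3 (amp)
2: sts v v(rw) p(rw) a(x) d(x) fg# = 3 addTs = 2641431428 parts: 1 (amp) 3 (amp)
3: sts v v(rw) p(rw) a(x) d(x) fg# = 4 addTs = 2641431428 parts: 1 (amp) 2 (amp)
"""
print(parse_pst_locations(trace))  # [1, 2, 3]
print(parse_dta(trace))            # {1: (2, [2, 3]), 2: (3, [1, 3]), 3: (4, [1, 2])}
```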

6. Tracing GMON with strace

Drop a disk from a disk group and watch what GMON does:

// Session 1

SQL> select a.name diskgroup_name,a.type,a.allocation_unit_size/1024/1024 unit_mb,a.voting_files,b.name disk_name,b.path from v$asm_diskgroup a,v$asm_disk b where a.group_number=b.group_number;

DISKGROUP_NAME TYPE UNIT_MB VO DISK_NAME PATH

--------------- ------------ ---------- -- ------------------------------ ----------------------------------------

DATA EXTERN 1 N DATA_0001 /dev/asmdata2

DATA EXTERN 1 N DATA_0000 /dev/asmdata1

FRA EXTERN 1 N FRA_0001 /dev/asmdisk4

FRA EXTERN 1 N FRA_0000 /dev/asmdisk6

OCR NORMAL 4 Y OCR_0001 /dev/asmdisk2

OCR NORMAL 4 Y OCR_0002 /dev/asmdisk3

OCR NORMAL 4 Y OCR_0000 /dev/asmdisk1

WX_EXT EXTERN 1 N WX_EXT_0001 /dev/asmdata3

WX_EXT EXTERN 1 N WX_EXT_0000 /dev/asmdisk5

WX_NOR NORMAL 1 N WX_NOR_0000 /dev/asmdata6

WX_NOR NORMAL 1 N WX_NOR_0001 /dev/asmdata7

WX_NOR NORMAL 1 N WX_NOR_0002 /dev/asmdata8

12 rows selected.

// Session 2

[root@hisdb01 ~]# ps -ef|grep gmon

grid 11594 1 0 May22 ? 00:02:36 asm_gmon_+ASM1

root 95167 70455 0 10:49 pts/3 00:00:00 grep --color=auto gmon

[root@hisdb01 ~]# strace -fr -o gmon.txt -p 11594

strace: Process 11594 attached

// Session 1

SQL> alter diskgroup wx_nor drop disk WX_NOR_0001;

Diskgroup altered.

Analyze the generated trace:

[root@hisdb01 ~]# ll /proc/11594/fd|egrep "267|265|266"

lrwx------ 1 grid oinstall 64 May 24 15:09 265 -> /dev/asmdata6

lrwx------ 1 grid oinstall 64 May 24 15:09 266 -> /dev/asmdata7

lrwx------ 1 grid oinstall 64 May 24 15:09 267 -> /dev/asmdata8

[root@hisdb01 ~]# cat gmon.txt |egrep "fildes=267|fildes=266"

11594 0.000256 io_submit(140000838344704, 3, [{data=0x7f5478d800b0, pwrite, fildes=267, str="\1\202\23\2\377\1\0\0\2\0\0\200\36\347\3501\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=2093056}, {data=0x7f5478d7f960, pwrite, fildes=266, str="\1\202\23\2\377\1\0\0\1\0\0\200\35\347\3501\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=2093056}, {data=0x7f5478d7f210, pwrite, fildes=265, str="\1\202\23\2\377\1\0\0\0\0\0\200\34\347\3501\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=2093056}]) = 3 -- heartbeat

11594 0.000322 io_submit(140000838344704, 3, [{data=0x7f5478d800b0, pwrite, fildes=267, str="\1\202\23\2\377\1\0\0\2\0\0\200 \321\35\314\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=2093056}, {data=0x7f5478d7f210, pwrite, fildes=266, str="\1\202\23\2\377\1\0\0\1\0\0\200#\321\35\314\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=2093056}, {data=0x7f5478d7f960, pwrite, fildes=265, str="\1\202\23\2\377\1\0\0\0\0\0\200\"\321\35\314\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=2093056}]) = 3

11594 0.000607 io_submit(140000838344704, 3, [{data=0x7f5478d800b0, pwrite, fildes=267, str="\1\202\21\2\1\1\0\0\2\0\0\200*\2153\270\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=1052672}, {data=0x7f5478d7f210, pwrite, fildes=266, str="\1\202\21\2\1\1\0\0\1\0\0\200)\2153\270\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=1052672}, {data=0x7f5478d7f960, pwrite, fildes=265, str="\1\202\21\2\1\1\0\0\0\0\0\200(\2153\270\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=1052672}]) = 3

11594 0.000616 io_submit(140000838344704, 3, [{data=0x7f5478d800b0, pwrite, fildes=267, str="\1\202\22\2\2\1\0\0\2\0\0\200p\307a\337\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=1056768}, {data=0x7f5478d7f210, pwrite, fildes=266, str="\1\202\22\2\2\1\0\0\1\0\0\200s\307a\337\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=1056768}, {data=0x7f5478d7f960, pwrite, fildes=265, str="\1\202\22\2\2\1\0\0\0\0\0\200r\307a\337\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=1056768}]) = 3

11594 0.000272 io_submit(140000838344704, 3, [{data=0x7f5478d800b0, pwrite, fildes=267, str="\1\202\21\2\0\1\0\0\2\0\0\200)\1\377A\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=1048576}, {data=0x7f5478d7f210, pwrite, fildes=266, str="\1\202\21\2\0\1\0\0\1\0\0\200*\1\377A\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=1048576}, {data=0x7f5478d7f960, pwrite, fildes=265, str="\1\202\21\2\0\1\0\0\0\0\0\200+\1\377A\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=1048576}]) = 3

11594 0.000732 io_submit(140000838344704, 3, [{data=0x7f5478d800b0, pwrite, fildes=267, str="\1\202\23\2\377\1\0\0\2\0\0\200\324g\255\254\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=2093056}, {data=0x7f5478d7f210, pwrite, fildes=266, str="\1\202\23\2\377\1\0\0\1\0\0\200\327g\255\254\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=2093056}, {data=0x7f5478d7f960, pwrite, fildes=265, str="\1\202\23\2\377\1\0\0\0\0\0\200\326g\255\254\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=2093056}]) = 3

11594 0.000943 io_submit(140000838344704, 3, [{data=0x7f5478d800b0, pwrite, fildes=267, str="\1\202\23\2\377\1\0\0\2\0\0\200\363v\252\251\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=2093056}, {data=0x7f5478d7f210, pwrite, fildes=266, str="\1\202\23\2\377\1\0\0\1\0\0\200\360v\252\251\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=2093056}, {data=0x7f5478d7f960, pwrite, fildes=265, str="\1\202\23\2\377\1\0\0\0\0\0\200\361v\252\251\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0"..., nbytes=4096, offset=2093056}]) = 3


SQL> select 1048576/4096,1056768/4096,1052672/4096,2093056/4096 from dual;

1048576/4096 1056768/4096 1052672/4096 2093056/4096

------------ ------------ ------------ ------------

256 258 257 511

-- As shown above, GMON wrote (pwrite) blocks 256, 257, 258 and 511 on all three devices. What do these blocks contain?
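The SQL division above can be taken one step further, mapping each io_submit offset to an AU and a block within it. This sketch assumes the 1 MB AU used by WX_NOR and the 4 KB metadata block size seen in the `nbytes=4096` writes. `locate` is a hypothetical helper.

```python
AU_SIZE = 1048576   # 'au_size'='1M' for WX_NOR
BLOCK_SIZE = 4096   # metadata block size (nbytes=4096 in the io_submit calls)


def locate(offset):
    """Map a byte offset on an ASM disk to (absolute block, AU number, block within AU)."""
    block = offset // BLOCK_SIZE
    blocks_per_au = AU_SIZE // BLOCK_SIZE
    return block, block // blocks_per_au, block % blocks_per_au


for off in (1048576, 1052672, 1056768, 2093056):
    blk, aun, blkn = locate(off)
    print(f"offset {off}: block {blk} = AU {aun} block {blkn}")
# offset 1048576: block 256 = AU 1 block 0
# offset 1052672: block 257 = AU 1 block 1
# offset 1056768: block 258 = AU 1 block 2
# offset 2093056: block 511 = AU 1 block 255
```

All of these writes therefore land in AU 1, the AU reserved for the PST. Block 511 is the last block of that AU.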

[root@hisdb01 ~]# kfed read /dev/asmdata6 blkn=256|grep type

kfbh.type: 17 ; 0x002: KFBTYP_PST_META

[root@hisdb01 ~]# kfed read /dev/asmdata6 blkn=257|grep type

kfbh.type: 17 ; 0x002: KFBTYP_PST_META

[root@hisdb01 ~]# kfed read /dev/asmdata6 blkn=258|grep type

kfbh.type: 18 ; 0x002: KFBTYP_PST_DTA

[root@hisdb01 ~]# kfed read /dev/asmdata6 blkn=511|grep type

kfbh.type: 19 ; 0x002: KFBTYP_HBEAT

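KFBTYP_HBEAT is the ASM disk heartbeat block. Oracle uses it to determine whether a disk belongs to a disk group, which prevents the disk from being mounted by another disk group. My guess is that Oracle computes this value with a hash-like algorithm; the value in question is the kfbh.check field. In normal operation, kfbh.check changes every few seconds: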

[root@hisdb01 ~]# kfed read /dev/asmdata6 blkn=511|grep che

kfbh.check: 4290101644 ; 0x00c: 0xffb5c18c

[root@hisdb01 ~]# kfed read /dev/asmdata6 blkn=511|grep che

kfbh.check: 3533215339 ; 0x00c: 0xd298966b

[root@hisdb01 ~]# kfed read /dev/asmdata6 blkn=511|grep che

kfbh.check: 1100204834 ; 0x00c: 0x4193cb22