Environment:

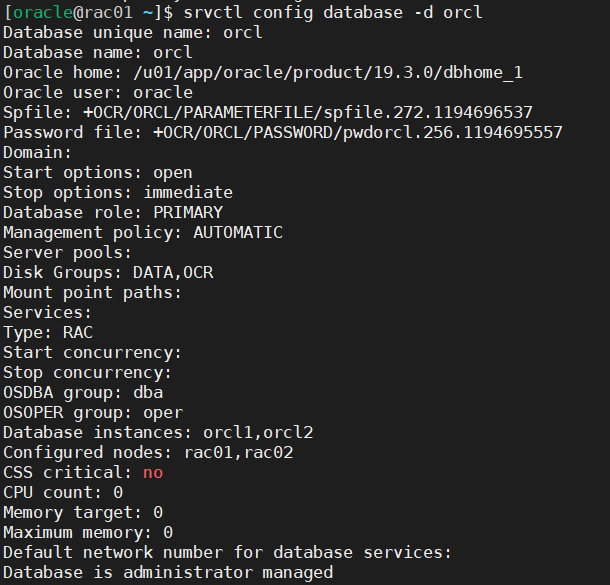

[oracle@rac01 ~]$ srvctl config database -d orcl

Database unique name: orcl

Database name: orcl

Oracle home: /u01/app/oracle/product/19.3.0/dbhome_1

Oracle user: oracle

Spfile: +OCR/ORCL/PARAMETERFILE/spfile.272.1194696537

Password file: +OCR/ORCL/PASSWORD/pwdorcl.256.1194695557

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools:

Disk Groups: DATA,OCR

Mount point paths:

Services:

Type: RAC

Start concurrency:

Stop concurrency:

OSDBA group: dba

OSOPER group: oper

Database instances: orcl1,orcl2

Configured nodes: rac01,rac02

CSS critical: no

CPU count: 0

Memory target: 0

Maximum memory: 0

Default network number for database services:

Database is administrator managed

Deleting node rac01

1.1 Delete the database instance on the node

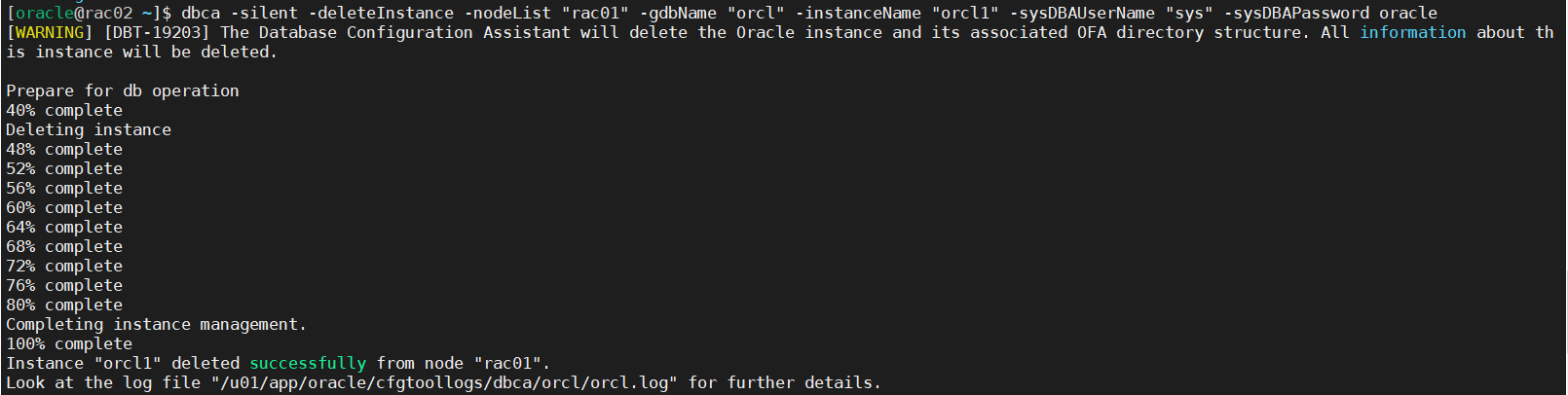

Option 1: as the oracle user on a node that is being kept, delete the instance with DBCA in silent mode:

$ dbca -silent -deleteInstance -nodeList "rac01" -gdbName "orcl" -instanceName "orcl1" -sysDBAUserName "sys" -sysDBAPassword oracle

Option 2: as the oracle user on a node that is being kept, run dbca and select:

Oracle RAC database instance management -> Delete an instance

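Check the configuration. After the deletion, orcl1 and rac01 should no longer appear under Database instances and Configured nodes: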

[oracle]$ srvctl config database -d orcl

Type: RAC

Database instances: orcl1,orcl2

Configured nodes: rac01,rac02

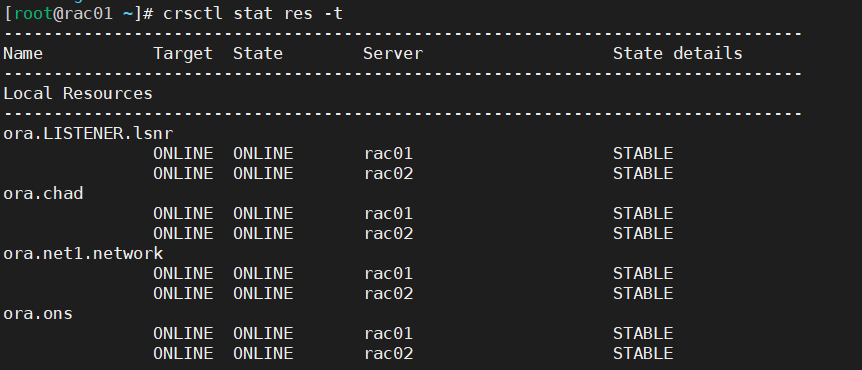

[grid]$ crsctl status res -t
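The configuration check above can be scripted. The following is a minimal sketch, not part of Oracle's tooling: `node_still_configured` is a hypothetical helper name, and it assumes the `Configured nodes: rac01,rac02` line format shown in the `srvctl config database` output.

```shell
# Hypothetical helper (not an Oracle tool): reads the output of
# `srvctl config database -d <db>` on stdin and exits 0 if NODE is still
# listed on the "Configured nodes:" line, 1 otherwise.
node_still_configured() {
  awk -v node="$1" '
    /^Configured nodes:/ {
      sub(/^Configured nodes:/, "")   # keep only the node list
      gsub(/[ \t\r]/, "")             # drop blanks and stray carriage returns
      n = split($0, list, ",")
      for (i = 1; i <= n; i++) if (list[i] == node) found = 1
    }
    END { exit (found ? 0 : 1) }
  '
}
```

For example: `srvctl config database -d orcl | node_still_configured rac01 && echo "rac01 is still configured"`.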

1.2 Remove the Database software from the node

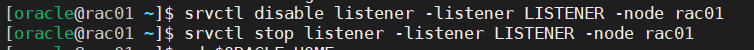

Disable and stop the listener on the node to be deleted:

[grid]$ srvctl disable listener -listener LISTENER -node rac01

[grid]$ srvctl stop listener -listener LISTENER -node rac01
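To confirm that the listener is no longer running on rac01, a small filter over `srvctl status listener` output can help. This is a sketch that assumes the status line looks like `Listener LISTENER is running on node(s): rac01,rac02`; the function name is hypothetical.

```shell
# Hypothetical helper: reads `srvctl status listener` output on stdin and
# exits 0 if NODE is absent from the "running on node(s):" list, 1 if present.
listener_stopped_on() {
  awk -v node="$1" '
    /running on node[(]s[)]:/ {
      sub(/.*running on node[(]s[)]:/, "")   # keep only the node list
      gsub(/[ \t\r]/, "")
      n = split($0, list, ",")
      for (i = 1; i <= n; i++) if (list[i] == node) running = 1
    }
    END { exit (running ? 1 : 0) }
  '
}
```

For example: `srvctl status listener -listener LISTENER | listener_stopped_on rac01 && echo "LISTENER is down on rac01"`.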

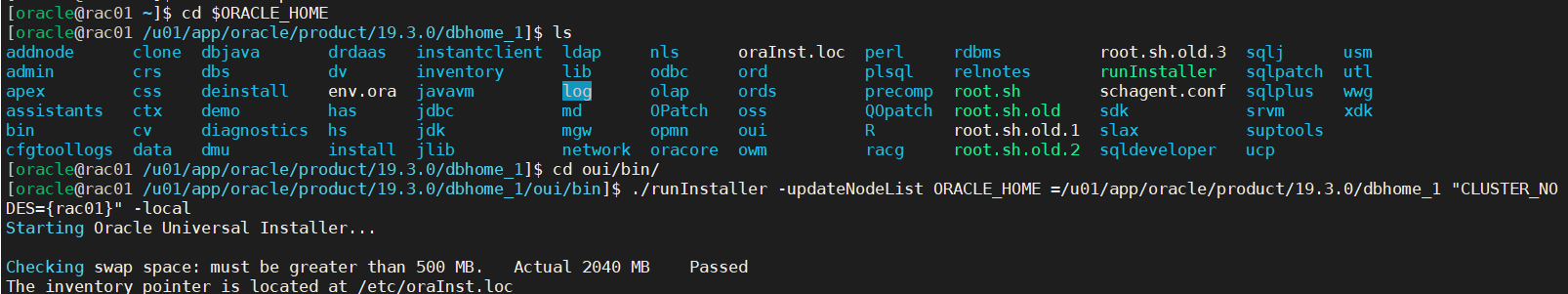

On the node to be deleted, run the following to update the inventory:

[oracle]$ cd $ORACLE_HOME/oui/bin

[oracle]$ ./runInstaller -updateNodeList ORACLE_HOME=/u01/app/oracle/product/19.3.0/dbhome_1 "CLUSTER_NODES={rac01}" -local

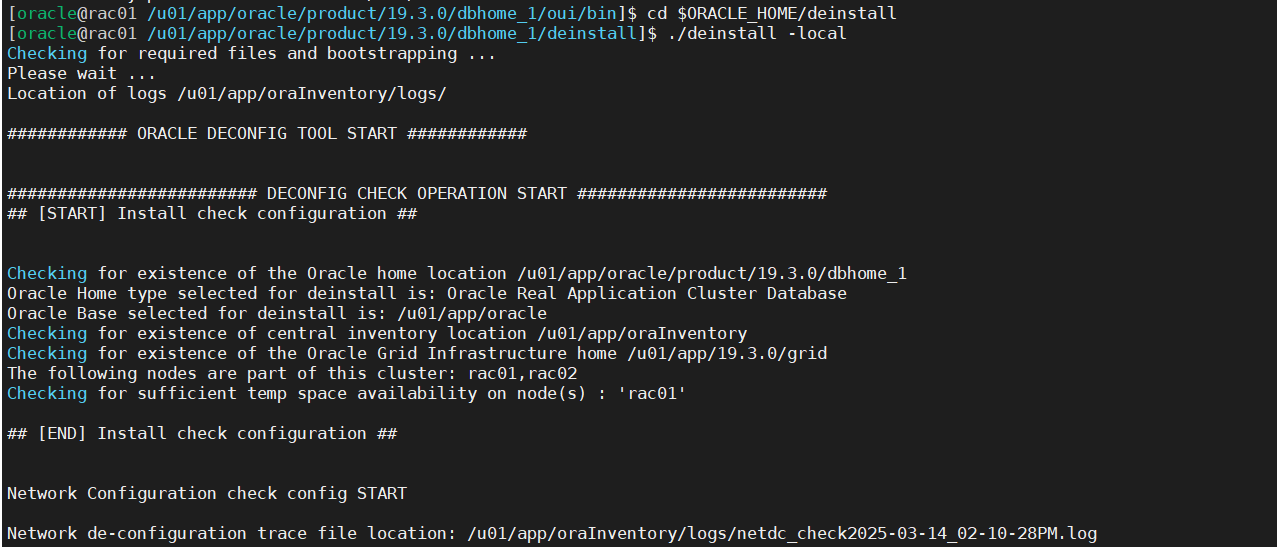

Remove the RAC database home from the node to be deleted (run on that node):

[oracle]$ cd $ORACLE_HOME/deinstall

[oracle]$ ./deinstall -local

Specify the list of database names that are configured locally on this node for this Oracle home. Local configurations of the discovered databases will be removed []: press Enter (by default the tool reads the current ORACLE_HOME)

Do you want to continue (y - yes, n - no)? [n]: y

The $ORACLE_BASE/admin directory is left in place and can be removed manually.

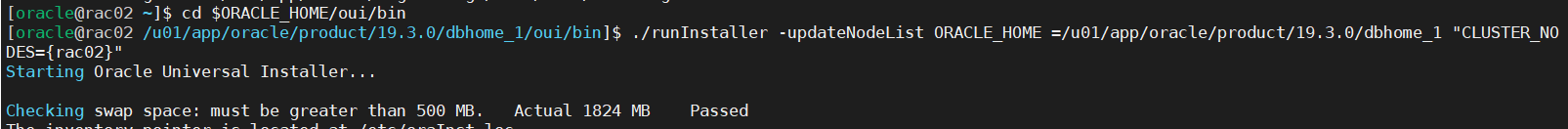

On every remaining node in the cluster, run the following to update the inventory:

[oracle]$ cd $ORACLE_HOME/oui/bin

[oracle]$ ./runInstaller -updateNodeList ORACLE_HOME=/u01/app/oracle/product/19.3.0/dbhome_1 "CLUSTER_NODES={rac02}"
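With more than one remaining node, CLUSTER_NODES takes a comma-separated list, for example {rac02,rac03}. A hypothetical helper that builds the argument from node names, as a sketch:

```shell
# Hypothetical helper: joins the node names given as arguments into the
# "CLUSTER_NODES={node1,node2,...}" argument used by runInstaller -updateNodeList.
cluster_nodes_arg() {
  local IFS=,                          # "$*" joins arguments with the first IFS character
  printf 'CLUSTER_NODES={%s}\n' "$*"
}
```

For example: `./runInstaller -updateNodeList ORACLE_HOME=/u01/app/oracle/product/19.3.0/dbhome_1 "$(cluster_nodes_arg rac02)"`.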

1.3 Remove the Clusterware software from the node

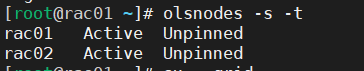

Check whether the node to be deleted is pinned:

[grid]$ olsnodes -s -t

If it is reported as Pinned, run the following commands as root; if it is Unpinned, skip this step:

[root]# cd /u01/app/19.3.0/grid/bin

[root]# ./crsctl unpin css -n rac01
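The pinned check can be automated by reading the `olsnodes -s -t` output, whose columns are node name, status (Active/Inactive) and pin state (Pinned/Unpinned). The function below is a hypothetical sketch, not an Oracle utility.

```shell
# Hypothetical helper: reads `olsnodes -s -t` output on stdin and exits 0
# if NODE is listed with pin state "Pinned" in the third column, 1 otherwise.
node_is_pinned() {
  awk -v node="$1" '
    $1 == node && $3 == "Pinned" { found = 1 }
    END { exit (found ? 0 : 1) }
  '
}
```

For example: `olsnodes -s -t | node_is_pinned rac01 && echo "rac01 is pinned: run crsctl unpin css -n rac01 as root"`.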

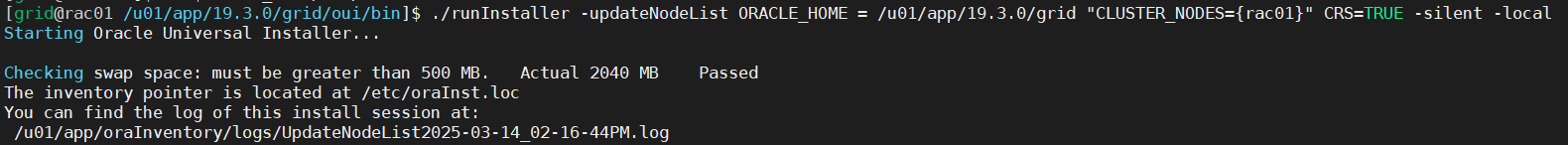

On the node to be deleted, run the following as grid to update the inventory:

[grid]$ cd $ORACLE_HOME/oui/bin

[grid]$ ./runInstaller -updateNodeList ORACLE_HOME=/u01/app/19.3.0/grid "CLUSTER_NODES={rac01}" CRS=TRUE -silent -local

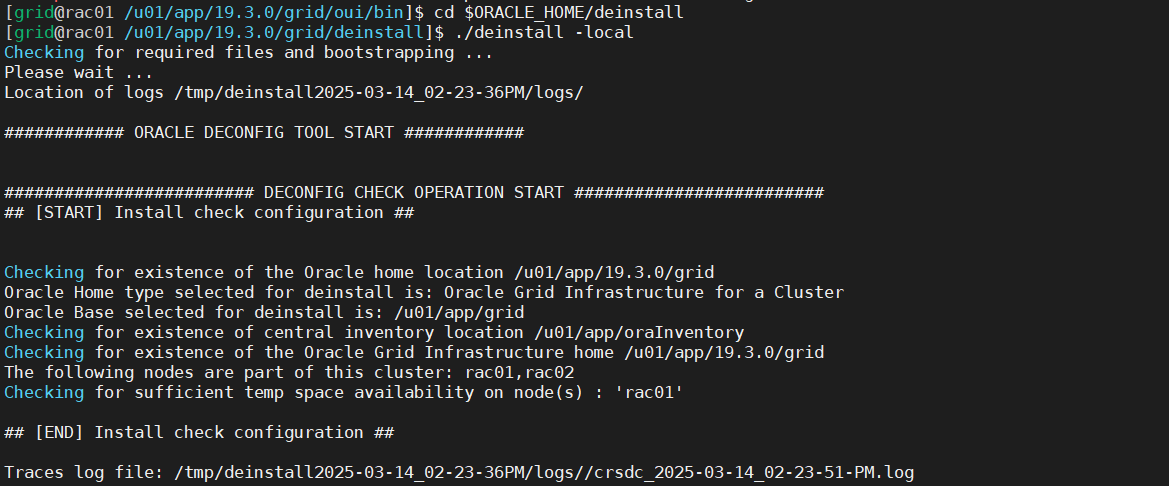

Remove the RAC Grid home from the node to be deleted (run on that node):

[grid]$ cd $ORACLE_HOME/deinstall

[grid]$ ./deinstall -local

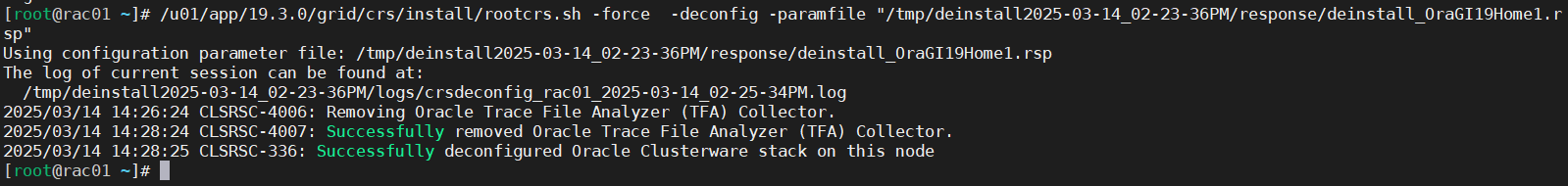

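The tool prompts you to run the rootcrs.sh script as root:

/u01/app/19.3.0/grid/crs/install/rootcrs.sh -force -deconfig -paramfile "/tmp/deinstall2025-03-14_02-23-36PM/response/deinstall_OraGI19Home1.rsp"

Run it in a separate terminal session; when it finishes, return to the original session and press Enter to continue.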

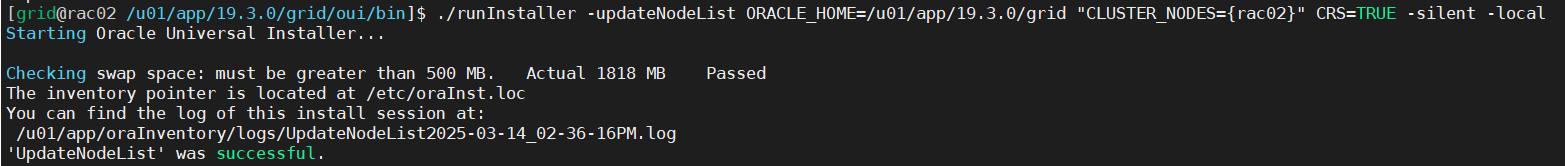

On every remaining node, update the inventory as the grid user:

[grid]$ cd $ORACLE_HOME/oui/bin

[grid]$ ./runInstaller -updateNodeList ORACLE_HOME=/u01/app/19.3.0/grid "CLUSTER_NODES={rac02}" CRS=TRUE -silent -local

On the deleted node, the directories /u01/app/19.3.0 and /u01/app/grid are left in place at this point.

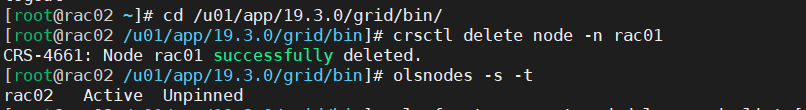

On one of the remaining nodes, run the following as root to delete the node from the cluster:

[root@rac02 ~]# cd /u01/app/19.3.0/grid/bin/

[root@rac02 bin]# ./crsctl delete node -n rac01

[root@rac02 bin]# ./olsnodes -s -t

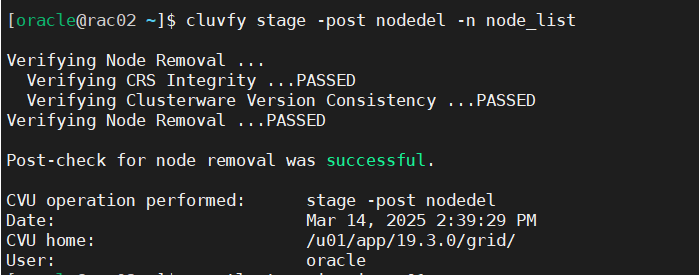

Run the following CVU command to verify that the node has been removed from the cluster:

[grid]$ cluvfy stage -post nodedel -n rac01 -verbose
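In addition to cluvfy, a quick check is that the deleted node no longer appears in `olsnodes` output on the remaining nodes. A minimal sketch with a hypothetical function name, assuming the node name is the first column:

```shell
# Hypothetical helper: reads `olsnodes` output on stdin and exits 0 only if
# NODE does not appear in the first column (i.e. it has left the cluster).
node_removed() {
  awk -v node="$1" '
    $1 == node { found = 1 }
    END { exit (found ? 1 : 0) }
  '
}
```

For example: `olsnodes -s -t | node_removed rac01 && echo "rac01 is no longer a cluster member"`.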

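Stop and remove the VIP

This step is only needed when the node being deleted is broken and its operating system has been reinstalled; if the previous steps were carried out, skip it.

[root@rac02 ~]# cd /u01/app/19.3.0/grid/bin

[root@rac02 bin]# ./srvctl stop vip -i rac01

[root@rac02 bin]# ./srvctl remove vip -i rac01 -f

[root@rac02 bin]# ./crsctl stat res -t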

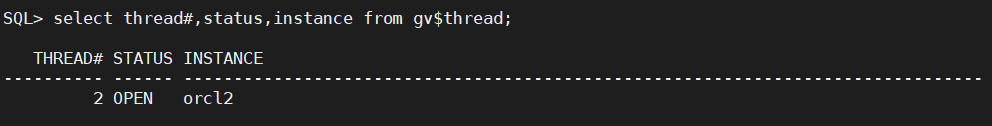

Check the redo threads from a remaining instance:

SQL> select thread#,status,instance from gv$thread;

Adding node rac01

2.1 Configure SSH user equivalence

Configure SSH user equivalence for the grid and oracle users:

# cd $ORACLE_HOME/oui/prov/resources/scripts

# ./sshUserSetup.sh -user grid -hosts "rac01 rac02" -advanced -noPromptPassphrase

# ./sshUserSetup.sh -user oracle -hosts "rac01 rac02" -advanced -noPromptPassphrase
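Before running cluvfy, you may want to confirm that equivalence works without a password prompt. The sketch below is an assumption-based check, not part of sshUserSetup.sh: it relies on OpenSSH's `BatchMode=yes`, which makes ssh fail instead of prompting, so a failure means the key setup (or the known_hosts entry) is missing. The function name is hypothetical; run it as grid and again as oracle on each node.

```shell
# Hypothetical helper: tries a non-interactive SSH login to each host given
# as an argument, prints OK/FAIL per host, and returns non-zero if any failed.
check_ssh_equivalence() {
  local failed=0 host
  for host in "$@"; do
    # BatchMode=yes: never prompt for a password or host-key confirmation
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true >/dev/null 2>&1; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=$((failed + 1))
    fi
  done
  [ "$failed" -eq 0 ]
}
```

For example: `check_ssh_equivalence rac01 rac02`. Including the local node's own name matters, since the installer also connects to the node it runs on.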

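2.2 Use CVU to verify that the node to be added meets the requirements

On an existing cluster node, run the following as grid to check that the new node meets the GI software requirements (-fixup generates fixup scripts automatically):

[grid]$ cluvfy stage -pre nodeadd -n rac01 -verbose -fixup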

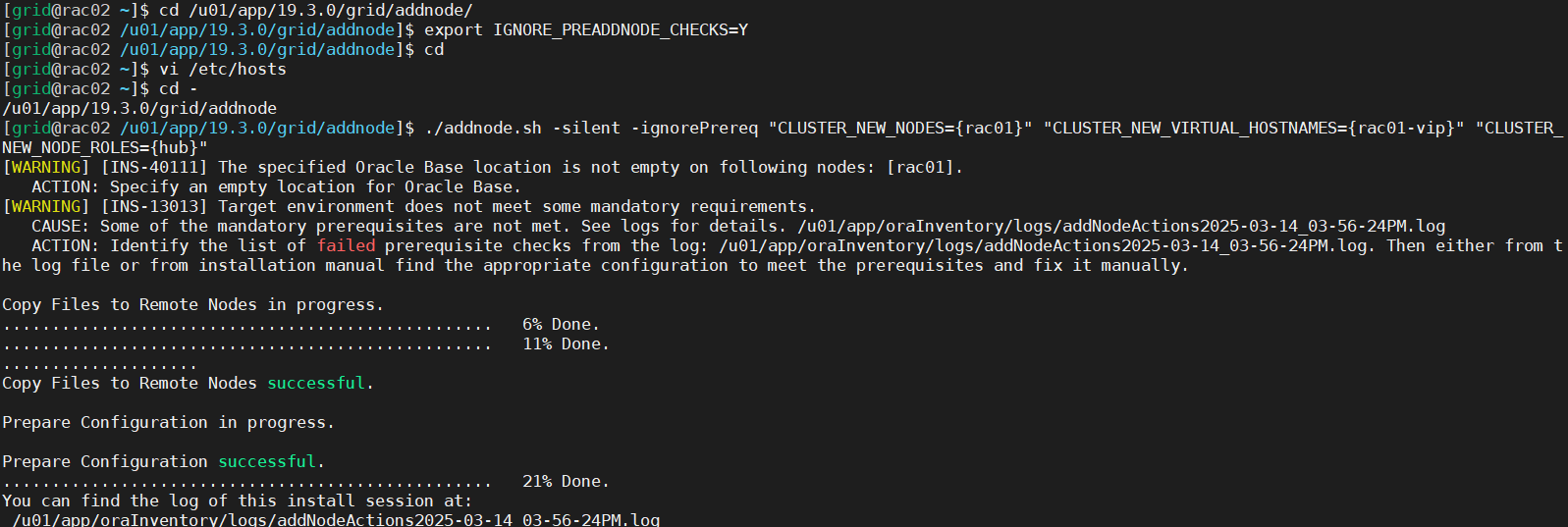

2.3 Add the Clusterware software

Run the following as grid on an existing cluster node to add the Clusterware software to the new node:

[grid]$ cd /u01/app/19.3.0/grid/addnode/

[grid]$ export IGNORE_PREADDNODE_CHECKS=Y

[grid]$ ./addnode.sh -silent -ignorePrereq "CLUSTER_NEW_NODES={rac01}" "CLUSTER_NEW_VIRTUAL_HOSTNAMES={rac01-vip}" "CLUSTER_NEW_NODE_ROLES={hub}"

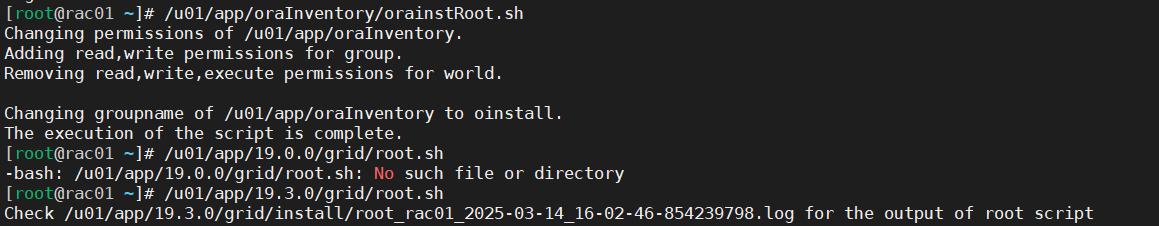

Once the previous step succeeds, run the following two scripts as root on the new node:

# /u01/app/oraInventory/orainstRoot.sh

# /u01/app/19.3.0/grid/root.sh

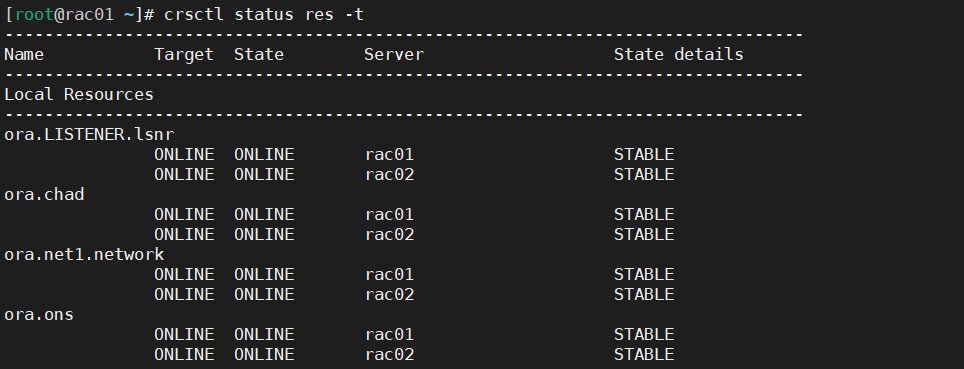

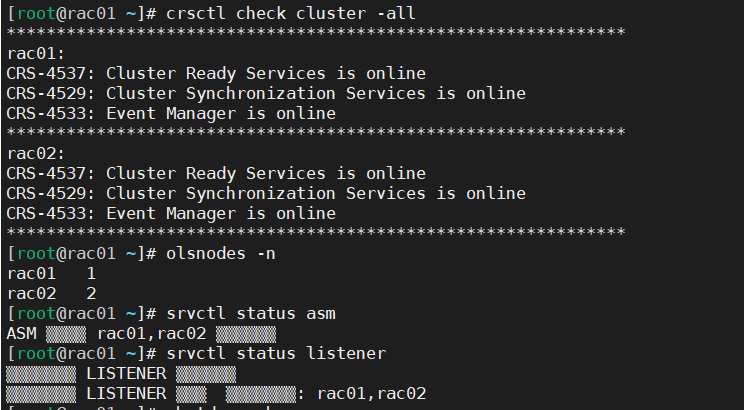

Verification

[grid@rac01 ~]$ crsctl status res -t

[grid@rac01 ~]$ crsctl status res -t -init

[grid@rac01 ~]$ crsctl check cluster -all

[grid@rac01 ~]$ olsnodes -n

[grid@rac01 ~]$ srvctl status asm

[grid@rac01 ~]$ srvctl status listener
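The `crsctl check cluster -all` output can also be checked non-interactively. This sketch assumes each status line starts with a CRS message code and ends with `online` when healthy (for example `CRS-4537: Cluster Ready Services is online`); anything else is treated as a problem. The function name is hypothetical.

```shell
# Hypothetical helper: reads `crsctl check cluster -all` output on stdin and
# exits 0 only if at least one "CRS-nnnn:" line was seen and every such line
# ends with the word "online".
cluster_all_online() {
  awk '
    /^CRS-[0-9]+:/ { seen = 1; if ($NF != "online") bad = 1 }
    END { exit ((seen && !bad) ? 0 : 1) }
  '
}
```

For example: `crsctl check cluster -all | cluster_all_online && echo "CRS, CSS and EVM are online on all nodes"`.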

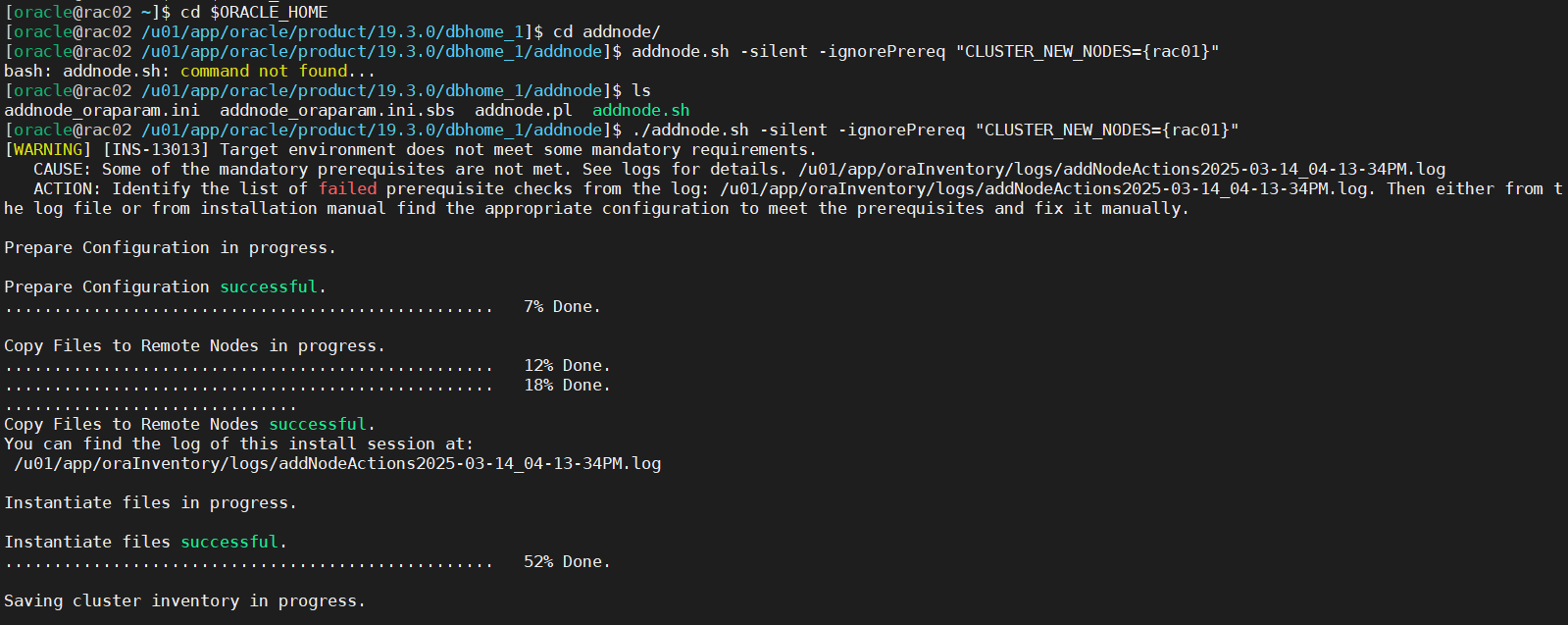

2.4 Add the Database software

Add the Database software to the new node (run as oracle on an existing cluster node):

[oracle]$ cd /u01/app/oracle/product/19.3.0/dbhome_1/addnode/

[oracle]$ ./addnode.sh -silent -ignorePrereq "CLUSTER_NEW_NODES={rac01}"

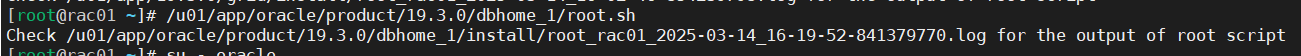

Once the previous step completes, run the following script as root on the new node:

[root@rac01 ~]# /u01/app/oracle/product/19.3.0/dbhome_1/root.sh

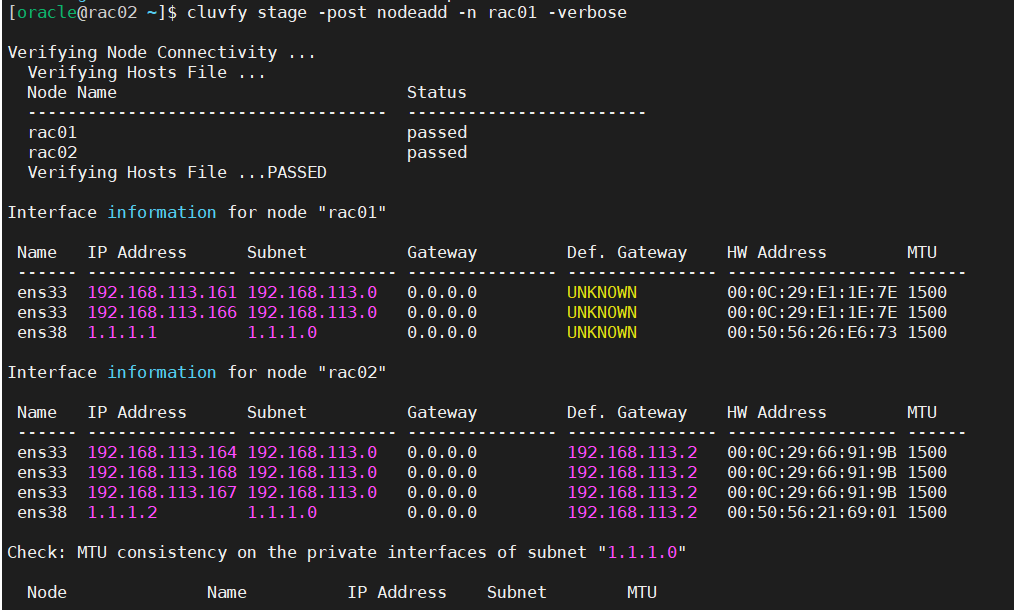

On an existing cluster node or the new node, run the following as grid and oracle to verify that the Clusterware and Database software were added correctly:

[grid]$ cluvfy stage -post nodeadd -n rac01 -verbose

2.5 Add the database instance

Option 1:

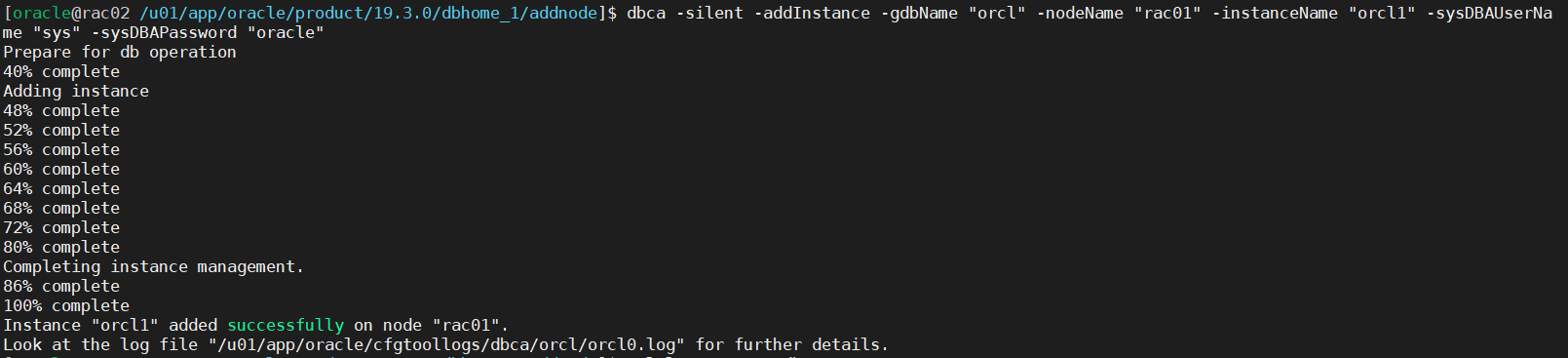

Run DBCA in silent mode to add the database instance on the new node (as oracle on an existing cluster node):

[oracle@rac02 ~]$ dbca -silent -addInstance -gdbName "orcl" -nodeName "rac01" -instanceName "orcl1" -sysDBAUserName "sys" -sysDBAPassword "oracle"

Option 2:

Run dbca as oracle on an existing node and select:

Oracle RAC database instance management -> Add an instance

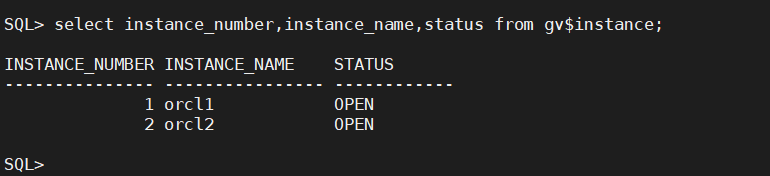

2.6 Check that the cluster and database are healthy

SQL> select instance_number,instance_name,status from gv$instance;

SQL> select thread#,status,instance from gv$thread;

[grid@rac01 ~]$ crsctl status res -t
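From the shell, `srvctl status database -d orcl` gives a quick cross-check of the gv$instance view. The hypothetical helper below assumes lines of the form `Instance orcl1 is running on node rac01` and succeeds only if the named instance is running and no instance is reported as not running.

```shell
# Hypothetical helper: reads `srvctl status database -d <db>` output on stdin.
# Exits 0 if INSTANCE is reported as running and no "Instance ..." line
# reports anything other than "is running"; exits 1 otherwise.
check_db_status() {
  awk -v inst="$1" '
    /^Instance / {
      if ($3 == "is" && $4 == "running") { if ($2 == inst) found = 1 }
      else bad = 1
    }
    END { exit ((found && !bad) ? 0 : 1) }
  '
}
```

For example: `srvctl status database -d orcl | check_db_status orcl1 && echo "orcl1 is running and no instance is down"`.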