环境配置

| 操作系统 | 配置 | 数据盘 | 系统盘 | IP | 节点 |

|---|---|---|---|---|---|

| Centos 7.8 | 4H16G | 100G | 大于8G | 10.10.100.236 | node1 |

| Centos 7.8 | 4H16G | 100G | 大于8G | 10.10.100.235 | node2 |

1.双主故障之修复

RWC主备关闭之后,误操作在本启动集群,导致备库状态异常

1.1 启动异常如下

[kingbase@dba236 bin]$ ./sys_monitor.sh start

2025-03-20 21:13:20 Ready to start all DB ...

2025-03-20 21:13:20 begin to start DB on "[10.10.100.236]".

waiting for server to start.... done

server started

2025-03-20 21:13:21 execute to start DB on "[10.10.100.236]" success, connect to check it.

2025-03-20 21:13:22 DB on "[10.10.100.236]" start success.

2025-03-20 21:13:22 Try to ping trusted_servers on host 10.10.100.236 ...

2025-03-20 21:13:25 Try to ping trusted_servers on host 10.10.100.235 ...

2025-03-20 21:13:28 begin to start DB on "[10.10.100.235]".

waiting for server to start.... done

server started

2025-03-20 21:13:29 execute to start DB on "[10.10.100.235]" success, connect to check it.

2025-03-20 21:13:30 DB on "[10.10.100.235]" start success.

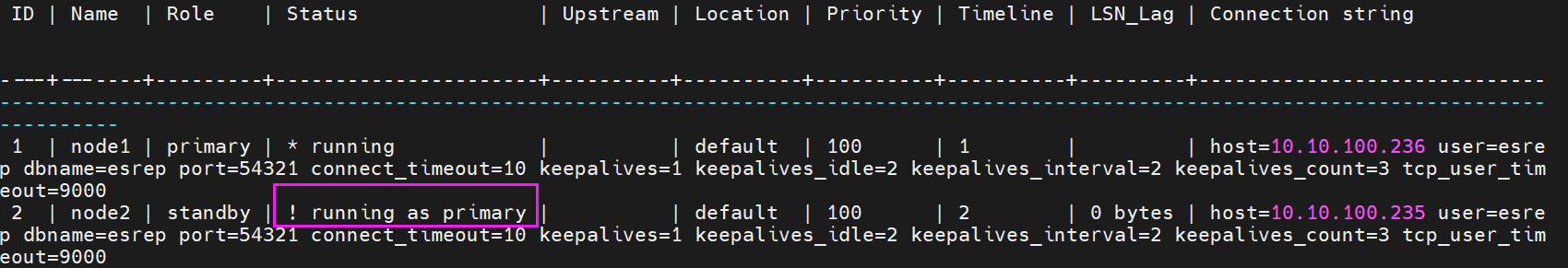

[WARNING] following issues were detected

- node "node2" (ID: 2) is registered as standby but running as primary

ID | Name | Role | Status | Upstream | Location | Priority | Timeline | LSN_Lag | Connection string

----+-------+---------+----------------------+----------+----------+----------+----------+---------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------

1 | node1 | primary | * running | | default | 100 | 1 | | host=10.10.100.236 user=esrep dbname=esrep port=54321 connect_timeout=10 keepalives=1 keepalives_idle=2 keepalives_interval=2 keepalives_count=3 tcp_user_timeout=9000

2 | node2 | standby | ! running as primary | | default | 100 | 2 | 0 bytes | host=10.10.100.235 user=esrep dbname=esrep port=54321 connect_timeout=10 keepalives=1 keepalives_idle=2 keepalives_interval=2 keepalives_count=3 tcp_user_timeout=9000

2025-03-20 21:13:30 There are more than one primary DBs([2] DBs are running), will stop DB and cron and exit.

2025-03-20 21:13:35 begin to stop DB on "[10.10.100.236]".

waiting for server to shut down.... done

server stopped

2025-03-20 21:13:36 DB on "[10.10.100.236]" stop success.

2025-03-20 21:13:40 begin to stop DB on "[10.10.100.235]".

waiting for server to shut down.... done

server stopped

2025-03-20 21:13:41 DB on "[10.10.100.235]" stop success.

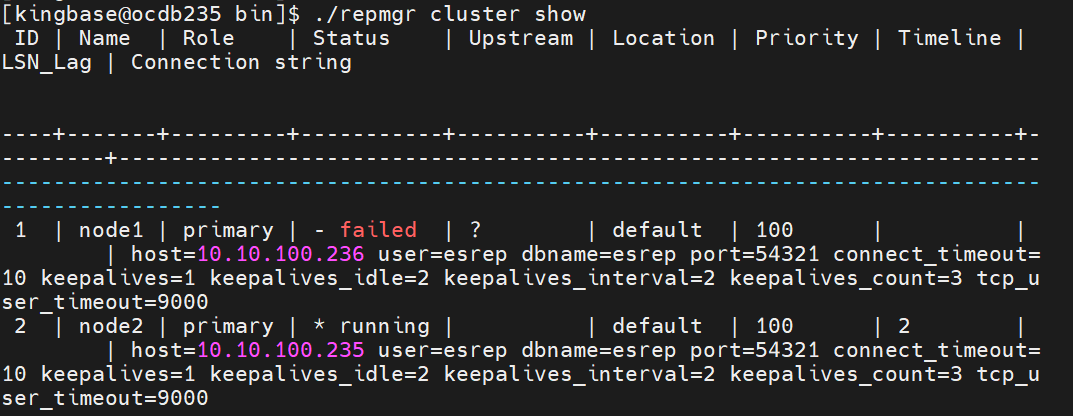

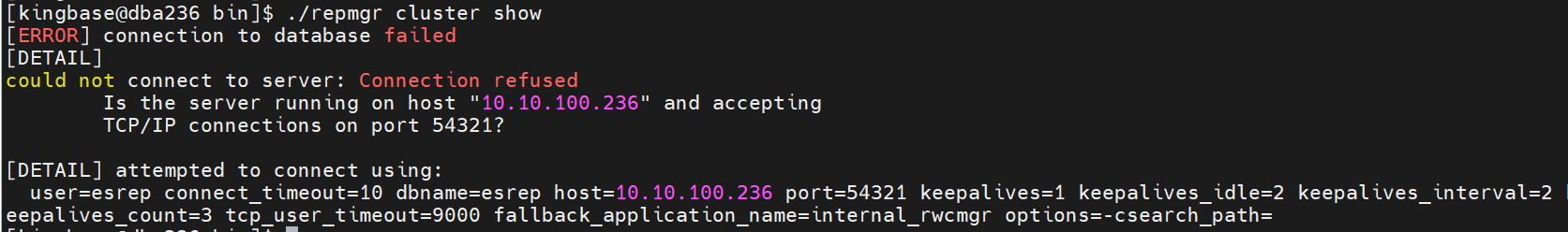

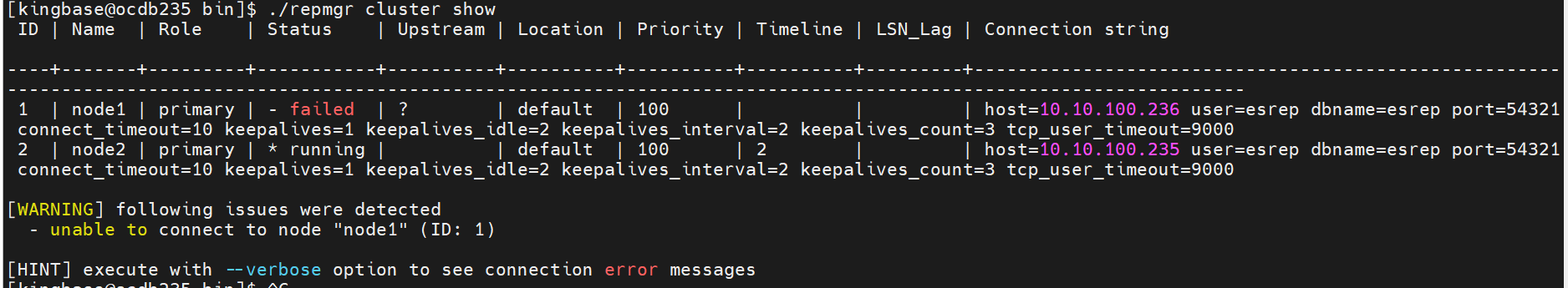

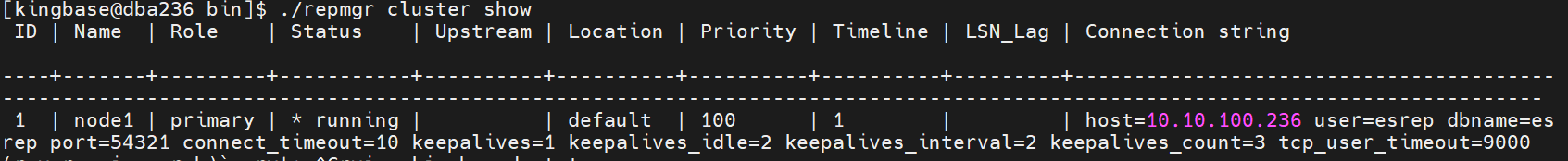

1.2 状态查看

./repmgr cluster show

1.3 查看控制文件对比节点数据差异

node2节点

[kingbase@ocdb235 bin]$ ./sys_controldata -D /yk/data/Kingbase/V9/data

sys_control version number: 1201

Catalog version number: 202404121

Database system identifier: 7472217260596738986

Database cluster state: shut down

sys_control last modified: Thu 20 Mar 2025 09:44:46 PM CST

Latest checkpoint location: 0/F000028

Latest checkpoint's REDO location: 0/F000028

Latest checkpoint's REDO WAL file: 00000002000000000000000F

Latest checkpoint's WalTimeLineID: 2

Latest checkpoint's PrevTimeLineID: 2

Latest checkpoint's full_page_writes: on

Latest checkpoint's NextXID: 0:1136

Latest checkpoint's NextOID: 16467

Latest checkpoint's NextMultiXactId: 1

Latest checkpoint's NextMultiOffset: 0

Latest checkpoint's oldestXID: 1078

Latest checkpoint's oldestXID's DB: 1

Latest checkpoint's oldestActiveXID: 0

Latest checkpoint's oldestMultiXid: 1

Latest checkpoint's oldestMulti's DB: 1

Latest checkpoint's oldestCommitTsXid:0

Latest checkpoint's newestCommitTsXid:0

Time of latest checkpoint: Thu 20 Mar 2025 09:44:46 PM CST

Fake LSN counter for unlogged rels: 0/3E8

Minimum recovery ending location: 0/0

Min recovery ending loc's timeline: 0

Backup start location: 0/0

Backup end location: 0/0

End-of-backup record required: no

wal_level setting: replica

wal_log_hints setting: on

max_connections setting: 100

max_worker_processes setting: 8

max_wal_senders setting: 32

max_prepared_xacts setting: 100

max_locks_per_xact setting: 64

track_commit_timestamp setting: off

Maximum data alignment: 8

Database block size: 8192

Blocks per segment of large relation: 131072

WAL block size: 8192

Bytes per WAL segment: 16777216

Maximum length of identifiers: 64

Maximum columns in an index: 32

Maximum size of a TOAST chunk: 1988

Size of a large-object chunk: 2048

Date/time type storage: 64-bit integers

Float4 argument passing: by value

Float8 argument passing: by value

Data page checksum version: 1

Data page checksum device: 0

Mock authentication nonce: 4e5145da4a12f0a0039d7fcfb32e5a8cc7e7c37c16ff44a43baf8c295105b96f

database mode: 1

auth method mode: 0

node1节点

[kingbase@dba236 bin]$ ./sys_controldata -D /yk/data/Kingbase/V9/data

sys_control version number: 1201

Catalog version number: 202404121

Database system identifier: 7472217260596738986

Database cluster state: shut down

sys_control last modified: Thu 20 Mar 2025 09:44:41 PM CST

Latest checkpoint location: 0/F000028

Latest checkpoint's REDO location: 0/F000028

Latest checkpoint's REDO WAL file: 00000001000000000000000F

Latest checkpoint's WalTimeLineID: 1

Latest checkpoint's PrevTimeLineID: 1

Latest checkpoint's full_page_writes: on

Latest checkpoint's NextXID: 0:1133

Latest checkpoint's NextOID: 16467

Latest checkpoint's NextMultiXactId: 1

Latest checkpoint's NextMultiOffset: 0

Latest checkpoint's oldestXID: 1078

Latest checkpoint's oldestXID's DB: 1

Latest checkpoint's oldestActiveXID: 0

Latest checkpoint's oldestMultiXid: 1

Latest checkpoint's oldestMulti's DB: 1

Latest checkpoint's oldestCommitTsXid:0

Latest checkpoint's newestCommitTsXid:0

Time of latest checkpoint: Thu 20 Mar 2025 09:44:41 PM CST

Fake LSN counter for unlogged rels: 0/3E8

Minimum recovery ending location: 0/0

Min recovery ending loc's timeline: 0

Backup start location: 0/0

Backup end location: 0/0

End-of-backup record required: no

wal_level setting: replica

wal_log_hints setting: on

max_connections setting: 100

max_worker_processes setting: 8

max_wal_senders setting: 32

max_prepared_xacts setting: 100

max_locks_per_xact setting: 64

track_commit_timestamp setting: off

Maximum data alignment: 8

Database block size: 8192

Blocks per segment of large relation: 131072

WAL block size: 8192

Bytes per WAL segment: 16777216

Maximum length of identifiers: 64

Maximum columns in an index: 32

Maximum size of a TOAST chunk: 1988

Size of a large-object chunk: 2048

Date/time type storage: 64-bit integers

Float4 argument passing: by value

Float8 argument passing: by value

Data page checksum version: 1

Data page checksum device: 0

Mock authentication nonce: 4e5145da4a12f0a0039d7fcfb32e5a8cc7e7c37c16ff44a43baf8c295105b96f

database mode: 1

auth method mode: 0

查看日志,我发现node2的Latest checkpoint’s WalTimeLineID 为2,高于node1的1,且NextXID值note2为1136高于node1的1133,说明node2的数据库更新,故选择node2作为新主库

2. 双主故障集群修复

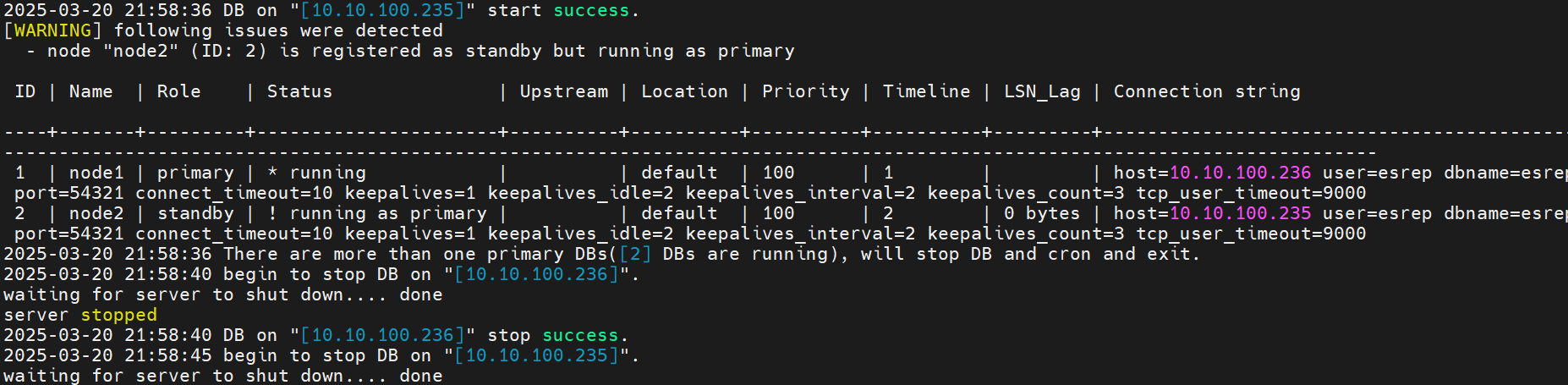

2.1 启动数据库

[kingbase@ocdb235 bin]$ ./sys_ctl -D /yk/data/Kingbase/V9/data

sys_ctl: no operation specified

Try "sys_ctl --help" for more information.

[kingbase@ocdb235 bin]$ ./sys_ctl -D /yk/data/Kingbase/V9/data restart

sys_ctl: PID file "/yk/data/Kingbase/V9/data/kingbase.pid" does not exist

Is server running?

trying to start server anyway

waiting for server to start....2025-03-20 22:06:27.467 CST [26257] LOG: config the real archive_command string as soon as possible to archive WAL files

2025-03-20 22:06:27.475 CST [26257] LOG: sepapower extension initialized

2025-03-20 22:06:27.481 CST [26257] LOG: starting KingbaseES V009R001C002B0014 on x86_64-pc-linux-gnu, compiled by gcc (GCC) 4.8.5 20150623 (Red Hat 4.8.5-28), 64-bit

2025-03-20 22:06:27.482 CST [26257] LOG: listening on IPv4 address "0.0.0.0", port 54321

2025-03-20 22:06:27.482 CST [26257] LOG: listening on IPv6 address "::", port 54321

2025-03-20 22:06:27.487 CST [26257] LOG: listening on Unix socket "/tmp/.s.KINGBASE.54321"

2025-03-20 22:06:27.562 CST [26257] LOG: redirecting log output to logging collector process

2025-03-20 22:06:27.562 CST [26257] HINT: Future log output will appear in directory "sys_log".

done

server started

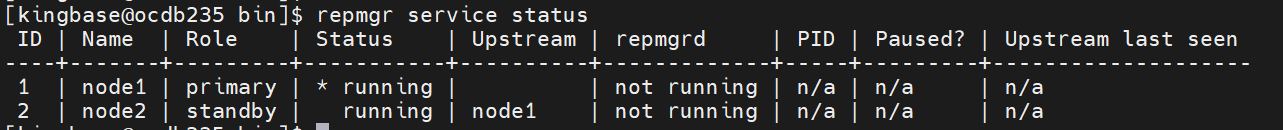

2.2 再次查看状态

./repmgr cluster show

2.3 将原主库node1加入集群

[kingbase@ocdb235 bin]$ ./repmgr node rejoin -h 10.10.100.236 -U esrep -d esrep

[NOTICE] rejoin target is node "node1" (ID: 1)

[NOTICE] sys_rewind execution required for this node to attach to rejoin target node 1

[HINT] provide --force-rewind

[kingbase@ocdb235 bin]$ ./repmgr node rejoin -h 10.10.100.236 -U esrep -d esrep --force-rewind

[NOTICE] rejoin target is node "node1" (ID: 1)

[NOTICE] executing sys_rewind

[DETAIL] sys_rewind command is "/yk/data/Kingbase/cluster/kingbase/bin/sys_rewind -D '/yk/data/Kingbase/V9/data' --source-server='host=10.10.100.236 user=esrep dbname=esrep port=54321 connect_timeout=10 keepalives=1 keepalives_idle=2 keepalives_interval=2 keepalives_count=3 tcp_user_timeout=9000' -X stream"

sys_rewind: servers diverged at WAL location 0/A0000A0 on timeline 1

sys_rewind: rewinding from last common checkpoint at 0/A000028(redo lsn at 0/A000028) on timeline 1

sys_rewind: find last common checkpoint start time from 2025-03-20 22:12:26.479567 CST to 2025-03-20 22:12:26.584334 CST, in "0.104767" seconds.

sys_rewind: collect the number of WAL files to be processed:8, start time from 2025-03-20 22:12:26.584523 CST to 2025-03-20 22:12:26.625223 CST, cost "0.040700" seconds.

sys_rewind: read WAL in target server from 0/A000028 to 0/110000A0 (endpoint 0/110000A0)

sys_rewind: read the local Wal file information, start time from 2025-03-20 22:12:26.584523 CST to 2025-03-20 22:12:26.626283 CST, cost "0.001030" seconds.

sys_rewind: waiting for background process to finish streaming ...

sys_rewind: file replication start time from 2025-03-20 22:12:26.626313 CST to 2025-03-20 22:12:28.761475 CST, cost "2.135162" seconds.

sys_rewind: update the control file: minRecoveryPoint is '0/1100E900', minRecoveryPointTLI is '1', and database state is 'in archive recovery'

sys_rewind: rewind start wal location 0/A000028 (file 00000001000000000000000A), end wal location 0/1100E900 (file 000000010000000000000011). wal data increment:114746(kB). time from 2025-03-20 22:12:26.626313 CST to 2025-03-20 22:12:29.243817 CST, in "2.764250" seconds.

sys_rewind: Done!

[NOTICE] 0 files copied to /yk/data/Kingbase/V9/data

[INFO] creating replication slot as user "esrep"

[NOTICE] setting node 2's upstream to node 1

[WARNING] unable to ping "host=10.10.100.235 user=esrep dbname=esrep port=54321 connect_timeout=10 keepalives=1 keepalives_idle=2 keepalives_interval=2 keepalives_count=3 tcp_user_timeout=9000"

[DETAIL] KCIping() returned "KCIPING_NO_RESPONSE"

[NOTICE] begin to start server at 2025-03-20 22:12:29.256344

[NOTICE] starting server using "/yk/data/Kingbase/cluster/kingbase/bin/sys_ctl -w -t 90 -D '/yk/data/Kingbase/V9/data' -l /yk/data/Kingbase/cluster/kingbase/bin/logfile start"

[NOTICE] start server finish at 2025-03-20 22:12:29.469731

[NOTICE] NODE REJOIN successful

[DETAIL] node 2 is now attached to node 1

(reverse-i-search)`repm': ./^Cpmgr node rejoin -h 10.10.100.236 -U esrep -d esrep --force-rewind

[kingbase@ocdb235 bin]$ repmgr service status

ID | Name | Role | Status | Upstream | repmgrd | PID | Paused? | Upstream last seen

----+-------+---------+-----------+----------+-------------+-----+---------+--------------------

1 | node1 | primary | * running | | not running | n/a | n/a | n/a

2 | node2 | standby | running | node1 | not running | n/a | n/a | n/a

[kingbase@ocdb235 bin]$

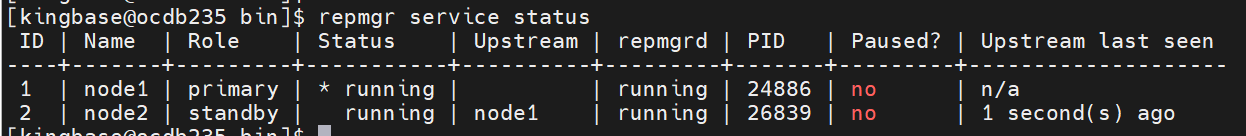

查看状态 repmgrd是 not running的,接下来重启集群

./sys_monitor.sh restart

再次查看状态,集群已经重新恢复正常

4. 集群缩容,删除节点node1

删除节点可以通过先unregister备机节点、再停止备机standby数据库方式,将standby节点从数据库集群删除。最后,要在主节点上查询复制槽,将可能残留的原备机数据库的复制槽删除。

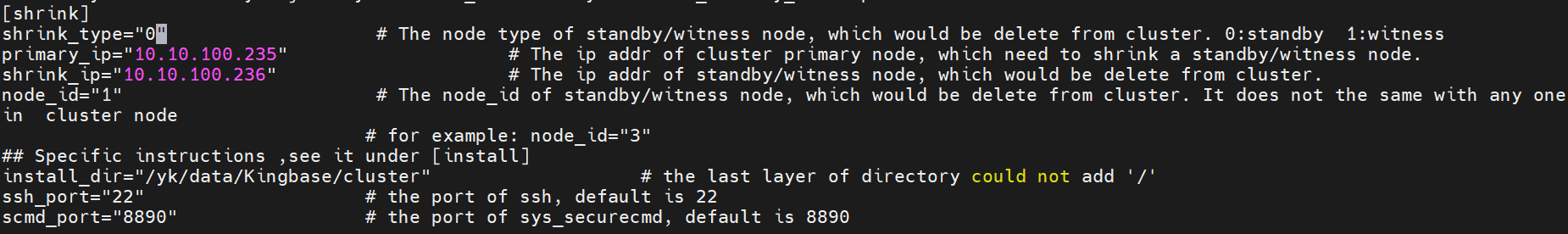

4.1 修改配置文件 install.conf (在安装目录的bin目录下)

修改如下配置:

[shrink]

shrink_type="0" # The node type of standby/witness node, which would be delete from cluster. 0:standby 1:witness

primary_ip="10.10.100.236" # The ip addr of cluster primary node, which need to shrink a standby/witness node.

shrink_ip="10.10.100.235" # The ip addr of standby/witness node, which would be delete from cluster.

node_id="2" # The node_id of standby/witness node, which would be delete from cluster. It does not the same with any one in cluster node

# for example: node_id="3"

## Specific instructions ,see it under [install]

install_dir="/yk/data/Kingbase/cluster"

4.2 节点缩容来了

./cluster_install.sh shrink

[CONFIG_CHECK] will deploy the cluster of

[RUNNING] success connect to the target "10.10.100.235" ..... OK

[RUNNING] success connect to "10.10.100.235" from current node by 'ssh' ..... OK

[RUNNING] success connect to the target "10.10.100.236" ..... OK

[RUNNING] success connect to "10.10.100.236" from current node by 'ssh' ..... OK

[RUNNING] Primary node ip is 10.10.100.236 ...

[RUNNING] Primary node ip is 10.10.100.236 ... OK

[CONFIG_CHECK] set install_with_root=1

[RUNNING] success connect to "" from current node by 'ssh' ..... OK

[RUNNING] success connect to the target "10.10.100.236" ..... OK

[RUNNING] success connect to "10.10.100.236" from current node by 'ssh' ..... OK

[INSTALL] load config from cluster.....

[INFO] db_user=system

[INFO] db_port=54321

[INFO] use_scmd=1

[INFO] auto_cluster_recovery_level=1

[INFO] synchronous=quorum

[INSTALL] load config from cluster.....OK

[CONFIG_CHECK] check database connection ...

[CONFIG_CHECK] check database connection ... OK

[CONFIG_CHECK] shrink_ip[10.10.100.235] is a standby node IP in the cluster ...

[CONFIG_CHECK] shrink_ip[10.10.100.235] is a standby node IP in the cluster ...ok

[CONFIG_CHECK] The localhost is shrink_ip:[10.10.100.235] or primary_ip:[10.10.100.236]...

[CONFIG_CHECK] The localhost is shrink_ip:[10.10.100.235] or primary_ip:[10.10.100.236]...ok

[RUNNING] Primary node ip is 10.10.100.236 ...

[RUNNING] Primary node ip is 10.10.100.236 ... OK

[CONFIG_CHECK] check node_id is in cluster ...

[CONFIG_CHECK] check node_id is in cluster ...OK

[RUNNING] The /yk/data/Kingbase/cluster/kingbase/bin dir exist on "10.10.100.235" ...

[RUNNING] The /yk/data/Kingbase/cluster/kingbase/bin dir exist on "10.10.100.235" ... OK

ID | Name | Role | Status | Upstream | Location | Priority | Timeline | LSN_Lag | Connection string

----+-------+---------+-----------+----------+----------+----------+----------+---------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------

1 | node1 | primary | * running | | default | 100 | 1 | | host=10.10.100.236 user=esrep dbname=esrep port=54321 connect_timeout=10 keepalives=1 keepalives_idle=2 keepalives_interval=2 keepalives_count=3 tcp_user_timeout=9000

2 | node2 | standby | running | node1 | default | 100 | 1 | 0 bytes | host=10.10.100.235 user=esrep dbname=esrep port=54321 connect_timeout=10 keepalives=1 keepalives_idle=2 keepalives_interval=2 keepalives_count=3 tcp_user_timeout=9000

[RUNNING] Del node is standby ...

[INFO] node:10.10.100.235 can be deleted ... OK

[RUNNING] query archive command at 10.10.100.236 ...

[RUNNING] current cluster not config sys_rman,return.

[Thu Mar 20 23:13:38 CST 2025] [INFO] /yk/data/Kingbase/cluster/kingbase/bin/repmgr standby unregister --node-id=2 ...

[INFO] connecting to local standby

[INFO] connecting to primary database

[NOTICE] unregistering node 2

[INFO] SET synchronous TO "async" on primary host

[INFO] change synchronous_standby_names from "ANY 1( node2)" to ""

[INFO] try to drop slot "repmgr_slot_2" of node 2 on primary node

[WARNING] replication slot "repmgr_slot_2" is still active on node 2

[INFO] standby unregistration complete

[Thu Mar 20 23:13:39 CST 2025] [INFO] /yk/data/Kingbase/cluster/kingbase/bin/repmgr standby unregister --node-id=2 ...OK

[Thu Mar 20 23:13:39 CST 2025] [INFO] check db connection ...

[Thu Mar 20 23:13:40 CST 2025] [INFO] check db connection ...ok

2025-03-20 23:13:40 Ready to stop local kbha daemon and repmgrd daemon ...

2025-03-20 23:13:45 begin to stop repmgrd on "[localhost]".

2025-03-20 23:13:46 repmgrd on "[localhost]" stop success.

2025-03-20 23:13:46 Done.

2025-03-20 23:13:46 begin to stop DB on "[localhost]".

waiting for server to shut down.... done

server stopped

2025-03-20 23:13:47 DB on "[localhost]" stop success.

ID | Name | Role | Status | Upstream | Location | Priority | Timeline | LSN_Lag | Connection string

----+-------+---------+-----------+----------+----------+----------+----------+---------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------

1 | node1 | primary | * running | | default | 100 | 1 | | host=10.10.100.236 user=esrep dbname=esrep port=54321 connect_timeout=10 keepalives=1 keepalives_idle=2 keepalives_interval=2 keepalives_count=3 tcp_user_timeout=9000

[Thu Mar 20 23:13:47 CST 2025] [INFO] drop replication slot:repmgr_slot_2...

pg_drop_replication_slot

--------------------------

(1 row)

[Thu Mar 20 23:13:47 CST 2025] [INFO] drop replication slot:repmgr_slot_2...OK

[Thu Mar 20 23:13:47 CST 2025] [INFO] modify synchronous parameter configuration...

[Thu Mar 20 23:13:48 CST 2025] [INFO] modify synchronous parameter configuration...ok

ID | Name | Role | Status | Upstream | Location | Priority | Timeline | LSN_Lag | Connection string

----+-------+---------+-----------+----------+----------+----------+----------+---------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------

1 | node1 | primary | * running | | default | 100 | 1 | | host=10.10.100.236 user=esrep dbname=esrep port=54321 connect_timeout=10 keepalives=1 keepalives_idle=2 keepalives_interval=2 keepalives_count=3 tcp_user_timeout=9000

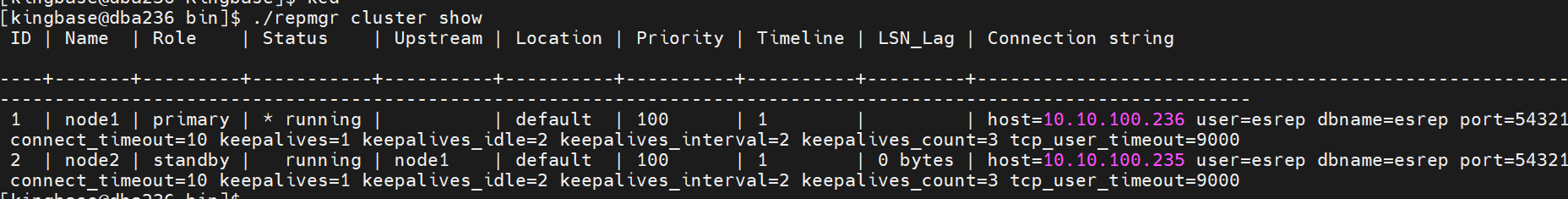

4.3 检查集群状态

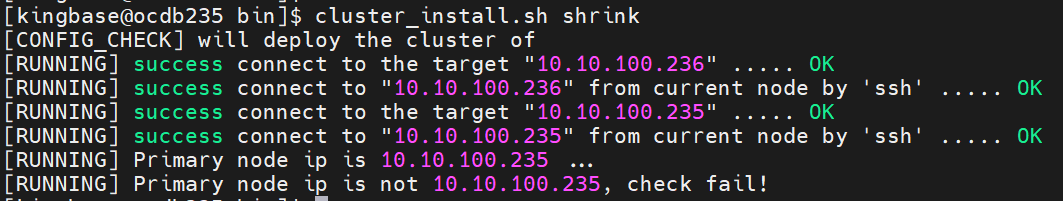

5. 遇到的小坎坎~

5.1 若是要缩容的是带有备节点的主节点,是不允许删除的

[kingbase@ocdb235 bin]$ cluster_install.sh shrink

[CONFIG_CHECK] will deploy the cluster of

[RUNNING] success connect to the target "10.10.100.236" ..... OK

[RUNNING] success connect to "10.10.100.236" from current node by 'ssh' ..... OK

[RUNNING] success connect to the target "10.10.100.235" ..... OK

[RUNNING] success connect to "10.10.100.235" from current node by 'ssh' ..... OK

[RUNNING] Primary node ip is 10.10.100.235 ...

[RUNNING] Primary node ip is not 10.10.100.235, check fail!

5.2 install.conf 的安装目录未配置 install_dir

./cluster_install.sh shrink

[CONFIG_CHECK] will deploy the cluster of

[ERROR] param [install_dir] is not set in config file "/yk/data/Kingbase/cluster/kingbase/bin/install.conf" or in myself shell sc

5.3 install.conf 的安装目录install_dir配置错误,应为 repmgr所在目录

./cluster_install.sh shrink

[CONFIG_CHECK] will deploy the cluster of

[RUNNING] success connect to the target "10.10.100.235" ..... OK

[RUNNING] success connect to "10.10.100.235" from current node by 'ssh' ..... OK

[RUNNING] success connect to the target "10.10.100.236" ..... OK

[RUNNING] success connect to "10.10.100.236" from current node by 'ssh' ..... OK

[RUNNING] Primary node ip is 10.10.100.236 ...

bash: /yk/data/Kingbase/kingbase/bin/repmgr: No such file or directory

[RUNNING] Primary node ip is not 10.10.100.236, check fail!

「喜欢这篇文章,您的关注和赞赏是给作者最好的鼓励」

关注作者

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文章的来源(墨天轮),文章链接,文章作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。