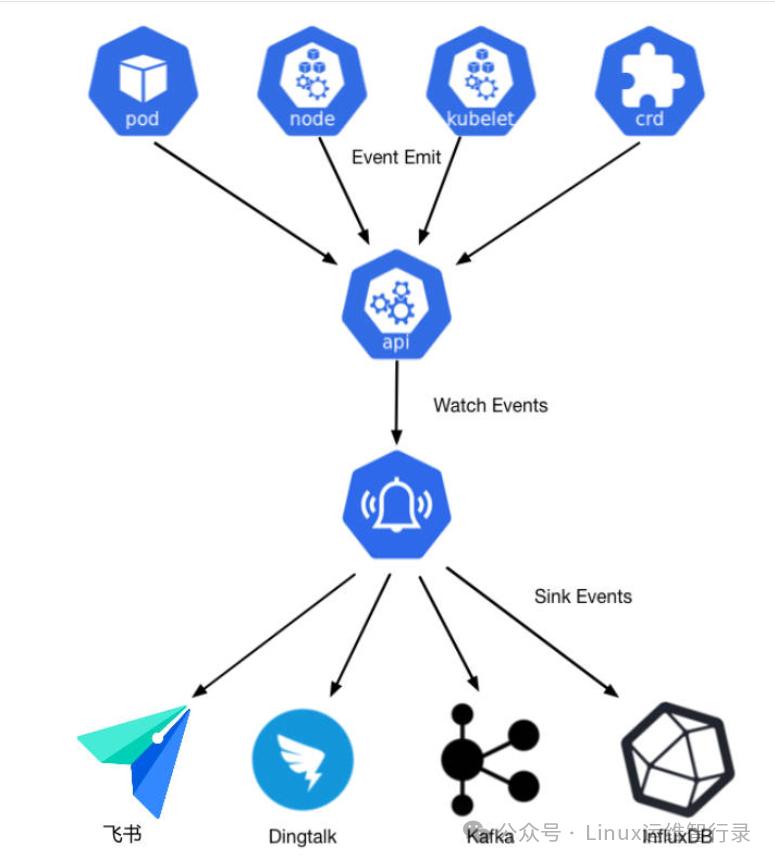

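After running a Kubernetes cluster in production for a while, I realized I had no clear view of what was changing inside it: a pod being rescheduled, image GC failing on a node, an HPA being triggered, and so on. All of this is available through events, which record state changes for every kind of resource in the cluster, but events are not stored permanently. Collecting and analyzing them is therefore a practical way to understand what is happening inside the cluster.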

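kubernetes-event-exporter exports Kubernetes events, which are easy to miss, to a variety of outputs so they can be used for observability or alerting. You would not believe how many resource changes you have been missing.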
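To get a feel for what is at stake before deploying anything, the sketch below summarizes the event reasons currently held by the API server. It is only an illustration: `top_reasons` is a hypothetical helper name, and the remark about retention assumes the upstream kube-apiserver default for `--event-ttl` (one hour), which your distribution may override.

```shell
#!/usr/bin/env bash
# Sketch: count how often each event reason occurs.
# top_reasons is a hypothetical helper, not part of kubectl.

# Reads one event reason per line on stdin and prints "<count> <reason>",
# most frequent first. Blank lines are ignored.
top_reasons() {
  awk 'NF { count[$1]++ } END { for (r in count) print count[r], r }' | sort -rn
}

# Usage against a live cluster (requires kubectl access):
#   kubectl get events -A --no-headers -o custom-columns=REASON:.reason | top_reasons
#
# Anything older than the API server's event TTL (assumed default: 1h)
# has already disappeared from this list.
```

Running the same command an hour apart usually gives a very different picture, which is the gap the exporter is meant to close.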

Download the chart and push it to the private registry

$ helm repo add bitnami "https://helm-charts.itboon.top/bitnami" --force-update

"bitnami" has been added to your repositories

$ helm pull bitnami/kubernetes-event-exporter --version 3.4.1

$ helm push kubernetes-event-exporter-3.4.1.tgz oci://core.jiaxzeng.com/plugins

Pushed: core.jiaxzeng.com/plugins/kubernetes-event-exporter:3.4.1

Digest: sha256:75c05c9d038924186924cde8cb7f6008b816e4c5036b9151fdd9e00b58c00a3d

Pull the image and push it to the private registry

$ sudo docker pull bitnami/kubernetes-event-exporter:1.7.0-debian-12-r27

1.7.0-debian-12-r27: Pulling from bitnami/kubernetes-event-exporter

1ef56bd32736: Pull complete

Digest: sha256:fc8de74ff97b2b602576bc450b41365846d70f848e840c44b85b96e84dce73bd

Status: Downloaded newer image for bitnami/kubernetes-event-exporter:1.7.0-debian-12-r27

docker.io/bitnami/kubernetes-event-exporter:1.7.0-debian-12-r27

$ sudo docker tag bitnami/kubernetes-event-exporter:1.7.0-debian-12-r27 core.jiaxzeng.com/library/kubernetes-event-exporter:1.7.0-debian-12-r27

$ sudo docker push core.jiaxzeng.com/library/kubernetes-event-exporter:1.7.0-debian-12-r27

The push refers to repository [core.jiaxzeng.com/library/kubernetes-event-exporter]

fcee824489c8: Pushed

1.7.0-debian-12-r27: digest: sha256:8d6e7db4a9f7f6394fa2425d86f353563187670e1e0b9826b3597d088a4a281a size: 529

Extract the CA, certificate, and key files from the p12 keystore

# Extract the private key

$ openssl pkcs12 -in app/kafka/pki/kafka.server.keystore.p12 -nocerts -nodes -out tmp/kafka-client.key

Enter Import Password:

MAC verified OK

# Extract the certificate

$ openssl pkcs12 -in app/kafka/pki/kafka.server.keystore.p12 -clcerts -nokeys -out tmp/kafka-client.crt

Enter Import Password:

MAC verified OK

# Extract the CA certificate

$ openssl pkcs12 -in app/kafka/pki/kafka.server.keystore.p12 -cacerts -nokeys -chain -out tmp/kafka-ca.crt

Enter Import Password:

MAC verified OK
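Before uploading the files, it can be worth checking that the extracted certificate and private key actually belong together. The following is a minimal sketch, not part of the original procedure: `cert_matches_key` is a hypothetical helper, and the paths in the usage comment are assumptions that should be adjusted to wherever the files were written.

```shell
#!/usr/bin/env bash
# Sketch: compare the public key embedded in a certificate with the public
# key derived from a private key. cert_matches_key is a hypothetical name.

# Returns 0 if both files carry the same public key, 1 if they differ,
# 2 if openssl could not read one of the files.
cert_matches_key() {
  local cert="$1" key="$2" cert_pub key_pub
  cert_pub=$(openssl x509 -in "$cert" -noout -pubkey) || return 2
  key_pub=$(openssl pkey -in "$key" -pubout) || return 2
  [ "$cert_pub" = "$key_pub" ]
}

# Usage (paths are assumptions):
#   cert_matches_key tmp/kafka-client.crt tmp/kafka-client.key && echo "pair OK"
#
# Optionally check that the certificate chains to the extracted CA:
#   openssl verify -CAfile tmp/kafka-ca.crt tmp/kafka-client.crt
```

The "Bag Attributes" lines that `openssl pkcs12` writes before each PEM block do not get in the way, since openssl skips text outside the PEM markers.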

Upload the certificates to Kubernetes

$ kubectl -n obs-system create secret generic kafka-ssl-secret --from-file=tmp/kafka-ca.crt --from-file=tmp/kafka-client.crt --from-file=tmp/kafka-client.key

secret/kafka-ssl-secret created
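With `--from-file`, each key in the Secret takes the base name of the source file, and those names later become the file names under the mount path. A quick way to confirm that all three expected names are present is sketched below; `missing_secret_keys` is a hypothetical helper, and the expected names are simply the ones used in this article.

```shell
#!/usr/bin/env bash
# Sketch: report which expected file names are absent from a Secret.
# missing_secret_keys is a hypothetical helper, not a kubectl feature.

# stdin: key names present in the Secret, one per line.
# args:  key names that should be there.
# Prints every expected name that is missing; prints nothing if all exist.
missing_secret_keys() {
  local present expected
  present=$(cat)
  for expected in "$@"; do
    printf '%s\n' "$present" | grep -qxF -- "$expected" || printf '%s\n' "$expected"
  done
}

# Usage (requires kubectl access to the obs-system namespace):
#   kubectl -n obs-system get secret kafka-ssl-secret \
#     -o go-template='{{range $k, $v := .data}}{{println $k}}{{end}}' \
#     | missing_secret_keys kafka-ca.crt kafka-client.crt kafka-client.key
```

Empty output means the `certFile`, `keyFile`, and `caFile` paths in the exporter configuration should resolve once the Secret is mounted.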

Chart values file

    # Use the private image registry
    global:
      security:
        allowInsecureImages: true
    image:
      registry: core.jiaxzeng.com
      repository: library/kubernetes-event-exporter
      tag: 1.7.0-debian-12-r27

    # Resource name
    fullnameOverride: kubernetes-event-exporter

    # Replica count
    replicaCount: 2

    # Resource requests and limits
    resources:
      requests:
        cpu: 1
        memory: 512Mi
      limits:
        cpu: 2
        memory: 1024Mi

    # Exporter configuration
    config:
      logLevel: info  # fatal, error, warn, info or debug
      logFormat: json  # pretty or json
      route:
        routes:
          - match:
              - receiver: kafka
      receivers:
        - name: "kafka"
          kafka:
            version: "3.7.2"
            clientId: "kubernetes-event"
            topic: "kube-event"
            brokers:
              - "172.139.20.17:9093"
              - "172.139.20.81:9093"
              - "172.139.20.177:9093"
            compressionCodec: "snappy"
            tls:
              enable: true
              certFile: "/data/pki/kafka-client.crt"
              keyFile: "/data/pki/kafka-client.key"
              caFile: "/data/pki/kafka-ca.crt"
            #sasl:
            #  enable: true
            #  username: "admin"
            #  password: "admin-password"
            #  mechanism: "sha512"
            layout:
              kind: "{{ .InvolvedObject.Kind }}"
              namespace: "{{ .InvolvedObject.Namespace }}"
              name: "{{ .InvolvedObject.Name }}"
              reason: "{{ .Reason }}"
              message: "{{ .Message }}"
              type: "{{ .Type }}"
              createdAt: "{{ .GetTimestampISO8601 }}"

    # Mount the Kafka certificates
    extraVolumes:
      - name: kafka-ssl
        secret:
          secretName: kafka-ssl-secret
    extraVolumeMounts:
      - mountPath: /data/pki/
        name: kafka-ssl

    # Debug mode
    # diagnosticMode:
    #   enabled: true

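Tip: the Kafka SASL settings above are commented out. In my tests, with mechanism set to sha512, the Kafka logs showed that PLAIN was actually being used. If anyone knows how to fix this, please let me know. Thanks in advance.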

$ helm -n obs-system install kubernetes-event-exporter -f kubernetes-event-exporter-values.yaml kubernetes-event-exporter

NAME: kubernetes-event-exporter

LAST DEPLOYED: Wed Mar 26 14:59:44 2025

NAMESPACE: obs-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: kubernetes-event-exporter

CHART VERSION: 3.4.1

APP VERSION: 1.7.0

$ bin/kafka-get-offsets.sh --bootstrap-server 172.139.20.17:9092 --topic kube-event

kube-event:0:5

kube-event:1:5

kube-event:2:4

Tip: once all pods are running, restart any pod and check again. If the topic still has no data, the collected events are not being pushed to Kafka.
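The check described above can be made a little less manual by summing the per-partition offsets before and after restarting a pod. This is only a sketch: `total_offset` is a hypothetical helper, and it assumes the `topic:partition:offset` output format shown above.

```shell
#!/usr/bin/env bash
# Sketch: add up the end offsets printed by kafka-get-offsets.sh.
# total_offset is a hypothetical helper name.

# stdin: lines of the form "topic:partition:offset".
# Prints the sum of the offset column (0 if there is no input).
total_offset() {
  awk -F: 'NF == 3 { sum += $3 } END { print sum + 0 }'
}

# Usage (broker address taken from the command above):
#   before=$(bin/kafka-get-offsets.sh --bootstrap-server 172.139.20.17:9092 --topic kube-event | total_offset)
#   ...restart any pod so that new events are generated...
#   after=$(bin/kafka-get-offsets.sh --bootstrap-server 172.139.20.17:9092 --topic kube-event | total_offset)
#   [ "$after" -gt "$before" ] && echo "events are reaching Kafka"
```

For the sample output above, the sum is 5 + 5 + 4 = 14.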

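This setup reuses the Logstash deployment from the previous article, "Handling a million-scale log surge: how does a containerized Logstash deployment hold up under pressure?". Logstash pulls the data from Kafka and pushes it to Elasticsearch, and Kibana displays it on a dashboard.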

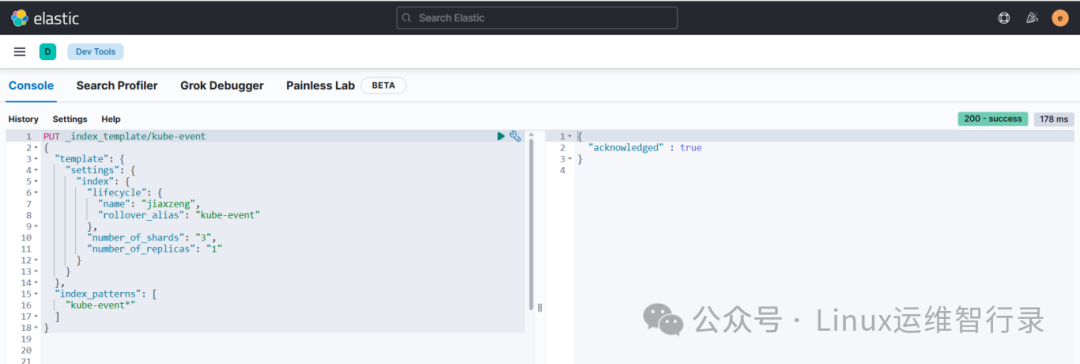

    PUT _index_template/kube-event
    {
      "template": {
        "settings": {
          "index": {
            "lifecycle": {
              "name": "jiaxzeng",
              "rollover_alias": "kube-event"
            },
            "number_of_shards": "3",
            "number_of_replicas": "1"
          }
        }
      },
      "index_patterns": [
        "kube-event*"
      ]
    }

    k8s-events.conf: |
      input {
        kafka {
          bootstrap_servers => "172.139.20.17:9095,172.139.20.81:9095,172.139.20.177:9095"
          topics => ["kube-event"]
          group_id => "kube-event"
          security_protocol => "SASL_SSL"
          sasl_mechanism => "SCRAM-SHA-512"
          sasl_jaas_config => "org.apache.kafka.common.security.scram.ScramLoginModule required username='admin' password='admin-password';"
          ssl_truststore_location => "/usr/share/logstash/certs/kafka/kafka.server.truststore.p12"
          ssl_truststore_password => "truststore_password"
          ssl_truststore_type => "PKCS12"
        }
      }
      output {
        elasticsearch {
          hosts => ["https://elasticsearch.obs-system.svc:9200"]
          ilm_enabled => true
          ilm_rollover_alias => "kube-event"
          ilm_pattern => "{now/d}-000001"
          ilm_policy => "jiaxzeng"
          manage_template => false
          template_name => "kube-event"
          user => "elastic"
          password => "admin@123"
          ssl => true
          ssl_certificate_verification => true
          truststore => "/usr/share/logstash/certs/es/http.p12"
          truststore_password => "http.p12"
        }
      }

Tip: add the configuration above under `logstashPipeline` in the values file used to deploy Logstash with Helm.

$ helm -n obs-system upgrade logstash -f logstash-values.yaml logstash

Release "logstash" has been upgraded. Happy Helming!

NAME: logstash

LAST DEPLOYED: Thu Mar 27 16:14:51 2025

NAMESPACE: obs-system

STATUS: deployed

REVISION: 5

TEST SUITE: None

NOTES:

1. Watch all cluster members come up.

$ kubectl get pods --namespace=obs-system -l app=logstash -w

Viewing the event data

Tip: if data shows up, congratulations, the whole pipeline works end to end. If not, there is more troubleshooting to do.
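If the Kibana view is empty, it helps to ask Elasticsearch directly whether any documents have arrived. The sketch below does that through the `_count` API. `extract_count` is a hypothetical helper, and the CA file path in the usage comment is a placeholder; the host and user are the ones from the Logstash output above, which is reachable only from inside the cluster unless you port-forward.

```shell
#!/usr/bin/env bash
# Sketch: pull the document count out of an Elasticsearch _count response.
# extract_count is a hypothetical helper name.

# stdin: JSON such as {"count":14,"_shards":{...}}
# Prints the numeric value of "count"; prints nothing if it is absent.
extract_count() {
  sed -n 's/.*"count"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Usage (curl prompts for the password; <ca.pem> is a placeholder):
#   curl -s --cacert <ca.pem> -u elastic \
#     "https://elasticsearch.obs-system.svc:9200/kube-event*/_count" | extract_count
```

A count of zero while the Kafka offsets keep growing points at the Logstash stage rather than at the exporter.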

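When Kubernetes events are combined with Elasticsearch, operations is no longer limited to the present moment. With the setup in this article, short-lived event data becomes a lasting operations knowledge base:

✅ Incident review: pinpoint the root cause of a node eviction that happened three days ago

✅ Trend analysis: spot resource bottleneck patterns in Deployment scale-up failure events

✅ Automated response: trigger self-healing scripts based on the frequency of abnormal events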
