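Previous Prometheus articles:

[Original | Translation] Prometheus 2.X (2.0.0 to 2.13.0): notes on fourteen major releases

[Original | Translation] Prometheus 2.0: New storage layer dramatically increases monitoring scalability for Kubernetes and other distributed systems

Building an all-round monitoring system for SpringCloud with Prometheus

[Original | Translation] How do the Prometheus Counter, Gauge, Summary and Histogram work? (full version)

[Double 11 book sale at the end of the post] HikariCP visual monitoring now supports Prometheus

[Original | Translation] Prometheus 2.X milestone updates and accessing Prometheus 1.X data from 2.X

[Original | Translation] Converting Prometheus rules from the 1.X to the 2.0 format

[2019.11.11] New Features in Prometheus 2.14.0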

As of Prometheus 2.12.0 there's a new feature to help find problematic queries.


While Prometheus has many features to limit the potential impact of expensive PromQL queries on your monitoring, you may still run into a case those features don't cover, or find that insufficient resources have been provisioned.


As of Prometheus 2.12.0, any queries that were running when Prometheus shut down are logged on the next startup. Because a clean shutdown cancels all running queries, in practice they are logged only if Prometheus was OOM-killed or terminated in some similarly abrupt way. On the next startup you'll see log lines like these:


level=info ts=2019-08-28T14:30:09.142Z caller=main.go:329 msg="Starting Prometheus" version="(version=2.12.0, branch=HEAD, revision=43acd0e2e93f9f70c49b2267efa0124f1e759e86)"
level=info ts=2019-08-28T14:30:09.142Z caller=main.go:330 build_context="(go=go1.12.8, user=root@7a9dbdbe0cc7, date=20190818-13:53:16)"
level=info ts=2019-08-28T14:30:09.142Z caller=main.go:331 host_details="(Linux 4.15.0-55-generic #60-Ubuntu SMP Tue Jul 2 18:22:20 UTC 2019 x86_64 mari (none))"
level=info ts=2019-08-28T14:30:09.142Z caller=main.go:332 fd_limits="(soft=1000000, hard=1000000)"
level=info ts=2019-08-28T14:30:09.142Z caller=main.go:333 vm_limits="(soft=unlimited, hard=unlimited)"
level=info ts=2019-08-28T14:30:09.143Z caller=query_logger.go:74 component=activeQueryTracker msg="These queries didn't finish in prometheus' last run:" queries="[{\"query\":\"changes(changes(prometheus_http_request_duration_seconds_bucket[1h:1s])[1h:1s])\",\"timestamp_sec\":1567002604}]"
level=info ts=2019-08-28T14:30:09.144Z caller=main.go:654 msg="Starting TSDB ..."
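On a busy server the startup log can be long, so it may help to pull these entries out with a script. The sketch below is a hypothetical helper, not part of Prometheus. It extracts the `queries` field from an `activeQueryTracker` line and converts each `timestamp_sec` (Unix seconds) to UTC. It assumes the quoting in the `queries` value uses JSON-compatible escapes, which holds for the sample line above.

```python
import json
import re
from datetime import datetime, timezone

# Sample activeQueryTracker line copied from the startup log above.
LOG_LINE = (
    "level=info ts=2019-08-28T14:30:09.143Z caller=query_logger.go:74 "
    "component=activeQueryTracker "
    "msg=\"These queries didn't finish in prometheus' last run:\" "
    r'queries="[{\"query\":\"changes(changes(prometheus_http_request_duration_seconds_bucket[1h:1s])[1h:1s])\",\"timestamp_sec\":1567002604}]"'
)

# Match queries="..." where the value may contain backslash-escaped quotes.
QUERIES_RE = re.compile(r'queries="((?:[^"\\]|\\.)*)"')


def unfinished_queries(line):
    """Return (query, start_time_utc) pairs from an activeQueryTracker log line."""
    match = QUERIES_RE.search(line)
    if match is None:
        return []
    # Undo the log quoting (assumed JSON-compatible), then decode the
    # JSON array of {"query": ..., "timestamp_sec": ...} objects.
    payload = json.loads('"' + match.group(1) + '"')
    results = []
    for entry in json.loads(payload):
        started = datetime.fromtimestamp(entry["timestamp_sec"], tz=timezone.utc)
        results.append((entry["query"], started))
    return results


for query, started in unfinished_queries(LOG_LINE):
    print(started.isoformat(), query)
```

For the sample line, the query's start time comes out as 2019-08-28T14:30:04+00:00. That is about five seconds before the 14:30:09 restart, which fits a query that was still running when the process died.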

Here only one query was running when Prometheus died unnaturally (and I had to go out of my way to make it slow enough to show up), so if Prometheus ran out of resources, that query is the likely culprit. In general, though, the list can also include queries with only trivial resource usage, and something else entirely may have triggered the termination. A query appearing in this list therefore doesn't automatically mean it's a problem.
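The mechanism works because a query stays recorded while it runs and is removed when it completes or is cancelled. Anything still recorded at the next start must therefore have been interrupted. The toy model below illustrates only that idea and is not Prometheus's implementation. As far as we understand, Prometheus itself keeps a fixed-size memory-mapped file in its data directory, with one slot per allowed concurrent query. The class name `ActiveQueryLog`, the JSON file format and the file name `queries.json` are all hypothetical.

```python
import json
import os
import tempfile
import time


class ActiveQueryLog:
    """Toy model of an active-query log (hypothetical, not Prometheus code)."""

    def __init__(self, path):
        self.path = path
        self.active = {}
        self.next_id = 0
        # Whatever the previous process left on disk never finished.
        self.leftover = self._read()
        # Start this run with an empty log.
        self._flush()

    def _read(self):
        if not os.path.exists(self.path):
            return []
        with open(self.path) as f:
            return json.load(f)

    def _flush(self):
        # Write to a temp file, then rename it over the log, so a crash
        # mid-write cannot leave a half-written log behind.
        tmp = self.path + ".tmp"
        with open(tmp, "w") as f:
            json.dump(list(self.active.values()), f)
        os.replace(tmp, self.path)

    def start(self, query):
        qid = self.next_id
        self.next_id += 1
        self.active[qid] = {"query": query, "timestamp_sec": int(time.time())}
        self._flush()
        return qid

    def finish(self, qid):
        # Called when a query completes *or* is cancelled (e.g. clean shutdown).
        self.active.pop(qid, None)
        self._flush()


path = os.path.join(tempfile.mkdtemp(), "queries.json")  # hypothetical file

run1 = ActiveQueryLog(path)
q = run1.start("up")
run1.finish(q)  # completed normally, so it is removed from the log
run1.start("changes(changes(x[1h:1s])[1h:1s])")
# ...the process is killed here, so finish() is never called...

run2 = ActiveQueryLog(path)
print([e["query"] for e in run2.leftover])
```

Only the interrupted query is reported by the second run. Because `finish` also runs on cancellation, a clean shutdown leaves the log empty and nothing is reported.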


If the issue was an overly expensive query and you can't just throw resources at it, some flags you may wish to tweak are `--query.max-concurrency`, `--query.max-samples`, and `--query.timeout`.


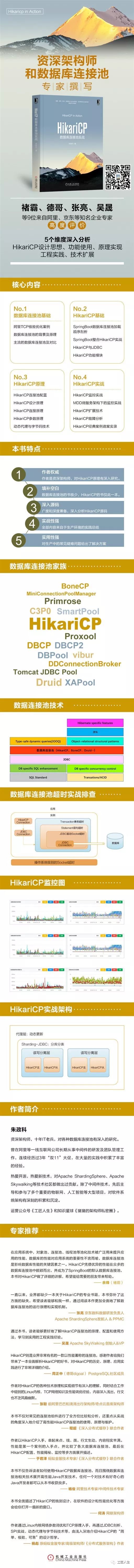

数据库及数据库连接池领域的新书推荐,京东、淘宝、当当有售:

你点的每个“在看”,我都认真当成了喜欢