LINUX

Oracle 18c RAC Installation and Implementation Guide

Contents

1. Installation environment

1. Operating system

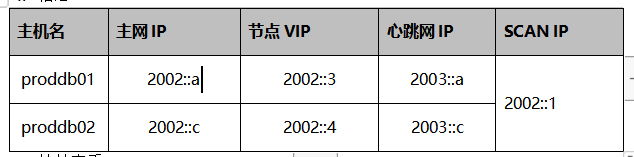

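2. Configure IPv6 addresses on the cluster nodes

3. IP information

4. Create users, groups, and installation directories

5. Configure host names in /etc/hosts

6. Check the nsswitch.conf configuration

7. Set kernel parameters

8. Configure shell limits as root

9. Deploy the disk_scheduler script on all nodes

10. Set environment variables for the grid and oracle users

11. Other service settings

12. Prepare the software packages

13. Install the cvuqdisk RPM package

2. Install the Grid software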

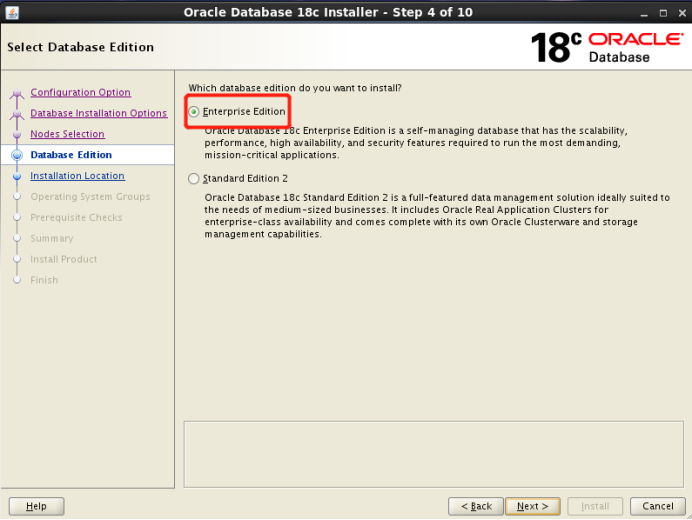

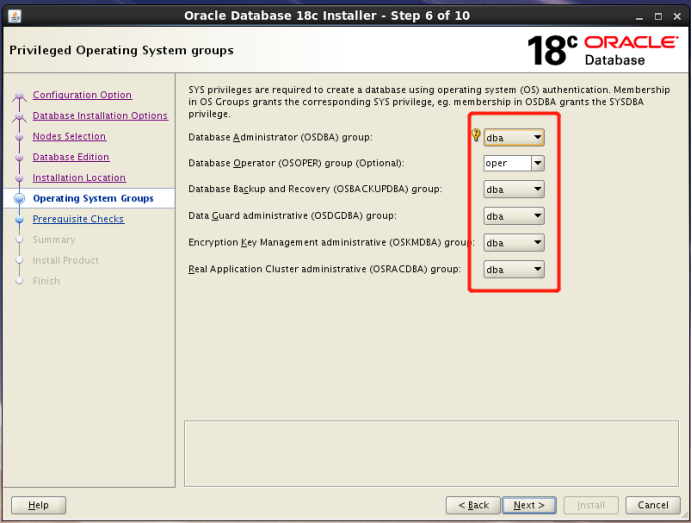

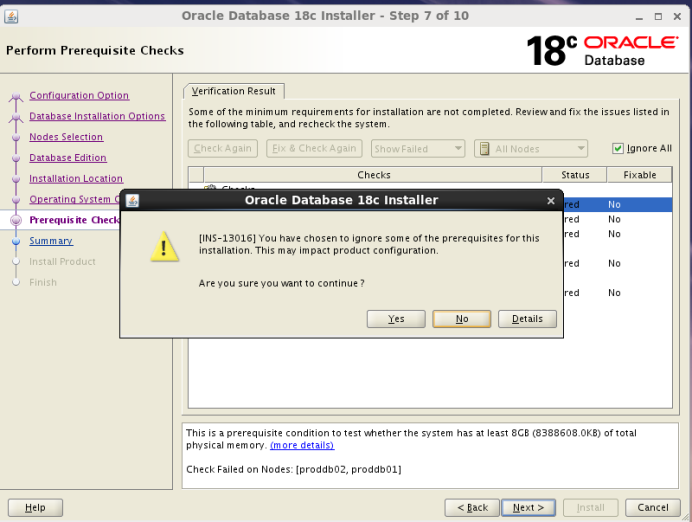

3. Install the Oracle software

4. Apply patches

5. Create the database instance

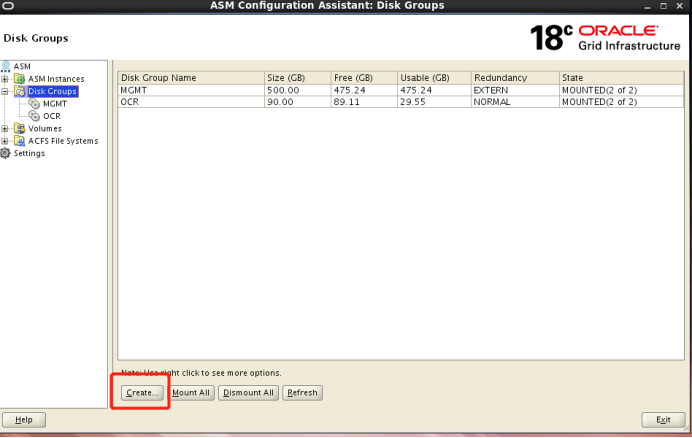

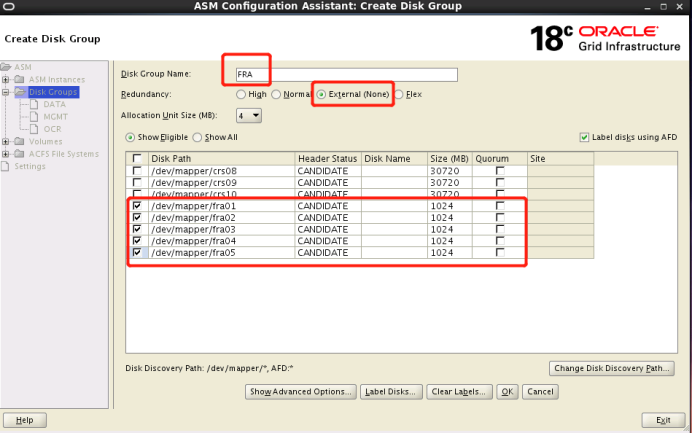

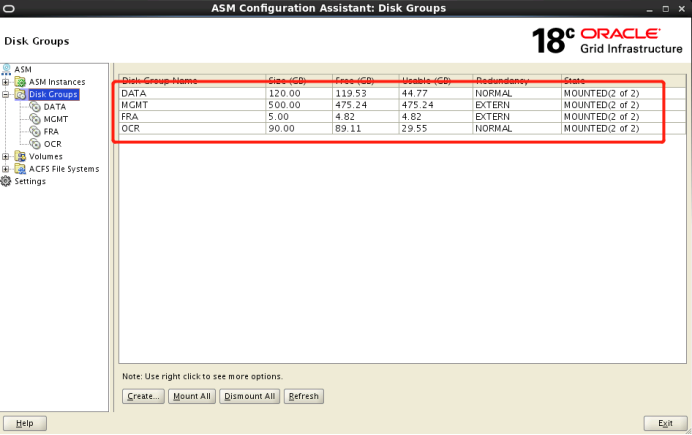

1. Create the ASM disk group

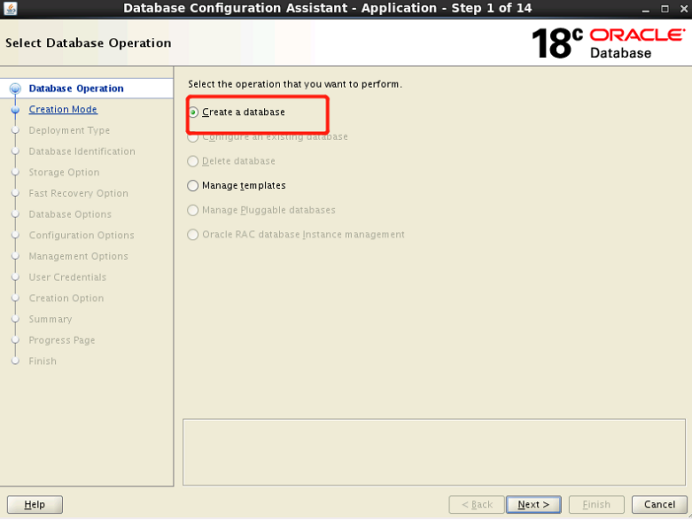

2. Create the instance

1. Installation environment

1. Operating system

The cluster is installed on Red Hat Enterprise Linux 6.7.

[root@proddb01 ~]# uname -r

2.6.32-573.el6.x86_64

[root@proddb01 ~]# uname -a

Linux proddb01 2.6.32-573.el6.x86_64 #1 SMP Wed Jul 1 18:23:37 EDT 2015 x86_64 x86_64 x86_64 GNU/Linux

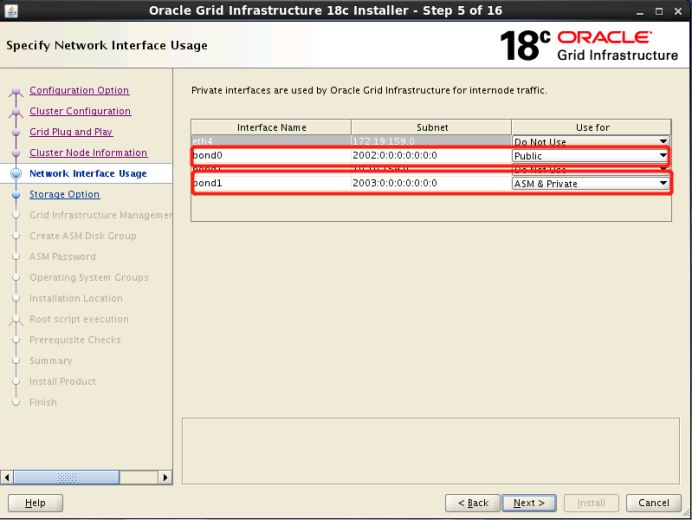

2. Configure IPv6 addresses on the cluster nodes

Each RAC node needs two network interfaces configured.

Node 1

[root@proddb01 network-scripts]# cd /etc/sysconfig/network-scripts/

[root@proddb01 network-scripts]# cat ifcfg-eth1

DEVICE=eth1

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=no

BOOTPROTO=static

IPADDR=192.168.138.11

NETMASK=255.255.255.0

GATEWAY=192.168.138.2

DNS1=211.137.32.178

IPV6INIT=yes

IPV6ADDR=2002::A/64

[root@proddb01 network-scripts]# cat ifcfg-eth2

DEVICE=eth2

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=no

BOOTPROTO=static

DNS1=211.137.32.178

IPV6INIT=yes

IPV6ADDR=2003::a/64

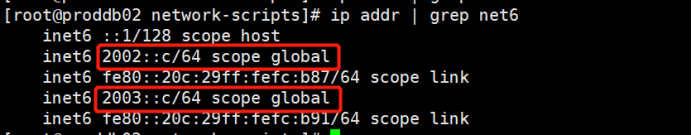

Node 2

[root@proddb02 ~]# cd /etc/sysconfig/network-scripts/

[root@proddb02 network-scripts]# cat ifcfg-eth1

DEVICE=eth1

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=no

BOOTPROTO=static

IPADDR=192.168.138.19

NETMASK=255.255.255.0

GATEWAY=192.168.138.2

IPV6INIT=yes

IPV6ADDR=2002::c/64

[root@proddb02 network-scripts]# cat ifcfg-eth2

DEVICE=eth2

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=no

BOOTPROTO=static

IPV6INIT=yes

IPV6ADDR=2003::c/64

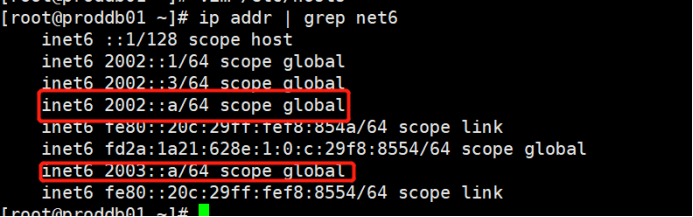
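The interface files above can be sanity-checked before the network is restarted. The following is a minimal sketch and not part of the original procedure: the function name `check_ifcfg` is hypothetical, and it assumes only the `KEY=value` layout shown in the listings above.

```shell
# check_ifcfg FILE: hypothetical helper. Verifies that an ifcfg file is
# activated at boot, enables IPv6, and defines a static IPv6 address
# with a prefix length.
check_ifcfg() {
    local file="$1"
    local rc=0
    if ! grep -qx 'ONBOOT=yes' "$file"; then
        echo "$file: ONBOOT=yes is missing"
        rc=1
    fi
    if ! grep -qx 'IPV6INIT=yes' "$file"; then
        echo "$file: IPV6INIT=yes is missing"
        rc=1
    fi
    # Expect a line such as IPV6ADDR=2002::a/64 (address/prefix).
    if ! grep -Eqi '^IPV6ADDR=[0-9a-f:]+/[0-9]+$' "$file"; then
        echo "$file: IPV6ADDR=<address>/<prefix> is missing"
        rc=1
    fi
    return $rc
}
```

For example, `check_ifcfg /etc/sysconfig/network-scripts/ifcfg-eth2` prints nothing and returns 0 when all three settings are present.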

3. IP information

IP address overview

4. Create users, groups, and installation directories

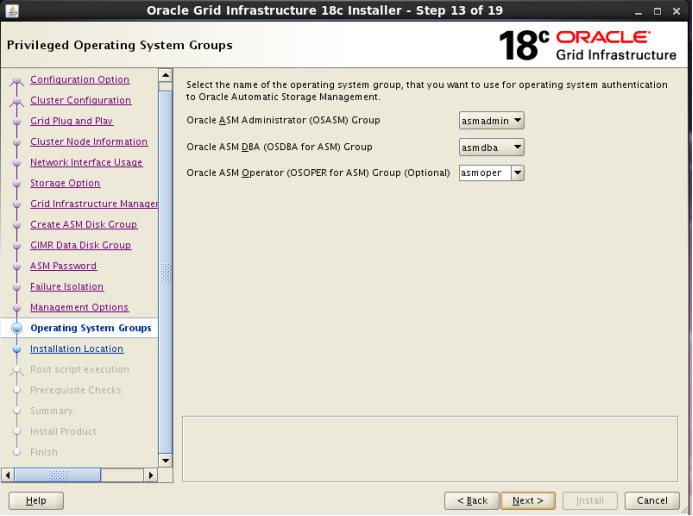

[root@proddb01 ~]# /usr/sbin/groupadd -g 1000 oinstall

[root@proddb01 ~]# /usr/sbin/groupadd -g 1001 dba

[root@proddb01 ~]# /usr/sbin/groupadd -g 1002 asmadmin

[root@proddb01 ~]# /usr/sbin/groupadd -g 1003 asmdba

[root@proddb01 ~]# /usr/sbin/groupadd -g 1005 oper

[root@proddb01 ~]# /usr/sbin/groupadd -g 1004 asmoper

[root@proddb01 ~]# useradd -u 1000 -g oinstall -G dba,asmadmin,asmdba,asmoper grid

[root@proddb01 ~]# useradd -u 1001 -g oinstall -G dba,asmadmin,asmdba,oper oracle

[root@proddb02 ~]# /usr/sbin/groupadd -g 1000 oinstall

[root@proddb02 ~]# /usr/sbin/groupadd -g 1001 dba

[root@proddb02 ~]# /usr/sbin/groupadd -g 1002 asmadmin

[root@proddb02 ~]# /usr/sbin/groupadd -g 1003 asmdba

[root@proddb02 ~]# /usr/sbin/groupadd -g 1005 oper

[root@proddb02 ~]# /usr/sbin/groupadd -g 1004 asmoper

[root@proddb02 ~]# useradd -u 1000 -g oinstall -G dba,asmadmin,asmdba,asmoper grid

[root@proddb02 ~]# useradd -u 1001 -g oinstall -G dba,asmadmin,asmdba,oper oracle
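Because the same commands are repeated on both nodes, they can be wrapped in one function and run on each node. This is only a sketch under stated assumptions: the names `run`, `create_rac_accounts`, and the `DRY_RUN` switch are hypothetical, while the IDs and group memberships are copied from the commands above. With `DRY_RUN=1` (the default here) the commands are printed and nothing is changed.

```shell
# Hypothetical sketch: print (or run) the group and user creation commands
# from this step. DRY_RUN=1 only prints; DRY_RUN=0 executes (root required).
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

create_rac_accounts() {
    local entry
    # gid:name pairs, same values as in the commands above
    for entry in 1000:oinstall 1001:dba 1002:asmadmin 1003:asmdba 1004:asmoper 1005:oper; do
        # When really executing, skip groups that already exist.
        if [ "$DRY_RUN" != "1" ] && getent group "${entry#*:}" >/dev/null; then
            continue
        fi
        run /usr/sbin/groupadd -g "${entry%%:*}" "${entry#*:}"
    done
    run useradd -u 1000 -g oinstall -G dba,asmadmin,asmdba,asmoper grid
    run useradd -u 1001 -g oinstall -G dba,asmadmin,asmdba,oper oracle
}
```

Calling `create_rac_accounts` with the default setting lists the eight commands for review; keeping the UIDs and GIDs identical on both nodes is the point of running the same function everywhere.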

Set the passwords for the oracle and grid users on nodes 1 and 2, using the same passwords on both nodes.

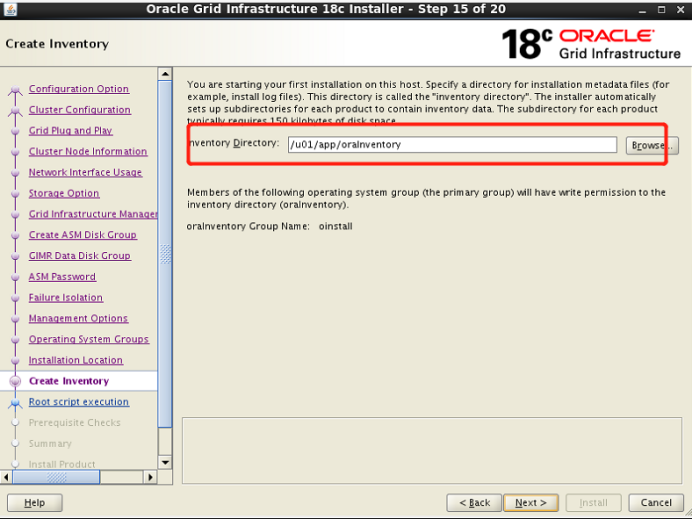

Create the installation directories

[root@proddb01 ~]# mkdir -p /u01/app/18.5.0/grid

[root@proddb01 ~]# mkdir -p /u01/app/grid

[root@proddb01 ~]# chown -R grid:oinstall /u01/app/

[root@proddb01 ~]# mkdir -p /u01/app/oracle

[root@proddb01 ~]# chown oracle:oinstall /u01/app/oracle

[root@proddb01 ~]# chmod -R 775 /u01/

[root@proddb01 ~]# mkdir -p /u01/app/oracle/product/18.5.0/dbhome_1

[root@proddb01 ~]# chown oracle:oinstall /u01/app/oracle/product/18.5.0/dbhome_1

[root@proddb02 ~]# mkdir -p /u01/app/18.5.0/grid

[root@proddb02 ~]# mkdir -p /u01/app/grid

[root@proddb02 ~]# chown -R grid:oinstall /u01/app/

[root@proddb02 ~]# mkdir -p /u01/app/oracle

[root@proddb02 ~]# chown oracle:oinstall /u01/app/oracle

[root@proddb02 ~]# chmod -R 775 /u01/

[root@proddb02 ~]# mkdir -p /u01/app/oracle/product/18.5.0/dbhome_1

[root@proddb02 ~]# chown oracle:oinstall /u01/app/oracle/product/18.5.0/dbhome_1

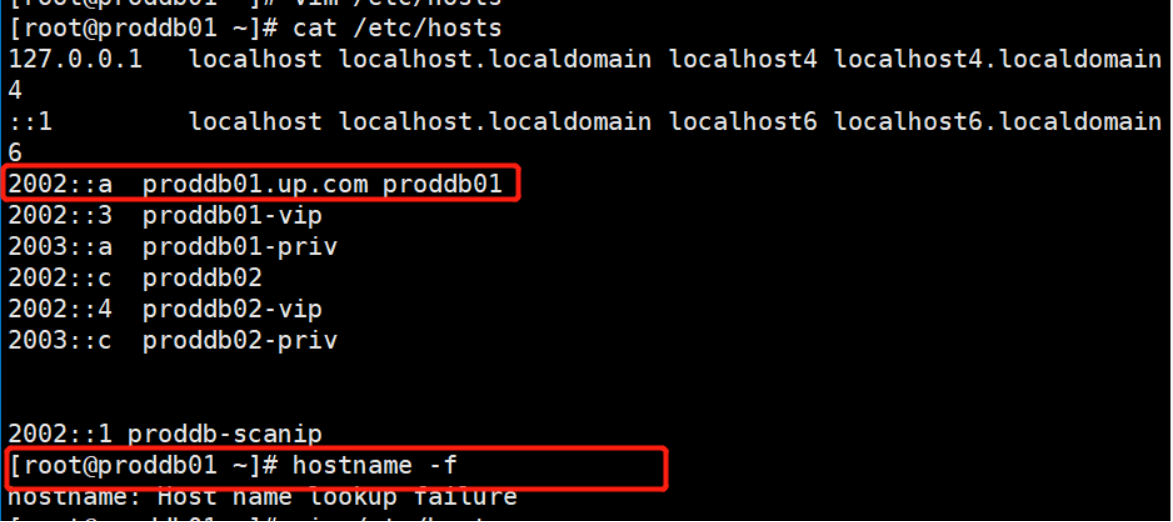

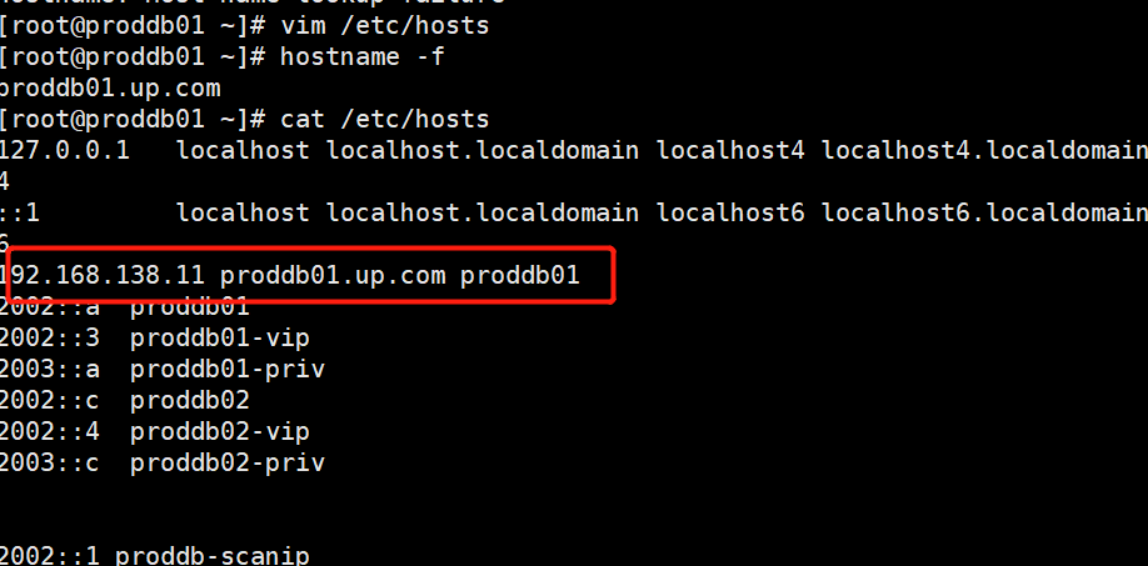

5. Configure host names in /etc/hosts

[root@proddb01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.138.44 proddb01

2002::a proddb01

2002::3 proddb01-vip

2003::a proddb01-priv

2002::c proddb02

2002::4 proddb02-vip

2003::c proddb02-priv

2002::1 proddb-scanip

[root@proddb02 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.138.45 proddb02

2002::a proddb01

2002::3 proddb01-vip

2003::a proddb01-priv

2002::c proddb02

2002::4 proddb02-vip

2003::c proddb02-priv

2002::1 proddb-scanip
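Both hosts files must contain every public, VIP, private, and SCAN name. A small check along the following lines can confirm that on each node. It is a sketch only: `check_hosts` is a hypothetical helper, and it assumes the standard hosts layout of an address followed by one or more names.

```shell
# check_hosts FILE NAME...: hypothetical helper. Reports every NAME that has
# no entry in FILE and returns non-zero if any name is missing.
check_hosts() {
    local file="$1"
    shift
    local name missing=0
    for name in "$@"; do
        # Skip comment lines; compare the name against each field after the address.
        if ! awk -v n="$name" '
                /^[[:space:]]*#/ { next }
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit (found ? 0 : 1) }' "$file"; then
            echo "missing from $file: $name"
            missing=1
        fi
    done
    return $missing
}
```

A typical call would be `check_hosts /etc/hosts proddb01 proddb01-vip proddb01-priv proddb02 proddb02-vip proddb02-priv proddb-scanip`. Names are compared as whole fields, so `proddb01` does not match `proddb01-vip`.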

6. Check the nsswitch.conf configuration

On all nodes, change the hosts line so that it reads as follows:

[root@proddb01 ~]# cat /etc/nsswitch.conf | grep ^hosts

hosts: files dns

[root@proddb02 ~]# cat /etc/nsswitch.conf | grep ^hosts

hosts: files dns

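7. Set kernel parameters

The values below were used on VMware virtual machines and may differ from what production needs; size production values according to the workload.

The parameters are set by running a script.

Save the following as sysctl.sh, upload it to each RAC node, and run it there: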

# configure system kernel parameters

if grep -q 'kernel.shmall' /etc/sysctl.conf

then

sed -i.bak '/kernel.shmall/c kernel.shmall = 2097152' /etc/sysctl.conf

else

echo "kernel.shmall = 2097152" >> /etc/sysctl.conf

fi

if grep -q 'kernel.shmmax' /etc/sysctl.conf

then

sed -i '/shmmax/c kernel.shmmax = 4096000000' /etc/sysctl.conf

else

echo "kernel.shmmax = 4096000000" >> /etc/sysctl.conf

fi

if grep -q 'kernel.shmmni' /etc/sysctl.conf

then

sed -i '/shmmni/c kernel.shmmni = 4096' /etc/sysctl.conf

else

echo "kernel.shmmni = 4096" >> /etc/sysctl.conf

fi

if grep -q 'kernel.sem' /etc/sysctl.conf

then

sed -i '/kernel.sem/c kernel.sem = 250 32000 100 128' /etc/sysctl.conf

else

echo "kernel.sem = 250 32000 100 128" >> /etc/sysctl.conf

fi

if grep -q 'fs.file' /etc/sysctl.conf

then

sed -i '/fs.file/c fs.file-max = 6815744' /etc/sysctl.conf

else

echo "fs.file-max = 6815744" >> /etc/sysctl.conf

fi

if grep -q 'ip_local_port_range' /etc/sysctl.conf

then

sed -i '/port_range/c net.ipv4.ip_local_port_range = 9000 65500' /etc/sysctl.conf

else

echo "net.ipv4.ip_local_port_range = 9000 65500" >> /etc/sysctl.conf

fi

if grep -q 'rmem_default' /etc/sysctl.conf

then

sed -i '/rmem_default/c net.core.rmem_default = 262144' /etc/sysctl.conf

else

echo "net.core.rmem_default = 262144" >> /etc/sysctl.conf

fi

if grep -q 'rmem_max' /etc/sysctl.conf

then

sed -i '/rmem_max/c net.core.rmem_max = 4194304' /etc/sysctl.conf

else

echo "net.core.rmem_max = 4194304" >> /etc/sysctl.conf

fi

if grep -q 'wmem_default' /etc/sysctl.conf

then

sed -i '/wmem_default/c net.core.wmem_default = 262144' /etc/sysctl.conf

else

echo "net.core.wmem_default = 262144" >> /etc/sysctl.conf

fi

if grep -q 'wmem_max' /etc/sysctl.conf

then

sed -i '/wmem_max/c net.core.wmem_max = 1048576' /etc/sysctl.conf

else

echo "net.core.wmem_max = 1048576" >> /etc/sysctl.conf

fi

if grep -q 'aio-max-nr' /etc/sysctl.conf

then

sed -i '/aio-max-nr/c fs.aio-max-nr = 1048576' /etc/sysctl.conf

else

echo "fs.aio-max-nr = 1048576" >> /etc/sysctl.conf

fi

sysctl -p
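The script repeats one replace-or-append pattern for every parameter. As an alternative, the pattern can be written once as a function. This is a sketch rather than the script used in this installation: `set_kernel_param` is a hypothetical name, it relies on GNU `sed -i`, and unlike the script above it leaves commented-out lines untouched.

```shell
# set_kernel_param KEY VALUE [FILE]: hypothetical helper. Replaces the
# active line for KEY in FILE if one exists, otherwise appends "KEY = VALUE".
set_kernel_param() {
    local key="$1" value="$2" file="${3:-/etc/sysctl.conf}"
    local escaped pattern
    # Escape the dots so that "kernel.sem" is matched literally.
    escaped=$(printf '%s' "$key" | sed 's/\./\\./g')
    pattern="^[[:space:]]*${escaped}[[:space:]]*="
    if grep -Eq "$pattern" "$file"; then
        sed -i -E "s|${pattern}.*|${key} = ${value}|" "$file"
    else
        echo "${key} = ${value}" >> "$file"
    fi
}
```

With this helper, each parameter becomes a single line such as `set_kernel_param kernel.sem '250 32000 100 128'`, followed by one `sysctl -p` at the end.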

8. Configure shell limits as root

Edit /etc/security/limits.conf on every RAC node and add the following lines:

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 4096

oracle hard nofile 65536

oracle soft stack 10240

oracle hard stack 32768

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 4096

grid hard nofile 65536

grid soft stack 32768

grid hard stack 32768

* soft memlock 130000000

* hard memlock 130000000

Edit /etc/security/limits.d/90-nproc.conf on every RAC node and add the following lines:

grid soft nproc 2047

grid hard nproc 16384

oracle soft nproc 2047

oracle hard nproc 16384

Edit /etc/pam.d/login on every RAC node and add the following line:

session required pam_limits.so

Edit /etc/profile on every RAC node and add the following lines for the grid and oracle users:

vi /etc/profile

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

fi

umask 022

9. Deploy the disk_scheduler script on all nodes:

cd /usr/bin

cat set_disk_scheduler.sh.txt>>set_disk_scheduler.sh

chmod +x set_disk_scheduler.sh

cat >> /etc/rc.local << e

/usr/bin/set_disk_scheduler.sh deadline

e

cat /usr/bin/set_disk_scheduler.sh

#!/bin/bash

current_user=$(whoami)

SCHEDULER_NAME=$1

lsscsi_value=$(which lsscsi)

lsscsi_value_return=$?

if [ "$current_user" != 'root' ]

then

echo 'please run as root'

elif [ -z "$SCHEDULER_NAME" ]

then

echo 'Invalid SCHEDULER_NAME,Please run it as ./set_scheduler.sh deadline/noop/anticipatory/cfq'

elif [ "$SCHEDULER_NAME" != 'noop' ] && [ "$SCHEDULER_NAME" != 'anticipatory' ] && [ "$SCHEDULER_NAME" != 'deadline' ] && [ "$SCHEDULER_NAME" != 'cfq' ]

then

echo 'Invalid SCHEDULER_NAME,Please run it as ./set_scheduler.sh deadline/noop/anticipatory/cfq'

elif [ $lsscsi_value_return -eq 1 ]

then

echo 'lsscsi RPM package is not installed,please install it'

else

for disk_name in $(lsscsi | grep disk | cut -d'/' -f3)

do

#echo $disk_name

if [ -f /sys/block/$disk_name/queue/scheduler ]

then

#echo 'scheduler exists'

is_deadline=$(cat /sys/block/$disk_name/queue/scheduler | grep "\[$SCHEDULER_NAME\]" | wc -l)

if [ $is_deadline -eq 1 ]

then

echo $disk_name "current is $SCHEDULER_NAME"

else

value_noop=$(cat /sys/block/$disk_name/queue/scheduler | awk '{print $1}' | grep '\[' | wc -l)

value_anti=$(cat /sys/block/$disk_name/queue/scheduler | awk '{print $2}' | grep '\[' | wc -l)

value_deadline=$(cat /sys/block/$disk_name/queue/scheduler | awk '{print $3}' | grep '\[' | wc -l)

value_cfq=$(cat /sys/block/$disk_name/queue/scheduler | awk '{print $4}' | grep '\[' | wc -l)

if [ $value_noop -eq 1 ]

then

echo $disk_name 'current is noop'

elif [ $value_anti -eq 1 ]

then

echo $disk_name 'current is anticipatory'

elif [ $value_deadline -eq 1 ]

then

echo $disk_name 'current is deadline'

elif [ $value_cfq -eq 1 ]

then

echo $disk_name 'current is cfq'

fi

echo $SCHEDULER_NAME > /sys/block/$disk_name/queue/scheduler

fi

else

echo 'scheduler not exists'

fi

done

fi

Save this script as /usr/bin/set_disk_scheduler.sh, then add the following line to /etc/rc.local:

/usr/bin/set_disk_scheduler.sh deadline

chmod +x /etc/rc.d/rc.local
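After a reboot it is worth confirming that the scheduler really was switched. The kernel marks the active scheduler with square brackets in the `scheduler` file, which the short sketch below extracts. `active_scheduler` is a hypothetical helper name, and the `sd*` device pattern in the usage note is an assumption about how the disks are named.

```shell
# active_scheduler FILE: hypothetical helper. Prints the name that appears in
# square brackets, e.g. "deadline" for "noop anticipatory [deadline] cfq".
active_scheduler() {
    sed -n 's/.*\[\([^]]*\)\].*/\1/p' "$1"
}
```

For example: `for f in /sys/block/sd*/queue/scheduler; do echo "$f: $(active_scheduler "$f")"; done`.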

10. Set environment variables for the grid and oracle users

Edit the grid user's .bashrc on every RAC node and add the following lines:

set -o vi

export TMP=/tmp

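export TMPDIR=$TMP

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/18.5.0/grid

export TNS_ADMIN=$ORACLE_HOME/network/admin

export PATH=$PATH:$HOME/bin:$ORACLE_HOME/bin

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/lib:/lib64:/usr/lib:/usr/lib64:/usr/local/lib:/usr/local/lib64:$ORACLE_HOME/lib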

umask 022

Edit the oracle user's .bashrc on every RAC node and add the following lines:

set -o vi

export TMP=/tmp

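export TMPDIR=$TMP

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/18.5.0/dbhome_1

export PATH=$PATH:$HOME/bin:$ORACLE_HOME/bin

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/lib:/lib64:/usr/lib:/usr/lib64:/usr/local/lib:/usr/local/lib64:$ORACLE_HOME/lib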

11. Other service settings

Run the following as root on every RAC node:

service NetworkManager stop

service auditd stop

service cpuspeed stop

service iptables stop

service ip6tables stop

chkconfig NetworkManager off

chkconfig auditd off

chkconfig cpuspeed off

chkconfig iptables off

chkconfig ip6tables off

cat >>/etc/sysconfig/network << g

NOZEROCONF=yes

g
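The stop and disable commands above follow the same pattern for every service, so they can also be expressed as a loop. The sketch below is illustrative only: `disable_services` and the `DRY_RUN` switch are hypothetical, and with `DRY_RUN=1` (the default here) the commands are printed instead of executed.

```shell
# Hypothetical sketch: stop each service and disable it at boot.
# DRY_RUN=1 only prints the commands; DRY_RUN=0 runs them (root required).
DRY_RUN=${DRY_RUN:-1}

disable_services() {
    local svc
    for svc in "$@"; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "service $svc stop"
            echo "chkconfig $svc off"
        else
            service "$svc" stop
            chkconfig "$svc" off
        fi
    done
}
```

The list used in this step would be passed as `disable_services NetworkManager auditd cpuspeed iptables ip6tables`.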

12. Prepare the software packages

Upload the archives to node 1 and unzip them there.

[grid@proddb01 grid]$ ls LINUX.X64_180000_grid_home.zip

LINUX.X64_180000_grid_home.zip

[grid@proddb01 grid]$ pwd

/u01/app/18.5.0/grid

[grid@proddb01 grid]$ unzip LINUX.X64_180000_grid_home.zip

[oracle@proddb01 ~]$ cd /u01/app/oracle/product/18.5.0/dbhome_1/

[oracle@proddb01 dbhome_1]$ ls LINUX.X64_180000_db_home.zip

LINUX.X64_180000_db_home.zip

[oracle@proddb01 dbhome_1]$ unzip LINUX.X64_180000_db_home.zip

13. Install the cvuqdisk RPM package

[root@proddb01 ~]# rpm -ivh /u01/app/18.5.0/grid/cv/rpm/cvuqdisk-1.0.10-1.rpm

Copy the package from node 1 to node 2 and install it there:

[root@proddb02 ~]# rpm -ivh cvuqdisk-1.0.10-1.rpm

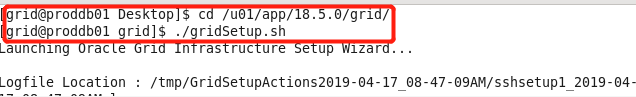

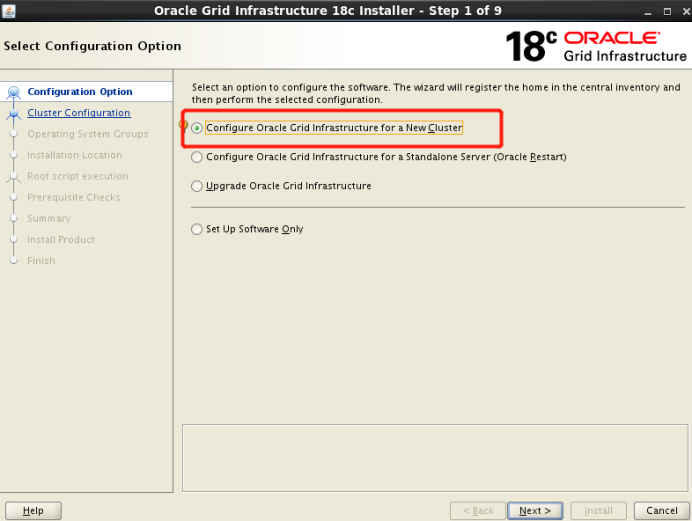

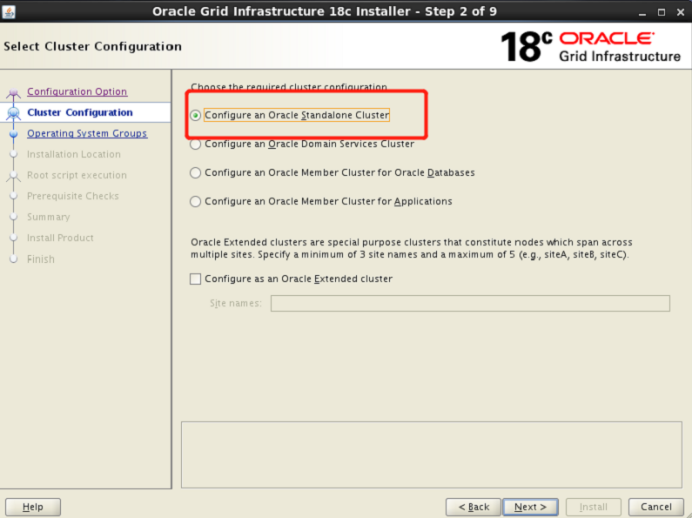

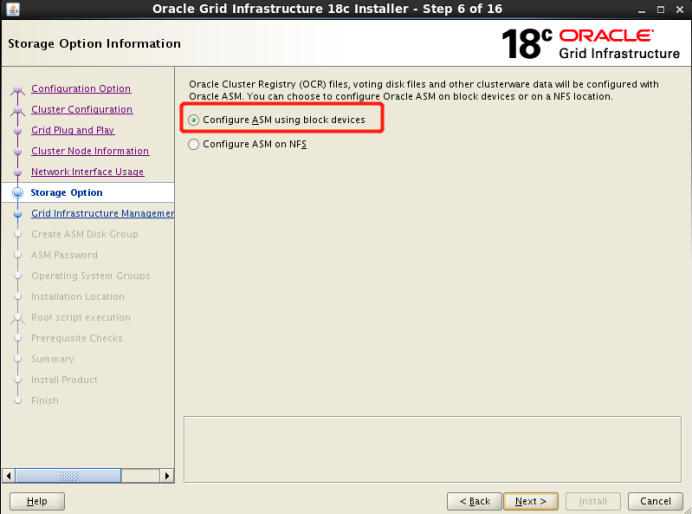

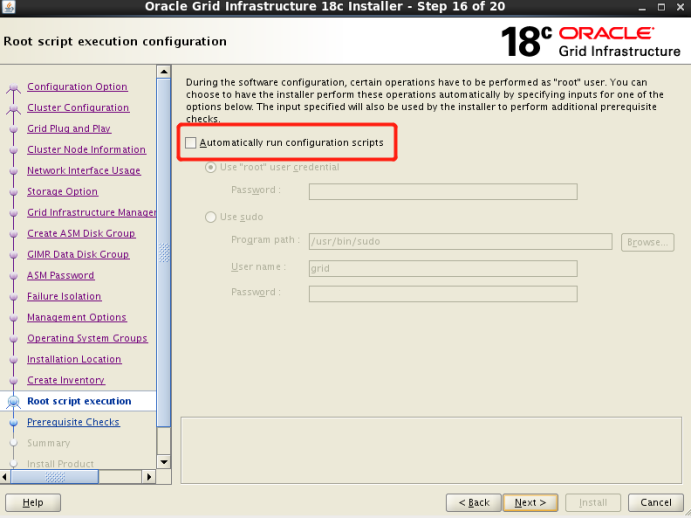

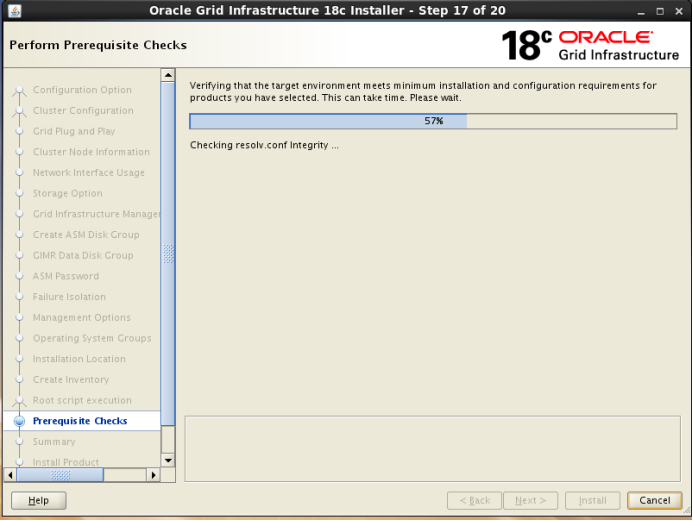

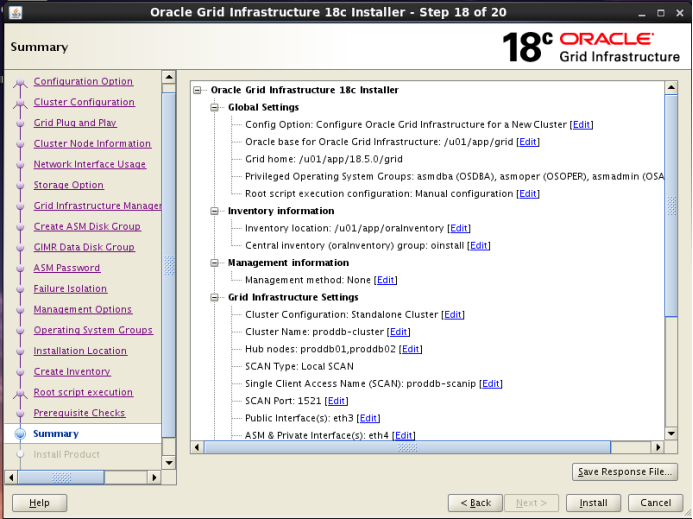

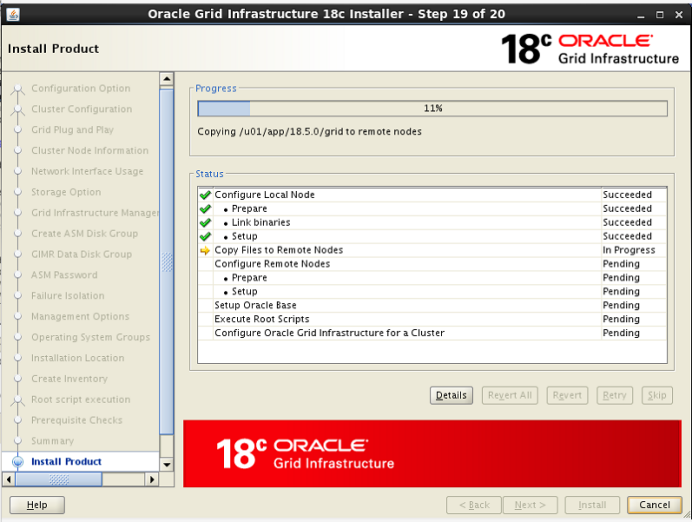

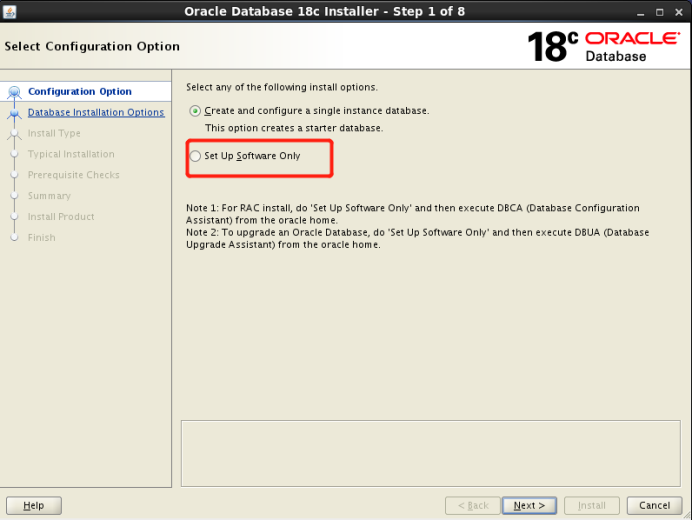

2. Install the Grid software

Run the Grid installation script in a graphical session.

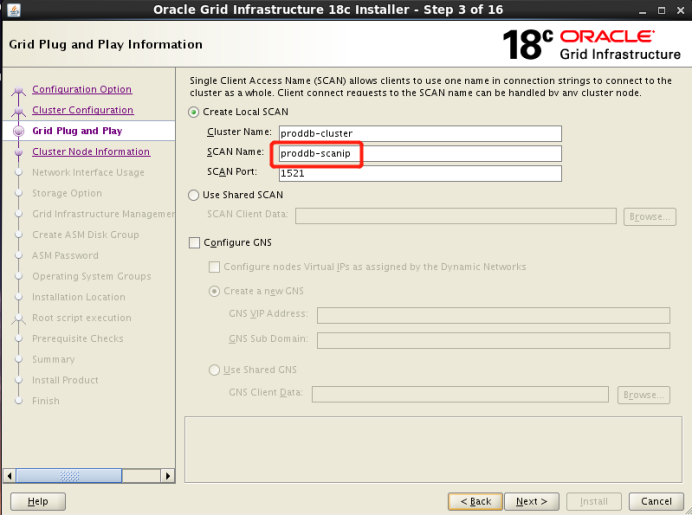

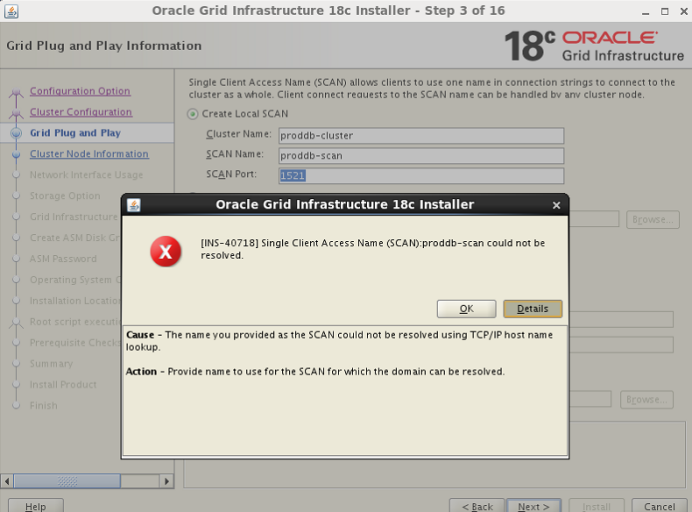

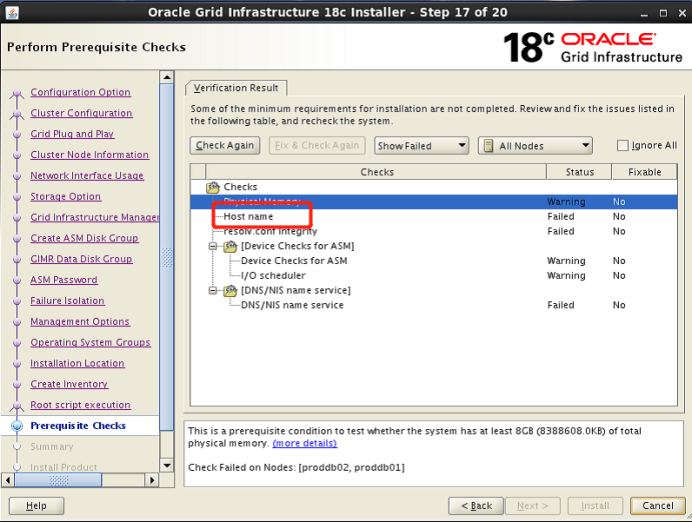

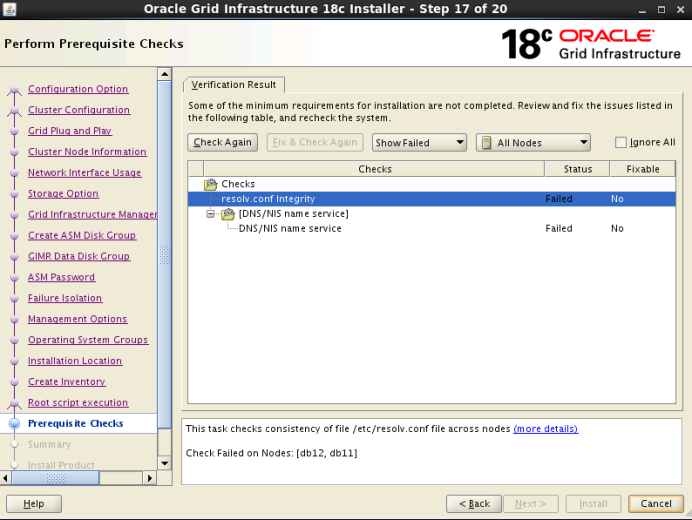

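If the error above appears after clicking Next, there are two likely causes:

1. The SCAN name in /etc/hosts on the RAC nodes does not match the SCAN Name entered in the installer.

2. The IP addresses in /etc/hosts are incorrect.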

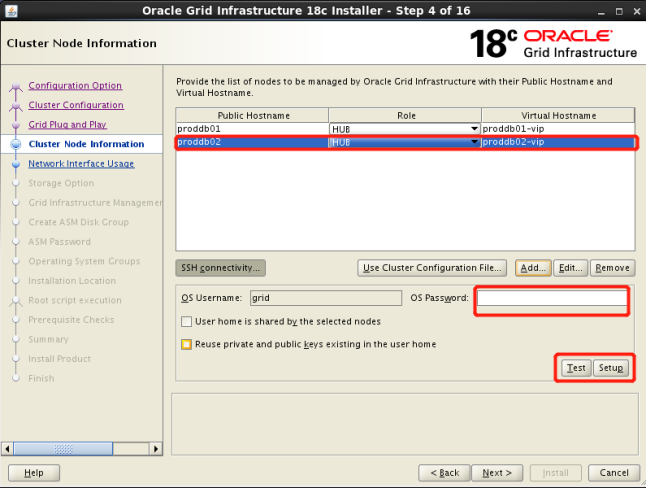

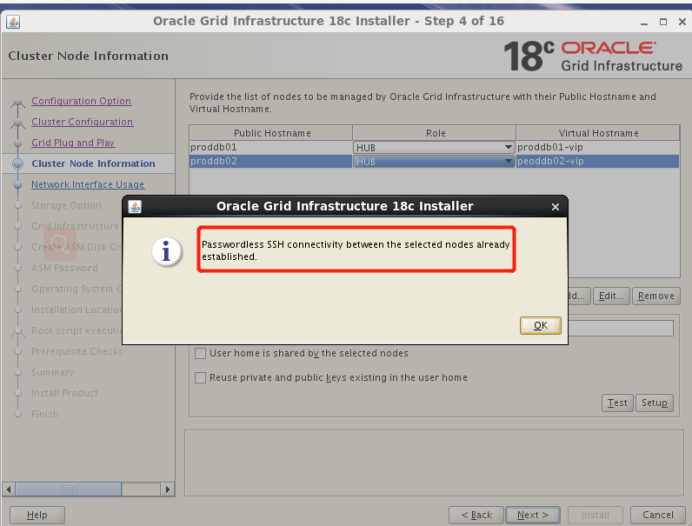

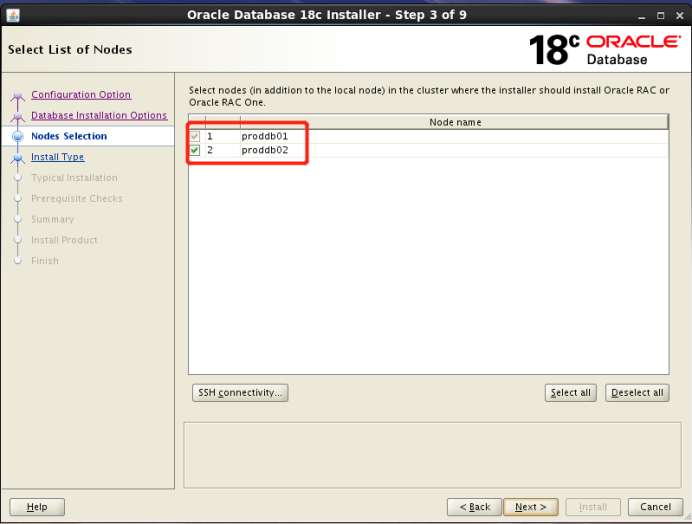

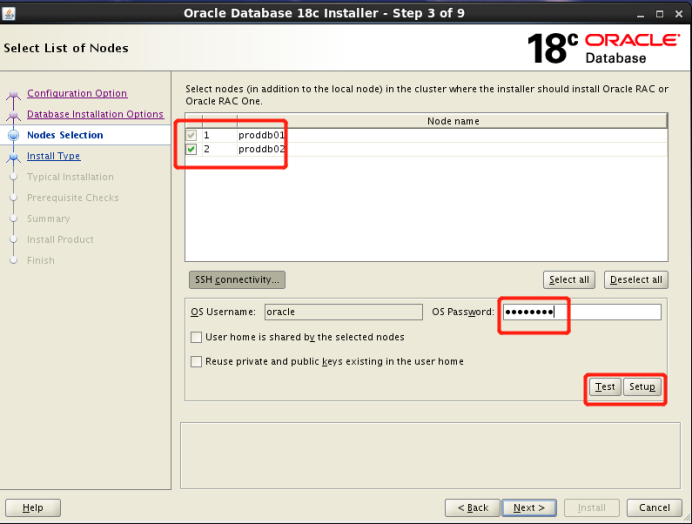

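After clicking Next, click Add and enter the public host name and VIP host name of node 2; they must match the entries in /etc/hosts.

Then click SSH connectivity and enter the grid user's password.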

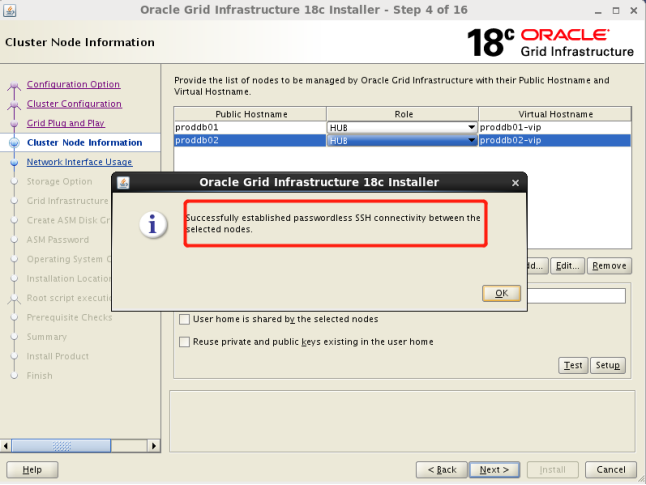

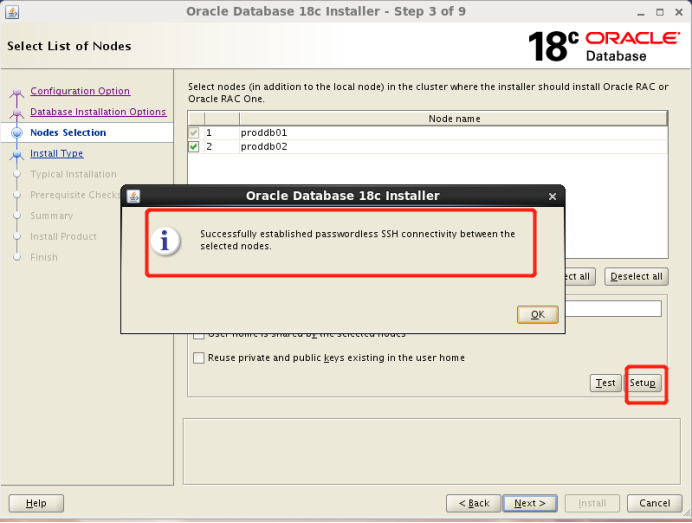

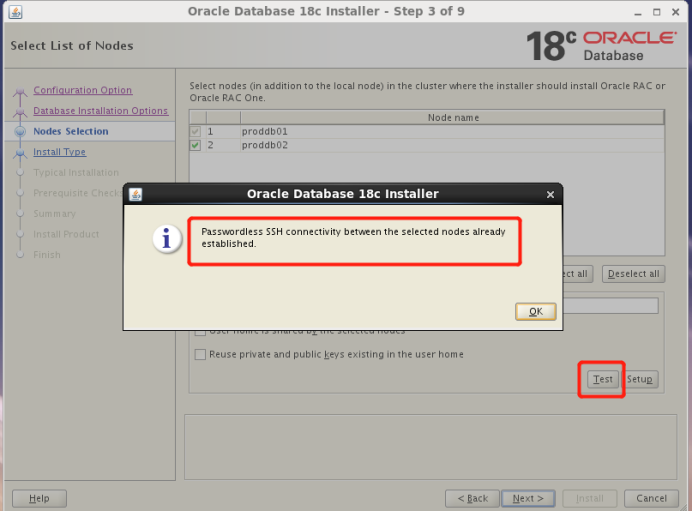

Click Setup, then Test, then Next.

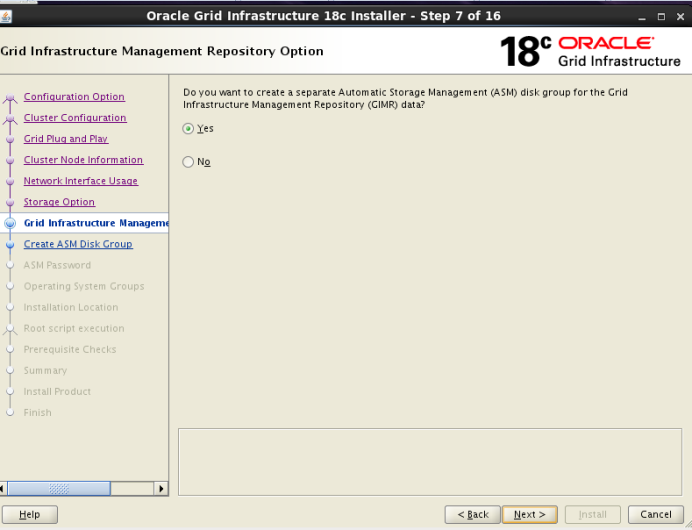

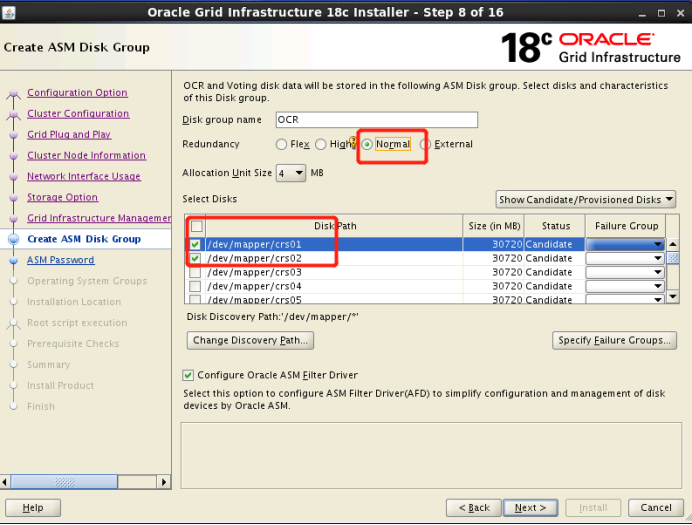

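When creating the ASM disk groups, note that from 12c onward the Grid installation also sets up MGMT; make sure the disk used for MGMT is larger than 100 GB.

To resolve this problem, add the IPv4 addresses to /etc/hosts, close the installer, and run it again.

Before and after comparison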

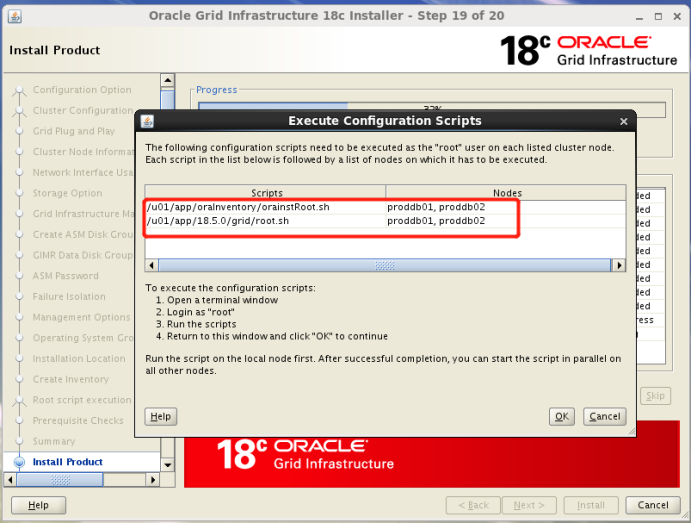

Run the scripts on nodes 1 and 2

[root@proddb01 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@proddb02 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@proddb01 ~]# /u01/app/18.5.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/18.5.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin …

Copying oraenv to /usr/local/bin …

Copying coraenv to /usr/local/bin …

Creating /etc/oratab file…

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/18.5.0/grid/crs/insta

The log of current session can be found at:

/u01/app/grid/crsdata/proddb01/crsconfig/rootcrs_proddb01_2019-0

2019/04/17 12:09:21 CLSRSC-594: Executing installation step 1 of 2

2019/04/17 12:09:21 CLSRSC-4001: Installing Oracle Trace File Anal

2019/04/17 12:09:48 CLSRSC-4002: Successfully installed Oracle Tra.

2019/04/17 12:09:48 CLSRSC-594: Executing installation step 2 of 2

2019/04/17 12:09:48 CLSRSC-363: User ignored prerequisites during

2019/04/17 12:09:48 CLSRSC-594: Executing installation step 3 of 2

2019/04/17 12:09:50 CLSRSC-594: Executing installation step 4 of 2

2019/04/17 12:09:53 CLSRSC-594: Executing installation step 5 of 2

2019/04/17 12:10:06 CLSRSC-594: Executing installation step 6 of 2

2019/04/17 12:10:06 CLSRSC-594: Executing installation step 7 of 2

2019/04/17 12:10:06 CLSRSC-594: Executing installation step 8 of 2

2019/04/17 12:10:33 CLSRSC-594: Executing installation step 9 of 2

2019/04/17 12:10:40 CLSRSC-594: Executing installation step 10 of

2019/04/17 12:10:52 CLSRSC-594: Executing installation step 11 of

2019/04/17 12:10:52 CLSRSC-594: Executing installation step 12 of

2019/04/17 12:11:01 CLSRSC-594: Executing installation step 13 of

2019/04/17 12:11:01 CLSRSC-330: Adding Clusterware entries to file

2019/04/17 12:11:39 CLSRSC-594: Executing installation step 14 of

2019/04/17 12:12:15 CLSRSC-594: Executing installation step 15 of

CRS-2791: Starting shutdown of Oracle High Availability Services-m

CRS-2793: Shutdown of Oracle High Availability Services-managed reted

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

2019/04/17 12:12:50 CLSRSC-594: Executing installation step 16 of

2019/04/17 12:12:58 CLSRSC-594: Executing installation step 17 of

CRS-2791: Starting shutdown of Oracle High Availability Services-m

CRS-2793: Shutdown of Oracle High Availability Services-managed reted

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

CRS-2672: Attempting to start ‘ora.driver.afd’ on ‘proddb01’

CRS-2672: Attempting to start ‘ora.evmd’ on ‘proddb01’

CRS-2672: Attempting to start ‘ora.mdnsd’ on ‘proddb01’

CRS-2676: Start of ‘ora.driver.afd’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.cssdmonitor’ on ‘proddb01’

CRS-2676: Start of ‘ora.cssdmonitor’ on ‘proddb01’ succeeded

CRS-2676: Start of ‘ora.evmd’ on ‘proddb01’ succeeded

CRS-2676: Start of ‘ora.mdnsd’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.gpnpd’ on ‘proddb01’

CRS-2676: Start of ‘ora.gpnpd’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.gipcd’ on ‘proddb01’

CRS-2676: Start of ‘ora.gipcd’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.cssd’ on ‘proddb01’

CRS-2672: Attempting to start ‘ora.diskmon’ on ‘proddb01’

CRS-2676: Start of ‘ora.diskmon’ on ‘proddb01’ succeeded

CRS-2676: Start of ‘ora.cssd’ on ‘proddb01’ succeeded

[INFO] [DBT-30161] Disk label(s) created successfully. Check /u01/-190417PM121339.log for details.

[INFO] [DBT-30001] Disk groups created successfully. Check /u01/ap90417PM121339.log for details.

2019/04/17 12:17:25 CLSRSC-482: Running command: ‘/u01/app/18.5.0/ oinstall’

CRS-2672: Attempting to start ‘ora.crf’ on ‘proddb01’

CRS-2672: Attempting to start ‘ora.storage’ on ‘proddb01’

CRS-2676: Start of ‘ora.crf’ on ‘proddb01’ succeeded

CRS-2676: Start of ‘ora.storage’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.crsd’ on ‘proddb01’

CRS-2676: Start of ‘ora.crsd’ on ‘proddb01’ succeeded

CRS-4256: Updating the profile

Successful addition of voting disk bcaaa013fbed4f46bff80115a6692b8

Successfully replaced voting disk group with +ocr.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

STATE File Universal Id File Name Disk group

- ONLINE bcaaa013fbed4f46bff80115a6692b8a (AFD:OCR1) [OCR]

Located 1 voting disk(s).

CRS-2791: Starting shutdown of Oracle High Availability Services-m

CRS-2673: Attempting to stop ‘ora.crsd’ on ‘proddb01’

CRS-2677: Stop of ‘ora.crsd’ on ‘proddb01’ succeeded

CRS-2673: Attempting to stop ‘ora.storage’ on ‘proddb01’

CRS-2673: Attempting to stop ‘ora.crf’ on ‘proddb01’

CRS-2673: Attempting to stop ‘ora.drivers.acfs’ on ‘proddb01’

CRS-2673: Attempting to stop ‘ora.mdnsd’ on ‘proddb01’

CRS-2677: Stop of ‘ora.storage’ on ‘proddb01’ succeeded

CRS-2673: Attempting to stop ‘ora.asm’ on ‘proddb01’

CRS-2677: Stop of ‘ora.drivers.acfs’ on ‘proddb01’ succeeded

CRS-2677: Stop of ‘ora.mdnsd’ on ‘proddb01’ succeeded

CRS-2677: Stop of ‘ora.asm’ on ‘proddb01’ succeeded

CRS-2673: Attempting to stop ‘ora.cluster_interconnect.haip’ on 'p

CRS-2677: Stop of ‘ora.cluster_interconnect.haip’ on ‘proddb01’ su

CRS-2673: Attempting to stop ‘ora.ctssd’ on ‘proddb01’

CRS-2673: Attempting to stop ‘ora.evmd’ on ‘proddb01’

CRS-2677: Stop of ‘ora.ctssd’ on ‘proddb01’ succeeded

CRS-2677: Stop of ‘ora.evmd’ on ‘proddb01’ succeeded

CRS-2673: Attempting to stop ‘ora.cssd’ on ‘proddb01’

CRS-2677: Stop of ‘ora.cssd’ on ‘proddb01’ succeeded

CRS-2673: Attempting to stop ‘ora.driver.afd’ on ‘proddb01’

CRS-2673: Attempting to stop ‘ora.gpnpd’ on ‘proddb01’

CRS-2677: Stop of ‘ora.driver.afd’ on ‘proddb01’ succeeded

CRS-2677: Stop of ‘ora.gpnpd’ on ‘proddb01’ succeeded

CRS-2677: Stop of ‘ora.crf’ on ‘proddb01’ succeeded

CRS-2673: Attempting to stop ‘ora.gipcd’ on ‘proddb01’

CRS-2677: Stop of ‘ora.gipcd’ on ‘proddb01’ succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed reted

CRS-4133: Oracle High Availability Services has been stopped.

2019/04/17 12:23:28 CLSRSC-594: Executing installation step 18 of

CRS-4123: Starting Oracle High Availability Services-managed resou

CRS-2672: Attempting to start ‘ora.evmd’ on ‘proddb01’

CRS-2672: Attempting to start ‘ora.mdnsd’ on ‘proddb01’

CRS-2676: Start of ‘ora.evmd’ on ‘proddb01’ succeeded

CRS-2676: Start of ‘ora.mdnsd’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.gpnpd’ on ‘proddb01’

CRS-2676: Start of ‘ora.gpnpd’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.gipcd’ on ‘proddb01’

CRS-2676: Start of ‘ora.gipcd’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.cssdmonitor’ on ‘proddb01’

CRS-2676: Start of ‘ora.cssdmonitor’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.crf’ on ‘proddb01’

CRS-2672: Attempting to start ‘ora.cssd’ on ‘proddb01’

CRS-2672: Attempting to start ‘ora.diskmon’ on ‘proddb01’

CRS-2676: Start of ‘ora.diskmon’ on ‘proddb01’ succeeded

CRS-2676: Start of ‘ora.crf’ on ‘proddb01’ succeeded

CRS-2676: Start of ‘ora.cssd’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.cluster_interconnect.haip’ on ’

CRS-2672: Attempting to start ‘ora.ctssd’ on ‘proddb01’

CRS-2676: Start of ‘ora.cluster_interconnect.haip’ on ‘proddb01’ s

CRS-2676: Start of ‘ora.ctssd’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.asm’ on ‘proddb01’

CRS-2676: Start of ‘ora.asm’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.storage’ on ‘proddb01’

CRS-2676: Start of ‘ora.storage’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.crsd’ on ‘proddb01’

CRS-2676: Start of ‘ora.crsd’ on ‘proddb01’ succeeded

CRS-6023: Starting Oracle Cluster Ready Services-managed resources

CRS-6017: Processing resource auto-start for servers: proddb01

CRS-6016: Resource auto-start has completed for server proddb01

CRS-6024: Completed start of Oracle Cluster Ready Services-managed

CRS-4123: Oracle High Availability Services has been started.

2019/04/17 12:26:08 CLSRSC-343: Successfully started Oracle Cluste

2019/04/17 12:26:08 CLSRSC-594: Executing installation step 19 of

CRS-2672: Attempting to start ‘ora.ASMNET1LSNR_ASM.lsnr’ on 'prodd

CRS-2676: Start of ‘ora.ASMNET1LSNR_ASM.lsnr’ on ‘proddb01’ succee

CRS-2672: Attempting to start ‘ora.asm’ on ‘proddb01’

CRS-2676: Start of ‘ora.asm’ on ‘proddb01’ succeeded

CRS-2672: Attempting to start ‘ora.OCR.dg’ on ‘proddb01’

CRS-2676: Start of ‘ora.OCR.dg’ on ‘proddb01’ succeeded

2019/04/17 12:31:10 CLSRSC-594: Executing installation step 20 of

[INFO] [DBT-30161] Disk label(s) created successfully. Check /u01/-190417PM123127.log for details.

[INFO] [DBT-30001] Disk groups created successfully. Check /u01/ap90417PM123127.log for details.

2019/04/17 12:38:52 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster … succeeded

[root@proddb01 ~]#

[root@proddb02 network-scripts]# /u01/app/18.5.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/18.5.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin …

Copying oraenv to /usr/local/bin …

Copying coraenv to /usr/local/bin …

Creating /etc/oratab file…

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/18.5.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/proddb02/crsconfig/rootcrs_proddb02_2019-04-17_12-49-40AM.log

2019/04/17 12:49:50 CLSRSC-594: Executing installation step 1 of 20: ‘SetupTFA’.

2019/04/17 12:49:50 CLSRSC-4001: Installing Oracle Trace File Analyzer (TFA) Collector.

2019/04/17 12:50:17 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2019/04/17 12:50:17 CLSRSC-594: Executing installation step 2 of 20: ‘ValidateEnv’.

2019/04/17 12:50:17 CLSRSC-363: User ignored prerequisites during installation

2019/04/17 12:50:17 CLSRSC-594: Executing installation step 3 of 20: ‘CheckFirstNode’.

2019/04/17 12:50:18 CLSRSC-594: Executing installation step 4 of 20: ‘GenSiteGUIDs’.

2019/04/17 12:50:18 CLSRSC-594: Executing installation step 5 of 20: ‘SaveParamFile’.

2019/04/17 12:50:21 CLSRSC-594: Executing installation step 6 of 20: ‘SetupOSD’.

2019/04/17 12:50:21 CLSRSC-594: Executing installation step 7 of 20: ‘CheckCRSConfig’.

2019/04/17 12:50:22 CLSRSC-594: Executing installation step 8 of 20: ‘SetupLocalGPNP’.

2019/04/17 12:50:25 CLSRSC-594: Executing installation step 9 of 20: ‘CreateRootCert’.

2019/04/17 12:50:25 CLSRSC-594: Executing installation step 10 of 20: ‘ConfigOLR’.

2019/04/17 12:50:28 CLSRSC-594: Executing installation step 11 of 20: ‘ConfigCHMOS’.

2019/04/17 12:50:28 CLSRSC-594: Executing installation step 12 of 20: ‘CreateOHASD’.

2019/04/17 12:50:30 CLSRSC-594: Executing installation step 13 of 20: ‘ConfigOHASD’.

2019/04/17 12:50:30 CLSRSC-330: Adding Clusterware entries to file ‘oracle-ohasd.conf’

2019/04/17 12:50:57 CLSRSC-594: Executing installation step 14 of 20: ‘InstallAFD’.

2019/04/17 12:51:24 CLSRSC-594: Executing installation step 15 of 20: ‘InstallACFS’.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on ‘proddb02’

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on ‘proddb02’ has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

2019/04/17 12:51:50 CLSRSC-594: Executing installation step 16 of 20: ‘InstallKA’.

2019/04/17 12:51:51 CLSRSC-594: Executing installation step 17 of 20: ‘InitConfig’.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on ‘proddb02’

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on ‘proddb02’ has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on ‘proddb02’

CRS-2673: Attempting to stop ‘ora.drivers.acfs’ on ‘proddb02’

CRS-2677: Stop of ‘ora.drivers.acfs’ on ‘proddb02’ succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on ‘proddb02’ has completed

CRS-4133: Oracle High Availability Services has been stopped.

2019/04/17 12:52:02 CLSRSC-594: Executing installation step 18 of 20: ‘StartCluster’.

CRS-4123: Starting Oracle High Availability Services-managed resources

CRS-2672: Attempting to start ‘ora.evmd’ on ‘proddb02’

CRS-2672: Attempting to start ‘ora.mdnsd’ on ‘proddb02’

CRS-2676: Start of ‘ora.evmd’ on ‘proddb02’ succeeded

CRS-2676: Start of ‘ora.mdnsd’ on ‘proddb02’ succeeded

CRS-2672: Attempting to start ‘ora.gpnpd’ on ‘proddb02’

CRS-2676: Start of ‘ora.gpnpd’ on ‘proddb02’ succeeded

CRS-2672: Attempting to start ‘ora.gipcd’ on ‘proddb02’

CRS-2676: Start of ‘ora.gipcd’ on ‘proddb02’ succeeded

CRS-2672: Attempting to start ‘ora.cssdmonitor’ on ‘proddb02’

CRS-2676: Start of ‘ora.cssdmonitor’ on ‘proddb02’ succeeded

CRS-2672: Attempting to start ‘ora.crf’ on ‘proddb02’

CRS-2672: Attempting to start ‘ora.cssd’ on ‘proddb02’

CRS-2672: Attempting to start ‘ora.diskmon’ on ‘proddb02’

CRS-2676: Start of ‘ora.diskmon’ on ‘proddb02’ succeeded

CRS-2676: Start of ‘ora.crf’ on ‘proddb02’ succeeded

CRS-2676: Start of ‘ora.cssd’ on ‘proddb02’ succeeded

CRS-2672: Attempting to start ‘ora.cluster_interconnect.haip’ on ‘proddb02’

CRS-2672: Attempting to start ‘ora.ctssd’ on ‘proddb02’

CRS-2676: Start of ‘ora.cluster_interconnect.haip’ on ‘proddb02’ succeeded

CRS-2676: Start of ‘ora.ctssd’ on ‘proddb02’ succeeded

CRS-2672: Attempting to start ‘ora.asm’ on ‘proddb02’

CRS-2672: Attempting to start ‘ora.crsd’ on ‘proddb02’

CRS-2676: Start of ‘ora.asm’ on ‘proddb02’ succeeded

CRS-2676: Start of ‘ora.crsd’ on ‘proddb02’ succeeded

CRS-6017: Processing resource auto-start for servers: proddb02

CRS-2672: Attempting to start ‘ora.ASMNET1LSNR_ASM.lsnr’ on ‘proddb02’

CRS-2672: Attempting to start ‘ora.ons’ on ‘proddb02’

CRS-2676: Start of ‘ora.ons’ on ‘proddb02’ succeeded

CRS-2676: Start of ‘ora.ASMNET1LSNR_ASM.lsnr’ on ‘proddb02’ succeeded

CRS-2672: Attempting to start ‘ora.asm’ on ‘proddb02’

CRS-2676: Start of ‘ora.asm’ on ‘proddb02’ succeeded

CRS-2672: Attempting to start ‘ora.proxy_advm’ on ‘proddb01’

CRS-2672: Attempting to start ‘ora.proxy_advm’ on ‘proddb02’

CRS-2676: Start of ‘ora.proxy_advm’ on ‘proddb01’ succeeded

CRS-2676: Start of ‘ora.proxy_advm’ on ‘proddb02’ succeeded

CRS-6016: Resource auto-start has completed for server proddb02

CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources

CRS-4123: Oracle High Availability Services has been started.

2019/04/17 12:58:10 CLSRSC-343: Successfully started Oracle Clusterware stack

2019/04/17 12:58:10 CLSRSC-594: Executing installation step 19 of 20: ‘ConfigNode’.

2019/04/17 12:58:36 CLSRSC-594: Executing installation step 20 of 20: ‘PostConfig’.

2019/04/17 12:59:55 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster … succeeded

When the scripts have finished, click OK so that the installer continues.

After it completes, check the cluster status:

[grid@proddb01 ~]$ crsctl stat res -t
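The table printed by this command is long, so a filter that lists only the resources that should be running but are not can help. The following is a rough sketch, not an Oracle tool: `offline_resources` is a hypothetical name, and it assumes that each resource name starts with `ora.` on its own line and that Target and State are the first two ONLINE/OFFLINE/INTERMEDIATE words on each status line.

```shell
# offline_resources: hypothetical filter. Reads "crsctl stat res -t" style
# text on stdin and prints resources whose target is ONLINE while the
# reported state is anything else.
offline_resources() {
    awk '
        /^ora\./ { name = $1; next }
        {
            target = ""; state = ""
            for (i = 1; i <= NF; i++) {
                if ($i == "ONLINE" || $i == "OFFLINE" || $i == "INTERMEDIATE") {
                    if (target == "") { target = $i }
                    else { state = $i; break }
                }
            }
            if (target == "ONLINE" && state != "" && state != "ONLINE") {
                print name ": target " target ", state " state
            }
        }'
}
```

It would be used as `crsctl stat res -t | offline_resources`; empty output means every resource with an ONLINE target is also ONLINE.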

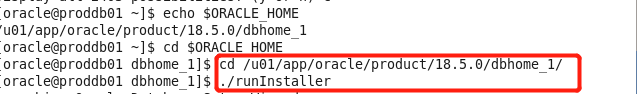

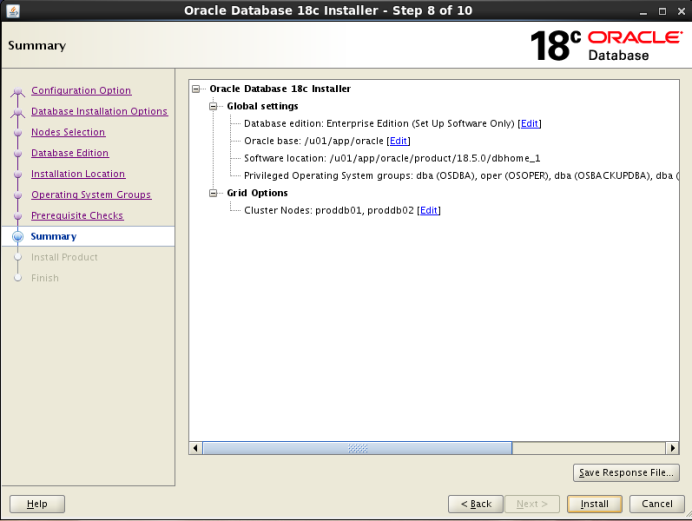

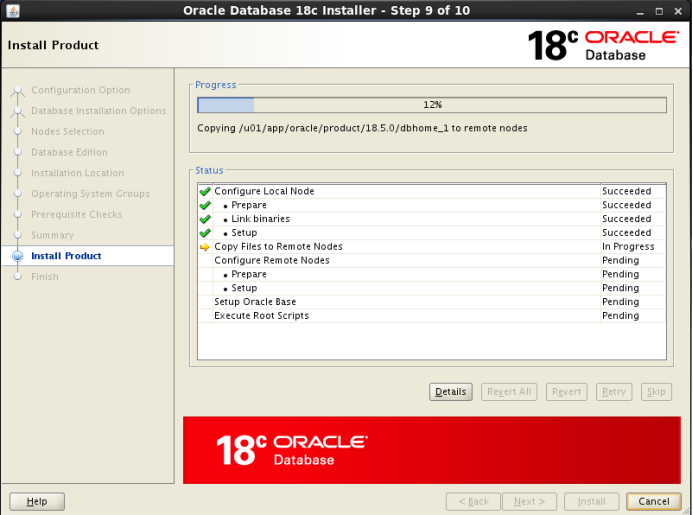

3. Install the Oracle software

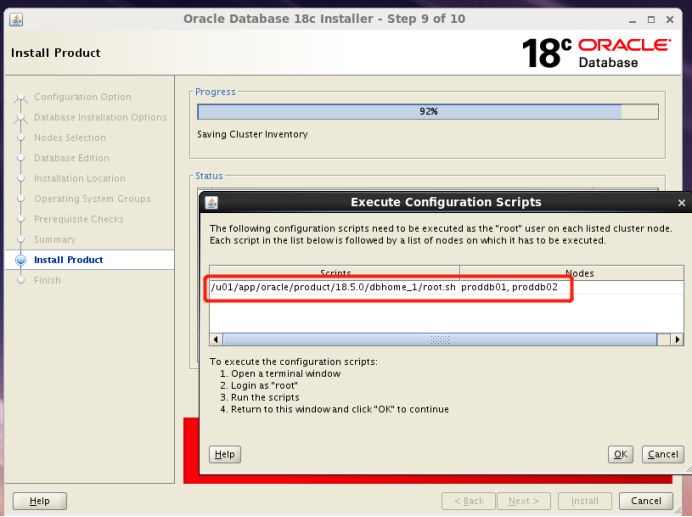

[root@proddb01 grid]# /u01/app/oracle/product/18.5.0/dbhome_1/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/18.5.0/dbhome_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

[root@proddb02 grid]# /u01/app/oracle/product/18.5.0/dbhome_1/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/18.5.0/dbhome_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

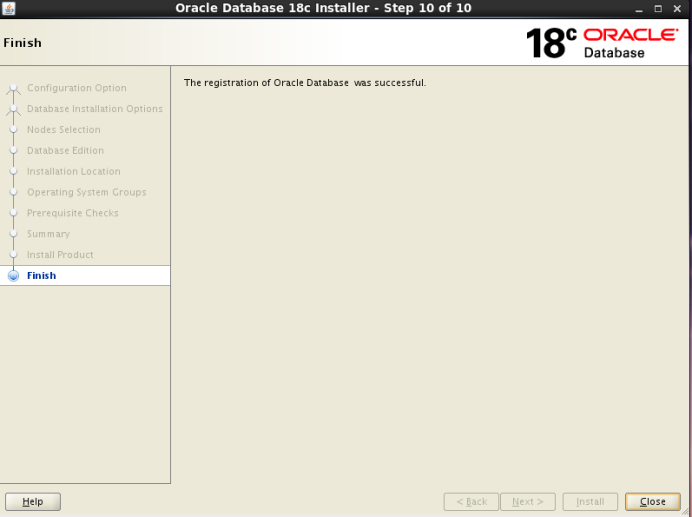

Click OK; the installation is complete.

4. Apply patches

Update OPatch

[root@proddb01 grid]# unzip p6880880_121010_Linux-x86-64.zip

[root@proddb01 ~]# cd /u01/app/18.5.0/grid/

[root@proddb01 grid]# mv OPatch OPatch_old

[root@proddb01 grid]# cp -rp /tools/OPatch ./

[root@proddb01 grid]# chmod -R 775 OPatch

[root@proddb01 grid]# chown -R grid:oinstall OPatch

[root@proddb01 grid]# cd /u01/app/oracle/product/18.5.0/dbhome_1/

[root@proddb01 dbhome_1]# mv OPatch OPatch_old

[root@proddb01 dbhome_1]# cp -rp /tools/OPatch ./

[root@proddb01 dbhome_1]# chmod -R 775 OPatch

[root@proddb01 dbhome_1]# chown -R oracle:oinstall OPatch

[root@proddb01 dbhome_1]# su - grid

[grid@proddb01 ~]$ /u01/app/18.5.0/grid/OPatch/opatch version

OPatch Version: 12.2.0.1.17

OPatch succeeded.

[grid@proddb01 ~]$ exit

logout

[root@proddb01 dbhome_1]# su - oracle

[oracle@proddb01 ~]$ /u01/app/oracle/product/18.5.0/dbhome_1/OPatch/opatch version

OPatch Version: 12.2.0.1.17

OPatch succeeded.

[root@proddb02 grid]# unzip p6880880_121010_Linux-x86-64.zip

[root@proddb02 ~]# cd /u01/app/18.5.0/grid/

[root@proddb02 grid]# mv OPatch OPatch_old

[root@proddb02 grid]# cp -rp /tools/OPatch ./

[root@proddb02 grid]# chmod -R 775 OPatch

[root@proddb02 grid]# chown -R grid:oinstall OPatch

[root@proddb02 grid]# cd /u01/app/oracle/product/18.5.0/dbhome_1/

[root@proddb02 dbhome_1]# mv OPatch OPatch_old

[root@proddb02 dbhome_1]# cp -rp /tools/OPatch ./

[root@proddb02 dbhome_1]# chmod -R 775 OPatch

[root@proddb02 dbhome_1]# chown -R oracle:oinstall OPatch

[root@proddb02 dbhome_1]# su - grid

[grid@proddb02 ~]$ /u01/app/18.5.0/grid/OPatch/opatch version

OPatch Version: 12.2.0.1.17

OPatch succeeded.

[grid@proddb02 ~]$ exit

logout

[root@proddb02 dbhome_1]# su - oracle

[oracle@proddb02 ~]$ /u01/app/oracle/product/18.5.0/dbhome_1/OPatch/opatch version

OPatch Version: 12.2.0.1.17

OPatch succeeded.
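The same four steps (back up the old OPatch directory, copy the new one, fix permissions, fix ownership) are repeated for each home on each node. A minimal sketch of that sequence as one function follows. `replace_opatch` is a hypothetical helper name, not an Oracle tool, and the refusal to overwrite an existing `OPatch_old` is an added safety assumption that the transcript does not include.

```shell
#!/usr/bin/env bash
# Sketch: swap the OPatch directory of one Oracle home for a newer copy.
# replace_opatch is a hypothetical helper, not part of OPatch itself.

# replace_opatch HOME_DIR NEW_OPATCH_DIR [OWNER:GROUP]
replace_opatch() {
    local home=$1 src=$2 owner=${3:-}
    [ -d "$src" ] || { echo "missing source: $src" >&2; return 1; }
    # Keep the old copy as OPatch_old, as the transcript does.
    # Assumption: never overwrite a backup left by an earlier run.
    if [ -d "$home/OPatch" ]; then
        [ ! -e "$home/OPatch_old" ] || { echo "backup already exists" >&2; return 1; }
        mv "$home/OPatch" "$home/OPatch_old"
    fi
    cp -rp "$src" "$home/OPatch"
    chmod -R 775 "$home/OPatch"
    # Changing ownership needs root, so it only happens when requested.
    if [ -n "$owner" ]; then
        chown -R "$owner" "$home/OPatch"
    fi
}
```

Run as root, the calls matching the transcript would be `replace_opatch /u01/app/18.5.0/grid /tools/OPatch grid:oinstall` and `replace_opatch /u01/app/oracle/product/18.5.0/dbhome_1 /tools/OPatch oracle:oinstall`, followed by `opatch version` as each owner to confirm the result.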

Start applying the grid patch on node 1

[root@proddb01 ~]# cd /tools/

[root@proddb01 tools]# unzip p28980105_180000_Linux-x86-64.zip

[root@proddb01 tools]# chown oracle.oinstall 28980105/ -R

[root@proddb01 tools]# chmod 777 28980105/

[root@proddb01 tools]# su - grid

[grid@proddb01 ~]$ /u01/app/18.5.0/grid/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /tools/28980105/28828717/28822489

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/18.5.0/grid

Central Inventory : /u01/app/oraInventory

from : /u01/app/18.5.0/grid/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/18.5.0/grid/cfgtoollogs/opatch/opatch2019-04-17_18-16-07PM_1.log

Invoking prereq “checkconflictagainstohwithdetail”

Prereq “checkConflictAgainstOHWithDetail” passed.

OPatch succeeded.

[grid@proddb01 ~]$ /u01/app/18.5.0/grid/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /tools/28980105/28828717/28864593/

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/18.5.0/grid

Central Inventory : /u01/app/oraInventory

from : /u01/app/18.5.0/grid/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/18.5.0/grid/cfgtoollogs/opatch/opatch2019-04-17_18-17-08PM_1.log

Invoking prereq “checkconflictagainstohwithdetail”

Prereq “checkConflictAgainstOHWithDetail” passed.

OPatch succeeded.

[grid@proddb01 ~]$ /u01/app/18.5.0/grid/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /tools/28980105/28828717/28864607/

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/18.5.0/grid

Central Inventory : /u01/app/oraInventory

from : /u01/app/18.5.0/grid/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/18.5.0/grid/cfgtoollogs/opatch/opatch2019-04-17_18-17-25PM_1.log

Invoking prereq “checkconflictagainstohwithdetail”

Prereq “checkConflictAgainstOHWithDetail” passed.

OPatch succeeded.

[grid@proddb01 ~]$ su - oracle

Password:

[oracle@proddb01 ~]$ /u01/app/oracle/product/18.5.0/dbhome_1/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /tools/28980105/28828717/28864593/

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/oracle/product/18.5.0/dbhome_1

Central Inventory : /u01/app/oraInventory

from : /u01/app/oracle/product/18.5.0/dbhome_1/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-17_18-18-42PM_1.log

Invoking prereq “checkconflictagainstohwithdetail”

Prereq “checkConflictAgainstOHWithDetail” passed.

OPatch succeeded.

[oracle@proddb01 ~]$ $ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /tools/28980105/28828717/28822489

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/oracle/product/18.5.0/dbhome_1

Central Inventory : /u01/app/oraInventory

from : /u01/app/oracle/product/18.5.0/dbhome_1/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-17_18-20-51PM_1.log

Invoking prereq “checkconflictagainstohwithdetail”

Prereq “checkConflictAgainstOHWithDetail” passed.

OPatch succeeded.
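The conflict checks above run the same `opatch prereq CheckConflictAgainstOHWithDetail` command once per sub-patch directory. A minimal sketch of a loop over those directories is shown below. `check_conflicts` is a hypothetical helper name, and the `OPATCH` variable is an assumption introduced so the same loop can point at either home.

```shell
#!/usr/bin/env bash
# Sketch: run the conflict prerequisite for several sub-patch directories.
# check_conflicts is a hypothetical helper. OPATCH defaults to the grid
# home used in this document and may be overridden for the database home.
OPATCH=${OPATCH:-/u01/app/18.5.0/grid/OPatch/opatch}

# check_conflicts PATCH_DIR...
# Prints PASS or FAIL per directory; returns non-zero if any check fails.
check_conflicts() {
    local dir failed=0
    for dir in "$@"; do
        if "$OPATCH" prereq CheckConflictAgainstOHWithDetail -phBaseDir "$dir"; then
            echo "PASS $dir"
        else
            echo "FAIL $dir"
            failed=1
        fi
    done
    return "$failed"
}
```

As the grid user this would be called with the three directories checked above (`28822489`, `28864593`, `28864607` under `/tools/28980105/28828717`). As the oracle user, set `OPATCH=/u01/app/oracle/product/18.5.0/dbhome_1/OPatch/opatch` and pass the two directories checked for the database home.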

[root@proddb01 ~]# vim /tmp/patch_list_gihome.txt

[root@proddb01 ~]# vim /tmp/patch_list_dbhome.txt

[root@proddb01 tools]# cat /tmp/patch_list_gihome.txt

/tools/28980105/28828717/28864593/

/tools/28980105/28828717/28822489

/tools/28980105/28828717/28864607/

[root@proddb01 tools]# cat /tmp/patch_list_dbhome.txt

/tools/28980105/28828717/28864593/

/tools/28980105/28828717/28822489
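The two list files above are typed by hand in vim. As an alternative sketch, they could be generated so that both nodes get identical content. `write_patch_list` is a hypothetical helper name, and it writes the paths without the trailing slash that some of the hand-written entries carry.

```shell
#!/usr/bin/env bash
# Sketch: write an OPatch -phBaseFile list, one patch directory per line.
# write_patch_list is a hypothetical helper name.

# write_patch_list LIST_FILE BASE_DIR PATCH_ID...
write_patch_list() {
    local file=$1 base=$2 id
    shift 2
    : > "$file"                       # create or truncate the list file
    for id in "$@"; do
        # ${base%/} drops one trailing slash so paths are joined cleanly
        printf '%s/%s\n' "${base%/}" "$id" >> "$file"
    done
}
```

For the files used here, the calls would be `write_patch_list /tmp/patch_list_gihome.txt /tools/28980105/28828717 28864593 28822489 28864607` and `write_patch_list /tmp/patch_list_dbhome.txt /tools/28980105/28828717 28864593 28822489`.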

[root@proddb01 ~]# su - grid

[grid@proddb01 ~]$ $ORACLE_HOME/OPatch/opatch prereq CheckSystemSpace -phBaseFile /tmp/patch_list_gihome.txt

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/18.5.0/grid

Central Inventory : /u01/app/oraInventory

from : /u01/app/18.5.0/grid/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/18.5.0/grid/cfgtoollogs/opatch/opatch2019-04-17_18-25-06PM_1.log

Invoking prereq “checksystemspace”

Prereq “checkSystemSpace” passed.

OPatch succeeded.

[grid@proddb01 ~]$ su - oracle

Password:

[oracle@proddb01 ~]$ $ORACLE_HOME/OPatch/opatch prereq CheckSystemSpace -phBaseFile /tmp/patch_list_dbhome.txt

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/oracle/product/18.5.0/dbhome_1

Central Inventory : /u01/app/oraInventory

from : /u01/app/oracle/product/18.5.0/dbhome_1/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-17_18-25-30PM_1.log

Invoking prereq “checksystemspace”

Prereq “checkSystemSpace” passed.

OPatch succeeded.

[oracle@proddb01 ~]$

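Apply the patch as the root user. Before the real run, execute the same command with the -analyze option to analyze the patch first.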

[root@proddb01 tools]# /u01/app/18.5.0/grid/OPatch/opatchauto apply /tools/28980105/28828717/ -oh /u01/app/18.5.0/grid/ -analyze

OPatchauto session is initiated at Fri Apr 19 15:24:56 2019

System initialization log file is /u01/app/18.5.0/grid/cfgtoollogs/opatchautodb/systemconfig2019-04-19_03-25-01PM.log.

Session log file is /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/opatchauto2019-04-19_03-25-33PM.log

The id for this session is TU5Q

Executing OPatch prereq operations to verify patch applicability on home /u01/app/18.5.0/grid

Patch applicability verified successfully on home /u01/app/18.5.0/grid

OPatchAuto successful.

--------------------------------Summary--------------------------------

Analysis for applying patches has completed successfully:

Host:proddb01

CRS Home:/u01/app/18.5.0/grid

Version:18.0.0.0.0

==Following patches were SUCCESSFULLY analyzed to be applied:

Patch: /tools/28980105/28828717/28864593

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-25-47PM_1.log

Patch: /tools/28980105/28828717/28864607

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-25-47PM_1.log

Patch: /tools/28980105/28828717/28435192

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-25-47PM_1.log

Patch: /tools/28980105/28828717/28547619

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-25-47PM_1.log

Patch: /tools/28980105/28828717/28822489

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-25-47PM_1.log

OPatchauto session completed at Fri Apr 19 15:25:57 2019

Time taken to complete the session 1 minute, 1 second

[oracle@proddb01 ~]$ sudo su -

[root@proddb01 ~]# /u01/app/18.5.0/grid/OPatch/opatchauto apply /tools/28980105/28828717/ -oh /u01/app/18.5.0/grid/

OPatchauto session is initiated at Fri Apr 19 15:33:08 2019

System initialization log file is /u01/app/18.5.0/grid/cfgtoollogs/opatchautodb/systemconfig2019-04-19_03-33-12PM.log.

Session log file is /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/opatchauto2019-04-19_03-33-44PM.log

The id for this session is XGWI

Executing OPatch prereq operations to verify patch applicability on home /u01/app/18.5.0/grid

Patch applicability verified successfully on home /u01/app/18.5.0/grid

Bringing down CRS service on home /u01/app/18.5.0/grid

CRS service brought down successfully on home /u01/app/18.5.0/grid

Start applying binary patch on home /u01/app/18.5.0/grid

Binary patch applied successfully on home /u01/app/18.5.0/grid

Starting CRS service on home /u01/app/18.5.0/grid

CRS service started successfully on home /u01/app/18.5.0/grid

OPatchAuto successful.

--------------------------------Summary--------------------------------

Patching is completed successfully. Please find the summary as follows:

Host:proddb01

CRS Home:/u01/app/18.5.0/grid

Version:18.0.0.0.0

Summary:

==Following patches were SUCCESSFULLY applied:

Patch: /tools/28980105/28828717/28435192

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-36-25PM_1.log

Patch: /tools/28980105/28828717/28547619

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-36-25PM_1.log

Patch: /tools/28980105/28828717/28822489

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-36-25PM_1.log

Patch: /tools/28980105/28828717/28864593

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-36-25PM_1.log

Patch: /tools/28980105/28828717/28864607

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-36-25PM_1.log

OPatchauto session completed at Fri Apr 19 15:43:30 2019

Time taken to complete the session 10 minutes, 23 seconds
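The node 1 run above follows a two-pass pattern: `opatchauto apply` with `-analyze`, then the same command without it. A minimal sketch that ties the two passes together, so the real apply only starts when the analysis exits successfully, is shown below. `analyze_then_apply` is a hypothetical wrapper name and the `OPATCHAUTO` variable is an assumption for illustration.

```shell
#!/usr/bin/env bash
# Sketch: run opatchauto with -analyze first, then apply only if it passed.
# analyze_then_apply is a hypothetical wrapper; OPATCHAUTO may be overridden.
OPATCHAUTO=${OPATCHAUTO:-/u01/app/18.5.0/grid/OPatch/opatchauto}

# analyze_then_apply PATCH_DIR ORACLE_HOME   (run as root)
analyze_then_apply() {
    local patch_dir=$1 home=$2
    if ! "$OPATCHAUTO" apply "$patch_dir" -oh "$home" -analyze; then
        echo "analysis failed, nothing applied" >&2
        return 1
    fi
    # Same command as the analysis pass, without -analyze.
    "$OPATCHAUTO" apply "$patch_dir" -oh "$home"
}
```

The equivalent of the transcript would be `analyze_then_apply /tools/28980105/28828717/ /u01/app/18.5.0/grid/`, run on one node at a time because the apply pass brings CRS down on the local node.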

Start applying the grid patch on node 2

[root@proddb02 ~]# cd /tools/

[root@proddb02 tools]# unzip p28980105_180000_Linux-x86-64.zip

[root@proddb02 tools]# chown oracle.oinstall 28980105/ -R

[root@proddb02 tools]# chmod 777 28980105/

[root@proddb02 tools]# su - grid

[grid@proddb02 ~]$ /u01/app/18.5.0/grid/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /tools/28980105/28828717/28864607/

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/18.5.0/grid

Central Inventory : /u01/app/oraInventory

from : /u01/app/18.5.0/grid/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/18.5.0/grid/cfgtoollogs/opatch/opatch2019-04-19_15-18-41PM_1.log

Invoking prereq “checkconflictagainstohwithdetail”

Prereq “checkConflictAgainstOHWithDetail” passed.

OPatch succeeded.

[grid@proddb02 ~]$ /u01/app/18.5.0/grid/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /tools/28980105/28828717/28822489

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/18.5.0/grid

Central Inventory : /u01/app/oraInventory

from : /u01/app/18.5.0/grid/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/18.5.0/grid/cfgtoollogs/opatch/opatch2019-04-19_15-19-03PM_1.log

Invoking prereq “checkconflictagainstohwithdetail”

Prereq “checkConflictAgainstOHWithDetail” passed.

OPatch succeeded.

[grid@proddb02 ~]$ /u01/app/18.5.0/grid/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /tools/28980105/28828717/28864593/

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/18.5.0/grid

Central Inventory : /u01/app/oraInventory

from : /u01/app/18.5.0/grid/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/18.5.0/grid/cfgtoollogs/opatch/opatch2019-04-19_15-19-15PM_1.log

Invoking prereq “checkconflictagainstohwithdetail”

Prereq “checkConflictAgainstOHWithDetail” passed.

OPatch succeeded.

[grid@proddb02 ~]$ su - oracle

Password:

[grid@proddb02 ~]$ exit

logout

[root@proddb02 dbhome_1]# su - oracle

[oracle@proddb02 ~]$ /u01/app/oracle/product/18.5.0/dbhome_1/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /tools/28980105/28828717/28864593/

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/oracle/product/18.5.0/dbhome_1

Central Inventory : /u01/app/oraInventory

from : /u01/app/oracle/product/18.5.0/dbhome_1/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-19_15-19-50PM_1.log

Invoking prereq “checkconflictagainstohwithdetail”

Prereq “checkConflictAgainstOHWithDetail” passed.

OPatch succeeded.

[oracle@proddb02 ~]$ $ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /tools/28980105/28828717/28822489

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/oracle/product/18.5.0/dbhome_1

Central Inventory : /u01/app/oraInventory

from : /u01/app/oracle/product/18.5.0/dbhome_1/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-19_15-20-32PM_1.log

Invoking prereq “checkconflictagainstohwithdetail”

Prereq “checkConflictAgainstOHWithDetail” passed.

OPatch succeeded.

[oracle@proddb02 ~]$ exit

logout

[root@proddb02 dbhome_1]# vim /tmp/patch_list_gihome.txt

[root@proddb02 dbhome_1]# vim /tmp/patch_list_dbhome.txt

[root@proddb02 dbhome_1]# cat /tmp/patch_list_gihome.txt

/tools/28980105/28828717/28864593/

/tools/28980105/28828717/28822489

/tools/28980105/28828717/28864607/

[root@proddb02 dbhome_1]# cat /tmp/patch_list_dbhome.txt

/tools/28980105/28828717/28864593/

/tools/28980105/28828717/28822489

[root@proddb02 dbhome_1]# su - grid

[grid@proddb02 ~]$ $ORACLE_HOME/OPatch/opatch prereq CheckSystemSpace -phBaseFile /tmp/patch_list_gihome.txt

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/18.5.0/grid

Central Inventory : /u01/app/oraInventory

from : /u01/app/18.5.0/grid/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/18.5.0/grid/cfgtoollogs/opatch/opatch2019-04-19_15-26-52PM_1.log

Invoking prereq “checksystemspace”

Prereq “checkSystemSpace” passed.

OPatch succeeded.

[grid@proddb02 ~]$ exit

logout

[root@proddb02 dbhome_1]# su - oracle

[oracle@proddb02 ~]$ $ORACLE_HOME/OPatch/opatch prereq CheckSystemSpace -phBaseFile /tmp/patch_list_dbhome.txt

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /u01/app/oracle/product/18.5.0/dbhome_1

Central Inventory : /u01/app/oraInventory

from : /u01/app/oracle/product/18.5.0/dbhome_1/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-19_15-27-36PM_1.log

Invoking prereq “checksystemspace”

Prereq “checkSystemSpace” passed.

OPatch succeeded.

[oracle@proddb02 ~]$ exit

logout

[root@proddb02 dbhome_1]# /u01/app/18.5.0/grid/OPatch/opatchauto apply /tools/28980105/28828717/ -oh /u01/app/18.5.0/grid/ -analyze

OPatchauto session is initiated at Fri Apr 19 15:29:30 2019

System initialization log file is /u01/app/18.5.0/grid/cfgtoollogs/opatchautodb/systemconfig2019-04-19_03-29-35PM.log.

Session log file is /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/opatchauto2019-04-19_03-30-07PM.log

The id for this session is FE46

Executing OPatch prereq operations to verify patch applicability on home /u01/app/18.5.0/grid

Patch applicability verified successfully on home /u01/app/18.5.0/grid

OPatchAuto successful.

--------------------------------Summary--------------------------------

Analysis for applying patches has completed successfully:

Host:proddb02

CRS Home:/u01/app/18.5.0/grid

Version:18.0.0.0.0

==Following patches were SUCCESSFULLY analyzed to be applied:

Patch: /tools/28980105/28828717/28864593

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-30-21PM_1.log

Patch: /tools/28980105/28828717/28864607

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-30-21PM_1.log

Patch: /tools/28980105/28828717/28435192

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-30-21PM_1.log

Patch: /tools/28980105/28828717/28547619

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-30-21PM_1.log

Patch: /tools/28980105/28828717/28822489

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-30-21PM_1.log

OPatchauto session completed at Fri Apr 19 15:30:31 2019

Time taken to complete the session 1 minute, 1 second

[root@proddb02 ~]# /u01/app/18.5.0/grid/OPatch/opatchauto apply /tools/28980105/28828717/ -oh /u01/app/18.5.0/grid/

OPatchauto session is initiated at Fri Apr 19 15:45:23 2019

System initialization log file is /u01/app/18.5.0/grid/cfgtoollogs/opatchautodb/systemconfig2019-04-19_03-45-27PM.log.

Session log file is /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/opatchauto2019-04-19_03-45-58PM.log

The id for this session is 2QY5

Executing OPatch prereq operations to verify patch applicability on home /u01/app/18.5.0/grid

Patch applicability verified successfully on home /u01/app/18.5.0/grid

Bringing down CRS service on home /u01/app/18.5.0/grid

CRS service brought down successfully on home /u01/app/18.5.0/grid

Start applying binary patch on home /u01/app/18.5.0/grid

Binary patch applied successfully on home /u01/app/18.5.0/grid

Starting CRS service on home /u01/app/18.5.0/grid

CRS service started successfully on home /u01/app/18.5.0/grid

OPatchAuto successful.

--------------------------------Summary--------------------------------

Patching is completed successfully. Please find the summary as follows:

Host:proddb02

CRS Home:/u01/app/18.5.0/grid

Version:18.0.0.0.0

Summary:

==Following patches were SUCCESSFULLY applied:

Patch: /tools/28980105/28828717/28435192

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-48-31PM_1.log

Patch: /tools/28980105/28828717/28547619

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-48-31PM_1.log

Patch: /tools/28980105/28828717/28822489

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-48-31PM_1.log

Patch: /tools/28980105/28828717/28864593

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-48-31PM_1.log

Patch: /tools/28980105/28828717/28864607

Log: /u01/app/18.5.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2019-04-19_15-48-31PM_1.log

OPatchauto session completed at Fri Apr 19 16:10:34 2019

Time taken to complete the session 25 minutes, 11 seconds

Apply the JavaVM (OJVM) patch

[root@proddb01 28980105]# chown oracle.oinstall 28790647

[root@proddb01 28980105]# su - oracle

[oracle@proddb01 ~]$ cd /tools/28980105/28790647

[oracle@proddb01 28790647]$ /u01/app/oracle/product/18.5.0/dbhome_1/OPatch/opatch apply

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

Oracle Home : /u01/app/oracle/product/18.5.0/dbhome_1

Central Inventory : /u01/app/oraInventory

from : /u01/app/oracle/product/18.5.0/dbhome_1/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-19_16-16-05PM_1.log

Verifying environment and performing prerequisite checks…

OPatch continues with these patches: 28790647

Do you want to proceed? [y|n]

y

User Responded with: Y

All checks passed.

Provide your email address to be informed of security issues, install and

initiate Oracle Configuration Manager. Easier for you if you use your My

Oracle Support Email address/User Name.

Visit http://www.oracle.com/support/policies.html for details.

Email address/User Name:

You have not provided an email address for notification of security issues.

Do you wish to remain uninformed of security issues ([Y]es, [N]o) [N]: n

Email address/User Name:

You have not provided an email address for notification of security issues.

Do you wish to remain uninformed of security issues ([Y]es, [N]o) [N]: N

Email address/User Name:

You have not provided an email address for notification of security issues.

Do you wish to remain uninformed of security issues ([Y]es, [N]o) [N]: y

Please shutdown Oracle instances running out of this ORACLE_HOME on the local system.

(Oracle Home = ‘/u01/app/oracle/product/18.5.0/dbhome_1’)

Is the local system ready for patching? [y|n]

y

User Responded with: Y

Backing up files…

Applying interim patch ‘28790647’ to OH ‘/u01/app/oracle/product/18.5.0/dbhome_1’

Patching component oracle.javavm.server, 18.0.0.0.0…

Patching component oracle.javavm.server.core, 18.0.0.0.0…

Patching component oracle.rdbms.dbscripts, 18.0.0.0.0…

Patching component oracle.rdbms, 18.0.0.0.0…

Patching component oracle.javavm.client, 18.0.0.0.0…

Patch 28790647 successfully applied.

Sub-set patch [27923415] has become inactive due to the application of a super-set patch [28790647].

Please refer to Doc ID 2161861.1 for any possible further required actions.

Log file location: /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-19_16-16-05PM_1.log

OPatch succeeded.

[root@proddb02 28980105]# chown oracle.oinstall 28790647

[root@proddb02 28980105]# su - oracle

[oracle@proddb02 ~]$ cd /tools/28980105/28790647

[oracle@proddb02 28790647]$ /u01/app/oracle/product/18.5.0/dbhome_1/OPatch/opatch apply

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

Oracle Home : /u01/app/oracle/product/18.5.0/dbhome_1

Central Inventory : /u01/app/oraInventory

from : /u01/app/oracle/product/18.5.0/dbhome_1/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-19_16-18-44PM_1.log

Verifying environment and performing prerequisite checks…

OPatch continues with these patches: 28790647

Do you want to proceed? [y|n]

y

User Responded with: Y

All checks passed.

Provide your email address to be informed of security issues, install and

initiate Oracle Configuration Manager. Easier for you if you use your My

Oracle Support Email address/User Name.

Visit http://www.oracle.com/support/policies.html for details.

Email address/User Name:

You have not provided an email address for notification of security issues.

Do you wish to remain uninformed of security issues ([Y]es, [N]o) [N]: Y

Please shutdown Oracle instances running out of this ORACLE_HOME on the local system.

(Oracle Home = ‘/u01/app/oracle/product/18.5.0/dbhome_1’)

Is the local system ready for patching? [y|n]

y

User Responded with: Y

Backing up files…

Applying interim patch ‘28790647’ to OH ‘/u01/app/oracle/product/18.5.0/dbhome_1’

Patching component oracle.javavm.server, 18.0.0.0.0…

Patching component oracle.javavm.server.core, 18.0.0.0.0…

Patching component oracle.rdbms.dbscripts, 18.0.0.0.0…

Patching component oracle.rdbms, 18.0.0.0.0…

Patching component oracle.javavm.client, 18.0.0.0.0…

Patch 28790647 successfully applied.

Sub-set patch [27923415] has become inactive due to the application of a super-set patch [28790647].

Please refer to Doc ID 2161861.1 for any possible further required actions.

Log file location: /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-19_16-18-44PM_1.log

OPatch succeeded.
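During `opatch apply`, OPatch asks whether instances running out of the Oracle home have been shut down before it proceeds. A minimal sketch of a check that could be run before answering `y` is shown below. `running_instances` is a hypothetical helper name. It relies on database pmon background processes being named `ora_pmon_<SID>`, and it reports every database instance on the host rather than only those started from one particular home.

```shell
#!/usr/bin/env bash
# Sketch: list database instances whose pmon background process is running.
# running_instances is a hypothetical helper. It reads process command
# lines on stdin, so it can be exercised without a live database.

running_instances() {
    # Database pmon processes are named ora_pmon_<SID>; print the SID part.
    # ASM instances use asm_pmon_<SID> and are deliberately not matched.
    sed -n 's/^ora_pmon_\(.*\)$/\1/p'
}

# Live usage on a node (prints nothing when no database instance is up):
#   ps -eo args= | running_instances
```

An empty result suggests no database instance is up on the node. If any SID is printed, that instance should be stopped before confirming the prompt.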

Apply the Oracle database patch

[root@proddb01 tools]# unzip p28980087_180000_Linux-x86-64.zip

[root@proddb01 tools]# chown oracle.oinstall 28980087 -R

[root@proddb01 tools]# chmod 777 28980087/

[oracle@proddb01 ~]$ cd /tools/28980087/28822489

[oracle@proddb01 28822489]$ /u01/app/oracle/product/18.5.0/dbhome_1/OPatch/opatch apply

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

Oracle Home : /u01/app/oracle/product/18.5.0/dbhome_1

Central Inventory : /u01/app/oraInventory

from : /u01/app/oracle/product/18.5.0/dbhome_1/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-19_16-29-57PM_1.log

Verifying environment and performing prerequisite checks…

OPatch continues with these patches: 28822489

Do you want to proceed? [y|n]

y

User Responded with: Y

All checks passed.

Provide your email address to be informed of security issues, install and

initiate Oracle Configuration Manager. Easier for you if you use your My

Oracle Support Email address/User Name.

Visit http://www.oracle.com/support/policies.html for details.

Email address/User Name:

You have not provided an email address for notification of security issues.

Do you wish to remain uninformed of security issues ([Y]es, [N]o) [N]: y

Please shutdown Oracle instances running out of this ORACLE_HOME on the local system.

(Oracle Home = ‘/u01/app/oracle/product/18.5.0/dbhome_1’)

Is the local system ready for patching? [y|n]

y

User Responded with: Y

Backing up files…

Applying interim patch ‘28822489’ to OH ‘/u01/app/oracle/product/18.5.0/dbhome_1’

ApplySession: Optional component(s) [ oracle.assistants.asm, 18.0.0.0.0 ] , [ oracle.net.cman, 18.0.0.0.0 ] , [ oracle.ons.daemon, 18.0.0.0.0 ] , [ oracle.tfa, 18.0.0.0.0 ] , [ oracle.crs, 18.0.0.0.0 ] , [ oracle.network.cman, 18.0.0.0.0 ] , [ oracle.assistants.usm, 18.0.0.0.0 ] , [ oracle.assistants.server.oui, 18.0.0.0.0 ] , [ oracle.has.crs, 18.0.0.0.0 ] not present in the Oracle Home or a higher version is found.

Patching component oracle.oracore.rsf, 18.0.0.0.0…

Patching component oracle.rdbms, 18.0.0.0.0…

Patching component oracle.dbjava.jdbc, 18.0.0.0.0…

Patching component oracle.dbjava.ic, 18.0.0.0.0…

Patching component oracle.network.listener, 18.0.0.0.0…

Patching component oracle.assistants.acf, 18.0.0.0.0…

Patching component oracle.rdbms.rsf.ic, 18.0.0.0.0…

Patching component oracle.server, 18.0.0.0.0…

Patching component oracle.ctx, 18.0.0.0.0…

Patching component oracle.ons, 18.0.0.0.0…

Patching component oracle.rdbms.deconfig, 18.0.0.0.0…

Patching component oracle.rdbms.util, 18.0.0.0.0…

Patching component oracle.sdo.locator.jrf, 18.0.0.0.0…

Patching component oracle.xdk.parser.java, 18.0.0.0.0…

Patching component oracle.assistants.server, 18.0.0.0.0…

Patching component oracle.rdbms.crs, 18.0.0.0.0…

Patching component oracle.rdbms.rman, 18.0.0.0.0…

Patching component oracle.xdk, 18.0.0.0.0…

Patching component oracle.ctx.atg, 18.0.0.0.0…

Patching component oracle.dbjava.ucp, 18.0.0.0.0…

Patching component oracle.install.deinstalltool, 18.0.0.0.0…

Patching component oracle.rdbms.dbscripts, 18.0.0.0.0…

Patching component oracle.rdbms.rsf, 18.0.0.0.0…

Patching component oracle.xdk.rsf, 18.0.0.0.0…

Patching component oracle.network.client, 18.0.0.0.0…

Patching component oracle.rdbms.install.plugins, 18.0.0.0.0…

Patching component oracle.sdo, 18.0.0.0.0…

Patching component oracle.rdbms.oci, 18.0.0.0.0…

Patching component oracle.ctx.rsf, 18.0.0.0.0…

Patching component oracle.oraolap.dbscripts, 18.0.0.0.0…

Patching component oracle.assistants.deconfig, 18.0.0.0.0…

Patching component oracle.nlsrtl.rsf, 18.0.0.0.0…

Patching component oracle.precomp.rsf, 18.0.0.0.0…

Patching component oracle.network.rsf, 18.0.0.0.0…

Patching component oracle.sqlplus.ic, 18.0.0.0.0…

Patching component oracle.sdo.locator, 18.0.0.0.0…

Patching component oracle.nlsrtl.rsf.core, 18.0.0.0.0…

Patching component oracle.sqlplus, 18.0.0.0.0…

Patching component oracle.javavm.client, 18.0.0.0.0…

Patching component oracle.ldap.owm, 18.0.0.0.0…

Patching component oracle.ldap.security.osdt, 18.0.0.0.0…

Patching component oracle.rdbms.install.common, 18.0.0.0.0…

Patching component oracle.precomp.lang, 18.0.0.0.0…

Patching component oracle.precomp.common, 18.0.0.0.0…

Patch 28822489 successfully applied.

Sub-set patch [28090523] has become inactive due to the application of a super-set patch [28822489].

Please refer to Doc ID 2161861.1 for any possible further required actions.

Log file location: /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-19_16-29-57PM_1.log

OPatch succeeded.

[root@proddb02 tools]# unzip p28980087_180000_Linux-x86-64.zip

[root@proddb02 tools]# chown oracle.oinstall 28980087 -R

[root@proddb02 tools]# chmod 777 28980087/

[oracle@proddb02 ~]$ cd /tools/28980087/28822489

[oracle@proddb02 28822489]$ /u01/app/oracle/product/18.5.0/dbhome_1/OPatch/opatch apply

Oracle Interim Patch Installer version 12.2.0.1.17

Copyright © 2019, Oracle Corporation. All rights reserved.

Oracle Home : /u01/app/oracle/product/18.5.0/dbhome_1

Central Inventory : /u01/app/oraInventory

from : /u01/app/oracle/product/18.5.0/dbhome_1/oraInst.loc

OPatch version : 12.2.0.1.17

OUI version : 12.2.0.4.0

Log file location : /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-19_16-32-20PM_1.log

Verifying environment and performing prerequisite checks…

OPatch continues with these patches: 28822489

Do you want to proceed? [y|n]

y

User Responded with: Y

All checks passed.

Provide your email address to be informed of security issues, install and

initiate Oracle Configuration Manager. Easier for you if you use your My

Oracle Support Email address/User Name.

Visit http://www.oracle.com/support/policies.html for details.

Email address/User Name:

You have not provided an email address for notification of security issues.

Do you wish to remain uninformed of security issues ([Y]es, [N]o) [N]: y

Please shutdown Oracle instances running out of this ORACLE_HOME on the local system.

(Oracle Home = ‘/u01/app/oracle/product/18.5.0/dbhome_1’)

Is the local system ready for patching? [y|n]

y

User Responded with: Y

Backing up files…

Applying interim patch ‘28822489’ to OH ‘/u01/app/oracle/product/18.5.0/dbhome_1’

ApplySession: Optional component(s) [ oracle.assistants.asm, 18.0.0.0.0 ] , [ oracle.net.cman, 18.0.0.0.0 ] , [ oracle.ons.daemon, 18.0.0.0.0 ] , [ oracle.tfa, 18.0.0.0.0 ] , [ oracle.crs, 18.0.0.0.0 ] , [ oracle.network.cman, 18.0.0.0.0 ] , [ oracle.assistants.usm, 18.0.0.0.0 ] , [ oracle.assistants.server.oui, 18.0.0.0.0 ] , [ oracle.has.crs, 18.0.0.0.0 ] not present in the Oracle Home or a higher version is found.

Patching component oracle.oracore.rsf, 18.0.0.0.0…

Patching component oracle.rdbms, 18.0.0.0.0…

Patching component oracle.dbjava.jdbc, 18.0.0.0.0…

Patching component oracle.dbjava.ic, 18.0.0.0.0…

Patching component oracle.network.listener, 18.0.0.0.0…

Patching component oracle.assistants.acf, 18.0.0.0.0…

Patching component oracle.rdbms.rsf.ic, 18.0.0.0.0…

Patching component oracle.server, 18.0.0.0.0…

Patching component oracle.ctx, 18.0.0.0.0…

Patching component oracle.ons, 18.0.0.0.0…

Patching component oracle.rdbms.deconfig, 18.0.0.0.0…

Patching component oracle.rdbms.util, 18.0.0.0.0…

Patching component oracle.sdo.locator.jrf, 18.0.0.0.0…

Patching component oracle.xdk.parser.java, 18.0.0.0.0…

Patching component oracle.assistants.server, 18.0.0.0.0…

Patching component oracle.rdbms.crs, 18.0.0.0.0…

Patching component oracle.rdbms.rman, 18.0.0.0.0…

Patching component oracle.xdk, 18.0.0.0.0…

Patching component oracle.ctx.atg, 18.0.0.0.0…

Patching component oracle.dbjava.ucp, 18.0.0.0.0…

Patching component oracle.install.deinstalltool, 18.0.0.0.0…

Patching component oracle.rdbms.dbscripts, 18.0.0.0.0…

Patching component oracle.rdbms.rsf, 18.0.0.0.0…

Patching component oracle.xdk.rsf, 18.0.0.0.0…

Patching component oracle.network.client, 18.0.0.0.0…

Patching component oracle.rdbms.install.plugins, 18.0.0.0.0…

Patching component oracle.sdo, 18.0.0.0.0…

Patching component oracle.rdbms.oci, 18.0.0.0.0…

Patching component oracle.ctx.rsf, 18.0.0.0.0…

Patching component oracle.oraolap.dbscripts, 18.0.0.0.0…

Patching component oracle.assistants.deconfig, 18.0.0.0.0…

Patching component oracle.nlsrtl.rsf, 18.0.0.0.0…

Patching component oracle.precomp.rsf, 18.0.0.0.0…

Patching component oracle.network.rsf, 18.0.0.0.0…

Patching component oracle.sqlplus.ic, 18.0.0.0.0…

Patching component oracle.sdo.locator, 18.0.0.0.0…

Patching component oracle.nlsrtl.rsf.core, 18.0.0.0.0…

Patching component oracle.sqlplus, 18.0.0.0.0…

Patching component oracle.javavm.client, 18.0.0.0.0…

Patching component oracle.ldap.owm, 18.0.0.0.0…

Patching component oracle.ldap.security.osdt, 18.0.0.0.0…

Patching component oracle.rdbms.install.common, 18.0.0.0.0…

Patching component oracle.precomp.lang, 18.0.0.0.0…

Patching component oracle.precomp.common, 18.0.0.0.0…

Patch 28822489 successfully applied.

Sub-set patch [28090523] has become inactive due to the application of a super-set patch [28822489].

Please refer to Doc ID 2161861.1 for any possible further required actions.

Log file location: /u01/app/oracle/product/18.5.0/dbhome_1/cfgtoollogs/opatch/opatch2019-04-19_16-32-20PM_1.log

OPatch succeeded.

Check the patch versions in the Oracle and Grid homes on both nodes

[oracle@proddb01 ~]$ /u01/app/oracle/product/18.5.0/dbhome_1/OPatch/opatch lspatches

28822489;Database Release Update : 18.5.0.0.190115 (28822489)

28790647;OJVM RELEASE UPDATE: 18.5.0.0.190115 (28790647)

27908644;UPDATE 18.3 DATABASE CLIENT JDK IN ORACLE HOME TO JDK8U171

28090553;OCW RELEASE UPDATE 18.3.0.0.0 (28090553)

OPatch succeeded.

[oracle@proddb01 ~]$ su - grid

Password:

[grid@proddb01 ~]$ /u01/app/18.5.0/grid/OPatch/opatch lspatches

28864607;ACFS RELEASE UPDATE 18.5.0.0.0 (28864607)

28864593;OCW RELEASE UPDATE 18.5.0.0.0 (28864593)

28822489;Database Release Update : 18.5.0.0.190115 (28822489)

28547619;TOMCAT RELEASE UPDATE 18.0.0.0.0 (28547619)

28435192;DBWLM RELEASE UPDATE 18.0.0.0.0 (28435192)

27908644;UPDATE 18.3 DATABASE CLIENT JDK IN ORACLE HOME TO JDK8U171

27923415;OJVM RELEASE UPDATE: 18.3.0.0.180717 (27923415)

OPatch succeeded.

[root@proddb02 28980087]# su - oracle

[oracle@proddb02 ~]$ /u01/app/oracle/product/18.5.0/dbhome_1/OPatch/opatch lspatches

28822489;Database Release Update : 18.5.0.0.190115 (28822489)

28790647;OJVM RELEASE UPDATE: 18.5.0.0.190115 (28790647)

27908644;UPDATE 18.3 DATABASE CLIENT JDK IN ORACLE HOME TO JDK8U171

28090553;OCW RELEASE UPDATE 18.3.0.0.0 (28090553)

OPatch succeeded.

[oracle@proddb02 ~]$ su - grid

Password:

[grid@proddb02 ~]$ /u01/app/18.5.0/grid/OPatch/opatch lspatches

28864607;ACFS RELEASE UPDATE 18.5.0.0.0 (28864607)

28864593;OCW RELEASE UPDATE 18.5.0.0.0 (28864593)

28822489;Database Release Update : 18.5.0.0.190115 (28822489)

28547619;TOMCAT RELEASE UPDATE 18.0.0.0.0 (28547619)

28435192;DBWLM RELEASE UPDATE 18.0.0.0.0 (28435192)

27908644;UPDATE 18.3 DATABASE CLIENT JDK IN ORACLE HOME TO JDK8U171

27923415;OJVM RELEASE UPDATE: 18.3.0.0.180717 (27923415)

OPatch succeeded.
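The four listings above are compared by eye. As a minimal sketch, assuming the `opatch lspatches` output of each home has been saved to a text file, the patch IDs can be extracted and compared between nodes with a small POSIX shell helper. The function names `patch_ids` and `same_patches` and the file names in the usage note are hypothetical and not part of OPatch.

```shell
# Extract patch IDs from `opatch lspatches` output read on stdin.
# Patch lines look like "<id>;<description>"; blank lines and the
# trailing "OPatch succeeded." line are ignored.
patch_ids() {
    awk -F';' '/^[0-9]+;/ { print $1 }' | sort -u
}

# Return 0 when two saved lspatches outputs list the same set of
# patch IDs, non-zero otherwise. Arguments are file paths.
same_patches() {
    [ "$(patch_ids < "$1")" = "$(patch_ids < "$2")" ]
}
```

For example, after running `opatch lspatches > /tmp/db_proddb01.txt` on node 1 and the equivalent on node 2 (file names are assumptions), `same_patches /tmp/db_proddb01.txt /tmp/db_proddb02.txt && echo "patch levels match"` reports whether the two database homes carry the same patches. The same check can be repeated for the Grid homes.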

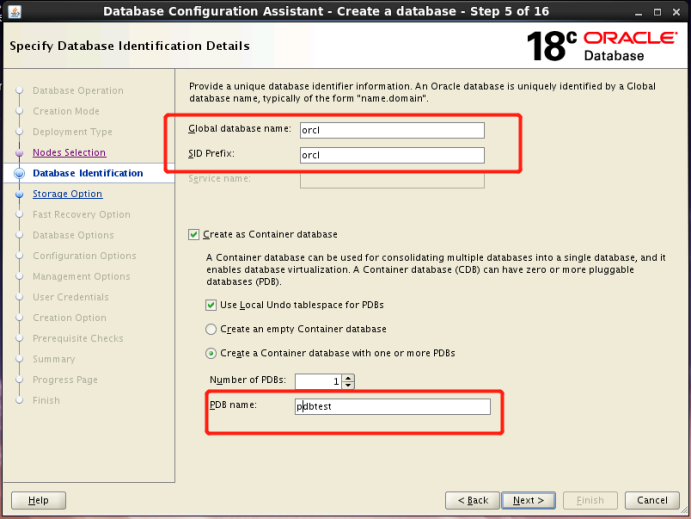

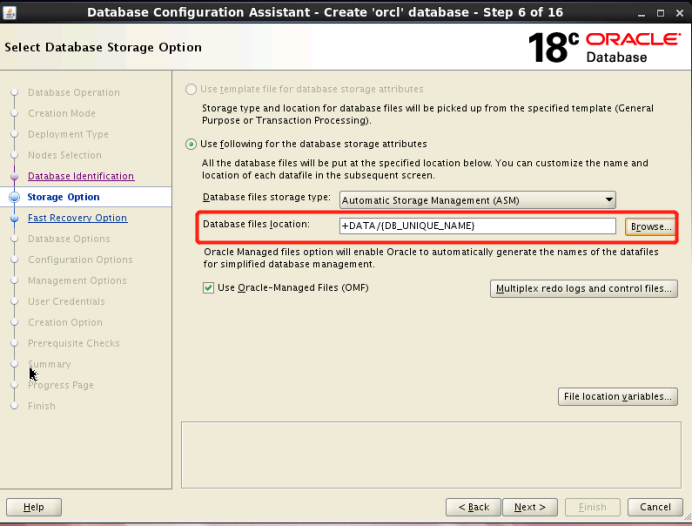

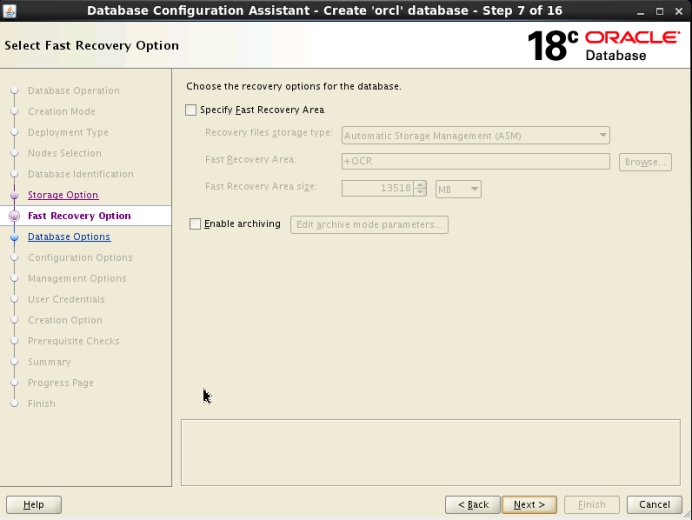

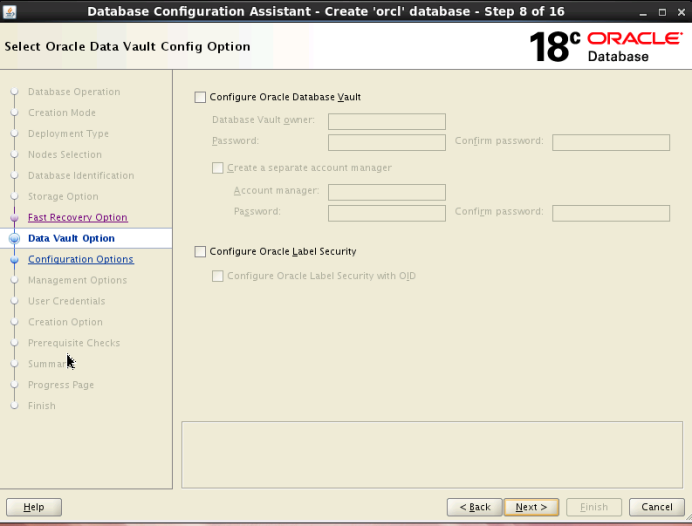

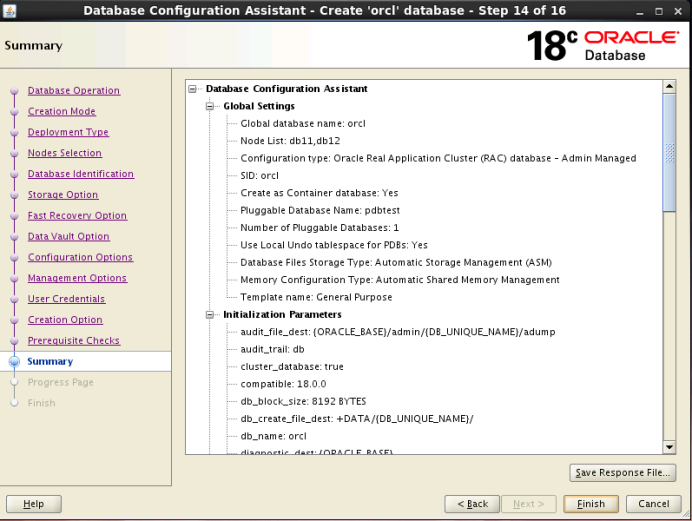

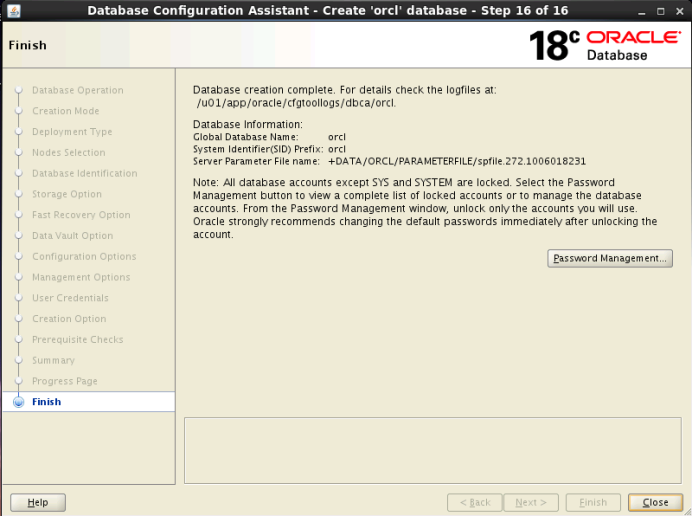

5. Create the database instance

1. Create the ASM disk groups

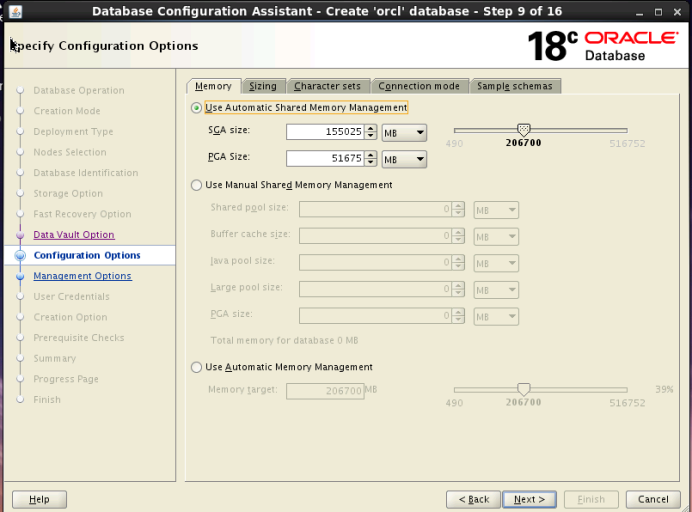

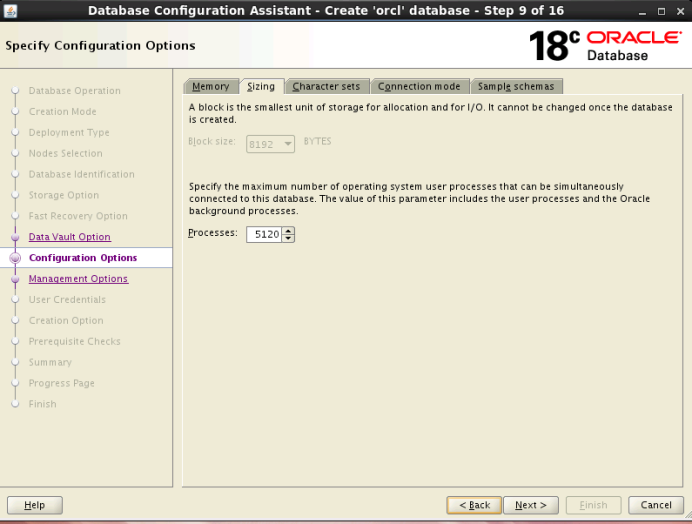

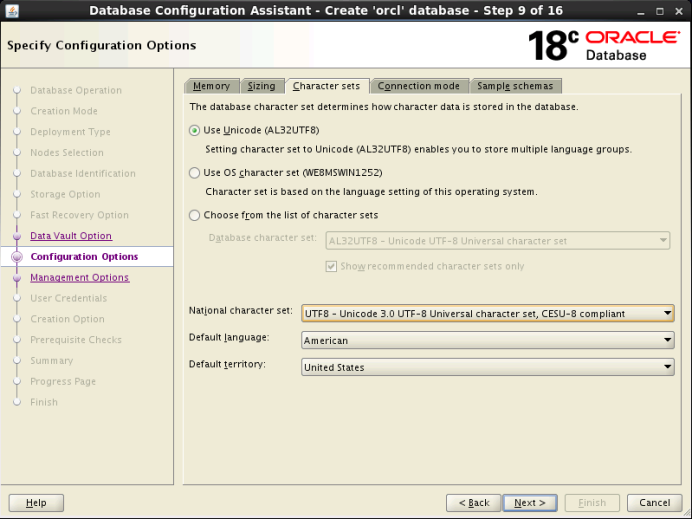

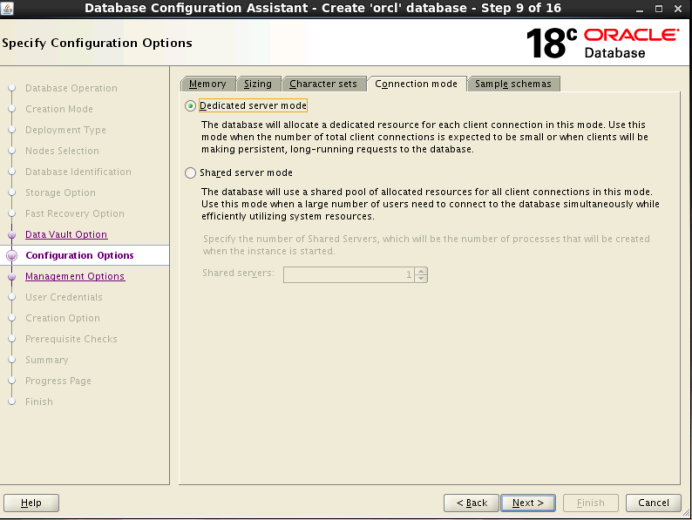

2. Create the instance

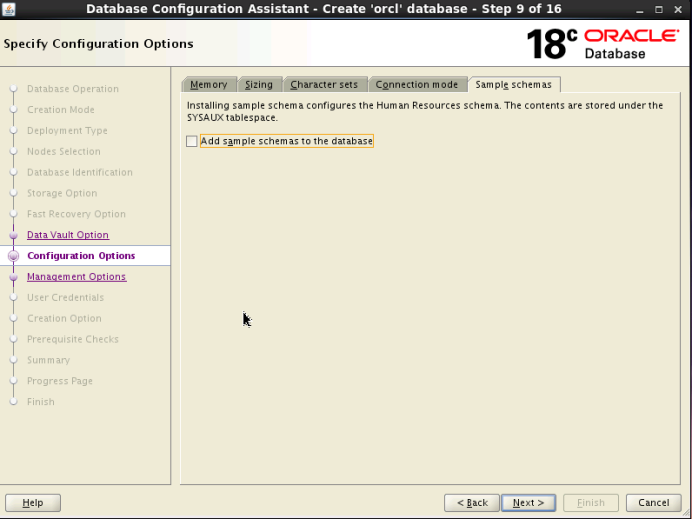

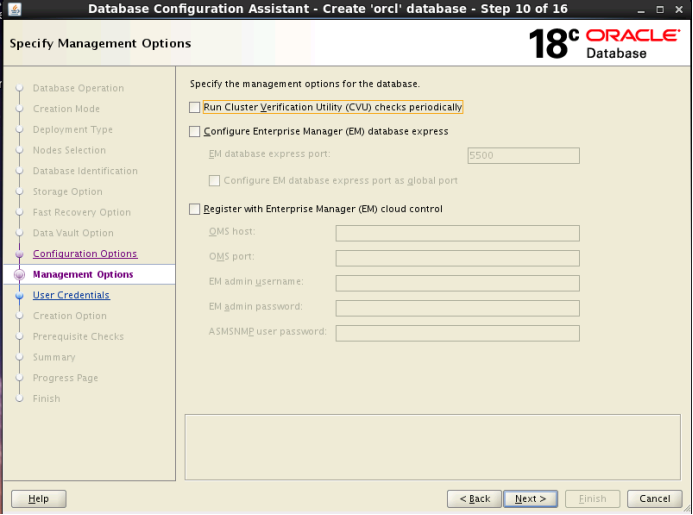

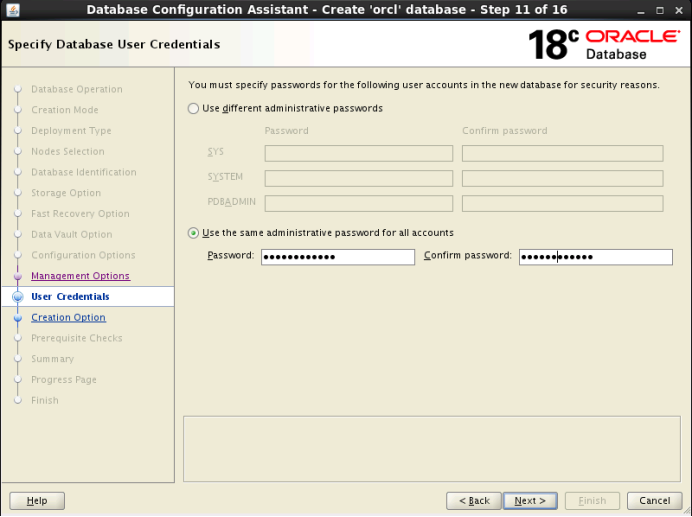

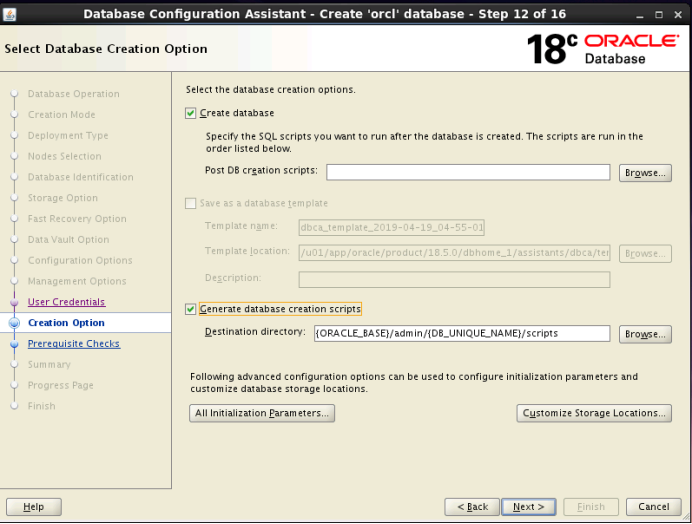

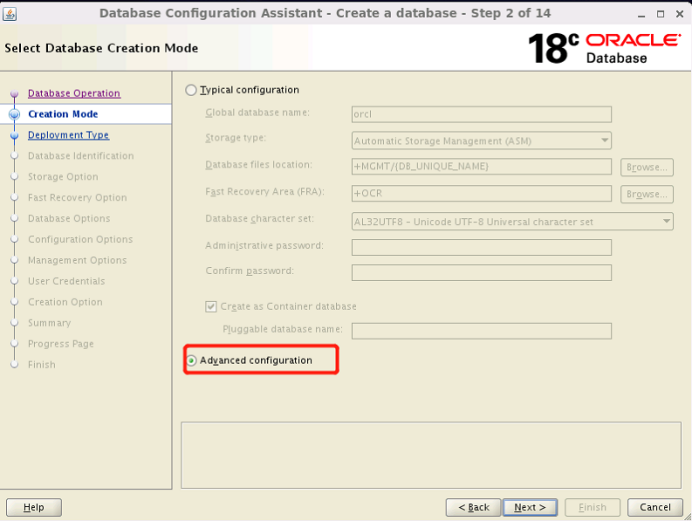

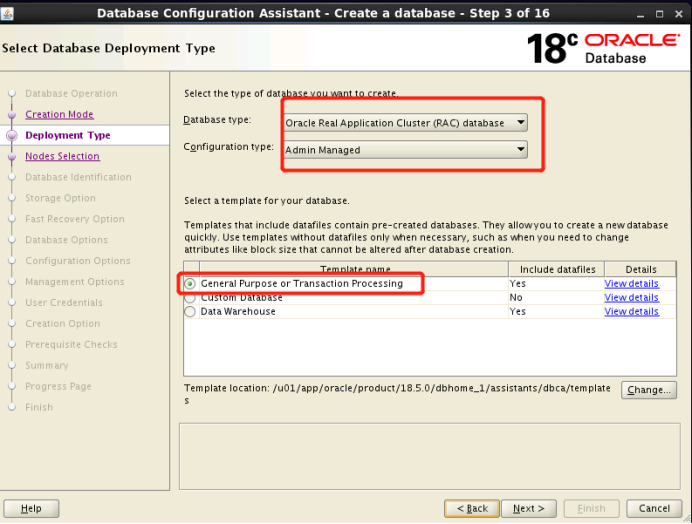

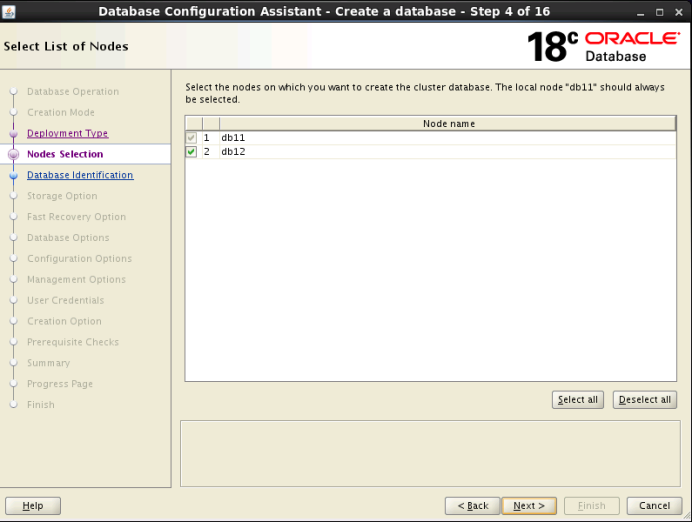

Launch dbca in graphical mode.

Choose to create a database.

Select Advanced configuration.

Create a PDB at the same time as the CDB.