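Recap of the "Learn OceanBase Step by Step" series:

Part 1: Manually installing an OceanBase 1.4 cluster

Part 2: Installing, configuring and using obproxy

Part 3: OceanBase 1.4 cluster: creating and using tenants

This is Part 4: a hands-on expansion of an OB 1.4 cluster from a 1-1-1 to a 2-2-2 architecture.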

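OceanBase 1.4 cluster expansion

In the earlier articles we built a 1-1-1 OceanBase 1.4 cluster (one server in each of three zones) with minimal resources (8 CPUs + 16 GB of memory per server). We now add three more nodes to expand it to a 2-2-2 architecture (two servers per zone).

The hands-on steps follow.

1. Preparing the new nodes

The new servers have the same configuration as the existing ones: 8 CPUs + 16 GB of memory.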

ob04: 192.168.0.106

ob05: 192.168.0.89

ob06: 192.168.0.143

Environment preparation and OB software installation on the new nodes are skipped here; see Part 1.

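2. Starting the observer process on the new nodes

Adjust the zone, IP, ports and NIC name for each node.

Important:

-r specifies the RootService list (rs_list) of the existing cluster, as semicolon-separated ip:rpc_port:sql_port entries. With it, the new node merely contacts the cluster, i.e. its RootService; only after that contact is established can the node be added to the cluster with ALTER SYSTEM ADD SERVER.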
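
To make the -r format concrete, here is a minimal bash sketch that assembles the value from the existing nodes, assuming the ip:rpc_port:sql_port layout and the ports used in this article (2882 for RPC, 2881 for SQL). `build_rs_list` is a hypothetical helper, not an OceanBase tool.

```bash
# Hypothetical helper: join "ip:rpc_port:sql_port" entries with ';'
# to form the value passed to observer's -r option.
build_rs_list() {
  local rpc_port=$1 sql_port=$2 out="" ip
  shift 2
  for ip in "$@"; do
    # ${out:+;} adds a ';' separator only after the first entry
    out+="${out:+;}${ip}:${rpc_port}:${sql_port}"
  done
  printf '%s\n' "$out"
}

# The three existing nodes of the 1-1-1 cluster (RPC 2882, SQL 2881)
build_rs_list 2882 2881 192.168.0.151 192.168.0.43 192.168.0.41
```

This prints the same string that appears after -r in the start commands below.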

Node ob04:

    cd /home/admin/oceanbase && bin/observer -i eth0 -P 2882 -p 2881 -z zone1 -d /home/admin/oceanbase/store/obdemo -r '192.168.0.151:2882:2881;192.168.0.43:2882:2881;192.168.0.41:2882:2881' -c 20200806 -n obdemo
    ps -ef|grep observer
    vi log/observer.log

Node ob05:

    cd /home/admin/oceanbase && bin/observer -i eth0 -P 2882 -p 2881 -z zone2 -d /home/admin/oceanbase/store/obdemo -r '192.168.0.151:2882:2881;192.168.0.43:2882:2881;192.168.0.41:2882:2881' -c 20200806 -n obdemo
    ps -ef|grep observer
    vi log/observer.log

Node ob06:

    cd /home/admin/oceanbase && bin/observer -i eth0 -P 2882 -p 2881 -z zone3 -d /home/admin/oceanbase/store/obdemo -r '192.168.0.151:2882:2881;192.168.0.43:2882:2881;192.168.0.41:2882:2881' -c 20200806 -n obdemo
    ps -ef|grep observer
    vi log/observer.log
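
Since the three start commands differ only in the zone, one option is to generate them from a single host-to-zone table, which lowers the risk of starting a node in the wrong zone. The bash (4+) sketch below only prints the commands; `print_start_cmd` and `ZONE_OF` are hypothetical names, and every value is copied from the commands above.

```bash
# Requires bash 4+ (associative arrays). Values copied from the commands above.
RS_LIST='192.168.0.151:2882:2881;192.168.0.43:2882:2881;192.168.0.41:2882:2881'
declare -A ZONE_OF=([ob04]=zone1 [ob05]=zone2 [ob06]=zone3)

# Print (do not execute) the observer start command for one zone and NIC.
print_start_cmd() {
  local zone=$1 nic=${2:-eth0}
  printf "cd /home/admin/oceanbase && bin/observer -i %s -P 2882 -p 2881 -z %s -d /home/admin/oceanbase/store/obdemo -r '%s' -c 20200806 -n obdemo\n" \
    "$nic" "$zone" "$RS_LIST"
}

for host in ob04 ob05 ob06; do
  echo "# run on ${host}:"
  print_start_cmd "${ZONE_OF[$host]}"
done
```

The NIC name is a second parameter (default eth0) because it may differ between servers.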

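3. Adding the nodes

(1) Before adding the new servers, check the cluster's existing servers and resources. Connect to the sys tenant through obproxy (port 2883); the login user takes the form user@tenant#cluster, here root@sys#obdemo: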

    $ mysql -h192.168.0.151 -uroot@sys#obdemo -P2883 -padmin123 -c -A oceanbase
    Welcome to the MariaDB monitor. Commands end with ; or \g.
    Your MySQL connection id is 11
    Server version: 5.6.25 OceanBase 1.4.60 (r1571952-758a58e85846f9efb907b1c14057204cb6353846) (Built Mar 9 2018 14:32:07)

    Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

    MySQL [oceanbase]>

List all servers in the OB cluster before adding the new ones:

    MySQL [oceanbase]> select zone,svr_ip,svr_port,inner_port,with_rootserver,status,gmt_create from __all_server order by zone, svr_ip;
    +-------+---------------+----------+------------+-----------------+--------+----------------------------+
    | zone  | svr_ip        | svr_port | inner_port | with_rootserver | status | gmt_create                 |
    +-------+---------------+----------+------------+-----------------+--------+----------------------------+
    | zone1 | 192.168.0.151 |     2882 |       2881 |               1 | active | 2020-08-07 11:30:28.623847 |
    | zone2 | 192.168.0.43  |     2882 |       2881 |               0 | active | 2020-08-07 11:30:28.604907 |
    | zone3 | 192.168.0.41  |     2882 |       2881 |               0 | active | 2020-08-07 11:30:28.613459 |
    +-------+---------------+----------+------------+-----------------+--------+----------------------------+
    3 rows in set (0.01 sec)

    MySQL [oceanbase]>

Resource usage of each server before the expansion:

    MySQL [oceanbase]> select zone, svr_ip, svr_port,inner_port, cpu_total, cpu_assigned,
        -> round(mem_total/1024/1024/1024) mem_total_gb,
        -> round(mem_assigned/1024/1024/1024) mem_ass_gb,
        -> round(disk_total/1024/1024/1024) disk_total_gb,
        -> unit_num, substr(build_version,1,6) version
        -> from __all_virtual_server_stat
        -> order by zone, svr_ip, inner_port;
    +-------+---------------+----------+------------+-----------+--------------+--------------+------------+---------------+----------+---------+
    | zone  | svr_ip        | svr_port | inner_port | cpu_total | cpu_assigned | mem_total_gb | mem_ass_gb | disk_total_gb | unit_num | version |
    +-------+---------------+----------+------------+-----------+--------------+--------------+------------+---------------+----------+---------+
    | zone1 | 192.168.0.151 |     2882 |       2881 |         6 |          3.5 |           10 |          4 |            83 |        2 | 1.4.60  |
    | zone2 | 192.168.0.43  |     2882 |       2881 |         6 |          3.5 |           10 |          4 |            83 |        2 | 1.4.60  |
    | zone3 | 192.168.0.41  |     2882 |       2881 |         6 |          3.5 |           10 |          4 |            83 |        2 | 1.4.60  |
    +-------+---------------+----------+------------+-----------+--------------+--------------+------------+---------------+----------+---------+
    3 rows in set (0.00 sec)

    MySQL [oceanbase]>

    MySQL [oceanbase]> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free,
        -> round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb,
        -> round(disk_total/1024/1024/1024) disk_total_gb,
        -> substr(a.build_version,1,6) version,usec_to_time(b.start_service_time) start_service_time
        -> from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port)
        -> order by a.zone, a.svr_ip;
    +-------+--------------------+-----------+----------+--------------+-------------+---------------+---------+----------------------------+
    | zone  | observer           | cpu_total | cpu_free | mem_total_gb | mem_free_gb | disk_total_gb | version | start_service_time         |
    +-------+--------------------+-----------+----------+--------------+-------------+---------------+---------+----------------------------+
    | zone1 | 192.168.0.151:2882 |         6 |      2.5 |           10 |           5 |            83 | 1.4.60  | 2020-08-07 11:30:35.152342 |
    | zone2 | 192.168.0.43:2882  |         6 |      2.5 |           10 |           5 |            83 | 1.4.60  | 2020-08-07 11:30:31.722452 |
    | zone3 | 192.168.0.41:2882  |         6 |      2.5 |           10 |           5 |            83 | 1.4.60  | 2020-08-07 11:30:32.381524 |
    +-------+--------------------+-----------+----------+--------------+-------------+---------------+---------+----------------------------+
    3 rows in set (0.00 sec)

    MySQL [oceanbase]> select t1.name resource_pool_name, t2.`name` unit_config_name, t2.max_cpu, t2.min_cpu,
        -> round(t2.max_memory/1024/1024/1024) max_mem_gb, round(t2.min_memory/1024/1024/1024) min_mem_gb,
        -> t3.unit_id, t3.zone, concat(t3.svr_ip,':',t3.`svr_port`) observer,t4.tenant_id, t4.tenant_name
        -> from __all_resource_pool t1 join __all_unit_config t2 on (t1.unit_config_id=t2.unit_config_id)
        -> join __all_unit t3 on (t1.`resource_pool_id` = t3.`resource_pool_id`)
        -> left join __all_tenant t4 on (t1.tenant_id=t4.tenant_id)
        -> order by t1.`resource_pool_id`, t2.`unit_config_id`, t3.unit_id
        -> ;
    +--------------------+------------------+---------+---------+------------+------------+---------+-------+--------------------+-----------+--------------+
    | resource_pool_name | unit_config_name | max_cpu | min_cpu | max_mem_gb | min_mem_gb | unit_id | zone  | observer           | tenant_id | tenant_name  |
    +--------------------+------------------+---------+---------+------------+------------+---------+-------+--------------------+-----------+--------------+
    | sys_pool           | sys_unit_config  |       5 |     2.5 |          3 |          2 |       1 | zone1 | 192.168.0.151:2882 |         1 | sys          |
    | sys_pool           | sys_unit_config  |       5 |     2.5 |          3 |          2 |       2 | zone2 | 192.168.0.43:2882  |         1 | sys          |
    | sys_pool           | sys_unit_config  |       5 |     2.5 |          3 |          2 |       3 | zone3 | 192.168.0.41:2882  |         1 | sys          |
    | my_pool_test       | my_unit_1c2g     |       2 |       1 |          3 |          2 |    1001 | zone1 | 192.168.0.151:2882 |      1001 | my_test_tent |
    | my_pool_test       | my_unit_1c2g     |       2 |       1 |          3 |          2 |    1002 | zone2 | 192.168.0.43:2882  |      1001 | my_test_tent |
    | my_pool_test       | my_unit_1c2g     |       2 |       1 |          3 |          2 |    1003 | zone3 | 192.168.0.41:2882  |      1001 | my_test_tent |
    +--------------------+------------------+---------+---------+------------+------------+---------+-------+--------------------+-----------+--------------+
    6 rows in set (0.00 sec)

    MySQL [oceanbase]>

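As shown above, before the expansion each of the nodes 151, 43 and 41 had 2.5 CPU and 5 GB of memory free.

The assigned resources on each node come from two units: the sys tenant's unit reserves 2.5 CPU and the business tenant my_test_tent's unit reserves 1 CPU (their min_cpu values, 3.5 CPU in total), and each unit reserves 2 GB of memory (min_memory; max_memory is 3 GB), which matches the rounded mem_ass_gb value of 4.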
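
The CPU figures can be reproduced with simple arithmetic. The sketch below assumes, consistently with the numbers in this article, that cpu_assigned is the sum of the min_cpu values of the units placed on a node; `node_cpu_free` is a hypothetical helper.

```bash
# ASSUMPTION: cpu_assigned = sum of min_cpu over the units on the node.
# node_cpu_free TOTAL MIN_CPU... prints assigned and free CPU for one node.
node_cpu_free() {
  local total=$1
  shift
  # A BEGIN-only awk program reads no input; the min_cpu values arrive via ARGV.
  awk -v total="$total" 'BEGIN {
    assigned = 0
    for (i = 1; i < ARGC; i++) assigned += ARGV[i]
    printf "assigned=%g free=%g\n", assigned, total - assigned
  }' "$@"
}

node_cpu_free 6 2.5 1   # before: sys unit + my_test_tent unit -> assigned=3.5 free=2.5
node_cpu_free 6 2.5     # original node after expansion        -> assigned=2.5 free=3.5
node_cpu_free 6 1       # new node (my_test_tent unit only)     -> assigned=1 free=5
```

The three results line up with the cpu_assigned and cpu_free columns before and after the expansion.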

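(2) Add the three new servers to the OceanBase cluster. Make sure each IP is paired with the right zone, i.e. the zone that observer was started with (-z).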

    MySQL [oceanbase]> alter system add server '192.168.0.106:2882' zone 'zone1';
    Query OK, 0 rows affected (0.01 sec)

    MySQL [oceanbase]> alter system add server '192.168.0.89:2882' zone 'zone2';
    Query OK, 0 rows affected (0.01 sec)

    MySQL [oceanbase]> alter system add server '192.168.0.143:2882' zone 'zone3';
    Query OK, 0 rows affected (0.01 sec)

    MySQL [oceanbase]>
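
Because a wrong IP/zone pairing is an easy mistake at this step, the statements can also be generated from one list. A sketch, assuming the RPC port 2882 used throughout this article; `gen_add_server` is a hypothetical helper.

```bash
# Turn "ip zone" lines on stdin into ALTER SYSTEM ADD SERVER statements.
gen_add_server() {
  local ip zone
  while read -r ip zone; do
    [ -z "$ip" ] && continue   # skip blank lines
    printf "alter system add server '%s:2882' zone '%s';\n" "$ip" "$zone"
  done
}

gen_add_server <<'EOF'
192.168.0.106 zone1
192.168.0.89 zone2
192.168.0.143 zone3
EOF
```

The generated statements could then be pasted into, or piped to, the same mysql connection used above.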

4. Checking the cluster after the expansion

After adding the nodes, list all servers again: the new nodes 106, 89 and 143 have joined the cluster.

    MySQL [oceanbase]> select zone,svr_ip,svr_port,inner_port,with_rootserver,status,gmt_create from __all_server order by zone, svr_ip;
    +-------+---------------+----------+------------+-----------------+--------+----------------------------+
    | zone  | svr_ip        | svr_port | inner_port | with_rootserver | status | gmt_create                 |
    +-------+---------------+----------+------------+-----------------+--------+----------------------------+
    | zone1 | 192.168.0.106 |     2882 |       2881 |               0 | active | 2020-08-07 18:21:17.519966 |
    | zone1 | 192.168.0.151 |     2882 |       2881 |               1 | active | 2020-08-07 11:30:28.623847 |
    | zone2 | 192.168.0.43  |     2882 |       2881 |               0 | active | 2020-08-07 11:30:28.604907 |
    | zone2 | 192.168.0.89  |     2882 |       2881 |               0 | active | 2020-08-07 18:21:23.741350 |
    | zone3 | 192.168.0.143 |     2882 |       2881 |               0 | active | 2020-08-07 18:21:29.227633 |
    | zone3 | 192.168.0.41  |     2882 |       2881 |               0 | active | 2020-08-07 11:30:28.613459 |
    +-------+---------------+----------+------------+-----------------+--------+----------------------------+
    6 rows in set (0.01 sec)

    MySQL [oceanbase]>
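
In this run the new servers were already active when queried. If you script the expansion, a guard that waits until every server reports active before looking at resources may be useful. A sketch, assuming the mysql client's standard -N (no column names) and -e (execute) options and the connection parameters used in this article; `query_inactive` and `wait_all_active` are hypothetical names, and `query_inactive` can be redefined for testing.

```bash
# Count servers whose status is not 'active' (connection copied from this article).
query_inactive() {
  mysql -h192.168.0.151 -uroot@sys#obdemo -P2883 -padmin123 -A oceanbase -N -e \
    "select count(*) from __all_server where status <> 'active'"
}

# Usage: wait_all_active [tries] [interval_seconds]
# Returns 0 when all servers are active, 1 on timeout, 2 if the query fails.
wait_all_active() {
  local tries=${1:-30} interval=${2:-10} n i
  for ((i = 1; i <= tries; i++)); do
    n=$(query_inactive) || return 2
    [ "$n" = "0" ] && return 0
    echo "${n} server(s) not active yet (attempt ${i}/${tries})" >&2
    sleep "$interval"
  done
  return 1
}
```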

After adding the nodes, check the resources of every server: the new servers' resources are now part of the cluster.

    MySQL [oceanbase]> select zone, svr_ip, svr_port,inner_port, cpu_total, cpu_assigned,
        -> round(mem_total/1024/1024/1024) mem_total_gb,
        -> round(mem_assigned/1024/1024/1024) mem_ass_gb,
        -> round(disk_total/1024/1024/1024) disk_total_gb,
        -> unit_num, substr(build_version,1,6) version
        -> from __all_virtual_server_stat
        -> order by zone, svr_ip, inner_port;
    +-------+---------------+----------+------------+-----------+--------------+--------------+------------+---------------+----------+---------+
    | zone  | svr_ip        | svr_port | inner_port | cpu_total | cpu_assigned | mem_total_gb | mem_ass_gb | disk_total_gb | unit_num | version |
    +-------+---------------+----------+------------+-----------+--------------+--------------+------------+---------------+----------+---------+
    | zone1 | 192.168.0.106 |     2882 |       2881 |         6 |            1 |           10 |          2 |            83 |        1 | 1.4.60  |
    | zone1 | 192.168.0.151 |     2882 |       2881 |         6 |          2.5 |           10 |          2 |            83 |        1 | 1.4.60  |
    | zone2 | 192.168.0.43  |     2882 |       2881 |         6 |          2.5 |           10 |          2 |            83 |        1 | 1.4.60  |
    | zone2 | 192.168.0.89  |     2882 |       2881 |         6 |            1 |           10 |          2 |            83 |        1 | 1.4.60  |
    | zone3 | 192.168.0.143 |     2882 |       2881 |         6 |            1 |           10 |          2 |            83 |        1 | 1.4.60  |
    | zone3 | 192.168.0.41  |     2882 |       2881 |         6 |          2.5 |           10 |          2 |            83 |        1 | 1.4.60  |
    +-------+---------------+----------+------------+-----------+--------------+--------------+------------+---------------+----------+---------+
    6 rows in set (0.00 sec)

    MySQL [oceanbase]>

    MySQL [oceanbase]> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free,
        -> round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb,
        -> round(disk_total/1024/1024/1024) disk_total_gb,
        -> substr(a.build_version,1,6) version,usec_to_time(b.start_service_time) start_service_time
        -> from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port)
        -> order by a.zone, a.svr_ip;
    +-------+--------------------+-----------+----------+--------------+-------------+---------------+---------+----------------------------+
    | zone  | observer           | cpu_total | cpu_free | mem_total_gb | mem_free_gb | disk_total_gb | version | start_service_time         |
    +-------+--------------------+-----------+----------+--------------+-------------+---------------+---------+----------------------------+
    | zone1 | 192.168.0.106:2882 |         6 |        5 |           10 |           8 |            83 | 1.4.60  | 2020-08-07 18:21:25.038841 |
    | zone1 | 192.168.0.151:2882 |         6 |      3.5 |           10 |           7 |            83 | 1.4.60  | 2020-08-07 11:30:35.152342 |
    | zone2 | 192.168.0.43:2882  |         6 |      3.5 |           10 |           7 |            83 | 1.4.60  | 2020-08-07 11:30:31.722452 |
    | zone2 | 192.168.0.89:2882  |         6 |        5 |           10 |           8 |            83 | 1.4.60  | 2020-08-07 18:21:28.865203 |
    | zone3 | 192.168.0.143:2882 |         6 |        5 |           10 |           8 |            83 | 1.4.60  | 2020-08-07 18:21:42.012719 |
    | zone3 | 192.168.0.41:2882  |         6 |      3.5 |           10 |           7 |            83 | 1.4.60  | 2020-08-07 11:30:32.381524 |
    +-------+--------------------+-----------+----------+--------------+-------------+---------------+---------+----------------------------+
    6 rows in set (0.01 sec)

    MySQL [oceanbase]>

Check where the units of each resource pool are now placed:

    MySQL [oceanbase]> select t1.name resource_pool_name, t2.`name` unit_config_name, t2.max_cpu, t2.min_cpu,
        -> round(t2.max_memory/1024/1024/1024) max_mem_gb, round(t2.min_memory/1024/1024/1024) min_mem_gb,
        -> t3.unit_id, t3.zone, concat(t3.svr_ip,':',t3.`svr_port`) observer,t4.tenant_id, t4.tenant_name
        -> from __all_resource_pool t1 join __all_unit_config t2 on (t1.unit_config_id=t2.unit_config_id)
        -> join __all_unit t3 on (t1.`resource_pool_id` = t3.`resource_pool_id`)
        -> left join __all_tenant t4 on (t1.tenant_id=t4.tenant_id)
        -> order by t1.`resource_pool_id`, t2.`unit_config_id`, t3.unit_id
        -> ;
    +--------------------+------------------+---------+---------+------------+------------+---------+-------+--------------------+-----------+--------------+
    | resource_pool_name | unit_config_name | max_cpu | min_cpu | max_mem_gb | min_mem_gb | unit_id | zone  | observer           | tenant_id | tenant_name  |
    +--------------------+------------------+---------+---------+------------+------------+---------+-------+--------------------+-----------+--------------+
    | sys_pool           | sys_unit_config  |       5 |     2.5 |          3 |          2 |       1 | zone1 | 192.168.0.151:2882 |         1 | sys          |
    | sys_pool           | sys_unit_config  |       5 |     2.5 |          3 |          2 |       2 | zone2 | 192.168.0.43:2882  |         1 | sys          |
    | sys_pool           | sys_unit_config  |       5 |     2.5 |          3 |          2 |       3 | zone3 | 192.168.0.41:2882  |         1 | sys          |
    | my_pool_test       | my_unit_1c2g     |       2 |       1 |          3 |          2 |    1001 | zone1 | 192.168.0.106:2882 |      1001 | my_test_tent |
    | my_pool_test       | my_unit_1c2g     |       2 |       1 |          3 |          2 |    1002 | zone2 | 192.168.0.89:2882  |      1001 | my_test_tent |
    | my_pool_test       | my_unit_1c2g     |       2 |       1 |          3 |          2 |    1003 | zone3 | 192.168.0.143:2882 |      1001 | my_test_tent |
    +--------------------+------------------+---------+---------+------------+------------+---------+-------+--------------------+-----------+--------------+
    6 rows in set (0.00 sec)

    MySQL [oceanbase]>

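From the output above: before the expansion, each of the original nodes 151, 43 and 41 hosted two units, one for sys and one for my_test_tent, and had 2.5 CPU and 5 GB of memory free.

After the expansion, resources on the original nodes were freed: my_test_tent's units (unit_id 1001, 1002 and 1003), each reserving 1 CPU + 2 GB, were migrated to the new nodes 106, 89 and 143.

This is OceanBase's load balancing at work. After the expansion, the approximate free resources per node are:

Original nodes 151, 43, 41: about 3.5 CPU + 7 GB of memory

New nodes 106, 89, 143: about 5 CPU + 8 GB of memory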
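
To see the effect at cluster level, the per-node figures can be summed. A sketch that reads tab-separated rows, for example the batch output (mysql -B -N) of the free-resource query above trimmed to zone, observer, cpu_total and cpu_free; `summarize_cpu` is a hypothetical helper.

```bash
# Sum cpu_total and cpu_free from rows "zone<TAB>observer<TAB>cpu_total<TAB>cpu_free".
summarize_cpu() {
  awk -F'\t' 'NF >= 4 { total += $3; free += $4; n++ }
    END { printf "nodes=%d cpu_total=%g cpu_free=%g\n", n, total, free }'
}

# After the expansion (numbers from the query output above);
# printf reuses its format for every group of four arguments.
printf '%s\t%s\t%s\t%s\n' \
  zone1 192.168.0.106:2882 6 5   zone1 192.168.0.151:2882 6 3.5 \
  zone2 192.168.0.43:2882 6 3.5  zone2 192.168.0.89:2882 6 5 \
  zone3 192.168.0.143:2882 6 5   zone3 192.168.0.41:2882 6 3.5 | summarize_cpu
# prints: nodes=6 cpu_total=36 cpu_free=25.5
```

Fed the three pre-expansion rows instead, the same function reports 18 CPU in total and 7.5 free, so cluster CPU capacity doubles with the expansion.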

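5. Next, look at how the placement of the leader and follower replicas of my_test_tent's table partitions changed with the expansion.

Before the expansion (in the query below, role=1 is the leader replica and role=2 a follower replica):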

    MySQL [oceanbase]> SELECT t1.tenant_id,t1.tenant_name,t2.database_name,t3.table_id,t3.table_Name,t3.tablegroup_id,t3.part_num,t4.partition_Id,
        -> t4.zone,t4.svr_ip,t4.role, round(t4.data_size/1024/1024) data_size_mb
        -> from `gv$tenant` t1
        -> join `gv$database` t2 on (t1.tenant_id = t2.tenant_id)
        -> join gv$table t3 on (t2.tenant_id = t3.tenant_id and t2.database_id = t3.database_id and t3.index_type = 0)
        -> left join `gv$partition` t4 on (t2.tenant_id = t4.tenant_id and ( t3.table_id = t4.table_id or t3.tablegroup_id = t4.table_id ) and t4.role in (1,2))
        -> where t1.tenant_id = 1001
        -> order by t3.tablegroup_id, t3.table_name,t4.partition_Id ;
    +-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+
    | tenant_id | tenant_name  | database_name | table_id         | table_Name | tablegroup_id | part_num | partition_Id | zone  | svr_ip        | role | data_size_mb |
    +-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+
    |      1001 | my_test_tent | testdb        | 1100611139453777 | test       |            -1 |        1 |            0 | zone2 | 192.168.0.43  |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453777 | test       |            -1 |        1 |            0 | zone3 | 192.168.0.41  |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453777 | test       |            -1 |        1 |            0 | zone1 | 192.168.0.151 |    1 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            0 | zone2 | 192.168.0.43  |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            0 | zone3 | 192.168.0.41  |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            0 | zone1 | 192.168.0.151 |    1 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            1 | zone2 | 192.168.0.43  |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            1 | zone3 | 192.168.0.41  |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            1 | zone1 | 192.168.0.151 |    1 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            2 | zone2 | 192.168.0.43  |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            2 | zone3 | 192.168.0.41  |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            2 | zone1 | 192.168.0.151 |    1 |            0 |
    +-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+
    12 rows in set (0.05 sec)

    MySQL [oceanbase]>

Database testdb in tenant my_test_tent contains a non-partitioned table, test, and a hash-partitioned table, test_hash, with three partitions.

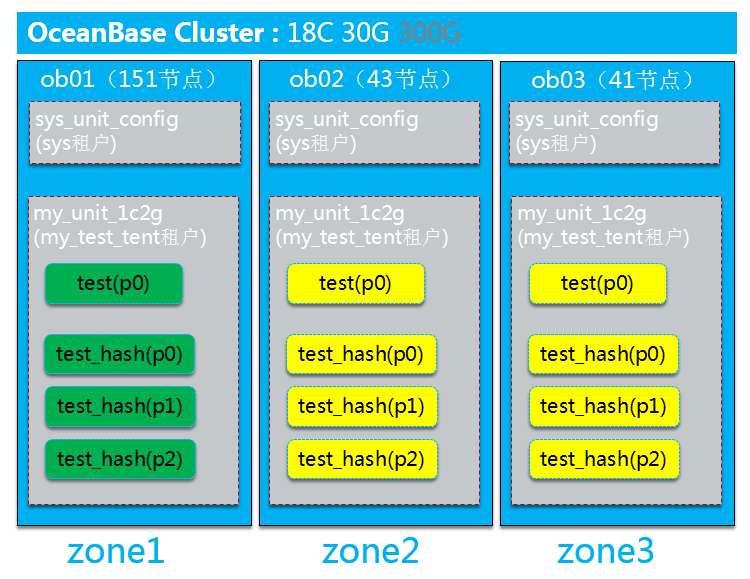

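So before the expansion, the single partition p0 of the non-partitioned table test had its leader on node 151 in zone1 and its two followers on nodes 43 and 41. Likewise, all three partitions p0 to p2 of test_hash had their leaders on node 151 in zone1 and their followers on nodes 43 and 41.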
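
With more partitions, counting replicas per server is easier than reading the table row by row. A sketch, assuming tab-separated rows of table_name, partition_id, zone, svr_ip and role taken from the query above (for example via mysql -B -N); `replica_summary` is a hypothetical helper.

```bash
# Count leaders (role=1) and followers (role=2) per server from rows
# "table_name<TAB>partition_id<TAB>zone<TAB>svr_ip<TAB>role".
replica_summary() {
  awk -F'\t' '
    NF >= 5 {
      servers[$4] = 1
      if ($5 == 1) leaders[$4]++
      else if ($5 == 2) followers[$4]++
    }
    END {
      for (s in servers)
        printf "%s leaders=%d followers=%d\n", s, leaders[s], followers[s]
    }' | LC_ALL=C sort   # awk iteration order is unspecified, so sort the output
}

# Before the expansion: the three replicas of partition p0 of table test
printf '%s\t%s\t%s\t%s\t%s\n' \
  test 0 zone2 192.168.0.43 2 \
  test 0 zone3 192.168.0.41 2 \
  test 0 zone1 192.168.0.151 1 | replica_summary
```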

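Section 4 showed that my_test_tent's units, and the 1 CPU + 2 GB each reserves, moved from the original nodes 151, 43 and 41 to the new nodes 106, 89 and 143. Were the tenant's table partitions rebalanced onto the new nodes as well?

After the expansion (same query; role=1 is the leader replica, role=2 a follower replica):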

    MySQL [oceanbase]> SELECT t1.tenant_id,t1.tenant_name,t2.database_name,t3.table_id,t3.table_Name,t3.tablegroup_id,t3.part_num,t4.partition_Id,
        -> t4.zone,t4.svr_ip,t4.role, round(t4.data_size/1024/1024) data_size_mb
        -> from `gv$tenant` t1
        -> join `gv$database` t2 on (t1.tenant_id = t2.tenant_id)
        -> join gv$table t3 on (t2.tenant_id = t3.tenant_id and t2.database_id = t3.database_id and t3.index_type = 0)
        -> left join `gv$partition` t4 on (t2.tenant_id = t4.tenant_id and ( t3.table_id = t4.table_id or t3.tablegroup_id = t4.table_id ) and t4.role in (1,2))
        -> where t1.tenant_id = 1001
        -> order by t3.tablegroup_id, t3.table_name,t4.partition_Id ;
    +-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+
    | tenant_id | tenant_name  | database_name | table_id         | table_Name | tablegroup_id | part_num | partition_Id | zone  | svr_ip        | role | data_size_mb |
    +-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+
    |      1001 | my_test_tent | testdb        | 1100611139453777 | test       |            -1 |        1 |            0 | zone2 | 192.168.0.89  |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453777 | test       |            -1 |        1 |            0 | zone3 | 192.168.0.143 |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453777 | test       |            -1 |        1 |            0 | zone1 | 192.168.0.106 |    1 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            0 | zone2 | 192.168.0.89  |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            0 | zone3 | 192.168.0.143 |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            0 | zone1 | 192.168.0.106 |    1 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            1 | zone2 | 192.168.0.89  |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            1 | zone3 | 192.168.0.143 |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            1 | zone1 | 192.168.0.106 |    1 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            2 | zone3 | 192.168.0.143 |    2 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            2 | zone1 | 192.168.0.106 |    1 |            0 |
    |      1001 | my_test_tent | testdb        | 1100611139453778 | test_hash  |            -1 |        3 |            2 | zone2 | 192.168.0.89  |    2 |            0 |
    +-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+
    12 rows in set (0.05 sec)

    MySQL [oceanbase]>

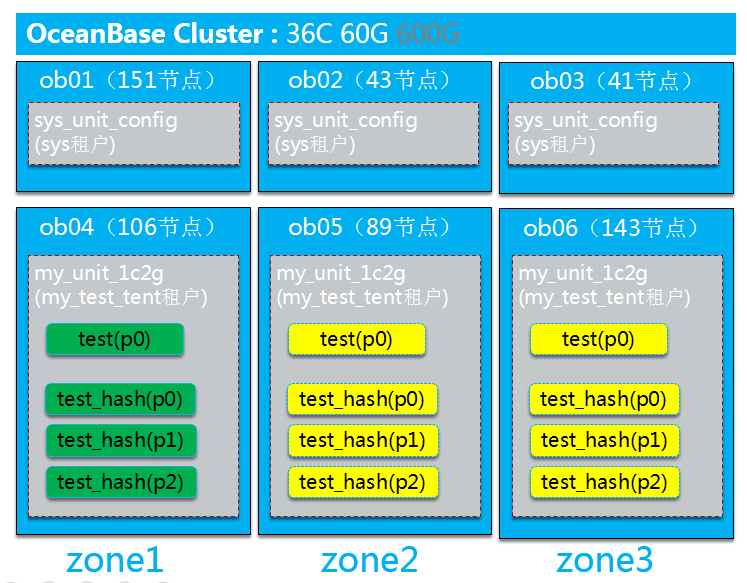

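The results confirm that, after the expansion, the cluster's load-balancing mechanism moved business tenant my_test_tent's data to the new nodes.

The leader of partition p0 of the non-partitioned table test moved from node 151 to the new node 106 (both in zone1), and its two followers moved to the new nodes 89 (zone2) and 143 (zone3).

Likewise, the leaders of partitions p0 to p2 of test_hash moved from node 151 to the new node 106 in zone1, and their followers moved to the new nodes 89 and 143.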
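
To list exactly which replicas moved, the before and after results can be joined on (table, partition, zone), since each zone holds one replica of every partition in this cluster. A sketch with awk; `replica_moves` and the tab-separated file layout are assumptions for illustration.

```bash
# Print replicas whose server changed, keyed by (table, partition, zone).
# Files hold rows "table<TAB>partition<TAB>zone<TAB>svr_ip<TAB>role".
# Caveat: the NR == FNR idiom assumes the "before" file is not empty.
replica_moves() {
  awk -F'\t' '
    NR == FNR { before[$1 FS $2 FS $3] = $4; next }   # first file: remember server
    {
      key = $1 FS $2 FS $3
      if ((key in before) && before[key] != $4)
        printf "%s p%s %s: %s -> %s (role %s)\n", $1, $2, $3, before[key], $4, $5
    }' "$1" "$2"
}

before=$(mktemp); after=$(mktemp)
printf 'test\t0\tzone1\t192.168.0.151\t1\ntest\t0\tzone2\t192.168.0.43\t2\n' > "$before"
printf 'test\t0\tzone1\t192.168.0.106\t1\ntest\t0\tzone2\t192.168.0.89\t2\n' > "$after"
replica_moves "$before" "$after"
rm -f "$before" "$after"
```

Rows whose server did not change produce no output, so an empty result means nothing moved.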

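6. Finally, diagrams of resource and table data distribution before and after the expansion

(1) Before the expansion:

(2) After the expansion: the number of nodes, and with it the cluster's total resources, doubled

This completes the expansion of the OB 1.4 cluster from a 1-1-1 to a 2-2-2 architecture, and concludes this hands-on OceanBase 1.4 cluster expansion. The next article in the "Learn OceanBase Step by Step" series will explore another aspect of OceanBase scalability: tenant scale-out. More hands-on posts to come.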

Last modified: 2020-08-09 17:50:55

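[Copyright notice] This article is original content by a 墨天轮 (modb.pro) user. When reposting, you must credit the source (墨天轮), the article link, the author and other basic information; otherwise the author and 墨天轮 reserve the right to pursue liability. If you find content on 墨天轮 suspected of plagiarism or infringement, please email contact@modb.pro with supporting evidence; once verified, 墨天轮 will promptly remove it.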