一步一步学习OceanBase系列回顾:

第一篇:OceanBase 1.4 集群手动安装步骤

第二篇:obproxy的安装、配置和使用

第三篇:OceanBase 1.4 集群——租户的创建和使用

第四篇:OceanBase1.4 集群扩容

第五篇:OceanBase1.4 集群——租户的扩容

本篇是第六篇 ob1.4集群下,实操体验节点宕机故障,了解数据是怎样自动恢复和转移。。

oceanbase 1.4集群下,模拟节点宕机故障,分析数据分区变化情况

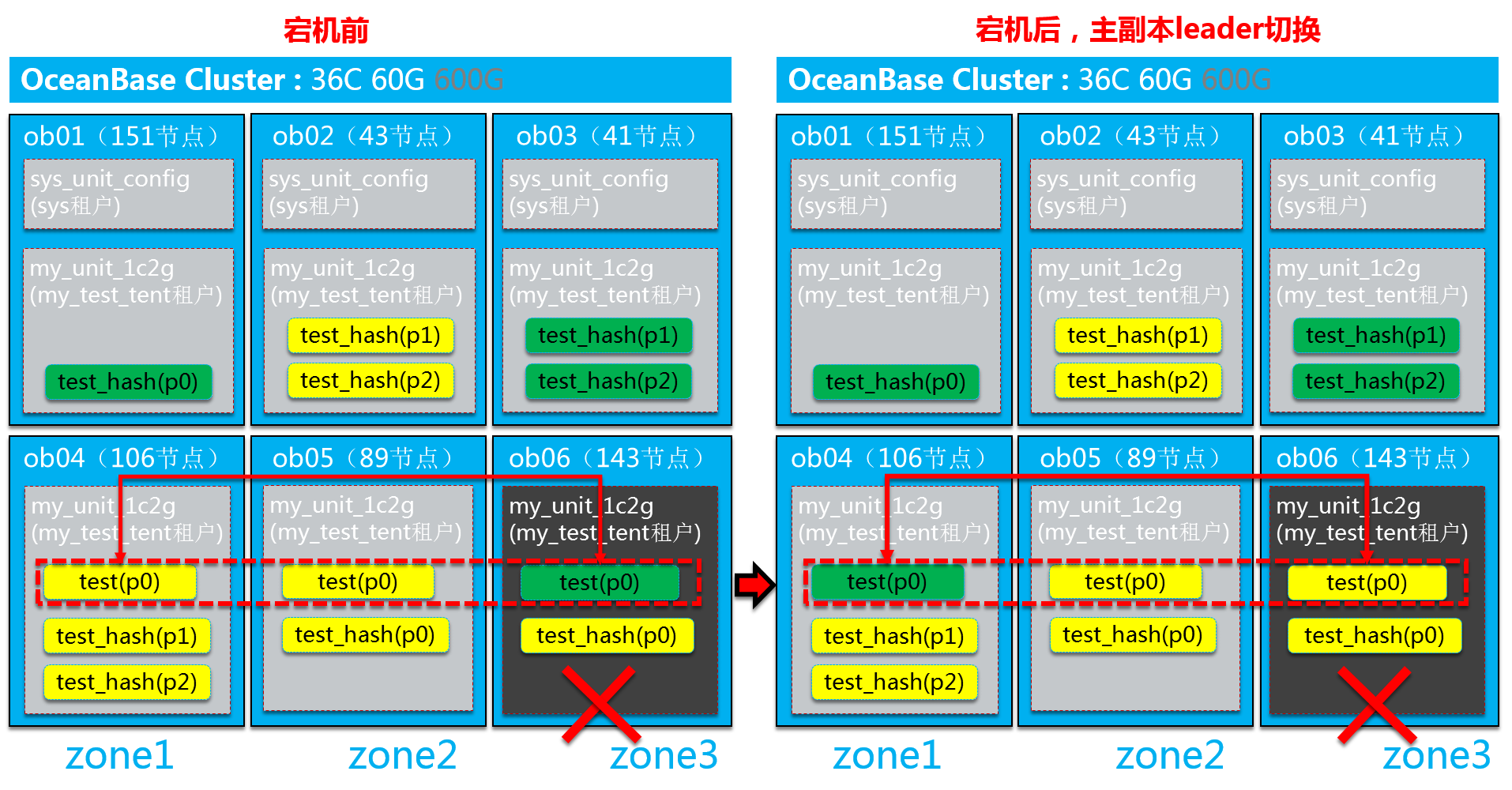

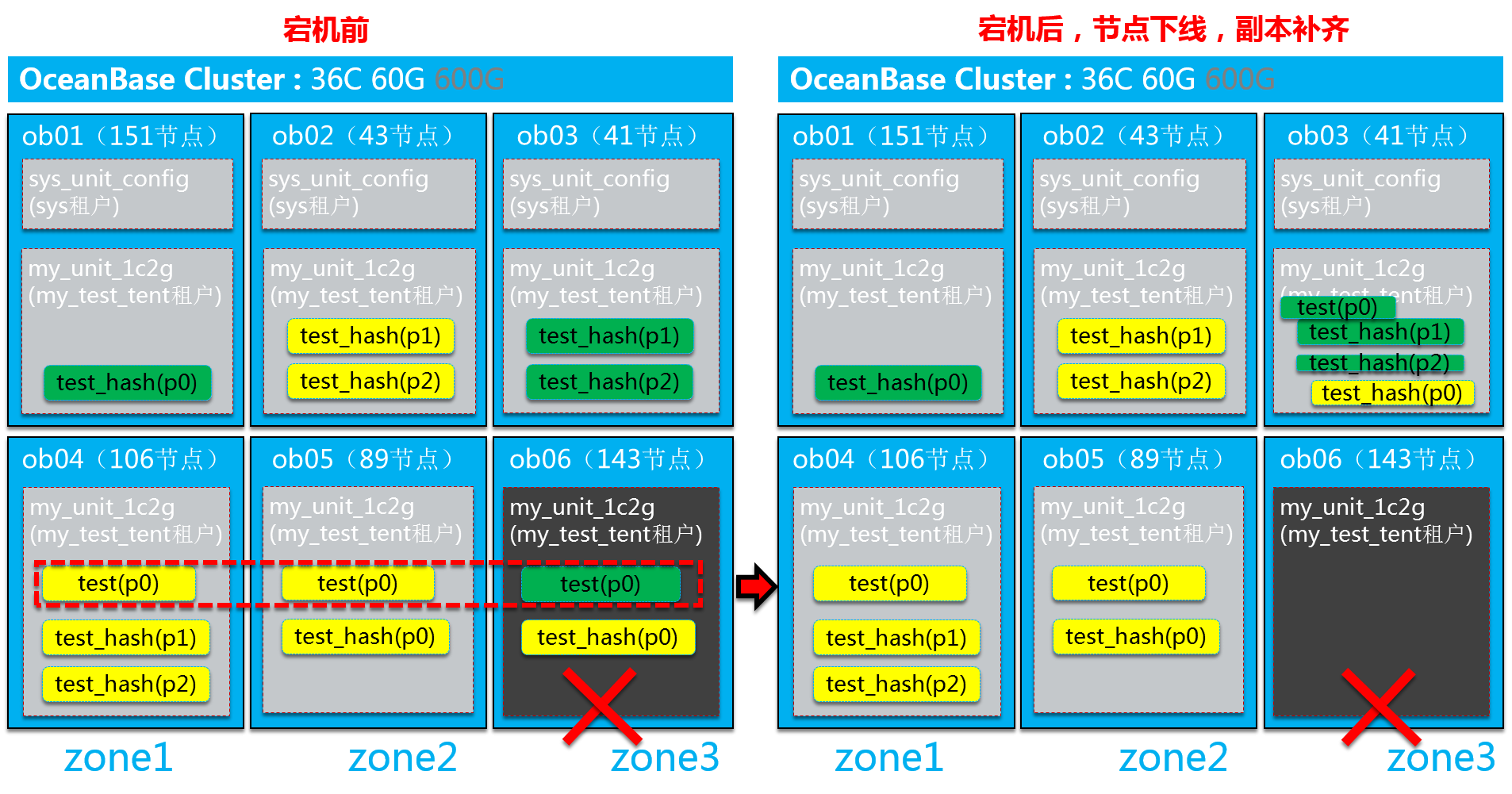

节点宕机,OB集群会经历两个阶段:一是leader主副本切换,二是节点下线副本补齐。

以下是实操体验:

1、宕机之前,查看业务租户my_test_tent下testdb库下,所有的表分区主备副本节点分布情况:

MySQL [oceanbase]> SELECT t1.tenant_id,t1.tenant_name,t2.database_name,t3.table_id,t3.table_Name,t3.tablegroup_id,t3.part_num,t4.partition_Id,

-> t4.zone,t4.svr_ip,t4.role, round(t4.data_size/1024/1024) data_size_mb

-> from `gv$tenant` t1

-> join `gv$database` t2 on (t1.tenant_id = t2.tenant_id)

-> join gv$table t3 on (t2.tenant_id = t3.tenant_id and t2.database_id = t3.database_id and t3.index_type = 0)

-> left join `gv$partition` t4 on (t2.tenant_id = t4.tenant_id and ( t3.table_id = t4.table_id or t3.tablegroup_id = t4.table_id ) and t4.role in (1,2))

-> where t1.tenant_id = 1001

-> order by t3.tablegroup_id, t3.table_name,t4.partition_Id ;

+-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+

| tenant_id | tenant_name | database_name | table_id | table_Name | tablegroup_id | part_num | partition_Id | zone | svr_ip | role | data_size_mb |

+-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+

| 1001 | my_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone2 | 192.168.0.89 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone3 | 192.168.0.143 | 1 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone1 | 192.168.0.106 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone2 | 192.168.0.89 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone1 | 192.168.0.151 | 1 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone3 | 192.168.0.143 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone2 | 192.168.0.43 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone3 | 192.168.0.41 | 1 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone1 | 192.168.0.106 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone3 | 192.168.0.41 | 1 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone1 | 192.168.0.106 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone2 | 192.168.0.43 | 2 | 0 |

+-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+

12 rows in set (0.06 sec)

MySQL [oceanbase]>

非分区表test的p0号分区,主副本在zone3下的143节点,两个备副本在zone1的106节点和zone2的89节点。

test_hash分区表的p0分区,主副本在zone1下的151节点,两个备副本在zone2的89节点和zone3的143节点。

test_hash分区表的p1分区,主副本在zone3下的41节点,两个备副本在zone1的106节点和zone2的43节点。

test_hash分区表的p2分区,主副本在zone3下的41节点,两个备副本在zone1的106节点和zone2的43节点。

2、模拟节点宕机,宕机zone3下的143节点,kill observer进程

MySQL [oceanbase]> select zone,svr_ip,svr_port,inner_port,with_rootserver,status,gmt_create from __all_server order by zone, svr_ip;

+-------+---------------+----------+------------+-----------------+----------+----------------------------+

| zone | svr_ip | svr_port | inner_port | with_rootserver | status | gmt_create |

+-------+---------------+----------+------------+-----------------+----------+----------------------------+

| zone1 | 192.168.0.106 | 2882 | 2881 | 0 | active | 2020-08-07 18:21:17.519966 |

| zone1 | 192.168.0.151 | 2882 | 2881 | 1 | active | 2020-08-07 11:30:28.623847 |

| zone2 | 192.168.0.43 | 2882 | 2881 | 0 | active | 2020-08-07 11:30:28.604907 |

| zone2 | 192.168.0.89 | 2882 | 2881 | 0 | active | 2020-08-07 18:21:23.741350 |

| zone3 | 192.168.0.143 | 2882 | 2881 | 0 | inactive | 2020-08-07 18:21:29.227633 |

| zone3 | 192.168.0.41 | 2882 | 2881 | 0 | active | 2020-08-07 11:30:28.613459 |

+-------+---------------+----------+------------+-----------------+----------+----------------------------+

6 rows in set (0.00 sec)

MySQL [oceanbase]>

3、节点宕机对业务操作的影响:

宕机之前,查询test表,会连接到test(p0)主副本所在的143节点

MySQL [testdb]> select * from test;

+------+---------+------+---------------------+

| id | name | age | dt |

+------+---------+------+---------------------+

| 1 | ob1.4.6 | 20 | 2020-08-07 14:05:40 |

| 2 | 张三 | 26 | 1998-06-17 00:00:00 |

| 3 | 李四 | 32 | 1989-10-13 00:00:00 |

| 4 | Tom | 30 | 1990-02-21 00:00:00 |

+------+---------+------+---------------------+

4 rows in set (0.00 sec)

宕机发生时,只会导致局部业务访问会中断,如:查询test表,会存在短暂的中断,因为主副本143节点宕机,ob内部会进行分区迁移,导致test(p0)此分区的业务会有短暂的中断或卡顿。

MySQL [testdb]> select * from test;

ERROR 4012 (HY000): Timeout

MySQL [testdb]>

当原143节点上的test主副本迁移到其它节点后,再次查询test表,数据正常。

MySQL [testdb]> select * from test;

+------+---------+------+---------------------+

| id | name | age | dt |

+------+---------+------+---------------------+

| 1 | ob1.4.6 | 20 | 2020-08-07 14:05:40 |

| 2 | 张三 | 26 | 1998-06-17 00:00:00 |

| 3 | 李四 | 32 | 1989-10-13 00:00:00 |

| 4 | Tom | 30 | 1990-02-21 00:00:00 |

+------+---------+------+---------------------+

4 rows in set (1.63 sec)

MySQL [testdb]>

4、宕机后,查看所有的表分区主备副本节点分布情况:

MySQL [oceanbase]> SELECT t1.tenant_id,t1.tenant_name,t2.database_name,t3.table_id,t3.table_Name,t3.tablegroup_id,t3.part_num,t4.partition_Id,

-> t4.zone,t4.svr_ip,t4.role, round(t4.data_size/1024/1024) data_size_mb

-> from `gv$tenant` t1

-> join `gv$database` t2 on (t1.tenant_id = t2.tenant_id)

-> join gv$table t3 on (t2.tenant_id = t3.tenant_id and t2.database_id = t3.database_id and t3.index_type = 0)

-> left join `gv$partition` t4 on (t2.tenant_id = t4.tenant_id and ( t3.table_id = t4.table_id or t3.tablegroup_id = t4.table_id ) and t4.role in (1,2))

-> where t1.tenant_id = 1001

-> order by t3.tablegroup_id, t3.table_name,t4.partition_Id ;

+-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+

| tenant_id | tenant_name | database_name | table_id | table_Name | tablegroup_id | part_num | partition_Id | zone | svr_ip | role | data_size_mb |

+-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+

| 1001 | my_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone2 | 192.168.0.89 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone3 | 192.168.0.143 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone1 | 192.168.0.106 | 1 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone2 | 192.168.0.89 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone1 | 192.168.0.151 | 1 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone3 | 192.168.0.143 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone2 | 192.168.0.43 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone3 | 192.168.0.41 | 1 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone1 | 192.168.0.106 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone3 | 192.168.0.41 | 1 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone1 | 192.168.0.106 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone2 | 192.168.0.43 | 2 | 0 |

+-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+

12 rows in set (0.06 sec)

MySQL [oceanbase]>

143节点宕机后,leader主副本进行切换,即:test表主副本由原zone3下143节点,转移到zone1下的106节点,两个备副本在zone2的89节点和zone3的143节点不变。

这里143 observer节点还未真正下线,当某个节点宕机后,OB集群会等待管理员去修复,如果1小时还未修复此节点,那么到时OB集群会剔除此节点,让该宕机节点下线,此时该节点下的所有分区备副本也将全部进行数据迁移到正常节点,即副本补齐策略。

5、节点下线,副本补齐。

MySQL [oceanbase]> SELECT t1.tenant_id,t1.tenant_name,t2.database_name,t3.table_id,t3.table_Name,t3.tablegroup_id,t3.part_num,t4.partition_Id,

-> t4.zone,t4.svr_ip,t4.role, round(t4.data_size/1024/1024) data_size_mb

-> from `gv$tenant` t1

-> join `gv$database` t2 on (t1.tenant_id = t2.tenant_id)

-> join gv$table t3 on (t2.tenant_id = t3.tenant_id and t2.database_id = t3.database_id and t3.index_type = 0)

-> left join `gv$partition` t4 on (t2.tenant_id = t4.tenant_id and ( t3.table_id = t4.table_id or t3.tablegroup_id = t4.table_id ) and t4.role in (1,2))

-> where t1.tenant_id = 1001

-> order by t3.tablegroup_id, t3.table_name,t4.partition_Id ;

+-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+

| tenant_id | tenant_name | database_name | table_id | table_Name | tablegroup_id | part_num | partition_Id | zone | svr_ip | role | data_size_mb |

+-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+

| 1001 | my_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone2 | 192.168.0.89 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone3 | 192.168.0.41 | 1 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone1 | 192.168.0.106 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone2 | 192.168.0.89 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone3 | 192.168.0.41 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone1 | 192.168.0.151 | 1 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone2 | 192.168.0.43 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone3 | 192.168.0.41 | 1 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone1 | 192.168.0.106 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone3 | 192.168.0.41 | 1 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone1 | 192.168.0.106 | 2 | 0 |

| 1001 | my_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone2 | 192.168.0.43 | 2 | 0 |

+-----------+--------------+---------------+------------------+------------+---------------+----------+--------------+-------+---------------+------+--------------+

12 rows in set (0.06 sec)

MySQL [oceanbase]>

这时再查看所有的表分区主备副本节点分布情况,已经没有143节点,原143节点上的副本都补齐到其它节点。

最终,143节点下线后,数据分布为:

非分区表test的p0号分区,宕机下线后,主副本从zone3下的143节点,转移到了zone3下的41节点,两个备副本在zone1的106节点和zone2的89节点。

test_hash分区表的p0分区,主副本在zone1下的151节点不变,原zone2下的89备副本不变,原zone3的143节点的备副本,转移到zone3下的41节点。

test_hash分区表的p1分区,主副本在zone3下的41节点不变,两个备副本在zone1的106节点和zone2的43节点不变。

test_hash分区表的p2分区,主副本在zone3下的41节点不变,两个备副本在zone1的106节点和zone2的43节点不变。

复制6、以图的形式,展现143节点宕机前后数据的变化:

(1)、143节点宕机,leader主副本切换:

(2)、143节点宕机1小时后,节点下线,副本补齐

本次OceanBase 1.4集群节点宕机体验,到此结束。 一步一步学习OceanBase系列 下次实操继续更新~~~~~复制

最后修改时间:2020-08-10 13:46:47

「喜欢这篇文章,您的关注和赞赏是给作者最好的鼓励」

关注作者

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文章的来源(墨天轮),文章链接,文章作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

评论

相关阅读

2025年4月中国数据库流行度排行榜:OB高分复登顶,崖山稳驭撼十强

墨天轮编辑部

1736次阅读

2025-04-09 15:33:27

2025年3月国产数据库大事记

墨天轮编辑部

837次阅读

2025-04-03 15:21:16

OceanBase 接入 MCP 架构:贯通数据孤岛,释放 AI 创新潜能

OceanBase数据库

319次阅读

2025-03-28 15:32:52

OceanBase 单机版发布,针对中小规模业务场景

通讯员

251次阅读

2025-03-28 12:01:19

AI关键场景得到全面支持!OceanBase入选Forrester报告三大领域代表厂商

OceanBase数据库

211次阅读

2025-04-19 22:27:54

数据库管理-第313期 分布式挑战单机,OceanBase单机版试玩(20250411)

胖头鱼的鱼缸

202次阅读

2025-04-10 22:41:56

OceanBase CEO杨冰:2025年分布式数据库将迎来本地部署和国产升级的全面爆发

通讯员

194次阅读

2025-04-03 09:35:26

OceanBase亮相「党政信息化产品技术选型供需对接会」,助力党政关键业务系统升级

OceanBase

184次阅读

2025-03-27 09:55:58

OceanBase单机版产品解读

多明戈教你玩狼人杀

166次阅读

2025-04-11 15:28:33

TP与AP共生之道:OceanBase 4.3.5 HTAP混合负载实战

shunwahⓂ️

154次阅读

2025-03-27 15:04:42