导读: 全文阅读需要将近 30 分钟,关心结果的可以直接跳到尾部,内含压测办法及脚本。

最近几年 MySQL 是非常稳定,各种架构也非常成熟。现在带来的一个新的业务需求是数据越来越大,分析场景 MySQL 就显得有点难以支撑,这也是现在 HTAP 各种架构出现了的原因。如果你的场景跑新型的 HTAP 成本有点超预算,那就可以使用 Clickhouse 和 Databend 来支撑业务。本篇文章基于对象存储测试一下 Clickhouse 和 Databend 的性能区别,这两款产品目前都可以支持 S3 做存储,实现按存储按需分配。

Clickhouse 是大家都知道的一款地球上宽表查询性最快的数据库:https://clickhouse.com/ Clickhouse 去年拿了投资后也在做云原生这个方向,其中一个重要内容就是支持 S3 作为新型存储。

Databend 是一款:云原生新型基于对象存储的数仓,实现了:低成本,高性能,弹性伸缩。

文档: https://databend.rs/doc (含 Databend 架构图)

Databend Repo: https://github.com/datafuselabs/databend (欢迎 Star 关注)

首先我们需要了解一下什么是云原生?这个概念也快被玩坏了,很多人认为云上的 MySQL 就是云原生 MySQL,其实不是,真正的云原生应该符合:

•不需做硬件方面的管理及配置

•不需要做软件方面安装及管理

•不需要关心故障管理,升级和优化

•支持快速的弹性扩容和缩容

•只为使用时的存储和资源付费,如果没有业务请求,就可以不付费

•不用为使用的资源操太多的心

Databend 为什么要使用 S3 对象存储?

•支持高可用

•不用关心副本数

•多 IDC 可用,及多云切换

•支持全球内数据共享及分布

•不用考虑预留空间,只为使用的空间付费

•支持基于同一份数据多集群并发读写,并提供快照级隔离能力

• 完善的事务支持

•不用管理备份,支持在规定的时间内随意闪回(以表,DB 这样的粒度)

测试的步骤

- 系统:ubuntu 20

- Clickhouse:22.2.3 (参考官网安装)

- Databend : 获取 Github 当天的二进制版本部

https://github.com/datafuselabs/databend/releases

数据下载

wget --no-check-certificate --continue https://transtats.bts.gov/PREZIP/On_Time_Reporting_Carrier_On_Time_Performance_1987_present_{1987..2021}_{1..12}.zip复制

Clickhouse 配置及表结构

<yandex>

<storage_configuration>

<disks>

<s3>

<type>s3</type>

<endpoint>https://databend-shared.s3.us-east-2.amazonaws.com/ch-data-s3/</endpoint>

<access_key_id>your-key-id</access_key_id>

<secret_access_key>your-key</secret_access_key>

<cache_enabled>true</cache_enabled>

</s3>

</disks>

<policies>

<s3>

<volumes>

<main>

<disk>s3</disk>

</main>

</volumes>

</s3>

</policies>

</storage_configuration>

</yandex>复制

CREATE TABLE `ontime`

(

...

) ENGINE = MergeTree

PARTITION BY Year

ORDER BY (IATA_CODE_Reporting_Airline, FlightDate)

SETTINGS index_granularity = 8192, storage_policy='s3' ;复制

Databend 配置及表结构

CREATE TABLE ontime

(

Year UInt16 NOT NULL,

Quarter UInt8 NOT NULL,

Month UInt8 NOT NULL,

DayofMonth UInt8 NOT NULL,

DayOfWeek UInt8 NOT NULL,

FlightDate Date NOT NULL,

Reporting_Airline String NOT NULL,

DOT_ID_Reporting_Airline Int32 NOT NULL,

IATA_CODE_Reporting_Airline String NOT NULL,

Tail_Number String NOT NULL,

Flight_Number_Reporting_Airline String NOT NULL,

OriginAirportID Int32 NOT NULL,

OriginAirportSeqID Int32 NOT NULL,

OriginCityMarketID Int32 NOT NULL,

Origin String NOT NULL,

OriginCityName String NOT NULL,

OriginState String NOT NULL,

OriginStateFips String NOT NULL,

OriginStateName String NOT NULL,

OriginWac Int32 NOT NULL,

DestAirportID Int32 NOT NULL,

DestAirportSeqID Int32 NOT NULL,

DestCityMarketID Int32 NOT NULL,

Dest String NOT NULL,

DestCityName String NOT NULL,

DestState String NOT NULL,

DestStateFips String NOT NULL,

DestStateName String NOT NULL,

DestWac Int32 NOT NULL,

CRSDepTime Int32 NOT NULL,

DepTime Int32 NOT NULL,

DepDelay Int32 NOT NULL,

DepDelayMinutes Int32 NOT NULL,

DepDel15 Int32 NOT NULL,

DepartureDelayGroups String NOT NULL,

DepTimeBlk String NOT NULL,

TaxiOut Int32 NOT NULL,

WheelsOff Int32 NOT NULL,

WheelsOn Int32 NOT NULL,

TaxiIn Int32 NOT NULL,

CRSArrTime Int32 NOT NULL,

ArrTime Int32 NOT NULL,

ArrDelay Int32 NOT NULL,

ArrDelayMinutes Int32 NOT NULL,

ArrDel15 Int32 NOT NULL,

ArrivalDelayGroups Int32 NOT NULL,

ArrTimeBlk String NOT NULL,

Cancelled UInt8 NOT NULL,

CancellationCode String NOT NULL,

Diverted UInt8 NOT NULL,

CRSElapsedTime Int32 NOT NULL,

ActualElapsedTime Int32 NOT NULL,

AirTime Int32 NOT NULL,

Flights Int32 NOT NULL,

Distance Int32 NOT NULL,

DistanceGroup UInt8 NOT NULL,

CarrierDelay Int32 NOT NULL,

WeatherDelay Int32 NOT NULL,

NASDelay Int32 NOT NULL,

SecurityDelay Int32 NOT NULL,

LateAircraftDelay Int32 NOT NULL,

FirstDepTime String NOT NULL,

TotalAddGTime String NOT NULL,

LongestAddGTime String NOT NULL,

DivAirportLandings String NOT NULL,

DivReachedDest String NOT NULL,

DivActualElapsedTime String NOT NULL,

DivArrDelay String NOT NULL,

DivDistance String NOT NULL,

Div1Airport String NOT NULL,

Div1AirportID Int32 NOT NULL,

Div1AirportSeqID Int32 NOT NULL,

Div1WheelsOn String NOT NULL,

Div1TotalGTime String NOT NULL,

Div1LongestGTime String NOT NULL,

Div1WheelsOff String NOT NULL,

Div1TailNum String NOT NULL,

Div2Airport String NOT NULL,

Div2AirportID Int32 NOT NULL,

Div2AirportSeqID Int32 NOT NULL,

Div2WheelsOn String NOT NULL,

Div2TotalGTime String NOT NULL,

Div2LongestGTime String NOT NULL,

Div2WheelsOff String NOT NULL,

Div2TailNum String NOT NULL,

Div3Airport String NOT NULL,

Div3AirportID Int32 NOT NULL,

Div3AirportSeqID Int32 NOT NULL,

Div3WheelsOn String NOT NULL,

Div3TotalGTime String NOT NULL,

Div3LongestGTime String NOT NULL,

Div3WheelsOff String NOT NULL,

Div3TailNum String NOT NULL,

Div4Airport String NOT NULL,

Div4AirportID Int32 NOT NULL,

Div4AirportSeqID Int32 NOT NULL,

Div4WheelsOn String NOT NULL,

Div4TotalGTime String NOT NULL,

Div4LongestGTime String NOT NULL,

Div4WheelsOff String NOT NULL,

Div4TailNum String NOT NULL,

Div5Airport String NOT NULL,

Div5AirportID Int32 NOT NULL,

Div5AirportSeqID Int32 NOT NULL,

Div5WheelsOn String NOT NULL,

Div5TotalGTime String NOT NULL,

Div5LongestGTime String NOT NULL,

Div5WheelsOff String NOT NULL,

Div5TailNum String NOT NULL

);复制

echo "unzip ontime ,input your ontime zip dir: ./load_ontime.sh zip_dir"

ls $1/*.zip |xargs -I{} -P 4 bash -c "echo {}; unzip -q {} '*.csv' -d ./dataset"

if [ $? -eq 0 ];

then

echo "unzip success"

else

echo "unzip was wrong!!!"

exit 1

fi

cat create_ontime.sql |mysql -h127.0.0.1 -P3307 -uroot

if [ $? -eq 0 ];

then

echo "Ontime table create success"

else

echo "Ontime table create was wrong!!!"

exit 1

fi

time ls ./dataset/*.csv|xargs -P 8 -I{} curl -H "insert_sql:insert into ontime format CSV" -H "skip_header:1" -F "upload=@{}" -XPUT http://localhost:8081/v1/streaming_load复制

# 把 local_ontime.sh 给可执行权限

chmod +x load_ontime.sh

#安装 MySQL 客户端

sudo apt-get install mysql-client复制

测试脚本

wget https://github.com/sharkdp/hyperfine/releases/download/v1.13.0/hyperfine_1.13.0_amd64.deb

sudo dpkg -i hyperfine_1.13.0_amd64.deb复制

#!/bin/bash

cat << EOF > bench.sql

SELECT DayOfWeek, count(*) AS c FROM ontime WHERE Year >= 2000 AND Year <= 2008 GROUP BY DayOfWeek ORDER BY c DESC;

SELECT DayOfWeek, count(*) AS c FROM ontime WHERE DepDelay>10 AND Year >= 2000 AND Year <= 2008 GROUP BY DayOfWeek ORDER BY c DESC;

SELECT Origin, count(*) AS c FROM ontime WHERE DepDelay>10 AND Year >= 2000 AND Year <= 2008 GROUP BY Origin ORDER BY c DESC LIMIT 10;

SELECT IATA_CODE_Reporting_Airline AS Carrier, count(*) FROM ontime WHERE DepDelay>10 AND Year = 2007 GROUP BY Carrier ORDER BY count(*) DESC;

SELECT IATA_CODE_Reporting_Airline AS Carrier, avg(DepDelay>10)*1000 AS c3 FROM ontime WHERE Year=2007 GROUP BY Carrier ORDER BY c3 DESC;

SELECT IATA_CODE_Reporting_Airline AS Carrier, avg(DepDelay>10)*1000 AS c3 FROM ontime WHERE Year>=2000 AND Year <=2008 GROUP BY Carrier ORDER BY c3 DESC;

SELECT IATA_CODE_Reporting_Airline AS Carrier, avg(DepDelay) * 1000 AS c3 FROM ontime WHERE Year >= 2000 AND Year <= 2008 GROUP BY Carrier;

SELECT Year, avg(DepDelay) FROM ontime GROUP BY Year;

select Year, count(*) as c1 from ontime group by Year;

SELECT avg(cnt) FROM (SELECT Year,Month,count(*) AS cnt FROM ontime WHERE DepDel15=1 GROUP BY Year,Month) a;

select avg(c1) from (select Year,Month,count(*) as c1 from ontime group by Year,Month) a;

SELECT OriginCityName, DestCityName, count(*) AS c FROM ontime GROUP BY OriginCityName, DestCityName ORDER BY c DESC LIMIT 10;

SELECT OriginCityName, count(*) AS c FROM ontime GROUP BY OriginCityName ORDER BY c DESC LIMIT 10;

EOF

WARMUP=3

RUN=10

export script="hyperfine -w $WARMUP -r $RUN"

script=""

function run() {

port=$1

result=$2

script="hyperfine -w $WARMUP -r $RUN"

i=0

while read SQL; do

f=/tmp/bench_${i}.sql

echo "$before_sql" > $f

echo "$SQL" >> $f

#s="cat $f | clickhouse-client --host 127.0.0.1 --port $port"

s="cat $f | mysql -h127.0.0.1 -P$port -uroot -s"

script="$script '$s'"

i=$[i+1]

done <./bench.sql

script="$script --export-markdown $result"

echo $script | bash -x

}

run "3307" "$1"

echo "select version() as version" |mysql -h127.0.0.1 -P3307 -uroot >> $result复制

script=""

function run() {

port=$1

result=$2

script="hyperfine -w $WARMUP -r $RUN"

i=0

while read SQL; do

f=/tmp/bench_${i}.sql

echo "$SQL" > $f

s="cat $f | clickhouse-client --host 127.0.0.1 --port $port"

script="$script '$s'"

i=$[i+1]

done <<< $(cat bench.sql)

script="$script --export-markdown $result"

echo $script | bash -x

}

run "9000" "$1"复制

./run_time.sh D20220322.md

./ch_run.sh C202220322.md复制

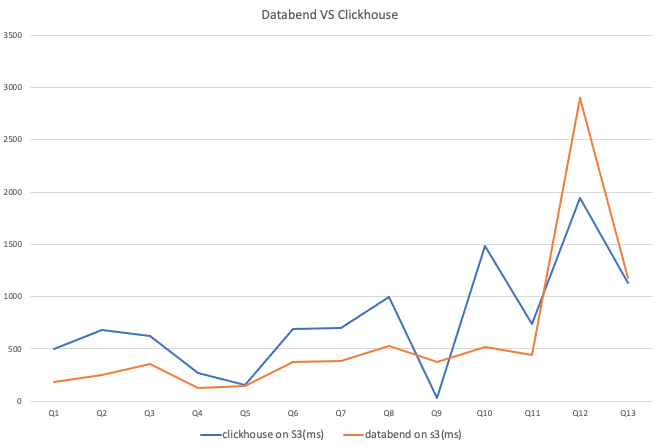

对比结果