logminer 使用在线目录挖掘

继上一篇https://www.modb.pro/db/380999#logminer_434,介绍了logmnr的基本概念,验证了使用数据字典存储在redo日志中的方法来挖掘,然后还有一种使用在线目录的方法进行挖掘,下面就对这种方法进行介绍,并最后做一个总结。

强调几点

logminer的作用

- 分析数据库的逻辑更改

- 检查并更正用户的误操作

- 执行事后审计

- 数据库性能趋势分析

logminer能够分析两种日志

LogMiner能够通过SQL的方式,将日志中的数据改变提取出来。Logminer能够分析什么日志?

- Online redo logfile

- Archived logfile

关于添加重做日志

有两种:自动和手动。

- 自动

LogMiner将使用数据库控制文件来查找并将满足指定时间或SCN范围的重做日志文件添加到LogMiner重做日志文件列表中。例如:

alter session set nls_date_language=‘AMERICAN’;

ALTER SESSION SET NLS_DATE_FORMAT=‘YYYY-MM-DD HH24:MI:SS’;

EXECUTE DBMS_LOGMNR.START_LOGMNR(-

starttime => ‘2022-03-29 20:47:04’, -

endtime => ‘2022-03-29 20:48:15’, -

OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

DBMS_LOGMNR.CONTINUOUS_MINE + -

DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

DBMS_LOGMNR.PRINT_PRETTY_SQL + -

DBMS_LOGMNR.SKIP_CORRUPTION);

其中重要特点就是:1、启用了特殊参数CONTINUOUS_MINE;2、没有添加日志过程,直接start logminer。关于此过程,后面会做详细说明,此处只是和手动做一个比较。 - 手动

新建第一个日志:EXECUTE DBMS_LOGMNR.ADD_LOGFILE (LOGFILENAME =>’ / oracle/logs/log1.f’, OPTIONS => DBMS_LOGMNR.NEW);

后续添加日志:EXECUTE DBMS_LOGMNR.ADD_LOGFILE ( - LOGFILENAME =>’ / oracle/logs/log2.f’,OPTIONS => DBMS_LOGMNR.ADDFILE);

Using the Online Catalog

start_logmnr,DICT字典 是从(FROM) 在线数据字典(ONLINE_CATALOG)中获取。联机字典包括最新的数据库的信息,是最快的分析重做日志的方法。操作也最简单。当然有一个局限。因为联机字典只反映最近表的版本,所以如果表的结构被改动,重做日志中包含以前该表格版本的SQL,logmnr将没办法解析,只会显示16进制的数据。库和挖掘库是同一个库,对源库会有一定的影响

DICT_FROM_ONLINE_CATALOG。

是否需要开启归档

接上一篇的测试表t1,我们换个asm环境。这个asm环境是11204 asm standalone。

conn scott/tiger;

create table t1 (id int primary key,name varchar2(10),key number);

insert into t1 values(1,‘aaa’,10);

insert into t1 values(2,‘bbb’,11);

insert into t1 values(3,‘ccc’,12);

insert into t1 values(4,‘ddd’,13);

commit;

用到的挖掘语句:

EXECUTE DBMS_LOGMNR.ADD_LOGFILE(LOGFILENAME => ‘+DATADG/orcl/onlinelog/group_2.258.1023146137’, OPTIONS => DBMS_LOGMNR.NEW);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE(LOGFILENAME => ‘+DATADG/orcl/onlinelog/group_3.259.1023146137’,OPTIONS => DBMS_LOGMNR.ADDFILE);

EXECUTE DBMS_LOGMNR.START_LOGMNR(OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

DBMS_LOGMNR.PRINT_PRETTY_SQL + -

DBMS_LOGMNR.SKIP_CORRUPTION);

set linesize 200

set pagesize 200

col USR for a10

col sql_redo for a50

col SQL_UNDO for a50

SELECT USERNAME AS usr,SQL_REDO,SQL_UNDO FROM

V$LOGMNR_CONTENTS

WHERE SEG_OWNER =‘SCOTT’;

注意:Oracle的重作日志分为两种,在线(online)和离线(offline)归档日志文件,两种都可以分析,下面使用online redo log分析。

不开启最小附加日志

session 1:

SQL> alter system switch logfile;

System altered.

SQL> select * from v$log;

GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIM NEXT_CHANGE# NEXT_TIME

---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- --------- ------------ ---------

1 1 52 52428800 512 1 NO CURRENT 497874 29-MAR-22 2.8147E+14

2 1 50 52428800 512 1 NO INACTIVE 476500 29-MAR-22 484846 29-MAR-22

3 1 51 52428800 512 1 NO ACTIVE 484846 29-MAR-22 497874 29-MAR-22

SQL> update t1 set name='eee' where key=10;

1 row updated.

SQL> commit;

Commit complete.

复制session 2:

SQL> archive log list;

Database log mode No Archive Mode

Automatic archival Disabled

Archive destination /u01/app/oracle/product/11.2.0/dbhome_1/dbs/arch

Oldest online log sequence 50

Current log sequence 52

SQL> EXECUTE DBMS_LOGMNR.ADD_LOGFILE(LOGFILENAME => '+DATADG/orcl/onlinelog/group_1.257.1023146137', OPTIONS => DBMS_LOGMNR.NEW);

PL/SQL procedure successfully completed.

SQL> EXECUTE DBMS_LOGMNR.START_LOGMNR(OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

> DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

> DBMS_LOGMNR.PRINT_PRETTY_SQL + -

> DBMS_LOGMNR.SKIP_CORRUPTION);

PL/SQL procedure successfully completed.

SQL> set linesize 200

SQL> set pagesize 200

SQL> col USR for a10

SQL> col sql_redo for a50

SQL> col SQL_UNDO for a50

SQL> SELECT USERNAME AS usr,SQL_REDO,SQL_UNDO FROM

2 V$LOGMNR_CONTENTS

3 WHERE SEG_OWNER ='SCOTT';

no rows selected

复制不开启附加日志仍然挖掘不到。

开启最小附加日志

session 1:

SQL> alter database add supplemental log data;

Database altered.

SQL> SELECT supplemental_log_data_min min,

2 supplemental_log_data_pk pk,

3 supplemental_log_data_ui ui,

4 supplemental_log_data_fk fk,

5 supplemental_log_data_all allc

6 FROM v$database;

MIN PK UI FK ALL

-------- --- --- --- ---

YES NO NO NO NO

SQL> alter system switch logfile;

System altered.

SQL> select * from v$log;

GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIM NEXT_CHANGE# NEXT_TIME

---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- --------- ------------ ---------

1 1 52 52428800 512 1 NO ACTIVE 497874 29-MAR-22 497970 29-MAR-22

2 1 53 52428800 512 1 NO CURRENT 497970 29-MAR-22 2.8147E+14

3 1 51 52428800 512 1 NO ACTIVE 484846 29-MAR-22 497874 29-MAR-22

SQL> update t1 set name='fff' where key=11;

1 row updated.

SQL> commit;

Commit complete.

复制session 3:

SQL> EXECUTE DBMS_LOGMNR.ADD_LOGFILE(LOGFILENAME => '+DATADG/orcl/onlinelog/group_2.258.1023146137', OPTIONS => DBMS_LOGMNR.NEW);

PL/SQL procedure successfully completed.

SQL> select * from v$logmnr_logs;

LOG_ID FILENAME LOW_TIME HIGH_TIME DB_ID DB_NAME RESET_SCN RESET_SCN COMPATIBLE THREAD_ID THREAD_SQN LOW_SCN NEXT_SCN DIC DIC TYPE

---------- -------------------------------------------------- --------- --------- ---------- -------- ---------- --------- ----------------- ---------- ---------- ---------- ---------- --- --- -------

BLOCKSIZE FILESIZE INFO STATUS

---------- ---------- -------------------------------- ----------

53 +DATADG/orcl/onlinelog/group_2.258.1023146137 29-MAR-22 01-JAN-88 1550926869 ORCL 1 31-OCT-19 11.2.0.4.0 1 53 497970 2.8147E+14 NO NO ONLINE

512 0

SQL> EXECUTE DBMS_LOGMNR.START_LOGMNR(OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

> DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

> DBMS_LOGMNR.PRINT_PRETTY_SQL + -

> DBMS_LOGMNR.SKIP_CORRUPTION);

PL/SQL procedure successfully completed.

SQL> select * from v$logmnr_parameters;

START_DAT REQUIRED_ END_DATE START_SCN REQUIRED_START_SCN END_SCN OPTIONS INFO STATUS

--------- --------- --------- ---------- ------------------ ---------- ---------- -------------------------------- ----------

01-JAN-11 497970 0 0 534 0

SQL> set linesize 200

SQL> set pagesize 200

SQL> col USR for a10

SQL> col sql_redo for a50

SQL> col SQL_UNDO for a50

SQL> SELECT USERNAME AS usr,SQL_REDO,SQL_UNDO FROM

2 V$LOGMNR_CONTENTS

3 WHERE SEG_OWNER ='SCOTT';

USR SQL_REDO SQL_UNDO

---------- -------------------------------------------------- --------------------------------------------------

SCOTT update "SCOTT"."T1" update "SCOTT"."T1"

set set

"NAME" = 'fff' "NAME" = 'bbb'

where where

"NAME" = 'bbb' and "NAME" = 'fff' and

ROWID = 'AAAE+jAAEAAAACuAAB'; ROWID = 'AAAE+jAAEAAAACuAAB';

复制涉及的logmnr对象,都是会话临时表和临时对象,会话退出,内容就都没了。

结论,可以不用开启归档也能挖掘,在线挖掘对归档没要求。在11204 asm standalone环境中,挖掘之前,一定要开启最小附加日志,要不然挖掘不到任何东西。

以下我们分析归档日志,所以打开数据库归档,并且开启了最小附加日志,进行挖掘。

归档日志挖掘

session 1:

conn scott/tiger;

create table t3 (id int primary key,name varchar2(10),key number);

insert into t3 values(1,‘aaa’,10);

insert into t3 values(2,‘bbb’,11);

insert into t3 values(3,‘ccc’,12);

insert into t3 values(4,‘ddd’,13);

commit;

SQL> alter system switch logfile;

System altered.

SQL> select * from v$log;

GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIM NEXT_CHANGE# NEXT_TIME

---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- --------- ------------ ---------

1 1 61 52428800 512 1 NO CURRENT 505524 29-MAR-22 2.8147E+14

2 1 59 52428800 512 1 YES INACTIVE 505386 29-MAR-22 505397 29-MAR-22

3 1 60 52428800 512 1 YES ACTIVE 505397 29-MAR-22 505524 29-MAR-22

SQL> alter system switch logfile;

System altered.

SQL> /

System altered.

SQL> select * from v$log;

GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIM NEXT_CHANGE# NEXT_TIME

---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- --------- ------------ ---------

1 1 61 52428800 512 1 YES ACTIVE 505524 29-MAR-22 505532 29-MAR-22

2 1 62 52428800 512 1 YES ACTIVE 505532 29-MAR-22 505537 29-MAR-22

3 1 63 52428800 512 1 NO CURRENT 505537 29-MAR-22 2.8147E+14

SQL> select SEQUENCE#,name from v$archived_log;

SEQUENCE# NAME

---------- ----------------------------------------------------------------------

53 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_53.267.1100635157

54 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_54.268.1100635333

55 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_55.269.1100635503

56 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_56.270.1100635545

57 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_57.271.1100635567

58 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_58.272.1100636013

59 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_59.273.1100636035

60 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_60.274.1100636055

61 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_61.275.1100636069

62 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_62.276.1100636071

10 rows selected.

复制我们就分析60,61,62 号日志,建表和插数的语句都在60上面。

session 4:

SQL> EXECUTE DBMS_LOGMNR.ADD_LOGFILE(LOGFILENAME => '+DATADG/orcl/archivelog/2022_03_29/thread_1_seq_60.274.1100636055', OPTIONS => DBMS_LOGMNR.NEW);

PL/SQL procedure successfully completed.

SQL> EXECUTE DBMS_LOGMNR.ADD_LOGFILE(LOGFILENAME => '+DATADG/orcl/archivelog/2022_03_29/thread_1_seq_61.275.1100636069',OPTIONS => DBMS_LOGMNR.ADDFILE);

PL/SQL procedure successfully completed.

SQL> EXECUTE DBMS_LOGMNR.ADD_LOGFILE(LOGFILENAME => '+DATADG/orcl/archivelog/2022_03_29/thread_1_seq_62.276.1100636071',OPTIONS => DBMS_LOGMNR.ADDFILE);

PL/SQL procedure successfully completed.

SQL> EXECUTE DBMS_LOGMNR.START_LOGMNR(OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

> DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

> DBMS_LOGMNR.PRINT_PRETTY_SQL + -

> DBMS_LOGMNR.SKIP_CORRUPTION);

PL/SQL procedure successfully completed.

SQL> set linesize 200

SQL> set pagesize 200

SQL> col USR for a10

SQL> col sql_redo for a50

SQL> col SQL_UNDO for a50

SQL> SELECT USERNAME AS usr,SQL_REDO,SQL_UNDO FROM

2 V$LOGMNR_CONTENTS

3 WHERE SEG_OWNER ='SCOTT';

USR SQL_REDO SQL_UNDO

---------- -------------------------------------------------- --------------------------------------------------

SCOTT CREATE UNIQUE INDEX "SCOTT"."SYS_C007425" on "SCOT

T"."T3"("ID") NOPARALLEL;

SCOTT create table t3 (id int primary key,name varchar2(

10),key number);

SCOTT insert into "SCOTT"."T3" delete from "SCOTT"."T3"

values where

"ID" = 1, "ID" = 1 and

"NAME" = 'aaa', "NAME" = 'aaa' and

"KEY" = 10; "KEY" = 10 and

ROWID = 'AAAE+qAAEAAAAC/AAA';

SCOTT insert into "SCOTT"."T3" delete from "SCOTT"."T3"

values where

"ID" = 2, "ID" = 2 and

"NAME" = 'bbb', "NAME" = 'bbb' and

"KEY" = 11; "KEY" = 11 and

ROWID = 'AAAE+qAAEAAAAC/AAB';

SCOTT insert into "SCOTT"."T3" delete from "SCOTT"."T3"

values where

"ID" = 3, "ID" = 3 and

"NAME" = 'ccc', "NAME" = 'ccc' and

"KEY" = 12; "KEY" = 12 and

ROWID = 'AAAE+qAAEAAAAC/AAC';

SCOTT insert into "SCOTT"."T3" delete from "SCOTT"."T3"

values where

"ID" = 4, "ID" = 4 and

"NAME" = 'ddd', "NAME" = 'ddd' and

"KEY" = 13; "KEY" = 13 and

ROWID = 'AAAE+qAAEAAAAC/AAD';

6 rows selected.

复制我们可以看到,这是根据事务顺序来的,DDL语句和DML语句都可以挖掘到。

session 4继续:

SQL> EXECUTE DBMS_LOGMNR.START_LOGMNR(OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

> DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

> DBMS_LOGMNR.PRINT_PRETTY_SQL + -

> DBMS_LOGMNR.DDL_DICT_TRACKING + -

> DBMS_LOGMNR.SKIP_CORRUPTION);

BEGIN DBMS_LOGMNR.START_LOGMNR(OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + DBMS_LOGMNR.COMMITTED_DATA_ONLY + DBMS_LOGMNR.PRINT_PRETTY_SQL + DBMS_LOGMNR.DDL_DICT_TRACKING + DBMS_LOGMNR.SKIP_CORRUPTION); END;

*

ERROR at line 1:

ORA-01298: conflicting dictionary option

ORA-06512: at "SYS.DBMS_LOGMNR", line 58

ORA-06512: at line 1

复制++DDL_DICT_TRACKING-“在LogMiner词典中跟踪DDL语句”。只适用于数据字典flat和redo日志中的字典,online catalog是不能启用这个参数的,否则会报错ORA-01298。 我们也可以看到,在11204中,DDL跟踪是默认开启的。 ++

有限制条件–StartTime/EndTime,startscn/endscn 指定分析范围并自动添加日志

一般分析的时候,我们添加的日志比较多,也很难分辨到底要添加哪些日志,我们就可以根据误操作的时间,来限定分析范围,缩小范围,然后oracle根据控制文件,自动添加日志。

如下

session 1:

SQL> select sysdate from dual;

SYSDATE

-------------------

2022-03-29 20:47:04

SQL> alter system switch logfile;

System altered.

SQL> select * from v$log;

GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIME NEXT_CHANGE# NEXT_TIME

---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- ------------------- ------------ -------------------

1 1 64 52428800 512 1 NO CURRENT 507746 2022-03-29 20:47:31 2.8147E+14

2 1 62 52428800 512 1 YES INACTIVE 505532 2022-03-29 20:14:28 505537 2022-03-29 20:14:30

3 1 63 52428800 512 1 YES ACTIVE 505537 2022-03-29 20:14:30 507746 2022-03-29 20:47:31

SQL> select SEQUENCE#,name from v$archived_log;

SEQUENCE# NAME

---------- ----------------------------------------------------------------------

53 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_53.267.1100635157

54 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_54.268.1100635333

55 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_55.269.1100635503

56 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_56.270.1100635545

57 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_57.271.1100635567

58 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_58.272.1100636013

59 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_59.273.1100636035

60 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_60.274.1100636055

61 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_61.275.1100636069

62 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_62.276.1100636071

63 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_63.277.1100638051

11 rows selected.

SQL> create table t4 (id int primary key,name varchar2(10),key number);

insert into t4 values(1,'aaa',10);

Table created.

SQL> insert into t4 values(2,'bbb',11);

1 row created.

SQL>

1 row created.

SQL> insert into t4 values(3,'ccc',12);

1 row created.

SQL> insert into t4 values(4,'ddd',13);

1 row created.

SQL> commit;

Commit complete.

SQL> select sysdate from dual;

SYSDATE

-------------------

2022-03-29 20:48:15

复制我们明确知道了操作的时间范围,就如生产,我们知道是大概什么时间段产生的操作,限定范围,然后进行下面分析。

session 5:

SQL> EXECUTE DBMS_LOGMNR.START_LOGMNR(-

> starttime => to_date('2022-03-29 20:47:04','YYYY-MM-DD HH24:MI:SS'), -

> endtime => to_date('2022-03-29 20:48:15','YYYY-MM-DD HH24:MI:SS'), -

> OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

> DBMS_LOGMNR.CONTINUOUS_MINE + -

> DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

> DBMS_LOGMNR.PRINT_PRETTY_SQL + -

> DBMS_LOGMNR.SKIP_CORRUPTION);

PL/SQL procedure successfully completed.

SQL>

SQL> set pages 1000 lines 1000

SQL> select * from v$logmnr_logs;

LOG_ID FILENAME LOW_TIME HIGH_TIME DB_ID DB_NAME RESET_SCN RESET_SCN COMPATIBLE THREAD_ID THREAD_SQN LOW_SCN NEXT_SCN DIC DIC TYPE BLOCKSIZE FILESIZE INFO STATUS

---------- -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- --------- --------- ---------- -------- ---------- --------- ----------------- ---------- ---------- ---------- ---------- --- --- ------- ---------- ---------- -------------------------------- ----------

63 +DATADG/orcl/archivelog/2022_03_29/thread_1_seq_63.277.1100638051 29-MAR-22 29-MAR-22 1550926869 ORCL 1 31-OCT-19 11.2.0.4.0 1 63 505537 507746 NO NO ARCHIVE 512 1463808 0

--根据控制文件,自动识别需要添加的日志。

SQL> set linesize 200

SQL> set pagesize 200

SQL> col USR for a10

SQL> col sql_redo for a50

SQL> col SQL_UNDO for a50

SQL> SELECT USERNAME AS usr,SQL_REDO,SQL_UNDO FROM

2 V$LOGMNR_CONTENTS

3 WHERE SEG_OWNER ='SCOTT';

USR SQL_REDO SQL_UNDO

---------- -------------------------------------------------- --------------------------------------------------

SCOTT CREATE UNIQUE INDEX "SCOTT"."SYS_C007426" on "SCOT

T"."T4"("ID") NOPARALLEL;

SCOTT create table t4 (id int primary key,name varchar2(

10),key number);

SCOTT insert into "SCOTT"."T4" delete from "SCOTT"."T4"

values where

"ID" = 1, "ID" = 1 and

"NAME" = 'aaa', "NAME" = 'aaa' and

"KEY" = 10; "KEY" = 10 and

ROWID = 'AAAE+sAAEAAAADPAAA';

SCOTT insert into "SCOTT"."T4" delete from "SCOTT"."T4"

values where

"ID" = 2, "ID" = 2 and

"NAME" = 'bbb', "NAME" = 'bbb' and

"KEY" = 11; "KEY" = 11 and

ROWID = 'AAAE+sAAEAAAADPAAB';

SCOTT insert into "SCOTT"."T4" delete from "SCOTT"."T4"

values where

"ID" = 3, "ID" = 3 and

"NAME" = 'ccc', "NAME" = 'ccc' and

"KEY" = 12; "KEY" = 12 and

ROWID = 'AAAE+sAAEAAAADPAAC';

SCOTT insert into "SCOTT"."T4" delete from "SCOTT"."T4"

values where

"ID" = 4, "ID" = 4 and

"NAME" = 'ddd', "NAME" = 'ddd' and

"KEY" = 13; "KEY" = 13 and

ROWID = 'AAAE+sAAEAAAADPAAD';

6 rows selected.

SQL> EXECUTE DBMS_LOGMNR.END_LOGMNR();

复制如上,我们使用了新的参数:CONTINUOUS_MINE。当我们在源数据库上使用logmnr来分析日志时,我们可以不添加日志,而是使用CONTINUOUS_MINE来让LOGMNR自动搜索控制文件。通过指定SCN或者TIME的范围,来找出对应的日志文件自动添加。

如上,我们没有添加日志的过程,而是直接start启动logmnr的。

以下为命令:

alter session set nls_date_language=‘AMERICAN’;

ALTER SESSION SET NLS_DATE_FORMAT=‘YYYY-MM-DD HH24:MI:SS’;

EXECUTE DBMS_LOGMNR.START_LOGMNR(-

starttime => ‘2022-03-29 20:47:04’, -

endtime => ‘2022-03-29 20:48:15’, -

OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

DBMS_LOGMNR.CONTINUOUS_MINE + -

DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

DBMS_LOGMNR.PRINT_PRETTY_SQL + -

DBMS_LOGMNR.SKIP_CORRUPTION);

或者

EXECUTE DBMS_LOGMNR.START_LOGMNR(-

starttime => to_date(‘2022-03-29 20:47:04’,‘YYYY-MM-DD HH24:MI:SS’), -

endtime => to_date(‘2022-03-29 20:48:15’,‘YYYY-MM-DD HH24:MI:SS’), -

OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

DBMS_LOGMNR.CONTINUOUS_MINE + -

DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

DBMS_LOGMNR.PRINT_PRETTY_SQL + -

DBMS_LOGMNR.SKIP_CORRUPTION);

DBMS_LOGMNR.START_LOGMNR参数测试

NO_ROWID_IN_STMT

上一篇中说到,ROWID在两个逻辑数据库上应用是不一致的,特别是没有开启最小附加日志的,根据rowid进行的主备更新甚至是错误的,那么我们干脆就把ROWID去掉,否则还影响SQL的可用性。如下:

USR SQL_REDO SQL_UNDO

---------- -------------------------------------------------- --------------------------------------------------

SCOTT update "SCOTT"."T1" update "SCOTT"."T1"

set set

"NAME" = 'fff' "NAME" = 'bbb'

where where

"NAME" = 'bbb' and "NAME" = 'fff' and

ROWID = 'AAATr3AAEAAAACvAAB'; ROWID = 'AAATr3AAEAAAACvAAB';

SQL> EXECUTE DBMS_LOGMNR.END_LOGMNR();

复制下面我们去掉rowid

session 1:

进行update操作

SQL> select * from v$log;

GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIME NEXT_CHANGE# NEXT_TIME

---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- ------------------- ------------ -------------------

1 1 64 52428800 512 1 NO CURRENT 507746 2022-03-29 20:47:31 2.8147E+14

2 1 62 52428800 512 1 YES INACTIVE 505532 2022-03-29 20:14:28 505537 2022-03-29 20:14:30

3 1 63 52428800 512 1 YES INACTIVE 505537 2022-03-29 20:14:30 507746 2022-03-29 20:47:31

SQL> alter system switch logfile;

System altered.

SQL> select * from v$log;

GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIME NEXT_CHANGE# NEXT_TIME

---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- ------------------- ------------ -------------------

1 1 64 52428800 512 1 YES ACTIVE 507746 2022-03-29 20:47:31 509981 2022-03-29 21:18:50

2 1 65 52428800 512 1 NO CURRENT 509981 2022-03-29 21:18:50 2.8147E+14

3 1 63 52428800 512 1 YES INACTIVE 505537 2022-03-29 20:14:30 507746 2022-03-29 20:47:31

SQL> select * from v$logfile;

GROUP# STATUS TYPE MEMBER IS_

---------- ------- ------- ---------------------------------------------------------------------- ---

1 ONLINE +DATADG/orcl/onlinelog/group_1.257.1023146137 NO

2 ONLINE +DATADG/orcl/onlinelog/group_2.258.1023146137 NO

3 ONLINE +DATADG/orcl/onlinelog/group_3.259.1023146137 NO

SQL> update t4 set name='dsds' where id=4;

1 row updated.

SQL> commit;

Commit complete.

SQL> alter system switch logfile;

System altered.

复制我们就分析65号文件。

session 6:

分析DML所在的日志文件

SQL> EXECUTE DBMS_LOGMNR.ADD_LOGFILE(LOGFILENAME => '+DATADG/orcl/onlinelog/group_2.258.1023146137', OPTIONS => DBMS_LOGMNR.NEW);

PL/SQL procedure successfully completed.

启动logmnr,增加NO_ROWID_IN_STMT选项。实际上,就增加一个选项就OK了

SQL> EXECUTE DBMS_LOGMNR.START_LOGMNR(OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

> DBMS_LOGMNR.PRINT_PRETTY_SQL + -

> DBMS_LOGMNR.NO_ROWID_IN_STMT);

PL/SQL procedure successfully completed.

SQL> set linesize 200

SQL> set pagesize 200

SQL> col USR for a10

SQL> col sql_redo for a50

SQL> col SQL_UNDO for a50

SQL> SELECT USERNAME AS usr,SQL_REDO,SQL_UNDO FROM

2 V$LOGMNR_CONTENTS

3 WHERE SEG_OWNER ='SCOTT';

USR SQL_REDO SQL_UNDO

---------- -------------------------------------------------- --------------------------------------------------

SCOTT update "SCOTT"."T4" update "SCOTT"."T4"

set set

"NAME" = 'dsds' "NAME" = 'ddd'

where where

"NAME" = 'ddd'; "NAME" = 'dsds';

SQL> EXECUTE DBMS_LOGMNR.END_LOGMNR();

复制可以看到没有rowid了。

PRINT_PRETTY_SQL

顺便测试下选项PRINT_PRETTY_SQL:

SQL> EXECUTE DBMS_LOGMNR.ADD_LOGFILE(LOGFILENAME => '+DATADG/orcl/onlinelog/group_2.258.1023146137', OPTIONS => DBMS_LOGMNR.NEW);

PL/SQL procedure successfully completed.

SQL> EXECUTE DBMS_LOGMNR.START_LOGMNR(OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

> DBMS_LOGMNR.NO_ROWID_IN_STMT);

PL/SQL procedure successfully completed.

SQL> set linesize 200

SQL> set pagesize 200

SQL> col USR for a10

SQL> col sql_redo for a50

SQL> col SQL_UNDO for a50

SQL> SELECT USERNAME AS usr,SQL_REDO,SQL_UNDO FROM

2 V$LOGMNR_CONTENTS

3 WHERE SEG_OWNER ='SCOTT';

USR SQL_REDO SQL_UNDO

---------- -------------------------------------------------- --------------------------------------------------

SCOTT update "SCOTT"."T4" set "NAME" = 'dsds' where "NAM update "SCOTT"."T4" set "NAME" = 'ddd' where "NAME

E" = 'ddd';

SQL> EXECUTE DBMS_LOGMNR.END_LOGMNR();

复制其实就是为了格式更好看。

COMMITTED_DATA_ONLY

这个选项的目的是,只生成已提交了的redo数据。我们知道日志里有已提交和未提交的redo,那我们如何让LOGMNR只生成已提交的REDO。只要已经提交的数据,所以未提交的不用转换成SQL了。那么在启动logminer时,加此参数。

做两条DML,delete做完了之后马上COMMIT,而UPDATE未提交 .

SQL> select * from v$log;

GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIME NEXT_CHANGE# NEXT_TIME

---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- ------------------- ------------ -------------------

1 1 67 52428800 512 1 NO CURRENT 510441 2022-03-29 21:28:08 2.8147E+14

2 1 65 52428800 512 1 YES INACTIVE 509981 2022-03-29 21:18:50 510006 2022-03-29 21:19:22

3 1 66 52428800 512 1 YES ACTIVE 510006 2022-03-29 21:19:22 510441 2022-03-29 21:28:08

SQL> delete from t4 where id=4;

1 row deleted.

SQL> commit;

Commit complete.

SQL> update t4 set name='sdfs' where id=3;

1 row updated.

切换后再commit。

SQL> alter system switch logfile;

System altered.

SQL> select * from v$log;

GROUP# THREAD# SEQUENCE# BYTES BLOCKSIZE MEMBERS ARC STATUS FIRST_CHANGE# FIRST_TIME NEXT_CHANGE# NEXT_TIME

---------- ---------- ---------- ---------- ---------- ---------- --- ---------------- ------------- ------------------- ------------ -------------------

1 1 67 52428800 512 1 YES ACTIVE 510441 2022-03-29 21:28:08 510492 2022-03-29 21:29:38

2 1 68 52428800 512 1 NO CURRENT 510492 2022-03-29 21:29:38 2.8147E+14

3 1 66 52428800 512 1 YES ACTIVE 510006 2022-03-29 21:19:22 510441 2022-03-29 21:28:08

通过查看日志,发现delete和update的REDO都记录在67号日志文件。

因为切换后再commit。这样update的数据在67号日志里仍然是没有提交的

SQL> commit;

Commit complete.

COMMIT的REDO在下个日志文件里,也就是68号。

SQL> alter system switch logfile;

System altered.

复制下面我们分析67号日志

session 8:

SQL> EXECUTE DBMS_LOGMNR.ADD_LOGFILE(LOGFILENAME => '+DATADG/orcl/onlinelog/group_1.257.1023146137', OPTIONS => DBMS_LOGMNR.NEW);

PL/SQL procedure successfully completed.

然后启动logmnr,使用COMMITTED_DATA_ONLY选项.

SQL> EXECUTE DBMS_LOGMNR.START_LOGMNR(OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

> DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

> DBMS_LOGMNR.PRINT_PRETTY_SQL + -

> DBMS_LOGMNR.SKIP_CORRUPTION);

PL/SQL procedure successfully completed.

这样,我们查出来的数据里,是没有update这条数据的:

SQL> set linesize 200

SQL> set pagesize 200

SQL> col USR for a10

SQL> col sql_redo for a50

SQL> col SQL_UNDO for a50

SQL> SELECT USERNAME AS usr,SQL_REDO,SQL_UNDO FROM

2 V$LOGMNR_CONTENTS

3 WHERE SEG_OWNER ='SCOTT';

USR SQL_REDO SQL_UNDO

---------- -------------------------------------------------- --------------------------------------------------

SCOTT delete from "SCOTT"."T4" insert into "SCOTT"."T4"

where values

"ID" = 4 and "ID" = 4,

"NAME" = 'dsds' and "NAME" = 'dsds',

"KEY" = 13 and "KEY" = 13;

ROWID = 'AAAE+sAAEAAAADPAAD';

SQL> EXECUTE DBMS_LOGMNR.END_LOGMNR();

复制因为67号日志中没有UPDATE的COMMIT,Logmnr在分析了67号日志后,仅生成该日志中已提交的DML的redo信息.如果要让update 执行也能展示出来,那我们可以把68号日志也加进来就行了 .

session 8:

SQL> EXECUTE DBMS_LOGMNR.ADD_LOGFILE(LOGFILENAME => '+DATADG/orcl/onlinelog/group_2.258.1023146137',OPTIONS => DBMS_LOGMNR.ADDFILE);

PL/SQL procedure successfully completed.

SQL> EXECUTE DBMS_LOGMNR.START_LOGMNR(OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

> DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

> DBMS_LOGMNR.PRINT_PRETTY_SQL + -

> DBMS_LOGMNR.SKIP_CORRUPTION);

PL/SQL procedure successfully completed.

SQL> set linesize 200

SQL> set pagesize 200

SQL> col USR for a10

SQL> col sql_redo for a50

SQL> col SQL_UNDO for a50

SQL> SELECT USERNAME AS usr,SQL_REDO,SQL_UNDO FROM

2 V$LOGMNR_CONTENTS

3 WHERE SEG_OWNER ='SCOTT';

USR SQL_REDO SQL_UNDO

---------- -------------------------------------------------- --------------------------------------------------

SCOTT delete from "SCOTT"."T4" insert into "SCOTT"."T4"

where values

"ID" = 4 and "ID" = 4,

"NAME" = 'dsds' and "NAME" = 'dsds',

"KEY" = 13 and "KEY" = 13;

ROWID = 'AAAE+sAAEAAAADPAAD';

SCOTT update "SCOTT"."T4" update "SCOTT"."T4"

set set

"NAME" = 'sdfs' "NAME" = 'ccc'

where where

"NAME" = 'ccc' and "NAME" = 'sdfs' and

ROWID = 'AAAE+sAAEAAAADPAAC'; ROWID = 'AAAE+sAAEAAAADPAAC';

SQL> EXECUTE DBMS_LOGMNR.END_LOGMNR();

复制总结

1、知道操作大概的时间段,可以采用自动添加日志的方式分析:

alter session set nls_date_language=‘AMERICAN’;

ALTER SESSION SET NLS_DATE_FORMAT=‘YYYY-MM-DD HH24:MI:SS’;

EXECUTE DBMS_LOGMNR.START_LOGMNR(-

starttime => ‘2022-03-29 20:47:04’, -

endtime => ‘2022-03-29 20:48:15’, -

OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

DBMS_LOGMNR.CONTINUOUS_MINE + -

DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

DBMS_LOGMNR.PRINT_PRETTY_SQL + -

DBMS_LOGMNR.SKIP_CORRUPTION);

set linesize 200

set pagesize 200

col USR for a10

col sql_redo for a50

col SQL_UNDO for a50

SELECT USERNAME AS usr,SQL_REDO,SQL_UNDO FROM

V$LOGMNR_CONTENTS

WHERE SEG_OWNER =‘SCOTT’;

EXECUTE DBMS_LOGMNR.END_LOGMNR();

或者

EXECUTE DBMS_LOGMNR.START_LOGMNR(-

starttime => to_date(‘2022-03-29 20:47:04’,‘YYYY-MM-DD HH24:MI:SS’), -

endtime => to_date(‘2022-03-29 20:48:15’,‘YYYY-MM-DD HH24:MI:SS’), -

OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

DBMS_LOGMNR.CONTINUOUS_MINE + -

DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

DBMS_LOGMNR.PRINT_PRETTY_SQL + -

DBMS_LOGMNR.SKIP_CORRUPTION);

主要是参数DBMS_LOGMNR.CONTINUOUS_MINE起作用。

2、手动添加日志文件的方式,这就比较常见我们平常做一些测试,明确知道,需要添加哪些日志文件。

EXECUTE DBMS_LOGMNR.ADD_LOGFILE(LOGFILENAME => ‘+DATADG/orcl/onlinelog/group_2.258.1023146137’, OPTIONS => DBMS_LOGMNR.NEW);

EXECUTE DBMS_LOGMNR.ADD_LOGFILE(LOGFILENAME => ‘+DATADG/orcl/onlinelog/group_3.259.1023146137’,OPTIONS => DBMS_LOGMNR.ADDFILE);

EXECUTE DBMS_LOGMNR.START_LOGMNR(OPTIONS => DBMS_LOGMNR.DICT_FROM_ONLINE_CATALOG + -

DBMS_LOGMNR.COMMITTED_DATA_ONLY + -

DBMS_LOGMNR.PRINT_PRETTY_SQL + -

DBMS_LOGMNR.SKIP_CORRUPTION);

set linesize 200

set pagesize 200

col USR for a10

col sql_redo for a50

col SQL_UNDO for a50

SELECT USERNAME AS usr,SQL_REDO,SQL_UNDO FROM

V$LOGMNR_CONTENTS

WHERE SEG_OWNER =‘SCOTT’;

EXECUTE DBMS_LOGMNR.END_LOGMNR();

3、DBMS_LOGMNR.START_LOGMNR程序启动LogMiner。DBMS_LOGMNR.START_LOGMNR启动选项,可以控制VLOGMNR_CONTENTS输出格式,你必须先使用DBMS_LOGMNR.START_LOGMNR启动分析程序,再查询VLOGMNR_CONTENTS视图,上面我们对比较重要的几个参数进行了测试。最佳实践就如1,2所示即可。参数意思如下:

选择字典的方式:

DICT_FROM_ONLINE_CATALOG-使用在线目录

DICT_FROM_REDO_LOGS-解析数据字典到重做日志中

其他:

CONTINUOUS_MINE-LOGMNR自动搜索控制文件。通过指定SCN或者TIME的范围,来找出对应的日志文件自动添加。

COMMITTED_DATA_ONLY-“仅显示已提交的事务”

SKIP_CORRUPTION-“跳过重做失败”

NO_SQL_DELIMITER-“格式化重新执行的SQL语句”

PRINT_PRETTY_SQL-“格式化可读性返回数据的外观”

NO_ROWID_IN_STMT-去掉SQL_REDO中的rowid,避免造成逻辑错误

DDL_DICT_TRACKING-跟踪显示DDL语句,选项只能用在字典在redo中和flat文件,online catalog是不支持的。11204默认不适用此选项,DDL也是可以跟踪到的

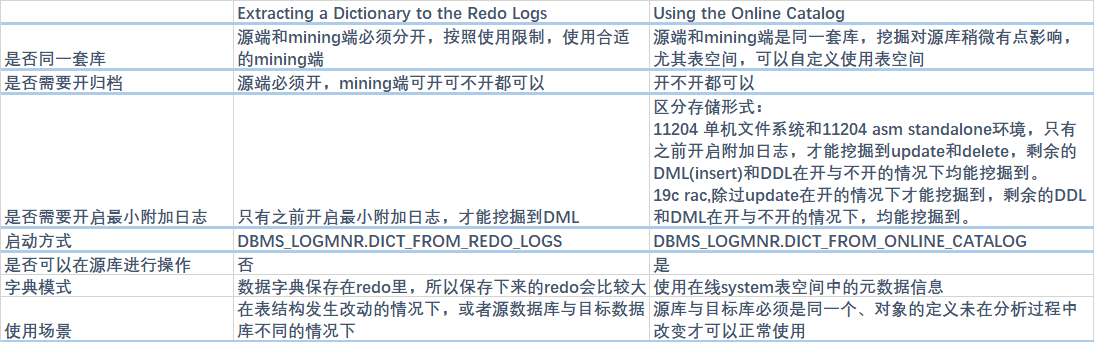

4、总结下Extracting a Dictionary to the Redo Logs与Using the Online Catalog 两种常用数据字典的使用场景与限制。

5、其实还有第三种数据字典的方式,提取LogMiner字典到一个平面文件。为了与以前的版本向后兼容,这个选项被保留。该选项不保证事务一致性。Oracle建议您使用联机目录,或者使用重做日志文件提取字典。在这里就不测试了。