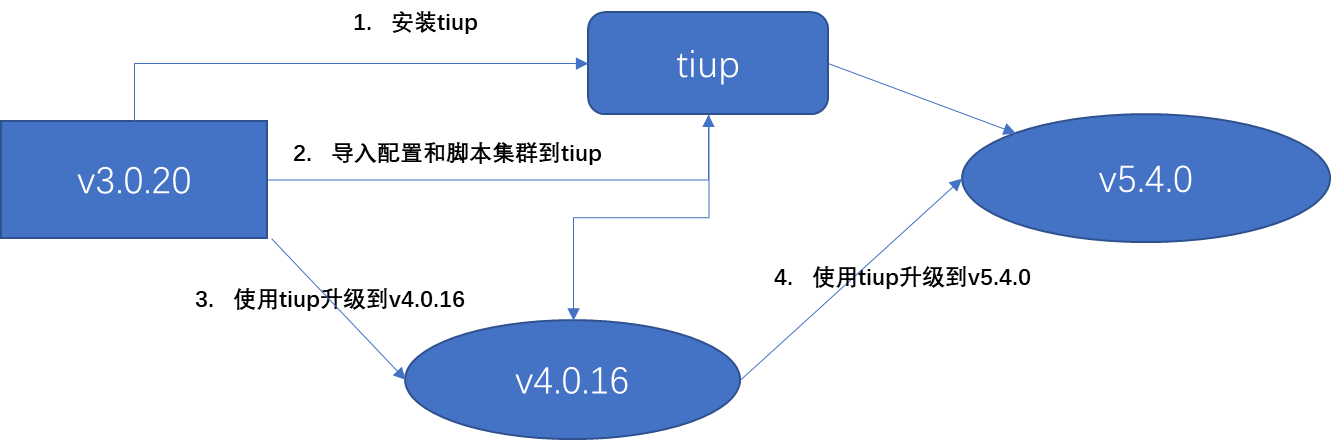

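0. TiDB upgrade notes

TiDB 3.0 clusters were usually managed with tidb-ansible, while later versions are managed with TiUP, so TiUP and its cluster component must be installed first.

TiDB 3.0 cannot be upgraded directly to 5.4. Upgrade to 4.0 first, then from 4.0 to 5.4.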
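The version rule above can be written down as a small planning helper. This is a minimal sketch, not part of TiUP: `next_hop` and `plan_upgrade` are hypothetical names, v4.0.16 is hard-coded only because it is the intermediate release used in this article, and only the 3.x to 5.x rule stated here is encoded. The helper prints the `tiup cluster upgrade` commands without running them; steps such as the configuration cleanup in section 4.1 still have to happen between the two hops.

```shell
# Hypothetical helper (not a TiUP command): given CURRENT and TARGET versions,
# print the version to upgrade to next. Encodes only this article's rule:
# a 3.x cluster must pass through 4.0 before going to 5.x.
next_hop() {
  local cur_major tgt_major
  cur_major="${1#v}"; cur_major="${cur_major%%.*}"
  tgt_major="${2#v}"; tgt_major="${tgt_major%%.*}"
  if [ "$cur_major" -le 3 ] 2>/dev/null && [ "$tgt_major" -ge 5 ] 2>/dev/null; then
    echo "v4.0.16"   # intermediate release used in this article (assumption)
  else
    echo "$2"
  fi
}

# Print one `tiup cluster upgrade` command per hop; nothing is executed.
plan_upgrade() {
  local cluster="$1" cur="$2" tgt="$3" hop
  while [ "$cur" != "$tgt" ]; do
    hop="$(next_hop "$cur" "$tgt")"
    echo "tiup cluster upgrade $cluster $hop"
    cur="$hop"
  done
}

# Example:
#   plan_upgrade test-cluster v3.0.20 v5.4.0
# prints:
#   tiup cluster upgrade test-cluster v4.0.16
#   tiup cluster upgrade test-cluster v5.4.0
```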

1. Install TiUP and TiUP cluster

```
$ curl --proto '=https' --tlsv1.2 -sSf https://tiup-mirrors.pingcap.com/install.sh | sh
$ source ~/.bash_profile    # or open a new shell; use the profile file the installer reports
$ tiup cluster
```
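The installer puts `tiup` on the PATH through the shell profile, so a shell that was opened before the install may not find it. A guard like the following fails early with a readable message instead; `require_cmd` is a hypothetical helper, not part of TiUP.

```shell
# Hypothetical helper: return non-zero with a message if a command is not on PATH.
require_cmd() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "missing command: $1 (open a new shell or source the profile the installer modified)" >&2
    return 1
  fi
}

# Usage:
#   require_cmd tiup && tiup cluster list
```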

2. Import the tidb-ansible cluster into TiUP

```
[tidb@tidbser1 ~]$ tiup cluster import -d /home/tidb/tidb-ansible
tiup is checking updates for component cluster ...timeout!
Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.9.4/tiup-cluster /home/tidb/.tiup/components/cluster/v1.9.4/tiup-cluster import -d /home/tidb/tidb-ansible
Found inventory file /home/tidb/tidb-ansible/inventory.ini, parsing...
Found cluster "test-cluster" (v3.0.20), deployed with user tidb.
TiDB-Ansible and TiUP Cluster can NOT be used together, please DO NOT try to use ansible to manage the imported cluster anymore to avoid metadata conflict.
The ansible directory will be moved to /home/tidb/.tiup/storage/cluster/clusters/test-cluster/ansible-backup after import.
Do you want to continue? [y/N]: (default=N) y
Prepared to import TiDB v3.0.20 cluster test-cluster.
Do you want to continue? [y/N]:(default=N) y
Imported 2 TiDB node(s).
Imported 2 TiKV node(s).
Imported 2 PD node(s).
Imported 1 monitoring node(s).
Imported 1 Alertmanager node(s).
Imported 1 Grafana node(s).
Imported 1 Pump node(s).
Imported 1 Drainer node(s).
Copying config file(s) of pd...
Copying config file(s) of tikv...
Copying config file(s) of pump...
Copying config file(s) of tidb...
Copying config file(s) of tiflash...
Copying config file(s) of drainer...
Copying config file(s) of cdc...
Copying config file(s) of prometheus...
Copying config file(s) of grafana...
Copying config file(s) of alertmanager...
Copying config file(s) of tispark...
Copying config file(s) of tispark...

+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa.pub
+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa.pub
+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa.pub
+ [ Serial ] - UserSSH: user=tidb, host=192.168.40.62
+ [ Serial ] - CopyFile: remote=192.168.40.62:/u01/deploy2/conf/pd.toml, local=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ansible-imported-configs/pd-192.168.40.62-2381.toml
+ [ Serial ] - UserSSH: user=tidb, host=192.168.40.62
+ [ Serial ] - CopyFile: remote=192.168.40.62:/u01/deploy/pump/conf/pump.toml, local=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ansible-imported-configs/pump-192.168.40.62-8250.toml
+ [ Serial ] - UserSSH: user=tidb, host=192.168.40.62
+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa.pub
+ [ Serial ] - UserSSH: user=tidb, host=192.168.40.62
+ [ Serial ] - CopyFile: remote=192.168.40.62:/u01/deploy2/conf/tikv.toml, local=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ansible-imported-configs/tikv-192.168.40.62-20161.toml
+ [ Serial ] - CopyFile: remote=192.168.40.62:/u01/deploy/conf/tikv.toml, local=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ansible-imported-configs/tikv-192.168.40.62-20160.toml
+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa.pub
+ [ Serial ] - UserSSH: user=tidb, host=192.168.40.62
+ [ Serial ] - CopyFile: remote=192.168.40.62:/u01/deploy/conf/pd.toml, local=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ansible-imported-configs/pd-192.168.40.62-2379.toml
+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa.pub
+ [ Serial ] - UserSSH: user=tidb, host=192.168.40.62
+ [ Serial ] - CopyFile: remote=192.168.40.62:/u01/deploy/conf/tidb.toml, local=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ansible-imported-configs/tidb-192.168.40.62-4000.toml
+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa.pub
+ [ Serial ] - UserSSH: user=tidb, host=192.168.40.62
+ [ Serial ] - CopyFile: remote=192.168.40.62:/u01/deploy2/conf/tidb.toml, local=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ansible-imported-configs/tidb-192.168.40.62-4001.toml
+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa.pub
+ [ Serial ] - UserSSH: user=tidb, host=192.168.40.62
+ [ Serial ] - CopyFile: remote=192.168.40.62:/u01/deploy/drainer/conf/drainer.toml, local=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ansible-imported-configs/drainer-192.168.40.62-8249.toml
Finished copying configs.
```
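`tiup cluster import` reports how many instances of each role it picked up (2 TiDB, 2 TiKV, 2 PD, and so on in the log above). To cross-check those numbers against the original inventory, a sketch like the one below counts the host lines under one section of an Ansible INI file. `count_hosts` is hypothetical; it assumes the usual tidb-ansible section names such as `tidb_servers`, `tikv_servers` and `pd_servers`, one host per line, and `#`/`;` comments. Because the import moves the ansible directory, the usage example assumes `inventory.ini` keeps its name inside the `ansible-backup` copy.

```shell
# Hypothetical helper: count host entries under [SECTION] in an Ansible INI inventory.
# Usage: count_hosts FILE SECTION
count_hosts() {
  awk -v sec="[$2]" '
    /^[ \t]*$/    { next }                      # skip blank lines
    /^[ \t]*[#;]/ { next }                      # skip comment lines
    /^\[/         { in_sec = ($1 == sec); next } # section header: are we in SECTION?
    in_sec        { n++ }                       # any other line is a host entry
    END           { print n + 0 }
  ' "$1"
}

# Usage (directory taken from the import log above):
#   inv=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ansible-backup/inventory.ini
#   for s in tidb_servers tikv_servers pd_servers; do
#     echo "$s: $(count_hosts "$inv" "$s")"
#   done
```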

3. Upgrade TiDB to v4.0.16

```
[tidb@tidbser1 config-cache]$ tiup cluster upgrade test-cluster v4.0.16
tiup is checking updates for component cluster ...
Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.9.4/tiup-cluster /home/tidb/.tiup/components/cluster/v1.9.4/tiup-cluster upgrade test-cluster v4.0.16
This operation will upgrade tidb v3.0.20 cluster test-cluster to v4.0.16.
Do you want to continue? [y/N]:(default=N) y
Upgrading cluster...
+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa.pub
+ [Parallel] - UserSSH: user=tidb, host=192.168.40.62
+ [Parallel] - UserSSH: user=tidb, host=192.168.40.62
+ [Parallel] - UserSSH: user=tidb, host=192.168.40.62
+ [Parallel] - UserSSH: user=tidb, host=192.168.40.62
+ [Parallel] - UserSSH: user=tidb, host=192.168.40.62
+ [Parallel] - UserSSH: user=tidb, host=192.168.40.62
+ [Parallel] - UserSSH: user=tidb, host=192.168.40.62
+ [Parallel] - UserSSH: user=tidb, host=192.168.40.62
+ [Parallel] - UserSSH: user=tidb, host=192.168.40.62
+ [Parallel] - UserSSH: user=tidb, host=192.168.40.62
+ [Parallel] - UserSSH: user=tidb, host=192.168.40.62
+ [ Serial ] - Download: component=drainer, version=v4.0.16, os=linux, arch=amd64
+ [ Serial ] - Download: component=tikv, version=v4.0.16, os=linux, arch=amd64
+ [ Serial ] - Download: component=pump, version=v4.0.16, os=linux, arch=amd64
+ [ Serial ] - Download: component=tidb, version=v4.0.16, os=linux, arch=amd64
+ [ Serial ] - Download: component=pd, version=v4.0.16, os=linux, arch=amd64
+ [ Serial ] - Download: component=prometheus, version=v4.0.16, os=linux, arch=amd64
+ [ Serial ] - Download: component=grafana, version=v4.0.16, os=linux, arch=amd64
+ [ Serial ] - Download: component=alertmanager, version=, os=linux, arch=amd64
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy/pump/data.pump'
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy/data.pd'
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy2/data'
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy/data'
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy2/data.pd'
+ [ Serial ] - BackupComponent: component=tikv, currentVersion=v3.0.20, remote=192.168.40.62:/u01/deploy
+ [ Serial ] - BackupComponent: component=tikv, currentVersion=v3.0.20, remote=192.168.40.62:/u01/deploy2
+ [ Serial ] - BackupComponent: component=pd, currentVersion=v3.0.20, remote=192.168.40.62:/u01/deploy
+ [ Serial ] - BackupComponent: component=pd, currentVersion=v3.0.20, remote=192.168.40.62:/u01/deploy2
+ [ Serial ] - CopyComponent: component=tikv, version=v4.0.16, remote=192.168.40.62:/u01/deploy2 os=linux, arch=amd64
+ [ Serial ] - CopyComponent: component=tikv, version=v4.0.16, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - CopyComponent: component=pd, version=v4.0.16, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - CopyComponent: component=pd, version=v4.0.16, remote=192.168.40.62:/u01/deploy2 os=linux, arch=amd64
+ [ Serial ] - BackupComponent: component=pump, currentVersion=v3.0.20, remote=192.168.40.62:/u01/deploy/pump
+ [ Serial ] - CopyComponent: component=pump, version=v4.0.16, remote=192.168.40.62:/u01/deploy/pump os=linux, arch=amd64
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/pump-8250.service, deploy_dir=/u01/deploy/pump, data_dir=[/u01/deploy/pump/data.pump], log_dir=/u01/deploy/pump/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/pd-2381.service, deploy_dir=/u01/deploy2, data_dir=[/u01/deploy2/data.pd], log_dir=/u01/deploy2/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/pd-2379.service, deploy_dir=/u01/deploy, data_dir=[/u01/deploy/data.pd], log_dir=/u01/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - Mkdir: host=192.168.40.62, directories=''
+ [ Serial ] - BackupComponent: component=tidb, currentVersion=v3.0.20, remote=192.168.40.62:/u01/deploy
+ [ Serial ] - CopyComponent: component=tidb, version=v4.0.16, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/tikv-20160.service, deploy_dir=/u01/deploy, data_dir=[/u01/deploy/data], log_dir=/u01/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/tikv-20161.service, deploy_dir=/u01/deploy2, data_dir=[/u01/deploy2/data], log_dir=/u01/deploy2/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - Mkdir: host=192.168.40.62, directories=''
+ [ Serial ] - BackupComponent: component=tidb, currentVersion=v3.0.20, remote=192.168.40.62:/u01/deploy2
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy/drainer/data.drainer'
+ [ Serial ] - CopyComponent: component=tidb, version=v4.0.16, remote=192.168.40.62:/u01/deploy2 os=linux, arch=amd64
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/tidb-4000.service, deploy_dir=/u01/deploy, data_dir=[], log_dir=/u01/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - BackupComponent: component=drainer, currentVersion=v3.0.20, remote=192.168.40.62:/u01/deploy/drainer
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy/prometheus2.0.0.data.metrics'
+ [ Serial ] - Mkdir: host=192.168.40.62, directories=''
+ [ Serial ] - CopyComponent: component=grafana, version=v4.0.16, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - CopyComponent: component=drainer, version=v4.0.16, remote=192.168.40.62:/u01/deploy/drainer os=linux, arch=amd64
+ [ Serial ] - CopyComponent: component=prometheus, version=v4.0.16, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/tidb-4001.service, deploy_dir=/u01/deploy2, data_dir=[], log_dir=/u01/deploy2/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/drainer-8249.service, deploy_dir=/u01/deploy/drainer, data_dir=[/u01/deploy/drainer/data.drainer], log_dir=/u01/deploy/drainer/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy/data.alertmanager'
+ [ Serial ] - CopyComponent: component=alertmanager, version=, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - BackupComponent: component=prometheus, currentVersion=v3.0.20, remote=192.168.40.62:/u01/deploy
+ [ Serial ] - BackupComponent: component=grafana, currentVersion=v3.0.20, remote=192.168.40.62:/u01/deploy
+ [ Serial ] - CopyComponent: component=prometheus, version=v4.0.16, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - CopyComponent: component=grafana, version=v4.0.16, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - BackupComponent: component=alertmanager, currentVersion=v3.0.20, remote=192.168.40.62:/u01/deploy
+ [ Serial ] - CopyComponent: component=alertmanager, version=, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/prometheus-9090.service, deploy_dir=/u01/deploy, data_dir=[/u01/deploy/prometheus2.0.0.data.metrics], log_dir=/u01/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/grafana-3000.service, deploy_dir=/u01/deploy, data_dir=[], log_dir=/u01/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/alertmanager-9093.service, deploy_dir=/u01/deploy, data_dir=[/u01/deploy/data.alertmanager], log_dir=/u01/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - UpgradeCluster
Upgrading component pd
Restarting instance 192.168.40.62:2381
Restart instance 192.168.40.62:2381 success
Restarting instance 192.168.40.62:2379
Restart instance 192.168.40.62:2379 success
Upgrading component tikv
Evicting 91 leaders from store 192.168.40.62:20161...
Still waitting for 91 store leaders to transfer...
Still waitting for 91 store leaders to transfer...
...
Ignore evicting store leader from 192.168.40.62:20161, error evicting store leader from 192.168.40.62:20161, operation timed out after 5m0s
Restarting instance 192.168.40.62:20161
Restart instance 192.168.40.62:20161 success
Restarting instance 192.168.40.62:20160
Restart instance 192.168.40.62:20160 success
Upgrading component pump
Restarting instance 192.168.40.62:8250
Restart instance 192.168.40.62:8250 success
Upgrading component tidb
Restarting instance 192.168.40.62:4000
Restart instance 192.168.40.62:4000 success
Restarting instance 192.168.40.62:4001
Restart instance 192.168.40.62:4001 success
Upgrading component drainer
Restarting instance 192.168.40.62:8249
Restart instance 192.168.40.62:8249 success
Upgrading component prometheus
Restarting instance 192.168.40.62:9090
Restart instance 192.168.40.62:9090 success
Upgrading component grafana
Restarting instance 192.168.40.62:3000
Restart instance 192.168.40.62:3000 success
Upgrading component alertmanager
Restarting instance 192.168.40.62:9093
Restart instance 192.168.40.62:9093 success
Stopping component node_exporter
Stopping instance 192.168.40.62
Stop 192.168.40.62 success
Stopping component blackbox_exporter
Stopping instance 192.168.40.62
Stop 192.168.40.62 success
Starting component node_exporter
Starting instance 192.168.40.62
Start 192.168.40.62 success
Starting component blackbox_exporter
Starting instance 192.168.40.62
Start 192.168.40.62 success
Upgraded cluster `test-cluster` successfully
```
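In this run TiUP could not move the 91 leaders off store 192.168.40.62:20161 within 5 minutes, logged `Ignore evicting store leader ...`, and restarted the instance anyway, so requests served by those leaders may have seen a short interruption. To spot this in longer runs, one option is to keep the upgrade output in a file (for example `tiup cluster upgrade test-cluster v4.0.16 2>&1 | tee upgrade.log`) and scan it with a helper like the hypothetical `eviction_timeouts` below, which relies only on the message text visible in the log above.

```shell
# Hypothetical helper: list TiKV stores whose leader eviction timed out during
# `tiup cluster upgrade`, based on the "Ignore evicting store leader from ..." message.
eviction_timeouts() {
  grep -o 'Ignore evicting store leader from [^,]*' "$1" |
    sed 's/^Ignore evicting store leader from //' |
    sort -u
}

# Usage:
#   eviction_timeouts upgrade.log    # for the run above this prints 192.168.40.62:20161
```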

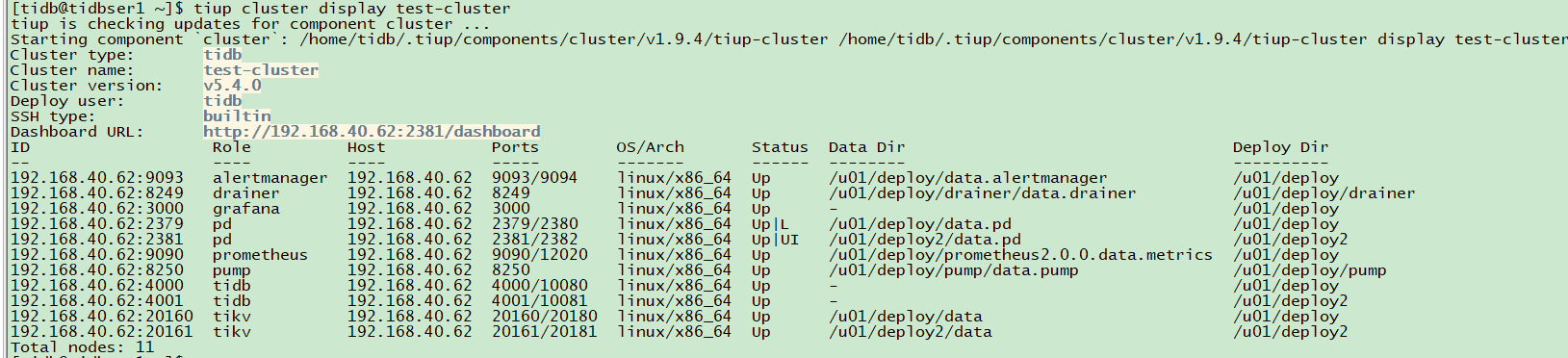

4. Upgrade TiDB to v5.4.0

4.1. Remove parameters that v5.4.0 does not support

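The `pessimistic-txn.enabled` parameter is not supported in v5.4.0 and must be removed. Delete it in the editor opened by `edit-config`, then reload the cluster so the change takes effect:

```
tiup cluster edit-config test-cluster
tiup cluster reload test-cluster
```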
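Before upgrading, it can help to confirm that the `pessimistic-txn.enabled` entry is really gone from the stored configuration. A minimal sketch, assuming the key appears as a flattened dotted entry under `server_configs`; the pattern accepts both `enable` and `enabled` in case the stored key is spelled differently, but a nested `pessimistic-txn:` block would not be caught. `check_removed_keys` is a hypothetical helper that reads the configuration on stdin, for example from `tiup cluster show-config test-cluster`.

```shell
# Hypothetical helper: read a TiUP cluster config on stdin and fail if a
# pessimistic-txn.enable(d) entry is still present.
check_removed_keys() {
  if grep -nE '^[[:space:]]*pessimistic-txn\.enabled?[[:space:]]*:'; then
    echo "remove the entries above with 'tiup cluster edit-config' before upgrading" >&2
    return 1
  fi
}

# Usage:
#   tiup cluster show-config test-cluster | check_removed_keys
```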

4.2. Check whether the cluster meets the upgrade requirements

```
[tidb@tidbser1 manifests]$ tiup cluster check test-cluster --cluster
tiup is checking updates for component cluster ...
Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.9.4/tiup-cluster /home/tidb/.tiup/components/cluster/v1.9.4/tiup-cluster check test-cluster --cluster
+ Download necessary tools
  - Downloading check tools for linux/amd64 ... Done
+ Collect basic system information
+ Collect basic system information
  - Getting system info of 192.168.40.62:22 ... Done
+ Check system requirements
+ Check system requirements
+ Check system requirements
  - Checking node 192.168.40.62 ... Done
  - Checking node 192.168.40.62 ... Done
  - Checking node 192.168.40.62 ... Done
  - Checking node 192.168.40.62 ... Done
  - Checking node 192.168.40.62 ... Done
  - Checking node 192.168.40.62 ... Done
  - Checking node 192.168.40.62 ... Done
  - Checking node 192.168.40.62 ... Done
  - Checking node 192.168.40.62 ... Done
  - Checking node 192.168.40.62 ... Done
  - Checking node 192.168.40.62 ... Done
+ Cleanup check files
  - Cleanup check files on 192.168.40.62:22 ... Done
  - Cleanup check files on 192.168.40.62:22 ... Done
  - Cleanup check files on 192.168.40.62:22 ... Done
  - Cleanup check files on 192.168.40.62:22 ... Done
  - Cleanup check files on 192.168.40.62:22 ... Done
  - Cleanup check files on 192.168.40.62:22 ... Done
  - Cleanup check files on 192.168.40.62:22 ... Done
  - Cleanup check files on 192.168.40.62:22 ... Done
  - Cleanup check files on 192.168.40.62:22 ... Done
  - Cleanup check files on 192.168.40.62:22 ... Done
  - Cleanup check files on 192.168.40.62:22 ... Done
Node           Check         Result  Message
----           -----         ------  -------
192.168.40.62  os-version    Pass    OS is Red Hat Enterprise Linux Server 7.6 (Maipo) 7.6
192.168.40.62  cpu-governor  Warn    Unable to determine current CPU frequency governor policy
192.168.40.62  memory        Pass    memory size is 16384MB
192.168.40.62  selinux       Pass    SELinux is disabled
192.168.40.62  command       Pass    numactl: policy: default
192.168.40.62  permission    Pass    /u01/deploy2 is writable
192.168.40.62  permission    Pass    /u01/deploy/data is writable
192.168.40.62  permission    Pass    /u01/deploy/data.pd is writable
192.168.40.62  permission    Pass    /u01/deploy2/data is writable
192.168.40.62  permission    Pass    /u01/deploy2/data.pd is writable
192.168.40.62  permission    Pass    /u01/deploy/prometheus2.0.0.data.metrics is writable
192.168.40.62  permission    Pass    /u01/deploy is writable
192.168.40.62  permission    Pass    /u01/deploy/pump is writable
192.168.40.62  permission    Pass    /u01/deploy/pump/data.pump is writable
192.168.40.62  permission    Pass    /u01/deploy/drainer is writable
192.168.40.62  permission    Pass    /u01/deploy/drainer/data.drainer is writable
192.168.40.62  permission    Pass    /u01/deploy/data.alertmanager is writable
192.168.40.62  network       Pass    network speed of ens33 is 1000MB
192.168.40.62  network       Pass    network speed of ens32 is 1000MB
192.168.40.62  disk          Fail    multiple components tikv:/u01/deploy2/data,tikv:/u01/deploy/data are using the same partition 192.168.40.62:/u01 as data dir
192.168.40.62  thp           Pass    THP is disabled
192.168.40.62  cpu-cores     Pass    number of CPU cores / threads: 8
Checking region status of the cluster test-cluster...
All regions are healthy.
```
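In this check every item passes except two: `cpu-governor` is only a warning, and `disk` fails because both TiKV instances keep their data on the same `/u01` partition, which is expected on a single-host test cluster like this one but which TiUP flags as a failure. When the result table is long, a filter such as the hypothetical `check_failures` below prints only the non-passing rows and returns non-zero if any row is `Fail`. It assumes the whitespace-separated `Node Check Result Message` layout shown above, with the result in the third column.

```shell
# Hypothetical filter: read `tiup cluster check` output on stdin, print only the
# Warn/Fail rows, and exit non-zero if at least one row is Fail.
check_failures() {
  awk '
    $3 == "Warn" || $3 == "Fail" { print }
    $3 == "Fail"                 { fails++ }
    END                          { exit (fails > 0) }
  '
}

# Usage:
#   tiup cluster check test-cluster --cluster | check_failures
```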

4.3. Upgrade TiDB

[tidb@tidbser1 data]$ tiup cluster upgrade test-cluster v5.4.0 tiup is checking updates for component cluster ... Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.9.4/tiup-cluster /home/tidb/.tiup/components/cluster/v1.9.4/tiup-cluster upgrade test-cluster v5.4.0 This operation will upgrade tidb v4.0.16 cluster test-cluster to v5.4.0. Do you want to continue? [y/N]:(default=N) y Upgrading cluster... + [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/ssh/id_rsa.pub + [Parallel] - UserSSH: user=tidb, host=192.168.40.62 + [Parallel] - UserSSH: user=tidb, host=192.168.40.62 + [Parallel] - UserSSH: user=tidb, host=192.168.40.62 + [Parallel] - UserSSH: user=tidb, host=192.168.40.62 + [Parallel] - UserSSH: user=tidb, host=192.168.40.62 + [Parallel] - UserSSH: user=tidb, host=192.168.40.62 + [Parallel] - UserSSH: user=tidb, host=192.168.40.62 + [Parallel] - UserSSH: user=tidb, host=192.168.40.62 + [Parallel] - UserSSH: user=tidb, host=192.168.40.62 + [Parallel] - UserSSH: user=tidb, host=192.168.40.62 + [Parallel] - UserSSH: user=tidb, host=192.168.40.62 + [ Serial ] - Download: component=drainer, version=v5.4.0, os=linux, arch=amd64 + [ Serial ] - Download: component=tikv, version=v5.4.0, os=linux, arch=amd64 + [ Serial ] - Download: component=pump, version=v5.4.0, os=linux, arch=amd64 + [ Serial ] - Download: component=tidb, version=v5.4.0, os=linux, arch=amd64 + [ Serial ] - Download: component=pd, version=v5.4.0, os=linux, arch=amd64 failed to download /pd-v5.4.0-linux-amd64.tar.gz(download from https://tiup-mirrors.pingcap.com/pd-v5.4.0-linux-amd64.tar.gz failed: stream error: stream ID 1; INTERNAL_ERROR; received from peer), retrying... 
+ [ Serial ] - Download: component=prometheus, version=v5.4.0, os=linux, arch=amd64 + [ Serial ] - Download: component=grafana, version=v5.4.0, os=linux, arch=amd64 failed to download /pump-v5.4.0-linux-amd64.tar.gz(download from https://tiup-mirrors.pingcap.com/pump-v5.4.0-linux-amd64.tar.gz failed: stream error: stream ID 1; INTERNAL_ERROR; received from peer), retrying... failed to download /tikv-v5.4.0-linux-amd64.tar.gz(download from https://tiup-mirrors.pingcap.com/tikv-v5.4.0-linux-amd64.tar.gz failed: stream error: stream ID 1; INTERNAL_ERROR; received from peer), retrying... failed to download /tidb-v5.4.0-linux-amd64.tar.gz(download from https://tiup-mirrors.pingcap.com/tidb-v5.4.0-linux-amd64.tar.gz failed: stream error: stream ID 1; INTERNAL_ERROR; received from peer), retrying... + [ Serial ] - Download: component=alertmanager, version=, os=linux, arch=amd64 failed to download /prometheus-v5.4.0-linux-amd64.tar.gz(download from https://tiup-mirrors.pingcap.com/prometheus-v5.4.0-linux-amd64.tar.gz failed: stream error: stream ID 1; INTERNAL_ERROR; received from peer), retrying... failed to download /grafana-v5.4.0-linux-amd64.tar.gz(download from https://tiup-mirrors.pingcap.com/grafana-v5.4.0-linux-amd64.tar.gz failed: stream error: stream ID 1; INTERNAL_ERROR; received from peer), retrying... failed to download /tikv-v5.4.0-linux-amd64.tar.gz(download from https://tiup-mirrors.pingcap.com/tikv-v5.4.0-linux-amd64.tar.gz failed: stream error: stream ID 3; INTERNAL_ERROR; received from peer), retrying... failed to download /prometheus-v5.4.0-linux-amd64.tar.gz(download from https://tiup-mirrors.pingcap.com/prometheus-v5.4.0-linux-amd64.tar.gz failed: stream error: stream ID 3; INTERNAL_ERROR; received from peer), retrying... 
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy/pump/data.pump'
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy/data.pd'
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy2/data'
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy/data'
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy2/data.pd'
+ [ Serial ] - BackupComponent: component=tikv, currentVersion=v4.0.16, remote=192.168.40.62:/u01/deploy
+ [ Serial ] - BackupComponent: component=pd, currentVersion=v4.0.16, remote=192.168.40.62:/u01/deploy2
+ [ Serial ] - BackupComponent: component=tikv, currentVersion=v4.0.16, remote=192.168.40.62:/u01/deploy2
+ [ Serial ] - BackupComponent: component=pd, currentVersion=v4.0.16, remote=192.168.40.62:/u01/deploy
+ [ Serial ] - CopyComponent: component=pd, version=v5.4.0, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - CopyComponent: component=tikv, version=v5.4.0, remote=192.168.40.62:/u01/deploy2 os=linux, arch=amd64
+ [ Serial ] - BackupComponent: component=pump, currentVersion=v4.0.16, remote=192.168.40.62:/u01/deploy/pump
+ [ Serial ] - CopyComponent: component=pump, version=v5.4.0, remote=192.168.40.62:/u01/deploy/pump os=linux, arch=amd64
+ [ Serial ] - CopyComponent: component=pd, version=v5.4.0, remote=192.168.40.62:/u01/deploy2 os=linux, arch=amd64
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/pd-2379.service, deploy_dir=/u01/deploy, data_dir=[/u01/deploy/data.pd], log_dir=/u01/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/pump-8250.service, deploy_dir=/u01/deploy/pump, data_dir=[/u01/deploy/pump/data.pump], log_dir=/u01/deploy/pump/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/tikv-20161.service, deploy_dir=/u01/deploy2, data_dir=[/u01/deploy2/data], log_dir=/u01/deploy2/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - CopyComponent: component=tikv, version=v5.4.0, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/pd-2381.service, deploy_dir=/u01/deploy2, data_dir=[/u01/deploy2/data.pd], log_dir=/u01/deploy2/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - Mkdir: host=192.168.40.62, directories=''
+ [ Serial ] - BackupComponent: component=tidb, currentVersion=v4.0.16, remote=192.168.40.62:/u01/deploy
+ [ Serial ] - CopyComponent: component=tidb, version=v5.4.0, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/tikv-20160.service, deploy_dir=/u01/deploy, data_dir=[/u01/deploy/data], log_dir=/u01/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - Mkdir: host=192.168.40.62, directories=''
+ [ Serial ] - BackupComponent: component=tidb, currentVersion=v4.0.16, remote=192.168.40.62:/u01/deploy2
+ [ Serial ] - CopyComponent: component=tidb, version=v5.4.0, remote=192.168.40.62:/u01/deploy2 os=linux, arch=amd64
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy/drainer/data.drainer'
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy/prometheus2.0.0.data.metrics'
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/tidb-4000.service, deploy_dir=/u01/deploy, data_dir=[], log_dir=/u01/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - CopyComponent: component=prometheus, version=v5.4.0, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/tidb-4001.service, deploy_dir=/u01/deploy2, data_dir=[], log_dir=/u01/deploy2/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - BackupComponent: component=drainer, currentVersion=v4.0.16, remote=192.168.40.62:/u01/deploy/drainer
+ [ Serial ] - Mkdir: host=192.168.40.62, directories=''
+ [ Serial ] - CopyComponent: component=grafana, version=v5.4.0, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - BackupComponent: component=prometheus, currentVersion=v4.0.16, remote=192.168.40.62:/u01/deploy
+ [ Serial ] - CopyComponent: component=drainer, version=v5.4.0, remote=192.168.40.62:/u01/deploy/drainer os=linux, arch=amd64
+ [ Serial ] - Mkdir: host=192.168.40.62, directories='/u01/deploy/data.alertmanager'
+ [ Serial ] - CopyComponent: component=prometheus, version=v5.4.0, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/drainer-8249.service, deploy_dir=/u01/deploy/drainer, data_dir=[/u01/deploy/drainer/data.drainer], log_dir=/u01/deploy/drainer/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - CopyComponent: component=alertmanager, version=, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - BackupComponent: component=grafana, currentVersion=v4.0.16, remote=192.168.40.62:/u01/deploy
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/prometheus-9090.service, deploy_dir=/u01/deploy, data_dir=[/u01/deploy/prometheus2.0.0.data.metrics], log_dir=/u01/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - CopyComponent: component=grafana, version=v5.4.0, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - BackupComponent: component=alertmanager, currentVersion=v4.0.16, remote=192.168.40.62:/u01/deploy
+ [ Serial ] - CopyComponent: component=alertmanager, version=, remote=192.168.40.62:/u01/deploy os=linux, arch=amd64
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/alertmanager-9093.service, deploy_dir=/u01/deploy, data_dir=[/u01/deploy/data.alertmanager], log_dir=/u01/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - InitConfig: cluster=test-cluster, user=tidb, host=192.168.40.62, path=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache/grafana-3000.service, deploy_dir=/u01/deploy, data_dir=[], log_dir=/u01/deploy/log, cache_dir=/home/tidb/.tiup/storage/cluster/clusters/test-cluster/config-cache
+ [ Serial ] - UpgradeCluster
Upgrading component pd
Restarting instance 192.168.40.62:2379
Restart instance 192.168.40.62:2379 success
Restarting instance 192.168.40.62:2381
Restart instance 192.168.40.62:2381 success
Upgrading component tikv
Evicting 91 leaders from store 192.168.40.62:20161...
Still waitting for 91 store leaders to transfer...
Ignore evicting store leader from 192.168.40.62:20161, error evicting store leader from 192.168.40.62:20161, operation timed out after 5m0s
Restarting instance 192.168.40.62:20161
Restart instance 192.168.40.62:20161 success
Restarting instance 192.168.40.62:20160
Restart instance 192.168.40.62:20160 success
Upgrading component pump
Restarting instance 192.168.40.62:8250
Restart instance 192.168.40.62:8250 success
Upgrading component tidb
Restarting instance 192.168.40.62:4000
Restart instance 192.168.40.62:4000 success
Restarting instance 192.168.40.62:4001
Restart instance 192.168.40.62:4001 success
Upgrading component drainer
Restarting instance 192.168.40.62:8249
Restart instance 192.168.40.62:8249 success
Upgrading component prometheus
Restarting instance 192.168.40.62:9090
Restart instance 192.168.40.62:9090 success
Upgrading component grafana
Restarting instance 192.168.40.62:3000
Restart instance 192.168.40.62:3000 success
Upgrading component alertmanager
Restarting instance 192.168.40.62:9093
Restart instance 192.168.40.62:9093 success
Stopping component node_exporter
Stopping instance 192.168.40.62
Stop 192.168.40.62 success
Stopping component blackbox_exporter
Stopping instance 192.168.40.62
Stop 192.168.40.62 success
Starting component node_exporter
Starting instance 192.168.40.62
Start 192.168.40.62 success
Starting component blackbox_exporter
Starting instance 192.168.40.62
Start 192.168.40.62 success
Upgraded cluster `test-cluster` successfully

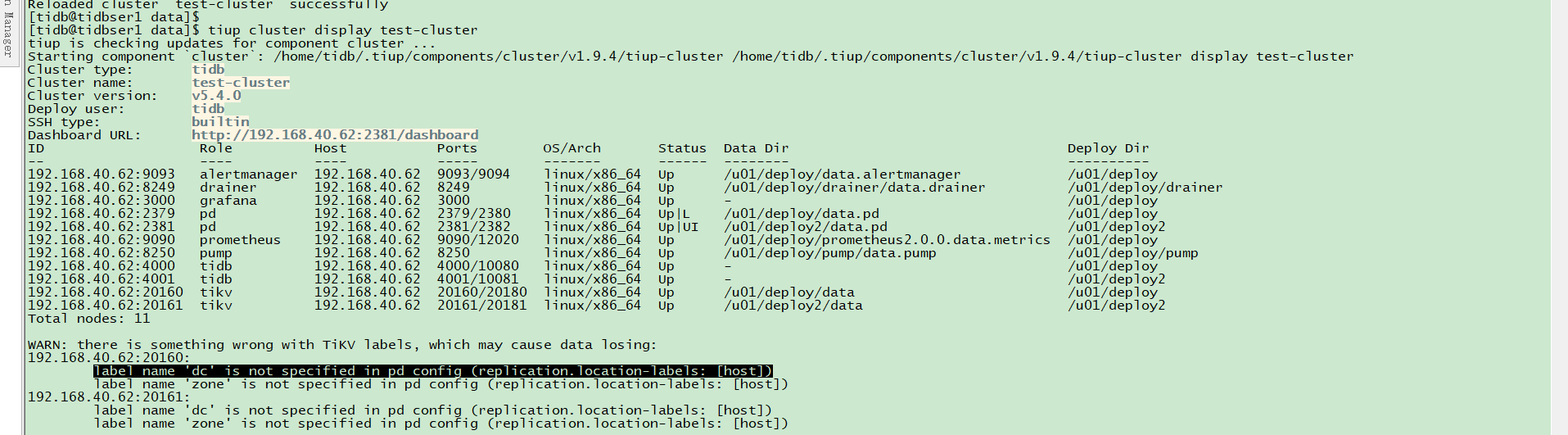

5. Problems encountered

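The problem shown in the screenshot could not be fixed by editing the configuration with tiup (tiup cluster edit-config) and then running reload. It was finally resolved by connecting to PD directly with pd-ctl and setting location-labels there: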

$ pd-ctl -u http://192.168.40.62:2379 -i
» config set location-labels dc,zone,host
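PD's location-labels only names the label keys PD uses to spread replicas. It is meant to match the labels each TiKV store is started with (server.labels in the TiKV configuration), so after changing it, a quick consistency check can help. The Python script below is a minimal sketch of such a check. It assumes you have saved the JSON returned by PD's HTTP API endpoints /pd/api/v1/config/replicate and /pd/api/v1/stores; verify those paths and the field layout against your PD version. The function names and the sample store labels are hypothetical and are not taken from this cluster.

```python
# Hypothetical helper: check that every TiKV store carries each label key
# listed in PD's location-labels. The JSON layouts are assumed to resemble
# PD's /pd/api/v1/config/replicate and /pd/api/v1/stores responses.
import json
from typing import Dict, List


def parse_location_labels(replication_json: str) -> List[str]:
    """Return the configured location-labels as a list of label keys."""
    cfg = json.loads(replication_json)
    raw = cfg.get("location-labels", "")
    # Assumption: PD renders the value as a comma-separated string,
    # but a JSON list is accepted too.
    items = raw if isinstance(raw, list) else raw.split(",")
    return [item.strip() for item in items if item.strip()]


def stores_missing_labels(stores_json: str, required: List[str]) -> Dict[str, List[str]]:
    """Map each store address to the required label keys it does not have."""
    data = json.loads(stores_json)
    missing: Dict[str, List[str]] = {}
    for entry in data.get("stores", []):
        store = entry["store"]
        keys = {label["key"] for label in store.get("labels", [])}
        lacking = [key for key in required if key not in keys]
        if lacking:
            missing[store["address"]] = lacking
    return missing


if __name__ == "__main__":
    # Hypothetical sample data; the real labels of this cluster are not shown.
    replication = '{"max-replicas": 3, "location-labels": "dc,zone,host"}'
    stores = json.dumps({
        "count": 2,
        "stores": [
            {"store": {"id": 1, "address": "192.168.40.62:20160",
                       "labels": [{"key": "dc", "value": "dc1"},
                                  {"key": "zone", "value": "z1"},
                                  {"key": "host", "value": "h1"}]}},
            {"store": {"id": 4, "address": "192.168.40.62:20161",
                       "labels": [{"key": "host", "value": "h2"}]}},
        ],
    })
    labels = parse_location_labels(replication)
    print(labels)                                 # ['dc', 'zone', 'host']
    print(stores_missing_labels(stores, labels))  # {'192.168.40.62:20161': ['dc', 'zone']}
```

An empty result means every store exposes all configured label keys. A non-empty result points at stores whose server.labels likely do not line up with the location-labels value.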

Last modified: 2022-04-29 11:07:29


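[Copyright notice] This article is original content by a Motianlun (墨天轮) user. Any repost must credit the source (Motianlun), the article link, the author, and other basic information; otherwise the author and Motianlun reserve the right to pursue liability.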
