在最大的基友交友网站GitHub上,来自世界各地的开源开发者们进行着数百万个项目。这里每天都有大量的代码文档、修复和提交BUG之类的事件信息产生。

而GitHub Archive项目,正是搜集了这些GitHub timeline上记录的公共数据,并对其存档,使其易于访问,以进行进一步分析。

项目地址:

https://www.gharchive.org/

GitHub Archive数据包含了自2011年以来GitHub存储的所有事件。

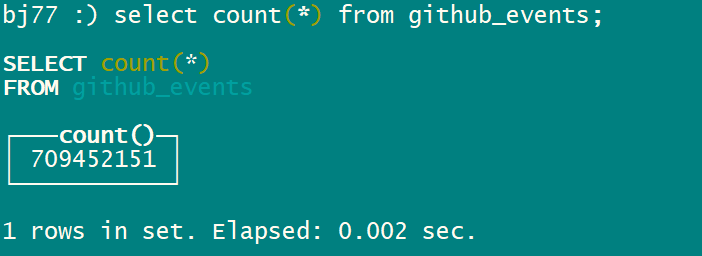

记录的数据量有31亿条,总数据压缩后为73GB。

这样的数据集不放在ch里真是浪费了,下面就看看里面有啥好玩的东西,一起来数星星吧。

1 环境准备:

数据的获取方式有以下几种,没有试验环境的可以直接第三种。

方式1:下载文件载入数据集

# 1. 下载数据文件

wget https://datasets.clickhouse.tech/github_events_v2.native.xz

# 2. 建表:

CREATE TABLE test.github_events( file_time DateTime, event_type Enum('CommitCommentEvent' = 1, 'CreateEvent' = 2, 'DeleteEvent' = 3, 'ForkEvent' = 4, 'GollumEvent' = 5, 'IssueCommentEvent' = 6, 'IssuesEvent' = 7, 'MemberEvent' = 8, 'PublicEvent' = 9, 'PullRequestEvent' = 10, 'PullRequestReviewCommentEvent' = 11, 'PushEvent' = 12, 'ReleaseEvent' = 13, 'SponsorshipEvent' = 14, 'WatchEvent' = 15, 'GistEvent' = 16, 'FollowEvent' = 17, 'DownloadEvent' = 18, 'PullRequestReviewEvent' = 19, 'ForkApplyEvent' = 20, 'Event' = 21, 'TeamAddEvent' = 22), actor_login LowCardinality(String), repo_name LowCardinality(String), created_at DateTime, updated_at DateTime, action Enum('none' = 0, 'created' = 1, 'added' = 2, 'edited' = 3, 'deleted' = 4, 'opened' = 5, 'closed' = 6, 'reopened' = 7, 'assigned' = 8, 'unassigned' = 9, 'labeled' = 10, 'unlabeled' = 11, 'review_requested' = 12, 'review_request_removed' = 13, 'synchronize' = 14, 'started' = 15, 'published' = 16, 'update' = 17, 'create' = 18, 'fork' = 19, 'merged' = 20), comment_id UInt64, body String, path String, position Int32, line Int32, ref LowCardinality(String), ref_type Enum('none' = 0, 'branch' = 1, 'tag' = 2, 'repository' = 3, 'unknown' = 4), creator_user_login LowCardinality(String), number UInt32, title String, labels Array(LowCardinality(String)), state Enum('none' = 0, 'open' = 1, 'closed' = 2), locked UInt8, assignee LowCardinality(String), assignees Array(LowCardinality(String)), comments UInt32, author_association Enum('NONE' = 0, 'CONTRIBUTOR' = 1, 'OWNER' = 2, 'COLLABORATOR' = 3, 'MEMBER' = 4, 'MANNEQUIN' = 5), closed_at DateTime, merged_at DateTime, merge_commit_sha String, requested_reviewers Array(LowCardinality(String)), requested_teams Array(LowCardinality(String)), head_ref LowCardinality(String), head_sha String, base_ref LowCardinality(String), base_sha String, merged UInt8, mergeable UInt8, rebaseable UInt8, mergeable_state Enum('unknown' = 0, 'dirty' = 1, 'clean' = 2, 'unstable' = 3, 'draft' = 4), merged_by LowCardinality(String), review_comments UInt32, maintainer_can_modify UInt8, commits UInt32, additions UInt32, deletions UInt32, changed_files UInt32, diff_hunk String, original_position UInt32, commit_id String, original_commit_id String, push_size UInt32, push_distinct_size UInt32, member_login LowCardinality(String), release_tag_name String, release_name String, review_state Enum('none' = 0, 'approved' = 1, 'changes_requested' = 2, 'commented' = 3, 'dismissed' = 4, 'pending' = 5))ENGINE = MergeTreeORDER BY (event_type, repo_name, created_at);

# 3. 导入数据

xz -d < github_events_v2.native.xz | clickhouse-client --query "INSERT INTO test.github_events FORMAT Native"

# 4. 可以看到导入速度还是很快的

方式2:URL地址方式导入数据集

如果觉得下载导入的方式比较慢,可以创建一个外部表,直接从URL地址中读取数据,省去了单独下载和解压缩步骤。

注意:要求ClickHouse版本20.12以上,并且操作系统支持xz解压。

# 1. 建立url外部表

CREATE TABLE github_events_url( file_time DateTime, event_type Enum('CommitCommentEvent' = 1, 'CreateEvent' = 2, 'DeleteEvent' = 3, 'ForkEvent' = 4, 'GollumEvent' = 5, 'IssueCommentEvent' = 6, 'IssuesEvent' = 7, 'MemberEvent' = 8, 'PublicEvent' = 9, 'PullRequestEvent' = 10, 'PullRequestReviewCommentEvent' = 11, 'PushEvent' = 12, 'ReleaseEvent' = 13, 'SponsorshipEvent' = 14, 'WatchEvent' = 15, 'GistEvent' = 16, 'FollowEvent' = 17, 'DownloadEvent' = 18, 'PullRequestReviewEvent' = 19, 'ForkApplyEvent' = 20, 'Event' = 21, 'TeamAddEvent' = 22), actor_login LowCardinality(String), repo_name LowCardinality(String), created_at DateTime, updated_at DateTime, action Enum('none' = 0, 'created' = 1, 'added' = 2, 'edited' = 3, 'deleted' = 4, 'opened' = 5, 'closed' = 6, 'reopened' = 7, 'assigned' = 8, 'unassigned' = 9, 'labeled' = 10, 'unlabeled' = 11, 'review_requested' = 12, 'review_request_removed' = 13, 'synchronize' = 14, 'started' = 15, 'published' = 16, 'update' = 17, 'create' = 18, 'fork' = 19, 'merged' = 20), comment_id UInt64, body String, path String, position Int32, line Int32, ref LowCardinality(String), ref_type Enum('none' = 0, 'branch' = 1, 'tag' = 2, 'repository' = 3, 'unknown' = 4), creator_user_login LowCardinality(String), number UInt32, title String, labels Array(LowCardinality(String)), state Enum('none' = 0, 'open' = 1, 'closed' = 2), locked UInt8, assignee LowCardinality(String), assignees Array(LowCardinality(String)), comments UInt32, author_association Enum('NONE' = 0, 'CONTRIBUTOR' = 1, 'OWNER' = 2, 'COLLABORATOR' = 3, 'MEMBER' = 4, 'MANNEQUIN' = 5), closed_at DateTime, merged_at DateTime, merge_commit_sha String, requested_reviewers Array(LowCardinality(String)), requested_teams Array(LowCardinality(String)), head_ref LowCardinality(String), head_sha String, base_ref LowCardinality(String), base_sha String, merged UInt8, mergeable UInt8, rebaseable UInt8, mergeable_state Enum('unknown' = 0, 'dirty' = 1, 'clean' = 2, 'unstable' = 3, 'draft' = 4), merged_by LowCardinality(String), review_comments UInt32, maintainer_can_modify UInt8, commits UInt32, additions UInt32, deletions UInt32, changed_files UInt32, diff_hunk String, original_position UInt32, commit_id String, original_commit_id String, push_size UInt32, push_distinct_size UInt32, member_login LowCardinality(String), release_tag_name String, release_name String, review_state Enum('none' = 0, 'approved' = 1, 'changes_requested' = 2, 'commented' = 3, 'dismissed' = 4, 'pending' = 5)) ENGINE = URL('https://datasets.clickhouse.tech/github_events_v2.native.xz', Native);

# 2. 创建目标表并插入数据:

CREATE TABLE github_events ENGINE = MergeTree ORDER BY (event_type, repo_name, created_at) AS SELECT * FROM github_events_url;

这时候,有两个年轻人,三十多岁,一个直接导入,一个用RUL导入。

他们说,我佐田啊,搞到现在数据都没载入完,很慢啊!

公老师你能不能教教我浑元功法,哎…帮助加快下速度?

我说:可以

方式3:拿来现成的直接用

感谢慷慨大方的Yandex 和 Altinity大老爷,提供了的完整的demo环境,可以拿来直接跑SQL。

# Yandex.Cloud 提供的连接方式

--客户端连接:

clickhouse-client -m --secure --host gh-api.clickhouse.tech --user explorer

HTTPS interface:

https://gh-api.clickhouse.tech/ (port 443)

# Altinity.Cloud 提供的连接方式

--客户端连接:

clickhouse-client -m -h github.demo.trial.altinity.cloud --port 9440 -s --user=demo --password=demo

--使用DBeaver通过 HTTPS 或 JDBC方式连接:

https://demo:demo@github.demo.trial.altinity.cloud:8443jdbc:clickhouse://github.demo.trial.altinity.cloud:8443

有人又说了,那我这里刚通网,连个能装客户端的电脑都没有。

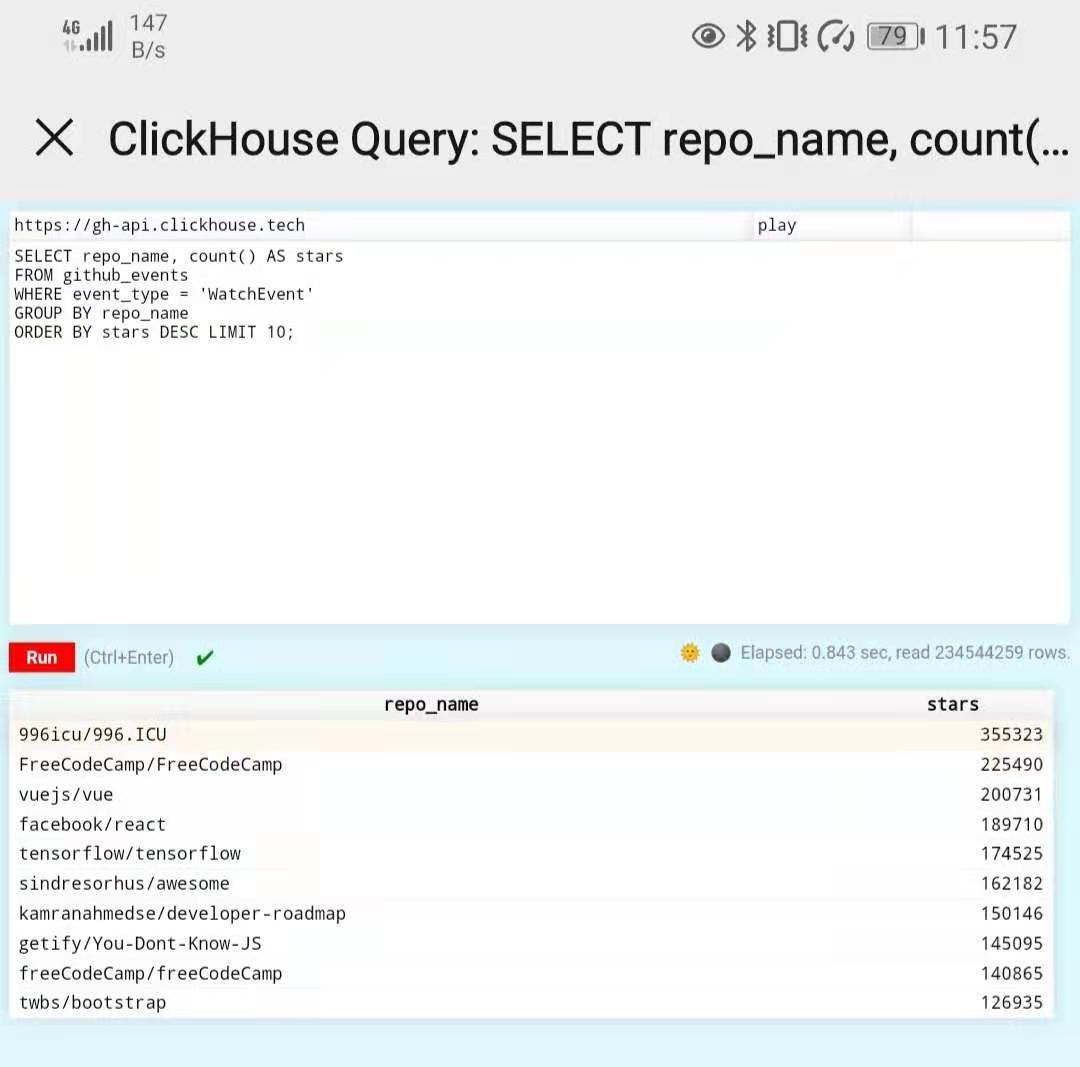

没关系,还能通过Web UI手机直连:

https://gh-api.clickhouse.tech/play?user=play

手机微信里点开效果是这样的:

2 查询:

# GitHub上所有的项目库数量

SELECT uniq(repo_name)FROM github_eventsQuery id: 1ce49a10-5847-4913-97cc-14057961ac16┌─uniq(repo_name)─┐│ 165892137 │└─────────────────┘1 rows in set. Elapsed: 6.098 sec. Processed 3.17 billion rows, 25.39 GB (519.31 million rows/s., 4.16 GB/s.)

# GitHub上所有项目星星的数量

SELECT count()FROM github_eventsWHERE event_type = 'WatchEvent'Query id: 0e025870-afcd-4376-ba05-7cfb418a2e04┌───count()─┐│ 234497476 │└───────────┘

# 星星分布情况,超过10万星的有21个项目。

SELECT exp10(floor(log10(c))) AS stars, uniq(k)FROM ( SELECT repo_name AS k, count() AS c FROM github_events WHERE event_type = 'WatchEvent' GROUP BY k)GROUP BY starsORDER BY stars ASCQuery id: b5defdb0-2ce6-46cb-911a-70b4ba3de038┌──stars─┬──uniq(k)─┐│ 1 │ 15129932 ││ 10 │ 1207927 ││ 100 │ 214942 ││ 1000 │ 29202 ││ 10000 │ 1864 ││ 100000 │ 21 │└────────┴──────────┘6 rows in set. Elapsed: 3.895 sec. Processed 234.53 million rows, 1.84 GB (60.21 million rows/s., 472.73 MB/s.)

# 每年增长的星星数量

SELECT toYear(created_at) AS year, count() AS stars, bar(stars, 0, 50000000, 10) AS barFROM github_eventsWHERE event_type = 'WatchEvent'GROUP BY yearORDER BY year ASCQuery id: 79d1086b-dba9-4a23-a066-9ac945e3fb3a┌─year─┬────stars─┬─bar────────┐│ 2011 │ 1831742 │ ▎ ││ 2012 │ 4048676 │ ▋ ││ 2013 │ 7432800 │ █▍ ││ 2014 │ 11952935 │ ██▍ ││ 2015 │ 18994833 │ ███▋ ││ 2016 │ 26166310 │ █████▏ ││ 2017 │ 32640040 │ ██████▌ ││ 2018 │ 37068153 │ ███████▍ ││ 2019 │ 46118187 │ █████████▏ ││ 2020 │ 48266671 │ █████████▋ │└──────┴──────────┴────────────┘10 rows in set. Elapsed: 1.135 sec. Processed 234.56 million rows, 1.17 GB (206.75 million rows/s., 1.03 GB/s.)

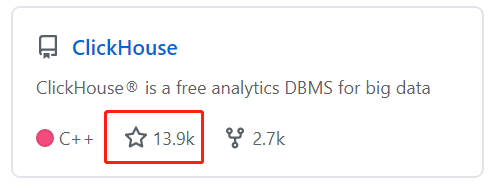

# ClickHouse项目的星星数

SELECT count()FROM github_eventsWHERE (event_type = 'WatchEvent') AND (repo_name IN ('ClickHouse/ClickHouse', 'yandex/ClickHouse'))GROUP BY actionQuery id: f1aeab13-9359-4661-83ca-e0e73c3ead19┌─count()─┐│ 14613 │└─────────┘

这个数字和当前GitHub页面中的数量还是很接近的。

# 星星数量排名前10的项目库

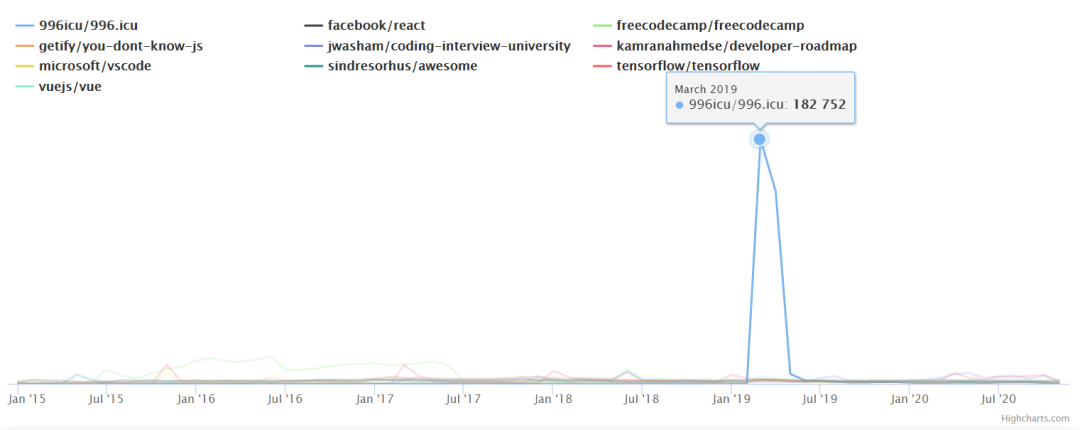

SELECT repo_name, count() AS starsFROM github_eventsWHERE event_type = 'WatchEvent'GROUP BY repo_nameORDER BY stars DESCLIMIT 10Query id: 1b693fe3-69ce-4a12-bb9d-7a1bb42c85b1┌─repo_name───────────────────────┬──stars─┐│ 996icu/996.ICU │ 355326 ││ FreeCodeCamp/FreeCodeCamp │ 225490 ││ vuejs/vue │ 200737 ││ facebook/react │ 189715 ││ tensorflow/tensorflow │ 174528 ││ sindresorhus/awesome │ 162187 ││ kamranahmedse/developer-roadmap │ 150154 ││ getify/You-Dont-Know-JS │ 145096 ││ freeCodeCamp/freeCodeCamp │ 140868 ││ twbs/bootstrap │ 126939 │└─────────────────────────────────┴────────┘10 rows in set. Elapsed: 2.052 sec. Processed 234.53 million rows, 1.84 GB (114.32 million rows/s., 897.53 MB/s.)

排名靠前的很多都是学习教育类的项目,编程学习类项目FreeCodeCamp由于大小写问题被分成了2项,它实际上是星数最多的。

排名第一,完全不讲武德的996.ICU。

it's not for software, but more like a project to improve awareness about work schedules in different Chinese companies. But wait... it's not the top repo.

# 历年的TOP5项目

SELECT year, lower(repo_name) AS repo, count()FROM github_eventsWHERE (event_type = 'WatchEvent') AND (year >= 2015)GROUP BY repo, toYear(created_at) AS yearORDER BY year ASC, count() DESCLIMIT 5 BY yearQuery id: d4c78b77-0827-4588-89ef-a18bdd3f236e┌─year─┬─repo──────────────────────┬─count()─┐│ 2015 │ freecodecamp/freecodecamp │ 53806 ││ 2015 │ facebook/react-native │ 25888 ││ 2015 │ apple/swift │ 25834 ││ 2015 │ sindresorhus/awesome │ 24420 ││ 2015 │ facebook/react │ 22977 │└──────┴───────────────────────────┴─────────┘┌─year─┬─repo────────────────────────────────┬─count()─┐│ 2016 │ freecodecamp/freecodecamp │ 182203 ││ 2016 │ jwasham/google-interview-university │ 31522 ││ 2016 │ vhf/free-programming-books │ 28870 ││ 2016 │ vuejs/vue │ 28831 ││ 2016 │ tensorflow/tensorflow │ 28282 │└──────┴─────────────────────────────────────┴─────────┘┌─year─┬─repo────────────────────────────────┬─count()─┐│ 2017 │ freecodecamp/freecodecamp │ 96359 ││ 2017 │ tensorflow/tensorflow │ 49278 ││ 2017 │ vuejs/vue │ 48185 ││ 2017 │ facebook/react │ 34524 ││ 2017 │ mr-mig/every-programmer-should-know │ 30991 │└──────┴─────────────────────────────────────┴─────────┘┌─year─┬─repo────────────────────────────┬─count()─┐│ 2018 │ vuejs/vue │ 51515 ││ 2018 │ trekhleb/javascript-algorithms │ 39249 ││ 2018 │ facebook/react │ 38817 ││ 2018 │ flutter/flutter │ 38357 ││ 2018 │ danistefanovic/build-your-own-x │ 37815 │└──────┴─────────────────────────────────┴─────────┘┌─year─┬─repo──────────────────────┬─count()─┐│ 2019 │ 996icu/996.icu │ 344825 ││ 2019 │ jackfrued/python-100-days │ 76845 ││ 2019 │ m4cs/babysploit │ 71013 ││ 2019 │ microsoft/terminal │ 56844 ││ 2019 │ snailclimb/javaguide │ 53444 │└──────┴───────────────────────────┴─────────┘┌─year─┬─repo────────────────────────────────┬─count()─┐│ 2020 │ labuladong/fucking-algorithm │ 80938 ││ 2020 │ jwasham/coding-interview-university │ 60509 ││ 2020 │ kamranahmedse/developer-roadmap │ 53550 ││ 2020 │ donnemartin/system-design-primer │ 39731 ││ 2020 │ public-apis/public-apis │ 39552 │└──────┴─────────────────────────────────────┴─────────┘30 rows in set. Elapsed: 18.161 sec. Processed 233.93 million rows, 2.75 GB (12.88 million rows/s., 151.52 MB/s.)

每年的历代王者:

freecodecamp (2015-2017 )

vue (2018 )

996.icu (2019)

国产算法刷题 fucking-algorithm (2020)

# 各大公司组织的星星数量(阿里还是挺NB)

SELECT lower(substring(repo_name, 1, position(repo_name, '/'))) AS org, count() AS starsFROM github_eventsWHERE event_type = 'WatchEvent'GROUP BY orgORDER BY stars DESCLIMIT 10Query id: 2db5a630-1b1f-4755-af5d-b58d29ab0596┌─org───────────┬───stars─┐│ google/ │ 1425341 ││ microsoft/ │ 1382470 ││ facebook/ │ 1128478 ││ alibaba/ │ 586424 ││ sindresorhus/ │ 572216 ││ apache/ │ 558924 ││ vuejs/ │ 497920 ││ tensorflow/ │ 428196 ││ freecodecamp/ │ 408759 ││ fossasia/ │ 403761 │└───────────────┴─────────┘10 rows in set. Elapsed: 2.041 sec. Processed 234.56 million rows, 1.84 GB (114.91 million rows/s., 903.00 MB/s.)

类似的分析还有很多,感兴趣的可以参照原文地址挨个试试

https://gh.clickhouse.tech/explorer/#counting-stars

# 历史文章

GitHub都在用的高可用工具Orch:

Orchestrator:03 高可用方案ProxySQL篇

Percona 全力打造的监控平台 PMM:

QQ群号:763628645

QQ群二维码如下, 添加请注明:姓名+地区+职位,否则不予通过