1. Preface

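In MogDB, scaling out and scaling in apply to standby nodes. Scale-out supports at most one primary with eight standbys, and scale-in can go down to a single primary. Both operations are fairly convenient because MogDB ships two tools for them, gs_expansion and gs_dropnode. My environment is as follows: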

| | NODE1 (primary) | NODE2 (standby) |
|---|---|---|
| Hostname | pkt_mogdb1 | pkt_mogdb2 |
| IP | 10.80.9.249 | 10.80.9.250 |
| Disk | 20G | 20G |
| Memory | 2G | 2G |

2. Scaling out with gs_expansion

The architecture after scale-out:

| | NODE1 (primary) | NODE2 (standby) | NODE3 (new standby) |
|---|---|---|---|
| Hostname | pkt_mogdb1 | pkt_mogdb2 | pkt_mogdb3 |
| IP | 10.80.9.249 | 10.80.9.250 | 10.80.9.251 |
| Disk | 20G | 20G | 20G |
| Memory | 2G | 2G | 2G |

I. Scale-out

1. Prepare the server

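Prepare it the same way as the standby installed earlier: configure the IP and hostname, install the dependency packages, set up chronyd time synchronization, disable the firewall, and so on.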

2. Create the user and group

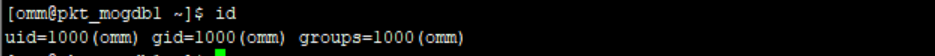

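On the server to be added, create the same user and group as on the primary. First log in to the primary and check the uid and gid of omm.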

Then create the user and group on the server to be added:

```shell
# run as root on the new server; uid/gid must match the primary
groupadd -g 1000 omm
useradd -g 1000 -u 1000 omm
# set the password
passwd omm
```

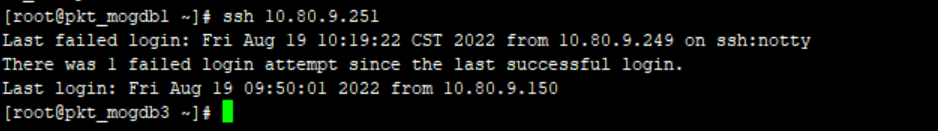
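Copying the IDs across by hand invites typos. The commands can instead be generated from the `id omm` output taken on the primary. The sketch below uses a hypothetical helper, `gen_user_cmds`, and assumes the usual GNU `id` output format `uid=N(name) gid=N(name) groups=...`. It only prints the commands and does not run them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn the `id omm` output captured on the primary into
# the groupadd/useradd commands for the new standby, so uid and gid match.
# Assumes the GNU `id` format: "uid=1000(omm) gid=1000(omm) groups=1000(omm)".
gen_user_cmds() {
  local id_line="$1" uid user gid group
  uid=$(printf '%s\n' "$id_line" | sed -n 's/^uid=\([0-9]*\).*/\1/p')
  user=$(printf '%s\n' "$id_line" | sed -n 's/^uid=[0-9]*(\([^)]*\)).*/\1/p')
  gid=$(printf '%s\n' "$id_line" | sed -n 's/.* gid=\([0-9]*\).*/\1/p')
  group=$(printf '%s\n' "$id_line" | sed -n 's/.* gid=[0-9]*(\([^)]*\)).*/\1/p')
  if [ -z "$uid" ] || [ -z "$user" ] || [ -z "$gid" ] || [ -z "$group" ]; then
    echo "cannot parse id output: $id_line" >&2
    return 1
  fi
  # the group must exist before useradd can reference its gid
  echo "groupadd -g $gid $group"
  echo "useradd -g $gid -u $uid $user"
}
```

Usage: run `id omm` on the primary. On the new server, pass that output in, for example `gen_user_cmds "uid=1000(omm) gid=1000(omm) groups=1000(omm)"`. Then execute the two printed lines as root and finish with `passwd omm`.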

3. Configure SSH trust from the primary to the new standby

The expansion copies files to the new standby, so passwordless SSH must be configured for both the root and omm users.

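```shell
# 1. On the primary, check whether a key pair already exists
ls ~/.ssh/id_rsa.pub
# 2. If it does not, generate one
ssh-keygen -t rsa
# 3. Copy the public key to the server being added (into /tmp, so it does not
#    overwrite that server's own ~/.ssh/id_rsa.pub)
scp ~/.ssh/id_rsa.pub root@10.80.9.251:/tmp/node1_id_rsa.pub
# 4. On the target server, append the primary's key to authorized_keys
mkdir -p ~/.ssh && chmod 700 ~/.ssh
cat /tmp/node1_id_rsa.pub >> ~/.ssh/authorized_keys
chmod 600 ~/.ssh/authorized_keys
# (ssh-copy-id root@10.80.9.251, run on the primary, does steps 3 and 4 in one go)
# 5. Back on the primary, verify the trust
ssh 10.80.9.251
```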

If you are not prompted for a password, the trust is working.

Repeat the same steps as the omm user.

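If passwordless login still does not work, check the permissions. sshd ignores authorized_keys when that file or ~/.ssh is writable by group or others. Use chmod 700 ~/.ssh and chmod 600 ~/.ssh/authorized_keys.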

Note that trust must be configured from node1 to both node2 and node3.
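When login keeps falling back to a password prompt, a quick permission check narrows things down. The sketch below uses a hypothetical function name, `check_ssh_perms`, and assumes GNU `stat -c %a`, which is standard on Linux. It looks only at ~/.ssh and authorized_keys, and flags either one when group or others can write to it. That is the condition sshd's StrictModes check rejects.

```shell
#!/usr/bin/env bash
# Hypothetical helper: flag ~/.ssh or authorized_keys when group or others
# can write to them, which sshd (StrictModes, on by default) refuses.
# Assumes GNU coreutils `stat -c %a`, as found on Linux.
check_ssh_perms() {  # check_ssh_perms [SSH_DIR], defaults to ~/.ssh
  local ssh_dir="${1:-$HOME/.ssh}" keys mode rc=0
  keys="$ssh_dir/authorized_keys"
  mode=$(stat -c %a "$ssh_dir") || return 1
  # octal 022 = group-write | other-write
  if (( 8#$mode & 8#022 )); then
    echo "$ssh_dir is $mode; run: chmod 700 $ssh_dir"
    rc=1
  fi
  if [ ! -f "$keys" ]; then
    echo "$keys does not exist"
    return 1
  fi
  mode=$(stat -c %a "$keys")
  if (( 8#$mode & 8#022 )); then
    echo "$keys is $mode; run: chmod 600 $keys"
    rc=1
  fi
  return $rc
}
```

Run `check_ssh_perms` on the target server as root and again as omm, since each user has its own ~/.ssh.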

4. Edit the configuration file on the primary to add the new node

```shell
[omm@pkt_mogdb1 software]$ cat cluster3.xml
```

```xml
<?xml version="1.0" encoding="UTF-8"?>
<ROOT>
  <!-- MogDB cluster-wide settings -->
  <CLUSTER>
    <PARAM name="clusterName" value="mogdb_cluster1" />
    <PARAM name="nodeNames" value="pkt_mogdb1,pkt_mogdb2,pkt_mogdb3" />
    <PARAM name="gaussdbToolPath" value="/opt/mogdb/data/tool" />
    <PARAM name="corePath" value="/opt/mogdb/corefile"/>
    <PARAM name="gaussdbAppPath" value="/opt/mogdb/data/app" />
    <PARAM name="gaussdbLogPath" value="/opt/mogdb/data/log" />
    <PARAM name="tmpMppdbPath" value="/opt/mogdb/data/tmp"/>
    <PARAM name="backIp1s" value="10.80.9.249,10.80.9.250,10.80.9.251"/>
  </CLUSTER>
  <!-- Node deployment information for each server -->
  <DEVICELIST>
    <!-- Deployment information for node1 -->
    <DEVICE sn="pkt_mogdb1">
      <!-- Hostname of node1 -->
      <PARAM name="name" value="pkt_mogdb1"/>
      <!-- AZ and AZ priority of node1 -->
      <PARAM name="azName" value="AZ1"/>
      <PARAM name="azPriority" value="1"/>
      <!-- IP of node1; if the server has only one usable NIC, set backIp1 and sshIp1 to the same IP -->
      <PARAM name="backIp1" value="10.80.9.249"/>
      <PARAM name="sshIp1" value="10.80.9.249"/>
      <!-- DN -->
      <PARAM name="dataNum" value="1"/>
      <PARAM name="dataNode1" value="/opt/mogdb/data/data,pkt_mogdb2,/opt/mogdb/data/data,pkt_mogdb3,/opt/mogdb/data/data"/>
    </DEVICE>
    <!-- Deployment information for node2; "name" is set to the hostname -->
    <DEVICE sn="pkt_mogdb2">
      <!-- Hostname of node2 -->
      <PARAM name="name" value="pkt_mogdb2"/>
      <!-- AZ and AZ priority of node2 -->
      <PARAM name="azName" value="AZ1"/>
      <PARAM name="azPriority" value="1"/>
      <!-- IP of node2; if the server has only one usable NIC, set backIp1 and sshIp1 to the same IP -->
      <PARAM name="backIp1" value="10.80.9.250"/>
      <PARAM name="sshIp1" value="10.80.9.250"/>
    </DEVICE>
    <!-- Deployment information for node3 (the new standby) -->
    <DEVICE sn="pkt_mogdb3">
      <!-- Hostname of node3 -->
      <PARAM name="name" value="pkt_mogdb3"/>
      <!-- AZ and AZ priority of node3 -->
      <PARAM name="azName" value="AZ1"/>
      <PARAM name="azPriority" value="1"/>
      <!-- IP of node3; if the server has only one usable NIC, set backIp1 and sshIp1 to the same IP -->
      <PARAM name="backIp1" value="10.80.9.251"/>
      <PARAM name="sshIp1" value="10.80.9.251"/>
    </DEVICE>
  </DEVICELIST>
</ROOT>
```
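Before running gs_expansion, it is worth checking that the edited XML is internally consistent. The sketch below uses two hypothetical helpers, `xml_param` and `check_cluster_xml`. It relies on grep/sed rather than a real XML parser, so it assumes every `<PARAM .../>` sits on a single line, as in cluster3.xml. It checks two things:

- nodeNames and backIp1s have the same number of entries.
- Every node in nodeNames has a matching `<DEVICE sn="...">` block.

```shell
#!/usr/bin/env bash
# Hypothetical pre-check for the cluster XML. Not an XML parser: assumes each
# <PARAM name="..." value="..."/> is on one line, as in cluster3.xml.
xml_param() {  # xml_param FILE NAME: value of the first PARAM with that name
  grep -o "name=\"$2\" value=\"[^\"]*\"" "$1" | head -n 1 | sed 's/.*value="//; s/"$//'
}

check_cluster_xml() {  # check_cluster_xml FILE
  local xml="$1" names ips n_names n_ips node rc=0
  names=$(xml_param "$xml" nodeNames)
  ips=$(xml_param "$xml" backIp1s)
  # count comma-separated entries (grep -c exits 1 on zero, hence || true)
  n_names=$(printf '%s\n' "$names" | tr ',' '\n' | grep -c . || true)
  n_ips=$(printf '%s\n' "$ips" | tr ',' '\n' | grep -c . || true)
  if [ "$n_names" -eq 0 ] || [ "$n_names" -ne "$n_ips" ]; then
    echo "nodeNames has $n_names entries, backIp1s has $n_ips"
    rc=1
  fi
  # every node listed in nodeNames needs its own DEVICE block
  for node in ${names//,/ }; do
    if ! grep -q "<DEVICE sn=\"$node\">" "$xml"; then
      echo "no <DEVICE sn=\"$node\"> block for $node"
      rc=1
    fi
  done
  return $rc
}
```

Usage: `check_cluster_xml /opt/mogdb/software/cluster3.xml` prints nothing and returns 0 when both checks pass.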

5. A short interlude (feel free to skip this commercial break)

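Pitfall 1: My primary/standby environment was installed with PTK, so PTK had also set up the SSH trust from the primary to the standby. While configuring trust for the standby to be added (node3), I changed the omm password on node2. After that, gs_om started asking for a password, so I redid the trust from node1 to node2. When redoing it, check ~/.ssh/config: its entries must be updated to point at the new key file name.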

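Pitfall 2: For no apparent reason, my primary would no longer start, although the standby started fine. The logs showed nothing conclusive. The startup log: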

```text
[omm@pkt_mogdb1 data]$ gs_om -t start
Starting cluster.
=========================================
[SUCCESS] pkt_mogdb2
2022-08-19 12:55:17.312 [unknown] [unknown] localhost 47526557277696 0[0:0#0] 0 [BACKEND] WARNING: could not create any HA TCP/IP sockets
=========================================
[GAUSS-53600]: Can not start the database, the cmd is . /home/omm/.bashrc; python3 '/opt/mogdb/data/tool/script/local/StartInstance.py' -U omm -R /opt/mogdb/data/app -t 300 --security-mode=off, Error:
[FAILURE] pkt_mogdb1:
[GAUSS-51607] : Failed to start instance. Error: Please check the gs_ctl log for failure details.
[2022-08-19 12:55:13.709][16249][][gs_ctl]: gs_ctl started,datadir is /opt/mogdb/data/data
[2022-08-19 12:55:13.808][16249][][gs_ctl]: waiting for server to start...
.0 LOG: [Alarm Module]can not read GAUSS_WARNING_TYPE env.
0 LOG: [Alarm Module]Host Name: pkt_mogdb1
0 LOG: [Alarm Module]Host IP: 10.80.9.249
0 LOG: [Alarm Module]Cluster Name: mogdb_cluster1
0 WARNING: failed to open feature control file, please check whether it exists: FileName=gaussdb.version, Errno=2, Errmessage=No such file or directory.
0 WARNING: failed to parse feature control file: gaussdb.version.
0 WARNING: Failed to load the product control file, so gaussdb cannot distinguish product version.
The core dump path from /proc/sys/kernel/core_pattern is an invalid directory:|/usr/libexec/
2022-08-19 12:55:14.017 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: when starting as multi_standby mode, we couldn't support data replicaton.
2022-08-19 12:55:14.023 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: [Alarm Module]can not read GAUSS_WARNING_TYPE env.
2022-08-19 12:55:14.023 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: [Alarm Module]Host Name: pkt_mogdb1
2022-08-19 12:55:14.023 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: [Alarm Module]Host IP: 10.80.9.249
2022-08-19 12:55:14.023 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: [Alarm Module]Cluster Name: mogdb_cluster1
2022-08-19 12:55:14.027 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: loaded library "security_plugin"
2022-08-19 12:55:14.028 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] WARNING: could not create any HA TCP/IP sockets
2022-08-19 12:55:14.031 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: gstrace initializes with failure. errno = 1.
2022-08-19 12:55:14.031 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: InitNuma numaNodeNum: 1 numa_distribute_mode: none inheritThreadPool: 0.
2022-08-19 12:55:14.031 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: reserved memory for backend threads is: 220 MB
2022-08-19 12:55:14.031 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: reserved memory for WAL buffers is: 128 MB
2022-08-19 12:55:14.031 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: Set max backend reserve memory is: 348 MB, max dynamic memory is: 11067 MB
2022-08-19 12:55:14.031 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: shared memory 360 Mbytes, memory context 11415 Mbytes, max process memory 12288 Mbytes
2022-08-19 12:55:14.057 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [CACHE] LOG: set data cache size(402653184)
2022-08-19 12:55:14.067 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [CACHE] LOG: set metadata cache size(134217728)
2022-08-19 12:55:14.109 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [SEGMENT_PAGE] LOG: Segment-page constants: DF_MAP_SIZE: 8156, DF_MAP_BIT_CNT: 65248, DF_MAP_GROUP_EXTENTS: 4175872, IPBLOCK_SIZE: 8168, EXTENTS_PER_IPBLOCK: 1021, IPBLOCK_GROUP_SIZE: 4090, BMT_HEADER_LEVEL0_TOTAL_PAGES: 8323072, BktMapEntryNumberPerBlock: 2038, BktMapBlockNumber: 25, BktBitMaxMapCnt: 512
2022-08-19 12:55:14.114 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: mogdb: fsync file "/opt/mogdb/data/data/gaussdb.state.temp" success
2022-08-19 12:55:14.114 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: create gaussdb state file success: db state(STARTING_STATE), server mode(Primary), connection index(1)
2022-08-19 12:55:14.116 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: max_safe_fds = 976, usable_fds = 1000, already_open = 14
The core dump path from /proc/sys/kernel/core_pattern is an invalid directory:|/usr/libexec/
2022-08-19 12:55:14.118 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: the configure file /opt/mogdb/data/app/etc/gscgroup_omm.cfg doesn't exist or the size of configure file has changed. Please create it by root user!
2022-08-19 12:55:14.118 [unknown] [unknown] localhost 47691868622336 0[0:0#0] 0 [BACKEND] LOG: Failed to parse cgroup config file.
.[2022-08-19 12:55:15.813][16249][][gs_ctl]: waitpid 16252 failed, exitstatus is 256, ret is 2
[2022-08-19 12:55:15.813][16249][][gs_ctl]: stopped waiting
[2022-08-19 12:55:15.813][16249][][gs_ctl]: could not start server
```

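Neither the gs_ctl log nor the om log showed an obvious error. Only the MOT-related messages in pg_log looked suspicious. In my previous session I had been testing MOT tables and had temporarily increased the memory. I had also shut the machine down without stopping MogDB manually. To test expansion, I then reduced node1's memory again, and I suspect that caused the problem.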

My fix was as follows:

Step 1: Comment out the mot.conf configuration.

Step 2: Temporarily restart node2 as the primary:

```shell
# on node2 (10.80.9.250), as omm
gs_ctl restart -D /opt/mogdb/data/data/ -M primary
```

Step 3: Rebuild the old primary (node1) as a standby:

```shell
# on node1 (10.80.9.249), as omm
gs_ctl build -D /opt/mogdb/data/data -b full
```

Step 4: Restart the cluster. I had not run gs_om -t refreshconf to update the primary/standby information, so the cluster still came up with node1 as the primary.

```shell
# as omm
gs_om -t restart
```

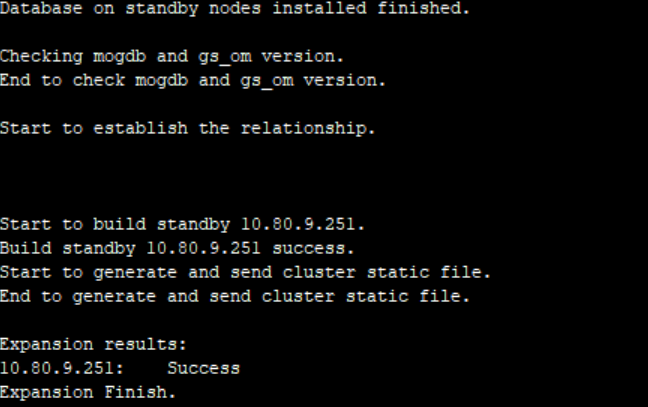
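The four recovery steps run on different machines. The sketch below records which node each command belongs to. It uses hypothetical helpers, `run_on` and `recover_primary`, with the IPs and data directory from this environment. By default (`DRY_RUN=1`) it only prints the commands. With `DRY_RUN=0`, it assumes omm can ssh to both nodes without a password. Step 1 is a manual edit and is not scripted.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; IPs and data directory are the ones used in this article.
PRIMARY_IP=10.80.9.249   # node1, the primary that would not start
STANDBY_IP=10.80.9.250   # node2, temporarily promoted
DATA_DIR=/opt/mogdb/data/data

run_on() {  # run_on HOST CMD: print CMD (DRY_RUN=1, default) or run it as omm on HOST
  local host="$1" cmd="$2"
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "[$host] $cmd"
  else
    ssh "omm@$host" "source ~/.bashrc && $cmd"
  fi
}

recover_primary() {
  # Step 1 (commenting out the mot.conf configuration on node1) is done by hand.
  run_on "$STANDBY_IP" "gs_ctl restart -D $DATA_DIR -M primary" || return 1  # step 2
  run_on "$PRIMARY_IP" "gs_ctl build -D $DATA_DIR -b full" || return 1       # step 3
  run_on "$PRIMARY_IP" "gs_om -t restart"                                    # step 4
}
```

Run `recover_primary` first to review the plan. Run `DRY_RUN=0 recover_primary` only once the printed order matches what you intend.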

6. Run the expansion (must be done as root)

```shell
# root has no MogDB environment variables, so load omm's environment first
source /home/omm/.bashrc
gs_expansion -U omm -G omm -X /opt/mogdb/software/cluster3.xml -h 10.80.9.251
```

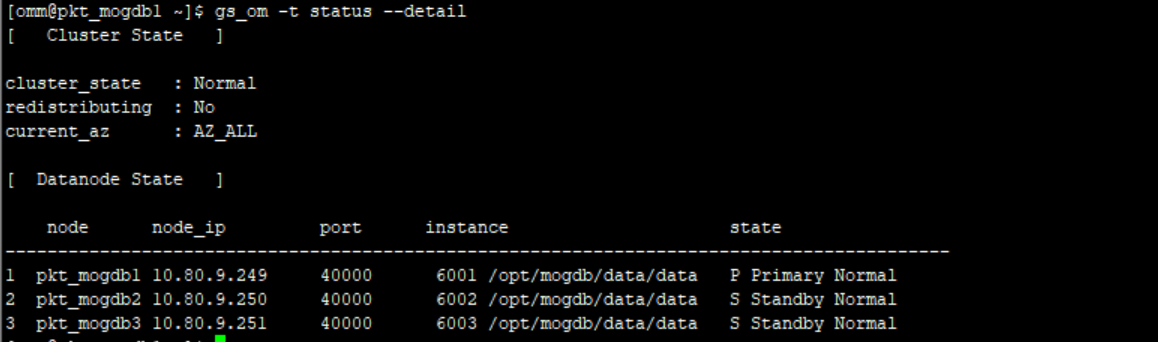
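Running gs_expansion as the wrong user, or forgetting to load omm's environment, is an easy mistake. The step can be wrapped in a small guard. This is a minimal sketch with hypothetical function names (`require_root`, `expand_cluster`). It only assumes the paths used in this article.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight wrapper for the gs_expansion step.
require_root() {  # require_root [UID]: succeed only for uid 0 (defaults to current uid)
  local uid="${1:-$(id -u)}"
  if [ "$uid" -ne 0 ]; then
    echo "gs_expansion must be run as root (uid is $uid)" >&2
    return 1
  fi
}

expand_cluster() {  # expand_cluster XML NEW_HOST_IP [extra gs_expansion options]
  local xml="$1" host="$2"
  shift 2
  require_root || return 1
  if [ ! -f "$xml" ]; then
    echo "cluster XML not found: $xml" >&2
    return 1
  fi
  # root has no MogDB environment of its own, so load omm's first
  source /home/omm/.bashrc || return 1
  gs_expansion -U omm -G omm -X "$xml" -h "$host" "$@"
}
```

Usage: `expand_cluster /opt/mogdb/software/cluster3.xml 10.80.9.251`. Any extra arguments, such as the `-L` option discussed in the troubleshooting notes, are passed through to gs_expansion unchanged.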

7. Check the cluster status after expansion

8. Problems I ran into during expansion

1) [GAUSS-35706] Fail to preinstall on all new hosts.

The preinstall command could not run on the new standby because python3 was not installed there. Installing python3 fixed it.
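A simple way to catch this before running gs_expansion is to check the new standby for the commands it needs. The sketch below uses a hypothetical helper, `missing_cmds`, built on the shell builtin `command -v`. Beyond python3, which commands to check is an assumption you would adjust.

```shell
#!/usr/bin/env bash
# Hypothetical check to run on the new standby before gs_expansion:
# report every requested command that is not found on PATH.
missing_cmds() {  # missing_cmds CMD...
  local cmd
  local -a missing=()
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || missing+=("$cmd")
  done
  if [ "${#missing[@]}" -gt 0 ]; then
    echo "missing: ${missing[*]}"
    return 1
  fi
}
```

Usage on 10.80.9.251: `missing_cmds python3`. If it reports anything, install the package with the OS package manager before retrying.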

2) [GAUSS-51100] : Failed to verify SSH trust on these nodes: pkt_mogdb1, pkt_mogdb2, 10.80.9.249, 10.80.9.250 by root.

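SSH trust from the primary to the other standbys had not been configured. Checking and configuring trust for each node in turn fixed it. You also need to add the new node's hostname to /etc/hosts on the primary.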
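The /etc/hosts part can be checked mechanically. The sketch below uses a hypothetical helper, `check_hosts`, that takes an /etc/hosts-style file and a list of `IP:hostname` pairs. It reports each pair that does not appear on a non-comment line. It does not test DNS or the SSH trust itself.

```shell
#!/usr/bin/env bash
# Hypothetical check: every IP:hostname pair must appear in an /etc/hosts-style file.
check_hosts() {  # check_hosts HOSTS_FILE IP:HOSTNAME...
  local file="$1" pair ip name rc=0
  shift
  for pair in "$@"; do
    ip="${pair%%:*}"
    name="${pair#*:}"
    # a line matches when its first field is the IP and a later field is the name;
    # commented lines never match because their first field starts with '#'
    if ! awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$file"; then
      echo "missing mapping: $ip $name"
      rc=1
    fi
  done
  return $rc
}
```

Usage on the primary: `check_hosts /etc/hosts 10.80.9.249:pkt_mogdb1 10.80.9.250:pkt_mogdb2 10.80.9.251:pkt_mogdb3`.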

3) [GAUSS-50021] : Failed to query wal_keep_segments parameter.

The dataNode1 parameter in my XML configuration file was misconfigured, so gs_expansion could not read postgresql.conf.
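Since a malformed dataNode1 caused this error, a quick check of its value is worthwhile. The sketch below uses a hypothetical helper, `check_datanode1`. It assumes the layout used in cluster3.xml: the primary's data directory first, followed by `standby hostname, standby data directory` pairs. It checks that the field count fits that layout and that every standby from nodeNames appears as a hostname.

```shell
#!/usr/bin/env bash
# Hypothetical check, assuming the dataNode1 layout from cluster3.xml:
#   "<primary dir>,<standby host>,<standby dir>,<standby host>,<standby dir>..."
# with the primary listed first in nodeNames.
check_datanode1() {  # check_datanode1 NODE_NAMES DATANODE1 (both comma-separated)
  local -a nodes fields
  local i want hosts=" "
  IFS=',' read -r -a nodes <<< "$1"
  IFS=',' read -r -a fields <<< "$2"
  want=$(( 2 * ${#nodes[@]} - 1 ))
  if [ "${#fields[@]}" -ne "$want" ]; then
    echo "dataNode1 has ${#fields[@]} fields, expected $want"
    return 1
  fi
  # odd (0-based) positions hold the standby hostnames
  for (( i = 1; i < ${#fields[@]}; i += 2 )); do
    hosts+="${fields[$i]} "
  done
  # every node after the first (the primary) must appear as a standby host
  for (( i = 1; i < ${#nodes[@]}; i++ )); do
    case "$hosts" in
      *" ${nodes[$i]} "*) ;;
      *) echo "standby ${nodes[$i]} is not listed in dataNode1"; return 1 ;;
    esac
  done
  return 0
}
```

Usage: pass the two values exactly as they appear in the XML, for example `check_datanode1 "pkt_mogdb1,pkt_mogdb2,pkt_mogdb3" "/opt/mogdb/data/data,pkt_mogdb2,/opt/mogdb/data/data,pkt_mogdb3,/opt/mogdb/data/data"`.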

4) Build standby 10.80.9.251 failed.

[GAUSS-35706] Fail to build on all new hosts.

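I never found the cause of this one. It only succeeded after I switched to a fresh server and installed MogDB on it beforehand. Since I hit quite a few problems during expansion, I recommend first installing a single-instance MogDB on the new standby with PTK and then running the expansion. That way the environment variables and the like are already correct, and you only need to add the -L option at the end: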

```shell
gs_expansion -U omm -G omm -X /opt/mogdb/software/cluster3.xml -h 10.80.9.251 -L
```

3. Scaling in

Scaling in is much simpler. It must be run as the omm user on the primary:

```shell
# on the primary, as omm
gs_dropnode -U omm -G omm -h 10.80.9.251
```

4. Final thoughts

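In these notes I tested both scaling out and scaling in. For scale-out, I recommend pre-installing MogDB with PTK on the standby to be added before running the expansion. This avoids many problems caused by careless mistakes. Also pay attention to the pg_hba.conf authentication file, or you may run into problems there as well.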