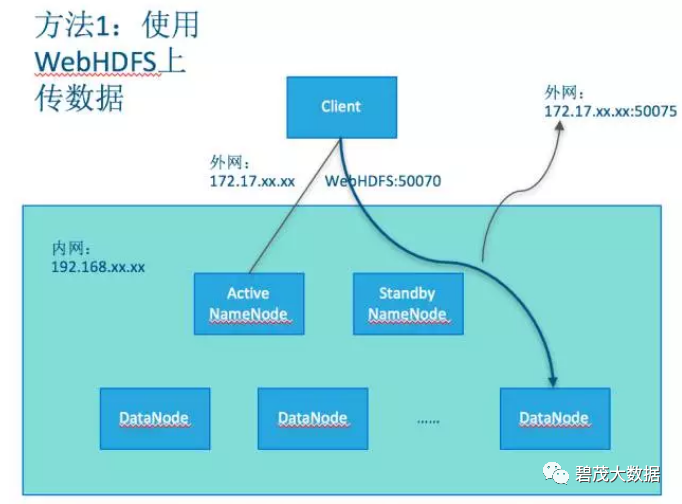

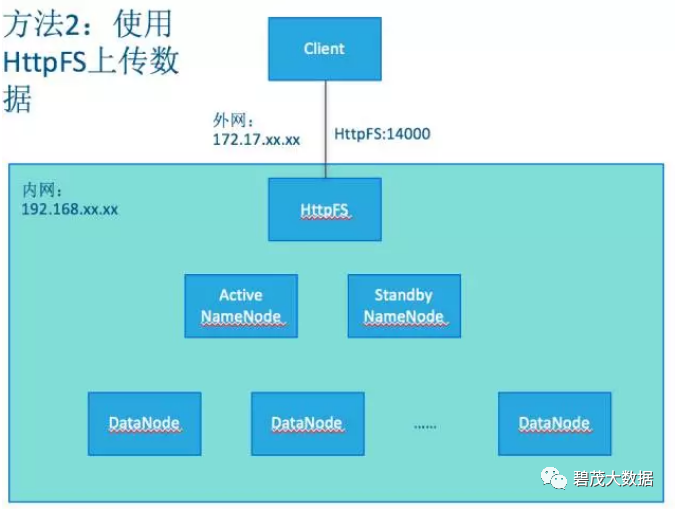

The architecture of the two approaches is shown in the figure below:

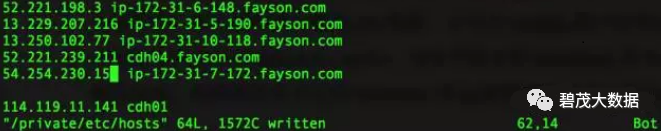

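Note: every Hadoop node is configured with two network segments, the 192 segment for internal cluster traffic and the 172 segment for external access. The client machine can reach only the external 172 segment.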

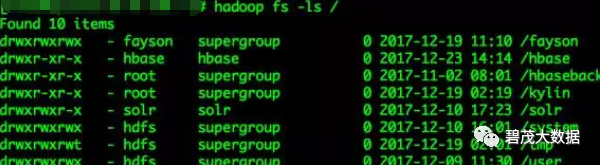

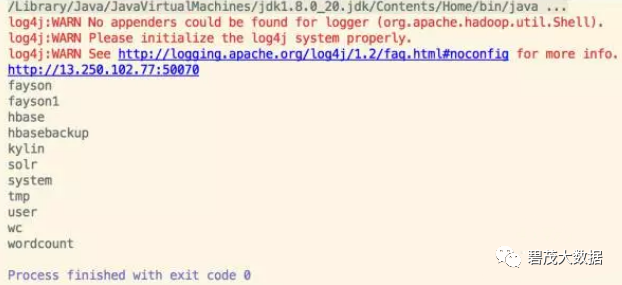

Accessing HDFS via WebHDFS

package com.cloudera.hdfs.nonekerberos;import org.apache.hadoop.conf.Configuration;import org.apache.hadoop.fs.FileStatus;import org.apache.hadoop.fs.Path;import org.apache.hadoop.hdfs.web.WebHdfsFileSystem;import java.io.IOException;import java.net.URI;public class WebHDFSTest { public static void main(String[] args) {

Configuration configuration = new Configuration();

WebHdfsFileSystem webHdfsFileSystem = new WebHdfsFileSystem(); try {

webHdfsFileSystem.initialize(new URI("http://13.250.102.77:50070"), configuration);

System.out.println(webHdfsFileSystem.getUri()); //向HDFS Put文件

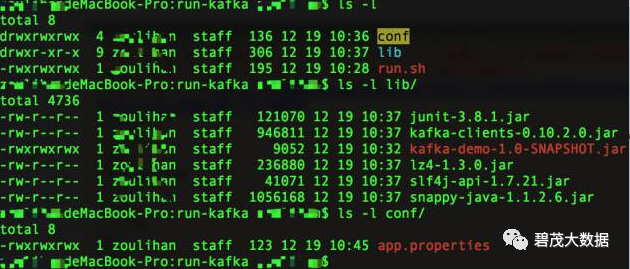

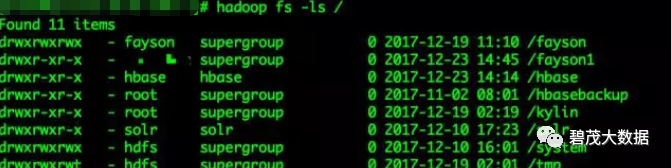

webHdfsFileSystem.copyFromLocalFile(new Path("/Users/fayson/Desktop/run-kafka"), new Path("/fayson1")); //列出HDFS根目录下的所有文件

FileStatus[] fileStatuses = webHdfsFileSystem.listStatus(new Path("/")); for (FileStatus fileStatus : fileStatuses) {

System.out.println(fileStatus.getPath().getName());

}

webHdfsFileSystem.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}复制
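`WebHdfsFileSystem` is a client for the WebHDFS REST API, so the `listStatus` and `copyFromLocalFile` calls above end up as HTTP requests under the `/webhdfs/v1` prefix (`LISTSTATUS` is sent as a GET, `CREATE` as a PUT). The following is a minimal sketch that only builds such URLs and sends nothing. The class `WebHdfsUrls` and its `url` method are hypothetical names, the host and port are the ones from the example, and passing the user as `user.name=fayson` assumes a cluster with simple (non-Kerberos) authentication.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical helper: builds WebHDFS REST URLs, it does not send any request.
class WebHdfsUrls {
    // Prefix that the WebHDFS REST API places in front of every HDFS path.
    static final String PREFIX = "/webhdfs/v1";

    static String url(String host, int port, String hdfsPath, String op, String user) {
        try {
            // The multi-argument URI constructor quotes illegal characters in the path.
            URI uri = new URI("http", null, host, port, PREFIX + hdfsPath,
                    "op=" + op + "&user.name=" + user, null);
            return uri.toString();
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException(e);
        }
    }

    public static void main(String[] args) {
        // Request behind listStatus(new Path("/"))
        System.out.println(url("13.250.102.77", 50070, "/", "LISTSTATUS", "fayson"));
        // First request behind copyFromLocalFile(..., new Path("/fayson1"))
        System.out.println(url("13.250.102.77", 50070, "/fayson1", "CREATE", "fayson"));
    }
}
```

Printing the URLs this way makes it easy to replay the same requests with any HTTP tool from the client machine when debugging connectivity.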

Accessing HDFS via HttpFS

    package com.cloudera.hdfs.nonekerberos;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.hdfs.web.WebHdfsFileSystem;
    import org.apache.hadoop.security.UserGroupInformation;

    import java.net.URI;

    public class HttpFSDemo {
        public static void main(String[] args) {
            Configuration configuration = new Configuration();
            // Creates a UGI for user "fayson". The returned object is not used here,
            // so this call alone does not change the user the requests run as.
            UserGroupInformation.createRemoteUser("fayson");
            WebHdfsFileSystem webHdfsFileSystem = new WebHdfsFileSystem();
            try {
                // Connect to the HttpFS service port (14000) instead of the NameNode
                webHdfsFileSystem.initialize(new URI("http://52.221.198.3:14000"), configuration);
                System.out.println(webHdfsFileSystem.getUri());

                // Put a local file into HDFS
                webHdfsFileSystem.copyFromLocalFile(new Path("/Users/fayson/Desktop/run-kafka/"), new Path("/fayson1-httpfs"));

                // List everything under the HDFS root directory
                FileStatus[] fileStatuses = webHdfsFileSystem.listStatus(new Path("/"));
                for (FileStatus fileStatus : fileStatuses) {
                    System.out.println(fileStatus.getPath().getName());
                }

                webHdfsFileSystem.close();
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
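HttpFS exposes the same REST API as WebHDFS, which is why the only change from the WebHDFS example is the endpoint (port 14000). The `listStatus` loop corresponds to a `LISTSTATUS` request, whose JSON response carries one entry per child with its name in the `pathSuffix` field. The sketch below pulls those names out of a response body. It is only an illustration: the class `ListStatusNames` and the sample JSON are hypothetical, and the regular expression stands in for a proper JSON parser to keep the example free of dependencies.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: extracts child names from a LISTSTATUS response body.
class ListStatusNames {
    // Matches "pathSuffix":"<name>" and captures <name>.
    // Escaped quotes inside names are not handled; a real client should use a JSON parser.
    private static final Pattern PATH_SUFFIX =
            Pattern.compile("\"pathSuffix\"\\s*:\\s*\"([^\"]*)\"");

    static List<String> names(String json) {
        List<String> result = new ArrayList<>();
        Matcher m = PATH_SUFFIX.matcher(json);
        while (m.find()) {
            result.add(m.group(1));
        }
        return result;
    }

    public static void main(String[] args) {
        // Assumed, abbreviated response body; real responses carry more fields per entry.
        String json = "{\"FileStatuses\":{\"FileStatus\":["
                + "{\"pathSuffix\":\"fayson1-httpfs\"},"
                + "{\"pathSuffix\":\"tmp\"}]}}";
        for (String name : names(json)) {
            System.out.println(name);
        }
    }
}
```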

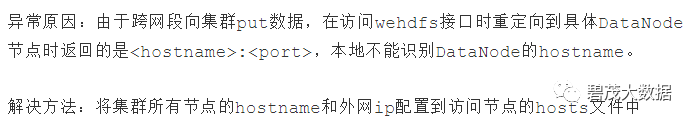

Exception when putting data into HDFS via WebHDFS

    log4j:WARN No appenders could be found for logger (org.apache.hadoop.util.Shell).
    log4j:WARN Please initialize the log4j system properly.
    log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.
    http://13.250.102.77:50070
    java.net.UnknownHostException: cdh04.fayson.com:50075: cdh04.fayson.com
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:408)
        at org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner.runWithRetry(WebHdfsFileSystem.java:693)
        at org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner.access$100(WebHdfsFileSystem.java:519)
        at org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner$1.run(WebHdfsFileSystem.java:549)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:422)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1920)
        at org.apache.hadoop.hdfs.web.WebHdfsFileSystem$AbstractRunner.run(WebHdfsFileSystem.java:545)
        at org.apache.hadoop.hdfs.web.WebHdfsFileSystem.create(WebHdfsFileSystem.java:1252)
        at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:925)
        at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:906)
        at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:803)
        at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:368)
        at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:359)
        at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:341)
        at org.apache.hadoop.fs.FileSystem.copyFromLocalFile(FileSystem.java:2057)
        at org.apache.hadoop.fs.FileSystem.copyFromLocalFile(FileSystem.java:2025)
        at org.apache.hadoop.fs.FileSystem.copyFromLocalFile(FileSystem.java:1990)
        at com.cloudera.hdfs.nonekerberos.WebHDFSTest.main(WebHDFSTest.java:31)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:483)
        at com.intellij.rt.execution.application.AppMain.main(AppMain.java:147)
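The exception names `cdh04.fayson.com:50075`, not the `13.250.102.77:50070` address the client connected to. Port 50075 is the default DataNode HTTP port in Hadoop 2.x, so the trace is consistent with the way WebHDFS writes work: the NameNode answers the `CREATE` request with a redirect to a DataNode, and the client must then resolve and reach that host itself. With HttpFS the gateway talks to the DataNodes on the client's behalf, so the client only needs to reach the gateway. The sketch below shows the check a client effectively faces. The class `RedirectCheck` and the `Location` values are hypothetical; only the host names and ports are taken from the examples in this article.

```java
import java.net.URI;

// Hypothetical helper: inspects the Location header of a WebHDFS/HttpFS redirect.
class RedirectCheck {
    // Returns the "host:port" the client has to contact next.
    static String redirectTarget(String location) {
        URI uri = URI.create(location);
        return uri.getHost() + ":" + uri.getPort();
    }

    // True when the redirect points away from the host the client originally contacted,
    // meaning the client needs name resolution and a network route to a second machine.
    static boolean leavesGateway(String gatewayHost, String location) {
        return !gatewayHost.equalsIgnoreCase(URI.create(location).getHost());
    }

    public static void main(String[] args) {
        // Assumed redirect from the NameNode to a DataNode (WebHDFS case).
        String webHdfs = "http://cdh04.fayson.com:50075/webhdfs/v1/fayson1?op=CREATE&user.name=fayson";
        // Assumed redirect that stays on the HttpFS gateway (HttpFS case).
        String httpFs = "http://52.221.198.3:14000/webhdfs/v1/fayson1-httpfs?op=CREATE&data=true";

        System.out.println(redirectTarget(webHdfs));                  // cdh04.fayson.com:50075
        System.out.println(leavesGateway("13.250.102.77", webHdfs));  // true
        System.out.println(leavesGateway("52.221.198.3", httpFs));    // false
    }
}
```

If the client must use WebHDFS directly, every DataNode host name returned in such redirects has to be resolvable and reachable from the client's network.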

This article is reposted from 碧茂大数据.