| Author | Date | QQ Tech Discussion Group |
|---|---|---|
| perrynzhou@gmail.com | 2022/11/13 | 672152841 |

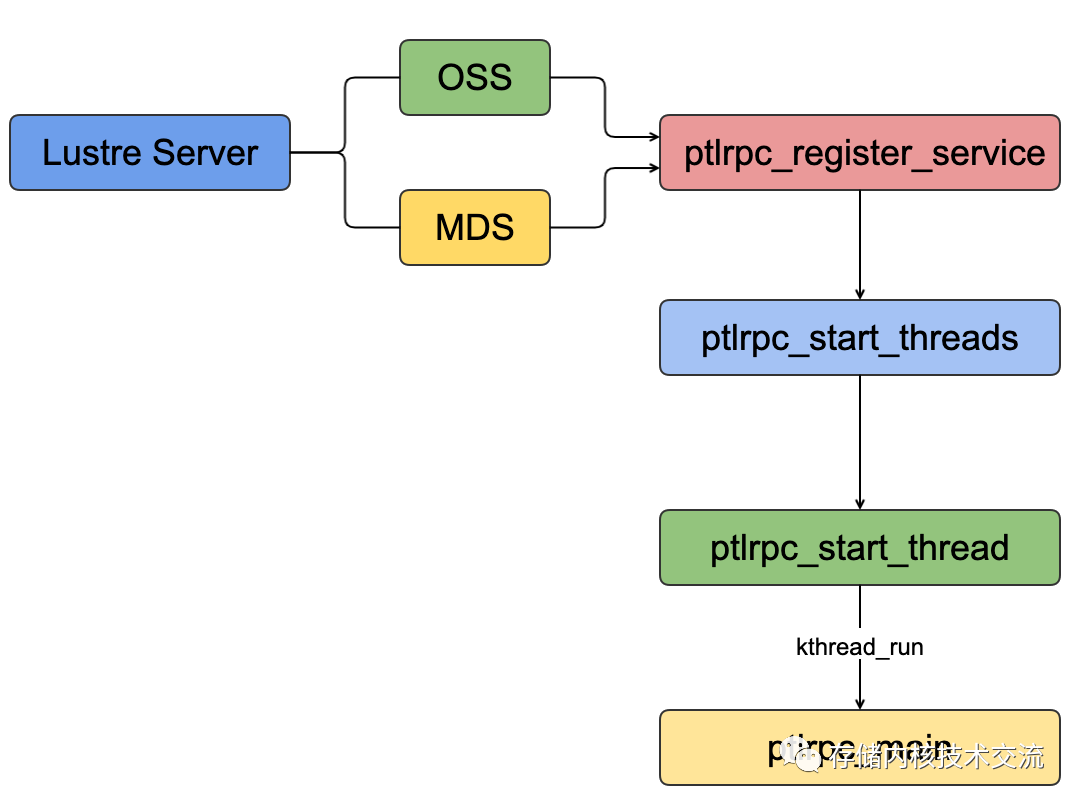

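OSS IO Service Thread Parameters

OSS IO threads are set up starting from ost_init, which registers the OSS obd type. The thread counts themselves are configured in ost_setup, the type's o_setup hook, which runs when an OSS device is set up.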

```c
static const struct obd_ops ost_obd_ops = {
	.o_owner        = THIS_MODULE,
	.o_setup        = ost_setup,   // configures the ost_io service and its threads
	.o_cleanup      = ost_cleanup,
	.o_health_check = ost_health_check,
};

static int __init ost_init(void)
{
	int rc;

	ENTRY;
	// register the OSS obd type; ost_setup runs later, when an OSS device is set up
	rc = class_register_type(&ost_obd_ops, NULL, false,
				 LUSTRE_OSS_NAME, NULL);
	RETURN(rc);
}
```
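The split between registration and setup can be modelled in user space. The sketch below is a hypothetical, simplified analogue (every demo_* name is invented for illustration and is not a Lustre API): registering a type only records its ops table, and the o_setup hook runs later, when a device of that type is configured.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical, simplified stand-ins for Lustre's obd_ops and type registry. */
struct demo_obd_ops {
	int (*o_setup)(const char *name);
	int (*o_cleanup)(const char *name);
};

struct demo_type {
	const char *name;
	const struct demo_obd_ops *ops;
};

#define DEMO_MAX_TYPES 8
static struct demo_type demo_types[DEMO_MAX_TYPES];
static int demo_ntypes;

/* Registration only records the ops table; nothing is started yet. */
static int demo_register_type(const struct demo_obd_ops *ops, const char *name)
{
	assert(ops != NULL && ops->o_setup != NULL);
	if (demo_ntypes == DEMO_MAX_TYPES)
		return -1;
	demo_types[demo_ntypes].name = name;
	demo_types[demo_ntypes].ops = ops;
	demo_ntypes++;
	return 0;
}

/* Configuring a device later dispatches to the type's o_setup hook. */
static int demo_setup_device(const char *type_name)
{
	for (int i = 0; i < demo_ntypes; i++)
		if (strcmp(demo_types[i].name, type_name) == 0)
			return demo_types[i].ops->o_setup(type_name);
	return -1; /* unknown type */
}

/* Counts how often the OST-like setup hook ran. */
static int demo_setup_calls;

static int demo_ost_setup(const char *name)
{
	(void)name;
	demo_setup_calls++; /* in Lustre, ost_setup() configures the ost_io threads here */
	return 0;
}

static int demo_ost_cleanup(const char *name)
{
	(void)name;
	return 0;
}

static const struct demo_obd_ops demo_ost_ops = {
	.o_setup = demo_ost_setup,
	.o_cleanup = demo_ost_cleanup,
};
```

Calling demo_register_type(&demo_ost_ops, "ost") alone runs no setup; only the later demo_setup_device("ost") invokes demo_ost_setup, mirroring why the thread configuration lives in ost_setup rather than in ost_init.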

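In Lustre 2.15, the default maximum number of OSS service threads, oss_max_threads, is 512, and the minimum is OSS_NTHRS_INIT (3). These defaults are hard-coded; oss_max_threads and oss_num_threads can only be overridden as module parameters at load time (their sysfs permissions are 0444, i.e. read-only). The core job of these threads is to handle IO requests arriving over the network.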

```c
// maximum number of OSS service threads
int oss_max_threads = 512;
// exposed as a read-only (0444) module parameter
module_param(oss_max_threads, int, 0444);
MODULE_PARM_DESC(oss_max_threads, "maximum number of OSS service threads");

// number of OSS service threads to start (0 if not set)
static int oss_num_threads;
module_param(oss_num_threads, int, 0444);
MODULE_PARM_DESC(oss_num_threads, "number of OSS service threads to start");
```
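To make the two bounds concrete, here is a minimal sketch that assumes a requested thread count is simply clamped into [OSS_NTHRS_INIT, oss_max_threads]. Lustre's real computation also involves tc_nthrs_base, tc_thr_factor and CPU partitions, so this is only a model; demo_clamp_nthreads and the DEMO_* constants are hypothetical names.

```c
#include <assert.h>

/* Local copies of the values quoted above for Lustre 2.15 (hypothetical names). */
#define DEMO_OSS_NTHRS_INIT  3
#define DEMO_OSS_MAX_THREADS 512

/*
 * Simplified model, not Lustre's algorithm: a request below the minimum
 * (including 0, i.e. "not set") is raised to the minimum, and a request
 * above the maximum is capped at the maximum.
 */
static int demo_clamp_nthreads(int requested, int min, int max)
{
	assert(min <= max);
	if (requested < min)
		return min;
	if (requested > max)
		return max;
	return requested;
}
```

In this model, demo_clamp_nthreads(1000, DEMO_OSS_NTHRS_INIT, DEMO_OSS_MAX_THREADS) yields 512, and an unset request of 0 yields 3.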

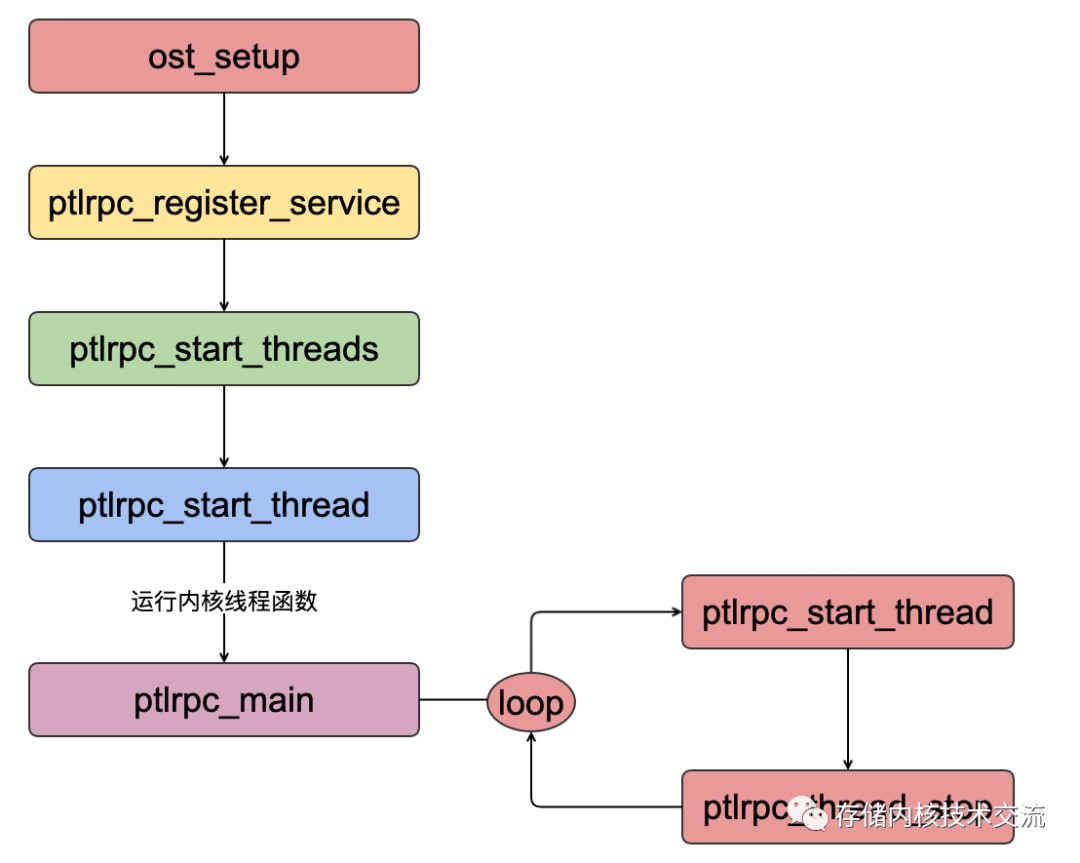

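OSS IO threads are created and destroyed dynamically in response to incoming network requests. During initialization, ptlrpc_start_thread creates kernel threads that run ptlrpc_main. Inside ptlrpc_main, each thread handles requests, starts an additional ost IO thread when the existing ones are not enough, and stops itself when the pool is above its limit.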

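```c
// ost_setup(): configure and register the ost_io service
static int ost_setup(struct obd_device *obd, struct lustre_cfg *lcfg)
{
	// ... (declarations, including ost, omitted)
	// configuration of the ost_io service
	static struct ptlrpc_service_conf svc_conf;

	svc_conf = (typeof(svc_conf)) {
		.psc_name = "ost_io",
		.psc_watchdog_factor = OSS_SERVICE_WATCHDOG_FACTOR,
		.psc_buf = {
			.bc_nbufs = OST_NBUFS,
			.bc_buf_size = OST_IO_BUFSIZE,
			.bc_req_max_size = OST_IO_MAXREQSIZE,
			.bc_rep_max_size = OST_IO_MAXREPSIZE,
			.bc_req_portal = OST_IO_PORTAL,
			.bc_rep_portal = OSC_REPLY_PORTAL,
		},
		.psc_thr = {
			.tc_thr_name = "ll_ost_io",
			.tc_thr_factor = OSS_THR_FACTOR,
			.tc_nthrs_init = OSS_NTHRS_INIT,  // minimum / initial threads (3)
			.tc_nthrs_base = OSS_NTHRS_BASE,
			.tc_nthrs_max = oss_max_threads,  // module parameter, default 512
			.tc_nthrs_user = oss_num_threads, // module parameter, 0 if not set
			.tc_cpu_bind = oss_cpu_bind,
			.tc_ctx_tags = LCT_DT_THREAD,
		},
		// remaining fields omitted
	};

	// register the ost_io service; this also starts its first threads
	ost->ost_io_service = ptlrpc_register_service(&svc_conf, &obd->obd_kset,
						      obd->obd_debugfs_entry);
	// ...
}

/*
 * Simplified call chain:
 *   ptlrpc_register_service()
 *     -> ptlrpc_start_threads()
 *          -> ptlrpc_start_thread()   // spawns a kernel thread running ptlrpc_main()
 */

// ptlrpc_main() runs in each service kernel thread (simplified excerpt)
static int ptlrpc_main(void *arg)
{
	// ...
	// service loop: grow or shrink the thread pool on demand
	while (!ptlrpc_thread_stopping(thread)) {
		// not enough threads and still below the limit: start one more
		if (ptlrpc_threads_need_create(svcpt))
			ptlrpc_start_thread(svcpt, 0);

		// ... handle requests ...

		// this thread is above the (possibly lowered) limit: stop it
		if (unlikely(ptlrpc_thread_should_stop(thread)))
			ptlrpc_thread_stop(thread);
	}
	// ...
}
```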
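The self-growing loop can be simulated single-threaded. In this hypothetical model (struct demo_svcpt and the demo_* functions are illustrative, not Lustre code), the "enough" predicate copies the condition of ptlrpc_threads_enough(), and, as a simplifying assumption, a newly started thread becomes running immediately, so the limit check only counts running threads.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical user-space model of one service partition (not Lustre's struct). */
struct demo_svcpt {
	int nreqs_active;    /* requests currently being handled */
	int nthrs_running;   /* threads that are running */
	int nthrs_limit;     /* per-partition upper bound on threads */
	bool has_hp_handler; /* service registered a high-priority handler */
};

/* Same condition as ptlrpc_threads_enough(). */
static bool demo_threads_enough(const struct demo_svcpt *s)
{
	return s->nreqs_active < s->nthrs_running - 1 - (s->has_hp_handler ? 1 : 0);
}

/* Simplified ptlrpc_threads_increasable(): starting threads are assumed to
 * become running instantly, so only running ones are counted. */
static bool demo_threads_increasable(const struct demo_svcpt *s)
{
	return s->nthrs_running < s->nthrs_limit;
}

/*
 * Model of the check in ptlrpc_main(): each time a thread wakes up it starts
 * at most one more thread if more are needed. Waking threads repeatedly until
 * none are needed gives the final pool size. Returns the threads created.
 */
static int demo_main_loop(struct demo_svcpt *s)
{
	int created = 0;

	while (!demo_threads_enough(s) && demo_threads_increasable(s)) {
		s->nthrs_running++; /* ptlrpc_start_thread(svcpt, 0) in the real code */
		created++;
		assert(s->nthrs_running <= s->nthrs_limit);
	}
	return created;
}
```

With 3 active requests, 2 running threads and a limit of 8, the pool grows to 5 threads, the smallest count for which 3 < running - 1 holds; with 10 active requests it stops at the limit of 8.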

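OSS threads can be inspected and tuned at runtime with lctl {get,set}_param {service}.threads_{min,max,started}, for example lctl get_param ost.OSS.ost_io.threads_max. threads_started is read-only and reports how many threads are running. The per-CPU-partition thread counters behind these values are kept in struct ptlrpc_service_part, while the per-partition upper limit (srv_nthrs_cpt_limit) belongs to the owning struct ptlrpc_service.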

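```c
struct ptlrpc_service_part {
	/** back reference to the owning service */
	struct ptlrpc_service *scp_service;
	/** always-increasing id handed to the next new thread */
	int scp_thr_nextid;
	/** number of threads being started */
	int scp_nthrs_starting;
	/** number of running threads */
	int scp_nthrs_running;
	/** number of requests being handled */
	int scp_nreqs_active;
	// ... other fields omitted
};
```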
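The relation between the lctl-visible values and the per-partition counters can be sketched as follows. This is a hypothetical model under two stated assumptions: threads_started is the sum of running threads over all CPU partitions, and a global threads_max is split evenly into a per-partition limit (the role srv_nthrs_cpt_limit plays in this article's helper functions). All demo_* names and DEMO_NCPTS are invented.

```c
#include <assert.h>

#define DEMO_NCPTS 4 /* hypothetical number of CPU partitions */

/* Hypothetical per-partition counters, mirroring scp_nthrs_{starting,running}. */
struct demo_part {
	int nthrs_starting;
	int nthrs_running;
};

/* Hypothetical service holding the per-partition limit. */
struct demo_service {
	int ncpts;
	int nthrs_cpt_limit;
	struct demo_part parts[DEMO_NCPTS];
};

/* threads_started: assumed to be the sum of running threads over partitions. */
static int demo_threads_started(const struct demo_service *svc)
{
	int total = 0;

	for (int i = 0; i < svc->ncpts; i++)
		total += svc->parts[i].nthrs_running;
	return total;
}

/* threads_max (write): assumed to be split evenly into a per-partition limit. */
static void demo_set_threads_max(struct demo_service *svc, int threads_max)
{
	assert(svc->ncpts > 0 && svc->ncpts <= DEMO_NCPTS);
	svc->nthrs_cpt_limit = threads_max / svc->ncpts;
}

/* threads_max (read): the per-partition limit scaled back up. */
static int demo_get_threads_max(const struct demo_service *svc)
{
	return svc->nthrs_cpt_limit * svc->ncpts;
}
```

Note the integer division: in this model, setting threads_max to 10 across 4 partitions reads back as 8.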

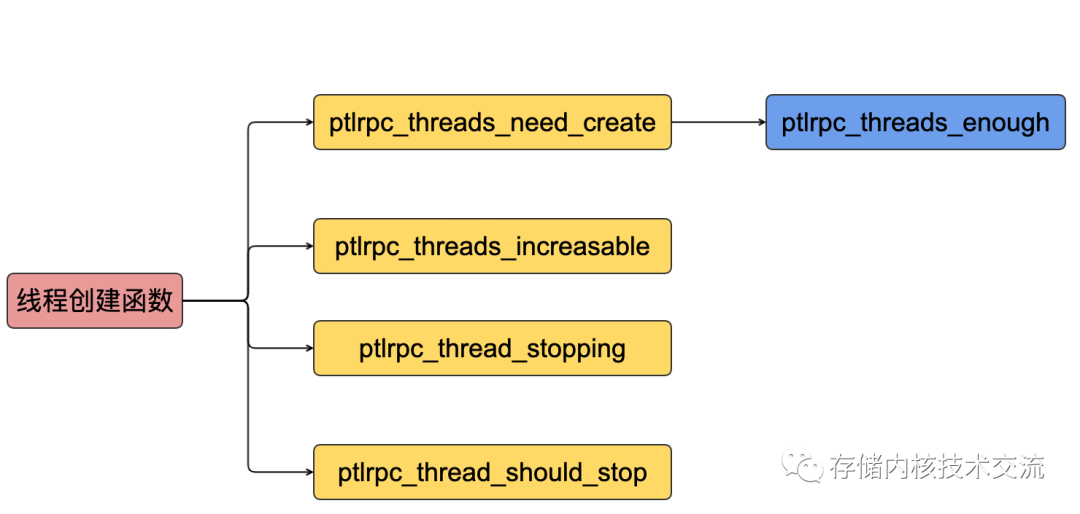

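```c
// Are there already enough threads? True while the number of active requests
// is below (running threads - 1), minus one more if the service has a
// high-priority request handler (so_hpreq_handler).
static inline int ptlrpc_threads_enough(struct ptlrpc_service_part *svcpt)
{
	return svcpt->scp_nreqs_active <
	       svcpt->scp_nthrs_running - 1 -
	       (svcpt->scp_service->srv_ops.so_hpreq_handler != NULL);
}

// Can another thread be added? Running plus starting threads must stay
// below the per-partition limit srv_nthrs_cpt_limit.
static inline int ptlrpc_threads_increasable(struct ptlrpc_service_part *svcpt)
{
	return svcpt->scp_nthrs_running +
	       svcpt->scp_nthrs_starting <
	       svcpt->scp_service->srv_nthrs_cpt_limit;
}

// Create a new thread when there are not enough threads and more are allowed.
static inline int ptlrpc_threads_need_create(struct ptlrpc_service_part *svcpt)
{
	return !ptlrpc_threads_enough(svcpt) &&
	       ptlrpc_threads_increasable(svcpt);
}

// Is this thread, or its whole service, stopping?
static inline int ptlrpc_thread_stopping(struct ptlrpc_thread *thread)
{
	return thread_is_stopping(thread) ||
	       thread->t_svcpt->scp_service->srv_is_stopping;
}

// With too many threads, should this one stop? Only a thread whose id is at
// or above the limit AND that is the most recently created one
// (id == scp_thr_nextid - 1) stops, so threads exit from the top down.
static inline bool ptlrpc_thread_should_stop(struct ptlrpc_thread *thread)
{
	struct ptlrpc_service_part *svcpt = thread->t_svcpt;

	return thread->t_id >= svcpt->scp_service->srv_nthrs_cpt_limit &&
	       thread->t_id == svcpt->scp_thr_nextid - 1;
}
```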
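Shrinking can be traced the same way. The sketch below reuses the exact condition of ptlrpc_thread_should_stop(); the demo_* names are hypothetical, and it assumes, for illustration, that a stopping thread decrements the partition's next-id counter (scp_thr_nextid), so the next-highest thread becomes eligible.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical model: live thread ids are 0..thr_nextid-1 (cf. t_id, scp_thr_nextid). */
struct demo_pool {
	int thr_nextid; /* id the next new thread would get */
	int cpt_limit;  /* per-partition limit (cf. srv_nthrs_cpt_limit) */
};

/* Same condition as ptlrpc_thread_should_stop(). */
static bool demo_should_stop(const struct demo_pool *p, int t_id)
{
	assert(t_id >= 0 && t_id < p->thr_nextid); /* must be a live thread */
	return t_id >= p->cpt_limit && t_id == p->thr_nextid - 1;
}

/*
 * Wake each live thread once, highest id first. A thread that should stop
 * exits, which (by assumption) decrements thr_nextid so that the
 * next-highest thread becomes eligible. Returns the number stopped.
 */
static int demo_shrink(struct demo_pool *p)
{
	int stopped = 0;

	for (int id = p->thr_nextid - 1; id >= 0; id--) {
		if (demo_should_stop(p, id)) {
			p->thr_nextid--;
			stopped++;
		}
	}
	return stopped;
}
```

With 10 threads (ids 0 to 9) and the limit lowered to 6, threads 9, 8, 7 and 6 stop in that order. Thread 7 cannot stop while 8 and 9 are still alive, which keeps the remaining ids contiguous.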

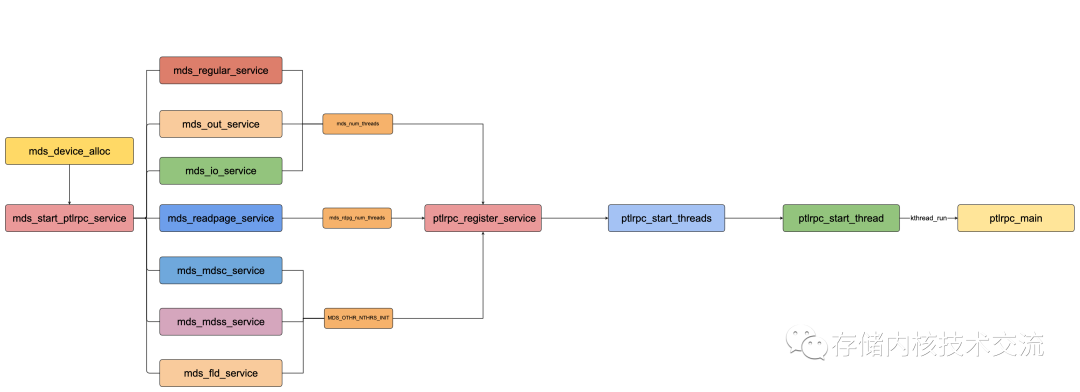

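MDS IO Service Thread Parameters

On the MDS, the metadata IO threads are initialized in mds_start_ptlrpc_service, which reads the mds_num_threads parameter. Most of the built-in MDS services take their number of running threads from mds_num_threads, while the upper limit for the IO service comes from mds_max_io_threads (default 512).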

```c
// number of threads to start for the built-in MDS services
static unsigned long mds_num_threads;
module_param(mds_num_threads, ulong, 0444);
MODULE_PARM_DESC(mds_num_threads, "number of MDS service threads to start");

// maximum number of MDS IO service threads
int mds_max_io_threads = 512;
module_param(mds_max_io_threads, int, 0444);
MODULE_PARM_DESC(mds_max_io_threads,
		 "maximum number of MDS IO service threads");
```

```c
static int mds_start_ptlrpc_service(struct mds_device *m)
{
	// ... (declarations, including obd, omitted)
	static struct ptlrpc_service_conf conf;

	conf = (typeof(conf)) {
		.psc_name = LUSTRE_MDT_NAME "_io",
		.psc_watchdog_factor = MDT_SERVICE_WATCHDOG_FACTOR,
		.psc_buf = {
			.bc_nbufs = OST_NBUFS,
			.bc_buf_size = OST_IO_BUFSIZE,
			.bc_req_max_size = OST_IO_MAXREQSIZE,
			.bc_rep_max_size = OST_IO_MAXREPSIZE,
			.bc_req_portal = MDS_IO_PORTAL,
			.bc_rep_portal = MDC_REPLY_PORTAL,
		},
		.psc_thr = {
			.tc_thr_name = LUSTRE_MDT_NAME "_io",
			.tc_thr_factor = OSS_THR_FACTOR,
			.tc_nthrs_init = OSS_NTHRS_INIT,
			.tc_nthrs_base = OSS_NTHRS_BASE,
			// maximum number of metadata IO service threads
			.tc_nthrs_max = mds_max_io_threads,
			// thread count requested for this service (mds_num_threads)
			.tc_nthrs_user = mds_num_threads,
			.tc_cpu_bind = mds_io_cpu_bind,
			.tc_ctx_tags = LCT_DT_THREAD | LCT_MD_THREAD,
		},
		.psc_cpt = {
			.cc_cptable = mdt_io_cptable,
			.cc_pattern = mdt_io_cptable == NULL ?
				      mds_io_num_cpts : NULL,
			.cc_affinity = true,
		},
		.psc_ops = {
			.so_thr_init = tgt_io_thread_init,
			.so_thr_done = tgt_io_thread_done,
			.so_req_handler = tgt_request_handle,
			.so_req_printer = target_print_req,
			.so_hpreq_handler = tgt_hpreq_handler,
		},
	};

	m->mds_io_service = ptlrpc_register_service(&conf, &obd->obd_kset,
						    obd->obd_debugfs_entry);
	// ... (error handling and return value omitted)
}
```

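On the MDS, threads_min, threads_max and threads_started are adjusted by the same mechanism as on the OSS: both services describe themselves with a struct ptlrpc_service_conf and pass it to ptlrpc_register_service, so the thread creation and shrinking logic is shared. This abstraction is elegantly implemented and well worth reading.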
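The essence of the abstraction is that each service only fills in a configuration struct, and one shared engine does the rest. Below is a hypothetical, heavily trimmed analogue (struct demo_service_conf, demo_register_service and the handlers are illustrative, not Lustre's API), using the values quoted in this article: 3 initial and 512 maximum threads for both ost_io and mdt_io.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, heavily trimmed analogue of struct ptlrpc_service_conf. */
struct demo_service_conf {
	const char *name;
	int nthrs_init;              /* threads started at registration */
	int nthrs_max;               /* upper bound on threads */
	int (*req_handler)(int req); /* service-specific request handler */
};

/* State kept by the shared, service-independent engine. */
struct demo_service {
	const struct demo_service_conf *conf;
	int nthrs_running;
};

/* One registration path for every service: it only reads the conf. */
static void demo_register_service(struct demo_service *svc,
				  const struct demo_service_conf *conf)
{
	assert(conf->req_handler != NULL && conf->nthrs_max > 0);
	svc->conf = conf;
	svc->nthrs_running = conf->nthrs_init < conf->nthrs_max ?
			     conf->nthrs_init : conf->nthrs_max;
}

/* The engine dispatches every request through the conf's handler. */
static int demo_handle(const struct demo_service *svc, int req)
{
	return svc->conf->req_handler(req);
}

/* Placeholder handlers standing in for service-specific work. */
static int demo_ost_io_handler(int req) { return req * 2; }
static int demo_mdt_io_handler(int req) { return req + 100; }

static const struct demo_service_conf demo_ost_io_conf = {
	.name = "ost_io", .nthrs_init = 3, .nthrs_max = 512,
	.req_handler = demo_ost_io_handler,
};

static const struct demo_service_conf demo_mdt_io_conf = {
	.name = "mdt_io", .nthrs_init = 3, .nthrs_max = 512,
	.req_handler = demo_mdt_io_handler,
};
```

Adding a new service in this model means writing one more conf and handler; demo_register_service and demo_handle stay untouched, which is the property that lets OSS and MDS share their thread tuning.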

This article was reposted from 存储内核技术交流 (Storage Kernel Technology Exchange).