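There are already plenty of articles online about training YOLOv5 on your own dataset. I had spent some time exploring data annotation before, but kept putting off the training itself because I did not feel familiar with the underlying principles and my understanding was only superficial. In the end I spent a whole day working through it in practice, since this is the foundation.

Anything one writes inevitably borrows content and code from others; there is no point in reinventing the wheel. Everything mentioned in this article, however, is something I ran into and solved myself. Everyone hits different, sometimes bizarre, problems: fixing them yourself, finding a solution online, or finding a way around them are all valid ways to solve them.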

一、安装和配置环境

1、第一步新建了一个pytorch38的工程

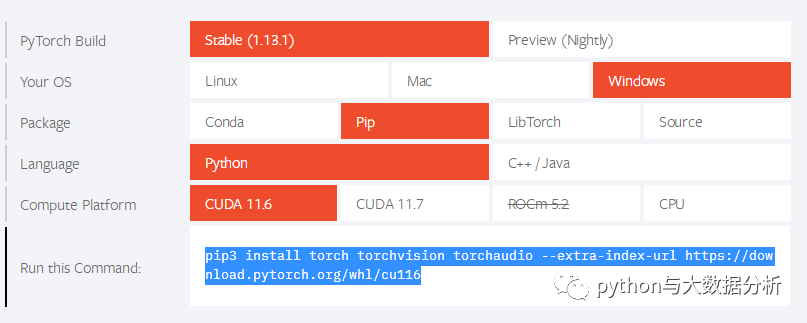

2、登陆pytorch网站,选择合适自己的pytorch安装包

3、下载yolov5,到https://github.com/ultralytics/yolov5,github有,但时灵时不灵,我是在gitee上下载的yolov5 6.0版本,整个yolov5作为一个目录放到了pytorch38目录下。

4、安装所需package,pip install requirement.txt

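II. Choosing and downloading a dataset

1. Write your own crawler to collect images; these are, of course, unlabeled.

2. Label the collected images yourself with labelImg.

3. Download a dataset from one of the many sharing platforms, Kaggle being the best known.

These platforms offer both raw images and labeled images. To save effort, I downloaded the Face Mask Detection dataset from Kaggle:

https://www.kaggle.com/datasets/andrewmvd/face-mask-detection

The dataset targets recognizing whether people wear face masks. It contains 853 images belonging to 3 classes, with bounding boxes in PASCAL VOC format. The three classes are with_mask, without_mask and mask_weared_incorrect.

After unzipping there are two directories, annotations and images: annotations holds the XML label files and images holds the 853 original images.

A sample XML annotation: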

```xml
<annotation>
    <folder>images</folder>
    <filename>maksssksksss470.png</filename>
    <size>
        <width>400</width>
        <height>200</height>
        <depth>3</depth>
    </size>
    <segmented>0</segmented>
    <object>
        <name>with_mask</name>
        <pose>Unspecified</pose>
        <truncated>0</truncated>
        <occluded>0</occluded>
        <difficult>0</difficult>
        <bndbox>
            <xmin>52</xmin>
            <ymin>28</ymin>
            <xmax>100</xmax>
            <ymax>75</ymax>
        </bndbox>
    </object>
    <object>
    </object>
</annotation>
```
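Before converting anything, it can be worth checking how many objects each class actually has, for example to spot a heavily under-represented class. The sketch below does this with only the standard library; the function name count_classes is hypothetical, and the folder path in the usage comment assumes the layout used in this article.

```python
import os
import xml.etree.ElementTree as ET
from collections import Counter


def count_classes(xml_dir):
    """Count <object> instances per class name over all .xml files in xml_dir."""
    counts = Counter()
    for fname in sorted(os.listdir(xml_dir)):
        if not fname.lower().endswith('.xml'):
            continue  # ignore anything that is not an annotation file
        root = ET.parse(os.path.join(xml_dir, fname)).getroot()
        for obj in root.iter('object'):
            name = obj.find('name')
            if name is not None and name.text:  # skip empty <object> elements
                counts[name.text.strip()] += 1
    return counts


# Usage (path assumed to match this article's layout):
# for cls, n in count_classes('./DataSets/annotations').most_common():
#     print(cls, n)
```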

The DataSets directory is organized as follows:

```text
├─DataSets
│  ├─annotations
│  ├─data
│  ├─images
│  ├─ImageSets
│  │  └─Main
│  └─labels
```
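If you are setting this layout up from scratch, a small helper can create the empty folders. This is a hypothetical convenience function, not part of YOLOv5; the folder names are taken from the tree above. The split and conversion scripts also create ImageSets/Main and labels on their own, so this step is optional.

```python
import os

# Folder names taken from the DataSets tree; ImageSets/Main is nested
SUBDIRS = ['annotations', 'data', 'images', os.path.join('ImageSets', 'Main'), 'labels']


def make_dataset_dirs(root='./DataSets'):
    """Create the DataSets folder tree under root and return the created paths.

    exist_ok=True leaves folders that already exist (e.g. unzipped images) untouched.
    """
    paths = []
    for sub in SUBDIRS:
        path = os.path.join(root, sub)
        os.makedirs(path, exist_ok=True)
        paths.append(path)
    return paths
```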

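The code below first splits the data 9:1 into a train+val set and a test set, then splits the train+val set 9:1 again into a training set and a validation set.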

```python
# coding:utf-8
# Split the dataset into train / val / test lists
import os
import random
import argparse

parser = argparse.ArgumentParser()
# Folder of the XML annotation files; adjust to your data (usually Annotations)
parser.add_argument('--xml_path', default='./DataSets/annotations', type=str, help='xml path')
# Folder of the images; adjust to your data
parser.add_argument('--img_path', default='./DataSets/images', type=str, help='img path')
# Where the split txt files are written; use ImageSets/Main under your data folder
parser.add_argument('--txt_path', default='./DataSets/ImageSets/Main', type=str, help='output txt label path')
opt = parser.parse_args()

trainval_percent = 0.9  # share of all images that goes to train + val (the rest is test)
train_percent = 0.9     # share of train + val that goes to train (the rest is val)
imgfilepath = opt.img_path
txtsavepath = opt.txt_path
total_xml = os.listdir(imgfilepath)  # image file names; one split entry per image
if not os.path.exists(txtsavepath):
    os.makedirs(txtsavepath)

num = len(total_xml)
list_index = range(num)
tv = int(num * trainval_percent)
tr = int(tv * train_percent)
trainval = random.sample(list_index, tv)  # train + val: 767 images
train = random.sample(trainval, tr)       # train: 690 images

# Output files of the split
file_trainval = open(txtsavepath + '/trainval.txt', 'w')
file_test = open(txtsavepath + '/test.txt', 'w')
file_train = open(txtsavepath + '/train.txt', 'w')
file_val = open(txtsavepath + '/val.txt', 'w')

# Write each file name (without extension) into train+val, train, val or test
for i in list_index:
    name = os.path.splitext(total_xml[i])[0] + '\n'
    if i in trainval:
        file_trainval.write(name)
        if i in train:
            file_train.write(name)
        else:
            file_val.write(name)
    else:
        file_test.write(name)

file_trainval.close()
file_train.close()
file_val.close()
file_test.close()
```

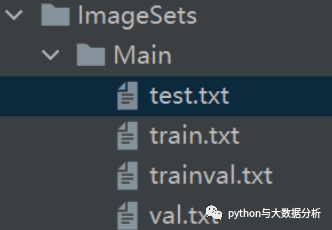

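When it finishes, ImageSets/Main contains four files (trainval.txt, train.txt, val.txt and test.txt), each listing image file names without the extension.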
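The sizes produced by the split can be checked by hand: 853 × 0.9 = 767.7, truncated to 767 train+val images; 767 × 0.9 = 690.3, truncated to 690 training images; that leaves 77 validation and 86 test images. The 690 and 77 match what train.py later reports when scanning the train and val caches. The small function below (split_counts is a hypothetical name) reproduces the same int() truncation.

```python
def split_counts(num, trainval_percent=0.9, train_percent=0.9):
    """Return (trainval, train, val, test) sizes using the split script's int() truncation."""
    tv = int(num * trainval_percent)
    tr = int(tv * train_percent)
    return tv, tr, tv - tr, num - tv


print(split_counts(853))  # (767, 690, 77, 86)
```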

2. Convert the XML annotations to YOLO-format labels

YOLOv5 expects label files with normalized coordinates, so the XML annotations have to be converted. The conversion code:
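For an image of width $W$ and height $H$ with a VOC box $(x_{\min}, y_{\min}, x_{\max}, y_{\max})$, the convert() function in the following code computes the normalized center and size:

$$
x = \frac{x_{\min} + x_{\max}}{2W}, \qquad
y = \frac{y_{\min} + y_{\max}}{2H}, \qquad
w = \frac{x_{\max} - x_{\min}}{W}, \qquad
h = \frac{y_{\max} - y_{\min}}{H}
$$

With the sample annotation above ($W = 400$, $H = 200$, box 52, 28, 100, 75), this gives $x = 152/800 = 0.19$, $y = 103/400 = 0.2575$, $w = 48/400 = 0.12$ and $h = 47/200 = 0.235$, so the label line is class 0 (with_mask) followed by these four values, up to floating-point rounding in the printed digits.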

```python
import os
import xml.etree.ElementTree as ET

# Converts the VOC XML annotations to YOLO txt labels and writes the image lists
sets = ['train', 'val', 'test']  # one image-list txt file is generated per subset
classes = ['with_mask', 'without_mask', 'mask_weared_incorrect']  # class names; must match the XML exactly


def convert(size, box):
    # size = (image width, image height); box = (xmin, xmax, ymin, ymax)
    # YOLO format x, y, w, h:
    #   x: box center x / image width
    #   y: box center y / image height
    #   w: box width / image width
    #   h: box height / image height
    dw = 1. / size[0]
    dh = 1. / size[1]
    x = (box[0] + box[1]) / 2.0
    y = (box[2] + box[3]) / 2.0
    w = box[1] - box[0]
    h = box[3] - box[2]
    return (x * dw, y * dh, w * dw, h * dh)


def convert_annotation(image_id):
    with open('./DataSets/annotations/%s.xml' % image_id, 'r', encoding='UTF-8') as in_file:
        root = ET.parse(in_file).getroot()
    # Image size, needed for normalization, e.g.
    # <size><width>400</width><height>278</height><depth>3</depth></size>
    size = root.find('size')
    w = int(size.find('width').text)
    h = int(size.find('height').text)
    with open('./DataSets/labels/%s.txt' % image_id, 'w') as out_file:
        for obj in root.iter('object'):
            # Class name and box of each object, e.g.
            # <name>with_mask</name> ... <difficult>0</difficult>
            # <bndbox><xmin>49</xmin><ymin>11</ymin><xmax>55</xmax><ymax>17</ymax></bndbox>
            difficult = obj.find('difficult').text
            cls = obj.find('name').text
            if cls not in classes or int(difficult) == 1:
                continue  # skip unknown classes and objects marked difficult
            cls_id = classes.index(cls)
            xmlbox = obj.find('bndbox')
            box = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text),
                   float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text))
            bb = convert((w, h), box)
            out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n')


if not os.path.exists('./DataSets/labels/'):  # create the labels folder
    os.makedirs('./DataSets/labels/')

for image_set in sets:
    with open('./DataSets/ImageSets/Main/%s.txt' % image_set) as f:
        image_ids = f.read().strip().split()
    with open('./DataSets/%s.txt' % image_set, 'w') as list_file:
        for image_id in image_ids:
            # Absolute image paths are the safest choice here
            list_file.write('D:/JetBrains/PycharmProjects/pytorch38/DataSets/images/%s.png\n' % image_id)
            convert_annotation(image_id)
```

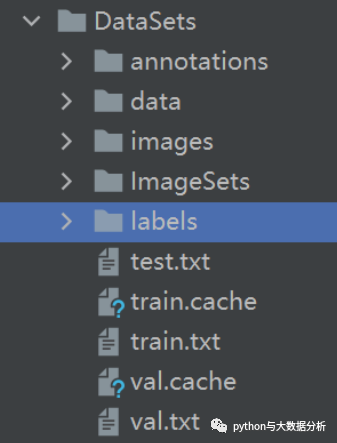

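After it runs, the DataSets root contains a new labels directory plus three files: test.txt, train.txt and val.txt.

These files list the full image paths of the test, training and validation sets respectively, for use in later steps. For example: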

```text
D:/JetBrains/PycharmProjects/pytorch38/DataSets/images/maksssksksss107.png
D:/JetBrains/PycharmProjects/pytorch38/DataSets/images/maksssksksss13.png
D:/JetBrains/PycharmProjects/pytorch38/DataSets/images/maksssksksss143.png
```
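Before training, it can be worth checking that every path in these lists exists and has a matching label file. As far as I know, YOLOv5 finds each label by replacing the images directory in the image path with labels and the extension with .txt, which is why labels/ sits next to images/. The helper below (check_image_list is a hypothetical name) mirrors that assumption with an explicit labels_dir argument.

```python
import os


def check_image_list(list_txt, labels_dir):
    """Return (missing_images, missing_labels) for the image paths listed in list_txt.

    Assumes each label is labels_dir/<image stem>.txt, as written by the conversion script.
    """
    missing_images, missing_labels = [], []
    with open(list_txt, encoding='utf-8') as f:
        for line in f:
            path = line.strip()
            if not path:
                continue  # tolerate blank lines
            if not os.path.isfile(path):
                missing_images.append(path)
            stem = os.path.splitext(os.path.basename(path))[0]
            if not os.path.isfile(os.path.join(labels_dir, stem + '.txt')):
                missing_labels.append(path)
    return missing_images, missing_labels


# Usage (paths assumed):
# print(check_image_list('./DataSets/train.txt', './DataSets/labels'))
```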

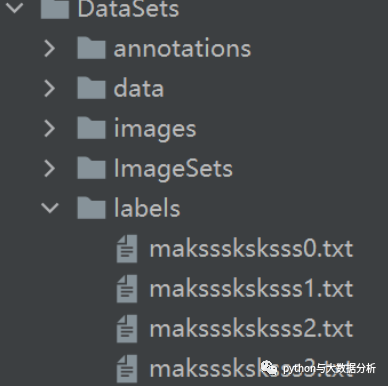

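The labels folder now holds one YOLOv5-format label file for each original XML file.

Each line of a YOLOv5 label file contains the class index followed by the converted box coordinates (x_center y_center width height):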

```text
0 0.34875 0.28195488721804507 0.1075 0.15789473684210525
1 0.95625 0.18045112781954886 0.0925 0.13533834586466165
1 0.52625 0.4699248120300752 0.0925 0.14285714285714285
0 0.675 0.4398496240601504 0.105 0.16541353383458646
```
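A quick sanity check is to convert a label line back to pixel coordinates and compare it with the XML; all four normalized values should also lie between 0 and 1. The inverse of convert() is sketched below. yolo_to_voc and parse_label_line are hypothetical helper names, and the image size has to come from the XML (or the image itself) because the label file does not store it.

```python
def yolo_to_voc(size, yolo_box):
    """Convert a normalized (x, y, w, h) YOLO box back to pixel (xmin, ymin, xmax, ymax)."""
    img_w, img_h = size
    x, y, w, h = yolo_box
    xmin = (x - w / 2) * img_w
    xmax = (x + w / 2) * img_w
    ymin = (y - h / 2) * img_h
    ymax = (y + h / 2) * img_h
    return xmin, ymin, xmax, ymax


def parse_label_line(line):
    """Split one label line into (class_id, (x, y, w, h))."""
    parts = line.split()
    return int(parts[0]), tuple(float(v) for v in parts[1:5])


# The sample XML box (52, 28, 100, 75) in a 400x200 image, round-tripped (approximately):
print(yolo_to_voc((400, 200), (0.19, 0.2575, 0.12, 0.235)))
```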

3. Download the pretrained weights

The official YOLOv5 code provides four detection model variants: YOLOv5s, YOLOv5m, YOLOv5l and YOLOv5x.

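The models folder of yolov5 contains yolov5s.yaml, yolov5m.yaml, yolov5l.yaml and yolov5x.yaml, the network definitions of the four variants. They differ mainly in network depth and width, which determine model size and accuracy: depth_multiple scales the depth (how many times blocks are repeated) and width_multiple scales the width (the number of channels).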
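To see what the two multipliers do, the sketch below reproduces, as I understand it, the scaling rule in parse_model() in models/yolo.py: a block's repeat count n becomes max(round(n × depth_multiple), 1) when n > 1, and its output channels are multiplied by width_multiple and rounded up to a multiple of 8. scale_layer is a hypothetical name, and the m/l/x multiplier values are assumptions taken from the official yaml files.

```python
import math

# (depth_multiple, width_multiple) per variant; m/l/x values assumed from the official yaml files
MULTIPLES = {'yolov5s': (0.33, 0.50), 'yolov5m': (0.67, 0.75),
             'yolov5l': (1.0, 1.0), 'yolov5x': (1.33, 1.25)}


def make_divisible(x, divisor=8):
    # Round a channel count up to the next multiple of divisor
    return math.ceil(x / divisor) * divisor


def scale_layer(number, channels, depth_multiple, width_multiple):
    """Scale a yaml entry's repeat count and output channels by the model multipliers."""
    n = max(round(number * depth_multiple), 1) if number > 1 else number
    c = make_divisible(channels * width_multiple, 8)
    return n, c


gd, gw = MULTIPLES['yolov5s']
print(scale_layer(9, 256, gd, gw))  # (3, 128)
print(scale_layer(3, 128, gd, gw))  # (1, 64)
```

With yolov5s, the backbone entry [-1, 9, C3, [256]] becomes 3 repeats with 128 channels, which matches the `4 -1 3 156928 models.common.C3 [128, 128, 3]` line in the training log further down.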

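YOLOv5 also provides pretrained weights for each variant: yolov5s.pt, yolov5m.pt, yolov5l.pt and yolov5x.pt. Download the one you need and put it in a weights folder inside yolov5. The default and most widely used one is yolov5s; it is what train.py's --weights parameter points to.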
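If you prefer to fetch the weights from a script, the sketch below downloads them into the weights folder. The release URL pattern and the v6.0 tag are assumptions (choose the tag that matches the YOLOv5 code you downloaded), weights_url and download_weights are hypothetical names, and given the unreliable GitHub access mentioned above, a manual download may still be necessary.

```python
import os
import urllib.request

# Assumed URL pattern of the YOLOv5 GitHub release assets
RELEASE_URL = 'https://github.com/ultralytics/yolov5/releases/download/{tag}/{name}.pt'


def weights_url(name='yolov5s', tag='v6.0'):
    return RELEASE_URL.format(tag=tag, name=name)


def download_weights(name='yolov5s', tag='v6.0', weights_dir='weights'):
    """Download <name>.pt into weights_dir unless it is already there; return the local path."""
    os.makedirs(weights_dir, exist_ok=True)
    path = os.path.join(weights_dir, name + '.pt')
    if not os.path.isfile(path):  # skip the network entirely if the file exists
        urllib.request.urlretrieve(weights_url(name, tag), path)
    return path
```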

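4. YOLOv5 configuration

Under DataSets I created a data folder containing a mask.yaml file. It configures the train, val and test subsets, the number of classes (3 here) and the class names as a list. This file is what train.py's --data parameter points to.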

```yaml
train: D:/JetBrains/PycharmProjects/pytorch38/DataSets/train.txt
val: D:/JetBrains/PycharmProjects/pytorch38/DataSets/val.txt
test: D:/JetBrains/PycharmProjects/pytorch38/DataSets/test.txt
nc: 3  # number of classes
names: ['with_mask', 'without_mask', 'mask_weared_incorrect']
```
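Because nc and names must agree with the classes list used during label conversion, one option is to generate mask.yaml from that same list so a typo in a hand-typed name cannot slip in. This is a sketch; dataset_yaml is a hypothetical name and the paths in the usage comment are the ones used in this article.

```python
CLASSES = ['with_mask', 'without_mask', 'mask_weared_incorrect']  # same list as the conversion script


def dataset_yaml(root, classes):
    """Return mask.yaml text whose nc and names are derived from one classes list."""
    lines = [f'{split}: {root}/{split}.txt' for split in ('train', 'val', 'test')]
    lines.append(f'nc: {len(classes)}  # number of classes')
    lines.append('names: [' + ', '.join(f"'{c}'" for c in classes) + ']')
    return '\n'.join(lines) + '\n'


# Usage (paths assumed):
# with open('./DataSets/data/mask.yaml', 'w') as f:
#     f.write(dataset_yaml('D:/JetBrains/PycharmProjects/pytorch38/DataSets', CLASSES))
```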

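Copy yolov5s.yaml from the models folder under yolov5 and rename it to yolov5s_mask.yaml. This file is what train.py's --cfg parameter points to.

yolov5s_mask.yaml stores the network structure and the number of classes. Change nc to 3 and leave everything else unchanged, including depth_multiple (depth scaling) and width_multiple (channel scaling):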

```yaml
# parameters
nc: 3  # number of classes
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.50  # layer channel multiple

# anchors
anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

# YOLOv5 backbone
backbone:
  # [from, number, module, args]
  [[-1, 1, Focus, [64, 3]],  # 0-P1/2
   [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
   [-1, 3, C3, [128]],
   [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
   [-1, 9, C3, [256]],
   [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
   [-1, 9, C3, [512]],
   [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
   [-1, 1, SPP, [1024, [5, 9, 13]]],
   [-1, 3, C3, [1024, False]],  # 9
  ]

# YOLOv5 head
head:
  [[-1, 1, Conv, [512, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 6], 1, Concat, [1]],  # cat backbone P4
   [-1, 3, C3, [512, False]],  # 13

   [-1, 1, Conv, [256, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 4], 1, Concat, [1]],  # cat backbone P3
   [-1, 3, C3, [256, False]],  # 17 (P3/8-small)

   [-1, 1, Conv, [256, 3, 2]],
   [[-1, 14], 1, Concat, [1]],  # cat head P4
   [-1, 3, C3, [512, False]],  # 20 (P4/16-medium)

   [-1, 1, Conv, [512, 3, 2]],
   [[-1, 10], 1, Concat, [1]],  # cat head P5
   [-1, 3, C3, [1024, False]],  # 23 (P5/32-large)

   [[17, 20, 23], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5)
  ]
```

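5. Configuring training visualization

Training a deep network takes a long time and you cannot watch the result of every epoch, so it helps to visualize the training results and keep them for later review, to check whether anything went wrong. Two commonly used visualization tools are wandb (online visualization) and TensorBoard.

Installing wandb:

pip install wandb

You can register an account at https://wandb.ai.

Installing TensorBoard:

pip install tensorboard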

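6. Modify train.py in the yolov5 root directory

First change the defaults of weights, cfg and data, as described in steps 3 and 4.

If training keeps getting interrupted, lower the defaults, for example epochs to 100 and batch-size to 8: a smaller batch size reduces GPU and memory usage, and fewer epochs shorten the run.

If training is interrupted and you want to continue from the last checkpoint, set the default of resume to True.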

```python
def parse_opt(known=False):
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', type=str, default=ROOT / 'weights/yolov5s.pt', help='initial weights path')
    parser.add_argument('--cfg', type=str, default='models/yolov5s_mask.yaml', help='model.yaml path')
    parser.add_argument('--data', type=str, default=ROOT / '../DataSets/data/mask.yaml', help='dataset.yaml path')
    parser.add_argument('--hyp', type=str, default=ROOT / 'data/hyps/hyp.scratch-low.yaml', help='hyperparameters path')
    parser.add_argument('--epochs', type=int, default=300, help='total training epochs')
    parser.add_argument('--batch-size', type=int, default=16, help='total batch size for all GPUs, -1 for autobatch')
    parser.add_argument('--imgsz', '--img', '--img-size', type=int, default=640, help='train, val image size (pixels)')
    parser.add_argument('--rect', action='store_true', help='rectangular training')
    parser.add_argument('--resume', nargs='?', const=True, default=False, help='resume most recent training')
    parser.add_argument('--nosave', action='store_true', help='only save final checkpoint')
    parser.add_argument('--noval', action='store_true', help='only validate final epoch')
    parser.add_argument('--noautoanchor', action='store_true', help='disable AutoAnchor')
    parser.add_argument('--noplots', action='store_true', help='save no plot files')
    parser.add_argument('--evolve', type=int, nargs='?', const=300, help='evolve hyperparameters for x generations')
    parser.add_argument('--bucket', type=str, default='', help='gsutil bucket')
    parser.add_argument('--cache', type=str, nargs='?', const='ram', help='--cache images in "ram" (default) or "disk"')
    parser.add_argument('--image-weights', action='store_true', help='use weighted image selection for training')
    parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')
    parser.add_argument('--multi-scale', action='store_true', help='vary img-size +/- 50%%')
    parser.add_argument('--single-cls', action='store_true', help='train multi-class data as single-class')
    parser.add_argument('--optimizer', type=str, choices=['SGD', 'Adam', 'AdamW'], default='SGD', help='optimizer')
    parser.add_argument('--sync-bn', action='store_true', help='use SyncBatchNorm, only available in DDP mode')
    parser.add_argument('--workers', type=int, default=8, help='max dataloader workers (per RANK in DDP mode)')
    parser.add_argument('--project', default=ROOT / 'runs/train', help='save to project/name')
    parser.add_argument('--name', default='exp', help='save to project/name')
    parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')
    parser.add_argument('--quad', action='store_true', help='quad dataloader')
    parser.add_argument('--cos-lr', action='store_true', help='cosine LR scheduler')
    parser.add_argument('--label-smoothing', type=float, default=0.0, help='Label smoothing epsilon')
    parser.add_argument('--patience', type=int, default=100, help='EarlyStopping patience (epochs without improvement)')
    parser.add_argument('--freeze', nargs='+', type=int, default=[0], help='Freeze layers: backbone=10, first3=0 1 2')
    parser.add_argument('--save-period', type=int, default=-1, help='Save checkpoint every x epochs (disabled if < 1)')
    parser.add_argument('--seed', type=int, default=0, help='Global training seed')
    parser.add_argument('--local_rank', type=int, default=-1, help='Automatic DDP Multi-GPU argument, do not modify')

    # Logger arguments
    parser.add_argument('--entity', default=None, help='Entity')
    parser.add_argument('--upload_dataset', nargs='?', const=True, default=False, help='Upload data, "val" option')
    parser.add_argument('--bbox_interval', type=int, default=-1, help='Set bounding-box image logging interval')
    parser.add_argument('--artifact_alias', type=str, default='latest', help='Version of dataset artifact to use')

    return parser.parse_known_args()[0] if known else parser.parse_args()
```

7. Run train.py
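Instead of editing the argparse defaults, the same settings can be passed on the command line (python train.py --weights ... --epochs 100 --batch-size 8), which leaves train.py untouched; plain `--resume` continues the most recent run. The sketch below builds that command from Python. build_train_cmd and run_training are hypothetical helpers; the default paths mirror the ones used above and assume the working directory is the yolov5 folder.

```python
import subprocess
import sys


def build_train_cmd(weights='weights/yolov5s.pt', cfg='models/yolov5s_mask.yaml',
                    data='../DataSets/data/mask.yaml', epochs=100, batch_size=8, resume=False):
    """Build a train.py command line using flags defined in parse_opt()."""
    if resume:
        # --resume restores the most recent run's own settings from its last checkpoint
        return [sys.executable, 'train.py', '--resume']
    return [sys.executable, 'train.py',
            '--weights', weights, '--cfg', cfg, '--data', data,
            '--epochs', str(epochs), '--batch-size', str(batch_size)]


def run_training(yolov5_dir, **kwargs):
    """Run train.py inside yolov5_dir; raises CalledProcessError if training fails."""
    return subprocess.run(build_train_cmd(**kwargs), cwd=yolov5_dir, check=True)
```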

IV. Handling errors when training YOLOv5

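1. Downloading https://ultralytics.com/assets/Arial.ttf to C:\Users\bq_wang\AppData\Roaming\Ultralytics\Arial.ttf... TimeoutError: [WinError 10060] A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond.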

The full error output:

```text
D:\JetBrains\PycharmProjects\pytorch38\venv\Scripts\python.exe D:/JetBrains/PycharmProjects/pytorch38/yolov5/train.py
wandb: Currently logged in as: wangbaoqiang. Use `wandb login --relogin` to force relogin
train: weights=weights\yolov5s.pt, cfg=models/yolov5s_mask.yaml, data=..\DataSets\data\mask.yaml, hyp=data\hyps\hyp.scratch-low.yaml, epochs=300, batch_size=16, imgsz=640, rect=False, resume=False, nosave=False, noval=False, noautoanchor=False, noplots=False, evolve=None, bucket=, cache=None, image_weights=False, device=, multi_scale=False, single_cls=False, optimizer=SGD, sync_bn=False, workers=8, project=runs\train, name=exp, exist_ok=False, quad=False, cos_lr=False, label_smoothing=0.0, patience=100, freeze=[0], save_period=-1, seed=0, local_rank=-1, entity=None, upload_dataset=False, bbox_interval=-1, artifact_alias=latest
fatal: unable to access 'https://github.com/ultralytics/yolov5.git/': OpenSSL SSL_read: Connection was reset, errno 10054
Command 'git fetch origin' timed out after 5 seconds
YOLOv5 v6.2-224-g82a5585 Python-3.8.10 torch-1.13.0+cu117 CUDA:0 (NVIDIA GeForce GTX 1660 SUPER, 6144MiB)
hyperparameters: lr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=0.05, cls=0.5, cls_pw=1.0, obj=1.0, obj_pw=1.0, iou_t=0.2, anchor_t=4.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.0, copy_paste=0.0
ClearML: run 'pip install clearml' to automatically track, visualize and remotely train YOLOv5 in ClearML
Comet: run 'pip install comet_ml' to automatically track and visualize YOLOv5 runs in Comet
TensorBoard: Start with 'tensorboard --logdir runs\train', view at http://localhost:6006/
wandb: wandb version 0.13.7 is available! To upgrade, please run:
wandb: $ pip install wandb --upgrade
wandb: Tracking run with wandb version 0.13.5
wandb: Run data is saved locally in D:\JetBrains\PycharmProjects\pytorch38\yolov5\wandb\run-20230110_091421-2f6a707x
wandb: Run `wandb offline` to turn off syncing.
wandb: Syncing run treasured-snowball-5
wandb: View project at https://wandb.ai/wangbaoqiang/train
wandb: View run at https://wandb.ai/wangbaoqiang/train/runs/2f6a707x
Downloading https://ultralytics.com/assets/Arial.ttf to C:\Users\bq_wang\AppData\Roaming\Ultralytics\Arial.ttf...
Traceback (most recent call last):
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\urllib\request.py", line 1354, in do_open
    h.request(req.get_method(), req.selector, req.data, headers,
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\http\client.py", line 1252, in request
    self._send_request(method, url, body, headers, encode_chunked)
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\http\client.py", line 1298, in _send_request
    self.endheaders(body, encode_chunked=encode_chunked)
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\http\client.py", line 1247, in endheaders
    self._send_output(message_body, encode_chunked=encode_chunked)
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\http\client.py", line 1007, in _send_output
    self.send(msg)
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\http\client.py", line 947, in send
    self.connect()
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\http\client.py", line 1414, in connect
    super().connect()
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\http\client.py", line 918, in connect
    self.sock = self._create_connection(
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\socket.py", line 808, in create_connection
    raise err
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\socket.py", line 796, in create_connection
    sock.connect(sa)
TimeoutError: [WinError 10060] A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "D:/JetBrains/PycharmProjects/pytorch38/yolov5/train.py", line 630, in <module>
    main(opt)
  File "D:/JetBrains/PycharmProjects/pytorch38/yolov5/train.py", line 524, in main
    train(opt.hyp, opt, device, callbacks)
  File "D:/JetBrains/PycharmProjects/pytorch38/yolov5/train.py", line 93, in train
    loggers = Loggers(save_dir, weights, opt, hyp, LOGGER)  # loggers instance
  File "D:\JetBrains\PycharmProjects\pytorch38\yolov5\utils\loggers\__init__.py", line 111, in __init__
    self.wandb = WandbLogger(self.opt, run_id)
  File "D:\JetBrains\PycharmProjects\pytorch38\yolov5\utils\loggers\wandb\wandb_utils.py", line 192, in __init__
    self.data_dict = check_wandb_dataset(opt.data)
  File "D:\JetBrains\PycharmProjects\pytorch38\yolov5\utils\loggers\wandb\wandb_utils.py", line 59, in check_wandb_dataset
    return check_dataset(data_file)
  File "D:\JetBrains\PycharmProjects\pytorch38\yolov5\utils\general.py", line 530, in check_dataset
    check_font('Arial.ttf' if is_ascii(data['names']) else 'Arial.Unicode.ttf', progress=True)  # download fonts
  File "D:\JetBrains\PycharmProjects\pytorch38\yolov5\utils\general.py", line 466, in check_font
    torch.hub.download_url_to_file(url, str(file), progress=progress)
  File "D:\JetBrains\PycharmProjects\pytorch38\venv\lib\site-packages\torch\hub.py", line 597, in download_url_to_file
    u = urlopen(req)
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\urllib\request.py", line 222, in urlopen
    return opener.open(url, data, timeout)
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\urllib\request.py", line 531, in open
    response = meth(req, response)
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\urllib\request.py", line 640, in http_response
    response = self.parent.error(
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\urllib\request.py", line 563, in error
    result = self._call_chain(*args)
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\urllib\request.py", line 502, in _call_chain
    result = func(*args)
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\urllib\request.py", line 755, in http_error_302
    return self.parent.open(new, timeout=req.timeout)
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\urllib\request.py", line 525, in open
    response = self._open(req, data)
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\urllib\request.py", line 542, in _open
    result = self._call_chain(self.handle_open, protocol, protocol +
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\urllib\request.py", line 502, in _call_chain
    result = func(*args)
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\urllib\request.py", line 1397, in https_open
    return self.do_open(http.client.HTTPSConnection, req,
  File "C:\Users\bq_wang\AppData\Local\Programs\Python\Python38\lib\urllib\request.py", line 1357, in do_open
    raise URLError(err)
urllib.error.URLError: <urlopen error [WinError 10060] A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond>
wandb: Waiting for W&B process to finish... (failed 1). Press Ctrl-C to abort syncing.
wandb: Synced treasured-snowball-5: https://wandb.ai/wangbaoqiang/train/runs/2f6a707x
wandb: Synced 5 W&B file(s), 0 media file(s), 0 artifact file(s) and 0 other file(s)
wandb: Find logs at: .\wandb\run-20230110_091421-2f6a707x\logs

Process finished with exit code 1
```

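Solution: take the Arial font already on your machine (for example C:\Windows\Fonts\arial.ttf) and copy it, named Arial.ttf, into the Ultralytics folder that the error message tries to download to, here C:\Users\bq_wang\AppData\Roaming\Ultralytics. YOLOv5 then finds the font locally and no longer downloads it.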
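The copy can also be scripted. This is a sketch that assumes Windows, the standard font file C:\Windows\Fonts\arial.ttf, and that the Ultralytics folder under %APPDATA% is the one named in the error (as it is in the log above); install_arial is a hypothetical name.

```python
import os
import shutil


def install_arial(src=r'C:\Windows\Fonts\arial.ttf', dst_dir=None):
    """Copy a local Arial font to <dst_dir>/Arial.ttf so YOLOv5 finds it without downloading.

    dst_dir defaults to the Ultralytics folder under %APPDATA%, the folder in the error message.
    """
    if dst_dir is None:
        dst_dir = os.path.join(os.environ.get('APPDATA', ''), 'Ultralytics')
    os.makedirs(dst_dir, exist_ok=True)
    dst = os.path.join(dst_dir, 'Arial.ttf')
    if not os.path.isfile(dst):  # keep an existing copy untouched
        shutil.copyfile(src, dst)
    return dst
```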

2、numpy.core._exceptions.MemoryError: Unable to allocate 1.04 MiB for an array

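Training kept stopping after a few dozen epochs.

Solution:

1. Increase the Windows virtual memory (page file) size; changing it from 10240 MB to 20480 MB fixed it for me.

2. Lower the defaults of batch-size and epochs.

3. Set the default of resume to True so an interrupted run can continue from its last checkpoint.

Oddly, when I later tried to reproduce the error, I could not.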

3. The TensorBoard dashboard does not open

No dashboards are active for the current data set.

Solution:

The cause is TensorBoard's default logdir path. Run the following in the terminal, pointing --logdir at the run directory:

tensorboard --logdir yolov5/runs/train/exp9
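YOLOv5 numbers its runs exp, exp2, exp3, ... (this run was exp9), so the right logdir changes every time. The hypothetical helper below picks the highest-numbered run folder, comparing numbers rather than strings so that exp10 beats exp9. Alternatively, pointing --logdir at yolov5/runs/train lets TensorBoard pick up every run in its subfolders.

```python
import os
import re


def latest_exp_dir(train_dir='runs/train'):
    """Return the run folder (exp, exp2, exp3, ...) with the highest number, or None."""
    best, best_n = None, 0
    for name in os.listdir(train_dir):
        m = re.fullmatch(r'exp(\d*)', name)
        path = os.path.join(train_dir, name)
        if m and os.path.isdir(path):
            n = int(m.group(1)) if m.group(1) else 1  # plain 'exp' is the first run
            if n > best_n:  # numeric comparison, so exp10 > exp9
                best, best_n = path, n
    return best


# Usage (path assumed): print the command to run
# print('tensorboard --logdir', latest_exp_dir('yolov5/runs/train'))
```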

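V. Output of a normal run

1. Console output of the run

Some warnings appear along the way, but they do not stop the run. Note, however, that in this run box_loss, obj_loss and cls_loss are nan in every epoch and P, R, mAP50 and mAP50-95 stay at 0 throughout, so although training finishes with exit code 0, the resulting weights have not learned anything useful.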

```text
D:\JetBrains\PycharmProjects\pytorch38\venv\Scripts\python.exe D:/JetBrains/PycharmProjects/pytorch38/yolov5/train.py
wandb: Currently logged in as: wangbaoqiang. Use `wandb login --relogin` to force relogin
train: weights=weights\yolov5s.pt, cfg=models/yolov5s_mask.yaml, data=..\DataSets\data\mask.yaml, hyp=data\hyps\hyp.scratch-low.yaml, epochs=300, batch_size=16, imgsz=640, rect=False, resume=False, nosave=False, noval=False, noautoanchor=False, noplots=False, evolve=None, bucket=, cache=None, image_weights=False, device=, multi_scale=False, single_cls=False, optimizer=SGD, sync_bn=False, workers=8, project=runs\train, name=exp, exist_ok=False, quad=False, cos_lr=False, label_smoothing=0.0, patience=100, freeze=[0], save_period=-1, seed=0, local_rank=-1, entity=None, upload_dataset=False, bbox_interval=-1, artifact_alias=latest
github: YOLOv5 is out of date by 105 commits. Use `git pull` or `git clone https://github.com/ultralytics/yolov5` to update.
YOLOv5 v6.2-224-g82a5585 Python-3.8.10 torch-1.13.0+cu117 CUDA:0 (NVIDIA GeForce GTX 1660 SUPER, 6144MiB)
hyperparameters: lr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=0.05, cls=0.5, cls_pw=1.0, obj=1.0, obj_pw=1.0, iou_t=0.2, anchor_t=4.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.0, copy_paste=0.0
ClearML: run 'pip install clearml' to automatically track, visualize and remotely train YOLOv5 in ClearML
Comet: run 'pip install comet_ml' to automatically track and visualize YOLOv5 runs in Comet
TensorBoard: Start with 'tensorboard --logdir runs\train', view at http://localhost:6006/
wandb: wandb version 0.13.7 is available! To upgrade, please run:
wandb: $ pip install wandb --upgrade
wandb: Tracking run with wandb version 0.13.5
wandb: Run data is saved locally in D:\JetBrains\PycharmProjects\pytorch38\yolov5\wandb\run-20230110_154830-zjf37o5d
wandb: Run `wandb offline` to turn off syncing.
wandb: Syncing run sleek-bird-11
wandb: View project at https://wandb.ai/wangbaoqiang/train
wandb: View run at https://wandb.ai/wangbaoqiang/train/runs/zjf37o5d
D:\JetBrains\PycharmProjects\pytorch38\venv\lib\site-packages\torch\serialization.py:868: SourceChangeWarning: source code of class 'models.yolo.DetectionModel' has changed. you can retrieve the original source code by accessing the object's source attribute or set `torch.nn.Module.dump_patches = True` and use the patch tool to revert the changes.
  warnings.warn(msg, SourceChangeWarning)
...
D:\JetBrains\PycharmProjects\pytorch38\venv\lib\site-packages\torch\serialization.py:868: SourceChangeWarning: source code of class 'models.yolo.Detect' has changed. you can retrieve the original source code by accessing the object's source attribute or set `torch.nn.Module.dump_patches = True` and use the patch tool to revert the changes.
  warnings.warn(msg, SourceChangeWarning)
```

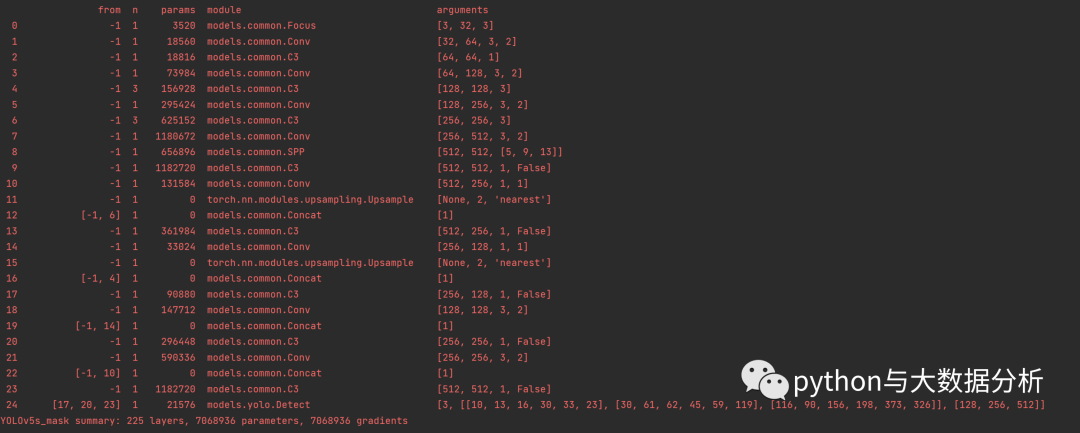

```text
                 from  n    params  module                                  arguments
  0                -1  1      3520  models.common.Focus                     [3, 32, 3]
  1                -1  1     18560  models.common.Conv                      [32, 64, 3, 2]
  2                -1  1     18816  models.common.C3                        [64, 64, 1]
  3                -1  1     73984  models.common.Conv                      [64, 128, 3, 2]
  4                -1  3    156928  models.common.C3                        [128, 128, 3]
  5                -1  1    295424  models.common.Conv                      [128, 256, 3, 2]
  6                -1  3    625152  models.common.C3                        [256, 256, 3]
  7                -1  1   1180672  models.common.Conv                      [256, 512, 3, 2]
  8                -1  1    656896  models.common.SPP                       [512, 512, [5, 9, 13]]
  9                -1  1   1182720  models.common.C3                        [512, 512, 1, False]
 10                -1  1    131584  models.common.Conv                      [512, 256, 1, 1]
 11                -1  1         0  torch.nn.modules.upsampling.Upsample    [None, 2, 'nearest']
 12           [-1, 6]  1         0  models.common.Concat                    [1]
 13                -1  1    361984  models.common.C3                        [512, 256, 1, False]
 14                -1  1     33024  models.common.Conv                      [256, 128, 1, 1]
 15                -1  1         0  torch.nn.modules.upsampling.Upsample    [None, 2, 'nearest']
 16           [-1, 4]  1         0  models.common.Concat                    [1]
 17                -1  1     90880  models.common.C3                        [256, 128, 1, False]
 18                -1  1    147712  models.common.Conv                      [128, 128, 3, 2]
 19          [-1, 14]  1         0  models.common.Concat                    [1]
 20                -1  1    296448  models.common.C3                        [256, 256, 1, False]
 21                -1  1    590336  models.common.Conv                      [256, 256, 3, 2]
 22          [-1, 10]  1         0  models.common.Concat                    [1]
 23                -1  1   1182720  models.common.C3                        [512, 512, 1, False]
 24      [17, 20, 23]  1     21576  models.yolo.Detect                      [3, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [128, 256, 512]]
YOLOv5s_mask summary: 225 layers, 7068936 parameters, 7068936 gradients

Transferred 215/361 items from weights\yolov5s.pt
AMP: checks passed
optimizer: SGD(lr=0.01) with parameter groups 59 weight(decay=0.0), 62 weight(decay=0.0005), 62 bias
albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))
train: Scanning 'D:\JetBrains\PycharmProjects\pytorch38\DataSets\train.cache' images and labels... 690 found, 0 missing, 0 empty, 0 corrupt: 100%|██████████| 690/690 [00:00<?, ?it/s]
wandb: Currently logged in as: wangbaoqiang. Use `wandb login --relogin` to force relogin
val: Scanning 'D:\JetBrains\PycharmProjects\pytorch38\DataSets\val.cache' images and labels... 77 found, 0 missing, 0 empty, 0 corrupt: 100%|██████████| 77/77 [00:00<?, ?it/s]
wandb: Currently logged in as: wangbaoqiang. Use `wandb login --relogin` to force relogin
```

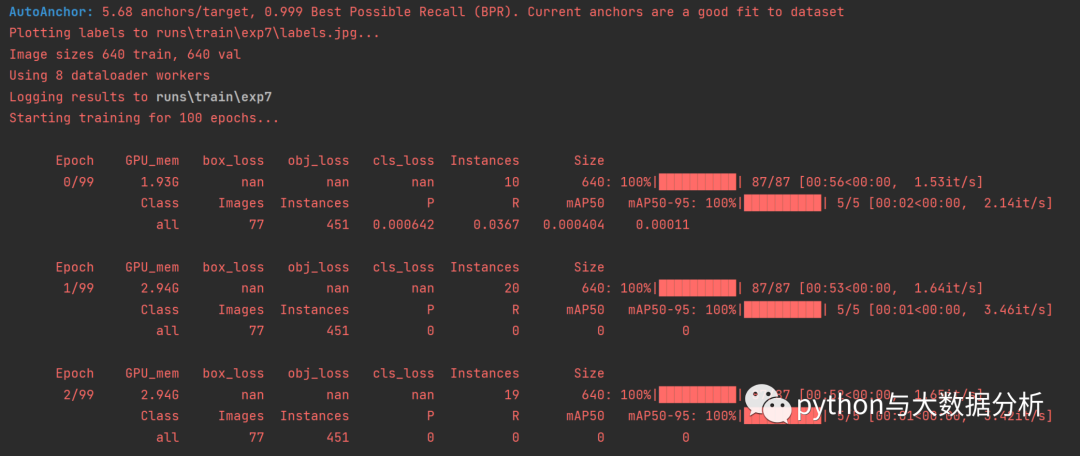

```text
AutoAnchor: 5.68 anchors/target, 0.999 Best Possible Recall (BPR). Current anchors are a good fit to dataset
Plotting labels to runs\train\exp9\labels.jpg...
Image sizes 640 train, 640 val
Using 8 dataloader workers
Logging results to runs\train\exp9
Starting training for 300 epochs...

      Epoch    GPU_mem   box_loss   obj_loss   cls_loss  Instances       Size
      0/299       3.6G        nan        nan        nan         22        640: 100%|██████████| 44/44 [00:52<00:00, 1.19s/it]
                 Class     Images  Instances          P          R      mAP50   mAP50-95: 100%|██████████| 3/3 [00:02<00:00, 1.08it/s]
                   all         77        451          0          0          0          0

      Epoch    GPU_mem   box_loss   obj_loss   cls_loss  Instances       Size
      1/299      4.71G        nan        nan        nan         10        640: 100%|██████████| 44/44 [00:48<00:00, 1.10s/it]
                 Class     Images  Instances          P          R      mAP50   mAP50-95: 100%|██████████| 3/3 [00:01<00:00, 1.96it/s]
                   all         77        451          0          0          0          0
libpng warning: iCCP: Not recognizing known sRGB profile that has been edited
...
libpng warning: iCCP: Not recognizing known sRGB profile that has been edited

      Epoch    GPU_mem   box_loss   obj_loss   cls_loss  Instances       Size
    299/299      4.71G        nan        nan        nan          9        640: 100%|██████████| 44/44 [00:49<00:00, 1.13s/it]
                 Class     Images  Instances          P          R      mAP50   mAP50-95: 100%|██████████| 3/3 [00:01<00:00, 1.89it/s]
                   all         77        451          0          0          0          0

300 epochs completed in 4.393 hours.
Optimizer stripped from runs\train\exp9\weights\last.pt, 14.5MB
Optimizer stripped from runs\train\exp9\weights\best.pt, 14.5MB

Validating runs\train\exp9\weights\best.pt...
Fusing layers...
YOLOv5s_mask summary: 166 layers, 7059304 parameters, 0 gradients
                 Class     Images  Instances          P          R      mAP50   mAP50-95: 100%|██████████| 3/3 [00:02<00:00, 1.48it/s]
                   all         77        451          0          0          0          0
Results saved to runs\train\exp9
wandb: Waiting for W&B process to finish... (success).
wandb:
wandb: Run history:
wandb:      metrics/mAP_0.5 ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁
wandb: metrics/mAP_0.5:0.95 ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁
wandb:    metrics/precision ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁
wandb:       metrics/recall ▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁
wandb:                x/lr0 ████▇▇▇▇▇▆▆▆▆▆▆▅▅▅▅▅▅▄▄▄▄▄▃▃▃▃▃▃▂▂▂▂▂▁▁▁
wandb:                x/lr1 ████▇▇▇▇▇▆▆▆▆▆▆▅▅▅▅▅▅▄▄▄▄▄▃▃▃▃▃▃▂▂▂▂▂▁▁▁
wandb:                x/lr2 ████▇▇▇▇▇▆▆▆▆▆▆▅▅▅▅▅▅▄▄▄▄▄▃▃▃▃▃▃▂▂▂▂▂▁▁▁
wandb:
wandb: Run summary:
wandb:           best/epoch 299
wandb:         best/mAP_0.5 0.0
wandb:    best/mAP_0.5:0.95 0.0
wandb:       best/precision 0.0
wandb:          best/recall 0.0
wandb:      metrics/mAP_0.5 0.0
wandb: metrics/mAP_0.5:0.95 0.0
wandb:    metrics/precision 0.0
wandb:       metrics/recall 0.0
wandb:       train/box_loss nan
wandb:       train/cls_loss nan
wandb:       train/obj_loss nan
wandb:         val/box_loss nan
wandb:         val/cls_loss nan
wandb:         val/obj_loss nan
wandb:                x/lr0 0.00017
wandb:                x/lr1 0.00017
wandb:                x/lr2 0.00017
wandb:
wandb: Synced sleek-bird-11: https://wandb.ai/wangbaoqiang/train/runs/zjf37o5d
wandb: Synced 5 W&B file(s), 13 media file(s), 1 artifact file(s) and 0 other file(s)
wandb: Find logs at: .\wandb\run-20230110_154830-zjf37o5d\logs
libpng warning: iCCP: Not recognizing known sRGB profile that has been edited
...
libpng warning: iCCP: Not recognizing known sRGB profile that has been edited

Process finished with exit code 0
```

相关运行截图

2、tensorboard监控

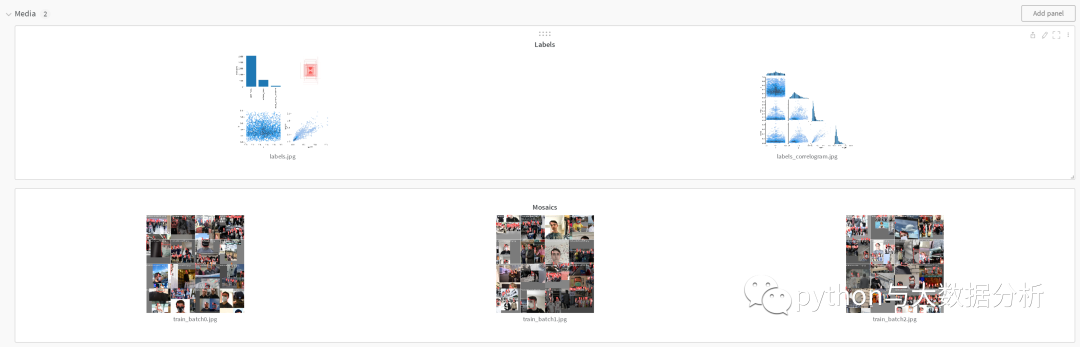

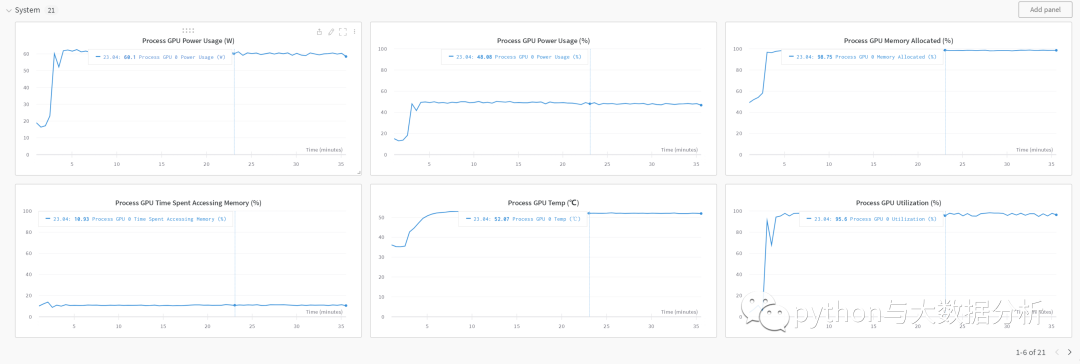

3、wandb监控

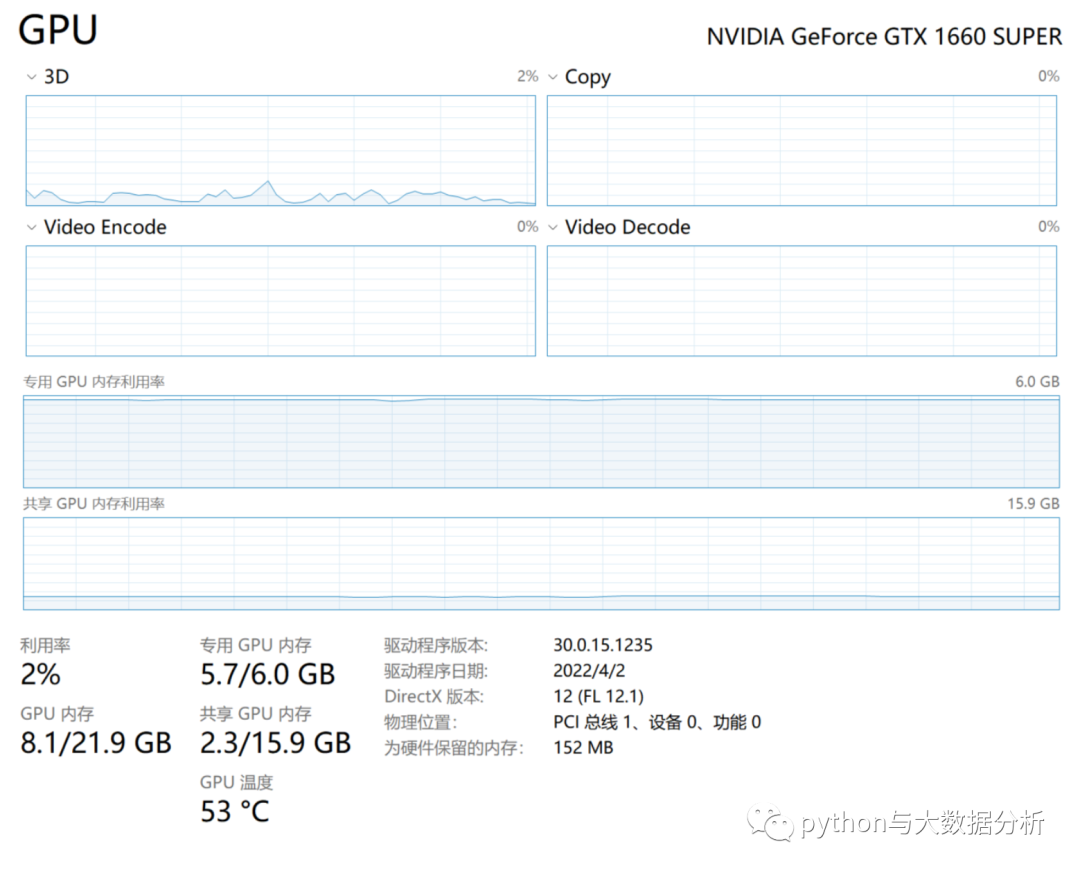

4、任务管理器GPU使用监控

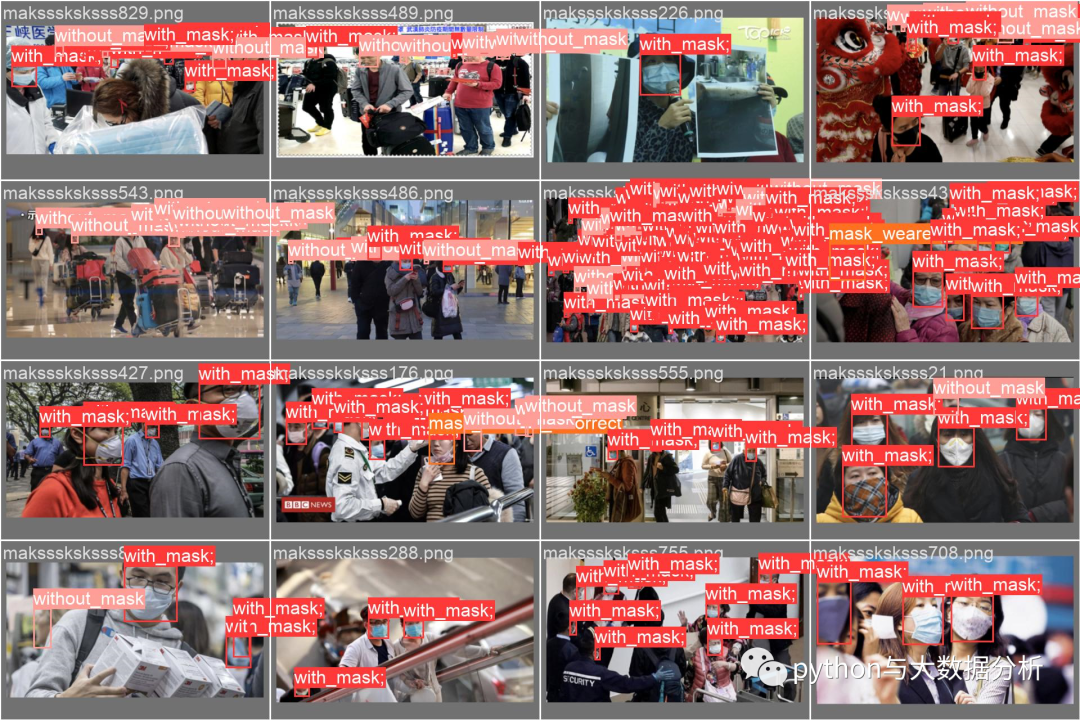

5、Yolov5运行过程

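Under yolov5/runs/train there is one expN directory per run: every training run creates a new directory named exp plus a sequence number. It holds the complete log of that run and is also the data source for TensorBoard. Inside it, a weights directory contains two model files, best.pt and last.pt.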
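The run directory also contains a results.csv file with per-epoch metrics. Assuming its columns carry the same names as the wandb summary above (train/box_loss, metrics/mAP_0.5 and so on) and are padded with spaces, the sketch below finds the epoch with the best mAP@0.5 and lists epochs whose training losses are nan, which is exactly the symptom visible in the run above. summarize_results is a hypothetical name; ties go to the later epoch.

```python
import csv
import math

LOSS_COLUMNS = ('train/box_loss', 'train/obj_loss', 'train/cls_loss')


def summarize_results(csv_path):
    """Return (best_epoch, best_map50, nan_epochs) from a YOLOv5 results.csv."""
    best_epoch, best_map, nan_epochs = None, -1.0, []
    with open(csv_path, newline='') as f:
        for row in csv.DictReader(f):
            # Column names and values are space-padded, so strip both
            row = {k.strip(): v.strip() for k, v in row.items()}
            epoch = int(row['epoch'])
            if any(math.isnan(float(row[k])) for k in LOSS_COLUMNS):
                nan_epochs.append(epoch)
            map50 = float(row['metrics/mAP_0.5'])
            if map50 >= best_map:
                best_epoch, best_map = epoch, map50
    return best_epoch, best_map, nan_epochs


# Usage (path assumed):
# print(summarize_results('yolov5/runs/train/exp9/results.csv'))
```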

Next step: now that training is done, how do we start using the model?

Follow Python与大数据分析 (Python and Big Data Analysis) for more.