PG菜鸟入门学习中,欢迎各位大佬留言技术指导。

题记

对于某个技能点、知识点,没有实验或者没有质量的实验,都只是纸上谈兵。

今天在群里请教了一个问题,

pg里日志轮转有什么比较优雅的办法?

手动触发日志轮转除了 pg_rotate_logfile()还有别的方法么?

手动触发日志归档,而不用等定时轮转 log_roration_age,或者测试只看新的日志,我现在就只能把老的删掉,再触发生成新的。

当时的情况其实是,日志文件命名的粒度为“小时” (log_filename = postgresql-%Y-%m-%d_%H.log)。

所以执行 pg_rotate_logfile 时,并没任何反应。

日志轮转

PostgreSQL 提供了两种方式进行日志轮转:

pg_ctl logrotate– PG工具,可在 postgres 用户直接执行,日志会自动轮转并生成新日志文件。也可以加-s参数,静默执行。

[postgres@centos7 log]$ pg_ctl logrotate server signaled to rotate log file [postgres@centos7 log]$ [postgres@centos7 log]$ pg_ctl logrotate -s [postgres@centos7 log]$复制

pg_rotate_logfile()– PG系统方法,需要 superuser 权限。

(postgres@[local]) [postgres] 23:01:45# select pg_rotate_logfile();

+-------------------+

| pg_rotate_logfile |

+-------------------+

| t |

+-------------------+

(1 row)

[postgres@centos7 log]$ psql -U sbtest -d postgres

psql (15.2-Yan)

Type "help" for help.

(sbtest@[local]) [postgres] 23:03:20> select pg_rotate_logfile();

ERROR: permission denied for function pg_rotate_logfile

Time: 0.726 ms

(sbtest@[local]) [postgres] 23:03:53> \dg

List of roles

+-----------+------------------------------------------------------------+-----------+

| Role name | Attributes | Member of |

+-----------+------------------------------------------------------------+-----------+

| postgres | Superuser, Create role, Create DB, Replication, Bypass RLS | {} |

| sbtest | | {} |

+-----------+------------------------------------------------------------+-----------+

复制不过,需要注意,需要 PostgreSQL 已经开启 logging_collector。

此外,还可以通过插件 adminpack 或者 pgAdmin 工具进行日志文件管理。

再者,可以使用操作系统层工具 logrotate 对日志文件进行定时轮转。

一般 JSON 日志

JSON 格式的日志非常适合记录结构化数据,因为它易于读取和处理。另外,由于 JSON 格式的日志只是普通的文本文件,所以可以使用任何文本编辑器或命令行工具来查看和处理。

以下是一个示例 JSON 格式的日志条目:

{

"timestamp": "2022-02-23T14:30:00Z",

"level": "INFO",

"message": "User login",

"user_id": "1234",

"ip_address": "192.168.1.1"

}

复制在这个示例中,日志包含了时间戳、日志级别、事件消息、用户ID和IP地址。这些属性可以通过解析JSON格式的日志条目来轻松地提取和分析。

使用 JSON 格式的日志有许多优点,例如易于使用、易于读取和解析、可扩展性好等。因此,JSON 格式的日志在现代应用程序中非常流行。

这里介绍一个格式化 JSON 的工具 - - jq。

jq 可以以各种方式转换 JSON,可以选择、迭代、缩减或分解 JSON 文档。例如,运行命令 jq ´map(.price) | add´ 将接受一个 JSON 对象数组作为输入,并返回它们的 “price” 字段的和。

jq 也可以接受文本输入,但默认情况下,jq 从 stdin 读取 JSON 实体流(包括数字和其他文字)。

例如,上例就可由 jq 将普通文本从 stdin 读取,并进行格式化。

$ echo '{"timestamp": "2022-02-23T14:30:00Z","level": "INFO","message": "User login","user_id": "1234","ip_address": "192.168.1.1"}' | jq

{

"timestamp": "2022-02-23T14:30:00Z",

"level": "INFO",

"message": "User login",

"user_id": "1234",

"ip_address": "192.168.1.1"

}

复制PostgreSQL 15 中的 jsonlog 日志文件

JSON 格式的日志文件,是 PostgreSQL 15 的新特性之一。

https://www.postgresql.org/docs/release/15.0/

PostgreSQL 15 contains many new features and enhancements, including:

…

Support for structured server log output using the JSON format.

…

Allow log output in JSON format (Sehrope Sarkuni, Michael Paquier)

The new setting is log_destination = jsonlog.

“实践是检验真理的唯一标准”

先来看日志输出格式示例:

{"timestamp":"2023-03-08 20:29:31.596 CST","pid":1254,"session_id":"64087fab.4e6","line_num":1,"session_start":"2023-03-08 20:29:31 CST","txid":0,"error_severity":"LOG","message":"ending log output to stderr","hint":"Future log output will go to log destination \"csvlog, jsonlog\".","backend_type":"postmaster","query_id":0}

{"timestamp":"2023-03-08 20:54:29.431 CST","pid":1945,"remote_host":"192.168.8.121","remote_port":47620,"session_id":"64088585.799","line_num":1,"ps":"","session_start":"2023-03-08 20:54:29 CST","txid":0,"error_severity":"LOG","message":"connection received: host=192.168.8.121 port=47620","backend_type":"not initialized","query_id":0}

{"timestamp":"2023-03-08 20:54:29.432 CST","user":"sbtest","dbname":"postgres","pid":1945,"remote_host":"192.168.8.121","remote_port":47620,"session_id":"64088585.799","line_num":2,"ps":"authentication","session_start":"2023-03-08 20:54:29 CST","vxid":"3/83","txid":0,"error_severity":"LOG","message":"connection authorized: user=sbtest database=postgres application_name=psql","backend_type":"client backend","query_id":0}

{"timestamp":"2023-03-08 20:54:29.434 CST","user":"sbtest","dbname":"postgres","pid":1943,"remote_host":"192.168.8.121","remote_port":47618,"session_id":"64088579.797","line_num":3,"ps":"idle","session_start":"2023-03-08 20:54:17 CST","txid":0,"error_severity":"LOG","message":"disconnection: session time: 0:00:11.618 user=sbtest database=postgres host=192.168.8.121 port=47618","application_name":"psql","backend_type":"client backend","query_id":0}

复制对照传统日志文件和 csv 格式日志文件查看。

- log

a:, u:, d:, r:, h:, b:postmaster, p:1254, P:, t:2023-03-08 20:29:31 CST, m:2023-03-08 20:29:31.596 CST, n:1678278571.596, Q:0, i:, e:00000, c:64087fab.4e6, l:3, s:2023-03-08 20:29:31 CST, v:, x:0, q:LOG: ending log output to stderr

a:, u:, d:, r:, h:, b:postmaster, p:1254, P:, t:2023-03-08 20:29:31 CST, m:2023-03-08 20:29:31.596 CST, n:1678278571.596, Q:0, i:, e:00000, c:64087fab.4e6, l:4, s:2023-03-08 20:29:31 CST, v:, x:0, q:HINT: Future log output will go to log destination "csvlog, jsonlog".

- csv

2023-03-08 20:29:31.596 CST,,,1254,,64087fab.4e6,1,,2023-03-08 20:29:31 CST,,0,LOG,00000,"ending log output to stderr",,"Future log output will go to log destination ""csvlog, jsonlog"".",,,,,,,"","postmaster",,0

复制至少可以观测到三点:

- json 格式日志由于自身属性原因,日志大小会大于 csv 格式,甚至大小翻倍。

- 但是,json 格式的有点也很明显,对于各种日志分析系统友好,不需要再转格式,也不会出现 csv 多行转换的问题。

- json/csv 的格式相对固定,而传统日志的格式可以自定义。

由于我目前还是 PG 入门级选手,对于第三点,也是在反复实验中发现,log_line_prefix 参数设定并不会影响到 json/csv 格式文件。

同时,查阅源码 (src\backend\utils\error*log.c) 后这一推测得到验证。

传统日志文件可以自定义格式,同时指定了一些特殊值,便于定制化编排日志格式,上文的实例中就是按照这种格式进行编排:

log_line_prefix = 'a:%a, u:%u, d:%d, r:%r, h:%h, b:%b, p:%p, P:%P, t:%t, m:%m, n:%n, Q:%Q, i:%i, e:%e, c:%c, l:%l, s:%s, v:%v, x:%x, q:%q'

复制经过若干小时的梳理,已将传统日志和 json 日志的字段对应整理如下:

# special values:

# %a = application name -> json : application_name (psql, HeidiSQL)

# %u = user name -> json : user

# %d = database name -> json : dbname

# %r = remote host and port -> json : remote_host, remote_port

# %h = remote host -> json : remote_host ([local])

# %b = backend type -> json : backend_type (postmaster, not initialized, client backend)

# %p = process ID -> json : pid

# %P = process ID of parallel group leader -> json : leader_pid

# %t = timestamp without milliseconds -> json : --

# %m = timestamp with milliseconds -> json : timestamp

# %n = timestamp with milliseconds (as a Unix epoch) -> json : --

# %Q = query ID (0 if none or not computed) -> json : query_id

# %i = command tag -> json : ps (authentication, idle, ALTER SYSTEM, SELECT)

# %e = SQL state -> json : state_code

# %c = session ID -> json : session_id (%lx.%x)

# %l = session line number -> json : line_num

# %s = session start timestamp -> json : session_start

# %v = virtual transaction ID -> json : vxid (%d/%u)

# %x = transaction ID (0 if none) -> json : txid

# %q = stop here in non-session -> json : message, hint, detail, context

复制json 日志仍有若干字段未匹配:

error_severity (LOG, ERROR) internal_query, internal_position statement, cursor_position func_name, file_name, file_line_num复制

关于 json 日志,上文提到的多行展示,可以参考如下示例:

- 截取一段日志内容

{"timestamp":"2023-03-08 23:41:48.152 CST","user":"postgres","dbname":"postgres","pid":4601,"remote_host":"[local]","session_id":"6408ac11.11f9","line_num":6,"ps":"idle","session_start":"2023-03-08 23:38:57 CST","vxid":"3/5","txid":0,"error_severity":"LOG","message":"statement: SELECT d.datname as \"Name\",\n pg_catalog.pg_get_userbyid(d.datdba) as \"Owner\",\n pg_catalog.pg_encoding_to_char(d.encoding) as \"Encoding\",\n d.datcollate as \"Collate\",\n d.datctype as \"Ctype\",\n d.daticulocale as \"ICU Locale\",\n CASE d.datlocprovider WHEN 'c' THEN 'libc' WHEN 'i' THEN 'icu' END AS \"Locale Provider\",\n pg_catalog.array_to_string(d.datacl, E'\\n') AS \"Access privileges\"\nFROM pg_catalog.pg_database d\nORDER BY 1;","application_name":"psql","backend_type":"client backend","query_id":0}

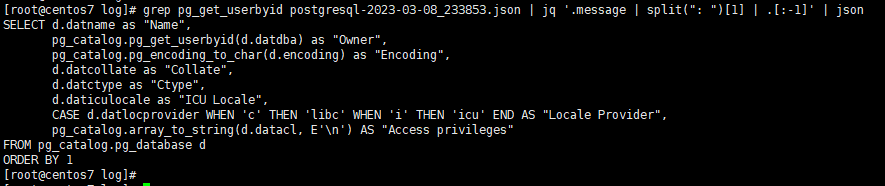

复制- 将日志中的 message 字段中的 statement 查询语句提取出来,并进行过滤、转化,可得到以下结果。

[root@centos7 log]# grep pg_get_userbyid postgresql-2023-03-08_233853.json | jq '.message | split(": ")[1] | .[:-1]' | json

SELECT d.datname as "Name",

pg_catalog.pg_get_userbyid(d.datdba) as "Owner",

pg_catalog.pg_encoding_to_char(d.encoding) as "Encoding",

d.datcollate as "Collate",

d.datctype as "Ctype",

d.daticulocale as "ICU Locale",

CASE d.datlocprovider WHEN 'c' THEN 'libc' WHEN 'i' THEN 'icu' END AS "Locale Provider",

pg_catalog.array_to_string(d.datacl, E'\n') AS "Access privileges"

FROM pg_catalog.pg_database d

ORDER BY 1

[root@centos7 log]#

复制

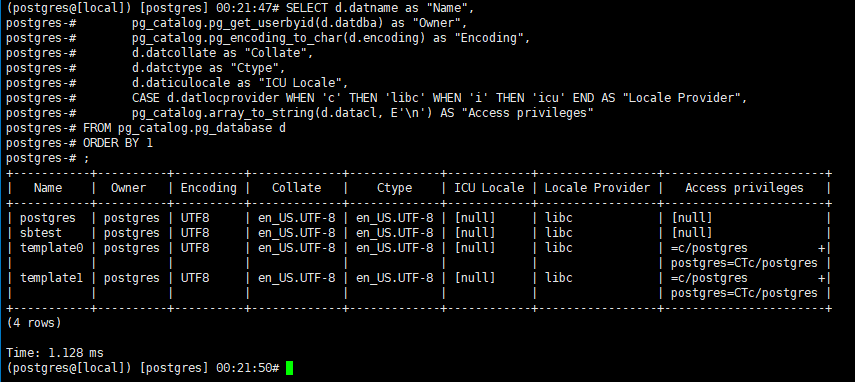

- 将格式化好的 SQL 语句放到 psql 中执行,得到如下结果。

End

到此,实验先告一段落,因为很晚了要休息了。

文章被以下合辑收录

评论

1

1