Upgrading the orachk tool

Old version: 22.3, new version: 23.2

Download from:

Autonomous Health Framework (AHF) - Including TFA and ORAchk/EXAchk (Doc ID 2550798.1)

https://support.oracle.com/epmos/faces/DocumentDisplay?_afrLoop=350181324094172&id=2550798.1&displayIndex=1&_afrWindowMode=0&_adf.ctrl-state=1ajw0ejz6e_248

[root@rac1 soft]# unzip AHF-LINUX_v23.2.0.zip

Archive: AHF-LINUX_v23.2.0.zip

inflating: README.txt

inflating: ahf_setup

extracting: ahf_setup.dat

inflating: oracle-tfa.pub

[root@rac1 soft]# ll

total 767196

-rw-r--r-- 1 root root 382181907 Mar 23 14:15 AHF-LINUX_v23.2.0.zip

-rwx------ 1 root root 403405022 Mar 4 02:46 ahf_setup

-rw------- 1 root root 384 Mar 4 02:46 ahf_setup.dat

drwxr-xr-x 2 root root 42 Mar 16 10:14 db

drwxr-xr-x. 65 root root 4096 Mar 15 20:35 grid

-rw-r--r-- 1 root root 625 Mar 4 02:46 oracle-tfa.pub

drwxr-xr-x 3 root root 16 Mar 16 13:18 patch

-rw-r--r-- 1 root root 1525 Mar 4 02:46 README.txt

[root@rac1 soft]# chmod +x ahf_setup

[root@rac1 soft]# ./ahf_setup

AHF Installer for Platform Linux Architecture x86_64

AHF Installation Log : /tmp/ahf_install_232000_15026_2023_03_23-14_19_29.log

Starting Autonomous Health Framework (AHF) Installation

AHF Version: 23.2.0 Build Date: 202303021115

AHF is already installed at /opt/oracle.ahf

Installed AHF Version: 22.3.0 Build Date: 202211210342

Do you want to upgrade AHF [Y]|N : Y

AHF will also be installed/upgraded on these Cluster Nodes :

1. rac2

The AHF Location and AHF Data Directory must exist on the above nodes

AHF Location : /opt/oracle.ahf

AHF Data Directory : /oracle/app/grid/oracle.ahf/data

Do you want to install/upgrade AHF on Cluster Nodes ? [Y]|N : Y

Upgrading /opt/oracle.ahf

Shutting down AHF Services

Upgrading AHF Services

Starting AHF Services

No new directories were added to TFA

Directory /oracle/app/grid/crsdata/rac1/trace/chad was already added to TFA Directories.

AHF upgrade completed on rac1

Upgrading AHF on Remote Nodes :

AHF will be installed on rac2, Please wait.

AHF will prompt twice to install/upgrade per Remote Node. So total 2 prompts

Do you want to continue Y|[N] : Y

AHF will continue with Upgrading on remote nodes

Upgrading AHF on rac2 :

[rac2] Copying AHF Installer

root@rac2's password:

[rac2] Running AHF Installer

root@rac2's password:

Do you want AHF to store your My Oracle Support Credentials for Automatic Upload ? Y|[N] :

.------------------------------------------------------------.

| Host | TFA Version | TFA Build ID | Upgrade Status |

+------+-------------+----------------------+----------------+

| rac1 | 23.2.0.0.0 | 23200020230302111526 | UPGRADED |

| rac2 | 23.2.0.0.0 | 23200020230302111526 | UPGRADED |

'------+-------------+----------------------+----------------'

Setting up AHF CLI and SDK

AHF is successfully upgraded to latest version

Moving /tmp/ahf_install_232000_15026_2023_03_23-14_19_29.log to /oracle/app/grid/oracle.ahf/data/rac1/diag/ahf/
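The upgrade summary table above can also be checked from a script instead of by eye. The helper below is a minimal sketch that assumes the pipe-separated layout shown above, with the upgrade status in the last column. The function name `all_nodes_upgraded` is hypothetical and not part of AHF. It reads the table text on standard input and fails if any host row does not report UPGRADED.

```shell
#!/usr/bin/env bash
# all_nodes_upgraded (hypothetical helper): read the ahf_setup summary table
# on stdin; return 0 only if every host row reports UPGRADED.
all_nodes_upgraded() {
  awk -F'|' '
    # Host rows look like: | rac1 | 23.2.0.0.0 | 23200020230302111526 | UPGRADED |
    NF >= 6 {
      host = $2; status = $5
      gsub(/^[ \t]+|[ \t]+$/, "", host)
      gsub(/^[ \t]+|[ \t]+$/, "", status)
      if (host == "" || host == "Host") next   # skip the header row
      rows++
      if (status != "UPGRADED") {
        print "not upgraded: " host " (" status ")"
        bad++
      }
    }
    # Border lines (.---., +---+) contain no "|" and are ignored.
    END { if (rows > 0 && bad == 0) exit 0; exit 1 }
  '
}
```

For example, paste the table into a file and run `all_nodes_upgraded < table.txt`. An empty or unrecognised input is treated as a failure rather than a pass.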

[root@rac1 soft]# orachk -v

ORACHK VERSION: 23.2.0_20230302

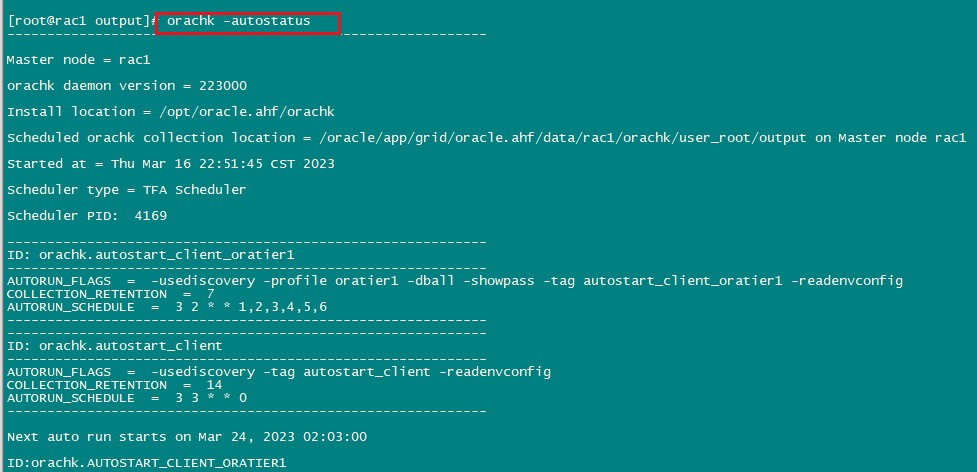

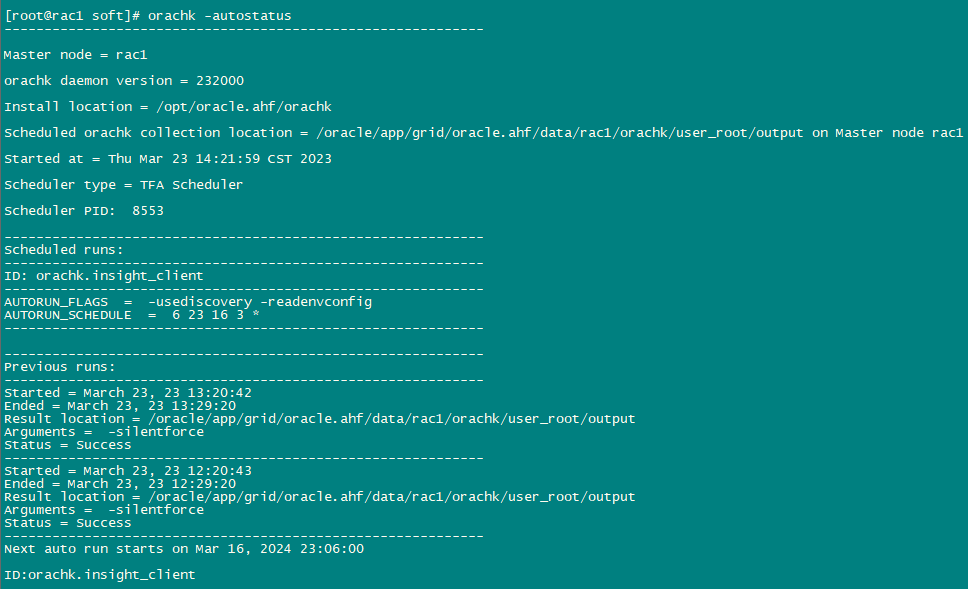
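To confirm the new version from a script, you can pull the version string out of the `orachk -v` output and compare it with the target release. This is a sketch that assumes the output format shown above (`ORACHK VERSION: <version>_<date>`). The comparison relies on GNU coreutils `sort -V`. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# orachk_version (hypothetical): extract "23.2.0" from a line such as
# "ORACHK VERSION: 23.2.0_20230302" read on stdin.
orachk_version() {
  sed -n 's/^ORACHK VERSION: *\([0-9][0-9.]*\)_.*/\1/p'
}

# version_ge A B (hypothetical): succeed if dotted version A >= B.
# Uses GNU "sort -V" (natural version ordering).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example on a node (not executed here):
#   v=$(orachk -v | orachk_version)
#   version_ge "$v" 23.2.0 && echo "orachk $v is at or above 23.2.0"
```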

[root@rac1 soft]# orachk -autostatus

------------------------------------------------------------

Master node = rac1

orachk daemon version = 232000

Install location = /opt/oracle.ahf/orachk

Scheduled orachk collection location = /oracle/app/grid/oracle.ahf/data/rac1/orachk/user_root/output on Master node rac1

Started at = Thu Mar 23 14:21:59 CST 2023

Scheduler type = TFA Scheduler

Scheduler PID: 8553

------------------------------------------------------------

Scheduled runs:

------------------------------------------------------------

ID: orachk.insight_client

------------------------------------------------------------

AUTORUN_FLAGS = -usediscovery -readenvconfig

AUTORUN_SCHEDULE = 6 23 16 3 *

------------------------------------------------------------

------------------------------------------------------------

Previous runs:

------------------------------------------------------------

Started = March 23, 23 13:20:42

Ended = March 23, 23 13:29:20

Result location = /oracle/app/grid/oracle.ahf/data/rac1/orachk/user_root/output

Arguments = -silentforce

Status = Success

------------------------------------------------------------

Started = March 23, 23 12:20:43

Ended = March 23, 23 12:29:20

Result location = /oracle/app/grid/oracle.ahf/data/rac1/orachk/user_root/output

Arguments = -silentforce

Status = Success

------------------------------------------------------------

Next auto run starts on Mar 16, 2024 23:06:00

ID:orachk.insight_client
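The `AUTORUN_SCHEDULE` value appears to use the five crontab fields: minute, hour, day of month, month and day of week. Read that way, `6 23 16 3 *` means 23:06 on March 16. That matches the reported next run of Mar 16, 2024 23:06:00. The status was checked on March 23, 2023, after that year's March 16 slot had passed, so the next match is a year later.

The helpers below are a hypothetical sketch that extracts the value from the `-autostatus` text and labels each field. The field order is an assumption inferred from this output, not taken from Oracle documentation.

```shell
#!/usr/bin/env bash
# schedule_from_status (hypothetical): print the AUTORUN_SCHEDULE value(s)
# from "orachk -autostatus" text read on stdin.
schedule_from_status() {
  sed -n 's/^AUTORUN_SCHEDULE = *//p'
}

# describe_schedule (hypothetical): label the five cron-style fields.
# "read" splits on whitespace without glob expansion, so "*" stays literal.
describe_schedule() {
  printf '%s\n' "$1" | {
    read -r min hour dom mon dow
    printf 'minute=%s hour=%s day_of_month=%s month=%s day_of_week=%s\n' \
      "$min" "$hour" "$dom" "$mon" "$dow"
  }
}

# Example (not executed here):
#   describe_schedule "$(orachk -autostatus | schedule_from_status)"
```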

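The alert log of instance cis2 kept reporting the following errors. Their timestamps (around 13:21) fall inside the orachk run that started at 13:20:42: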

ORA-17503: ksfdopn:2 Failed to open file +DATA/CIS/PASSWORD/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

2023-03-23T13:21:13.064313+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_14914.trc:

ORA-17503: ksfdopn:2 Failed to open file +DATA/CIS/PASSWORD/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

ORA-01017: invalid username/password; logon denied

2023-03-23T13:21:13.858875+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_15038.trc:

ORA-17503: ksfdopn:2 Failed to open file +DATA/CIS/PASSWORD/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

2023-03-23T13:21:13.863078+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_15038.trc:

ORA-17503: ksfdopn:2 Failed to open file +DATA/CIS/PASSWORD/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

ORA-01017: invalid username/password; logon denied

2023-03-23T13:21:14.219992+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_15090.trc:

ORA-17503: ksfdopn:2 Failed to open file +DATA/CIS/PASSWORD/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

2023-03-23T13:21:14.224496+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_15090.trc:

ORA-17503: ksfdopn:2 Failed to open file +DATA/CIS/PASSWORD/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

ORA-01017: invalid username/password; logon denied

2023-03-23T13:21:14.699104+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_15128.trc:

ORA-17503: ksfdopn:2 Failed to open file +DATA/CIS/PASSWORD/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7

2023-03-23T13:21:14.703345+08:00

Errors in file /oracle/app/oracle/diag/rdbms/cis/cis2/trace/cis2_ora_15128.trc:

ORA-17503: ksfdopn:2 Failed to open file +DATA/CIS/PASSWORD/pwdcis.263.1131705075

ORA-27300: OS system dependent operation:open failed with status: 13

ORA-27301: OS failure message: Permission denied

ORA-27302: failure occurred at: sskgmsmr_7
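To check whether a particular orachk run coincides with these errors, as the manual runs that follow try to do, it helps to count the ORA- codes logged after a given time. This is a minimal sketch with these assumptions:

- The alert log is the usual `alert_<SID>.log` in the trace directory shown above, for example `/oracle/app/oracle/diag/rdbms/cis/cis2/trace/alert_cis2.log`.
- Entries start with an ISO-8601 timestamp line, as in the excerpt.
- The cut-off uses the same UTC offset as the log.

The function name is hypothetical.

```shell
#!/usr/bin/env bash
# ora_errors_since LOG SINCE (hypothetical helper)
#   LOG   : alert log file
#   SINCE : ISO-8601 prefix, e.g. 2023-03-23T14:49 (same UTC offset as the log)
# Prints "<count> <ORA-code>" for each ORA- code logged at or after SINCE.
ora_errors_since() {
  awk -v since="$2" '
    # Entries begin with a line such as 2023-03-23T13:21:13.064313+08:00;
    # ISO timestamps with the same offset compare correctly as plain text.
    /^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]T/ {
      in_window = (substr($0, 1, length(since)) >= since)
    }
    in_window {
      line = $0
      while (match(line, /ORA-[0-9]+/)) {
        count[substr(line, RSTART, RLENGTH)]++
        line = substr(line, RSTART + RLENGTH)
      }
    }
    END { for (code in count) print count[code], code }
  ' "$1" | LC_ALL=C sort -k2
}

# Example (not executed here): errors logged since the 14:49 manual run
#   ora_errors_since /oracle/app/oracle/diag/rdbms/cis/cis2/trace/alert_cis2.log 2023-03-23T14:49
```

Lines before the first timestamp are ignored. An empty result means no ORA- errors were logged in the window.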

So the scheduled orachk job was stopped temporarily:

orachk -autostop

Then both nodes were rebooted.

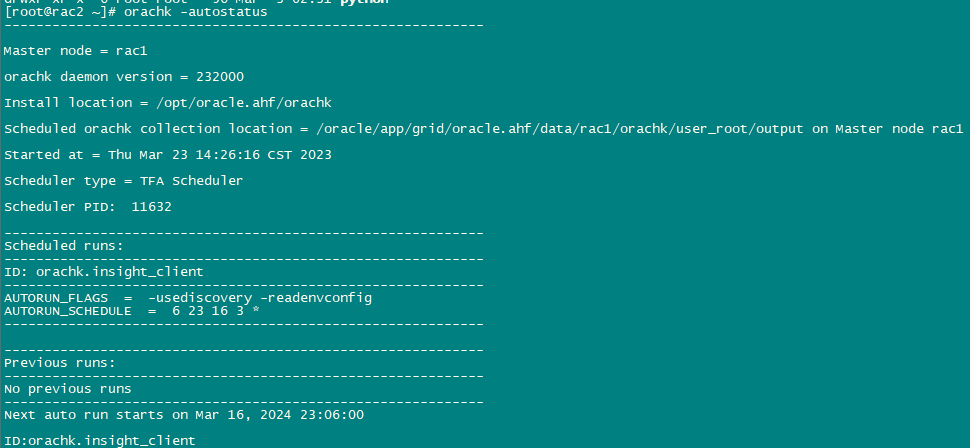

Next, run a collection manually as the oracle user and check whether the Oracle alert log reports the errors again.

[oracle@rac1 ~]$ orachk

Clusterware stack is running from /oracle/app/19c/grid. Is this the correct Clusterware Home?[y/n][y]

Searching for running databases . . . . .

. .

List of running databases registered in OCR

1. cis

2. None of above

Select databases from list for checking best practices. For multiple databases, select 1 for All or comma separated number like 1,2 etc [1-2][1].

. . . . . .

Some audit checks might require root privileged data collection on . Is sudo configured for oracle user to execute root_orachk.sh script?[y/n][n] y

Either Cluster Verification Utility pack (cvupack) does not exist at /opt/oracle.ahf/common/cvu or it is an old or invalid cvupack

Checking Cluster Verification Utility (CVU) version at CRS Home - /oracle/app/19c/grid

Starting to run orachk in background on rac2 using socket

.

. . . .

. .

Checking Status of Oracle Software Stack - Clusterware, ASM, RDBMS on rac1

. . . . . .

. . . . . . . . . . . . .

-------------------------------------------------------------------------------------------------------

Oracle Stack Status

-------------------------------------------------------------------------------------------------------

Host Name CRS Installed RDBMS Installed CRS UP ASM UP RDBMS UP DB Instance Name

-------------------------------------------------------------------------------------------------------

rac1 Yes Yes Yes Yes Yes cis1

-------------------------------------------------------------------------------------------------------

.

. . . . . .

.

.

*** Checking Best Practice Recommendations ( Pass / Warning / Fail ) ***

.

============================================================

Node name - rac1

============================================================

. . . . . .

Collecting - ASM Disk Groups

Collecting - ASM Disk I/O stats

Collecting - ASM Diskgroup Attributes

Collecting - ASM disk partnership imbalance

Collecting - ASM diskgroup attributes

Collecting - ASM diskgroup usable free space

Collecting - ASM initialization parameters

Collecting - Active sessions load balance for cis database

Collecting - Archived Destination Status for cis database

Collecting - Cluster Interconnect Config for cis database

Collecting - Database Archive Destinations for cis database

Collecting - Database Files for cis database

Collecting - Database Instance Settings for cis database

Collecting - Database Parameters for cis database

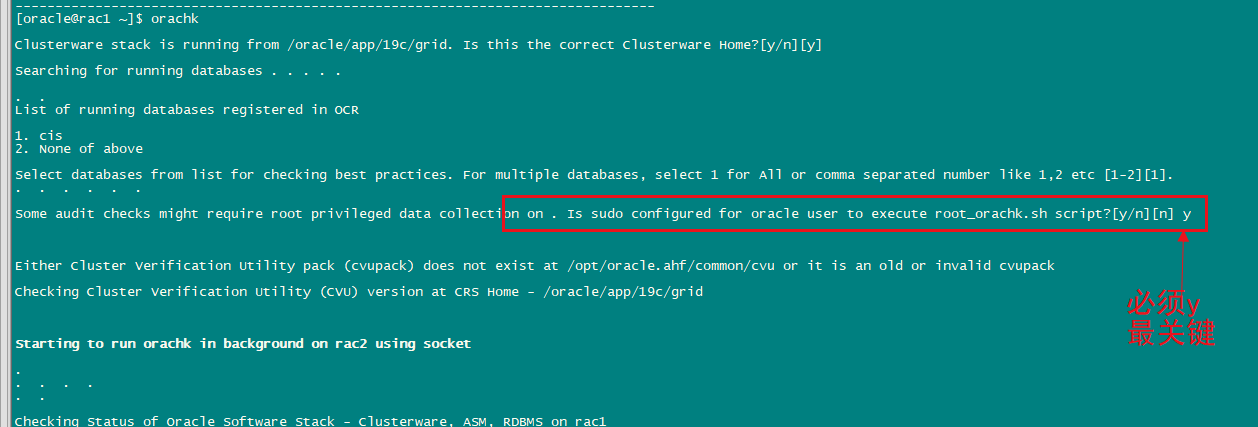

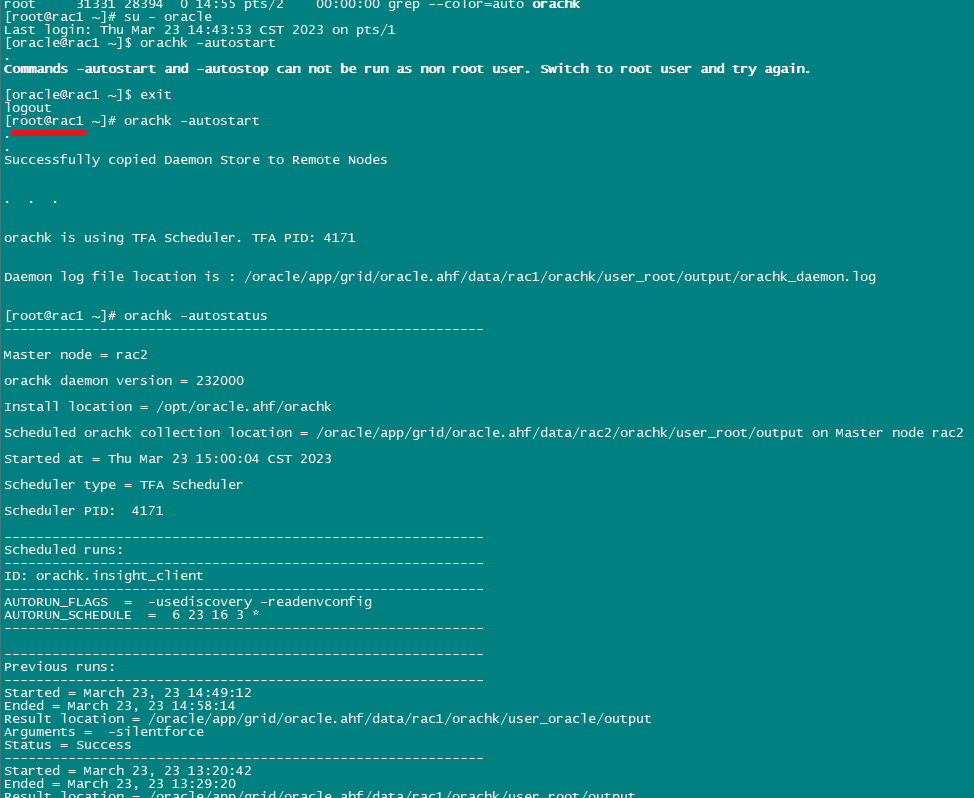

As root, enable automatic startup, then run orachk manually and check whether the Oracle alert log reports errors.

[oracle@rac1 ~]$ orachk -autostart

.

Commands -autostart and -autostop can not be run as non root user. Switch to root user and try again.

[oracle@rac1 ~]$ exit

logout

[root@rac1 ~]# orachk -autostart

.

.

Successfully copied Daemon Store to Remote Nodes

. . .

orachk is using TFA Scheduler. TFA PID: 4171

Daemon log file location is : /oracle/app/grid/oracle.ahf/data/rac1/orachk/user_root/output/orachk_daemon.log
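As the transcript shows, orachk refuses `-autostart` and `-autostop` for non-root users. If you wrap these commands in a script, a guard can fail early with a clear message instead. The sketch below is hypothetical: only the two orachk options come from the output above, and the function names are illustrative.

```shell
#!/usr/bin/env bash
# require_root [UID] (hypothetical): succeed only for UID 0.
# The optional argument lets the check be exercised without root.
require_root() {
  local uid="${1:-$(id -u)}"
  if [ "$uid" -ne 0 ]; then
    echo "orachk -autostart/-autostop must be run as root (current UID: $uid)" >&2
    return 1
  fi
}

# orachk_daemon ACTION (hypothetical wrapper): ACTION is autostart or autostop.
orachk_daemon() {
  case "$1" in
    autostart|autostop) require_root && orachk "-$1" ;;
    *) echo "usage: orachk_daemon autostart|autostop" >&2; return 2 ;;
  esac
}
```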

[root@rac1 ~]# orachk -autostatus

------------------------------------------------------------

Master node = rac2

orachk daemon version = 232000

Install location = /opt/oracle.ahf/orachk

Scheduled orachk collection location = /oracle/app/grid/oracle.ahf/data/rac2/orachk/user_root/output on Master node rac2

Started at = Thu Mar 23 15:00:04 CST 2023

Scheduler type = TFA Scheduler

Scheduler PID: 4171

------------------------------------------------------------

Scheduled runs:

------------------------------------------------------------

ID: orachk.insight_client

------------------------------------------------------------

AUTORUN_FLAGS = -usediscovery -readenvconfig

AUTORUN_SCHEDULE = 6 23 16 3 *

------------------------------------------------------------

------------------------------------------------------------

Previous runs:

------------------------------------------------------------

Started = March 23, 23 14:49:12

Ended = March 23, 23 14:58:14

Result location = /oracle/app/grid/oracle.ahf/data/rac1/orachk/user_oracle/output

Arguments = -silentforce

Status = Success

------------------------------------------------------------

Started = March 23, 23 13:20:42

Ended = March 23, 23 13:29:20

Result location = /oracle/app/grid/oracle.ahf/data/rac1/orachk/user_root/output

Arguments = -silentforce

Status = Success

------------------------------------------------------------

Next auto run starts on Mar 16, 2024 23:06:00

ID:orachk.insight_client
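After `-autostart`, `-autostatus` now reports rac2 as the master node. The most recent previous run (14:49:12 to 14:58:14) is the manual one, written to `user_oracle/output` on rac1.

To compare nodes or two points in time quickly, the hypothetical helper below condenses the status text into one line. It assumes the `key = value` layout shown above.

```shell
#!/usr/bin/env bash
# autostatus_summary (hypothetical): condense "orachk -autostatus" text from
# stdin into "master=<node> runs=<n> success=<n>".
autostatus_summary() {
  awk -F' = ' '
    { sub(/[ \t\r]+$/, "") }                 # drop trailing whitespace
    $1 == "Master node" { master = $2 }
    $1 == "Status"      { runs++; if ($2 == "Success") ok++ }
    END { printf "master=%s runs=%d success=%d\n", master, runs, ok }
  '
}

# Example (not executed here): orachk -autostatus | autostatus_summary
```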

[root@rac1 ~]# orachk

Clusterware stack is running from /oracle/app/19c/grid. Is this the correct Clusterware Home?[y/n][y]

Searching for running databases . . . . .

. .

List of running databases registered in OCR

1. cis

2. None of above

Select databases from list for checking best practices. For multiple databases, select 1 for All or comma separated number like 1,2 etc [1-2][1].

. . . . . .

Either Cluster Verification Utility pack (cvupack) does not exist at /opt/oracle.ahf/common/cvu or it is an old or invalid cvupack

Checking Cluster Verification Utility (CVU) version at CRS Home - /oracle/app/19c/grid

Starting to run orachk in background on rac2 using socket

.

. . . .

. .

Checking Status of Oracle Software Stack - Clusterware, ASM, RDBMS on rac1

. . . . . .

. . . . . . . . . . . . .

-------------------------------------------------------------------------------------------------------

Oracle Stack Status

-------------------------------------------------------------------------------------------------------

Host Name CRS Installed RDBMS Installed CRS UP ASM UP RDBMS UP DB Instance Name

-------------------------------------------------------------------------------------------------------

rac1 Yes Yes Yes Yes Yes cis1

-------------------------------------------------------------------------------------------------------

.

. . . . . .

.

.

*** Checking Best Practice Recommendations ( Pass / Warning / Fail ) ***

.

============================================================

Node name - rac1

============================================================

. . . . . .

Collecting - ASM Disk Groups

Collecting - ASM Disk I/O stats

Collecting - ASM Diskgroup Attributes

Collecting - ASM disk partnership imbalance

Collecting - ASM diskgroup attributes

Collecting - ASM diskgroup usable free space

Collecting - ASM initialization parameters

Collecting - Active sessions load balance for cis database

Collecting - Archived Destination Status for cis database

Collecting - Cluster Interconnect Config for cis database

Collecting - Database Archive Destinations for cis database

Collecting - Database Files for cis database

Collecting - Database Instance Settings for cis database

Collecting - Database Parameters for cis database

Collecting - Database Properties for cis database

Collecting - Database Registry for cis database

Collecting - Database Sequences for cis database

Collecting - Database Undocumented Parameters for cis database

Collecting - Database Undocumented Parameters for cis database

Collecting - Database Workload Services for cis database

Collecting - Dataguard Status for cis database

Collecting - Files not opened by ASM

Collecting - List of active logon and logoff triggers for cis database

Collecting - Log Sequence Numbers for cis database

Collecting - Percentage of asm disk Imbalance

Collecting - Process for shipping Redo to standby for cis database

Collecting - Redo Log information for cis database

Collecting - Standby redo log creation status before switchover for cis database

Collecting - /proc/cmdline

Collecting - /proc/modules

Collecting - CPU Information

Collecting - CRS active version

Collecting - CRS oifcfg

Collecting - CRS software version

Collecting - CSS Reboot time

Collecting - Cluster interconnect (clusterware)

Collecting - Clusterware OCR healthcheck

Collecting - Clusterware Resource Status

Collecting - Disk I/O Scheduler on Linux

Collecting - DiskFree Information

Collecting - DiskMount Information

Collecting - Huge pages configuration

Collecting - Interconnect network card speed

Collecting - Kernel parameters

Collecting - Linux module config.

Collecting - Maximum number of semaphore sets on system

Collecting - Maximum number of semaphores on system

Collecting - Maximum number of semaphores per semaphore set

Collecting - Memory Information

Collecting - NUMA Configuration

Collecting - Network Interface Configuration

Collecting - Network Performance

Collecting - Network Service Switch

Collecting - OS Packages

Collecting - OS version

Collecting - Operating system release information and kernel version

Collecting - Oracle executable attributes

Collecting - Patches for Grid Infrastructure

Collecting - Patches for RDBMS Home

Collecting - RDBMS and GRID software owner UID across cluster

Collecting - RDBMS patch inventory

Collecting - Shared memory segments

Collecting - Table of file system defaults

Collecting - Voting disks (clusterware)

Collecting - number of semaphore operations per semop system call

Collecting - CHMAnalyzer to report potential Operating system resources usage

[root@rac1 ~]# tfactl status

.-------------------------------------------------------------------------------------------.

| Host | Status of TFA | PID | Port | Version | Build ID | Inventory Status |

+------+---------------+------+------+------------+----------------------+------------------+

| rac1 | RUNNING | 4141 | 5000 | 23.2.0.0.0 | 23200020230302111526 | COMPLETE |

| rac2 | RUNNING | 4076 | 5000 | 23.2.0.0.0 | 23200020230302111526 | COMPLETE |

'------+---------------+------+------+------------+----------------------+------------------'
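The `tfactl status` table shows both nodes RUNNING on the same build (23200020230302111526). The hypothetical sketch below asserts that in a script. It assumes the column order shown above: Host, Status of TFA, PID, Port, Version, Build ID, Inventory Status.

```shell
#!/usr/bin/env bash
# tfa_cluster_healthy (hypothetical): read "tfactl status" output on stdin;
# return 0 only if every host is RUNNING and all hosts share one Build ID.
tfa_cluster_healthy() {
  awk -F'|' '
    NF >= 9 {
      for (i = 2; i <= 8; i++) gsub(/^[ \t]+|[ \t]+$/, "", $i)
      if ($2 == "" || $2 == "Host") next      # skip the header row
      rows++
      if ($3 != "RUNNING") { print $2 ": TFA status is " $3; bad++ }
      if (build == "") build = $7
      else if ($7 != build) { print $2 ": build " $7 " differs from " build; bad++ }
    }
    END { if (rows > 0 && bad == 0) exit 0; exit 1 }
  '
}

# Example (not executed here): tfactl status | tfa_cluster_healthy
```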

[root@rac1 ~]# tfactl print config

.-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------.

| rac1 |

+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+------------+

| Configuration Parameter | Value |

+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+------------+

| TFA Version ( tfaversion ) | 23.2.0.0.0 |

| Java Version ( javaVersion ) | 1.8 |

| Public IP Network ( publicIp ) | true |

| Repository current size (MB) ( currentsizemegabytes ) | 903 |

| Repository maximum size (MB) ( maxsizemegabytes ) | 10240 |

| Enables the execution of sqls throught SQL Agent process ( sqlAgent ) | ON |

| Cluster Event Monitor ( clustereventmonitor ) | ON |

| scanacfslog | OFF |

| Automatic Purging ( autoPurge ) | ON |

| Internal Search String ( internalSearchString ) | ON |

| Trim Files ( trimfiles ) | ON |

| collectTrm | OFF |

| chmdataapi | ON |

| chanotification ( chanotification ) | ON |

| reloadCrsDataAfterBlackout | OFF |

| Consolidate similar events (COUNT shows number of events occurences) ( consolidate_events ) | OFF |

| Managelogs Auto Purge ( manageLogsAutoPurge ) | OFF |

| Applin Incidents automatic Collections ( applin_incidents ) | OFF |

| Alert Log Scan ( rtscan ) | ON |

| generateZipMetadataJson | ON |

| singlefileupload | OFF |

| Auto Sync Certificates ( autosynccertificates ) | ON |

| Auto Diagcollection ( autodiagcollect ) | ON |

| smartprobclassifier | ON |

| Send CEF metrics to OCI Monitoring ( defaultOciMonitoring ) | OFF |

| Generation of Mini Collections ( minicollection ) | ON |

| Disk Usage Monitor ( diskUsageMon ) | ON |

| Send user events ( send_user_initiated_events ) | ON |

| Generation of Auto Collections from alarm definitions ( alarmAutocollect ) | ON |

| Send audit event for collections ( cloudOpsLogCollection ) | OFF |

| analyze | OFF |

| Generation of Telemetry Data ( telemetry ) | OFF |

| chaautocollect | OFF |

| queryAPI | ON |

| scandiskmon | OFF |

| File Data Collection ( inventory ) | ON |

| ISA Data Gathering ( collection.isa ) | ON |

| Skip event if it was flood controlled ( floodcontrol_events ) | OFF |

| Automatic AHF Insights Report Generation ( autoInsight ) | OFF |

| collectonsystemstate | ON |

| chmretention | OFF |

| scanacfseventlog | OFF |

| debugips | OFF |

| collectAllDirsByFile | ON |

| scanvarlog | OFF |

| restart_telemetry_adapter | OFF |

| Telemetry Adapter ( telemetry_adapter ) | OFF |

| Public IP Network ( publicIp ) | ON |

| Flood Control ( floodcontrol ) | ON |

| odscan | ON |

| Start consuming data provided by SQLTicker ( sqlticker ) | OFF |

| Discovery ( discovery ) | ON |

| indexInventory | ON |

| Send CEF notifications ( customerDiagnosticsNotifications ) | OFF |

| Granular Tracing ( granulartracing ) | OFF |

| Send CEF metrics ( cloudOpsHealthMonitoring ) | OFF |

| minPossibleSpaceForPurge | 1024 |

| disk.threshold | 90 |

| Minimum space in MB required to run TFA. TFA will be stopped until at least this amount of space is available in the DATA Directory (Takes effect at next startup) ( minSpaceToRunTFA ) | 20 |

| mem.swapfree | 5120 |

| mem.util.samples | 4 |

| Max Number Cells for Diag Collection ( maxcollectedcells ) | 14 |

| inventoryThreadPoolSize | 1 |

| mem.swaptotal.samples | 2 |

| maxFileAgeToPurge | 1440 |

| mem.free | 20480 |

| actionrestartlimit | 30 |

| Minimum Free Space to enable Alert Log Scan (MB) ( minSpaceForRTScan ) | 500 |

| cpu.io.samples | 30 |

| mem.util | 80 |

| Waiting period before retry loading OTTO endpoint details (minutes) ( ottoEndpointRetryPolicy ) | 60 |

| Maximum single Zip File Size (MB) ( maxZipSize ) | 2048 |

| Time interval between consecutive Disk Usage Snapshot(minutes) ( diskUsageMonInterval ) | 60 |

| TFA ISA Purge Thread Delay (minutes) ( tfaDbUtlPurgeThreadDelay ) | 60 |

| firstDiscovery | 1 |

| TFA IPS Pool Size ( tfaIpsPoolSize ) | 5 |

| Maximum File Collection Size (MB) ( maxFileCollectionSize ) | 1024 |

| Time interval between consecutive Managelogs Auto Purge(minutes) ( manageLogsAutoPurgeInterval ) | 60 |

| arc.backupmissing.samples | 2 |

| cpu.util.samples | 2 |

| cpu.usr.samples | 2 |

| cpu.sys | 50 |

| Flood Control Limit Count ( fc.limit ) | 3 |

| Flood Control Pause Time (minutes) ( fc.pauseTime ) | 120 |

| Maximum Number of TFA Logs ( maxLogCount ) | 10 |

| DB Backup Delay Hours ( dbbackupdelayhours ) | 27 |

| cdb.backup.samples | 1 |

| arc.backupstatus | 1 |

| Automatic Purging Frequency ( purgeFrequency ) | 4 |

| TFA ISA Purge Age (seconds) ( tfaDbUtlPurgeAge ) | 2592000 |

| Maximum Collection Size of Core Files (MB) ( maxCoreCollectionSize ) | 500 |

| Maximum Compliance Index Size (MB) ( maxcompliancesize ) | 150 |

| cpu.util | 80 |

| Maximum Size in MB allowed for alert file inside IPS Zip ( ipsAlertlogTrimsizeMB ) | 20 |

| mem.swapfree.samples | 2 |

| cdb.backupstatus | 1 |

| mem.swaputl.samples | 2 |

| arc.backup.samples | 3 |

| unreachablenodeTimeOut | 3600 |

| Flood Control Limit Time (minutes) ( fc.limitTime ) | 60 |

| mem.swaputl | 10 |

| mem.free.samples | 2 |

| Maximum Size of Core File (MB) ( maxCoreFileSize ) | 50 |

| disk.samples | 1 |

| cpu.sys.samples | 30 |

| cpu.usr | 98 |

| arc.backupmissing | 1 |

| cpu.io | 20 |

| Archive Backup Delay Minutes ( archbackupdelaymins ) | 40 |

| Allowed Sqlticker Delay in Minutes ( sqltickerdelay ) | 3 |

| inventoryPurgeThreadInterval | 720 |

| Age of Purging Collections (Hours) ( minFileAgeToPurge ) | 12 |

| cpu.idle.samples | 2 |

| unreachablenodeSleepTime | 300 |

| cpu.idle | 20 |

| mem.swaptotal | 24 |

| TFA ISA CRS Profile Delay (minutes) ( tfaDbUtlCrsProfileDelay ) | 2 |

| Frequency at which the fleet configurations will be updated ( cloudRefreshConfigRate ) | 60 |

| Maximum Compliance Runs to be Indexed ( maxcomplianceruns ) | 100 |

| cdb.backupmissing | 1 |

| cdb.backupmissing.samples | 2 |

| Trim Size ( trimsize ) | 488.28 KB |

| Maximum Size of TFA Log (MB) ( maxLogSize ) | 52428800 |

| minTimeForAutoDiagCollection | 300 |

| skipScanThreshold | 100 |

| fileCountInventorySwitch | 5000 |

| TFA ISA Purge Mode ( tfaDbUtlPurgeMode ) | simple |

| country | US |

| Debug Mask (Hex) ( debugmask ) | 0x000000 |

| Object Store Secure Upload ( oss.secure.upload ) | true |

| Rediscovery Interval ( rediscoveryInterval ) | 30m |

| Setting for ACR redaction (none|SANITIZE|MASK) ( redact ) | none |

| language | en |

| AlertLogLevel | ALL |

| BaseLogPath | ERROR |

| encoding | UTF-8 |

| Lucene index recovery mode ( indexRecoveryMode ) | recreate |

| Automatic Purge Strategy ( purgeStrategy ) | SIZE |

| UserLogLevel | ALL |

| Logs older than the time period will be auto purged(days[d]|hours[h]) ( manageLogsAutoPurgePolicyAge ) | 30d |

| isaMode | enabled |

'------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+------------'
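The configuration table is long. To read one setting, such as the Alert Log Scan flag (`rtscan`), you can look up a row by the key shown in parentheses or by a bare key such as `scanacfslog`. The helper below is a hypothetical sketch that assumes the two-column `| name | value |` layout above. It returns the first match; note that `publicIp` appears twice in this output.

```shell
#!/usr/bin/env bash
# tfa_config_value KEY (hypothetical): print the value of KEY from
# "tfactl print config" output read on stdin (first match only).
tfa_config_value() {
  awk -F'|' -v key="$1" '
    NF >= 4 {
      name = $2; val = $3
      gsub(/^[ \t]+|[ \t]+$/, "", name)
      gsub(/^[ \t]+|[ \t]+$/, "", val)
      # Rows are either "Description ( key )" or just "key"; index() is literal.
      if (name == key || index(name, "( " key " )") > 0) { print val; exit }
    }
  '
}

# Example (not executed here): tfactl print config | tfa_config_value rtscan
```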

[root@rac1 ~]# tfactl toolstatus

Running command tfactltoolstatus on rac2 ...

.------------------------------------------------------------------.

| TOOLS STATUS - HOST : rac2 |

+----------------------+--------------+--------------+-------------+

| Tool Type | Tool | Version | Status |

+----------------------+--------------+--------------+-------------+

| AHF Utilities | alertsummary | 23.2.0 | DEPLOYED |

| | calog | 23.2.0 | DEPLOYED |

| | dbglevel | 23.2.0 | DEPLOYED |

| | grep | 23.2.0 | DEPLOYED |

| | history | 23.2.0 | DEPLOYED |

| | ls | 23.2.0 | DEPLOYED |

| | managelogs | 23.2.0 | DEPLOYED |

| | menu | 23.2.0 | DEPLOYED |

| | orachk | 23.2.0 | DEPLOYED |

| | param | 23.2.0 | DEPLOYED |

| | ps | 23.2.0 | DEPLOYED |

| | pstack | 23.2.0 | DEPLOYED |

| | summary | 23.2.0 | DEPLOYED |

| | tail | 23.2.0 | DEPLOYED |

| | triage | 23.2.0 | DEPLOYED |

| | vi | 23.2.0 | DEPLOYED |

+----------------------+--------------+--------------+-------------+

| Development Tools | oratop | 14.1.2 | DEPLOYED |

+----------------------+--------------+--------------+-------------+

| Support Tools Bundle | darda | 2.10.0.R6036 | DEPLOYED |

| | oswbb | 22.1.0AHF | RUNNING |

| | prw | 12.1.13.11.4 | NOT RUNNING |

'----------------------+--------------+--------------+-------------'

Note :-

DEPLOYED : Installed and Available - To be configured or run interactively.

NOT RUNNING : Configured and Available - Currently turned off interactively.

RUNNING : Configured and Available.

.------------------------------------------------------------------.

| TOOLS STATUS - HOST : rac1 |

+----------------------+--------------+--------------+-------------+

| Tool Type | Tool | Version | Status |

+----------------------+--------------+--------------+-------------+

| AHF Utilities | alertsummary | 23.2.0 | DEPLOYED |

| | calog | 23.2.0 | DEPLOYED |

| | dbglevel | 23.2.0 | DEPLOYED |

| | grep | 23.2.0 | DEPLOYED |

| | history | 23.2.0 | DEPLOYED |

| | ls | 23.2.0 | DEPLOYED |

| | managelogs | 23.2.0 | DEPLOYED |

| | menu | 23.2.0 | DEPLOYED |

| | orachk | 23.2.0 | DEPLOYED |

| | param | 23.2.0 | DEPLOYED |

| | ps | 23.2.0 | DEPLOYED |

| | pstack | 23.2.0 | DEPLOYED |

| | summary | 23.2.0 | DEPLOYED |

| | tail | 23.2.0 | DEPLOYED |

| | triage | 23.2.0 | DEPLOYED |

| | vi | 23.2.0 | DEPLOYED |

+----------------------+--------------+--------------+-------------+

| Development Tools | oratop | 14.1.2 | DEPLOYED |

+----------------------+--------------+--------------+-------------+

| Support Tools Bundle | darda | 2.10.0.R6036 | DEPLOYED |

| | oswbb | 22.1.0AHF | RUNNING |

| | prw | 12.1.13.11.4 | NOT RUNNING |

'----------------------+--------------+--------------+-------------'

Note :-

DEPLOYED : Installed and Available - To be configured or run interactively.

NOT RUNNING : Configured and Available - Currently turned off interactively.

RUNNING : Configured and Available.
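In both toolstatus tables every tool is DEPLOYED or RUNNING except prw. prw is NOT RUNNING, which per the note means configured and available but turned off. The hypothetical sketch below lists the tools in a given state per host. It assumes the table layout shown above, where the Tool Type cell is blank on continuation rows.

```shell
#!/usr/bin/env bash
# tools_with_status STATUS (hypothetical): read "tfactl toolstatus" output on
# stdin and print "<host> <tool>" for every tool whose status equals STATUS.
tools_with_status() {
  awk -F'|' -v want="$1" '
    # Section header: | TOOLS STATUS - HOST : rac2 |
    /TOOLS STATUS - HOST :/ {
      host = $0
      sub(/.*HOST : */, "", host)
      sub(/[ \t]*[|].*$/, "", host)
      next
    }
    # Tool rows: | Tool Type | Tool | Version | Status |
    NF >= 6 {
      tool = $3; st = $5
      gsub(/^[ \t]+|[ \t]+$/, "", tool)
      gsub(/^[ \t]+|[ \t]+$/, "", st)
      if (tool != "" && tool != "Tool" && st == want) print host, tool
    }
  '
}

# Example (not executed here): tfactl toolstatus | tools_with_status 'NOT RUNNING'
```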

References:

https://support.oracle.com/epmos/faces/CommunityDisplay?resultUrl=https%3A%2F%2Fcommunity.oracle.com%2Fmosc%2Fdiscussion%2Fcomment%2F16908759&_afrLoop=350147762629898&resultTitle=Re%3A+ora-17503+ora-27301+ora-1017+duration+10+second+at+02%3A04+every+day&commId=&displayIndex=1&_afrWindowMode=0&_adf.ctrl-state=1ajw0ejz6e_231#Comment_16908759

http://blog.itpub.net/26442936/viewspace-2878853/