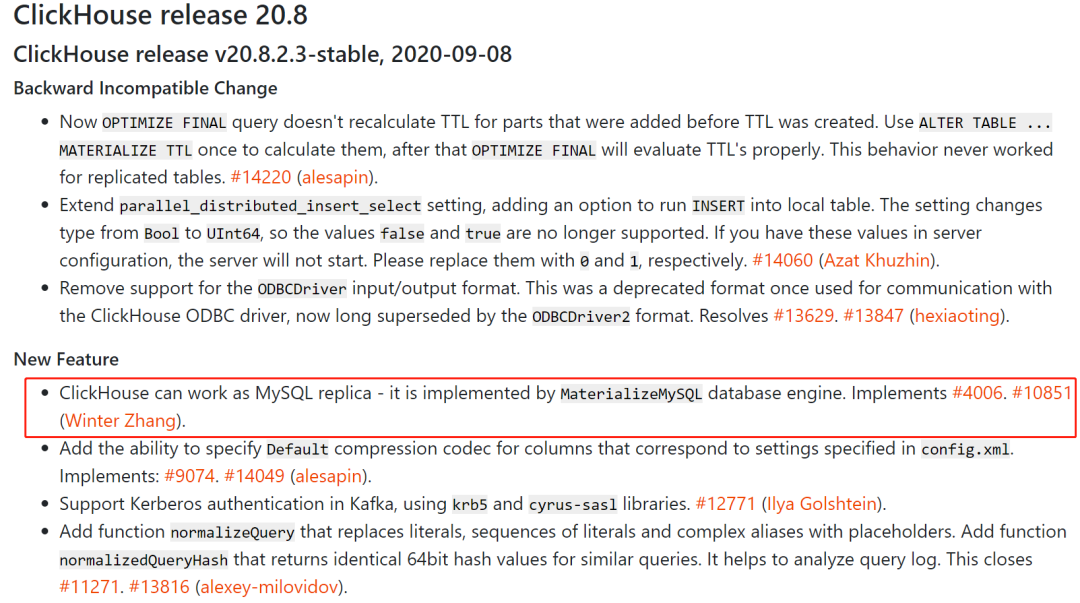

ClickHouse的数据同步功能一直是万众期待,之前有一些开源工具来做,比如Bifrost、mysql-clickhouse-replication、clickhouse-mysql-data-reader 等。

这个月官方终于推出了带同步功能的稳定版本--20.8. X。值得注意的是,该功能是两位国人技术大牛 BohuTANG 和 zhang1024 提交的。

但根据小道消息,目前这个稳定版的同步功能还有些小问题,因此今天的测试使用了最新的测试版本,而非稳定版。

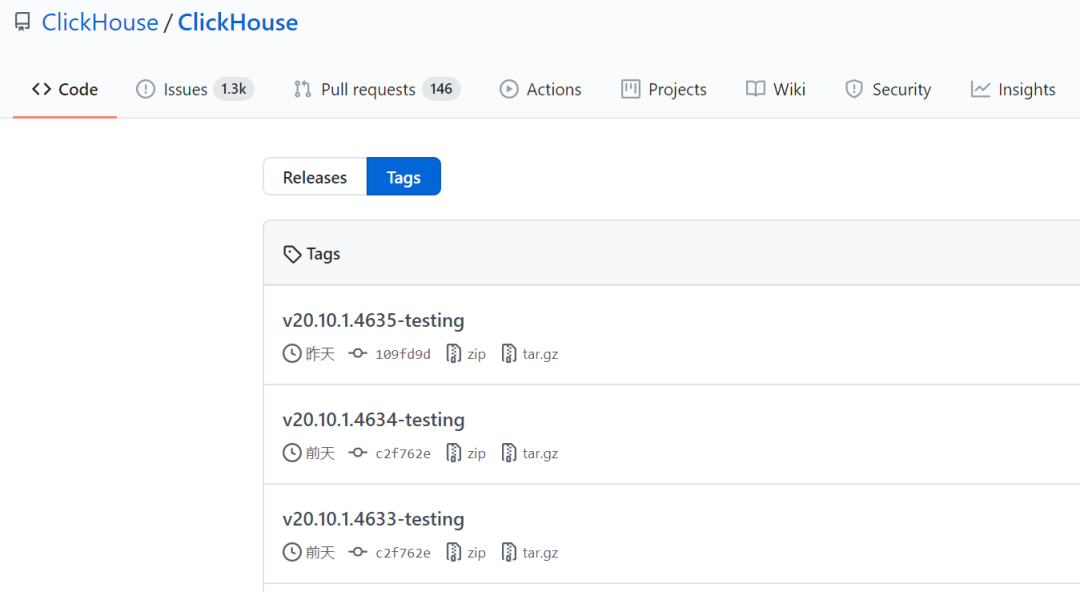

1 版本选择

以下罗列了所有版本tag

https://github.com/ClickHouse/ClickHouse/tags

可以看到最新的测试版是:v20.10.1.4635-testing

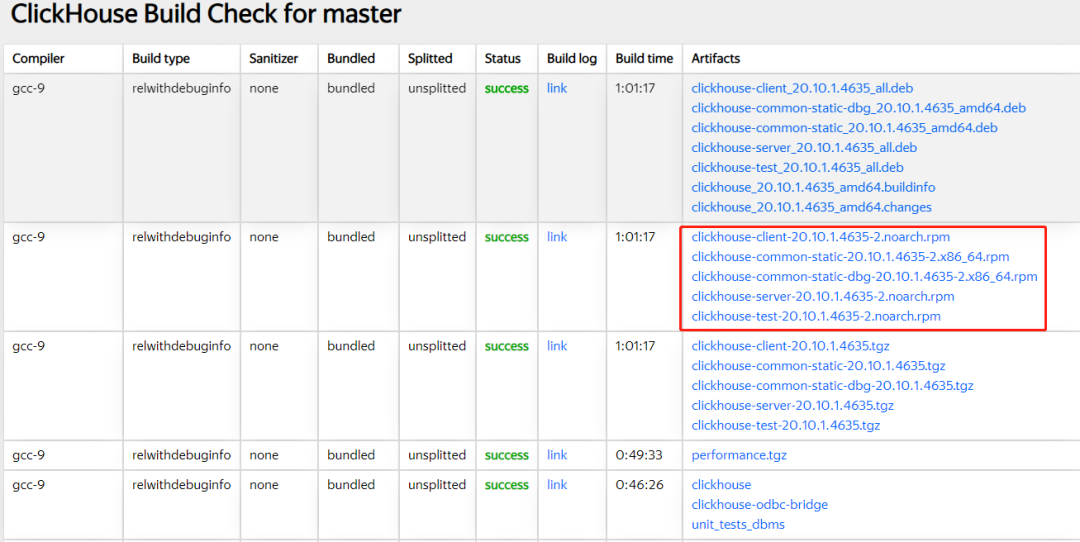

编译好的RPM包大全

https://clickhouse-builds.s3.yandex.net/0/109fd9d6d7a0e053bc8272dbef25febb9ba9efc1/clickhouse_build_check/report.html复制

找到Linux下的rpm包下载即可

2 安装新版本

# 找了个测试环境,检查当前CH版本:

[root@bj79 ~]# rpm -qa|grep clickclickhouse-common-static-20.3.5.21-2.x86_64clickhouse-client-20.3.5.21-2.noarchclickhouse-server-20.3.5.21-2.noarch复制

# 停CH服务

[root@bj79 ~]# service clickhouse-server statusclickhouse-server service is running[root@bj79 ~]#[root@bj79 ~]# service clickhouse-server stopStop clickhouse-server service: DONE复制

# 准备升级包

[root@bj79 ch_20]# lltotal 138620-rw-r--r-- 1 root root 125126 Sep 14 11:28 clickhouse-client-20.10.1.4635-2.noarch.rpm-rw-r--r-- 1 root root 141662768 Sep 14 11:30 clickhouse-common-static-20.10.1.4635-2.x86_64.rpm-rw-r--r-- 1 root root 149103 Sep 14 11:29 clickhouse-server-20.10.1.4635-2.noarch.rpm复制

# 版本升级

[root@bj79 ch_20]# rpm -Uvh clickhouse*.rpmPreparing... ################################# [100%]Updating installing...1:clickhouse-common-static-20.10.1.################################# [ 17%]2:clickhouse-client-20.10.1.4635-2 ################################# [ 33%]3:clickhouse-server-20.10.1.4635-2 ################################# [ 50%]Path to data directory in etc/clickhouse-server/config.xml: var/lib/clickhouse/Cleaning up removing...4:clickhouse-server-20.3.5.21-2 ################################# [ 67%]5:clickhouse-client-20.3.5.21-2 ################################# [ 83%]6:clickhouse-common-static-20.3.5.2################################# [100%][root@bj79 ch_20]#复制

# 启动服务,检查版本已经更新成功

[root@bj79 ch_20]# systemctl start clickhouse-server[root@bj79 ch_20]#[root@bj79 ch_20]# clickhouse-client -mClickHouse client version 20.10.1.4635 (official build).Connecting to localhost:9000 as user default.Connected to ClickHouse server version 20.10.1 revision 54440.bj79 :)bj79 :) show databases;SHOW DATABASES┌─name───────────────────────────┐│ _temporary_and_external_tables ││ default ││ system │└────────────────────────────────┘3 rows in set. Elapsed: 0.002 sec.复制

3 准备测试数据

# MySQL:建立复制的用户

GRANT REPLICATION SLAVE,REPLICATION CLIENT,RELOAD,SELECT ON *.* to 'ch_repl'@'%' identified by 'Ch_repl_123';复制

# 建立测试表:

CREATE TABLE `t` (`id` int(11) NOT NULL,`a` int(11) DEFAULT NULL,`b` int(11) DEFAULT NULL,PRIMARY KEY (`id`),KEY `a` (`a`),KEY `b` (`b`)) ENGINE=InnoDB;复制

# 生成10万的测试数据:

delimiter ;;create procedure idata()begindeclare i int;set i=1;while(i<=100000)doinsert into t values(i, i, i);set i=i+1;end while;end;;delimiter ;复制

# 执行时间很慢:

[root@localhost][caihao_test]> call idata();Query OK, 1 row affected (6 min 48.02 sec)复制

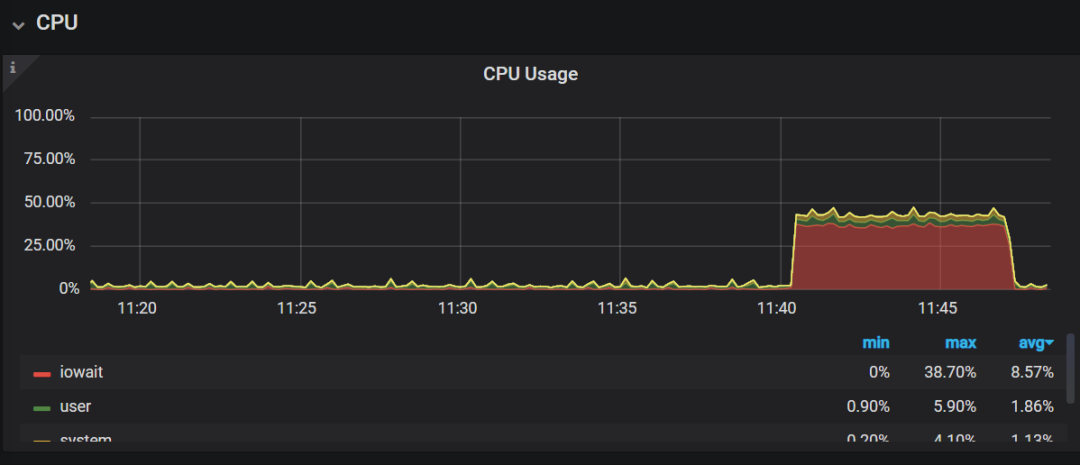

# 期间的系统负载:

# 为了方便观察,MySQL上开启general_log

[root@localhost][caihao_test]> set global general_log=1;Query OK, 0 rows affected (0.00 sec)复制

4 开始数据同步

# 语法的格式:

CREATE DATABASE ckdb ENGINE = MaterializeMySQL('[mysql-host]:[mysql-port]', '[mysql-database]', '[mysql-user]', '[mysql-password]');复制

# 执行同步:

bj79 :) SET allow_experimental_database_materialize_mysql=1;SET allow_experimental_database_materialize_mysql = 1Ok.0 rows in set. Elapsed: 0.001 sec.bj79 :) CREATE DATABASE caihao_test ENGINE = MaterializeMySQL('192.168.0.77:3306', 'caihao_test', 'ch_repl', 'Ch_repl_123');CREATE DATABASE caihao_testENGINE = MaterializeMySQL('192.168.0.77:3306', 'caihao_test', 'ch_repl', 'Ch_repl_123')Ok.0 rows in set. Elapsed: 0.056 sec.复制

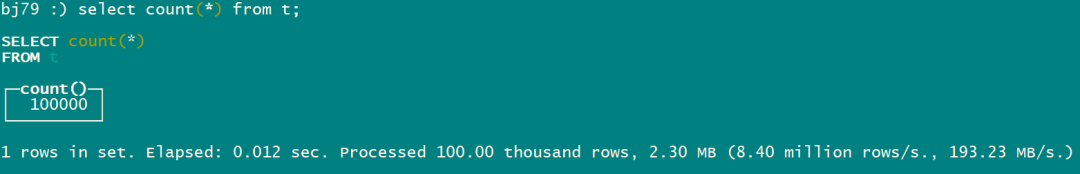

# 检查同步后的数据量:

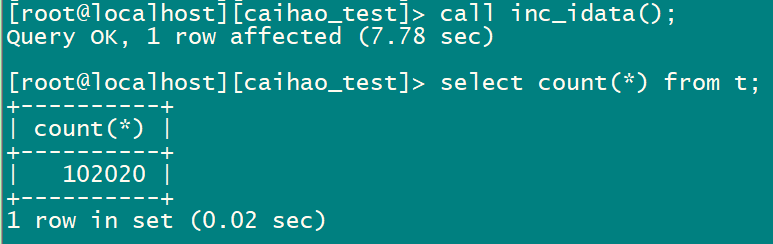

# MySQL端,继续插入2020条数据:

delimiter ;;create procedure inc_idata()begindeclare i int;set i=100001;while(i<=102020)doinsert into t values(i, i, i);set i=i+1;end while;end;;delimiter ;call inc_idata();复制

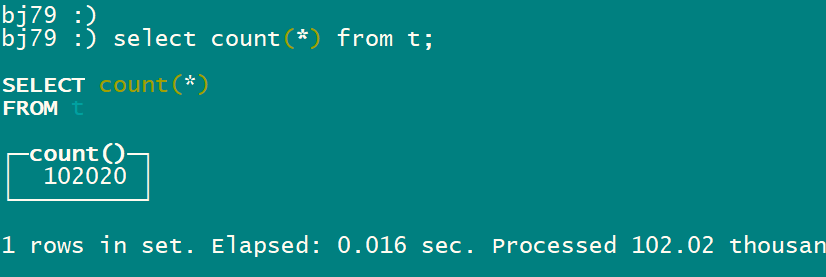

# 对比两边数据一致,增量数据一条不少

# 两边的位点对比:

# 源端MySQL:[root@localhost][(none)]> show master status\G*************************** 1. row ***************************File: binlog.000001Position: 32936647Binlog_Do_DB:Binlog_Ignore_DB:Executed_Gtid_Set: 431b0ec6-3561-11ea-a9a1-00163e0ec877:1-1001151 row in set (0.00 sec)# 目标端CH:[root@bj79 caihao_test]# more var/lib/clickhouse/metadata/caihao_test/.metadataVersion: 2Binlog File: binlog.000001Executed GTID: 431b0ec6-3561-11ea-a9a1-00163e0ec877:1-100115Binlog Position: 32936647Data Version: 1复制

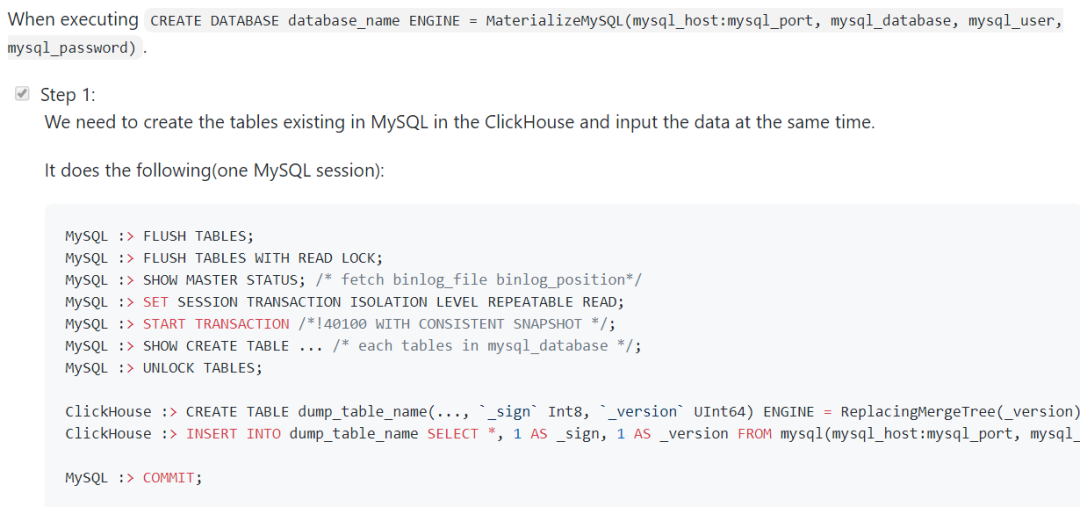

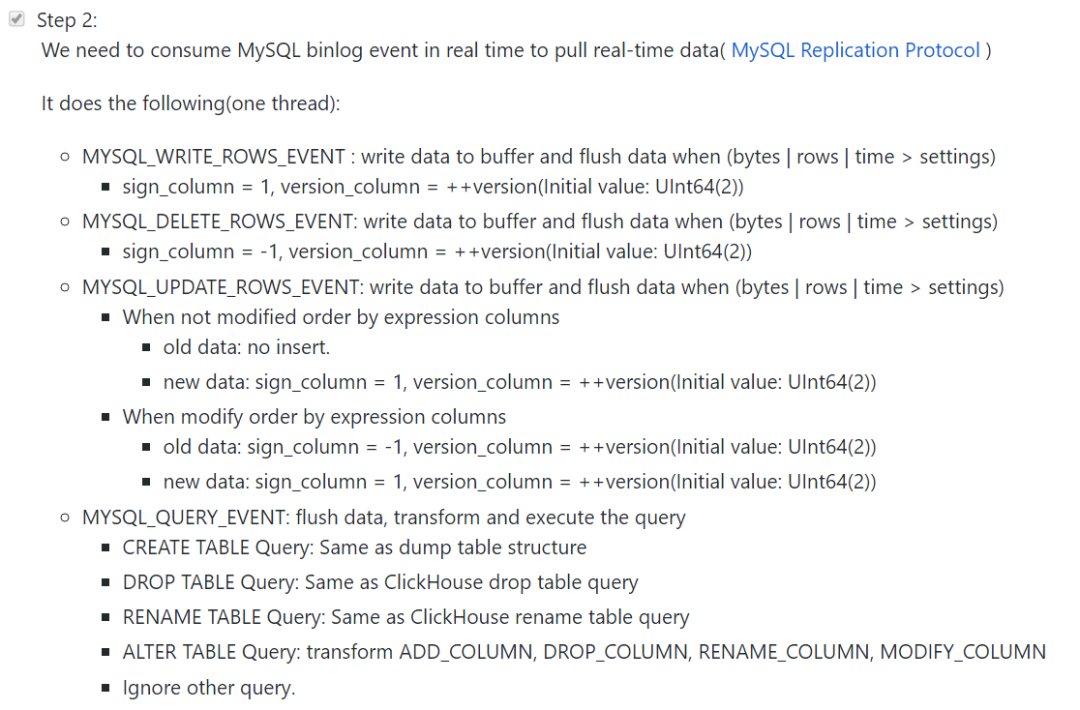

5 数据同步过程

在下面的地址中,作者简要记录了一下数据同步的过程:

https://github.com/ClickHouse/ClickHouse/issues/4006

通过观察上面同步过程的日志,也可以看到一些中间的操作过程

----------------- MySQL的日志 -----------------

2020-09-15T15:09:56.963739+08:00 1056 Query SELECT @@global.read_only2020-09-15T15:09:57.503217+08:00 24476 Connect ch_repl@192.168.0.79 on caihao_test using SSL/TLS2020-09-15T15:09:57.503523+08:00 24476 Query SET NAMES utf82020-09-15T15:09:57.503971+08:00 24476 Query SHOW VARIABLES WHERE (Variable_name = 'log_bin' AND upper(Value) = 'ON') OR (Variable_name = 'binlog_format' AND upper(Value) = 'ROW') OR (Variable_name = 'binlog_row_image' AND upper(Value) = 'FULL') OR (Variable_name = 'default_authentication_plugin' AND upper(Value) = 'MYSQL_NATIVE_PASSWORD')2020-09-15T15:09:57.504854+08:00 24476 Query SELECT version() AS version2020-09-15T15:09:57.505242+08:00 24476 Query FLUSH TABLES2020-09-15T15:09:57.520117+08:00 24476 Query FLUSH TABLES WITH READ LOCK2020-09-15T15:09:57.520408+08:00 24476 Query SHOW MASTER STATUS2020-09-15T15:09:57.520756+08:00 24476 Query SET SESSION TRANSACTION ISOLATION LEVEL REPEATABLE READ2020-09-15T15:09:57.520985+08:00 24476 Query START TRANSACTION /*!40100 WITH CONSISTENT SNAPSHOT */2020-09-15T15:09:57.521274+08:00 24476 Query SELECT TABLE_NAME AS table_name FROM INFORMATION_SCHEMA.TABLES WHERE TABLE_SCHEMA = 'caihao_test'2020-09-15T15:09:57.521723+08:00 24476 Query SHOW CREATE TABLE caihao_test.t2020-09-15T15:09:57.522263+08:00 24476 Query UNLOCK TABLES2020-09-15T15:09:57.531608+08:00 24476 Query SELECT * FROM caihao_test.t2020-09-15T15:09:57.614572+08:00 24476 Query COMMIT2020-09-15T15:09:57.615380+08:00 24477 Connect ch_repl@192.168.0.79 on using TCP/IP2020-09-15T15:09:57.615574+08:00 24477 Query SET @master_binlog_checksum = 'CRC32'2020-09-15T15:09:57.615742+08:00 24477 Query SET @master_heartbeat_period = 10000000002020-09-15T15:09:57.615997+08:00 24477 Binlog Dump GTID Log: '' Pos: 4 GTIDs: '431b0ec6-3561-11ea-a9a1-00163e0ec877:1-100115'复制

----------------- CH的日志 -----------------

2020.09.15 15:09:57.491745 [ 26310 ] {5b265ba7-183c-4399-85ce-c7f696cf1d9b} <Debug> executeQuery: (from 127.0.0.1:57674) CREATE DATABASE caihao_test ENGINE = MaterializeMySQL('192.168.0.77:3306', 'caihao_test', 'ch_repl', 'Ch_repl_123');2020.09.15 15:09:57.491890 [ 26310 ] {5b265ba7-183c-4399-85ce-c7f696cf1d9b} <Trace> ContextAccess (default): Access granted: CREATE DATABASE ON caihao_test.*2020.09.15 15:09:57.494874 [ 26310 ] {5b265ba7-183c-4399-85ce-c7f696cf1d9b} <Information> DatabaseOrdinary (caihao_test): Total 0 tables and 0 dictionaries.2020.09.15 15:09:57.494890 [ 26310 ] {5b265ba7-183c-4399-85ce-c7f696cf1d9b} <Information> DatabaseOrdinary (caihao_test): Starting up tables.2020.09.15 15:09:57.494899 [ 26310 ] {5b265ba7-183c-4399-85ce-c7f696cf1d9b} <Information> Application: MYSQL: Connecting to caihao_test@192.168.0.77:3306 as user ch_repl2020.09.15 15:09:57.504937 [ 26310 ] {5b265ba7-183c-4399-85ce-c7f696cf1d9b} <Debug> MemoryTracker: Peak memory usage (for query): 0.00 B.2020.09.15 15:09:57.505069 [ 26310 ] {} <Information> TCPHandler: Processed in 0.013530009 sec.2020.09.15 15:09:57.525443 [ 26340 ] {} <Debug> MemoryTracker: Peak memory usage (for query): 0.00 B.2020.09.15 15:09:57.525945 [ 26340 ] {} <Debug> executeQuery: (internal) /*Materialize MySQL step 1: execute MySQL DDL for dump data*/ EXTERNAL DDL FROM MySQL(caihao_test, caihao_test) CREATE TABLE `t` ( `id` int(11) NOT NULL, `a` int(11) DEFAULT NULL, `b` int(11) DEFAULT NULL, PRIMARY KEY (`id`), KEY `a` (`a`), KEY `b` (`b`) ) ENGINE=InnoDB DEFAULT CHARSET=utf8mb42020.09.15 15:09:57.526682 [ 26340 ] {} <Debug> executeQuery: (internal) /* Rewritten MySQL DDL Query */ CREATE TABLE caihao_test.t (`id` Int32, `a` Nullable(Int32), `b` Nullable(Int32), `_sign` Int8() MATERIALIZED 1, `_version` UInt64() MATERIALIZED 1) ENGINE = ReplacingMergeTree(_version) PARTITION BY intDiv(id, 4294967) ORDER BY (id, assumeNotNull(a), assumeNotNull(b))2020.09.15 15:09:57.527544 [ 26340 ] {} <Debug> caihao_test.t: Loading data parts2020.09.15 15:09:57.527710 [ 26340 ] {} <Debug> caihao_test.t: Loaded data parts (0 items)2020.09.15 15:09:57.530654 [ 26340 ] {} <Debug> executeQuery: (internal) /*Materialize MySQL step 1: execute dump data*/ INSERT INTO t(id, a, b) VALUES2020.09.15 15:09:57.610028 [ 26340 ] {} <Debug> DiskLocal: Reserving 2.19 MiB on disk `default`, having unreserved 127.86 GiB.2020.09.15 15:09:57.614024 [ 26340 ] {} <Trace> caihao_test.t: Renaming temporary part tmp_insert_0_1_1_0 to 0_1_1_0.2020.09.15 15:09:57.614110 [ 26340 ] {} <Information> MaterializeMySQLSyncThread(caihao_test): Materialize MySQL step 1: dump t, 0.00 rows, 0.00 B in 0.081625235 sec., 0.00 rows/sec., 0.00 B/sec.2020.09.15 15:09:57.614171 [ 26340 ] {} <Information> MaterializeMySQLSyncThread: MySQL dump database position:=== Binlog Position ===Binlog: binlog.000001Position: 32936647GTIDSets: 431b0ec6-3561-11ea-a9a1-00163e0ec877:1-1001152020.09.15 15:09:57.690952 [ 26340 ] {} <Debug> MaterializeMySQLSyncThread: Skip MySQL event:=== RotateEvent ===Timestamp: 0Event Type: 4Server ID: 49Event Size: 44Log Pos: 0Flags: 32Position: 4Next Binlog: binlog.0000012020.09.15 15:09:57.690980 [ 26340 ] {} <Debug> MaterializeMySQLSyncThread: Skip MySQL event:=== FormatDescriptionEvent ===Timestamp: 1599787087Event Type: 15Server ID: 49Event Size: 119Log Pos: 123Flags: 0Binlog Version: 4Server Version:Create Timestamp: 0Event Header Len: 192020.09.15 15:09:57.691013 [ 26340 ] {} <Debug> MaterializeMySQLSyncThread: Skip MySQL event:=== PreviousGTIDsEvent ===Timestamp: 1599787087Event Type: 35Server ID: 49Event Size: 31Log Pos: 154Flags: 128[DryRun Event]

阅读到此的你,辛苦了

#历史文章摘要

GitHub都在用的高可用工具Orch:

Percona全力打造的监控平台PMM: