Hadoop

com.hadoop.compression.lzo.LzoCodec not found

[root@jwldata01 hadoop]# hadoop fs -text /data/bank.db/account/ds=2020-09-21/000000_0.lzo21/07/05 16:23:38 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable-text: Compression codec com.hadoop.compression.lzo.LzoCodec not found.Usage: hadoop fs [generic options] -text [-ignoreCrc] <src> ...复制

报Compression codec com.hadoop.compression.lzo.LzoCodec not found,在hadoop-env.sh的HADOOP_CLASSPATH追加$HADOOP_HOME/lib/native/lzo/hadoop-lzo.jar

no gplcompression in java.library.path

[root@jwldata01 hadoop]# hadoop fs -text /data/bank.db/account/ds=2020-09-21/000000_0.lzo21/07/05 16:24:56 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable21/07/05 16:24:57 ERROR lzo.GPLNativeCodeLoader: Could not load native gpl libraryjava.lang.UnsatisfiedLinkError: no gplcompression in java.library.pathat java.lang.ClassLoader.loadLibrary(ClassLoader.java:1867)at java.lang.Runtime.loadLibrary0(Runtime.java:870)at java.lang.System.loadLibrary(System.java:1122)at com.hadoop.compression.lzo.GPLNativeCodeLoader.<clinit>(GPLNativeCodeLoader.java:32)at com.hadoop.compression.lzo.LzoCodec.<clinit>(LzoCodec.java:71)at java.lang.Class.forName0(Native Method)at java.lang.Class.forName(Class.java:348)at org.apache.hadoop.conf.Configuration.getClassByNameOrNull(Configuration.java:2288)at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2253)at org.apache.hadoop.io.compress.CompressionCodecFactory.getCodecClasses(CompressionCodecFactory.java:128)at org.apache.hadoop.io.compress.CompressionCodecFactory.<init>(CompressionCodecFactory.java:175)at org.apache.hadoop.fs.shell.Display$Text.getInputStream(Display.java:158)at org.apache.hadoop.fs.shell.Display$Cat.processPath(Display.java:101)at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)at org.apache.hadoop.fs.shell.FsCommand.processRawArguments(FsCommand.java:118)at org.apache.hadoop.fs.shell.Command.run(Command.java:165)at org.apache.hadoop.fs.FsShell.run(FsShell.java:315)at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:84)at org.apache.hadoop.fs.FsShell.main(FsShell.java:372)21/07/05 16:24:57 ERROR lzo.LzoCodec: Cannot load native-lzo without native-hadoop-text: Fatal internal errorjava.lang.RuntimeException: native-lzo library not availableat com.hadoop.compression.lzo.LzopCodec.createDecompressor(LzopCodec.java:104)at com.hadoop.compression.lzo.LzopCodec.createInputStream(LzopCodec.java:89)at org.apache.hadoop.fs.shell.Display$Text.getInputStream(Display.java:162)at org.apache.hadoop.fs.shell.Display$Cat.processPath(Display.java:101)at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)at org.apache.hadoop.fs.shell.FsCommand.processRawArguments(FsCommand.java:118)at org.apache.hadoop.fs.shell.Command.run(Command.java:165)at org.apache.hadoop.fs.FsShell.run(FsShell.java:315)at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:84)at org.apache.hadoop.fs.FsShell.main(FsShell.java:372)复制

报java.lang.UnsatisfiedLinkError: no gplcompression in java.library.path,在hadoop-env.sh的JAVA_LIBRARY_PATH追加$HADOOP_HOME/lib/native/lzo/native

Unable to load native-hadoop library

[root@jwldata02 ~]# hadoop fs -ls /21/07/06 13:55:21 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicableFound 6 itemsdrwxrwx--x+ - hive hive 0 2021-06-17 16:26 /datadrwx------ - hbase hbase 0 2021-07-01 18:24 /hbasedrwxr-xr-x - hive hive 0 2021-06-29 08:26 /resourcedrwxr-xr-x - hdfs supergroup 0 2021-06-18 11:00 /systemdrwxrwxrwt - hdfs supergroup 0 2021-06-11 11:20 /tmpdrwxr-xr-x - hdfs supergroup 0 2021-07-01 17:57 /user复制

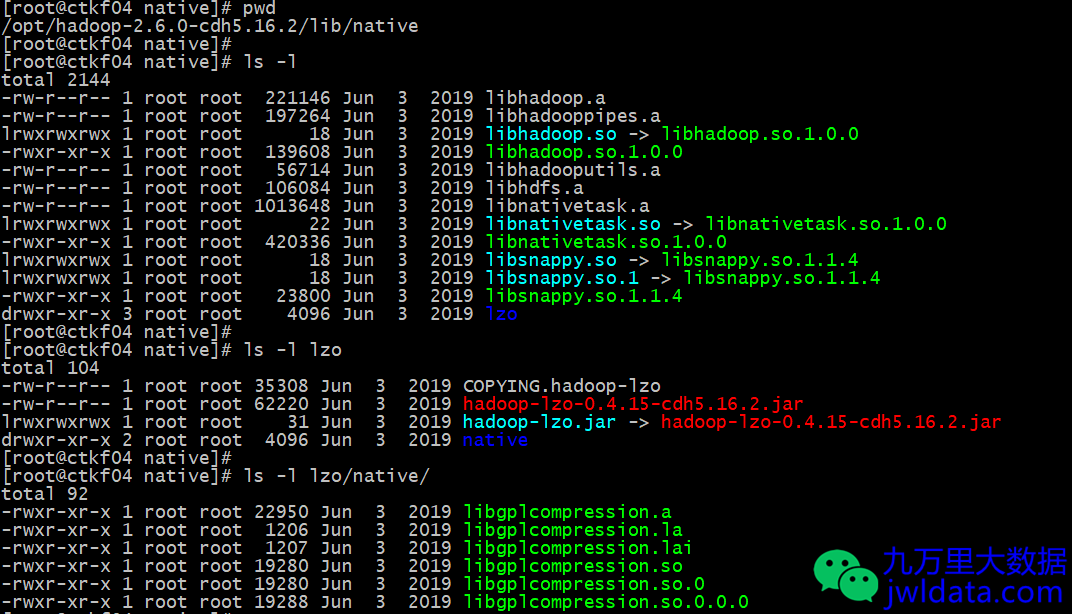

报Unable to load native-hadoop library for your platform... using builtin-java classes where applicable,在/opt/hadoop-2.6.0-cdh5.16.2/lib/native下查看是否缺少依赖文件。

scala> spark.sql("select * from bank.account").show()2021-07-07 08:51:59 WARN LdapGroupsMapping:204 - Exception trying to get groups for user hive: [LDAP: error code 53 - unauthenticated bind (DN with no password) disallowed]2021-07-07 08:51:59 WARN UserGroupInformation:1556 - No groups available for user hive2021-07-07 08:51:59 WARN UserGroupInformation:1556 - No groups available for user hive2021-07-07 08:52:00 WARN TaskSetManager:66 - Lost task 0.0 in stage 1.0 (TID 2, jwldata013, executor 2): java.lang.RuntimeException: native-lzo library not availableat com.hadoop.compression.lzo.LzopCodec.createDecompressor(LzopCodec.java:104)at com.hadoop.compression.lzo.LzopCodec.createInputStream(LzopCodec.java:89)at com.hadoop.mapred.DeprecatedLzoLineRecordReader.<init>(DeprecatedLzoLineRecordReader.java:59)at com.hadoop.mapred.DeprecatedLzoTextInputFormat.getRecordReader(DeprecatedLzoTextInputFormat.java:158)at org.apache.spark.rdd.HadoopRDD$$anon$1.liftedTree1$1(HadoopRDD.scala:267)复制

将/opt/cloudera/parcels/GPLEXTRAS/lib/hadoop/lib和/opt/cloudera/parcels/CDH/lib/hadoop/lib/native目录下的放到/opt/hadoop-2.6.0-cdh5.16.2/lib/native下。

cd /opt/hadoop-2.6.0-cdh5.16.2/etc/hadoopvi hadoop-env.shexport HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$HADOOP_HOME/lib/native/lzo/hadoop-lzo.jarexport JAVA_LIBRARY_PATH=$JAVA_LIBRARY_PATH:$HADOOP_HOME/lib/native/lzo/nativeexport HADOOP_OPTS="-Djava.net.preferIPv4Stack=true $HADOOP_OPTS -Djava.library.path=$HADOOP_HOME/lib/native"复制

native和lzo依赖我打包了一份,放在文末,有需要的可以下载。

Spark

com.hadoop.compression.lzo.LzoCodec not found

Caused by: java.lang.IllegalArgumentException: Compression codec com.hadoop.compression.lzo.LzoCodec not found.at org.apache.hadoop.io.compress.CompressionCodecFactory.getCodecClasses(CompressionCodecFactory.java:135)at org.apache.hadoop.io.compress.CompressionCodecFactory.<init>(CompressionCodecFactory.java:175)at org.apache.hadoop.mapred.TextInputFormat.configure(TextInputFormat.java:45)... 66 moreCaused by: java.lang.ClassNotFoundException: Class com.hadoop.compression.lzo.LzoCodec not foundat org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:1980)at org.apache.hadoop.io.compress.CompressionCodecFactory.getCodecClasses(CompressionCodecFactory.java:128)... 68 more复制

报Compression codec com.hadoop.compression.lzo.LzoCodec not found,在spark-env.sh的SPARK_DIST_CLASSPATH追加$HADOOP_HOME/lib/native/lzo/hadoop-lzo.jar

no gplcompression in java.library.path

scala> content.count()21/07/05 11:13:48 ERROR lzo.GPLNativeCodeLoader: Could not load native gpl libraryjava.lang.UnsatisfiedLinkError: no gplcompression in java.library.pathat java.lang.ClassLoader.loadLibrary(ClassLoader.java:1867)at java.lang.Runtime.loadLibrary0(Runtime.java:870)at java.lang.System.loadLibrary(System.java:1122)at com.hadoop.compression.lzo.GPLNativeCodeLoader.<clinit>(GPLNativeCodeLoader.java:32)at com.hadoop.compression.lzo.LzoCodec.<clinit>(LzoCodec.java:71)at java.lang.Class.forName0(Native Method)at java.lang.Class.forName(Class.java:348)at org.apache.hadoop.conf.Configuration.getClassByNameOrNull(Configuration.java:2013)......(此处省略)......at java.lang.reflect.Method.invoke(Method.java:498)at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:731)at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:181)at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:206)at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:121)at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)21/07/05 11:13:48 ERROR lzo.LzoCodec: Cannot load native-lzo without native-hadoop复制

报Could not load native gpl library,在spark-env.sh的LD_LIBRARY_PATH追加$HADOOP_HOME/lib/native/lzo/native

Unable to load native-hadoop library

[root@ctkf04 bin]# ./spark-shellSLF4J: Class path contains multiple SLF4J bindings.SLF4J: Found binding in [jar:file:/opt/spark-2.4.0-bin-hadoop2.6/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]SLF4J: Found binding in [jar:file:/opt/hadoop-2.6.0-cdh5.16.2/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]Setting default log level to "WARN".To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).2021-07-06 15:07:55 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable2021-07-06 15:07:56 WARN Client:66 - Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.复制

报Unable to load native-hadoop library for your platform... using builtin-java classes where applicable,在spark-env.sh的LD_LIBRARY_PATH添加$HADOOP_HOME/lib/native

cd /opt/cloudera/parcels/GPLEXTRAS/lib/hadooptar -zcf lzo-lib.tar.gz lib将lzo-lib.tar.gz解压后放到/opt/hadoop-2.6.0-cdh5.16.2/lib/native下,并将lib目录重命名为lzo复制

cd /opt/spark-1.6.3-bin-hadoop2.6/confvi spark-env.shexport JAVA_HOME=/usr/java/jdk1.8.0_181-amd64export HADOOP_HOME=/opt/hadoop-2.6.0-cdh5.16.2export HADOOP_CONF_DIR="$HADOOP_HOME/etc/hadoop:/opt/hive-1.1.0-cdh5.16.2/conf"export SPARK_DIST_CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$HADOOP_HOME/lib/native/lzo/hadoop-lzo.jarexport LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$HADOOP_HOME/lib/native/lzo/native:$HADOOP_HOME/lib/native复制

native-lzo library not available

scala> spark.sql("select * from bank.account").show()2021-07-07 09:47:13 WARN LdapGroupsMapping:204 - Exception trying to get groups for user hive: [LDAP: error code 53 - unauthenticated bind (DN with no password) disallowed]2021-07-07 09:47:13 WARN UserGroupInformation:1556 - No groups available for user hive2021-07-07 09:47:13 WARN UserGroupInformation:1556 - No groups available for user hive[Stage 0:> (0 + 1) / 1]2021-07-07 09:47:14 WARN TaskSetManager:66 - Lost task 0.0 in stage 0.0 (TID 0, jwldata012, executor 2): java.lang.RuntimeException: native-lzo library not availableat com.hadoop.compression.lzo.LzopCodec.createDecompressor(LzopCodec.java:104)at com.hadoop.compression.lzo.LzopCodec.createInputStream(LzopCodec.java:89)at com.hadoop.mapred.DeprecatedLzoLineRecordReader.<init>(DeprecatedLzoLineRecordReader.java:59)at com.hadoop.mapred.DeprecatedLzoTextInputFormat.getRecordReader(DeprecatedLzoTextInputFormat.java:158)at org.apache.spark.rdd.HadoopRDD$$anon$1.liftedTree1$1(HadoopRDD.scala:267)......(此处省略)......2021-07-07 09:47:16 ERROR TaskSetManager:70 - Task 0 in stage 0.0 failed 4 times; aborting joborg.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 4 times, most recent failure: Lost task 0.3 in stage 0.0 (TID 3, jwldata012, executor 1): java.lang.RuntimeException: native-lzo library not availableat com.hadoop.compression.lzo.LzopCodec.createDecompressor(LzopCodec.java:104)at com.hadoop.compression.lzo.LzopCodec.createInputStream(LzopCodec.java:89)at com.hadoop.mapred.DeprecatedLzoLineRecordReader.<init>(DeprecatedLzoLineRecordReader.java:59)at com.hadoop.mapred.DeprecatedLzoTextInputFormat.getRecordReader(DeprecatedLzoTextInputFormat.java:158)at org.apache.spark.rdd.HadoopRDD$$anon$1.liftedTree1$1(HadoopRDD.scala:267)at org.apache.spark.rdd.HadoopRDD$$anon$1.<init>(HadoopRDD.scala:266)复制

报executor上的native-lzo library not available,在spark-defaults.conf上追加spark.executor.extraLibraryPath配置,且需要是服务端的路径。

spark-defaults.conf

spark.driver.extraLibraryPath=/opt/cloudera/parcels/CDH/lib/hadoop/lib/native:/opt/cloudera/parcels/GPLEXTRAS/lib/hadoop/lib/nativespark.executor.extraLibraryPath=/opt/cloudera/parcels/CDH/lib/hadoop/lib/native:/opt/cloudera/parcels/GPLEXTRAS/lib/hadoop/lib/nativespark.yarn.am.extraLibraryPath=/opt/cloudera/parcels/CDH/lib/hadoop/lib/native:/opt/cloudera/parcels/GPLEXTRAS/lib/hadoop/lib/native复制

配置总结一下

Hadoop

hadoop-env.sh

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$HADOOP_HOME/lib/native/lzo/hadoop-lzo.jarexport JAVA_LIBRARY_PATH=$JAVA_LIBRARY_PATH:$HADOOP_HOME/lib/native/lzo/nativeexport HADOOP_OPTS="-Djava.net.preferIPv4Stack=true $HADOOP_OPTS -Djava.library.path=$HADOOP_HOME/lib/native"复制

Spark

spark-defaults.conf

spark.master=yarnspark.submit.deployMode=clientspark.driver.host=192.168.X.Xspark.eventLog.enabled=truespark.eventLog.dir=hdfs://nameservice1/user/spark/applicationHistoryspark.yarn.historyServer.address=http://jwldata003:18088spark.port.maxRetries=100spark.driver.extraJavaOptions -Dderby.system.home=/tmp/derbyspark.driver.extraLibraryPath=/opt/cloudera/parcels/CDH/lib/hadoop/lib/native:/opt/cloudera/parcels/GPLEXTRAS/lib/hadoop/lib/nativespark.executor.extraLibraryPath=/opt/cloudera/parcels/CDH/lib/hadoop/lib/native:/opt/cloudera/parcels/GPLEXTRAS/lib/hadoop/lib/nativespark.yarn.am.extraLibraryPath=/opt/cloudera/parcels/CDH/lib/hadoop/lib/native:/opt/cloudera/parcels/GPLEXTRAS/lib/hadoop/lib/native复制

spark-env.sh

#!/usr/bin/env bashexport JAVA_HOME=/usr/java/jdk1.8.0_181-amd64export HADOOP_HOME=/opt/hadoop-2.6.0-cdh5.16.2export HADOOP_CONF_DIR="$HADOOP_HOME/etc/hadoop:/opt/hive-1.1.0-cdh5.16.2/conf"export SPARK_DIST_CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$HADOOP_HOME/lib/native/lzo/hadoop-lzo.jarexport LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$HADOOP_HOME/lib/native/lzo/native:$HADOOP_HOME/lib/native复制

hadoop native和lzo依赖下载地址

链接:https://pan.baidu.com/s/1_pTcbjh_v46lpmy67B8P0w

提取码:detv

欢迎关注我的微信公众号“九万里大数据”,原创技术文章第一时间推送。欢迎访问原创技术博客网站 jwldata.com[1],排版更清晰,阅读更爽快。

引用链接

[1]

jwldata.com: https://www.jwldata.com