
arXiv:1409.3215v3 [cs.CL] 14 Dec 2014

Sequence to Sequence Learning with Neural Networks

Ilya Sutskever

Google

ilyasu@google.com

Oriol Vinyals

Google

vinyals@google.com

Quoc V. Le

Google

qvl@google.com

Abstract

Deep Neural Networks (DNNs) are powerful models that have achieved excellent performance on difficult learning tasks. Although DNNs work well whenever large labeled training sets are available, they cannot be used to map sequences to sequences. In this paper, we present a general end-to-end approach to sequence learning that makes minimal assumptions on the sequence structure. Our method uses a multilayered Long Short-Term Memory (LSTM) to map the input sequence to a vector of a fixed dimensionality, and then another deep LSTM to decode the target sequence from the vector. Our main result is that on an English to French translation task from the WMT’14 dataset, the translations produced by the LSTM achieve a BLEU score of 34.8 on the entire test set, where the LSTM’s BLEU score was penalized on out-of-vocabulary words. Additionally, the LSTM did not have difficulty on long sentences. For comparison, a phrase-based SMT system achieves a BLEU score of 33.3 on the same dataset. When we used the LSTM to rerank the 1000 hypotheses produced by the aforementioned SMT system, its BLEU score increases to 36.5, which is close to the previous best result on this task. The LSTM also learned sensible phrase and sentence representations that are sensitive to word order and are relatively invariant to the active and the passive voice. Finally, we found that reversing the order of the words in all source sentences (but not target sentences) improved the LSTM’s performance markedly, because doing so introduced many short term dependencies between the source and the target sentence which made the optimization problem easier.

1 Introduction

Deep Neural Networks (DNNs) are extremely powerful machine learning models that achieve excellent performance on difficult problems such as speech recognition [13, 7] and visual object recognition [19, 6, 21, 20]. DNNs are powerful because they can perform arbitrary parallel computation for a modest number of steps. A surprising example of the power of DNNs is their ability to sort N N-bit numbers using only 2 hidden layers of quadratic size [27]. So, while neural networks are related to conventional statistical models, they learn an intricate computation. Furthermore, large DNNs can be trained with supervised backpropagation whenever the labeled training set has enough information to specify the network’s parameters. Thus, if there exists a parameter setting of a large DNN that achieves good results (for example, because humans can solve the task very rapidly), supervised backpropagation will find these parameters and solve the problem.

Despite their flexibility and power, DNNs can only be applied to problems whose inputs and targets can be sensibly encoded with vectors of fixed dimensionality. This is a significant limitation, since many important problems are best expressed with sequences whose lengths are not known a priori. For example, speech recognition and machine translation are sequential problems. Likewise, question answering can also be seen as mapping a sequence of words representing the question to a sequence of words representing the answer. It is therefore clear that a domain-independent method that learns to map sequences to sequences would be useful.

Sequences pose a challenge for DNNs because they require that the dimensionality of the inputs and

outputs is known and fixed. In this paper, we show that a straightforward application of the Long

Short-Term Memory (LSTM) architecture [16] can solve general sequence to sequence problems.

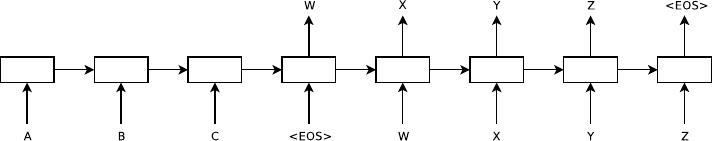

The idea is to use one LSTM to read the input sequence, one timestep at a time, to obtain large fixed-

dimensional vector representation, and then to use another LSTM to extract the output sequence

from that vector (fig. 1). The second LSTM is essentially a recurrent neural network language model

[28, 23, 30] except that it is conditioned on the input sequence. The LSTM’s ability to successfully

learn on data with long range temporal dependencies makes it a natural choice for this application

due to the considerable time lag between the inputs and their corresponding outputs (fig. 1).

There have been a number of related attempts to address the general sequence to sequence learning

problem with neural networks. Our approach is closely related to Kalchbrenner and Blunsom [18]

who were the first to map the entire input sentence to vector, and is related to Cho et al. [5] although

the latter was used only for rescoring hypotheses produced by a phrase-based system. Graves [10]

introduced a novel differentiable attention mechanism that allows neural networks to focus on dif-

ferent parts of their input, and an elegant variant of this idea was successfully applied to machine

translation by Bahdanau et al. [2]. The Connectionist Sequence Classification is another popular

technique for mapping sequences to sequences with neural networks, but it assumes a monotonic

alignment between the inputs and the outputs [11].

Figure 1: Our model reads an input sentence “ABC” and produces “WXYZ” as the output sentence. The

model stops making predictions after outputting the end-of-sentence token. Note that the LSTM reads the

input sentence in reverse, because doing so introduces many short term dependencies in the data that make the

optimization problem much easier.

The main result of this work is the following. On the WMT’14 English to French translation task, we obtained a BLEU score of 34.81 by directly extracting translations from an ensemble of 5 deep LSTMs (with 384M parameters and 8,000 dimensional state each) using a simple left-to-right beam-search decoder. This is by far the best result achieved by direct translation with large neural networks. For comparison, the BLEU score of an SMT baseline on this dataset is 33.30 [29]. The 34.81 BLEU score was achieved by an LSTM with a vocabulary of 80k words, so the score was penalized whenever the reference translation contained a word not covered by these 80k. This result shows that a relatively unoptimized small-vocabulary neural network architecture which has much room for improvement outperforms a phrase-based SMT system.

Finally, we used the LSTM to rescore the publicly available 1000-best lists of the SMT baseline on the same task [29]. By doing so, we obtained a BLEU score of 36.5, which improves the baseline by 3.2 BLEU points and is close to the previous best published result on this task (which is 37.0 [9]).

Surprisingly, the LSTM did not suffer on very long sentences, despite the recent experience of other researchers with related architectures [26]. We were able to do well on long sentences because we reversed the order of words in the source sentence but not the target sentences in the training and test set. By doing so, we introduced many short term dependencies that made the optimization problem much simpler (see sec. 2 and 3.3). As a result, SGD could learn LSTMs that had no trouble with long sentences. The simple trick of reversing the words in the source sentence is one of the key technical contributions of this work.

A useful property of the LSTM is that it learns to map an input sentence of variable length into a fixed-dimensional vector representation. Given that translations tend to be paraphrases of the source sentences, the translation objective encourages the LSTM to find sentence representations that capture their meaning, since sentences with similar meanings will be close to each other while sentences with different meanings will be far apart. A qualitative evaluation supports this claim, showing that our model is aware of word order and is fairly invariant to the active and passive voice.

2 The model

The Recurrent Neural Network (RNN) [31, 28] is a natural generalization of feedforward neural networks to sequences. Given a sequence of inputs $(x_1, \ldots, x_T)$, a standard RNN computes a sequence of outputs $(y_1, \ldots, y_T)$ by iterating the following equation:

$$h_t = \mathrm{sigm}\left(W^{hx} x_t + W^{hh} h_{t-1}\right)$$

$$y_t = W^{yh} h_t$$
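The recurrence above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the initial state $h_0$ is taken to be the zero vector, the weights are random, and the helper names (`sigm`, `matvec`, `rnn_forward`, `random_matrix`) are hypothetical rather than taken from the paper.

```python
import math
import random


def sigm(z):
    """Logistic sigmoid applied to a scalar."""
    return 1.0 / (1.0 + math.exp(-z))


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rnn_forward(xs, W_hx, W_hh, W_yh):
    """Iterate h_t = sigm(W_hx x_t + W_hh h_{t-1}) and y_t = W_yh h_t."""
    h = [0.0] * len(W_hh)  # assumption: h_0 is the zero vector
    ys = []
    for x in xs:
        pre = [a + b for a, b in zip(matvec(W_hx, x), matvec(W_hh, h))]
        h = [sigm(z) for z in pre]
        ys.append(matvec(W_yh, h))
    return ys, h


def random_matrix(rows, cols, rng):
    """Small random weights, for illustration only."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
W_hx = random_matrix(3, 2, rng)  # hidden size 3, input size 2
W_hh = random_matrix(3, 3, rng)
W_yh = random_matrix(2, 3, rng)  # output size 2
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ys, h_T = rnn_forward(xs, W_hx, W_hh, W_yh)
print(len(ys), len(h_T))
```

Note that the loop emits exactly one output per input, which is why this formulation presupposes a known alignment between inputs and outputs.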

The RNN can easily map sequences to sequences whenever the alignment between the inputs and the outputs is known ahead of time. However, it is not clear how to apply an RNN to problems whose input and output sequences have different lengths with complicated and non-monotonic relationships.

The simplest strategy for general sequence learning is to map the input sequence to a fixed-sized vector using one RNN, and then to map the vector to the target sequence with another RNN (this approach has also been taken by Cho et al. [5]). While it could work in principle since the RNN is provided with all the relevant information, it would be difficult to train the RNNs due to the resulting long term dependencies (figure 1) [14, 4, 16, 15]. However, the Long Short-Term Memory (LSTM) [16] is known to learn problems with long range temporal dependencies, so an LSTM may succeed in this setting.

The goal of the LSTM is to estimate the conditional probability $p(y_1, \ldots, y_{T'} \mid x_1, \ldots, x_T)$ where $(x_1, \ldots, x_T)$ is an input sequence and $y_1, \ldots, y_{T'}$ is its corresponding output sequence whose length $T'$ may differ from $T$. The LSTM computes this conditional probability by first obtaining the fixed-dimensional representation $v$ of the input sequence $(x_1, \ldots, x_T)$ given by the last hidden state of the LSTM, and then computing the probability of $y_1, \ldots, y_{T'}$ with a standard LSTM-LM formulation whose initial hidden state is set to the representation $v$ of $x_1, \ldots, x_T$:

$$p(y_1, \ldots, y_{T'} \mid x_1, \ldots, x_T) = \prod_{t=1}^{T'} p(y_t \mid v, y_1, \ldots, y_{t-1}) \tag{1}$$

In this equation, each $p(y_t \mid v, y_1, \ldots, y_{t-1})$ distribution is represented with a softmax over all the words in the vocabulary. We use the LSTM formulation from Graves [10]. Note that we require that each sentence ends with a special end-of-sentence symbol “<EOS>”, which enables the model to define a distribution over sequences of all possible lengths. The overall scheme is outlined in figure 1, where the shown LSTM computes the representation of “A”, “B”, “C”, “<EOS>” and then uses this representation to compute the probability of “W”, “X”, “Y”, “Z”, “<EOS>”.
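As a rough illustration of how Eq. (1) is evaluated, the sketch below sums per-step log-softmax terms over a target sequence ending in “<EOS>”. The decoder step here (`toy_step`) is a hypothetical deterministic stand-in and not an LSTM, and the vector `v` is a made-up value standing in for the encoder's final hidden state; only the chain-rule bookkeeping is the point.

```python
import math

VOCAB = ["W", "X", "Y", "Z", "<EOS>"]


def log_softmax(logits):
    """Numerically stable log-softmax over a list of scores."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(s - m) for s in logits))
    return [s - log_z for s in logits]


def toy_step(state, prev_token):
    """Hypothetical stand-in for one decoder step: returns (new_state, logits).

    A real model would run an LSTM cell here; this toy only mixes the state
    with the index of the previous token so the example is deterministic.
    """
    prev = -1 if prev_token is None else VOCAB.index(prev_token)
    new_state = [0.5 * s + 0.1 * (prev + 1) for s in state]
    logits = [sum(new_state) * (i + 1) / len(VOCAB) for i in range(len(VOCAB))]
    return new_state, logits


def sequence_log_prob(v, target, step=toy_step):
    """log p(y_1..y_T' | v) = sum over t of log p(y_t | v, y_1..y_{t-1})."""
    assert target[-1] == "<EOS>", "every target must end with <EOS>"
    state, prev, total = list(v), None, 0.0
    for token in target:
        state, logits = step(state, prev)
        total += log_softmax(logits)[VOCAB.index(token)]
        prev = token
    return total


v = [0.2, -0.1, 0.4]  # assumed value standing in for the encoder's final state
print(sequence_log_prob(v, ["W", "X", "Y", "Z", "<EOS>"]))
```

Working in log space turns the product in Eq. (1) into a sum, which avoids numerical underflow on long sequences.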

Our actual models differ from the above description in three important ways. First, we used two different LSTMs: one for the input sequence and another for the output sequence, because doing so increases the number of model parameters at negligible computational cost and makes it natural to train the LSTM on multiple language pairs simultaneously [18]. Second, we found that deep LSTMs significantly outperformed shallow LSTMs, so we chose an LSTM with four layers. Third, we found it extremely valuable to reverse the order of the words of the input sentence. So for example, instead of mapping the sentence a, b, c to the sentence α, β, γ, the LSTM is asked to map c, b, a to α, β, γ, where α, β, γ is the translation of a, b, c. This way, a is in close proximity to α, b is fairly close to β, and so on, a fact that makes it easy for SGD to “establish communication” between the input and the output. We found this simple data transformation to greatly improve the performance of the LSTM.
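The source-reversal transformation is easy to express as a preprocessing step. The sketch below is a minimal illustration with hypothetical helper names (`reverse_source`, `alignment_lags`). The lag computation assumes equal-length sentences whose i-th source word aligns with the i-th target word, and it ignores the end-of-sentence token, which is a simplification.

```python
def reverse_source(pairs):
    """Reverse the token order of each source sentence; leave targets untouched."""
    return [(list(reversed(src)), list(tgt)) for src, tgt in pairs]


def alignment_lags(n, reversed_source):
    """Distance in timesteps from the i-th source word to the i-th target word.

    Assumes the source (length n) is fed first and the target (length n)
    follows immediately, with a one-to-one monotone alignment.
    """
    lags = []
    for i in range(n):
        src_pos = (n - 1 - i) if reversed_source else i
        tgt_pos = n + i
        lags.append(tgt_pos - src_pos)
    return lags


pairs = [(["a", "b", "c"], ["α", "β", "γ"])]
print(reverse_source(pairs))                      # [(['c', 'b', 'a'], ['α', 'β', 'γ'])]
print(alignment_lags(3, reversed_source=False))   # [3, 3, 3]
print(alignment_lags(3, reversed_source=True))    # [1, 3, 5]
```

Under these assumptions the average lag is the same in both cases, but after reversal the first words of the source sit right next to the first words of the target.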

3 Experiments

We applied our method to the WMT’14 English to French MT task in two ways. We used it to directly translate the input sentence without using a reference SMT system and we used it to rescore the n-best lists of an SMT baseline. We report the accuracy of these translation methods, present sample translations, and visualize the resulting sentence representation.
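To make the second use case concrete, here is a minimal sketch of rescoring an n-best list. It assumes each hypothesis comes with a baseline score and that the two scores are combined by a simple weighted average; the function name `rescore_nbest`, the weight of 0.5, and all scores below are hypothetical and chosen only for illustration.

```python
def rescore_nbest(hypotheses, model_log_prob, weight=0.5):
    """Re-rank an n-best list by mixing the baseline score with a model score.

    hypotheses: list of (translation, baseline_score) pairs.
    model_log_prob: callable mapping a translation to a log-probability.
    weight: interpolation weight for the model score (an assumed value).
    """
    scored = [
        (text, (1.0 - weight) * base + weight * model_log_prob(text))
        for text, base in hypotheses
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical scores, for illustration only.
nbest = [("le chat noir", -4.0), ("le noir chat", -3.5), ("chat le noir", -6.0)]
toy_model_scores = {"le chat noir": -2.0, "le noir chat": -5.0, "chat le noir": -7.0}
ranked = rescore_nbest(nbest, toy_model_scores.__getitem__)
print(ranked[0][0])  # le chat noir
```

In this toy example the baseline alone would prefer “le noir chat”, while the combined score promotes “le chat noir”.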
