
IEEE/ACM TRANSACTIONS ON NETWORKING 1

Fluid-Shuttle: Efficient Cloud Data Transmission Based on Serverless Computing Compression

Rong Gu, Member, IEEE, Shulin Wang, Haipeng Dai, Senior Member, IEEE, Xiaofei Chen, Zhaokang Wang, Wenjie Bao, Jiaqi Zheng, Senior Member, IEEE, Yaofeng Tu, Yihua Huang, Lianyong Qi, Senior Member, IEEE, Xiaolong Xu, Senior Member, IEEE, Wanchun Dou, and Guihai Chen, Fellow, IEEE

Abstract—Nowadays, there is a large demand for cross-region data transmission on the cloud. Using serverless computing to compress data before transmission is a promising way to reduce the total data size. However, it is challenging to estimate the data transmission time and monetary cost with serverless compression. In addition, minimizing the data transmission cost is non-trivial due to the enormous parameter space. This paper focuses on this problem and makes the following contributions: 1) We propose empirical data transmission time and monetary cost models based on serverless compression. The models can also predict compression information, e.g., compression ratio and speed, using chunk sampling and machine learning techniques. 2) For single-task cloud data transmission, we propose two efficient parameter search methods based on Sequential Quadratic Programming (SQP) and Eliminate then Divide and Conquer (EDC), both with proven error upper bounds. Besides, we propose a parameter fine-tuning strategy to deal with transmission bandwidth variance. 3) Furthermore, for multi-task scenarios, we propose a parameter search method based on dynamic programming and numerical computation. We have implemented the system, called Fluid-Shuttle, which includes straggler optimization, cache optimization, and an autoscaling decompression mechanism. Finally, we evaluate the performance of Fluid-Shuttle with various workloads and applications on the real-world AWS serverless computing platform. Experimental results show that the proposed approach improves parameter search efficiency by over 3× compared with the state-of-the-art methods and achieves better parameter quality. In addition, our approach achieves higher time efficiency and lower monetary cost than competing cloud data transmission approaches.

Manuscript received 25 May 2023; revised 7 February 2024; accepted 18 April 2024; approved by IEEE/ACM TRANSACTIONS ON NETWORKING Editor R. Pedarsani. This work was supported in part by the National Natural Science Foundation of China under Grant 62072230, Grant 62272223, and Grant U22A2031; in part by Jiangsu Province Science and Technology Key Program under Grant BE2021729; and in part by the Collaborative Innovation Center of Novel Software Technology and Industrialization. (Corresponding authors: Rong Gu; Haipeng Dai; Jiaqi Zheng.)

Rong Gu, Shulin Wang, Haipeng Dai, Xiaofei Chen, Wenjie Bao, Jiaqi Zheng, Yihua Huang, Wanchun Dou, and Guihai Chen are with the State Key Laboratory for Novel Software Technology, Nanjing University, Nanjing, Jiangsu 210023, China (e-mail: gurong@nju.edu.cn; wangshulin@smail.nju.edu.cn; haipengdai@nju.edu.cn; xfchen@smail.nju.edu.cn; bwj_678@qq.com; jzheng@nju.edu.cn; yhuang@nju.edu.cn; douwc@nju.edu.cn; gchen@nju.edu.cn).

Zhaokang Wang is with the College of Computer Science and Technology, Nanjing University of Aeronautics and Astronautics, Nanjing 210016, China (e-mail: wangzhaokang@nuaa.edu.cn).

Yaofeng Tu is with ZTE Corporation, Shenzhen 518057, China (e-mail: tu.yaofeng@zte.com.cn).

Lianyong Qi is with the College of Computer Science and Technology, China University of Petroleum (East China), Dongying 257099, China (e-mail: lianyongqi@gmail.com).

Xiaolong Xu is with the School of Software, Nanjing University of Information Science and Technology, Nanjing 210044, China (e-mail: xlxu@nuist.edu.cn).

Digital Object Identifier 10.1109/TNET.2024.3402561

Index Terms—Data transmission, serverless compression, cloud function configuration.

I. INTRODUCTION

NOWADAYS, a large amount of data needs to be transferred across data centers or cloud regions [1]. For example, software/model distribution, database replication, search index synchronization, and other data backup operations require frequent data transmission on the cloud [2]. It has been reported that 70% of IT firms have massive data transmission among data centers, ranging from 330 TB to 3.3 PB per month, and the amount of data keeps growing rapidly [3]. Data transmission over long distances consumes massive bandwidth resources, which is costly on the cloud. Cloud service providers spend hundreds of millions of US dollars on data transmission every year [4]. Therefore, improving the time efficiency and reducing the monetary cost of cross-region data transmission on the cloud is vital. To save bandwidth cost and improve data transmission efficiency, data is usually compressed before transmission [5], [6]. However, data compression itself incurs extra computation cost. Thus, it is important to make a tradeoff between the compression computation cost and the saved bandwidth cost.
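The tradeoff above can be made concrete with a back-of-the-envelope calculation. The sketch below is not the paper's empirical cost model; it assumes a deliberately simplified model in which bandwidth cost is linear in the bytes sent and compression cost is linear in compute time. Every price, rate, and name in it (`Scenario`, `raw_cost`, `compressed_cost`, `compression_pays_off`) is a hypothetical placeholder.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    size_gb: float            # raw data size to transfer
    egress_usd_per_gb: float  # hypothetical cross-region transfer price
    ratio: float              # compression ratio (raw size / compressed size)
    compress_gb_per_s: float  # aggregate compression throughput
    compute_usd_per_s: float  # hypothetical price of the compute doing compression


def raw_cost(s: Scenario) -> float:
    """Ship the data uncompressed: pay for bandwidth only."""
    return s.size_gb * s.egress_usd_per_gb


def compressed_cost(s: Scenario) -> float:
    """Compress first: pay for compute time plus bandwidth for the smaller payload."""
    compute = (s.size_gb / s.compress_gb_per_s) * s.compute_usd_per_s
    bandwidth = (s.size_gb / s.ratio) * s.egress_usd_per_gb
    return compute + bandwidth


def compression_pays_off(s: Scenario) -> bool:
    """True when the saved bandwidth cost exceeds the extra computation cost."""
    return compressed_cost(s) < raw_cost(s)
```

For example, with 100 GB of data, a hypothetical $0.02/GB transfer price, a 4× compression ratio, 0.5 GB/s of compression throughput, and $0.001 per second of compute, compressing costs about $0.70 versus $2.00 uncompressed; dropping the throughput to 0.01 GB/s raises the compressed cost to about $10.50, so compression no longer pays off.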

In the traditional cloud environment, it is common to rent

virtual machines (VMs) for data compression [7]. However,

the VMs are heavy for data transmission tasks because they

usually take nearly 1 minute to start [8] and are charged

hourly. In recent years, serverless computing is emerging

as the next generation of cloud computing technology [9].

It provides computing resources by cloud functions (e.g., AWS

Lambda [10]) with strong elasticity and fine-grained billing.

We compared the data transmission time and monetary cost

of using cloud functions compression with virtual machine

compression to transfer a 1 GB Lineitem dataset [11] on

AWS.

1

Experimental results show that the end-to-end data

transmission time of serverless compression (including cold

start time) is 1/4 of that of virtual machine compression

(including boot-up time).

Nevertheless, achieving efficient data transmission with

serverless compression faces the challenge of choosing

1

We use a typical AWS EC2 c5a.xlarge instance with 4 vCPU and 8 GB

memory, and 4 cloud functions each has 1536 MB memory.

1558-2566 © 2024 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission.

See https://www.ieee.org/publications/rights/index.html for more information.

This article has been accepted for inclusion in a future issue of this journal. Content is final as presented, with the exception of pagination.

Authorized licensed use limited to: ZTE CORPORATION. Downloaded on November 26,2024 at 05:42:44 UTC from IEEE Xplore. Restrictions apply.

2 IEEE/ACM TRANSACTIONS ON NETWORKING

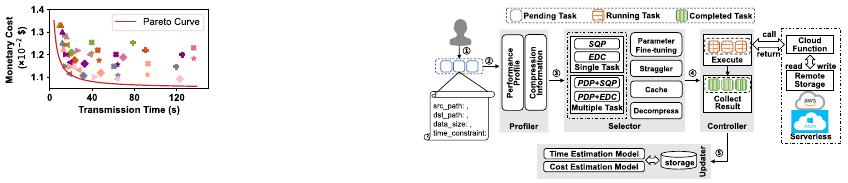

Fig. 1. Transmission time and monetary costs of transferring 1 GB

Lineitem dataset with various configurations. Colors represent cloud function

configurations, and shapes represent compression method types.

appropriate configurations, including the data compression

method type, parameters of serverless cloud functions, etc.

It needs proper configurations to balance the computation cost

and saved bandwidth cost [5], [6]. Fig. 1 shows the data

transmission time and monetary cost of transferring the 1 GB

Lineitem dataset using different AWS Lambda configurations

(the transmission process is shown in Fig. 3). The configu-

rations simultaneously impact the data transmission time and

monetary cost in a non-trivial fashion. For a given time con-

straint, there exist noticeable differences in data transmission

monetary costs among various configurations. We find that the

best configurations can reduce the data transmission monetary

cost by up to 75% compared with the worst ones. Therefore,

finding the optimal configuration is critical to reducing the

time and monetary cost of the data transmission on the cloud.
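The selection problem behind Fig. 1 can be illustrated in a few lines: given estimated (time, cost) points for candidate configurations, keep those that meet the time constraint and take the cheapest. The candidate table, its numbers, and the helper names below are hypothetical; in a system like Fluid-Shuttle such points would come from time and cost models rather than a hard-coded list, and exhaustive filtering like this is only practical when the candidate set is small.

```python
from typing import List, NamedTuple, Optional, Sequence


class Config(NamedTuple):
    method: str      # compression method label (hypothetical)
    memory_mb: int   # memory per cloud function
    functions: int   # number of concurrent cloud functions
    time_s: float    # estimated end-to-end transmission time
    cost_usd: float  # estimated monetary cost


def feasible(configs: Sequence[Config], deadline_s: float) -> List[Config]:
    """Configurations that satisfy the time constraint."""
    return [c for c in configs if c.time_s <= deadline_s]


def cheapest_within_deadline(configs: Sequence[Config], deadline_s: float) -> Optional[Config]:
    """Cheapest feasible configuration, or None if nothing meets the deadline."""
    return min(feasible(configs, deadline_s), key=lambda c: c.cost_usd, default=None)


def savings_vs_worst(configs: Sequence[Config], deadline_s: float) -> float:
    """Fraction of cost saved by the best feasible configuration versus the worst."""
    ok = feasible(configs, deadline_s)
    if not ok:
        return 0.0
    costs = [c.cost_usd for c in ok]
    return 1.0 - min(costs) / max(costs)


# Hypothetical candidates, for illustration only (not measurements from Fig. 1).
HYPOTHETICAL_CANDIDATES = [
    Config("gzip", 1536, 4, 40.0, 0.012),
    Config("gzip", 3008, 8, 22.0, 0.020),
    Config("zstd", 1536, 4, 30.0, 0.008),
    Config("lz4", 1024, 2, 55.0, 0.015),
    Config("none", 128, 1, 35.0, 0.024),
]
```

With a 45 s deadline, the `lz4` entry is excluded as too slow, and `cheapest_within_deadline(HYPOTHETICAL_CANDIDATES, 45.0)` returns the `zstd` entry.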

Existing works are limited in that they do not consider the total cost or all execution parameters on the cloud. They can be classified into two categories. One category minimizes the data transmission time and cost by optimizing system mechanisms (e.g., combining multiple storage tiers [12], [13] or the communication method [14]). However, these works do not consider the expensive bandwidth cost and are outside our problem scope. The other category searches for proper configurations [15], [16] to reduce both the time and monetary cost of data transmission using serverless computing, but these methods only consider the cloud function memory size.

There are three main challenges in achieving time-efficient and cost-saving cloud data transmission with serverless compression. First, it is non-trivial to model and estimate the time and monetary cost of cloud data transmission with serverless compression. Second, finding the optimal parameters of the cloud data transmission system is time-consuming due to the enormous parameter space and the joint optimization among parameters. In addition, because the objective function and constraints are not continuously differentiable, it is difficult to find algebraic solutions. Third, when multiple cloud data transmission tasks run concurrently, they compete for the shared concurrency of cloud functions, so the parameter search space grows exponentially with the number of tasks.
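The exponential blow-up, and how dynamic programming avoids it, can be seen on a simplified version of the problem: n tasks share a limit on concurrent cloud functions, task i receives c_i functions, and each task has its own cost curve. Enumerating every joint allocation visits up to C^n combinations, whereas a knapsack-style DP over "functions used so far" does O(n · limit · C) work, which is linear in the number of tasks. This is a generic resource-allocation DP under our own assumptions, not the paper's exact multi-task method (which also uses numerical analysis); the cost curves and function names are hypothetical.

```python
import itertools
import math
from typing import Callable, List, Sequence, Tuple

CostFn = Callable[[int], float]  # hypothetical per-task cost curve: functions -> cost


def brute_force(costs: Sequence[CostFn], max_per_task: int, limit: int) -> Tuple[float, Tuple[int, ...]]:
    """Try every joint allocation: max_per_task ** len(costs) combinations."""
    best: Tuple[float, Tuple[int, ...]] = (math.inf, ())
    for alloc in itertools.product(range(1, max_per_task + 1), repeat=len(costs)):
        if sum(alloc) > limit:
            continue  # violates the shared concurrency limit
        total = sum(f(c) for f, c in zip(costs, alloc))
        if total < best[0]:
            best = (total, alloc)
    return best


def dp_allocate(costs: Sequence[CostFn], max_per_task: int, limit: int) -> Tuple[float, Tuple[int, ...]]:
    """Knapsack-style DP over functions used so far: O(len(costs) * limit * max_per_task)."""
    best = [math.inf] * (limit + 1)  # best[j]: min cost of tasks seen so far using exactly j functions
    best[0] = 0.0
    choices: List[List[int]] = []    # choices[i][j]: functions given to task i in state j
    for f in costs:
        nxt = [math.inf] * (limit + 1)
        pick = [0] * (limit + 1)
        for used in range(limit + 1):
            if best[used] == math.inf:
                continue
            for c in range(1, max_per_task + 1):
                j = used + c
                if j > limit:
                    break
                cand = best[used] + f(c)
                if cand < nxt[j]:
                    nxt[j], pick[j] = cand, c
        best = nxt
        choices.append(pick)
    j = min(range(limit + 1), key=lambda k: best[k])
    total = best[j]
    if total == math.inf:
        return total, ()  # no feasible allocation
    alloc: List[int] = []
    for pick in reversed(choices):  # walk back from the last task to the first
        alloc.append(pick[j])
        j -= pick[j]
    return total, tuple(reversed(alloc))
```

On small inputs both functions return the same minimal total cost, so the brute-force version is a convenient correctness check for the DP, while only the DP remains tractable as the number of tasks grows.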

Fig. 2. Overview of Fluid-Shuttle system.

To address the above challenges, we model the data transmission process and formulate the parameter search problem as a mixed-integer non-linear programming problem. For various input data, we propose to predict the compression information instead of using empirical settings. Furthermore, by combining enumeration, objective function simplification, and the conversion of non-continuously differentiable functions into differentiable ones, we propose two efficient, error-bounded optimal parameter search methods, SQP and EDC, for single-task data transmission. For multiple-task data transmission, we use dynamic programming and numerical analysis to resolve the concurrency competition. Specifically, our main contributions are as follows.

• For cloud data transmission based on serverless compression, we propose two highly accurate empirical models to estimate the time cost and the monetary cost. Besides, we propose a compression information prediction method based on chunk sampling and machine learning techniques. Then, we formulate the parameter search task as a constrained mixed-integer non-linear programming problem.

• Using enumeration, objective function simplification, and the conversion of non-continuously differentiable functions, we propose two efficient, error-bounded optimal parameter search methods, SQP and EDC, for single-task data transmission. To deal with bandwidth variance, we model bandwidth using a Weibull distribution and fine-tune the resource settings as necessary.

• To search for high-quality configurations for multiple-task data transmission, we propose a hybrid search method based on dynamic programming and numerical analysis, reducing the time complexity from exponential to linear.

• Finally, we implement the system, called Fluid-Shuttle, with straggler optimization, cache optimization, and an autoscaling decompression mechanism. Experimental results on a real-world cloud platform with various workloads show that the proposed methods improve the parameter search speed by over 3× compared with the state-of-the-art methods and find higher-quality parameters. Fluid-Shuttle brings a 1.4× performance improvement and saves 55.8% of the monetary cost on average when transferring data across cloud regions, compared with existing solutions.
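The bandwidth-variance handling described in the contributions can be sketched under our own assumptions: fit a Weibull distribution to observed bandwidth samples, then plan with a conservative low quantile instead of the mean. The fit below uses median-rank regression on the Weibull plot, a simple stand-in that is not necessarily the estimator Fluid-Shuttle uses; the 10% quantile, the helper names, and the `meets_deadline` rule are hypothetical. For shape k and scale λ, the CDF is F(x) = 1 − exp(−(x/λ)^k), so the p-quantile is λ(−ln(1 − p))^(1/k).

```python
import math
import random
from typing import Sequence, Tuple


def fit_weibull(samples: Sequence[float]) -> Tuple[float, float]:
    """Estimate (shape k, scale lam) by least squares on the Weibull plot.

    Uses ln(-ln(1 - F)) = k * ln(x) - k * ln(lam) with Bernard's median ranks.
    """
    xs = sorted(s for s in samples if s > 0)
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two positive samples")
    pts = []
    for i, x in enumerate(xs, start=1):
        f = (i - 0.3) / (n + 0.4)  # Bernard's median-rank approximation of F(x_i)
        pts.append((math.log(x), math.log(-math.log(1.0 - f))))
    mx = sum(p[0] for p in pts) / n
    my = sum(p[1] for p in pts) / n
    sxx = sum((p[0] - mx) ** 2 for p in pts)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in pts)
    k = sxy / sxx                 # slope of the Weibull plot is the shape
    lam = math.exp(mx - my / k)   # intercept is -k * ln(lam)
    return k, lam


def bandwidth_quantile(k: float, lam: float, p: float) -> float:
    """Bandwidth that is undershot with probability p (Weibull inverse CDF)."""
    return lam * (-math.log(1.0 - p)) ** (1.0 / k)


def meets_deadline(data_mb: float, deadline_s: float, k: float, lam: float, p: float = 0.1) -> bool:
    """Hypothetical fine-tuning trigger: can data_mb be sent in time at the p-quantile bandwidth (MB/s)?"""
    return data_mb / bandwidth_quantile(k, lam, p) <= deadline_s
```

When `meets_deadline` returns False, a system could react by adding resources or choosing a stronger compression setting; which knob to turn is exactly what the paper's fine-tuning strategy decides, and it is not modeled here.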

II. SYSTEM OVERVIEW

The overall architecture of our Fluid-Shuttle system is shown in Fig. 2. It contains four main modules: Profiler, Selector, Controller, and Updater. The Profiler module collects the necessary information for data transmission tasks and predicts compression information for various transmission data. The Selector module selects a proper parameter search method according to the target scenario. In addition, we have packaged several extensions, described in the following sections, that users can apply according to their scenarios. The Controller module calls cloud functions to execute data transmission tasks and monitors related metrics. The Updater module updates the internal parameters of the time and cost estimation models based on the collected metrics.
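The Profiler's compression-information prediction combines chunk sampling with machine learning. The sampling half can be sketched with Python's standard `zlib` module as a stand-in codec: compress a few randomly chosen chunks and extrapolate the ratio and throughput to the whole input. The chunk size, chunk count, and function name are hypothetical choices, and the machine-learning model that the paper applies on top of such samples is omitted.

```python
import random
import time
import zlib
from typing import Dict


def sample_compression_info(data: bytes, chunk_size: int = 64 * 1024, num_chunks: int = 8,
                            level: int = 6, seed: int = 0) -> Dict[str, float]:
    """Estimate compression ratio and speed from a random sample of fixed-size chunks."""
    if not data:
        raise ValueError("empty input")
    rng = random.Random(seed)
    total_chunks = (len(data) + chunk_size - 1) // chunk_size
    picks = rng.sample(range(total_chunks), min(num_chunks, total_chunks))
    raw_bytes = comp_bytes = 0
    elapsed = 0.0
    for idx in picks:
        chunk = data[idx * chunk_size:(idx + 1) * chunk_size]
        t0 = time.perf_counter()
        out = zlib.compress(chunk, level)  # zlib stands in for the real codec
        elapsed += time.perf_counter() - t0
        raw_bytes += len(chunk)
        comp_bytes += len(out)
    ratio = raw_bytes / comp_bytes
    speed = raw_bytes / elapsed / 1e6 if elapsed > 0 else float("inf")
    return {
        "ratio": ratio,                               # sampled raw / compressed size
        "speed_mb_s": speed,                          # sampled compression throughput
        "est_compressed_bytes": len(data) / ratio,    # extrapolated to the whole input
    }
```

Sampling keeps profiling cheap on large inputs, at the price of missing heterogeneity between chunks; highly repetitive data yields a large ratio, while random bytes yield a ratio slightly below 1 because of zlib's framing overhead.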

The system workflow proceeds in the following steps.
