ICDE2023_Automatic Fusion Network for Cold-start CVR Prediction with Explicit Multi-Level Representation_腾讯云.pdf

免费下载

Automatic Fusion Network for Cold-start CVR

Prediction with Explicit Multi-Level Representation

Jipeng Jin

∗

, Guangben Lu

†

, Sijia Li

∗

, Xiaofeng Gao

∗

,AoTan

†

, Lifeng Wang

†

∗

MoE Key Lab of Artificial Intelligence, Dept. of Computer Science and Engineering, Shanghai Jiao Tong University, China

†

Corporate Development Group, Tencent, China

{jinjipeng,qdhlsj,gaoxiaofeng}@sjtu.edu.cn, {lucasgblu,williamatan,fandywang}@tencent.com

Abstract—Estimating conversion rate (CVR) accurately has

been one of the most central problems in online advertising.

Existing methods in production focus on learning effective

interactions among features to boost the model performance.

Despite great success, these methods treat all the features equally

without distinction. However, different features suffer differently

from cold-start issues. Tail elements in those high-cardinality

features, which we denote as fine-grained features, tend to

have inadequate samples and thus fail to obtain semantically

meaningful embeddings. Interacting with those features leads

astray and impairs the accuracy of new ads in a cold-start

scenario. In this paper, we propose Automatic Fusion Network

(AutoFuse) to better tackle the challenge. AutoFuse explicitly

separates features into groups based on their granularity and

learns multiple levels of representation conditioned on different

combinations of feature groups. Concretely, AutoFuse learns an

ad-level representation to depict the unique individual character

and a group-level representation to portray the collective infor-

mation by discarding the fine-grained features. The final robust

and general ad representation is obtained by integrating these two

level representations adaptively. Such a combination encompasses

a wider amount of information, and thereby mitigates the cold-

start issue. Extensive experiments on two industrial-scale datasets

and three public datasets show that AutoFuse significantly and

consistently outperforms a spectrum of competitive methods

including our currently deployed model. Meanwhile, the remark-

able improvement on new ads validates the effectiveness of our

method in cold-start scenarios. We design AutoFuse as a generic

approach and thus it can be seamlessly transferred into other

domains. Our method has been deployed online to serve billions

of users and ads and has achieved significant GMV gain of 2.84%.

Index Terms—Cold-start, Online Advertising, CVR Prediction

I. INTRODUCTION

Accurate conversion rate (CVR) prediction is at the heart of

any real-time advertising system to achieve a win-win of both

platform and advertisers. As a conversion requires sufficient

user engagement, the training data collected from users’ feed-

back is very sparse for most advertisements (hereinafter called

ads). Such a conversion rarity problem makes the cold-start

This work was supported by the National Key R&D Program of China

[2020YFB1707903], the National Natural Science Foundation of China

[62272302, 61972254], the Shanghai Municipal Science and Technology

Major Project [2021SHZDZX0102] and the Tencent Social Ads Rhino-Bird

Focused Research Program. The authors would like to thank Wei Wang and

Yanrong Kang for their contributions in preliminary works. X. Gao is the

corresponding author.

issue in CVR prediction much more prominent. Precisely, in

a real conversion scenario, the frequency distribution of ads is

inherently long-tailed, with a few popular ads and many more

new ads. In this case, the model training may be dominated by

popular ads and leave the recommendation precision for new

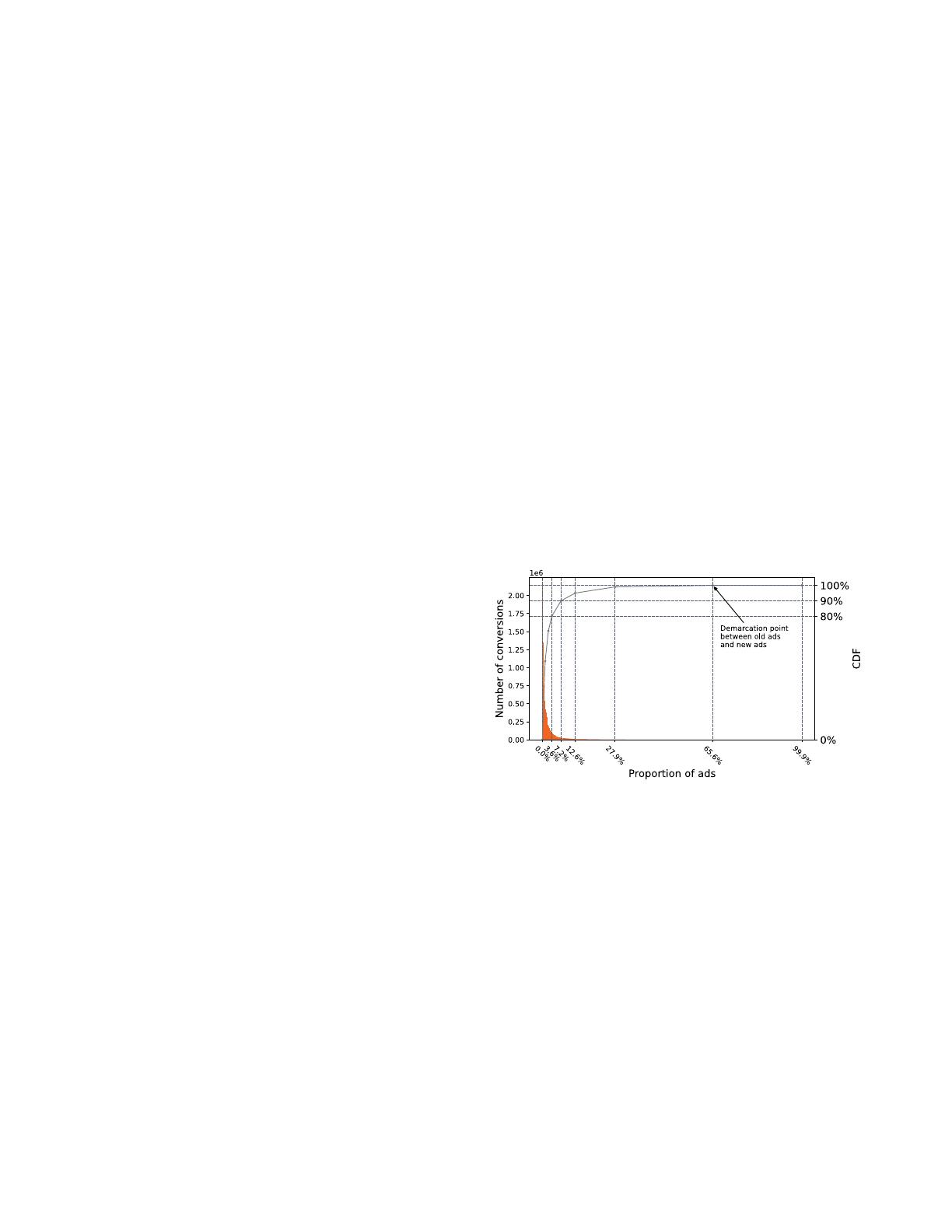

ads behind. For example, Figure 1 shows that top 7.2% of ads

occupy 90% of conversions. In practice, ads with fewer than 20

conversions within a certain time period are usually considered

as new ads. Figure 1 shows that new ads, which accounts for

34.4% of all ads, only have 0.0014% of conversions.

Fig. 1: Histogram of the number of conversions over different

proportions of ads in Tencent ad platform.

Utilizing content features of users and items is essential for

mitigating cold-start problems [1], [2]. Typically, these models

employ sophisticated neural networks to encode complicated

user states and item features in low-dimensional embedding

space and further predict each query. In practice, each feature

embedding is randomly initialized and trained repeatedly to-

wards a semantically meaningful state. However, such training

is data-demanding. Take ad identifier (ad ID) for example.

Real-world conversion data is usually highly skewed towards

popular ads. An old ad may have thousands of conversions

while a new ad barely has any. Under such a scenario, the

ID embeddings of old ads contain ample information while

a large number of new ads may overfit or fail to obtain

semantically meaningful embeddings for their associated ID.

Among hundreds of features we use, ad ID is not an exception.

The representation learning of advertiser ID and product ID

3440

2023 IEEE 39th International Conference on Data Engineering (ICDE)

2375-026X/23/$31.00 ©2023 IEEE

DOI 10.1109/ICDE55515.2023.00264

2023 IEEE 39th International Conference on Data Engineering (ICDE) | 979-8-3503-2227-9/23/$31.00 ©2023 IEEE | DOI: 10.1109/ICDE55515.2023.00264

Authorized licensed use limited to: Tencent. Downloaded on April 22,2025 at 08:35:27 UTC from IEEE Xplore. Restrictions apply.

TABLE I: The DNN model performance on Moments dataset

when removing ID features. Ad ID, campaign ID, advertiser

ID and product ID are removed one by one from V1 to V4.

V5 removes all other 9 ID features.

Model New Ads Old Ads Overall

DNN 0.8175 0.8449 0.8436

V1 0.8206 0.8386 0.8375

V2 0.8233 0.8354 0.8332

V3 0.8267 0.8326 0.8322

V4 0.8269 0.8323 0.8315

V5 0.8293 0.8304 0.8299

TABLE II: The DNN model performance on KDD2012 Cup

dataset when removing ID features. Ad ID, description ID and

advertiser ID are removed one by one from V1 to V3. Removal

of more ID features causes severe performance decrease in all

situations.

Model New Ads Old Ads Overall

DNN 0.6924 0.7503 0.7414

V1 0.6942 0.7458 0.7372

V2 0.6941 0.7436 0.7350

V3 0.6963 0.7407 0.7327

is also challenging since there exist large amounts of small

advertisers and niche products with a narrow appeal.

Table I shows the performance of base model (DNN [3],

currently used in Tencent platform) when features mentioned

above are gradually removed. We can observe that the perfor-

mance increases on new ads but decreases severely on old ads,

hence the overall performance is deteriorated. Similar results

can be reproduced in public dataset as shown in Table II. It

demonstrates the duality of these features: beneficial for the

old ads while ineffectual for new ones. However, the distribu-

tion of some features are moderate, e.g., product and industrial

category. Those features tend to have small cardinality and the

training samples for tail elements are sufficient enough. We

denote the former type of feature as fine-grained feature and

the latter type as coarse-grained feature. From this perspective,

the cold-start issue can be characterized as the inability to

provide effective embeddings for fine-grained features, thereby

impairing the final condensed ad representation.

Existing methods in CVR prediction employ similar tech-

niques developed in click-through rate (CTR) prediction [4]–

[6]. These methods aim to learn effective low- and high-order

feature interactions and thus significantly improve the perfor-

mance. However, these methods treat all the features equally

without distinction. As mentioned above, new ads tend to have

unreasonable embeddings for fine-grained features. Interacting

with such features may not yield expected effectiveness, and

further impairs the performance for new ads. Thus, special

attention should be attached to the feature grouping in cold-

start scenarios.

Intuitively, as shown in Figure 2(a), disregarding the dif-

ference between feature types and utilizing a single model

on all ads may not work well on new ads, despite its good

performance on old ads. On the other hand, discarding the

fine-grained features directly in Figure 2(b) can increase

the performance on new ads significantly, but sacrifices the

accuracy on old ads as fine-grained features contain unique

character and is indispensable. Figure 2(c) combines the

two models mentioned above and builds separate models for

different ads, use Figure 2(a) to predict CVR for old ads and

Figure 2(b) for new ads. It performs much worse since new

ads have much fewer data and much more intrinsic noise,

which make the complicated models hard to learn. Moreover,

decoupling suffers from different model update effectiveness,

which results in a large precision gap between old and new

ads. Meanwhile, maintaining multiple models simultaneously

consumes huge amounts of both computation and storage

resources, as well as required human effort. Hence, it is desired

to design a more powerful and efficient model.

In a real production scenario, new ads tend not to be

loners, but instead implicitly exist in smaller groups and larger

clusters. An advertiser may share same creative features among

its ads in one campaign. Ads within same category always have

overlapping features and collective commonalities. Moreover,

statistic shows that duplication of ads exists universally in our

platform. These are just few examples and the intrinsic con-

nection between ads is far more complicated than we thought.

Traditional models view an ad as an isolated individual and

ignore the potential of collective patterns. Though ad-specific

representations contain ample information for old ads, we

argue that those new ads are encouraged to facilitate collective

patterns to obtain more robust and general representations.

Since different new ads share some identical feature values,

these values divide new ads into groups naturally. The model

can extract informative group-level representations for new

ads with few conversions. In this way, cold-start issue can

be effectively alleviated.

To this end, we propose Automatic Fusion Network (Aut-

oFuse) to simultaneously learn multiple levels of represen-

tation with different feature groups. We illustrate simplified

architecture of AutoFuse in Figure 2(d). For each ad, we

first learn an ad-level representation with all the features to

depict the unique individual character. Then, we discard fine-

grained features and only utilize coarse-grained features to

learn group-level representation to portray the collective in-

formation in a broad and abstract perspective. Finally, through

dynamic fusion, we integrate these two level representations

to obtain a robust and general representation for this ad. The

dynamic fusion module can combine ad-level and group-level

representations selectively according to the popularity feature

of each ad, thereby producing a synergy effect between ad-

and group-level learning.

To evaluate our proposed method, we conduct extensive ex-

periments on public datasets and real-world industrial conver-

sion datasets sampled from Tencent ad platform. Experimental

results demonstrate that AutoFuse consistently outperforms

state-of-the-art baseline methods across all datasets, showing

significant improvement on not only old ads, but also new ads

especially.

The main contributions of this work are summarized as

follows:

3441

Authorized licensed use limited to: Tencent. Downloaded on April 22,2025 at 08:35:27 UTC from IEEE Xplore. Restrictions apply.

of 13

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论