ICDE2024_PlatoD2GL An Efficient Dynamic Deep Graph Learning System for Graph Neural Network Training on Billion-Scale Graphs_腾讯云.pdf

免费下载

PlatoD2GL: An Efficient Dynamic Deep Graph

Learning System for Graph Neural Network

Training on Billion-Scale Graphs

Xing Huang

†

Dandan Lin

∗

Weiyi Huang

†

Shijie Sun

†

Jie Wen

†

Chuan Chen

†

WeChat, Tencent Inc.

†

Shenzhen Institute of Computing Sciences

∗

{healyhuang, viniehuang, cedricsun, welkinwen, chuanchen}@tencent.com

†

lindandan@sics.ac.cn

∗

Abstract—Recently, huge interests in both academic and in-

dustry have been posed to Graph Neural Network due to its

power on revealing the topological information inside the data.

To support the real-world applications, most of (if not all) which

contain large-scale graphs with billions of edges, a number of

graph-based deep learning systems have been proposed and

implemented. However, all of them fail to efficiently process the

dynamic graphs in terms of both memory and time cost. The

state-of-the-art suffers from two issues: (1) expensive memory

consumption due to the huge indexing overhead of numerous

key-value pairs in traditional key-value topology storage and (2)

inefficient dynamic updating due to the heavy updates on indexing

structures for weighted neighbor sampling. In this paper, we

proposed a Dynamic Graph-based Learning System PlatoD2GL

to address above two issues. Specifically, we design a novel

and effective non-key-value data structure, termed samtree,for

dynamic topology storage, which largely reduces the memory

cost. In addition, we propose an efficient sampling indexing

structure FSTable by utilizing Fenwick tree, guaranteeing the

efficiency of both dynamic updates and weighted sampling.

Comprehensive experiments have demonstrated that, for dynamic

updating the graph topology, the efficiency of our system by up

to 79.8% and by up to 6.3 times in terms of memory and time

cost, respectively. Now, our system serves the major traffic in

WeChat Platform for training various GNN models.

I. INTRODUCTION

By taking the graph data (i.e., vertices and edges) as input,

graph neural network (GNN) models update the model param-

eters by considering both topology information and attributes

on vertices or edges. Due to its power, GNN models have

been widely applied in various real-world applications in many

critical fields, including recommendation systems [31], [44],

[12], [53], spam review detection [27], fraud detection [4],

[42], [6], anomaly detection [5], biomolecule classification

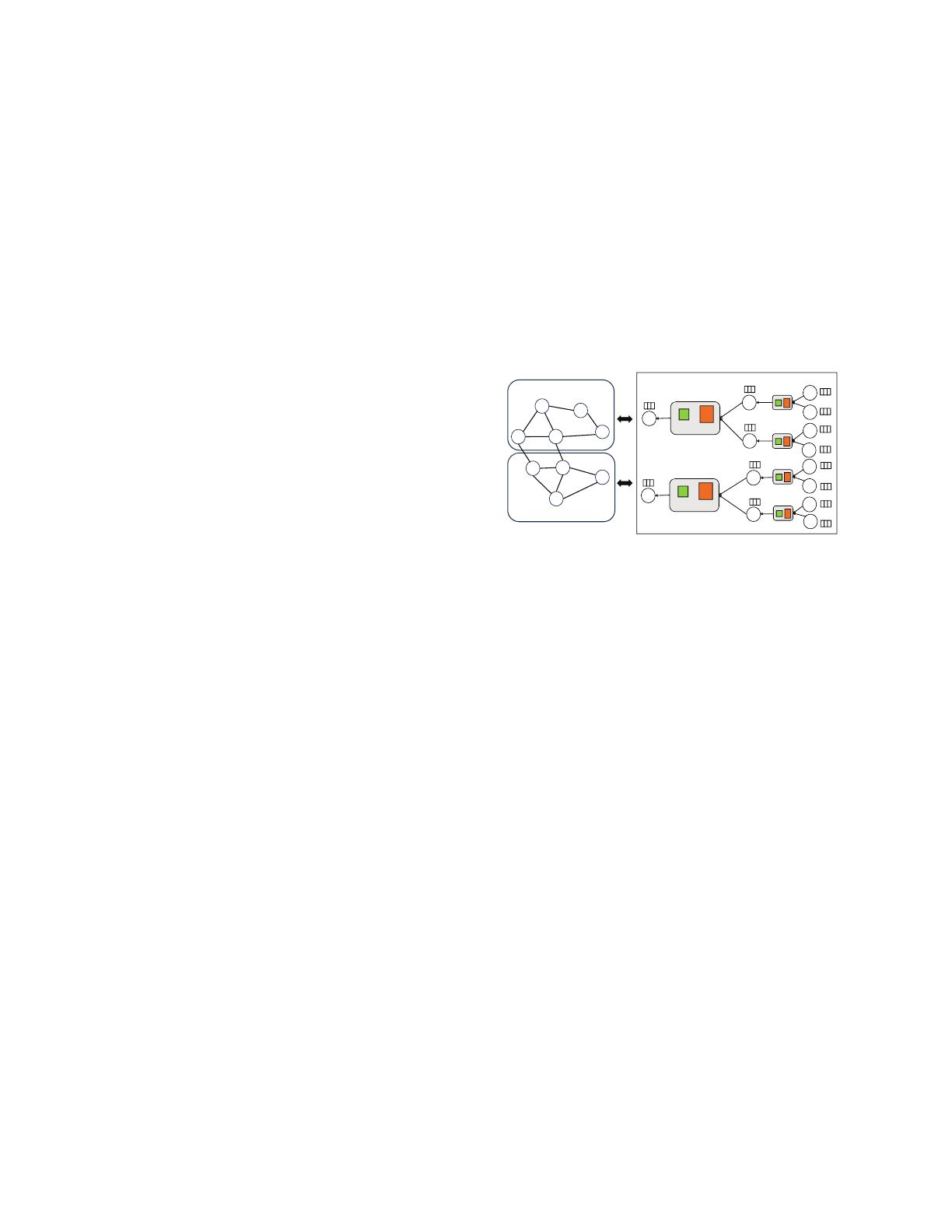

[41], natural language processing [48] and so forth. Figure 1

plots an example of the training phase of GNN models in

reality where the graph is stored in a distributed way. Specif-

ically, a GNN approach updates the embedding of a vertex

by iteratively aggregating the information from its neighbors.

For example, to update the embedding of vertex 1, it firstly

samples a proportion of it neighbors, i.e., vertex 2 and vertex

3, then aggregates the information of sampled neighbors, and

finally, combines the previous information of vertex 1 and

the neighbor information as the final embedding. Note that

sampling a proportion of neighbours is widely used in real

1

8

3

2

9

7

4

6

5

Graph Store 2

Graph Store 1

Training Phase

3

9

7

2

2

3

4

6

5

6

4

5

1

Aggr

Comb

Sample

5

Aggr

Comb

Sample

Comb: combination

Aggr: aggregation

Fig. 1: A running example of GNN training phase.

world applications since aggregating all neighbours of a vertex

is infeasible for large-scale graphs [17], [2], [18], [54], [15].

In general, to support the training of GNN models, a deep

graph learning system is important by providing the following

two abilities: (1) a distributed graph storage for storing the

large scale graphs (which is common in reality); (2) an efficient

sampling strategy for sampling K-hop neighbors of a vertex;

For example, for the live-streaming recommendation scenarios

in WeChat (the largest social media APP in China), the user-

item interaction graph could be with nearly 65.9 billion edges,

which cannot be stored in a single machines. However, due

to the explosion of GNN models in real-world applications,

supporting the GNN training on dynamic graphs is also an

indispensable requirement for a deep graph learning system.

For instance, for the online recommendation services, the

user interest is highly dynamic and non-stationary [3], [21],

[46], [31], leading to the frequently-evolving user-item graphs.

If a GNN-based recommendation model cannot capture the

instant user interest, the user might not be interested in the

recommended items inferred by the GNN model, making the

loss of user in the platform [11], [28], [29].

For training GNN on large scale graphs, a lot of dis-

tributed deep graph learning systems have been proposed,

e.g., AliGraph [54], Euler [22], Plato [23], DistDGL [52],

ByteGNN [51], and PlatoGL [31]. Most of existing deep graph

learning systems, e.g., Euler, Plato, DistDGL and ByteGNN

provide a static graph storage, i.e., directly storing the graph

in a cluster of physical machines (termed graph servers)by

using the graph partition methods (like METIS [24]). However,

these systems fail to support dynamic graphs since the graph

needs to be re-partitioned and re-deployed from scratch in

2421

2024 IEEE 40th International Conference on Data Engineering (ICDE)

2375-026X/24/$31.00 ©2024 IEEE

DOI 10.1109/ICDE60146.2024.00191

2024 IEEE 40th International Conference on Data Engineering (ICDE) | 979-8-3503-1715-2/24/$31.00 ©2024 IEEE | DOI: 10.1109/ICDE60146.2024.00191

Authorized licensed use limited to: Tencent. Downloaded on April 22,2025 at 08:50:16 UTC from IEEE Xplore. Restrictions apply.

graph servers when an edge is inserted/deleted in the graph.

Among existing systems, PlatoGL [31] is the state-of-the-

art to support a dynamic graph storage by utilizing the block-

based key-value store. That is, edges in a graph are stored

as tuples of key, value, where the key is a vertex s in the

graph with some extra information and the value is a block

that stores a proportion of neighbors of vertex s. In addition,

for the efficient weighted neighbor sampling, PlatoGL [31]

proposed a block-based sampling method by exploiting the

Inverse Transform Sampling (ITS) method [47]. However,

PlatoGL suffers from the following two limitations.

• the expensive memory consumption issue. Since a source

vertex s might have multiple blocks, each key designed by

PlatoGL consist of various information except the unique

identifier (ID) of vertex s for uniquely mapping to a specific

block. Thus, it has to maintain large number of indexings for

mapping the keys with their corresponding values (which is

the limitation of the key-value format), leading to expensive

memory overhead.

• the inefficient dynamic graph updates issue. It needs to

update c

umulative sum table (CSTable) (which is used for

fast weighted sampling) for each source vertex. To be spe-

cific, when a new neighbor of a source vertex s is inserted,

the CSTable of s should be re-computed from scratch since

each element v in CSTable requires to sum up all the weights

of vertices before v. In worst case, it takes O(n

L

) time cost

where n

L

is the number of elements (i.e., out-neighbors) in

CSTable. The case would be worse in reality since the graph

is frequently evolving in real-world applications.

Contributions. This paper tackles above questions. We intro-

duce PlatoD2GL, a distributed d

ynamic deep graph learning

system that supports both efficient dynamic graph updates,

without taking much memory cost, and fast sampling strategy.

The following shows our contributions.

(1) We propose a dynamic graph storage layer inside Pla-

toD2GL to store multiple GNN-related data. Specifically, we

design a novel and effective non-key-value topology storage,

termed samtree, which largely reduces the memory cost for

the dynamic updates by avoiding maintaining the indexings

for key-value mapping. Besides, we propose efficient inser-

tion and deletion mechanisms, and theoretically prove that

the average time cost of insertion and deletion is linear to

the number of vertices in the samtree.

(2) We propose an efficient sampling indexing structure FSTable

by utilizing Fenwick tree [10]. To our best knowledge, we

are the first to exploit Fenwick tree for dynamic graphs. In

addition, we theoretically prove that the time complexity of

updating a FSTable with n

L

elements is O(log n

L

), which

is more efficient than existing CSTable used in PlatoGL.

(3) We also design a novel weighted sampling method FTS by

incorporating our FSTable with traditional ITS method.

(4) We propose two novel optimization techniques inside our

PlatoD2GL system: (1) a compression technique by com-

pressing our samtree for further reducing the memory cost

and (2) a batch-based latch-free concurrent mechanism by

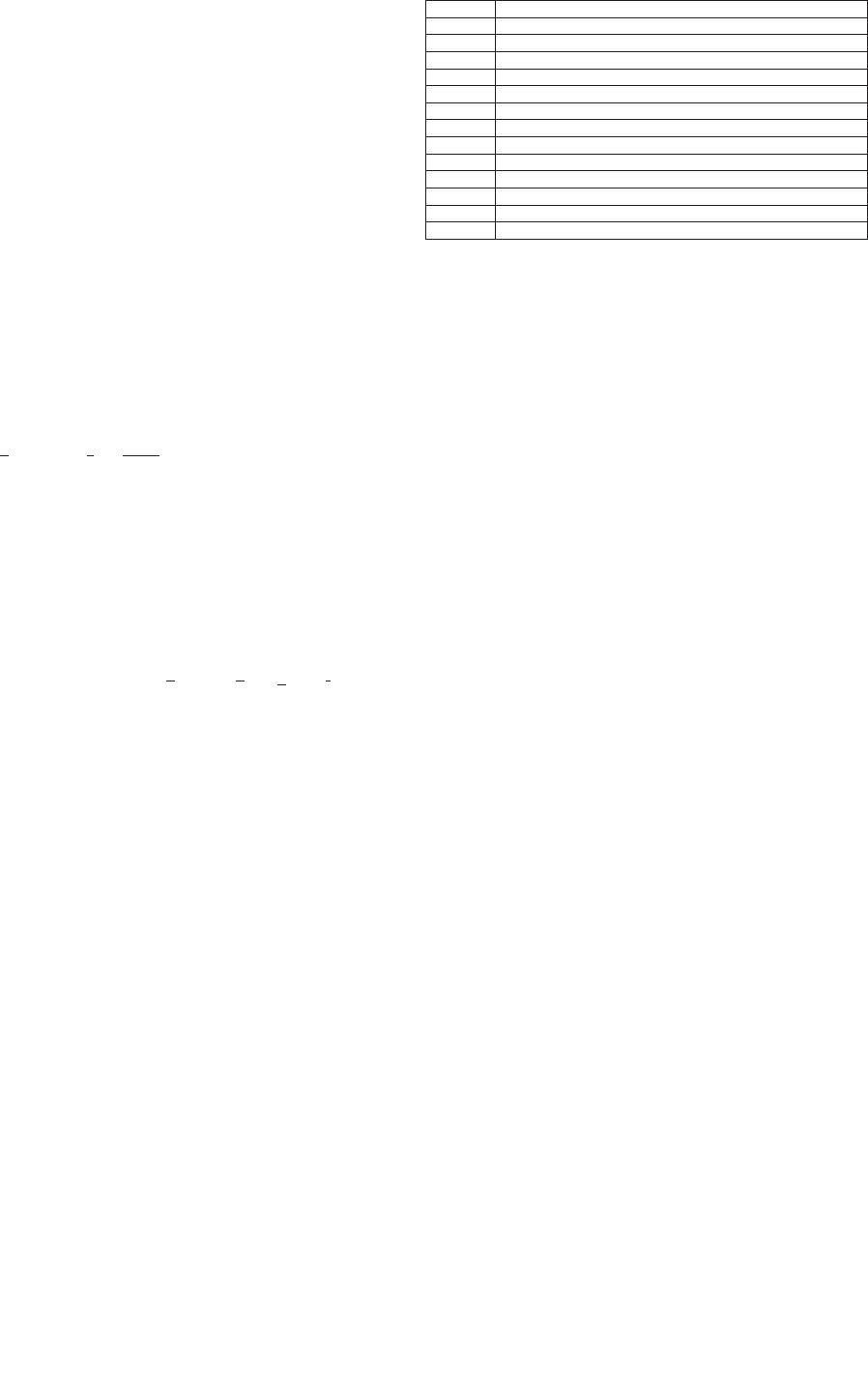

Symbol Description

G(V, E) The directed graph G with vertices set V , edges set E

w

u,v

The weight of an edge e(u, v)

w

u

The sum of weights of all edges that linked from u

N

s

The set of out-neighbours of vertex s

n

s

The number of out-neighbours of vertex s

T

s

The samtree of vertex s for storing its out-neighbors

c, H The node capacity and height of a smatree T , respectively

r

i

The node in the i-th level of a smatree T

α The slackness for α-Split algorithm

n

L

The number of vertices in a leaf node of a samtree

C The cumulative sum table (CSTable) for ITS method

F The FSTable for FTS method

S

L

The sum of weights in a leaf node

TABLE I: Frequently-used notations.

avoiding the race conditions for accelerating the dynamic

updating process.

(5) We experimentally verified that by conducting comprehen-

sive experiments on two public datasets and one production

dataset with up to 65.9 billion edges. Comprehensive ex-

periments demonstrate that PlatoD2GL takes less memory

cost than state-of-the-art by up to 79.8% on billion-scale

graphs. Besides, PlatoD2GL is faster than state-of-the-art by

up to 6.3 times and up to 13.7 times in terms of dynamic

updates and sampling, respectively.

II. P

RELIMINARIES

In this section, we firstly introduce background of the

problem studied in this paper, and then present a widely-used

weighted sampling method Inverse Transform Sampling (ITS),

which is the building stone in our proposed system. Table I

lists the notations used in this paper.

A. Background

We start with a simple directed weighted graph G(V, E,

W) where V and E represents the set of vertices and edges,

respectively, and W: E→R

+

is a function that assigns a

weight w

u,v

to an edge e(u, v) linking from vertex u to vertex

v. For simplicity, we denote a weighted edge as e(u, v, w

u,v

).

Let N

u

denote the set of out-neighbours of vertex u. In the

following, we use “out-neighbor” and “neighbor” interchange-

ably if it is clear in the context. In this paper, we consider the

heterogeneous graph with multiple types of vertices and/or

edges, which is common in real-world applications. Besides,

in this paper, we focus on the dynamic graphs. Given a time

interval t,adynamic graph can be considered as a series of

graphs {G

(t)

|t ∈ [1,T]} where G

(t)

is a heterogeneous graph

at timestamp t and T is the largest timestamp.

Basic operations in GNN models. GNN approaches

could be basically formulated as message passing [52],

[13], [45], [17], [43], [38], [39], where vertices in the

graph propagate their messages to their neighbours and

updates their representations (i.e., in form of embedding)

by aggregating the received messages from their neighbours.

Given a heterogeneous graph G, the embedding e

(0)

u

of each

vertex u is initially assigned as its features vector f

u

. To get

2422

Authorized licensed use limited to: Tencent. Downloaded on April 22,2025 at 08:50:16 UTC from IEEE Xplore. Restrictions apply.

of 14

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论