LIFF:在尺度和深度上的光场特征.pdf

50墨值下载

LiFF: Light Field Features in Scale and Depth

Donald G. Dansereau

1,2

, Bernd Girod

1

, and Gordon Wetzstein

1

1

Stanford University,

2

The University of Sydney

Abstract—Feature detectors and descriptors are key low-level

vision tools that many higher-level tasks build on. Unfortunately

these fail in the presence of challenging light transport effects

including partial occlusion, low contrast, and reflective or re-

fractive surfaces. Building on spatio-angular imaging modalities

offered by emerging light field cameras, we introduce a new

and computationally efficient 4D light field feature detector and

descriptor: LiFF. LiFF is scale invariant and utilizes the full

4D light field to detect features that are robust to changes in

perspective. This is particularly useful for structure from motion

(SfM) and other tasks that match features across viewpoints of a

scene. We demonstrate significantly improved 3D reconstructions

via SfM when using LiFF instead of the leading 2D or 4D features,

and show that LiFF runs an order of magnitude faster than the

leading 4D approach. Finally, LiFF inherently estimates depth

for each feature, opening a path for future research in light

field-based SfM.

I. INTRODUCTION

Feature detection and matching are the basis for a broad

range of tasks in computer vision. Image registration, pose

estimation, 3D reconstruction, place recognition, combinations

of these, e.g. structure from motion (SfM) and simultaneous

localisation and mapping (SLAM), along with a vast body of

related tasks, rely directly on being able to identify and match

features across images. While these approaches work relatively

robustly over a range of applications, some remain out of

reach due to poor performance in challenging conditions. Even

infrequent failures can be unacceptable, as in the case of

autonomous driving.

State-of-the-art features fail in challenging conditions

including self-similar, occlusion-rich, and non-Lambertian

scenes, as well as in low-contrast scenarios including low

light and scattering media. For example, the high rate of

self-similarity and occlusion in the scene in Fig. 1 cause the

COLMAP [35] SfM solution to fail. There is also an inherent

tradeoff between computational burden and robustness: given

sufficient computation it may be possible to make sense of an

outlier-rich set of features, but it is more desirable to begin

with higher-quality features, reducing computational burden,

probability of failure, power consumption, and latency.

Light field (LF) imaging is an established tool in computer

vision offering advantages in computational complexity and

robustness to challenging scenarios [7], [10], [29], [38], [48].

This is due both to a more favourable signal-to-noise ratio

(SNR) / depth of field tradeoff than for conventional cameras,

and to the rich depth, occlusion, and native non-Lambertian

surface capture inherently supported by LFs.

In this work we propose to detect and describe blobs directly

from 4D LFs to deliver more informative features compared

with the leading 2D and 4D alternatives. Just as the scale

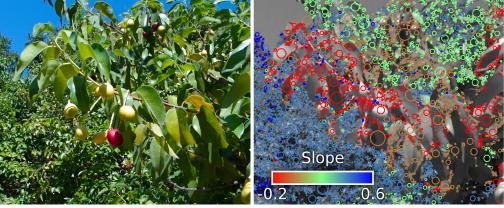

Fig. 1. (left) One of five views of a scene that COLMAP’s structure-from-

motion (SfM) solution fails to reconstruct using SIFT, but successfully

reconstructs using LiFF; (right) LiFF features have well-defined scale

and depth, measured as light field slope, revealing the 3D structure of

the scene – note we do not employ depth in the SfM solution. Code

and dataset are at http://dgd.vision/Tools/LiFF, see the supplementary

information for dataset details.

invariant feature transform (SIFT) detects blobs with well-

defined scale, the proposed light field feature (LiFF) identifies

blobs with both well-defined scale and well-defined depth

in the scene. Structures that change their appearance with

viewpoint, for example those refracted through or reflected

off curved surfaces, and those formed by occluding edges,

will not satisfy these criteria. At the same time, well-defined

features that are partially occluded are not normally detected

by 2D methods, but can be detected by LiFF via focusing

around partial occluders.

Ultimately LiFF features result in fewer mis-registrations,

more robust behaviour, and more complete 3D models than the

leading 2D and 4D methods, allowing operation over a broader

range of conditions. Following recent work comparing hand-

crafted and learned features [36], we evaluate LiFF in terms

of both low-level detections and the higher-level task of 3D

point cloud reconstruction via SfM.

LiFF features have applicability where challenging condi-

tions arise, including autonomous driving, delivery drones,

surveillance, and infrastructure monitoring, in which weather

and low light commonly complicate vision. It also opens a

range of applications in which feature-based methods are not

presently employed due to their poor rate of success, including

medical imagery, industrial sites with poor visibility such as

mines, and in underwater systems.

The key contributions of this work are:

• We describe LiFF, a novel feature detector and descriptor

that is less computationally expensive than leading 4D

methods and natively delivers depth information;

• We demonstrate that LiFF yields superior detection rates

compared with competing 2D and 4D methods in low-

SNR scenarios; and

arXiv:1901.03916v1 [cs.CV] 13 Jan 2019

加入“知识星球 行业与管理资源”库,免费下载报告合集

1.

每月上传分享2000+份最新行业资源(涵盖科技、金融、教育、互联网、

房地产、生物制药、医疗健康等行研报告、科技动态、管理方案等);

2. 免费下载资源库已存行业报告。

3. 免费下载资源库已存国内外咨询公司管理方案与企业运营制度等。

4. 免费下载资源库已存科技方案、论文、报告及课件。

微信扫码二维码,免费报告轻松领

微信扫码加入“知识星球 行业与管理资源”,

获取更多行业报告、管理文案、大师笔记

加入微信群,每日获取免费3+份报告

1.

扫一扫二维码,添加客服微信(微信号:Teamkon2)

2.

添加好友请备注:姓名+单位+业务领域

3. 群主将邀请您进专业行业报告资源群

of 10

50墨值下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论