POLARIS: The Distributed SQL Engine in Azure Synapse

Josep Aguilar-Saborit, Raghu Ramakrishnan, Krish Srinivasan

Kevin Bocksrocker, Ioannis Alagiannis, Mahadevan Sankara, Moe Shafiei

Jose Blakeley, Girish Dasarathy, Sumeet Dash, Lazar Davidovic, Maja Damjanic, Slobodan Djunic, Nemanja Djurkic, Charles Feddersen, Cesar

Galindo-Legaria, Alan Halverson, Milana Kovacevic, Nikola Kicovic, Goran Lukic, Djordje Maksimovic, Ana Manic, Nikola Markovic, Bosko Mihic,

Ugljesa Milic, Marko Milojevic, Tapas Nayak, Milan Potocnik, Milos Radic, Bozidar Radivojevic, Srikumar Rangarajan, Milan Ruzic, Milan Simic,

Marko Sosic, Igor Stanko, Maja Stikic, Sasa Stanojkov, Vukasin Stefanovic, Milos Sukovic, Aleksandar Tomic, Dragan Tomic, Steve Toscano,

Djordje Trifunovic, Veljko Vasic, Tomer Verona, Aleksandar Vujic, Nikola Vujic, Marko Vukovic, Marko Zivanovic

Microsoft Corp

ABSTRACT

In this paper, we describe the Polaris distributed SQL query engine

in Azure Synapse. It is the result of a multi-year project to re-

architect the query processing framework in the SQL DW parallel

data warehouse service, and addresses two main goals: (i) converge

data warehousing and big data workloads, and (ii) separate compute

and state for cloud-native execution.

From a customer perspective, these goals translate into many useful

features, including the ability to resize live workloads, deliver

predictable performance at scale, and to efficiently handle both

relational and unstructured data. Achieving these goals required

many innovations, including a novel “cell” data abstraction, and

flexible, fine-grained, task monitoring and scheduling capable of

handling partial query restarts and PB-scale execution. Most

importantly, while we develop a completely new scale-out

framework, it is fully compatible with T-SQL and leverages

decades of investment in the SQL Server single-node runtime and

query optimizer. The scalability of the system is highlighted by a

1PB scale run of all 22 TPC-H queries; to our knowledge, this is

the first reported run with scale larger than 100TB.

PVLDB Reference Format:

Josep Aguilar-Saborit, Raghu Ramakrishnan et al.

VLDB Conferences. PVLDB, 13(12): 3204 – 3216, 2020.

DOI: https://doi.org/10.14778/3415478.3415545

1. INTRODUCTION

Relational data warehousing has long been the enterprise approach

to data analytics, in conjunction with multi-dimensional business-

intelligence (BI) tools such as Power BI and Tableau. The recent

explosion in the number and diversity of data sources, together with

the interest in machine learning, real-time analytics and other

advanced capabilities, has made it necessary to extend traditional

relational DBMS-based warehouses. In contrast to the traditional

approach of carefully curating data to conform to standard

enterprise schemas and semantics, data lakes focus on rapidly

ingesting data from many sources and give users flexible analytic

tools to handle the resulting data heterogeneity and scale.

A common pattern is that data lakes are used for data preparation,

and the results are then moved to a traditional warehouse for the

phase of interactive analysis and reporting. While this pattern

bridges the lake and warehouse paradigms and allows enterprises

to benefit from their complementary strengths, we believe that the

two approaches are converging, and that the full relational SQL tool

chain (spanning data movement, catalogs, business analytics and

reporting) must be supported directly over the diverse and large

datasets stored in a lake; users will not want to migrate all their

investments in existing tool chains.

In this paper, we present the Polaris interactive relational query

engine, a key component for converging warehouses and lakes in

Azure Synapse [1], with a cloud-native scale-out architecture that

makes novel contributions in the following areas:

• Cell data abstraction: Polaris builds on the abstraction of

a data “cell” to run efficiently on a diverse collection of data

formats and storage systems. The full SQL tool chain can now

be brought to bear over files in the lake with on-demand

interactive performance at scale, eliminating the need to move

files into a warehouse. This reduces costs, simplifies data

governance, and reduces time to insight. Additionally, in

conjunction with a re-designed storage manager (Fido [2]) it

supports the full range of query and transactional performance

needed for Tier 1 warehousing workloads.

• Fine-grained scale-out: The highly available microservice

architecture is based on (1) a careful packaging of data

and query processing into units called “tasks” that can be

readily moved across compute nodes and re-started at the task

level; (2) widely-partitioned data with a flexible distribution

model; (3) a task-level “workflow-DAG” that is novel in

spanning multiple queries, in contrast to [3, 4, 5, 6]; and (4) a

framework for fine-grained monitoring and flexible

scheduling of tasks.

• Combining scale-up and scale-out: Production-ready

scale-up SQL systems offer excellent intra-partition

parallelism and have been tuned for interactive queries with

deep enhancements to query optimization and vectorized

processing of columnar data partitions, careful control flow,

and exploitation of tiered data caches. While Polaris has a new

scale-out distributed query processing architecture inspired by

big data query execution frameworks, it is unique in how it

combines this with SQL Server’s scale-up features at each

node; we thus benefit from both scale-up and scale-out.

• Flexible service model: Polaris has a concept of a session,

which supports a spectrum of consumption models, ranging

from “serverless” ad-hoc queries to long-standing pools or

clusters. Leveraging the Polaris session architecture, Azure

Synapse is unique among cloud services in how it brings

together serverless and reserved pools with online scaling. All

data (e.g., files in the lake, as well as managed data in Fido

[2]) are accessible from any session, and multiple sessions can

access all underlying data concurrently. Fido supports

efficient transactional updates with data versioning.

This work is licensed under the Creative Commons Attribution-

NonCommercial-NoDerivatives 4.0 International License. To view a copy of

this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/. For any

use beyond those covered by this license, obtain permission by emailing

info@vldb.org. Copyright is held by the owner/author(s). Publication rights

licensed to the VLDB Endowment.

Proceedings of the VLDB Endowment, Vol. 13, No. 12

ISSN 2150-8097.
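The task-level restart described under fine-grained scale-out can be illustrated with a minimal sketch. It is not the Polaris implementation: the `Task` class, the `run_dag` function, the retry limit, and the simulated failure are all hypothetical names and behaviors chosen for illustration, and the graph is assumed to be acyclic with every dependency present. The point it shows is that when one task fails, only that task is re-run, while the results of tasks that already finished are kept.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Task:
    """Hypothetical unit of work: upstream task names plus a callable."""
    deps: List[str]
    run: Callable[[], str]
    attempts: int = 0


def run_dag(tasks: Dict[str, Task], max_attempts: int = 3) -> Dict[str, str]:
    """Run an acyclic task graph, re-running only the task that failed."""
    done: Dict[str, str] = {}
    pending = deque(tasks)  # names of tasks not yet finished
    while pending:
        name = pending.popleft()
        task = tasks[name]
        if any(dep not in done for dep in task.deps):
            pending.append(name)  # inputs not ready; revisit later
            continue
        task.attempts += 1
        try:
            done[name] = task.run()
        except RuntimeError:
            if task.attempts >= max_attempts:
                raise
            pending.append(name)  # restart this task; finished work is kept
    return done


def make_flaky_scan() -> Callable[[], str]:
    """Return a callable that fails once (simulated node loss), then succeeds."""
    state = {"calls": 0}

    def scan() -> str:
        state["calls"] += 1
        if state["calls"] == 1:
            raise RuntimeError("simulated compute node failure")
        return "rows_b"

    return scan


# Task names carry a query prefix because one graph may hold several queries.
tasks = {
    "q1.scan_a": Task([], lambda: "rows_a"),
    "q1.scan_b": Task([], make_flaky_scan()),
    "q1.join": Task(["q1.scan_a", "q1.scan_b"], lambda: "joined"),
    "q2.scan_c": Task([], lambda: "rows_c"),
}
results = run_dag(tasks)
attempts = {name: task.attempts for name, task in tasks.items()}
```

In this run only `q1.scan_b` is attempted twice; the other tasks, including the one belonging to a second query, run once each.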

1.1 Related Systems

The most closely related cloud services are AWS Redshift [7],

Athena [8], Google BigQuery [9, 10], and Snowflake [11]. Of

course, on-premises data warehouses such as Exadata [12] and

Teradata [13] and big data systems such as Hadoop [3, 4, 14, 15],

Presto [16, 17] and Spark [5] target similar workloads (increasingly

migrating to the cloud) and have architectural similarities.

• Converging data lakes and warehouses. Polaris

represents data using a “cell” abstraction with two

dimensions: distributions (data alignment) and partitions

(data pruning). Each cell is self-contained with its own

statistics, used for both global and local query optimization (QO). This

abstraction is the key building block enabling Polaris to

abstract data stores. BigQuery and Snowflake support a

sort key (partitions) but not distribution alignment; we

discuss this further in Section 4.

• Service form factor. On the one hand, we have reserved-

capacity services such as AWS Redshift, and on the other,

serverless offerings such as Athena and BigQuery.

Snowflake and Redshift Spectrum are somewhere in the

middle, with support for online scaling of the reserved

capacity pool size. Leveraging the Polaris session

architecture, Azure Synapse is unique in supporting both

serverless and reserved pools with online scaling; the

pool form factor represents the next generation of the

current Azure SQL DW service, which is subsumed as

part of Synapse. The same data can simultaneously be

operated on from both serverless SQL and SQL pools.

• Distributed cost-based query optimization over the data

lake. Related systems such as Snowflake [11], Presto [17,

18] and LLAP [14] do query optimization, but they have

not gone through the years of fine-tuning of SQL Server,

whose cost-based selection of distributed execution plans

goes back to the Chrysalis project [19]. A novel aspect of

Polaris is how it carefully re-factors the optimizer

framework in SQL Server and enhances it to be cell-

aware, in order to fully leverage the Query Optimizer

(QO), which implements a rich set of execution strategies

and sophisticated estimation techniques. We discuss

Polaris query optimization in Section 5; this is key to the

performance reported in Section 10.

• Massive scale-out of state-of-the-art scale-up query

processor. Polaris has the benefit of building on SQL Server,

one of the most sophisticated scale-up implementations. The

scale-out framework is designed expressly to exploit this:

SQL Server code is carefully refactored so that tasks at each

node are delegated to SQL Server instances.

• Global resource-aware scheduling. The fine-grained

representation of tasks across all queries in the Polaris

workflow-graph is inspired by big data task graphs [3, 4,

5, 6], and enables much better resource utilization and

concurrency than traditional data warehouses. Polaris

advances existing big data systems in the flexibility of its

task orchestration framework, and in maintaining a

global view of multiple queries to do resource-aware

cross-query scheduling. This improves both resource

utilization and concurrency. In the future, we plan to build

on this global view with autonomous workload

management features. See Section 6.

• Multi-layered data caching model. Hive LLAP [14]

showed the value of caching and pre-fetching of column

store data for big data workloads. Caching is especially

important in cloud-native architectures that separate state

from compute (Section 2), and Polaris similarly leverages

SQL Server buffer pools and SSD caching. Local nodes

cache columnar data in buffer pools, complemented by

caching of distributed data in SSD caches.
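The two dimensions of the cell abstraction described in the first item above (distributions for data alignment, partitions for data pruning) can be made concrete with a small sketch. It rests on assumptions that are not from the paper: the names `Cell`, `build_cells`, `prune`, and `aligned_pairs` are hypothetical, the distribution function is a plain modulo on an integer key, partitions are fixed-width ranges, and the only per-cell statistics are the minimum and maximum of the partitioning column.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

CellKey = Tuple[int, int]  # (distribution index, partition index)


@dataclass
class Cell:
    """A self-contained block of rows with its own (assumed) statistics."""
    rows: List[dict]
    min_key: int  # smallest partitioning-column value in this cell
    max_key: int  # largest partitioning-column value in this cell


def build_cells(rows: List[dict], dist_col: str, part_col: str,
                n_dist: int, part_width: int) -> Dict[CellKey, Cell]:
    """Place each row in the cell addressed by (distribution, partition)."""
    grouped: Dict[CellKey, List[dict]] = {}
    for row in rows:
        key = (row[dist_col] % n_dist, row[part_col] // part_width)
        grouped.setdefault(key, []).append(row)
    return {
        key: Cell(members,
                  min(r[part_col] for r in members),
                  max(r[part_col] for r in members))
        for key, members in grouped.items()
    }


def prune(cells: Dict[CellKey, Cell], lo: int, hi: int) -> Dict[CellKey, Cell]:
    """Keep cells whose [min_key, max_key] range overlaps [lo, hi]."""
    return {k: c for k, c in cells.items() if c.max_key >= lo and c.min_key <= hi}


def aligned_pairs(left: Dict[CellKey, Cell],
                  right: Dict[CellKey, Cell]) -> List[Tuple[CellKey, CellKey]]:
    """Pair cells that share a distribution index.

    Assumes both tables were distributed on the join key with the same
    function, so matching rows can only meet inside such pairs.
    """
    return [(lk, rk) for lk in left for rk in right if lk[0] == rk[0]]


orders = [{"cust": c, "day": d} for c in range(4) for d in (1, 15, 40)]
customers = [{"cust": c, "signup_day": 10 * c} for c in range(4)]

order_cells = build_cells(orders, "cust", "day", n_dist=2, part_width=30)
customer_cells = build_cells(customers, "cust", "signup_day",
                             n_dist=2, part_width=30)

recent = prune(order_cells, lo=30, hi=59)      # pruning uses cell statistics
pairs = aligned_pairs(recent, customer_cells)  # alignment uses distributions
```

A filter on `day` removes whole cells using statistics alone, and a join on `cust` only has to consider cell pairs within the same distribution; these are the two roles the text assigns to partitions and distributions.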

2. SEPARATING COMPUTE AND STATE

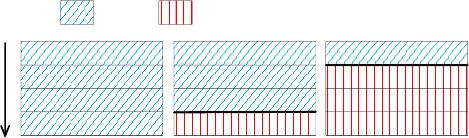

Figure 1 shows the evolution of data warehouse architectures over

the years, illustrating how state has been coupled with compute.

[Figure 1 labels: (a) Stateful Compute, (b) Stateless Compute; architectures shown: on-prem, storage separation, state separation; state components: data, metadata, transactions (Xact), caches.]

Figure 1. Decoupling state from compute.

To drive the end-to-end life cycle of a SQL statement with

transactional guarantees and top tier performance, engines maintain

state, comprising caches, metadata, transaction logs, and data. On

the left side of Figure 1, we see the typical shared-nothing on-

premises architecture where all state is in the compute layer. This

approach relies on small, highly stable and homogeneous clusters

with dedicated hardware for Tier-1 performance. It is expensive and

hard to maintain, and cluster capacity is bounded by machine sizes

because of the fixed topology; hence, it has scalability limits.

The shift to the cloud moves the dial towards the right side of Figure

1 and brings key architectural changes. The first step is the

decoupling of compute and storage, providing more flexible

resource scaling. Compute and storage layers can scale up and

down independently, adapting to user needs; storage is abundant

and cheaper than compute, and not all data needs to be accessed at

all times. The user does not need compute to hold all data, and only

pays for the compute needed to query a working subset of it.

Decoupling of compute and storage is not, however, the same as

decoupling compute and state. If any of the remaining state held in

compute cannot be reconstructed from external services, then

compute remains stateful. In stateful architectures, state for in-

flight transactions is stored in the compute node and is not hardened

into persistent storage until the transaction commits. As such, when

a compute node fails, the state of non-committed transactions is

lost, and there is no alternative but to fail in-flight transactions.

Stateful architectures often also couple metadata describing data

distributions and mappings to compute nodes, and thus, a compute

node effectively owns responsibility for processing a subset of the

data and its ownership cannot be transferred without a cluster

restart. In summary, resilience to compute node failure and elastic

assignment of data to compute are not possible in stateful

architectures. Several cloud services and on-prem data warehouse

architectures fall into this category, including Redshift, SQL DW,

Teradata, and Oracle.
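The contrast drawn above, between a compute node that owns a subset of the data and one that does not, can be sketched as follows. This is an illustration under stated assumptions rather than a description of any of the named systems: `ExternalMetadata` is a hypothetical stand-in for a metadata service that lives outside the compute layer, and round-robin assignment is an arbitrary placement policy picked for brevity.

```python
from typing import Dict, Iterable, List


def assign(cells: List[str], nodes: List[str]) -> Dict[str, str]:
    """Round-robin the cells over the currently live nodes."""
    if not nodes:
        raise ValueError("no live compute nodes")
    return {cell: nodes[i % len(nodes)] for i, cell in enumerate(cells)}


class ExternalMetadata:
    """Hypothetical metadata store kept outside compute.

    Because the cell-to-node mapping lives here and not on the nodes,
    losing a node loses no ownership information.
    """

    def __init__(self, cells: Iterable[str]) -> None:
        self.cells = list(cells)
        self.mapping: Dict[str, str] = {}

    def rebalance(self, live_nodes: Iterable[str]) -> Dict[str, str]:
        """Recompute the mapping for whatever nodes are alive right now."""
        self.mapping = assign(self.cells, sorted(live_nodes))
        return self.mapping


meta = ExternalMetadata(f"c{i}" for i in range(6))
before = dict(meta.rebalance({"n1", "n2", "n3"}))

# Node n2 fails: its cells are handed to the survivors without a restart.
after = dict(meta.rebalance({"n1", "n3"}))
```

In a stateful design the mapping would sit on the nodes themselves, so the second `rebalance` call would have nothing authoritative to work from once `n2` is gone.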
