最近想试试国产数据库,选了OceanBase。主页上看到最新的社区版本是4.0,于是按照教程进行了安装。标准的1+3,一个监控节点,3个数据库节点。安装完毕以后,用root用户,在test数据库上创建了一些表,但是没有插入数据。

这时候,想看一下数据库节点的性能,就发现各个数据库节点的CPU比较高,30%左右,就是observer这个一个进程。过了一个晚上,第二天看,依然是比较高。看看有什么办法能找出来这个问题么?

机器配置:8C 16G 100G磁盘

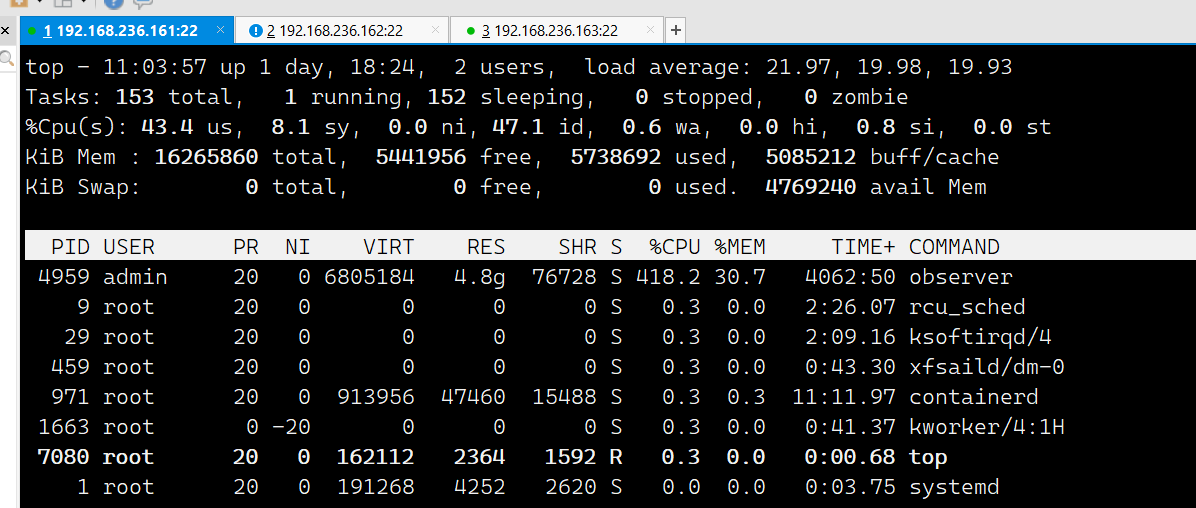

第一台机器,CPU 42%

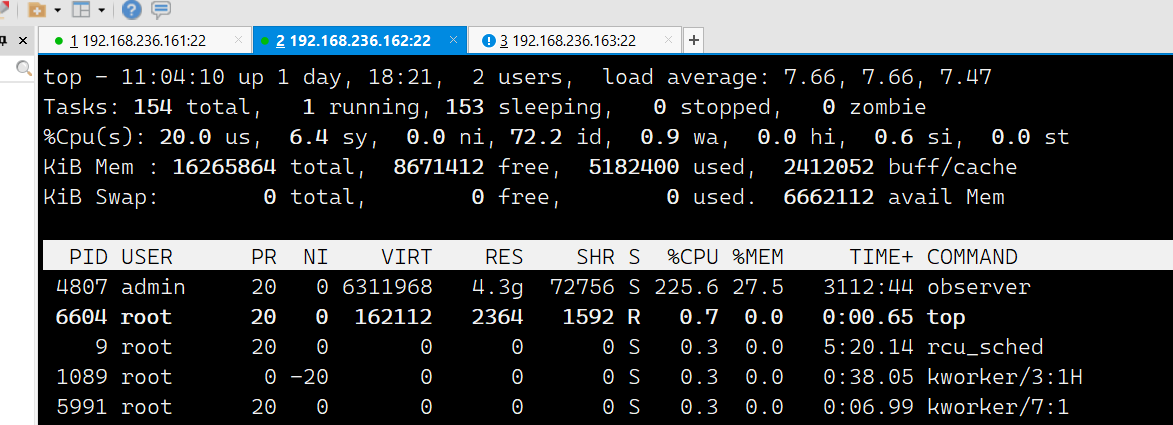

第二台机器,CPU 21%

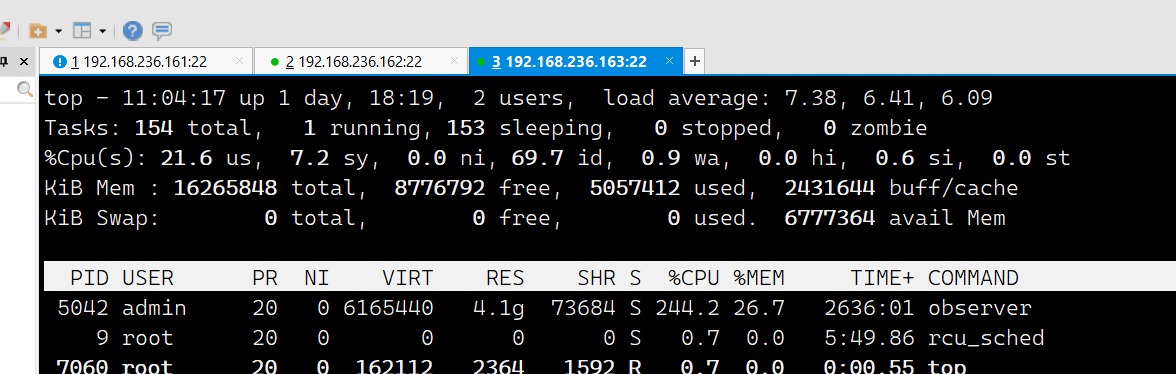

第三台机器,CPU 22%

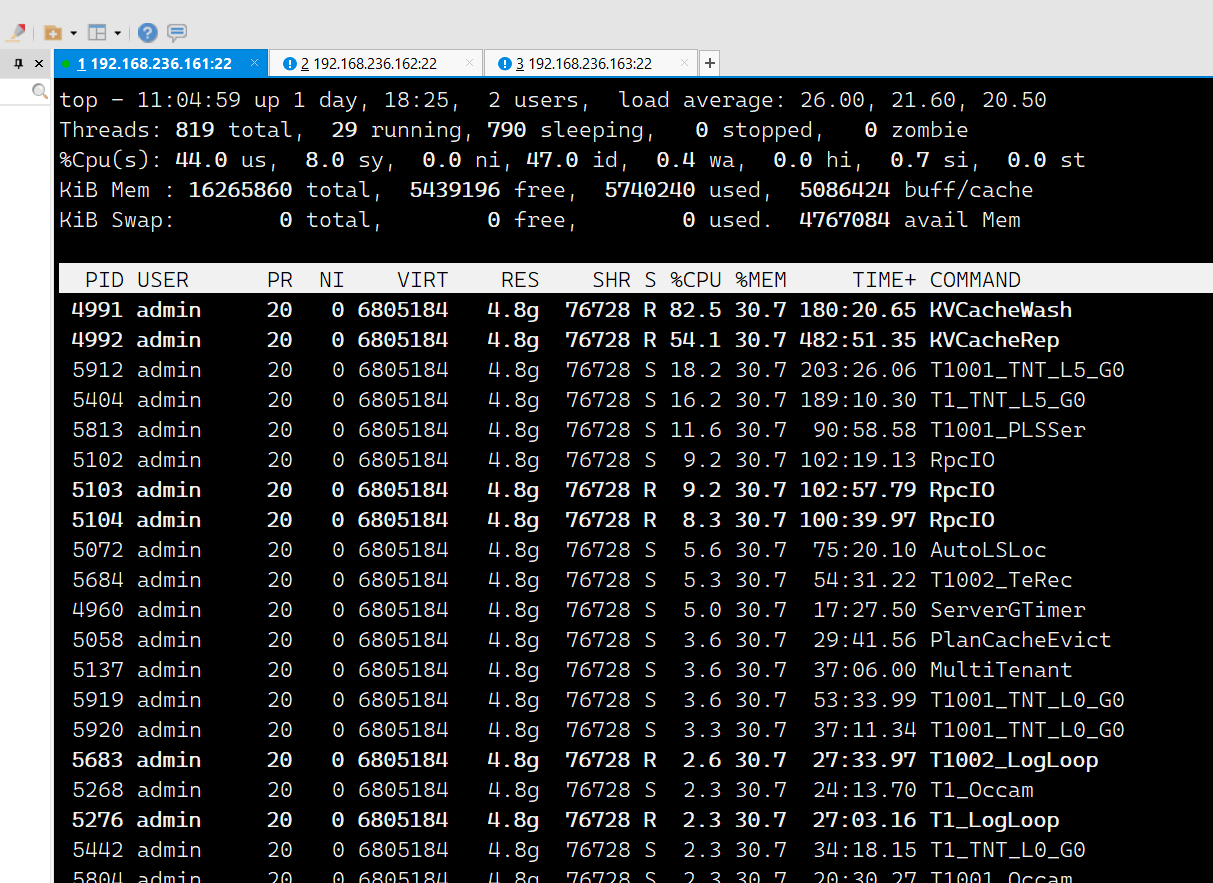

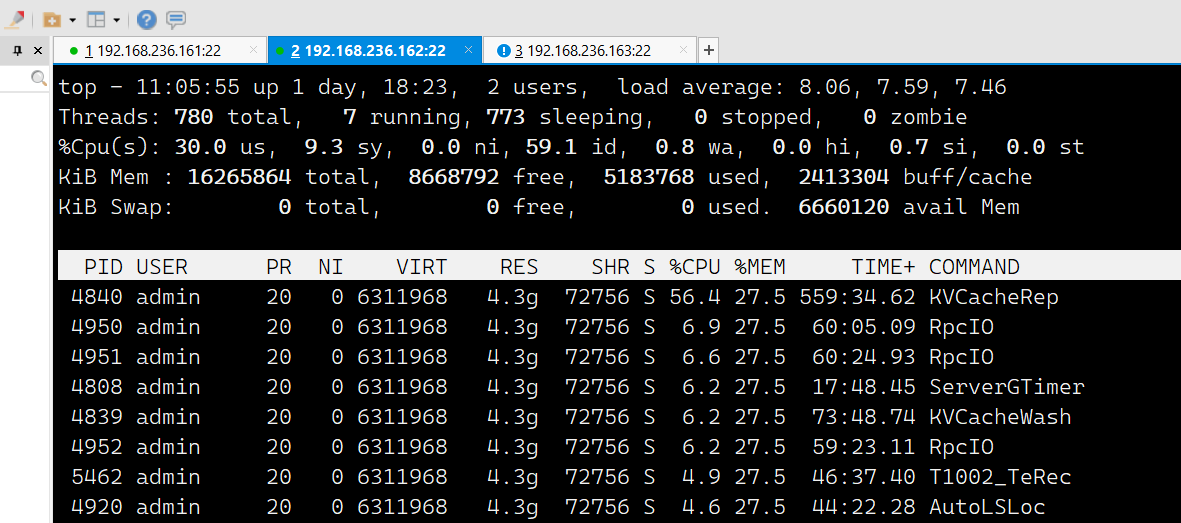

第一台机器 top 看进程

第二台机器看进程

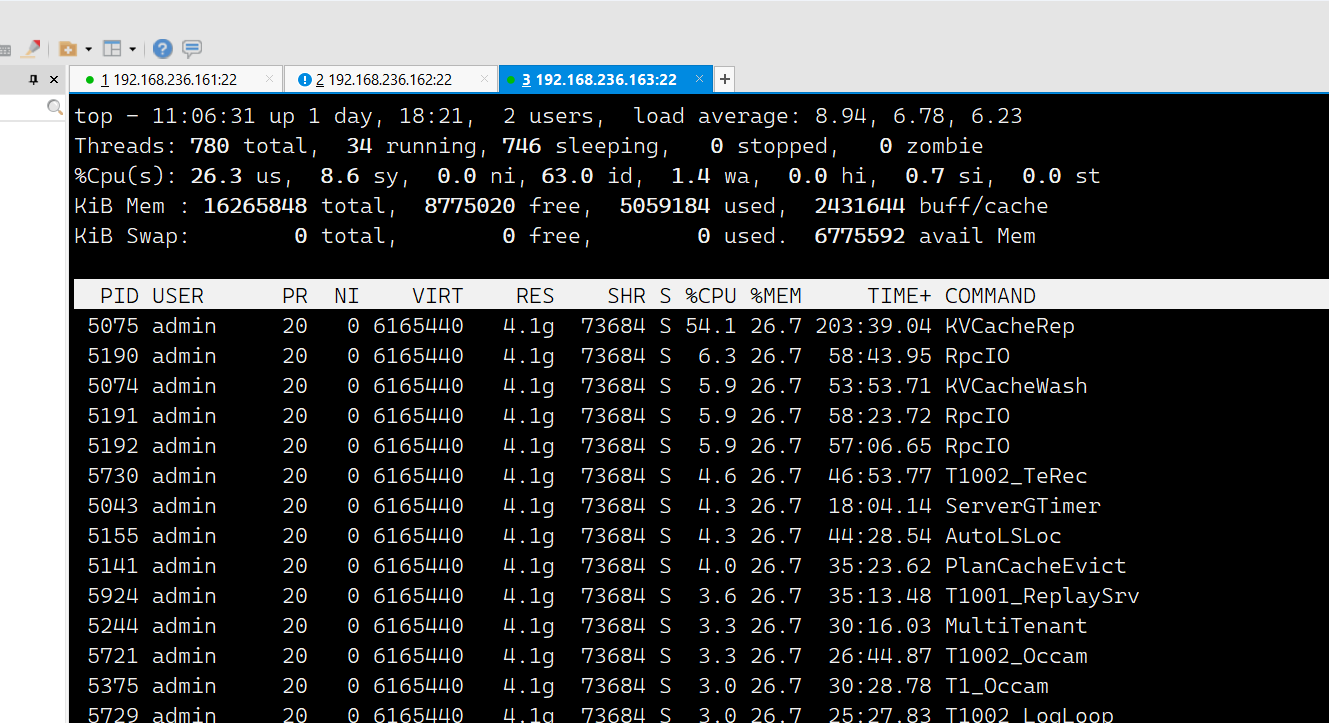

第三台机器看线程

这是第一台机器上面的observer.log 其中的一段

[2023-03-08 10:51:54.345705] WARN [LIB] ~ObTimeGuard (utility.h:853) [5383][T1_TenantWeakRe][T1][Y0-0000000000000000-0-0] [lt=34] destruct(*this=time guard '[WRS] [TENANT_WEAK_READ_SERVICE] tenant_id=1, thread_timer_task' cost too much time, use

d=17255, time_dist: do_cluster_heartbeat=23, generate_cluster_version=17133, cluster_service_self_check=11)

[2023-03-08 10:51:54.397474] WARN [ARCHIVE] persist_archive_progress_ (ob_archive_persist_mgr.cpp:317) [5776][T1002_ArcSrv][T1002][YB42C0A8ECA1-0005F6496F293086-0-0] [lt=49] load archive round attr failed(ret=-4018, attr={key:{tenant_id:0, dest_no:-1}, incarnation:1, dest_id:0, round_id:0, state:{status:"INVALID"}, start_scn:0, checkpoint_scn:0, max_scn:0, compatible:{version:1}, base_piece_id:0, used_piece_id:0, piece_switch_interval:0, frozen_input_bytes:0, frozen_output_bytes:0, active_input_bytes:0, active_output_bytes:0, deleted_input_bytes:0, deleted_output_bytes:0, path:"", comment:""})

[2023-03-08 10:51:54.406036] WARN [LIB] ~ObTimeGuard (utility.h:853) [5895][T1001_TenantWea][T1001][Y0-0000000000000000-0-0] [lt=65] destruct(*this=time guard '[WRS] [TENANT_WEAK_READ_SERVICE] tenant_id=1001, thread_timer_task' cost too much time, used=13415, time_dist: do_cluster_heartbeat=20, generate_cluster_version=10819)

[2023-03-08 10:51:54.459906] WARN [LIB] ~ObTimeGuard (utility.h:853) [4991][KVCacheWash][T0][Y0-0000000000000000-0-0] [lt=45] destruct(*this=time guard 'clean_garbage_node' cost too much time, used=140810)

[2023-03-08 10:51:54.469176] WARN [PALF] inner_append_log (palf_handle_impl.cpp:1701) [5809][T1001_IOWorker][T1001][Y0-0000000000000000-0-0] [lt=55] write log cost too much time(ret=0, this={palf_id:1, self:"192.168.236.161:2882", has_set_deleted:false}, lsn_array=[{lsn:1886175751}], log_ts_array=[1678243914451924325], time_cost=16829)

[2023-03-08 10:51:54.543255] WARN [LIB] ~ObTimeGuard (utility.h:853) [5383][T1_TenantWeakRe][T1][Y0-0000000000000000-0-0] [lt=174] destruct(*this=time guard '[WRS] [TENANT_WEAK_READ_SERVICE] tenant_id=1, thread_timer_task' cost too much time, used=11398, time_dist: do_cluster_heartbeat=351, generate_cluster_version=7727)

[2023-03-08 10:51:54.544242] WARN [CLOG] do_thread_task_ (ob_remote_fetch_log_worker.cpp:181) [5753][T1002_RFLWorker][T1002][YB42C0A8ECA1-0005F6496E993086-0-0] [lt=51] no task exist, just skip(ret=-4018)

[2023-03-08 10:51:54.603782] TRACE [TRACE] print_trace_log_if_necessary_ (ob_trans_ctx.cpp:139) [5877][T1001_ApplySrv0][T1001][Y0-0000000000000000-0-0] [lt=91] [trans sampling] (TRACE=begin_ts=1678243914595460 2023-03-08 02:51:54.595460|[init] u=0 addr:0x7fb4f4f54d90, id:1, trans_id:{txid:3182593}, ctx_ref:0, opid:1575293|[start_trans] u=8 ret:0, left_time:29999358, ctx_ref:3, opid:1575294|[start_access] u=5 ret:0, trace_id:"YB42C0A8ECA1-0005F649747EFD65-0-0", opid:2, data_seq:1678243914594857, pending:1, ctx_ref:3, thread_id:783|[end_access] u=372 opid:2, pending:0, ctx_ref:3, thread_id:783|[set_stc] u=170 stc:1678243914596003, opid:1575298|[after_submit_log] u=1096 ret:0, log_no:0, t:1678243914596022584, lsn:{lsn:1886181374}|[commit] u=5 ret:0, thread_id:5895, ctx_ref:4, opid:1575298|[log_sync_succ_cb] u=6511 ret:0, log_type:0x1, t:1678243914596022584, offset:{lsn:1886181374}, ctx_ref:3|[log_sync_succ_cb] u=3 ret:0, log_type:0x40, t:1678243914596022584, offset:{lsn:1886181374}, ctx_ref:3|[response_scheduler] u=123 ret:0, tag1:false, tag2:false, status:0, commit_version:1678243914596022584|total_timeu=8293)

[2023-03-08 10:51:54.603993] TRACE [TRACE] print_trace_log_if_necessary_ (ob_trans_ctx.cpp:139) [5877][T1001_ApplySrv0][T1001][Y0-0000000000000000-0-0] [lt=141] [trans sampling] (TRACE=begin_ts=1678243914595460 2023-03-08 02:51:54.595460|[init] u=0 addr:0x7fb4f4f54d90, id:1, trans_id:{txid:3182593}, ctx_ref:0, opid:1575293|[start_trans] u=8 ret:0, left_time:29999358, ctx_ref:3, opid:1575294|[start_access] u=5 ret:0, trace_id:"YB42C0A8ECA1-0005F649747EFD65-0-0", opid:2, data_seq:1678243914594857, pending:1, ctx_ref:3, thread_id:783|[end_access] u=372 opid:2, pending:0, ctx_ref:3, thread_id:783|[set_stc] u=170 stc:1678243914596003, opid:1575298|[after_submit_log] u=1096 ret:0, log_no:0, t:1678243914596022584, lsn:{lsn:1886181374}|[commit] u=5 ret:0, thread_id:5895, ctx_ref:4, opid:1575298|[log_sync_succ_cb] u=6511 ret:0, log_type:0x1, t:1678243914596022584, offset:{lsn:1886181374}, ctx_ref:3|[log_sync_succ_cb] u=3 ret:0, log_type:0x40, t:1678243914596022584, offset:{lsn:1886181374}, ctx_ref:3|[response_scheduler] u=123 ret:0, tag1:false, tag2:false, status:0, commit_version:1678243914596022584|[exiting] u=149 ctx_ref:2, arg1:1, opid:1575300|[log_sync_succ_cb] u=4 ret:0, log_type:0x100, t:1678243914596022584, offset:{lsn:1886181374}, ctx_ref:1|[end_trans_cb] u=80 ret:0, arg1:0, arg2:1678243914596022584, async:false, opid:1575301|[destroy] u=3 opid:1575301|total_timeu=8529)

[2023-03-08 10:51:54.606029] WARN [LIB] ~ObTimeGuard (utility.h:853) [5895][T1001_TenantWea][T1001][Y0-0000000000000000-0-0] [lt=70] destruct(*this=time guard '[WRS] [TENANT_WEAK_READ_SERVICE] tenant_id=1001, thread_timer_task' cost too much time, used=12695, time_dist: generate_cluster_version=10790, cluster_service_self_check=11)

[2023-03-08 10:51:54.724089] WARN [PALF] sliding_cb (log_sliding_window.cpp:1853) [5928][T1002_TNT_L0_G1][T1002][YB42C0A8ECA2-0005F64971C28E87-0-0] [lt=66] log_task life cost too much time(palf_id=1002, self="192.168.236.161:2882", log_id=497380, log_task={header:{begin_lsn:{lsn:60569932}, end_lsn:{lsn:18446744073709551615}, log_id:497380, min_log_ts:1678243914575520958, max_log_ts:1678243914575520958, data_len:66, proposal_id:1, prev_lsn:{lsn:60569810}, prev_proposal_id:1, committed_end_lsn:{lsn:60569932}, data_checksum:1715074492, accum_checksum:238684987, is_padding_log:false, is_raw_write:false}, state_map:{val:58}, ref_cnt:0, gen_ts:1678243914622516, submit_wait_time:11, submit_ts:1678243914622527, flush_cost:6793, flushed_ts:1678243914629320}, fs_cb_begin_ts=1678243914724064, log_life_time=101548)

[2023-03-08 10:51:54.742652] WARN [LIB] ~ObTimeGuard (utility.h:853) [5383][T1_TenantWeakRe][T1][Y0-0000000000000000-0-0] [lt=63] destruct(*this=time guard '[WRS] [TENANT_WEAK_READ_SERVICE] tenant_id=1, thread_timer_task' cost too much time, used=10442, time_dist: do_cluster_heartbeat=206, generate_cluster_version=7318)

[2023-03-08 10:51:54.778483] WARN [LIB] ~ObTimeGuard (utility.h:853) [4991][KVCacheWash][T0][Y0-0000000000000000-0-0] [lt=45] destruct(*this=time guard 'clean_garbage_node' cost too much time, used=117631)

[2023-03-08 10:51:54.807463] WARN [LIB] ~ObTimeGuard (utility.h:853) [5895][T1001_TenantWea][T1001][Y0-0000000000000000-0-0] [lt=41] destruct(*this=time guard '[WRS] [TENANT_WEAK_READ_SERVICE] tenant_id=1001, thread_timer_task' cost too much time, used=13745, time_dist: do_cluster_heartbeat=17, generate_cluster_version=13613)

[2023-03-08 10:51:55.002050] WARN [PALF] inner_append_log (palf_handle_impl.cpp:1701) [5809][T1001_IOWorker][T1001][Y0-0000000000000000-0-0] [lt=147] write log cost too much time(ret=0, this={palf_id:1, self:"192.168.236.161:2882", has_set_deleted:false}, lsn_array=[{lsn:1886190630}], log_ts_array=[1678243914988219607], time_cost=13214)

[2023-03-08 10:51:55.003071] WARN [LIB] ~ObTimeGuard (utility.h:853) [5767][T1002_TenantWea][T1002][Y0-0000000000000000-0-0] [lt=50] destruct(*this=time guard '[WRS] [TENANT_WEAK_READ_SERVICE] tenant_id=1002, thread_timer_task' cost too much time, used=16796, time_dist: do_cluster_heartbeat=14, generate_cluster_version=16608)

[2023-03-08 10:51:55.007083] WARN [LIB] ~ObTimeGuard (utility.h:853) [5895][T1001_TenantWea][T1001][Y0-0000000000000000-0-0] [lt=51] destruct(*this=time guard '[WRS] [TENANT_WEAK_READ_SERVICE] tenant_id=1001, thread_timer_task' cost too much time, used=12750, time_dist: do_cluster_heartbeat=19, generate_cluster_version=12646)

[2023-03-08 10:51:55.097587] WARN [LIB] ~ObTimeGuard (utility.h:853) [4991][KVCacheWash][T0][Y0-0000000000000000-0-0] [lt=45] destruct(*this=time guard 'clean_garbage_node' cost too much time, used=118347)

[2023-03-08 10:51:55.198375] WARN [LIB] ~ObTimeGuard (utility.h:853) [5767][T1002_TenantWea][T1002][Y0-0000000000000000-0-0] [lt=47] destruct(*this=time guard '[WRS] [TENANT_WEAK_READ_SERVICE] tenant_id=1002, thread_timer_task' cost too much time, used=11790, time_dist: do_cluster_heartbeat=18, generate_cluster_version=10325)

[2023-03-08 10:51:55.201930] WARN [PALF] sliding_cb (log_sliding_window.cpp:1853) [5928][T1002_TNT_L0_G1][T1002][YB42C0A8ECA3-0005F64972EE874D-0-0] [lt=54] log_task life cost too much time(palf_id=1003, self="192.168.236.161:2882", log_id=513068, log_task={header:{begin_lsn:{lsn:62480160}, end_lsn:{lsn:18446744073709551615}, log_id:513068, min_log_ts:1678243915046046881, max_log_ts:1678243915046046881, data_len:66, proposal_id:1, prev_lsn:{lsn:62480038}, prev_proposal_id:1, committed_end_lsn:{lsn:62480160}, data_checksum:940009244, accum_checksum:2219132794, is_padding_log:false, is_raw_write:false}, state_map:{val:58}, ref_cnt:0, gen_ts:1678243915101271, submit_wait_time:15, submit_ts:1678243915101286, flush_cost:10285, flushed_ts:1678243915111571}, fs_cb_begin_ts=1678243915201903, log_life_time=100632)

[2023-03-08 10:51:55.224348] WARN [PALF] sliding_cb (log_sliding_window.cpp:1853) [5928][T1002_TNT_L0_G1][T1002][YB42C0A8ECA2-0005F64971C28E8F-0-0] [lt=72] log_task life cost too much time(palf_id=1002, self="192.168.236.161:2882", log_id=497384, log_task={header:{begin_lsn:{lsn:60570420}, end_lsn:{lsn:18446744073709551615}, log_id:497384, min_log_ts:1678243915083379611, max_log_ts:1678243915083379611, data_len:66, proposal_id:1, prev_lsn:{lsn:60570298}, prev_proposal_id:1, committed_end_lsn:{lsn:60570420}, data_checksum:1715074492, accum_checksum:2316533938, is_padding_log:false, is_raw_write:false}, state_map:{val:58}, ref_cnt:0, gen_ts:1678243915123530, submit_wait_time:13, submit_ts:1678243915123543, flush_cost:1703, flushed_ts:1678243915125246}, fs_cb_begin_ts=1678243915224313, log_life_time=100783)

[2023-03-08 10:51:55.305745] WARN [PALF] inner_append_log (palf_handle_impl.cpp:1701) [5273][T1_IOWorker][T1][Y0-0000000000000000-0-0] [lt=178] write log cost too much time(ret=0, this={palf_id:1, self:"192.168.236.161:2882", has_set_deleted:false}, lsn_array=[{lsn:782904043}], log_ts_array=[1678243915280973573], time_cost=21781)

[2023-03-08 10:51:55.306014] WARN [PALF] inner_append_log (palf_handle_impl.cpp:1701) [5680][T1002_IOWorker][T1002][Y0-0000000000000000-0-0] [lt=622] write log cost too much time(ret=0, this={palf_id:1, self:"192.168.236.161:2882", has_set_deleted:false}, lsn_array=[{lsn:94510927}], log_ts_array=[1678243915217593248], time_cost=23431)

[2023-03-08 10:51:55.306206] WARN [PALF] inner_append_log (palf_handle_impl.cpp:1701) [5809][T1001_IOWorker][T1001][Y0-0000000000000000-0-0] [lt=63] write log cost too much time(ret=0, this={palf_id:1, self:"192.168.236.161:2882", has_set_deleted:false}, lsn_array=[{lsn:1886199989}], log_ts_array=[1678243915274246772], time_cost=25921)

评论

评论 有用 0

有用 0你好,KVcache和RpcIO线程一般意味着有sql访问,空闲情况不应该这么活跃。

麻烦用gv$ob_sql_audit视图统计下sql执行情况,把结果贴出来我们分析一下。

root用户连接第一个observer,先确保开启了sql统计:

alter system set enable_perf_event = true;

alter system set enable_sql_audit = true;

show parameters like "%enable_sql_audit%";

等待2分钟后,查询视图:

select sql_id, user_name,substr(query_sql,1,200), count(1) cnt from gv$ob_sql_audit where tenant_id = 1 group by sql_id order by cnt desc limit 20;

select count(1) cnt , avg(elapsed_time) as cost , sum(elapsed_time), query_sql from gv$ob_sql_audit where query_sql != '' group by sql_id order by cost*cnt desc limit 20;

评论

评论 有用 1

有用 1感谢!!!

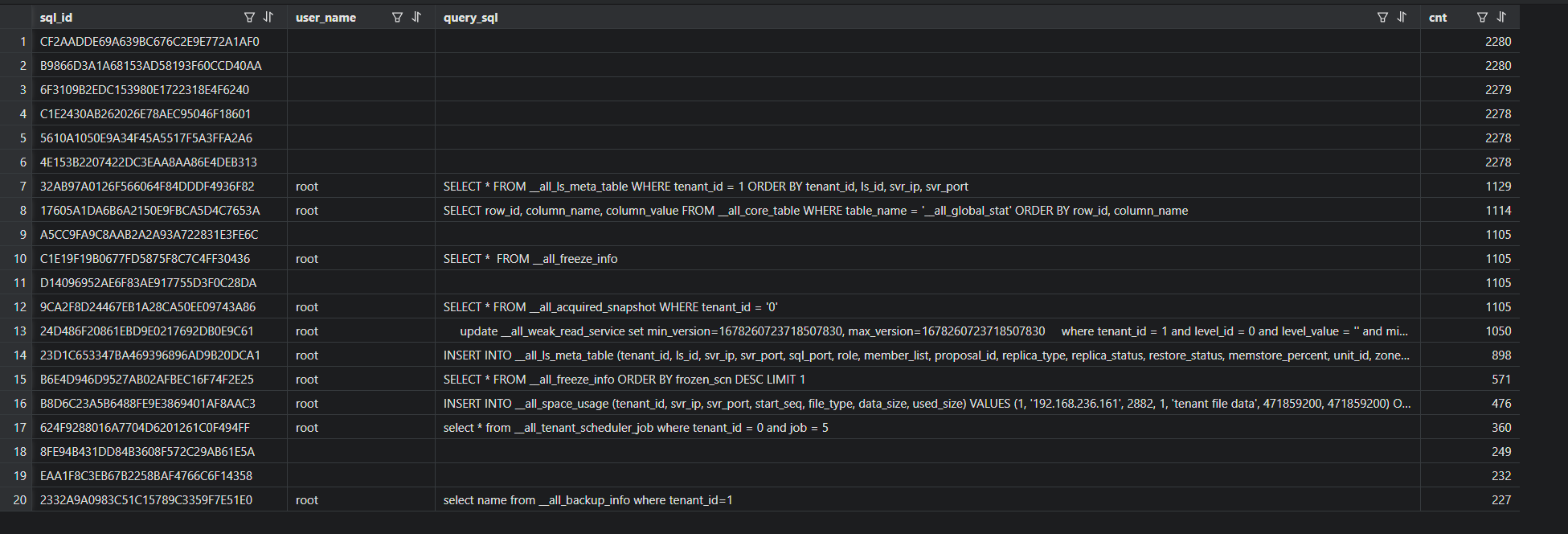

第一个SQL结果

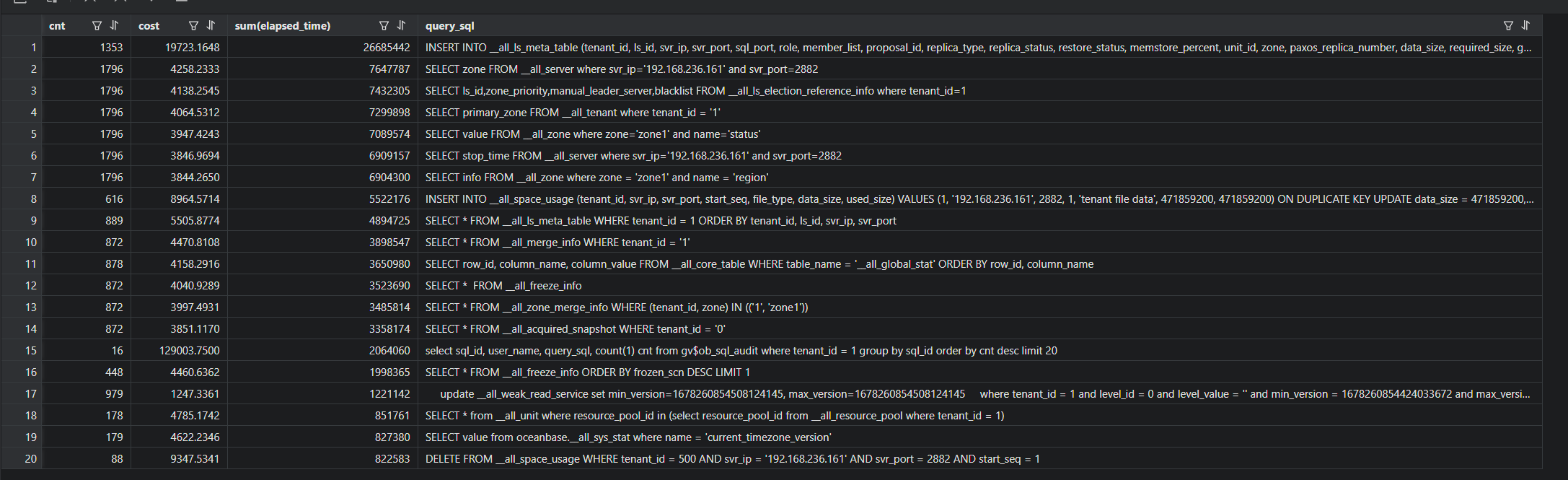

第二个SQL结果

评论

评论 有用 0

有用 0 墨值悬赏

墨值悬赏