Original article: https://dzone.com/articles/cockroachdb-performance-characteristics-with-ycsba

Original author: Artem Ervits

Today I'll discuss CockroachDB and the very popular YCSB benchmark suite by running workload A from the YCSB suite against CockroachDB.

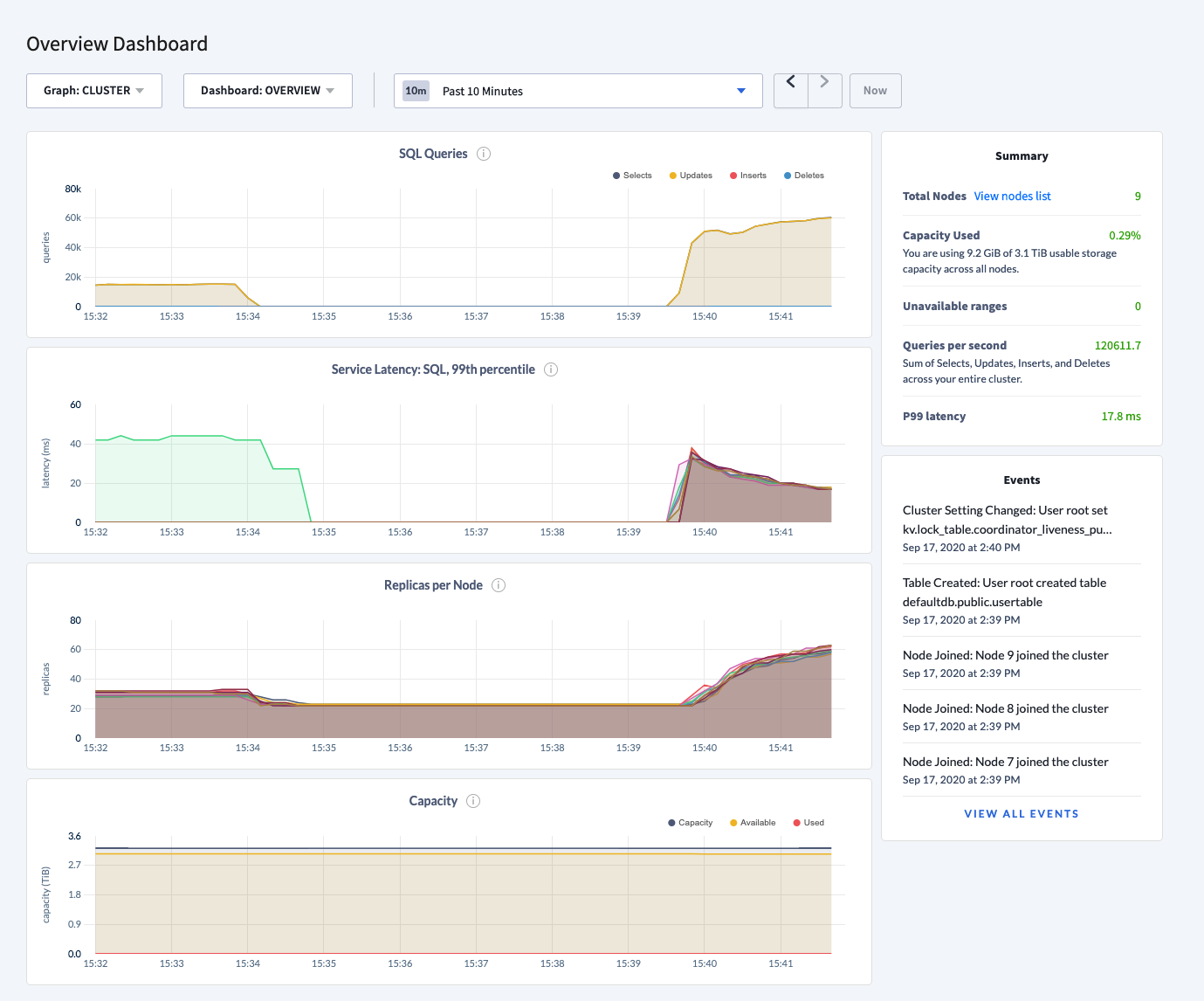

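As of version 20.1, CockroachDB still uses RocksDB as its storage engine. RocksDB is a key-value store and a foundational part of the CockroachDB architecture.

YCSB is an industry-standard benchmark for evaluating the performance of a wide range of databases and key-value stores. YCSB workloada is a 50/50 mix of updates and reads. We will use workloada to test CockroachDB on a single-region, 9-node AWS cluster.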
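To picture what a 50/50 mix means at the operation level, here is a small hypothetical Python simulation. It is not YCSB's Java implementation. The function names are made up, and the only property taken from workload A is the 0.5 read proportion, with updates making up the remainder. The run results later in the article show the same shape: for example, Client 1 on 20.1 reports 3,425,678 reads and 3,421,273 updates.

```python
import random
from collections import Counter

# Simplified model of YCSB workload A (an assumption, not YCSB's code):
# each operation is a read with probability 0.5, otherwise an update.
READ_PROPORTION = 0.5


def next_operation(rng: random.Random) -> str:
    """Pick the next operation type for a 50/50 read/update mix."""
    return "READ" if rng.random() < READ_PROPORTION else "UPDATE"


def simulate(num_ops: int, seed: int = 1) -> Counter:
    """Count how many reads and updates a run of num_ops operations issues."""
    rng = random.Random(seed)
    return Counter(next_operation(rng) for _ in range(num_ops))


if __name__ == "__main__":
    counts = simulate(100_000)
    print("reads:", counts["READ"], "updates:", counts["UPDATE"])
```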

We'll look at the performance of RocksDB in 20.1. We'll also look at Pebble, the RocksDB replacement being introduced in 20.2.

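Some background: in a POC I ran recently, a prospective customer set success criteria based on internal benchmarks of their standalone database engine. Their NoSQL database had excellent performance characteristics, for example 60-100k QPS across 9 nodes in a single AWS region. In their setup, a YCSB client ran on every node of the cluster, and the machine type was i3.2xlarge. CockroachDB favors CPU over RAM, so we chose c5d.4xlarge to double the CPU count. I used CockroachDB 20.1.5 for the tests.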

Deploying CockroachDB on AWS

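I won't go into the details of deploying CockroachDB on AWS here; just follow the documentation. All you need to know is the following:

- The machine type is c5d.4xlarge.
- There are 9 CockroachDB nodes in a single region.
- 3 separate client nodes run the YCSB workload, so that the CockroachDB nodes get all available resources.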

Once the cluster is up, connect to it and create the YCSB table:

```sql
CREATE TABLE usertable (
    ycsb_key VARCHAR(255) PRIMARY KEY NOT NULL,
    FIELD0 TEXT NOT NULL,
    FIELD1 TEXT NOT NULL,
    FIELD2 TEXT NOT NULL,
    FIELD3 TEXT NOT NULL,
    FIELD4 TEXT NOT NULL,
    FIELD5 TEXT NOT NULL,
    FIELD6 TEXT NOT NULL,
    FIELD7 TEXT NOT NULL,
    FIELD8 TEXT NOT NULL,
    FIELD9 TEXT NOT NULL,
    FAMILY (ycsb_key),
    FAMILY (FIELD0),
    FAMILY (FIELD1),
    FAMILY (FIELD2),
    FAMILY (FIELD3),
    FAMILY (FIELD4),
    FAMILY (FIELD5),
    FAMILY (FIELD6),
    FAMILY (FIELD7),
    FAMILY (FIELD8),
    FAMILY (FIELD9)
);
```
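The table puts the key and each of the ten fields into its own column family. My reading, which the article does not spell out, is that this helps workload A. In CockroachDB, each column family is stored as a separate key-value pair, and YCSB updates a single field by default, so an update rewrites one small pair rather than the whole row. If you change YCSB's field count, a hypothetical generator like the one below keeps the DDL consistent. The helper name and its `num_fields` parameter are illustrative and not part of YCSB.

```python
# Hypothetical helper: build the usertable DDL with the key and every
# FIELDn column in its own column family (one family per column).
def build_usertable_ddl(num_fields: int = 10) -> str:
    fields = [f"FIELD{i}" for i in range(num_fields)]
    columns = ["ycsb_key VARCHAR(255) PRIMARY KEY NOT NULL"]
    columns += [f"{name} TEXT NOT NULL" for name in fields]
    families = [f"FAMILY ({name})" for name in ["ycsb_key"] + fields]
    body = ",\n    ".join(columns + families)
    return f"CREATE TABLE usertable (\n    {body}\n);"


if __name__ == "__main__":
    print(build_usertable_ddl())
```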

Change the lock delay for detecting coordinator failures of conflicting transactions

This step is needed only when using the Zipfian distribution, and only when the client runs more than 128 threads. Otherwise, skip it.

```sql
SET CLUSTER SETTING kv.lock_table.coordinator_liveness_push_delay = '50ms';
```

This setting is not marked as public API and is not documented; it is defined in the CockroachDB source code.

Configure YCSB

Deploy the YCSB suite on the client machines that do not run CockroachDB, that is, the three external client nodes.

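SSH into the hosts, which in my case are nodes 10, 11, and 12. On each one we will:

- download the YCSB suite;
- set an environment variable;
- download the PostgreSQL driver;
- install the prerequisite packages.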

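```shell
# Download and unpack the YCSB JDBC binding (run from the home directory,
# so that the path in the export below matches).
curl --location https://github.com/brianfrankcooper/YCSB/releases/download/0.17.0/ycsb-jdbc-binding-0.17.0.tar.gz | gzip -dc - | tar -xvf -
export YCSB=~/ycsb-jdbc-binding-0.17.0

# Put the PostgreSQL JDBC driver on YCSB's classpath.
cd $YCSB/lib
curl -O --location https://jdbc.postgresql.org/download/postgresql-42.2.12.jar

# YCSB needs a JRE, and its bin/ycsb launcher is a Python script.
sudo apt-get update && sudo apt-get install -y default-jre python

# The bin/ycsb commands below are run from the YCSB root directory.
cd $YCSB
```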

Repeat the steps above on nodes 11 and 12.

Warm Up the Leaseholders

In CockroachDB terminology, leaseholders are the replicas responsible for coordinating reads and writes.

Construct a JDBC URL from the IPs of every node in the cluster. Below is my IP list; ignore the last three, which are the client nodes.

10.12.20.65 10.12.29.101 10.12.17.181 10.12.21.30 10.12.23.9 10.12.16.241 10.12.21.44 10.12.17.10 10.12.21.101 10.12.31.155 10.12.31.110 10.12.16.177
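Typing nine `host:26257` pairs by hand is error-prone. The author later notes that a mistake in the IPs skewed one run. Below is a hypothetical Python helper that builds the multi-host URL from the IP list. It assumes the last three addresses are the client nodes, and it reuses the connection parameters from the commands in this article. `build_jdbc_url` is an illustrative name, not part of YCSB or the PostgreSQL driver.

```python
# Hypothetical helper (not part of YCSB): build the multi-host PostgreSQL
# JDBC URL used by the YCSB commands from the space-separated IP list.
# Assumes the last num_client_nodes addresses are YCSB client machines.
NUM_CLIENT_NODES = 3
PORT = 26257
PARAMS = ("autoReconnect=true&sslmode=disable&ssl=false"
          "&reWriteBatchedInserts=true&loadBalanceHosts=true")


def build_jdbc_url(ip_list: str, num_client_nodes: int = NUM_CLIENT_NODES,
                   database: str = "defaultdb") -> str:
    ips = ip_list.split()
    db_nodes = ips[:len(ips) - num_client_nodes]  # drop the trailing client nodes
    hosts = ",".join(f"{ip}:{PORT}" for ip in db_nodes)
    return f"jdbc:postgresql://{hosts}/{database}?{PARAMS}"


if __name__ == "__main__":
    ips = ("10.12.20.65 10.12.29.101 10.12.17.181 10.12.21.30 10.12.23.9 "
           "10.12.16.241 10.12.21.44 10.12.17.10 10.12.21.101 "
           "10.12.31.155 10.12.31.110 10.12.16.177")
    print(build_jdbc_url(ips))
```

The result can be pasted into the `db.url` property of the YCSB commands.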

With the node IPs, we can build the command for the load phase of the workload:

```shell
bin/ycsb load jdbc -s -P workloads/workloada \
  -p db.driver=org.postgresql.Driver \
  -p db.url="jdbc:postgresql://10.12.20.65:26257,10.12.29.101:26257,10.12.17.181:26257,10.12.21.30:26257,10.12.23.9:26257,10.12.16.241:26257,10.12.21.44:26257,10.12.17.10:26257,10.12.21.101:26257/defaultdb?autoReconnect=true&sslmode=disable&ssl=false&reWriteBatchedInserts=true&loadBalanceHosts=true" \
  -p db.user=root -p db.passwd="" \
  -p jdbc.fetchsize=10 -p jdbc.autocommit=true -p jdbc.batchupdateapi=true \
  -p db.batchsize=128 -p recordcount=1000000 -p threadcount=32 -p operationcount=10000000
```

Run it on one of the client nodes:

```text
2020-08-24 14:55:41:431 10 sec: 447968 operations; 44796.8 current ops/sec; est completion in 13 seconds [INSERT: Count=447968, Max=223871, Min=3, Avg=698.46, 90=3, 99=99, 99.9=110975, 99.99=177535]
2020-08-24 14:55:51:431 20 sec: 910944 operations; 46297.6 current ops/sec; est completion in 2 second [INSERT: Count=462976, Max=166143, Min=3, Avg=692.56, 90=3, 99=12, 99.9=107071, 99.99=133503]
2020-08-24 14:55:53:594 22 sec: 1000000 operations; 41172.45 current ops/sec; [CLEANUP: Count=32, Max=95871, Min=4336, Avg=33047.88, 90=76479, 99=95871, 99.9=95871, 99.99=95871] [INSERT: Count=89056, Max=139135, Min=3, Avg=658.79, 90=3, 99=12, 99.9=98623, 99.99=120127]
[OVERALL], RunTime(ms), 22163
[OVERALL], Throughput(ops/sec), 45120.24545413527
[TOTAL_GCS_PS_Scavenge], Count, 15
[TOTAL_GC_TIME_PS_Scavenge], Time(ms), 106
[TOTAL_GC_TIME_%_PS_Scavenge], Time(%), 0.4782746018138339
[TOTAL_GCS_PS_MarkSweep], Count, 0
[TOTAL_GC_TIME_PS_MarkSweep], Time(ms), 0
[TOTAL_GC_TIME_%_PS_MarkSweep], Time(%), 0.0
[TOTAL_GCs], Count, 15
[TOTAL_GC_TIME], Time(ms), 106
[TOTAL_GC_TIME_%], Time(%), 0.4782746018138339
[CLEANUP], Operations, 32
[CLEANUP], AverageLatency(us), 33047.875
[CLEANUP], MinLatency(us), 4336
[CLEANUP], MaxLatency(us), 95871
[CLEANUP], 95thPercentileLatency(us), 86335
[CLEANUP], 99thPercentileLatency(us), 95871
[INSERT], Operations, 1000000
[INSERT], AverageLatency(us), 692.195737
[INSERT], MinLatency(us), 3
[INSERT], MaxLatency(us), 223871
[INSERT], 95thPercentileLatency(us), 5
[INSERT], 99thPercentileLatency(us), 66
[INSERT], Return=OK, 7808
[INSERT], Return=BATCHED_OK, 992192
```
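As a quick sanity check on the load summary, the reported throughput equals the number of inserted records divided by the run time. The two `Return` counters also add up to the 1,000,000 records loaded. The constants below are copied from the output; the script itself is only an illustrative cross-check.

```python
# Cross-check of the load-phase summary; constants copied from the YCSB output.
RUNTIME_MS = 22163
INSERT_OPS = 1_000_000
RETURN_OK = 7808
RETURN_BATCHED_OK = 992192

throughput = INSERT_OPS * 1000 / RUNTIME_MS  # operations per second
print(f"{throughput:.2f} ops/sec")  # prints 45120.25 ops/sec
print("all inserts accounted for:", RETURN_OK + RETURN_BATCHED_OK == INSERT_OPS)
```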

Benchmark

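My earlier tests showed that running the workload from several clients gives better results than running it from a single client. Testing with different thread counts (the threadcount property) also showed that 128 threads gave the best performance, with diminishing returns beyond that.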

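Another thing to note is that YCSB uses the Zipfian distribution by default. This makes it perform worse than it does with the uniform distribution. (Translator's note: for the difference between the two distributions, see the Stack Overflow question "benchmarking - Zipfian vs Uniform - What’s the difference between these two YCSB distribution?".)

For brevity, I'll focus only on the uniform distribution. With all of the above in mind, run the following command on all available clients as the transaction phase of the workload: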

```shell
bin/ycsb run jdbc -s -P workloads/workloada \
  -p db.driver=org.postgresql.Driver \
  -p db.url="jdbc:postgresql://10.12.20.65:26257,10.12.29.101:26257,10.12.17.181:26257,10.12.21.30:26257,10.12.23.9:26257,10.12.16.241:26257,10.12.21.44:26257,10.12.17.10:26257,10.12.21.101:26257/defaultdb?autoReconnect=true&sslmode=disable&ssl=false&reWriteBatchedInserts=true&loadBalanceHosts=true" \
  -p db.user=root -p db.passwd="" -p db.batchsize=128 \
  -p jdbc.fetchsize=10 -p jdbc.autocommit=true -p jdbc.batchupdateapi=true \
  -p recordcount=1000000 -p operationcount=10000000 -p threadcount=128 \
  -p maxexecutiontime=180 -p requestdistribution=uniform
```
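The `requestdistribution=uniform` setting matters because a Zipfian distribution sends most requests to a small set of hot keys. A plausible reason for the lower performance under Zipfian, which the article does not spell out, is that concurrent updates then contend for the same rows. The sketch below compares the share of requests that hit the hottest 1% of keys under each distribution.

Several parts of it are assumptions:

- It uses a plain Zipf law with exponent 0.99, which I believe matches YCSB's default Zipfian constant.
- YCSB's real generator differs in details. For example, it scrambles key order.

```python
# Simplified comparison of request concentration under a uniform vs. a plain
# Zipfian key distribution. This approximates, but is not, YCSB's generator.
ZIPFIAN_CONSTANT = 0.99  # assumed to match YCSB's default Zipfian exponent


def top_k_share_zipfian(num_keys: int, k: int, s: float = ZIPFIAN_CONSTANT) -> float:
    """Fraction of requests that hit the k most popular keys under Zipf(s)."""
    weights = [1.0 / (rank ** s) for rank in range(1, num_keys + 1)]
    return sum(weights[:k]) / sum(weights)


def top_k_share_uniform(num_keys: int, k: int) -> float:
    """Under a uniform distribution every key is equally likely."""
    return k / num_keys


if __name__ == "__main__":
    n = 1_000_000  # recordcount used in this article
    k = n // 100   # the hottest 1% of keys
    print(f"uniform: {top_k_share_uniform(n, k):.1%} of requests hit the top 1% of keys")
    print(f"zipfian: {top_k_share_zipfian(n, k):.1%} of requests hit the top 1% of keys")
```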

Client 1

```text
[OVERALL], RunTime(ms), 180056
[OVERALL], Throughput(ops/sec), 38026.78611098769
[TOTAL_GCS_PS_Scavenge], Count, 80
[TOTAL_GC_TIME_PS_Scavenge], Time(ms), 185
[TOTAL_GC_TIME_%_PS_Scavenge], Time(%), 0.10274581241391566
[TOTAL_GCS_PS_MarkSweep], Count, 0
[TOTAL_GC_TIME_PS_MarkSweep], Time(ms), 0
[TOTAL_GC_TIME_%_PS_MarkSweep], Time(%), 0.0
[TOTAL_GCs], Count, 80
[TOTAL_GC_TIME], Time(ms), 185
[TOTAL_GC_TIME_%], Time(%), 0.10274581241391566
[READ], Operations, 3425678
[READ], AverageLatency(us), 1402.806852541307
[READ], MinLatency(us), 307
[READ], MaxLatency(us), 347903
[READ], 95thPercentileLatency(us), 4503
[READ], 99thPercentileLatency(us), 8935
[READ], Return=OK, 3425678
[CLEANUP], Operations, 128
[CLEANUP], AverageLatency(us), 217.5234375
[CLEANUP], MinLatency(us), 27
[CLEANUP], MaxLatency(us), 1815
[CLEANUP], 95thPercentileLatency(us), 1392
[CLEANUP], 99thPercentileLatency(us), 1654
[UPDATE], Operations, 3421273
[UPDATE], AverageLatency(us), 5323.34846093837
[UPDATE], MinLatency(us), 756
[UPDATE], MaxLatency(us), 396543
[UPDATE], 95thPercentileLatency(us), 13983
[UPDATE], 99thPercentileLatency(us), 22655
[UPDATE], Return=OK, 3421273
```

Client 2

```text
[OVERALL], RunTime(ms), 180062
[OVERALL], Throughput(ops/sec), 37611.283891104176
[TOTAL_GCS_PS_Scavenge], Count, 80
[TOTAL_GC_TIME_PS_Scavenge], Time(ms), 199
[TOTAL_GC_TIME_%_PS_Scavenge], Time(%), 0.1105174884206551
[TOTAL_GCS_PS_MarkSweep], Count, 0
[TOTAL_GC_TIME_PS_MarkSweep], Time(ms), 0
[TOTAL_GC_TIME_%_PS_MarkSweep], Time(%), 0.0
[TOTAL_GCs], Count, 80
[TOTAL_GC_TIME], Time(ms), 199
[TOTAL_GC_TIME_%], Time(%), 0.1105174884206551
[READ], Operations, 3387837
[READ], AverageLatency(us), 1425.872327977999
[READ], MinLatency(us), 304
[READ], MaxLatency(us), 352255
[READ], 95thPercentileLatency(us), 4567
[READ], 99thPercentileLatency(us), 9095
[READ], Return=OK, 3387837
[CLEANUP], Operations, 128
[CLEANUP], AverageLatency(us), 486.4453125
[CLEANUP], MinLatency(us), 22
[CLEANUP], MaxLatency(us), 3223
[CLEANUP], 95thPercentileLatency(us), 2945
[CLEANUP], 99thPercentileLatency(us), 3153
[UPDATE], Operations, 3384526
[UPDATE], AverageLatency(us), 5372.31603893721
[UPDATE], MinLatency(us), 721
[UPDATE], MaxLatency(us), 394751
[UPDATE], 95thPercentileLatency(us), 14039
[UPDATE], 99thPercentileLatency(us), 22703
[UPDATE], Return=OK, 3384526
```

Client 3

```text
[OVERALL], RunTime(ms), 180044
[OVERALL], Throughput(ops/sec), 38504.49334607096
[TOTAL_GCS_PS_Scavenge], Count, 92
[TOTAL_GC_TIME_PS_Scavenge], Time(ms), 164
[TOTAL_GC_TIME_%_PS_Scavenge], Time(%), 0.09108884494901245
[TOTAL_GCS_PS_MarkSweep], Count, 0
[TOTAL_GC_TIME_PS_MarkSweep], Time(ms), 0
[TOTAL_GC_TIME_%_PS_MarkSweep], Time(%), 0.0
[TOTAL_GCs], Count, 92
[TOTAL_GC_TIME], Time(ms), 164
[TOTAL_GC_TIME_%], Time(%), 0.09108884494901245
[READ], Operations, 3465232
[READ], AverageLatency(us), 1392.0378548968727
[READ], MinLatency(us), 303
[READ], MaxLatency(us), 335359
[READ], 95thPercentileLatency(us), 4475
[READ], 99thPercentileLatency(us), 8911
[READ], Return=OK, 3465232
[CLEANUP], Operations, 128
[CLEANUP], AverageLatency(us), 963.7265625
[CLEANUP], MinLatency(us), 38
[CLEANUP], MaxLatency(us), 3945
[CLEANUP], 95thPercentileLatency(us), 3769
[CLEANUP], 99thPercentileLatency(us), 3921
[UPDATE], Operations, 3467271
[UPDATE], AverageLatency(us), 5247.070255541029
[UPDATE], MinLatency(us), 720
[UPDATE], MaxLatency(us), 401663
[UPDATE], 95thPercentileLatency(us), 13879
[UPDATE], 99thPercentileLatency(us), 22575
[UPDATE], Return=OK, 3467271
```
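The three clients ran concurrently for the same 180 seconds, so summing their `[OVERALL] Throughput` values gives an approximate cluster-wide figure. The sketch below does that and compares the result with the POC's 60-100k QPS target. Treating one YCSB operation as one query is an assumption made for this comparison.

```python
# Sum the per-client [OVERALL] Throughput values reported above
# (CockroachDB 20.1.5 on RocksDB, uniform distribution, 128 threads per client).
client_throughput = {
    "client 1": 38026.78611098769,
    "client 2": 37611.283891104176,
    "client 3": 38504.49334607096,
}
TARGET_QPS = (60_000, 100_000)  # the POC success range quoted earlier

total = sum(client_throughput.values())
print(f"aggregate: {total:,.0f} ops/sec")
print("meets the upper end of the target:", total >= TARGET_QPS[1])
```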

Testing the same workload on the Pebble storage engine in 20.2.0-beta.1

Now we'll repeat the test with the latest 20.2 beta.1 release to see whether replacing RocksDB with the Pebble storage engine has a positive impact on the workload.

The new IP list is as follows:

10.12.21.64 10.12.16.87 10.12.23.78 10.12.31.249 10.12.23.120 10.12.24.143 10.12.24.2 10.12.17.108 10.12.18.94 10.12.24.224 10.12.26.64 10.12.28.166

Once the 20.2 cluster is up, a quick way to check whether it is using Pebble is to grep the logs.

Log into one of the nodes and search cockroach.log for the string "storage engine":

```shell
grep 'storage engine' cockroach.log
```

```text
I200917 15:56:22.443338 89 server/config.go:624 ⋮ [n?] 1 storage engine‹› initialized
storage engine: pebble
```
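If you check many nodes, a small script can pull the engine name out of the log text instead of reading it by eye. This is a hypothetical Python sketch. The regular expression is an assumption based only on the two log excerpts in this article, which end in `storage engine: pebble` and `storage engine: rocksdb`, and it may not match other CockroachDB versions.

```python
import re
from typing import Optional

# Assumed pattern, derived only from the log excerpts in this article.
ENGINE_RE = re.compile(r"storage engine:\s*(\w+)")


def detect_storage_engine(log_text: str) -> Optional[str]:
    """Return the last storage engine name reported in the log text, or None."""
    matches = ENGINE_RE.findall(log_text)
    return matches[-1] if matches else None
```

For example, on a node you might call `detect_storage_engine(open("cockroach.log").read())`.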

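Perform all the prerequisite steps as before. Pay close attention to the JDBC URL. I made a mistake with the IPs, and performance dropped severely because the benchmark targeted only a few nodes.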

Then run the load phase to insert the data:

```shell
bin/ycsb load jdbc -s -P workloads/workloada \
  -p db.driver=org.postgresql.Driver \
  -p db.url="jdbc:postgresql://10.12.21.64:26257,10.12.16.87:26257,10.12.23.78:26257,10.12.31.249:26257,10.12.23.120:26257,10.12.24.143:26257,10.12.24.2:26257,10.12.17.108:26257,10.12.18.94:26257/defaultdb?autoReconnect=true&sslmode=disable&ssl=false&reWriteBatchedInserts=true&loadBalanceHosts=true" \
  -p db.user=root -p db.passwd="" \
  -p jdbc.fetchsize=10 -p jdbc.autocommit=true -p jdbc.batchupdateapi=true \
  -p db.batchsize=128 -p recordcount=1000000 -p threadcount=32 -p operationcount=10000000
```

```text
[OVERALL], RunTime(ms), 75381
[OVERALL], Throughput(ops/sec), 13265.942346214564
[TOTAL_GCS_PS_Scavenge], Count, 15
[TOTAL_GC_TIME_PS_Scavenge], Time(ms), 92
[TOTAL_GC_TIME_%_PS_Scavenge], Time(%), 0.12204666958517399
[TOTAL_GCS_PS_MarkSweep], Count, 0
[TOTAL_GC_TIME_PS_MarkSweep], Time(ms), 0
[TOTAL_GC_TIME_%_PS_MarkSweep], Time(%), 0.0
[TOTAL_GCs], Count, 15
[TOTAL_GC_TIME], Time(ms), 92
[TOTAL_GC_TIME_%], Time(%), 0.12204666958517399
[CLEANUP], Operations, 32
[CLEANUP], AverageLatency(us), 141524.4375
[CLEANUP], MinLatency(us), 7964
[CLEANUP], MaxLatency(us), 390655
[CLEANUP], 95thPercentileLatency(us), 307199
[CLEANUP], 99thPercentileLatency(us), 390655
[INSERT], Operations, 1000000
[INSERT], AverageLatency(us), 2346.44805
[INSERT], MinLatency(us), 3
[INSERT], MaxLatency(us), 18563071
[INSERT], 95thPercentileLatency(us), 7
[INSERT], 99thPercentileLatency(us), 71
[INSERT], Return=OK, 7808
[INSERT], Return=BATCHED_OK, 992192
```

Once the YCSB suite and the PostgreSQL driver are downloaded on all clients, we can start the benchmark:

```shell
bin/ycsb run jdbc -s -P workloads/workloada \
  -p db.driver=org.postgresql.Driver \
  -p db.url="jdbc:postgresql://10.12.21.64:26257,10.12.16.87:26257,10.12.23.78:26257,10.12.31.249:26257,10.12.23.120:26257,10.12.24.143:26257,10.12.24.2:26257,10.12.17.108:26257,10.12.18.94:26257/defaultdb?autoReconnect=true&sslmode=disable&ssl=false&reWriteBatchedInserts=true&loadBalanceHosts=true" \
  -p db.user=root -p db.passwd="" -p db.batchsize=128 \
  -p jdbc.fetchsize=10 -p jdbc.autocommit=true -p jdbc.batchupdateapi=true \
  -p recordcount=1000000 -p operationcount=10000000 -p threadcount=128 \
  -p maxexecutiontime=180 -p requestdistribution=uniform
```

Client 1

```text
[OVERALL], RunTime(ms), 180068
[OVERALL], Throughput(ops/sec), 36933.636181886846
[TOTAL_GCS_PS_Scavenge], Count, 24
[TOTAL_GC_TIME_PS_Scavenge], Time(ms), 84
[TOTAL_GC_TIME_%_PS_Scavenge], Time(%), 0.046649043694604264
[TOTAL_GCS_PS_MarkSweep], Count, 0
[TOTAL_GC_TIME_PS_MarkSweep], Time(ms), 0
[TOTAL_GC_TIME_%_PS_MarkSweep], Time(%), 0.0
[TOTAL_GCs], Count, 24
[TOTAL_GC_TIME], Time(ms), 84
[TOTAL_GC_TIME_%], Time(%), 0.046649043694604264
[READ], Operations, 3325714
[READ], AverageLatency(us), 1568.609565945839
[READ], MinLatency(us), 308
[READ], MaxLatency(us), 353279
[READ], 95thPercentileLatency(us), 5283
[READ], 99thPercentileLatency(us), 10319
[READ], Return=OK, 3325714
[CLEANUP], Operations, 128
[CLEANUP], AverageLatency(us), 89.3515625
[CLEANUP], MinLatency(us), 20
[CLEANUP], MaxLatency(us), 1160
[CLEANUP], 95thPercentileLatency(us), 341
[CLEANUP], 99thPercentileLatency(us), 913
[UPDATE], Operations, 3324852
[UPDATE], AverageLatency(us), 5354.304089324878
[UPDATE], MinLatency(us), 747
[UPDATE], MaxLatency(us), 373759
[UPDATE], 95thPercentileLatency(us), 14463
[UPDATE], 99thPercentileLatency(us), 22655
[UPDATE], Return=OK, 3324852
```

Client 2

```text
[OVERALL], RunTime(ms), 180048
[OVERALL], Throughput(ops/sec), 36497.55065315916
[TOTAL_GCS_PS_Scavenge], Count, 77
[TOTAL_GC_TIME_PS_Scavenge], Time(ms), 142
[TOTAL_GC_TIME_%_PS_Scavenge], Time(%), 0.07886785746023282
[TOTAL_GCS_PS_MarkSweep], Count, 0
[TOTAL_GC_TIME_PS_MarkSweep], Time(ms), 0
[TOTAL_GC_TIME_%_PS_MarkSweep], Time(%), 0.0
[TOTAL_GCs], Count, 77
[TOTAL_GC_TIME], Time(ms), 142
[TOTAL_GC_TIME_%], Time(%), 0.07886785746023282
[READ], Operations, 3286309
[READ], AverageLatency(us), 1590.261334524538
[READ], MinLatency(us), 313
[READ], MaxLatency(us), 371199
[READ], 95thPercentileLatency(us), 5331
[READ], 99thPercentileLatency(us), 10367
[READ], Return=OK, 3286309
[CLEANUP], Operations, 128
[CLEANUP], AverageLatency(us), 481.5234375
[CLEANUP], MinLatency(us), 37
[CLEANUP], MaxLatency(us), 3357
[CLEANUP], 95thPercentileLatency(us), 3099
[CLEANUP], 99thPercentileLatency(us), 3321
[UPDATE], Operations, 3285002
[UPDATE], AverageLatency(us), 5416.266051588401
[UPDATE], MinLatency(us), 761
[UPDATE], MaxLatency(us), 411647
[UPDATE], 95thPercentileLatency(us), 14519
[UPDATE], 99thPercentileLatency(us), 22703
[UPDATE], Return=OK, 3285002
```

Client 3

```text
[OVERALL], RunTime(ms), 180051
[OVERALL], Throughput(ops/sec), 36760.86775413633
[TOTAL_GCS_PS_Scavenge], Count, 81
[TOTAL_GC_TIME_PS_Scavenge], Time(ms), 171
[TOTAL_GC_TIME_%_PS_Scavenge], Time(%), 0.09497309095756203
[TOTAL_GCS_PS_MarkSweep], Count, 0
[TOTAL_GC_TIME_PS_MarkSweep], Time(ms), 0
[TOTAL_GC_TIME_%_PS_MarkSweep], Time(%), 0.0
[TOTAL_GCs], Count, 81
[TOTAL_GC_TIME], Time(ms), 171
[TOTAL_GC_TIME_%], Time(%), 0.09497309095756203
[READ], Operations, 3310513
[READ], AverageLatency(us), 1582.5869117565767
[READ], MinLatency(us), 294
[READ], MaxLatency(us), 355839
[READ], 95thPercentileLatency(us), 5311
[READ], 99thPercentileLatency(us), 10335
[READ], Return=OK, 3310513
[CLEANUP], Operations, 128
[CLEANUP], AverageLatency(us), 501.859375
[CLEANUP], MinLatency(us), 35
[CLEANUP], MaxLatency(us), 2249
[CLEANUP], 95thPercentileLatency(us), 1922
[CLEANUP], 99thPercentileLatency(us), 2245
[UPDATE], Operations, 3308318
[UPDATE], AverageLatency(us), 5373.080574479237
[UPDATE], MinLatency(us), 708
[UPDATE], MaxLatency(us), 375807
[UPDATE], 95thPercentileLatency(us), 14487
[UPDATE], 99thPercentileLatency(us), 22687
[UPDATE], Return=OK, 3308318
```

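Compared with the 20.1.5 results, performance has dropped. To check whether the drop is related to Pebble, we'll restart the cluster with the RocksDB engine and run the test again.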

Stop the cluster and restart it with the environment variable COCKROACH_STORAGE_ENGINE=rocksdb.

To confirm that the cluster started with RocksDB, grep the logs:

```text
I200917 16:31:25.474204 47 server/config.go:624 ⋮ [n?] 1 storage engine‹› initialized
storage engine: rocksdb
```

Rerun the previous test:

Client 1

```text
[OVERALL], RunTime(ms), 180060
[OVERALL], Throughput(ops/sec), 36145.29045873597
[TOTAL_GCS_PS_Scavenge], Count, 75
[TOTAL_GC_TIME_PS_Scavenge], Time(ms), 152
[TOTAL_GC_TIME_%_PS_Scavenge], Time(%), 0.08441630567588582
[TOTAL_GCS_PS_MarkSweep], Count, 0
[TOTAL_GC_TIME_PS_MarkSweep], Time(ms), 0
[TOTAL_GC_TIME_%_PS_MarkSweep], Time(%), 0.0
[TOTAL_GCs], Count, 75
[TOTAL_GC_TIME], Time(ms), 152
[TOTAL_GC_TIME_%], Time(%), 0.08441630567588582
[READ], Operations, 3253510
[READ], AverageLatency(us), 1561.9589268205723
[READ], MinLatency(us), 342
[READ], MaxLatency(us), 418815
[READ], 95thPercentileLatency(us), 5103
[READ], 99thPercentileLatency(us), 9999
[READ], Return=OK, 3253510
[CLEANUP], Operations, 128
[CLEANUP], AverageLatency(us), 301.65625
[CLEANUP], MinLatency(us), 18
[CLEANUP], MaxLatency(us), 2069
[CLEANUP], 95thPercentileLatency(us), 1883
[CLEANUP], 99thPercentileLatency(us), 2031
[UPDATE], Operations, 3254811
[UPDATE], AverageLatency(us), 5510.587541334965
[UPDATE], MinLatency(us), 808
[UPDATE], MaxLatency(us), 441855
[UPDATE], 95thPercentileLatency(us), 14839
[UPDATE], 99thPercentileLatency(us), 22815
[UPDATE], Return=OK, 3254811
```

Client 2

```text
[OVERALL], RunTime(ms), 180052
[OVERALL], Throughput(ops/sec), 35503.34347855064
[TOTAL_GCS_PS_Scavenge], Count, 24
[TOTAL_GC_TIME_PS_Scavenge], Time(ms), 73
[TOTAL_GC_TIME_%_PS_Scavenge], Time(%), 0.04054384288983183
[TOTAL_GCS_PS_MarkSweep], Count, 0
[TOTAL_GC_TIME_PS_MarkSweep], Time(ms), 0
[TOTAL_GC_TIME_%_PS_MarkSweep], Time(%), 0.0
[TOTAL_GCs], Count, 24
[TOTAL_GC_TIME], Time(ms), 73
[TOTAL_GC_TIME_%], Time(%), 0.04054384288983183
[READ], Operations, 3196538
[READ], AverageLatency(us), 1604.055252276056
[READ], MinLatency(us), 344
[READ], MaxLatency(us), 526335
[READ], 95thPercentileLatency(us), 5223
[READ], 99thPercentileLatency(us), 10143
[READ], Return=OK, 3196538
[CLEANUP], Operations, 128
[CLEANUP], AverageLatency(us), 247.4765625
[CLEANUP], MinLatency(us), 29
[CLEANUP], MaxLatency(us), 1959
[CLEANUP], 95thPercentileLatency(us), 1653
[CLEANUP], 99thPercentileLatency(us), 1799
[UPDATE], Operations, 3195910
[UPDATE], AverageLatency(us), 5597.925762302443
[UPDATE], MinLatency(us), 800
[UPDATE], MaxLatency(us), 578559
[UPDATE], 95thPercentileLatency(us), 14911
[UPDATE], 99thPercentileLatency(us), 22735
[UPDATE], Return=OK, 3195910
```

Client 3

```text
[OVERALL], RunTime(ms), 180058
[OVERALL], Throughput(ops/sec), 36301.11963922736
[TOTAL_GCS_PS_Scavenge], Count, 78
[TOTAL_GC_TIME_PS_Scavenge], Time(ms), 154
[TOTAL_GC_TIME_%_PS_Scavenge], Time(%), 0.08552799653445001
[TOTAL_GCS_PS_MarkSweep], Count, 0
[TOTAL_GC_TIME_PS_MarkSweep], Time(ms), 0
[TOTAL_GC_TIME_%_PS_MarkSweep], Time(%), 0.0
[TOTAL_GCs], Count, 78
[TOTAL_GC_TIME], Time(ms), 154
[TOTAL_GC_TIME_%], Time(%), 0.08552799653445001
[READ], Operations, 3270285
[READ], AverageLatency(us), 1568.0345073900287
[READ], MinLatency(us), 350
[READ], MaxLatency(us), 432383
[READ], 95thPercentileLatency(us), 5103
[READ], 99thPercentileLatency(us), 9975
[READ], Return=OK, 3270285
[CLEANUP], Operations, 128
[CLEANUP], AverageLatency(us), 493.8984375
[CLEANUP], MinLatency(us), 37
[CLEANUP], MaxLatency(us), 4647
[CLEANUP], 95thPercentileLatency(us), 2353
[CLEANUP], 99thPercentileLatency(us), 4459
[UPDATE], Operations, 3266022
[UPDATE], AverageLatency(us), 5477.101235080474
[UPDATE], MinLatency(us), 819
[UPDATE], MaxLatency(us), 599551
[UPDATE], 95thPercentileLatency(us), 14807
[UPDATE], 99thPercentileLatency(us), 22655
[UPDATE], Return=OK, 3266022
```

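So it looks like we can rule out Pebble as the cause of the drop. In fact, Pebble appears to perform slightly better than RocksDB in this test. For now, I'll attribute the drop to general issues with beta software.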
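Summing the three clients for each configuration makes the comparison easier to see. The sketch below uses only the `[OVERALL] Throughput` values reported in this article.

- On the same 20.2 beta, Pebble comes out about 2% ahead of RocksDB.
- Both 20.2 configurations are a few percent below 20.1.5.

These are single 180-second runs, and run-to-run variation was not measured, so differences this small should be read with caution.

```python
# Aggregate throughput of the three YCSB clients for each configuration,
# using the [OVERALL] Throughput(ops/sec) values reported in this article.
runs = {
    "20.1.5 / RocksDB": [38026.78611098769, 37611.283891104176, 38504.49334607096],
    "20.2.0-beta.1 / Pebble": [36933.636181886846, 36497.55065315916, 36760.86775413633],
    "20.2.0-beta.1 / RocksDB": [36145.29045873597, 35503.34347855064, 36301.11963922736],
}

totals = {name: sum(values) for name, values in runs.items()}


def pct_change(new: float, base: float) -> float:
    """Relative difference of new versus base, in percent."""
    return (new - base) / base * 100


baseline = totals["20.1.5 / RocksDB"]
for name, total in totals.items():
    print(f"{name:<24} {total:>10,.0f} ops/sec  "
          f"{pct_change(total, baseline):+.1f}% vs 20.1.5")

pebble_vs_rocksdb = pct_change(totals["20.2.0-beta.1 / Pebble"],
                               totals["20.2.0-beta.1 / RocksDB"])
print(f"Pebble vs RocksDB on 20.2.0-beta.1: {pebble_vs_rocksdb:+.1f}%")
```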

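I have filed an issue for further investigation. Once it has been investigated, I'll run the tests again to establish the performance characteristics.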