Authors: 宇汇, 壮怀, 先河

01 Overview

Cloud Native

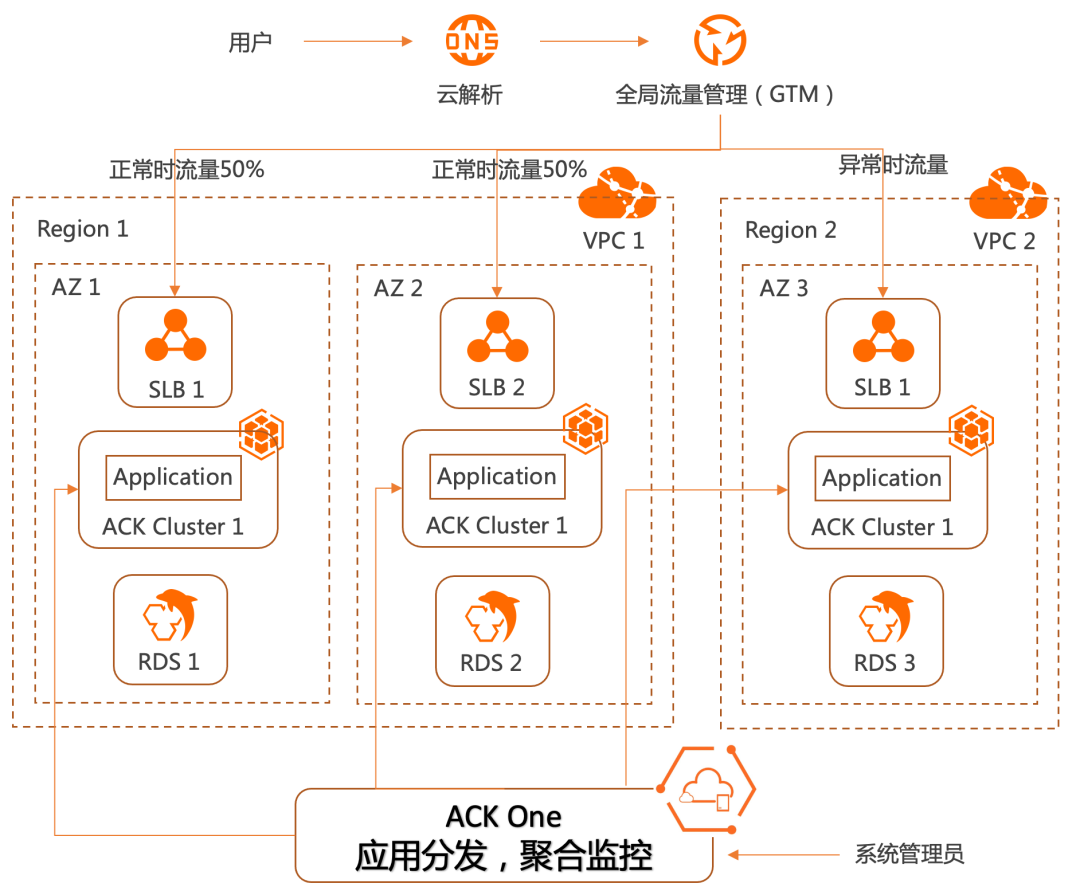

02 Solution Architecture

03 Prerequisites

04 Application Deployment

1. Run the following command to create the namespace demo.

```shell
kubectl create namespace demo
```
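`kubectl create namespace` fails if the namespace already exists, which gets in the way when the setup is re-run. A minimal sketch of an idempotent variant; the helper name `ensure_namespace` is hypothetical and not part of ACK One or kubectl.

```shell
# Hypothetical helper: create the namespace only if it does not exist yet,
# so the setup steps can be re-run safely on the master instance.
ensure_namespace() {
  local ns="$1"
  if kubectl get namespace "$ns" >/dev/null 2>&1; then
    echo "namespace/$ns already exists"
  else
    kubectl create namespace "$ns"
  fi
}

# Usage: ensure_namespace demo
```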

2. Save the following manifest as app-meta.yaml. It defines the Deployment, Service, Ingress, and ConfigMap of the web-demo application.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: web-demo
  name: web-demo
  namespace: demo
spec:
  replicas: 5
  selector:
    matchLabels:
      app: web-demo
  template:
    metadata:
      labels:
        app: web-demo
    spec:
      containers:
      - image: acr-multiple-clusters-registry.cn-hangzhou.cr.aliyuncs.com/ack-multiple-clusters/web-demo:0.4.0
        name: web-demo
        env:
        - name: ENV_NAME
          value: cluster1-beijing
        volumeMounts:
        - name: config-file
          mountPath: "/config-file"
          readOnly: true
      volumes:
      - name: config-file
        configMap:
          items:
          - key: config.json
            path: config.json
          name: web-demo
---
apiVersion: v1
kind: Service
metadata:
  name: web-demo
  namespace: demo
  labels:
    app: web-demo
spec:
  selector:
    app: web-demo
  ports:
  - protocol: TCP
    port: 80
    targetPort: 8080
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web-demo
  namespace: demo
  labels:
    app: web-demo
spec:
  rules:
  - host: web-demo.example.com
    http:
      paths:
      - path: /  # pathType Prefix requires an absolute path
        pathType: Prefix
        backend:
          service:
            name: web-demo
            port:
              number: 80
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: web-demo
  namespace: demo
  labels:
    app: web-demo
data:
  config.json: |
    {database-host: "beijing-db.pg.aliyun.com"}
```

3. Run the following command to deploy the application web-demo on the master instance. Note: Kubernetes resources created on the master instance are not distributed to the sub-clusters. They serve as metadata and are referenced by the Application created later (step 4b).

```shell
kubectl apply -f app-meta.yaml
```
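Because the objects from step 3 only live on the master instance as metadata, a quick check that all four exist there can catch a failed apply before the Application tries to reference them. A minimal sketch, assuming the object names from app-meta.yaml; the helper `check_hub_objects` is hypothetical.

```shell
# Hypothetical helper: verify that the web-demo metadata objects exist on the
# master instance. Returns non-zero if any of them is missing.
check_hub_objects() {
  local ns="${1:-demo}" missing=0 kind
  for kind in deployment service ingress configmap; do
    if kubectl get "$kind" web-demo -n "$ns" >/dev/null 2>&1; then
      echo "ok: $kind/web-demo"
    else
      echo "missing: $kind/web-demo"
      missing=1
    fi
  done
  return "$missing"
}

# Usage: check_hub_objects demo
```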

4. Create the application distribution rules.

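a. Run the following command to list the associated clusters managed by the master instance and decide which clusters the application is distributed to.

```shell
kubectl amc get managedcluster
```

```
Name                  Alias               HubAccepted
managedcluster-cxxx   cluster1-hangzhou   true
managedcluster-cxxx   cluster2-beijing    true
managedcluster-cxxx   cluster1-beijing    true
```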
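The topology policies in the next step need each cluster's Name (its ID), while people usually think in terms of the Alias. A minimal sketch that looks up the Name for a given Alias, assuming the three-column layout (Name, Alias, HubAccepted) shown above; the helper `cluster_id_by_alias` is hypothetical.

```shell
# Hypothetical helper: print the managed cluster Name whose Alias matches $1.
# Skips the header row and prints nothing if the alias is unknown.
cluster_id_by_alias() {
  kubectl amc get managedcluster |
    awk -v alias="$1" 'NR > 1 && $2 == alias { print $1 }'
}

# Usage: cluster_id_by_alias cluster1-beijing
```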

b. Save the following distribution rules as app.yaml, replacing each `<managedcluster-cxxx>` with the cluster Name found in step a. The file defines one topology policy per target cluster, override policies for the per-cluster differences, a workflow that deploys the clusters in order, and the Application that references the objects created in step 3.

```yaml
apiVersion: core.oam.dev/v1alpha1
kind: Policy
metadata:
  name: cluster1-beijing
  namespace: demo
type: topology
properties:
  clusters: ["<managedcluster-cxxx>"]  # target cluster 1: cluster1-beijing
---
apiVersion: core.oam.dev/v1alpha1
kind: Policy
metadata:
  name: cluster2-beijing
  namespace: demo
type: topology
properties:
  clusters: ["<managedcluster-cxxx>"]  # target cluster 2: cluster2-beijing
---
apiVersion: core.oam.dev/v1alpha1
kind: Policy
metadata:
  name: cluster1-hangzhou
  namespace: demo
type: topology
properties:
  clusters: ["<managedcluster-cxxx>"]  # target cluster 3: cluster1-hangzhou
---
apiVersion: core.oam.dev/v1alpha1
kind: Policy
metadata:
  name: override-env-cluster2-beijing
  namespace: demo
type: override
properties:
  components:
  - name: "deployment"
    traits:
    - type: env
      properties:
        containerName: web-demo
        env:
          ENV_NAME: cluster2-beijing  # per-cluster environment variable for the Deployment on cluster2-beijing
---
apiVersion: core.oam.dev/v1alpha1
kind: Policy
metadata:
  name: override-env-cluster1-hangzhou
  namespace: demo
type: override
properties:
  components:
  - name: "deployment"
    traits:
    - type: env
      properties:
        containerName: web-demo
        env:
          ENV_NAME: cluster1-hangzhou  # per-cluster environment variable for the Deployment on cluster1-hangzhou
---
apiVersion: core.oam.dev/v1alpha1
kind: Policy
metadata:
  name: override-replic-cluster1-hangzhou
  namespace: demo
type: override
properties:
  components:
  - name: "deployment"
    traits:
    - type: scaler
      properties:
        replicas: 1  # per-cluster replica count for the Deployment on cluster1-hangzhou
---
apiVersion: core.oam.dev/v1alpha1
kind: Policy
metadata:
  name: override-configmap-cluster1-hangzhou
  namespace: demo
type: override
properties:
  components:
  - name: "configmap"
    traits:
    - type: json-merge-patch  # per-cluster ConfigMap content on cluster1-hangzhou
      properties:
        data:
          config.json: |
            {database-address: "hangzhou-db.pg.aliyun.com"}
---
apiVersion: core.oam.dev/v1alpha1
kind: Workflow
metadata:
  name: deploy-demo
  namespace: demo
steps:  # deploy cluster1-beijing, cluster2-beijing and cluster1-hangzhou in order
- type: deploy
  name: deploy-cluster1-beijing
  properties:
    policies: ["cluster1-beijing"]
- type: deploy
  name: deploy-cluster2-beijing
  properties:
    auto: false  # suspend for manual approval before deploying to cluster2-beijing
    policies: ["override-env-cluster2-beijing", "cluster2-beijing"]  # override the environment variable on cluster2-beijing
- type: deploy
  name: deploy-cluster1-hangzhou
  properties:
    policies: ["override-env-cluster1-hangzhou", "override-replic-cluster1-hangzhou", "override-configmap-cluster1-hangzhou", "cluster1-hangzhou"]  # override the environment variable, replica count and ConfigMap on cluster1-hangzhou
---
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  annotations:
    app.oam.dev/publishVersion: version8
  name: web-demo
  namespace: demo
spec:
  components:
  - name: deployment  # reference the Deployment on its own so it can be overridden per cluster
    type: ref-objects
    properties:
      objects:
      - apiVersion: apps/v1
        kind: Deployment
        name: web-demo
  - name: configmap  # reference the ConfigMap on its own so it can be overridden per cluster
    type: ref-objects
    properties:
      objects:
      - apiVersion: v1
        kind: ConfigMap
        name: web-demo
  - name: same-resource  # identical on every cluster, no overrides
    type: ref-objects
    properties:
      objects:
      - apiVersion: v1
        kind: Service
        name: web-demo
      - apiVersion: networking.k8s.io/v1
        kind: Ingress
        name: web-demo
  workflow:
    ref: deploy-demo
```
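If a `<managedcluster-cxxx>` placeholder is left in app.yaml (the file applied in the next command), the topology policy points at a cluster that does not exist. A minimal sketch of a pre-apply guard; the helper `check_placeholders` is hypothetical.

```shell
# Hypothetical helper: fail (and print the offending lines) if any
# "<managedcluster-...>" placeholder is still present in the file.
check_placeholders() {
  local file="${1:-app.yaml}"
  if grep -n '<managedcluster-' "$file"; then
    echo "replace the placeholders above with real cluster Names first" >&2
    return 1
  fi
  return 0
}

# Usage: check_placeholders app.yaml && kubectl apply -f app.yaml
```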

Run the following command on the master instance to apply the distribution rules:

```shell
kubectl apply -f app.yaml
```

Check the status of the application:

```shell
kubectl get app web-demo -n demo
```

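```
NAME       COMPONENT    TYPE          PHASE                HEALTHY   STATUS   AGE
web-demo   deployment   ref-objects   workflowSuspending   true               47h
```

The PHASE workflowSuspending means the workflow has paused at the step that requires manual approval (`auto: false`) before deploying to cluster2-beijing.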

Check the Deployment on every associated cluster:

```shell
kubectl amc get deployment web-demo -n demo -m all
```

```
Run on ManagedCluster managedcluster-cxxx (cluster1-hangzhou)
No resources found in demo namespace   # first deployment: the workflow has not reached cluster1-hangzhou yet
Run on ManagedCluster managedcluster-cxxx (cluster2-beijing)
No resources found in demo namespace   # first deployment: the workflow is waiting for manual approval before cluster2-beijing
Run on ManagedCluster managedcluster-cxxx (cluster1-beijing)
NAME       READY   UP-TO-DATE   AVAILABLE   AGE
web-demo   5/5     5            5           47h   # the Deployment runs normally on cluster1-beijing
```

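After manual review, resume the workflow so that it continues with cluster2-beijing and cluster1-hangzhou:

```shell
kubectl amc workflow resume web-demo -n demo
```

```
Successfully resume workflow: web-demo
```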

Check the application status again:

```shell
kubectl get app web-demo -n demo
```

```
NAME       COMPONENT    TYPE          PHASE     HEALTHY   STATUS   AGE
web-demo   deployment   ref-objects   running   true               47h
```
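Instead of re-running `kubectl get app` until the phase changes from workflowSuspending to running, the wait can be scripted. A minimal sketch; it assumes the PHASE column is read from the Application's `.status.status` field, and the helper `wait_for_app_phase` is hypothetical.

```shell
# Hypothetical helper: poll an Application until its phase equals $3 or the
# timeout ($4 seconds, default 300) expires. Polls every 5 seconds.
wait_for_app_phase() {
  local app="$1" ns="$2" want="$3" timeout="${4:-300}" waited=0 phase
  while [ "$waited" -lt "$timeout" ]; do
    # Assumption: PHASE in `kubectl get app` comes from .status.status.
    phase="$(kubectl get app "$app" -n "$ns" -o jsonpath='{.status.status}')"
    if [ "$phase" = "$want" ]; then
      echo "$app is $phase"
      return 0
    fi
    sleep 5
    waited=$((waited + 5))
  done
  echo "timed out after ${timeout}s; last phase: ${phase:-unknown}" >&2
  return 1
}

# Usage: wait_for_app_phase web-demo demo running 300
```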

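Check the Deployment on every cluster again. cluster1-hangzhou now runs 1 replica because of its replica override:

```shell
kubectl amc get deployment web-demo -n demo -m all
```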

```
Run on ManagedCluster managedcluster-cxxx (cluster1-hangzhou)
NAME       READY   UP-TO-DATE   AVAILABLE   AGE
web-demo   1/1     1            1           47h
Run on ManagedCluster managedcluster-cxxx (cluster2-beijing)
NAME       READY   UP-TO-DATE   AVAILABLE   AGE
web-demo   5/5     5            5           2d
Run on ManagedCluster managedcluster-cxxx (cluster1-beijing)
NAME       READY   UP-TO-DATE   AVAILABLE   AGE
web-demo   5/5     5            5           47h
```
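Reading the per-cluster tables by eye works for three clusters but not for many. A minimal sketch that summarises the `-m all` output per cluster and fails unless every cluster reports a fully ready Deployment. It assumes the layout shown above (a `Run on ManagedCluster ... (alias)` line followed by either a table or `No resources found`); the helper `deployment_ready_summary` is hypothetical.

```shell
# Hypothetical helper: read `kubectl amc get deployment ... -m all` output on
# stdin, print "<alias> <READY>" per cluster, and exit non-zero if any cluster
# has no Deployment or a READY count like 3/5.
deployment_ready_summary() {
  awk '
    /^Run on ManagedCluster/ {
      alias = $NF; gsub(/[()]/, "", alias)   # "(cluster1-beijing)" -> cluster1-beijing
      ready[alias] = 0; order[++n] = alias
      next
    }
    /^No resources found/ { next }
    $1 != "NAME" && $2 ~ /^[0-9]+\/[0-9]+$/ {
      split($2, r, "/")
      ready[alias] = (r[1] == r[2] && r[2] > 0) ? 1 : 0
      print alias, $2
    }
    END {
      bad = 0
      for (i = 1; i <= n; i++)
        if (!ready[order[i]]) { print order[i], "NOT READY"; bad = 1 }
      exit bad
    }'
}

# Usage:
#   kubectl amc get deployment web-demo -n demo -m all | deployment_ready_summary
```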

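Check the Ingress on every cluster. The ADDRESS column is the Ingress IP address of each cluster:

```shell
kubectl amc get ingress -n demo -m all
```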

```
Run on ManagedCluster managedcluster-cxxx (cluster1-hangzhou)
NAME       CLASS   HOSTS                  ADDRESS           PORTS   AGE
web-demo   nginx   web-demo.example.com   47.xxx.xxx.xxx    80      47h
Run on ManagedCluster managedcluster-cxxx (cluster2-beijing)
NAME       CLASS   HOSTS                  ADDRESS           PORTS   AGE
web-demo   nginx   web-demo.example.com   123.xxx.xxx.xxx   80      2d
Run on ManagedCluster managedcluster-cxxx (cluster1-beijing)
NAME       CLASS   HOSTS                  ADDRESS           PORTS   AGE
web-demo   nginx   web-demo.example.com   182.xxx.xxx.xxx   80      2d
```
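The Ingress addresses above are what the GTM address pools pool-beijing and pool-hangzhou are built from. A minimal sketch that groups them by region; it assumes the region is the part of the cluster alias after the first `-` (cluster1-beijing becomes beijing) and that ADDRESS is the 4th column. The helper `ingress_pools` is hypothetical.

```shell
# Hypothetical helper: read `kubectl amc get ingress -n demo -m all` output on
# stdin and print one "pool-<region>: <ip> ..." line per region.
ingress_pools() {
  awk '
    /^Run on ManagedCluster/ { alias = $NF; gsub(/[()]/, "", alias); next }
    $1 == "web-demo" {
      region = alias; sub(/^[^-]*-/, "", region)   # cluster1-beijing -> beijing
      if (region in ips) ips[region] = ips[region] " " $4
      else ips[region] = $4
    }
    END { for (r in ips) print "pool-" r ":", ips[r] }' |
    LC_ALL=C sort
}

# Usage: kubectl amc get ingress -n demo -m all | ingress_pools
```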

05 Traffic Management

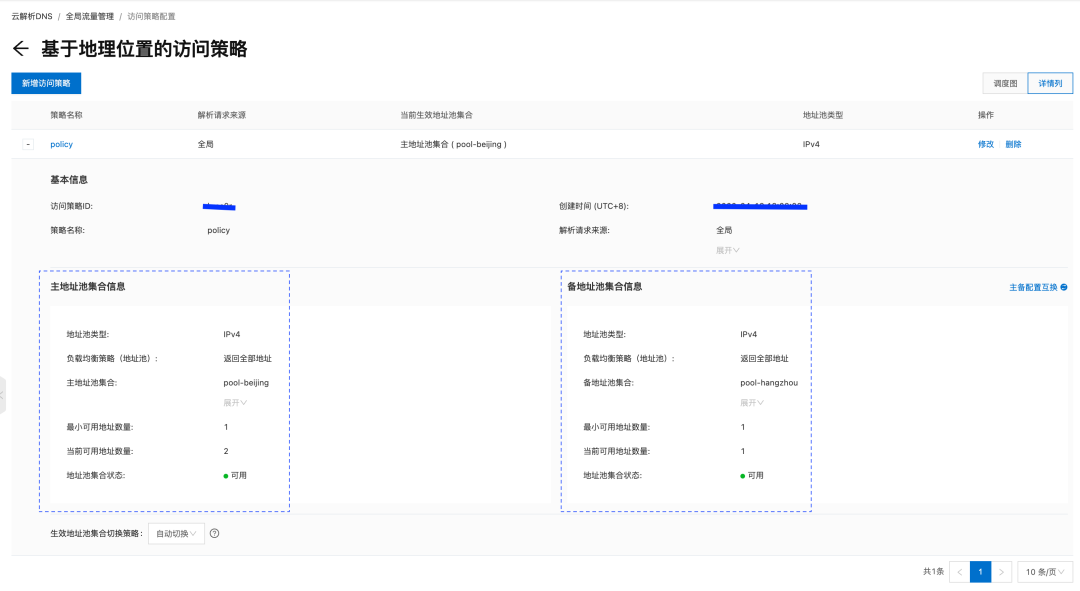

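Global Traffic Manager (GTM) is configured to check the health of the application automatically and, when a failure occurs, to switch traffic automatically to a healthy cluster.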

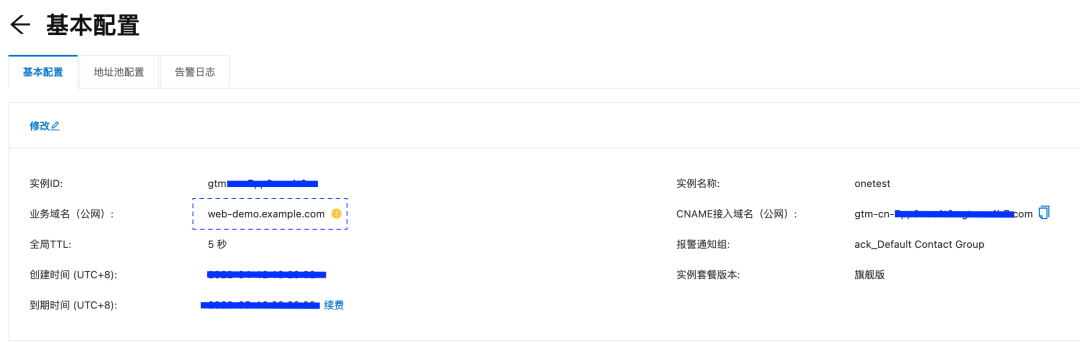

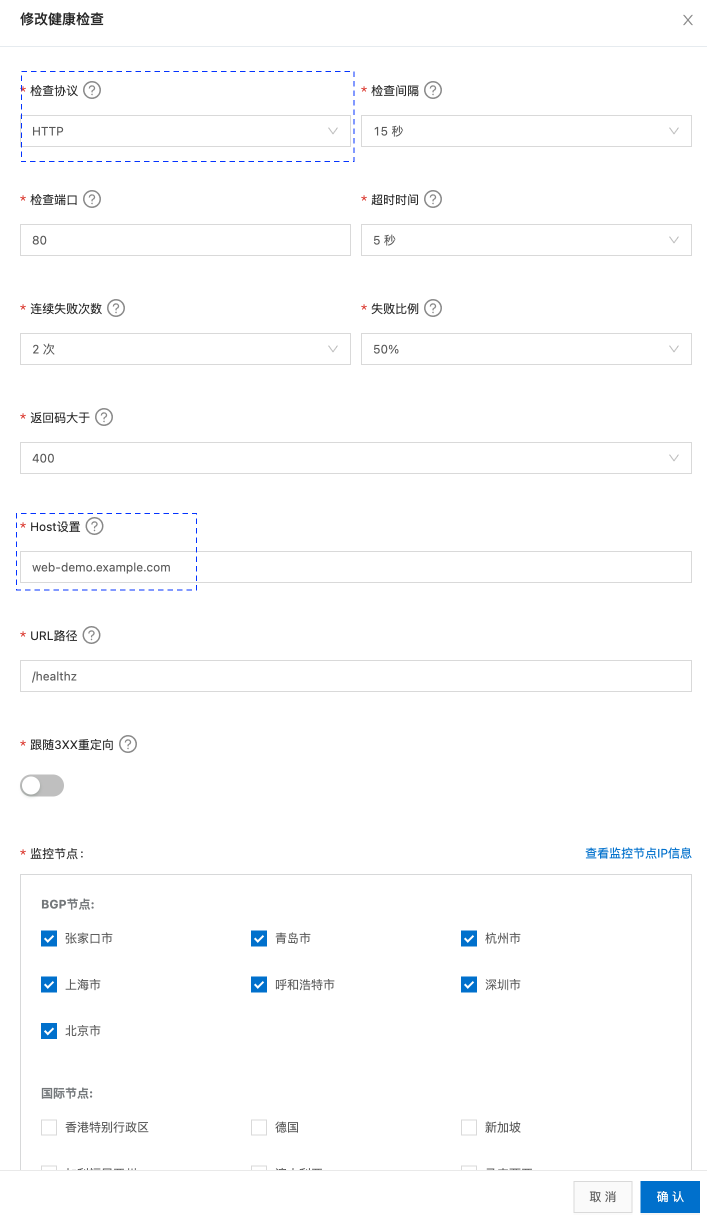

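1. Configure a GTM instance. web-demo.example.com is the domain name of the sample application; replace it with the actual domain name of your application, and add a DNS record that points the domain (CNAME) to the CNAME access domain name of the GTM instance.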

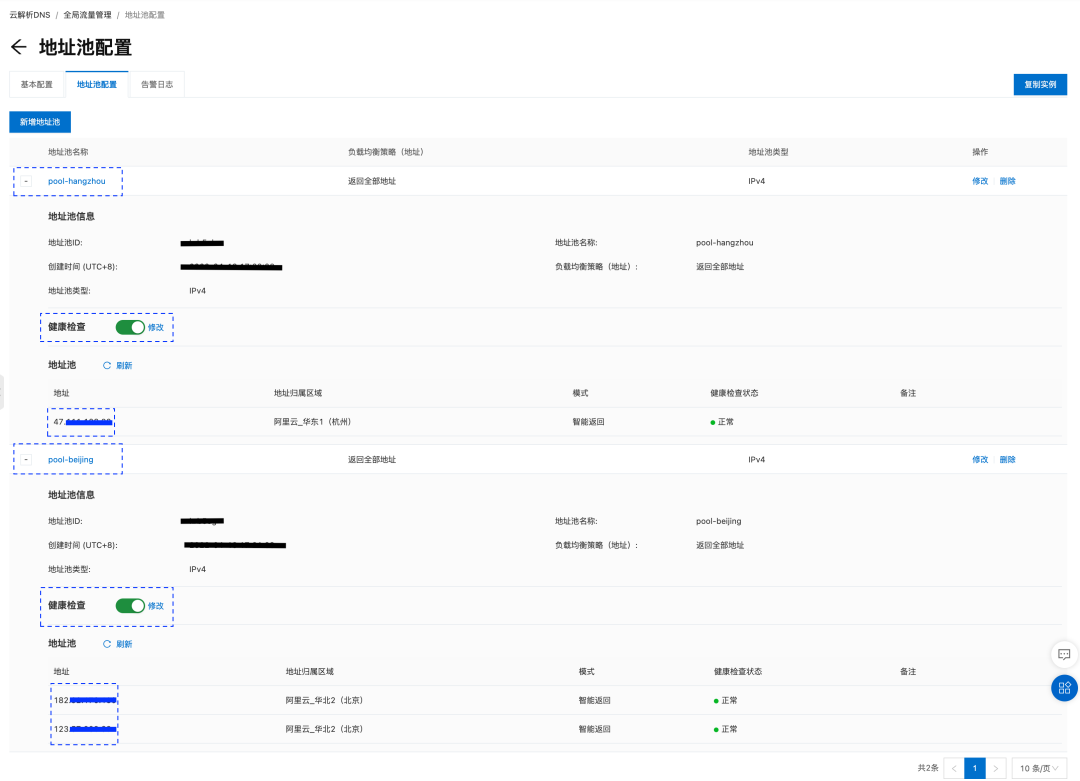

2. In the GTM instance you created, create two address pools:

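- pool-beijing: contains the Ingress IP addresses of the two Beijing clusters, with the load balancing policy set to return all addresses, so that traffic is balanced across the two Beijing clusters. You can obtain the Ingress IP addresses by running `kubectl amc get ingress -n demo -m all` on the master instance.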

- pool-hangzhou: contains the Ingress IP address of the Hangzhou cluster.
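The verification below relies on how GTM chooses between the two pools. The following is an illustrative model only, not GTM's implementation: it assumes pool-beijing is the primary pool (return all addresses), pool-hangzhou is the failover pool, and health checks drop failed addresses from the answer. The function `gtm_answer` is hypothetical.

```shell
# Illustrative model (not GTM's implementation): given the health ("up" or
# "down") of cluster1-beijing, cluster2-beijing and cluster1-hangzhou, print
# the clusters whose addresses a DNS query would return.
gtm_answer() {
  local bj1="$1" bj2="$2" hz1="$3" answer=""
  # Primary pool: return every healthy Beijing address.
  [ "$bj1" = up ] && answer="$answer cluster1-beijing"
  [ "$bj2" = up ] && answer="$answer cluster2-beijing"
  # Failover pool: used only when the primary pool has no healthy address.
  if [ -z "$answer" ] && [ "$hz1" = up ]; then
    answer=" cluster1-hangzhou"
  fi
  echo "${answer# }"
}

# Usage: gtm_answer down up up   # cluster1-beijing has failed
```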

06 Deployment Verification

1. Under normal conditions, all traffic is handled by the application on the two Beijing clusters, each cluster handling roughly 50% of it.

```shell
for i in {1..50}; do curl web-demo.example.com; sleep 3; done
```

```
This is env cluster1-beijing !Config file is {database-host: "beijing-db.pg.aliyun.com"}
This is env cluster1-beijing !Config file is {database-host: "beijing-db.pg.aliyun.com"}
This is env cluster2-beijing !Config file is {database-host: "beijing-db.pg.aliyun.com"}
This is env cluster1-beijing !Config file is {database-host: "beijing-db.pg.aliyun.com"}
This is env cluster2-beijing !Config file is {database-host: "beijing-db.pg.aliyun.com"}
This is env cluster2-beijing !Config file is {database-host: "beijing-db.pg.aliyun.com"}
```

2. When the application on cluster cluster1-beijing fails, GTM routes all traffic to cluster cluster2-beijing.

```shell
for i in {1..50}; do curl web-demo.example.com; sleep 3; done
```

```
...
<html><head><title>503 Service Temporarily Unavailable</title></head><body><center><h1>503 Service Temporarily Unavailable</h1></center><hr><center>nginx</center></body></html>
This is env cluster2-beijing !Config file is {database-host: "beijing-db.pg.aliyun.com"}
This is env cluster2-beijing !Config file is {database-host: "beijing-db.pg.aliyun.com"}
This is env cluster2-beijing !Config file is {database-host: "beijing-db.pg.aliyun.com"}
This is env cluster2-beijing !Config file is {database-host: "beijing-db.pg.aliyun.com"}
```
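Counting 50 responses by eye makes it hard to confirm the roughly even split or to see how many requests failed during a switchover. A minimal sketch that tallies the serving cluster of each response plus the nginx 503 pages, assuming the response bodies look like the ones shown here; the helper `tally_responses` is hypothetical.

```shell
# Hypothetical helper: read curl responses on stdin and print
# "<cluster> <count>" per serving cluster, plus "503 <count>" for nginx
# error pages. Output is sorted for stable comparison.
tally_responses() {
  grep -o -e 'This is env [A-Za-z0-9-]*' -e '<title>503' |
    awk '$1 == "<title>503" { n["503"]++; next }
         { n[$4]++ }                      # $4 is the cluster name
         END { for (k in n) print k, n[k] }' |
    LC_ALL=C sort
}

# Usage (same loop as above, one response per line):
#   for i in $(seq 1 50); do curl -s web-demo.example.com; echo; sleep 3; done | tally_responses
```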

3. When the applications on clusters cluster1-beijing and cluster2-beijing both fail, GTM routes traffic to cluster cluster1-hangzhou.

```shell
for i in {1..50}; do curl web-demo.example.com; sleep 3; done
```

```
<head><title>503 Service Temporarily Unavailable</title></head><body><center><h1>503 Service Temporarily Unavailable</h1></center><hr><center>nginx</center></body></html>
<html><head><title>503 Service Temporarily Unavailable</title></head><body><center><h1>503 Service Temporarily Unavailable</h1></center><hr><center>nginx</center></body></html>
This is env cluster1-hangzhou !Config file is {database-address: "hangzhou-db.pg.aliyun.com"}
This is env cluster1-hangzhou !Config file is {database-address: "hangzhou-db.pg.aliyun.com"}
This is env cluster1-hangzhou !Config file is {database-address: "hangzhou-db.pg.aliyun.com"}
This is env cluster1-hangzhou !Config file is {database-address: "hangzhou-db.pg.aliyun.com"}
```

07 Summary
