For more about DataX, see: https://www.xmmup.com/alikaiyuanetlgongjuzhidataxhedatax-webjieshao.html

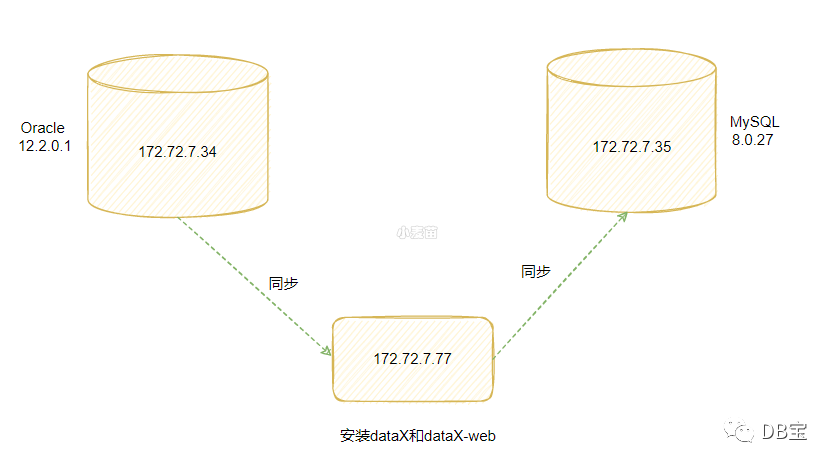

Environment preparation

Oracle and MySQL environment preparation

# Create a dedicated network
docker network create --subnet=172.72.7.0/24 ora-network

# Oracle benchmarking tool
docker pull lhrbest/lhrdbbench:1.0

docker rm -f lhrdbbench
docker run -d --name lhrdbbench -h lhrdbbench \
  --net=ora-network --ip 172.72.7.26 \
  -v /sys/fs/cgroup:/sys/fs/cgroup \
  --privileged=true lhrbest/lhrdbbench:1.0 \
  /usr/sbin/init

# Oracle 12c
docker rm -f lhrora1221
docker run -itd --name lhrora1221 -h lhrora1221 \
  --net=ora-network --ip 172.72.7.34 \
  -p 1526:1521 -p 3396:3389 \
  --privileged=true \
  lhrbest/oracle_12cr2_ee_lhr_12.2.0.1:2.0 init

# MySQL
docker rm -f mysql8027
docker run -d --name mysql8027 -h mysql8027 -p 3418:3306 \
  --net=ora-network --ip 172.72.7.35 \
  -v /etc/mysql/mysql8027/conf:/etc/mysql/conf.d \
  -e MYSQL_ROOT_PASSWORD=lhr -e TZ=Asia/Shanghai \
  mysql:8.0.27

# my.cnf is only read when mysqld starts, so restart the container after writing it
cat > /etc/mysql/mysql8027/conf/my.cnf << "EOF"
[mysqld]
default-time-zone = '+8:00'
log_timestamps = SYSTEM
skip-name-resolve
log-bin
server_id=80273418
character_set_server=utf8mb4
default_authentication_plugin=mysql_native_password
EOF

mysql -uroot -plhr -h 172.72.7.35
create database lhrdb;

-- Application user (Oracle)
CREATE USER lhr identified by lhr;
alter user lhr identified by lhr;
GRANT DBA to lhr ;
grant SELECT ANY DICTIONARY to lhr;
GRANT EXECUTE ON SYS.DBMS_LOCK TO lhr;

# Start the listener
vi /u01/app/oracle/product/11.2.0.4/dbhome_1/network/admin/listener.ora
lsnrctl start
lsnrctl status
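
The MySQL container above bind-mounts `/etc/mysql/mysql8027/conf` as `/etc/mysql/conf.d`, and `mysqld` reads option files only at startup. A minimal sketch, assuming you would rather write the file before `docker run` (or restart the container afterwards); the function name `write_mysql_conf` and its directory argument are hypothetical helpers, not part of the original setup:

```shell
#!/bin/sh
# Hypothetical helper: write the my.cnf used in this article into a directory.
# Usage: write_mysql_conf /etc/mysql/mysql8027/conf
write_mysql_conf() {
  conf_dir="$1"
  mkdir -p "$conf_dir" || return 1
  # Quoted delimiter ("EOF") keeps the content literal (no shell expansion).
  cat > "$conf_dir/my.cnf" << "EOF"
[mysqld]
default-time-zone = '+8:00'
log_timestamps = SYSTEM
skip-name-resolve
log-bin
server_id=80273418
character_set_server=utf8mb4
default_authentication_plugin=mysql_native_password
EOF
}
```

Calling `write_mysql_conf /etc/mysql/mysql8027/conf` before starting `mysql8027` would let the first startup pick up the settings; if the container is already running, `docker restart mysql8027` has the same effect.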

DataX environment preparation

docker rm -f lhrdataX
docker run -itd --name lhrdataX -h lhrdataX \
  --net=ora-network --ip 172.72.7.77 \
  -p 9527:9527 -p 39389:3389 -p 33306:3306 \
  -v /sys/fs/cgroup:/sys/fs/cgroup \
  --privileged=true lhrbest/datax:2.0 \
  /usr/sbin/init

http://172.17.0.4:9527/index.html
admin/123456

Oracle-side data initialization

# Initialize the source data
/usr/local/swingbench/bin/oewizard -s -create -c /usr/local/swingbench/wizardconfigs/oewizard.xml -create \
  -version 2.0 -cs //172.72.7.34/lhrsdb -dba "sys as sysdba" -dbap lhr -dt thin \
  -ts users -u lhr -p lhr -allindexes -scale 0.0001 -tc 16 -v -cl

col TABLE_NAME format a30
SELECT a.table_name,a.num_rows FROM dba_tables a where a.OWNER='LHR' ;
select object_type,count(*) from dba_objects where owner='LHR' group by object_type;
select object_type,status,count(*) from dba_objects where owner='LHR' group by object_type,status;
select sum(bytes)/1024/1024 from dba_segments where owner='LHR';

-- Check that the keys are valid: https://www.xmmup.com/ogg-01296-biaoyouzhujianhuoweiyijiandanshirengranshiyongquanbulielaijiexixing.html
-- Otherwise OGG reports errors after startup: OGG-01296, OGG-06439, OGG-01169 Encountered an update where all key columns for target table LHR.ORDER_ITEMS are not present.
select owner, constraint_name, constraint_type, status, validated
from dba_constraints
where owner='LHR'
and VALIDATED='NOT VALIDATED';

select 'alter table lhr.'||TABLE_NAME||' enable validate constraint '||CONSTRAINT_NAME||';'
from dba_constraints
where owner='LHR'
and VALIDATED='NOT VALIDATED';

-- Generate statements that drop the foreign keys
SELECT 'ALTER TABLE LHR.'|| D.TABLE_NAME ||' DROP constraint '|| D.CONSTRAINT_NAME||';'
FROM DBA_constraints d where owner='LHR' and d.CONSTRAINT_TYPE='R';

sqlplus lhr/lhr@172.72.7.34:1521/lhrsdb

@/oggoracle/demo_ora_create.sql
@/oggoracle/demo_ora_insert.sql

SQL> select * from tcustmer;

CUST NAME                           CITY                 ST
---- ------------------------------ -------------------- --
WILL BG SOFTWARE CO.                SEATTLE              WA
JANE ROCKY FLYER INC.               DENVER               CO

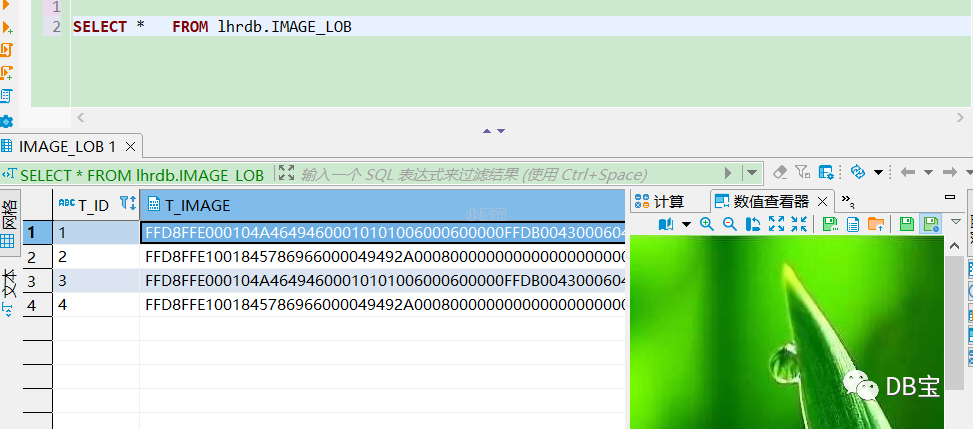

-- Create 2 tables with CLOB and BLOB columns
sqlplus lhr/lhr@172.72.7.34:1521/lhrsdb @/oggoracle/demo_ora_lob_create.sql
exec testing_lobs;
select * from lhr.TSRSLOB;

drop table IMAGE_LOB;
CREATE TABLE IMAGE_LOB (
  T_ID VARCHAR2 (5) NOT NULL,
  T_IMAGE BLOB,
  T_CLOB CLOB
  );

-- Insert BLOB files
CREATE OR REPLACE DIRECTORY D1 AS '/home/oracle/';
grant all on DIRECTORY D1 TO PUBLIC;
CREATE OR REPLACE NONEDITIONABLE PROCEDURE IMG_INSERT(TID VARCHAR2,
                                                      FILENAME VARCHAR2,
                                                      name VARCHAR2) AS
  F_LOB BFILE;
  B_LOB BLOB;
BEGIN
  -- Insert an empty BLOB and fetch its locator, then load the file into it
  INSERT INTO IMAGE_LOB
    (T_ID, T_IMAGE,T_CLOB)
  VALUES
    (TID, EMPTY_BLOB(),name) RETURN T_IMAGE INTO B_LOB;
  F_LOB := BFILENAME('D1', FILENAME);
  DBMS_LOB.FILEOPEN(F_LOB, DBMS_LOB.FILE_READONLY);
  DBMS_LOB.LOADFROMFILE(B_LOB, F_LOB, DBMS_LOB.GETLENGTH(F_LOB));
  DBMS_LOB.FILECLOSE(F_LOB);
  COMMIT;
END;
/

BEGIN
  IMG_INSERT('1','1.jpg','xmmup.com');
  IMG_INSERT('2','2.jpg','www.xmmup.com');
END;
/

select * from IMAGE_LOB;

-- All tables on the Oracle side
SQL> select * from tab;

TNAME                          TABTYPE  CLUSTERID
------------------------------ ------- ----------
ADDRESSES                      TABLE
CARD_DETAILS                   TABLE
CUSTOMERS                      TABLE
IMAGE_LOB                      TABLE
INVENTORIES                    TABLE
LOGON                          TABLE
ORDERENTRY_METADATA            TABLE
ORDERS                         TABLE
ORDER_ITEMS                    TABLE
PRODUCTS                       VIEW
PRODUCT_DESCRIPTIONS           TABLE
PRODUCT_INFORMATION            TABLE
PRODUCT_PRICES                 VIEW
TCUSTMER                       TABLE
TCUSTORD                       TABLE
TSRSLOB                        TABLE
TTRGVAR                        TABLE
WAREHOUSES                     TABLE

18 rows selected.

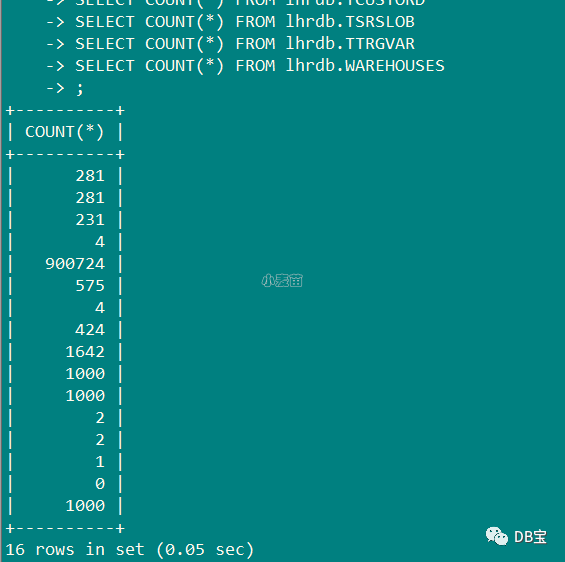

SELECT COUNT(*) FROM LHR.ADDRESSES UNION ALL
SELECT COUNT(*) FROM LHR.CARD_DETAILS UNION ALL
SELECT COUNT(*) FROM LHR.CUSTOMERS UNION ALL
SELECT COUNT(*) FROM LHR.IMAGE_LOB UNION ALL
SELECT COUNT(*) FROM LHR.INVENTORIES UNION ALL
SELECT COUNT(*) FROM LHR.LOGON UNION ALL
SELECT COUNT(*) FROM LHR.ORDERENTRY_METADATA UNION ALL
SELECT COUNT(*) FROM LHR.ORDERS UNION ALL
SELECT COUNT(*) FROM LHR.ORDER_ITEMS UNION ALL
SELECT COUNT(*) FROM LHR.PRODUCT_DESCRIPTIONS UNION ALL
SELECT COUNT(*) FROM LHR.PRODUCT_INFORMATION UNION ALL
SELECT COUNT(*) FROM LHR.TCUSTMER UNION ALL
SELECT COUNT(*) FROM LHR.TCUSTORD UNION ALL
SELECT COUNT(*) FROM LHR.TSRSLOB UNION ALL
SELECT COUNT(*) FROM LHR.TTRGVAR UNION ALL
SELECT COUNT(*) FROM LHR.WAREHOUSES
;

  COUNT(*)
----------
       281
       281
       231
         4
    900724
       575
         4
       424
      1642
      1000
      1000
         2
         2
         1
         0
      1000

16 rows selected.

In the end, the Oracle side contains 16 tables and 2 views. Two of the tables, TSRSLOB and IMAGE_LOB, contain BLOB and CLOB columns.
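
Since the same 16 tables have to be counted again on the MySQL side after the migration, it can help to generate the `COUNT(*) ... UNION ALL` statement rather than type it by hand. The following is a minimal sketch, not part of the original procedure; `build_count_sql` is a hypothetical helper, and it adds a `table_name` column (an assumption on my part) so that each count can be matched to its table regardless of row order:

```shell
#!/bin/sh
# Hypothetical helper: print a single SQL statement that counts the rows of
# every table given on the command line.
# Usage: build_count_sql <schema> <table> [<table> ...]
build_count_sql() {
  if [ "$#" -lt 2 ]; then
    echo "usage: build_count_sql <schema> <table> [<table> ...]" >&2
    return 1
  fi
  schema="$1"
  shift
  first=1
  for table in "$@"; do
    # Join the per-table SELECTs with UNION ALL, one per line.
    if [ "$first" -eq 0 ]; then
      printf ' UNION ALL\n'
    fi
    printf "SELECT '%s' AS table_name, COUNT(*) AS row_count FROM %s.%s" \
      "$table" "$schema" "$table"
    first=0
  done
  printf ';\n'
}
```

For example, `build_count_sql LHR ADDRESSES CARD_DETAILS` produces a statement for Oracle, and `build_count_sql lhrdb ADDRESSES CARD_DETAILS` produces the equivalent for the MySQL database used here; the two result sets can then be compared line by line.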

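Generating the MySQL DDL statements

You can use Navicat's Data Transfer feature, or another tool, to generate MySQL-compatible CREATE TABLE statements directly from the Oracle side, and then load them into MySQL as follows: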

mysql -uroot -plhr -h 172.72.7.35 -D lhrdb -f < ddl.sql

mysql> show tables;
+----------------------+
| Tables_in_lhrdb      |
+----------------------+
| ADDRESSES            |
| CARD_DETAILS         |
| CUSTOMERS            |
| IMAGE_LOB            |
| INVENTORIES          |
| LOGON                |
| ORDERENTRY_METADATA  |
| ORDERS               |
| ORDER_ITEMS          |
| PRODUCT_DESCRIPTIONS |
| PRODUCT_INFORMATION  |
| TCUSTMER             |
| TCUSTORD             |
| TSRSLOB              |
| TTRGVAR              |
| WAREHOUSES           |
+----------------------+
16 rows in set (0.01 sec)

The DDL statements are as follows:

/*
 Navicat Premium Data Transfer

 Source Server         : ora12c
 Source Server Type    : Oracle
 Source Server Version : 120200
 Source Host           : 192.168.1.35:1526
 Source Schema         : LHR

 Target Server Type    : MySQL
 Target Server Version : 80099
 File Encoding         : 65001

 Date: 28/06/2022 15:19:41
*/

SET NAMES utf8;
SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------
-- Table structure for ADDRESSES
-- ----------------------------
DROP TABLE IF EXISTS `ADDRESSES`;
CREATE TABLE `ADDRESSES` (
  `ADDRESS_ID` decimal(12, 0) NOT NULL,
  `CUSTOMER_ID` decimal(12, 0) NOT NULL,
  `DATE_CREATED` datetime NOT NULL,
  `HOUSE_NO_OR_NAME` varchar(60) NULL,
  `STREET_NAME` varchar(60) NULL,
  `TOWN` varchar(60) NULL,
  `COUNTY` varchar(60) NULL,
  `COUNTRY` varchar(60) NULL,
  `POST_CODE` varchar(12) NULL,
  `ZIP_CODE` varchar(12) NULL,
  PRIMARY KEY (`ADDRESS_ID`),
  INDEX `ADDRESS_CUST_IX`(`CUSTOMER_ID` ASC)
);

-- ----------------------------
-- Table structure for CARD_DETAILS
-- ----------------------------
DROP TABLE IF EXISTS `CARD_DETAILS`;
CREATE TABLE `CARD_DETAILS` (
  `CARD_ID` decimal(12, 0) NOT NULL,
  `CUSTOMER_ID` decimal(12, 0) NOT NULL,
  `CARD_TYPE` varchar(30) NOT NULL,
  `CARD_NUMBER` decimal(12, 0) NOT NULL,
  `EXPIRY_DATE` datetime NOT NULL,
  `IS_VALID` varchar(1) NOT NULL,
  `SECURITY_CODE` decimal(6, 0) NULL,
  PRIMARY KEY (`CARD_ID`),
  INDEX `CARDDETAILS_CUST_IX`(`CUSTOMER_ID` ASC)
);

-- ----------------------------
-- Table structure for CUSTOMERS
-- ----------------------------
DROP TABLE IF EXISTS `CUSTOMERS`;
CREATE TABLE `CUSTOMERS` (
  `CUSTOMER_ID` decimal(12, 0) NOT NULL,
  `CUST_FIRST_NAME` varchar(40) NOT NULL,
  `CUST_LAST_NAME` varchar(40) NOT NULL,
  `NLS_LANGUAGE` varchar(3) NULL,
  `NLS_TERRITORY` varchar(30) NULL,
  `CREDIT_LIMIT` decimal(9, 2) NULL,
  `CUST_EMAIL` varchar(100) NULL,
  `ACCOUNT_MGR_ID` decimal(12, 0) NULL,
  `CUSTOMER_SINCE` datetime NULL,
  `CUSTOMER_CLASS` varchar(40) NULL,
  `SUGGESTIONS` varchar(40) NULL,
  `DOB` datetime NULL,
  `MAILSHOT` varchar(1) NULL,
  `PARTNER_MAILSHOT` varchar(1) NULL,
  `PREFERRED_ADDRESS` decimal(12, 0) NULL,
  `PREFERRED_CARD` decimal(12, 0) NULL,
  PRIMARY KEY (`CUSTOMER_ID`),
  INDEX `CUST_ACCOUNT_MANAGER_IX`(`ACCOUNT_MGR_ID` ASC),
  INDEX `CUST_DOB_IX`(`DOB` ASC),
  INDEX `CUST_EMAIL_IX`(`CUST_EMAIL` ASC)
);

-- ----------------------------
-- Table structure for IMAGE_LOB
-- ----------------------------
DROP TABLE IF EXISTS `IMAGE_LOB`;
CREATE TABLE `IMAGE_LOB` (
  `T_ID` varchar(5) NOT NULL,
  `T_IMAGE` longblob NULL,
  `T_CLOB` longtext NULL
);

-- ----------------------------
-- Table structure for INVENTORIES
-- ----------------------------
DROP TABLE IF EXISTS `INVENTORIES`;
CREATE TABLE `INVENTORIES` (
  `PRODUCT_ID` decimal(6, 0) NOT NULL,
  `WAREHOUSE_ID` decimal(6, 0) NOT NULL,
  `QUANTITY_ON_HAND` decimal(8, 0) NOT NULL,
  PRIMARY KEY (`PRODUCT_ID`, `WAREHOUSE_ID`),
  INDEX `INV_PRODUCT_IX`(`PRODUCT_ID` ASC),
  INDEX `INV_WAREHOUSE_IX`(`WAREHOUSE_ID` ASC)
);

-- ----------------------------
-- Table structure for LOGON
-- ----------------------------
DROP TABLE IF EXISTS `LOGON`;
CREATE TABLE `LOGON` (
  `LOGON_ID` decimal(65, 30) NOT NULL,
  `CUSTOMER_ID` decimal(65, 30) NOT NULL,
  `LOGON_DATE` datetime NULL
);

-- ----------------------------
-- Table structure for ORDER_ITEMS
-- ----------------------------
DROP TABLE IF EXISTS `ORDER_ITEMS`;
CREATE TABLE `ORDER_ITEMS` (
  `ORDER_ID` decimal(12, 0) NOT NULL,
  `LINE_ITEM_ID` decimal(3, 0) NOT NULL,
  `PRODUCT_ID` decimal(6, 0) NOT NULL,
  `UNIT_PRICE` decimal(8, 2) NULL,
  `QUANTITY` decimal(8, 0) NULL,
  `DISPATCH_DATE` datetime NULL,
  `RETURN_DATE` datetime NULL,
  `GIFT_WRAP` varchar(20) NULL,
  `CONDITION` varchar(20) NULL,
  `SUPPLIER_ID` decimal(6, 0) NULL,
  `ESTIMATED_DELIVERY` datetime NULL,
  PRIMARY KEY (`ORDER_ID`, `LINE_ITEM_ID`),
  INDEX `ITEM_ORDER_IX`(`ORDER_ID` ASC),
  INDEX `ITEM_PRODUCT_IX`(`PRODUCT_ID` ASC)
);

-- ----------------------------
-- Table structure for ORDERENTRY_METADATA
-- ----------------------------
DROP TABLE IF EXISTS `ORDERENTRY_METADATA`;
CREATE TABLE `ORDERENTRY_METADATA` (
  `METADATA_KEY` varchar(30) NULL,
  `METADATA_VALUE` varchar(30) NULL
);

-- ----------------------------
-- Table structure for ORDERS
-- ----------------------------
DROP TABLE IF EXISTS `ORDERS`;
CREATE TABLE `ORDERS` (
  `ORDER_ID` decimal(12, 0) NOT NULL,
  `ORDER_DATE` datetime NOT NULL,
  `ORDER_MODE` varchar(8) NULL,
  `CUSTOMER_ID` decimal(12, 0) NOT NULL,
  `ORDER_STATUS` decimal(2, 0) NULL,
  `ORDER_TOTAL` decimal(8, 2) NULL,
  `SALES_REP_ID` decimal(6, 0) NULL,
  `PROMOTION_ID` decimal(6, 0) NULL,
  `WAREHOUSE_ID` decimal(6, 0) NULL,
  `DELIVERY_TYPE` varchar(15) NULL,
  `COST_OF_DELIVERY` decimal(6, 0) NULL,
  `WAIT_TILL_ALL_AVAILABLE` varchar(15) NULL,
  `DELIVERY_ADDRESS_ID` decimal(12, 0) NULL,
  `CUSTOMER_CLASS` varchar(30) NULL,
  `CARD_ID` decimal(12, 0) NULL,
  `INVOICE_ADDRESS_ID` decimal(12, 0) NULL,
  PRIMARY KEY (`ORDER_ID`),
  INDEX `ORD_CUSTOMER_IX`(`CUSTOMER_ID` ASC),
  INDEX `ORD_ORDER_DATE_IX`(`ORDER_DATE` ASC),
  INDEX `ORD_SALES_REP_IX`(`SALES_REP_ID` ASC),
  INDEX `ORD_WAREHOUSE_IX`(`WAREHOUSE_ID` ASC, `ORDER_STATUS` ASC)
);

-- ----------------------------
-- Table structure for PRODUCT_DESCRIPTIONS
-- ----------------------------
DROP TABLE IF EXISTS `PRODUCT_DESCRIPTIONS`;
CREATE TABLE `PRODUCT_DESCRIPTIONS` (
  `PRODUCT_ID` decimal(6, 0) NOT NULL,
  `LANGUAGE_ID` varchar(3) NOT NULL,
  `TRANSLATED_NAME` varchar(50) NOT NULL,
  `TRANSLATED_DESCRIPTION` text NOT NULL,
  PRIMARY KEY (`PRODUCT_ID`, `LANGUAGE_ID`),
  UNIQUE INDEX `PRD_DESC_PK`(`PRODUCT_ID` ASC, `LANGUAGE_ID` ASC),
  INDEX `PROD_NAME_IX`(`TRANSLATED_NAME` ASC)
);

-- ----------------------------
-- Table structure for PRODUCT_INFORMATION
-- ----------------------------
DROP TABLE IF EXISTS `PRODUCT_INFORMATION`;
CREATE TABLE `PRODUCT_INFORMATION` (
  `PRODUCT_ID` decimal(6, 0) NOT NULL,
  `PRODUCT_NAME` varchar(50) NOT NULL,
  `PRODUCT_DESCRIPTION` text NULL,
  `CATEGORY_ID` decimal(4, 0) NOT NULL,
  `WEIGHT_CLASS` decimal(1, 0) NULL,
  `WARRANTY_PERIOD` longtext NULL,
  `SUPPLIER_ID` decimal(6, 0) NULL,
  `PRODUCT_STATUS` varchar(20) NULL,
  `LIST_PRICE` decimal(8, 2) NULL,
  `MIN_PRICE` decimal(8, 2) NULL,
  `CATALOG_URL` varchar(50) NULL,
  PRIMARY KEY (`PRODUCT_ID`),
  INDEX `PROD_CATEGORY_IX`(`CATEGORY_ID` ASC),
  INDEX `PROD_SUPPLIER_IX`(`SUPPLIER_ID` ASC)
);

-- ----------------------------
-- Table structure for TCUSTMER
-- ----------------------------
DROP TABLE IF EXISTS `TCUSTMER`;
CREATE TABLE `TCUSTMER` (
  `CUST_CODE` varchar(4) NOT NULL,
  `NAME` varchar(30) NULL,
  `CITY` varchar(20) NULL,
  `STATE` char(2) NULL,
  PRIMARY KEY (`CUST_CODE`)
);

-- ----------------------------
-- Table structure for TCUSTORD
-- ----------------------------
DROP TABLE IF EXISTS `TCUSTORD`;
CREATE TABLE `TCUSTORD` (
  `CUST_CODE` varchar(4) NOT NULL,
  `ORDER_DATE` datetime NOT NULL,
  `PRODUCT_CODE` varchar(8) NOT NULL,
  `ORDER_ID` decimal(65, 30) NOT NULL,
  `PRODUCT_PRICE` decimal(8, 2) NULL,
  `PRODUCT_AMOUNT` decimal(6, 0) NULL,
  `TRANSACTION_ID` decimal(65, 30) NULL,
  PRIMARY KEY (`CUST_CODE`, `ORDER_DATE`, `PRODUCT_CODE`, `ORDER_ID`)
);

-- ----------------------------
-- Table structure for TSRSLOB
-- ----------------------------
DROP TABLE IF EXISTS `TSRSLOB`;
CREATE TABLE `TSRSLOB` (
  `LOB_RECORD_KEY` decimal(65, 30) NOT NULL,
  `LOB1_CLOB` longtext NULL,
  `LOB2_BLOB` longblob NULL,
  PRIMARY KEY (`LOB_RECORD_KEY`)
);

-- ----------------------------
-- Table structure for TTRGVAR
-- ----------------------------
DROP TABLE IF EXISTS `TTRGVAR`;
CREATE TABLE `TTRGVAR` (
  `LOB_RECORD_KEY` decimal(65, 30) NOT NULL,
  `LOB1_VCHAR0` text NULL,
  `LOB1_VCHAR1` text NULL,
  `LOB1_VCHAR2` text NULL,
  `LOB1_VCHAR3` varchar(200) NULL,
  `LOB1_VCHAR4` text NULL,
  `LOB1_VCHAR5` text NULL,
  `LOB1_VCHAR6` varchar(100) NULL,
  `LOB1_VCHAR7` varchar(250) NULL,
  `LOB1_VCHAR8` text NULL,
  `LOB1_VCHAR9` text NULL,
  `LOB2_VCHAR0` text NULL,
  `LOB2_VCHAR1` text NULL,
  `LOB2_VCHAR2` text NULL,
  `LOB2_VCHAR3` text NULL,
  `LOB2_VCHAR4` text NULL,
  `LOB2_VCHAR5` text NULL,
  `LOB2_VCHAR6` text NULL,
  `LOB2_VCHAR7` varchar(150) NULL,
  `LOB2_VCHAR8` text NULL,
  `LOB2_VCHAR9` varchar(50) NULL,
  PRIMARY KEY (`LOB_RECORD_KEY`)
);

-- ----------------------------
-- Table structure for WAREHOUSES
-- ----------------------------
DROP TABLE IF EXISTS `WAREHOUSES`;
CREATE TABLE `WAREHOUSES` (
  `WAREHOUSE_ID` decimal(6, 0) NOT NULL,
  `WAREHOUSE_NAME` varchar(35) NULL,
  `LOCATION_ID` decimal(4, 0) NULL,
  PRIMARY KEY (`WAREHOUSE_ID`),
  INDEX `WHS_LOCATION_IX`(`LOCATION_ID` ASC)
);

SET FOREIGN_KEY_CHECKS = 1;

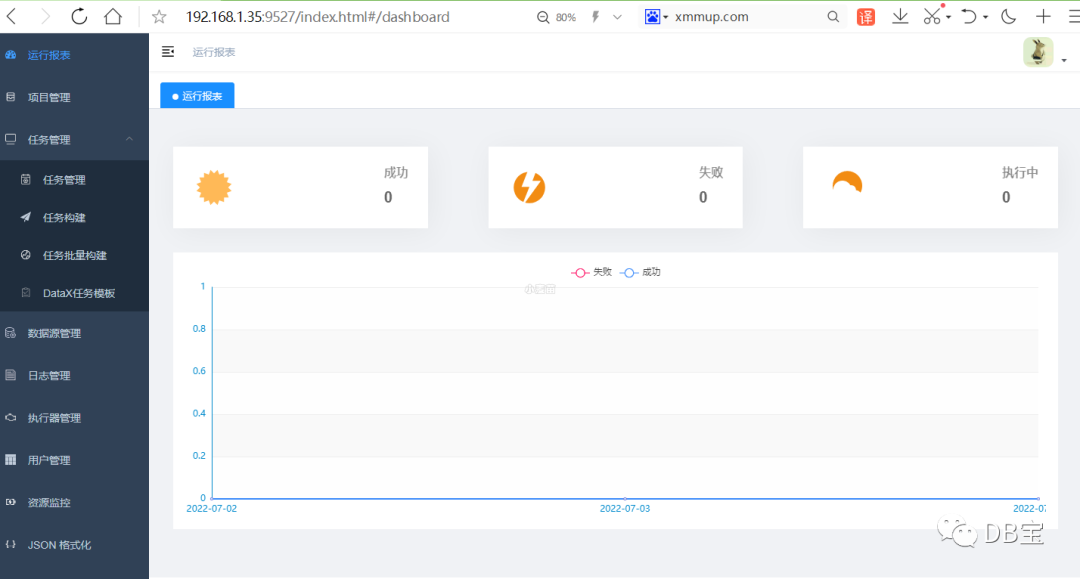

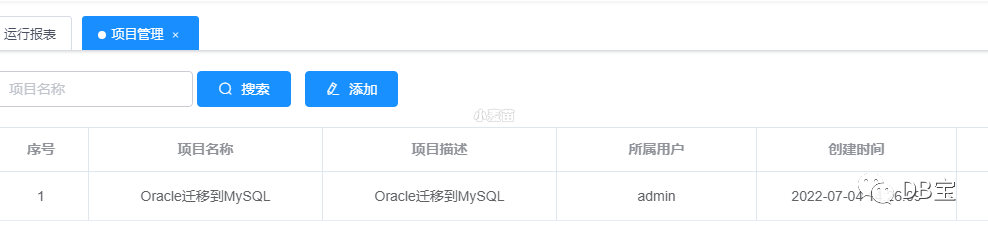

DataX-Web configuration

1. Create a project

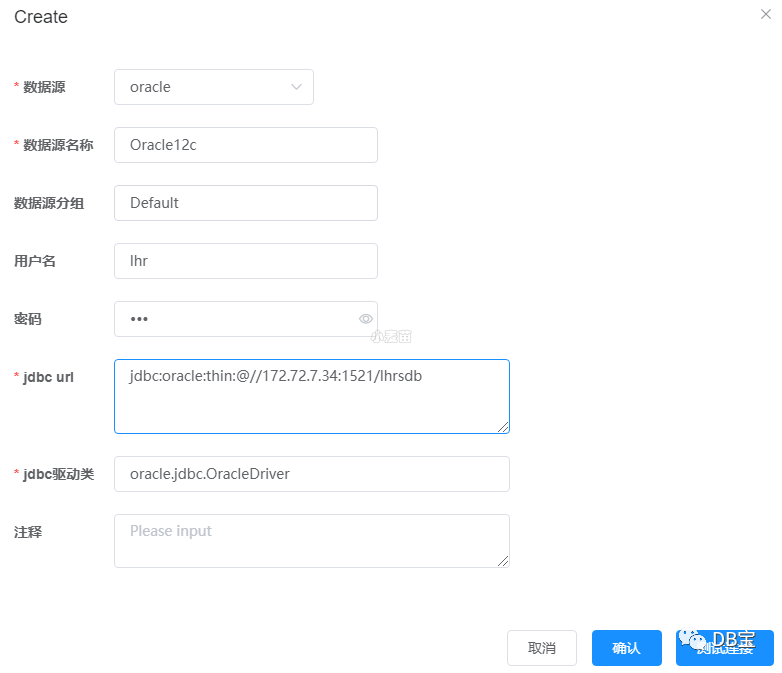

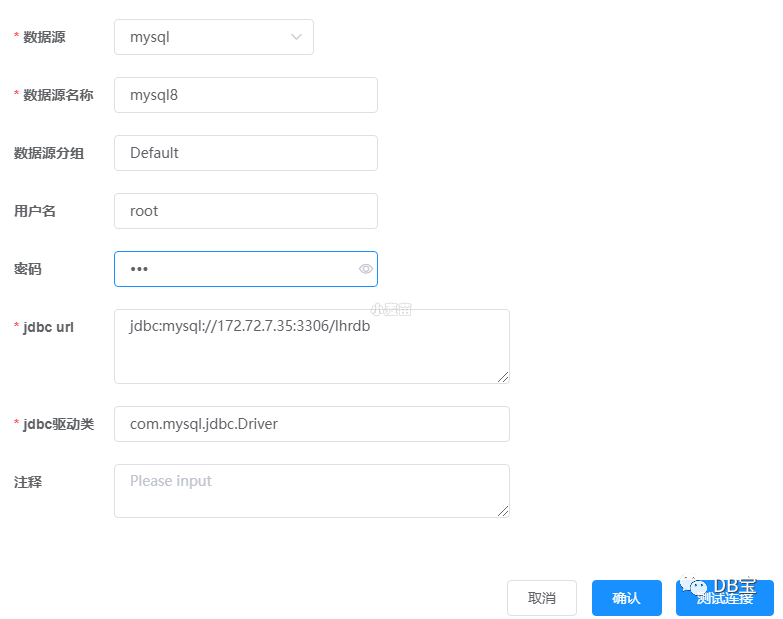

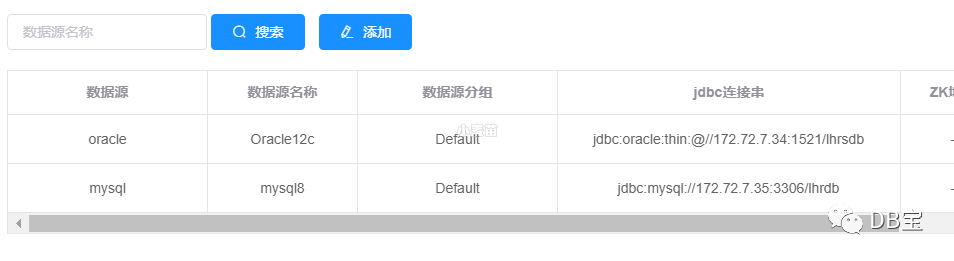

2. Add the Oracle and MySQL data sources and make sure the connection tests pass

jdbc:oracle:thin:@//172.72.7.34:1521/lhrsdb
jdbc:mysql://172.72.7.35:3306/lhrdb

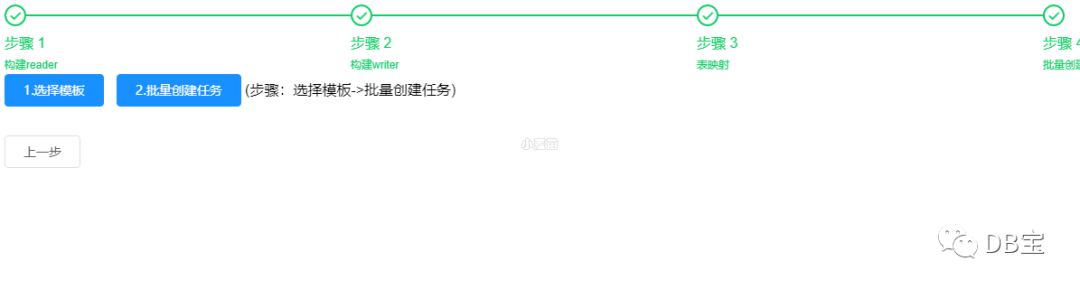

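3. Create a DataX task template

4. Build the tasks in batch

Note: the regular task builder creates a migration for only one table at a time, so we need to use the batch build instead.

Next, select the job template, and finally create the tasks in batch.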

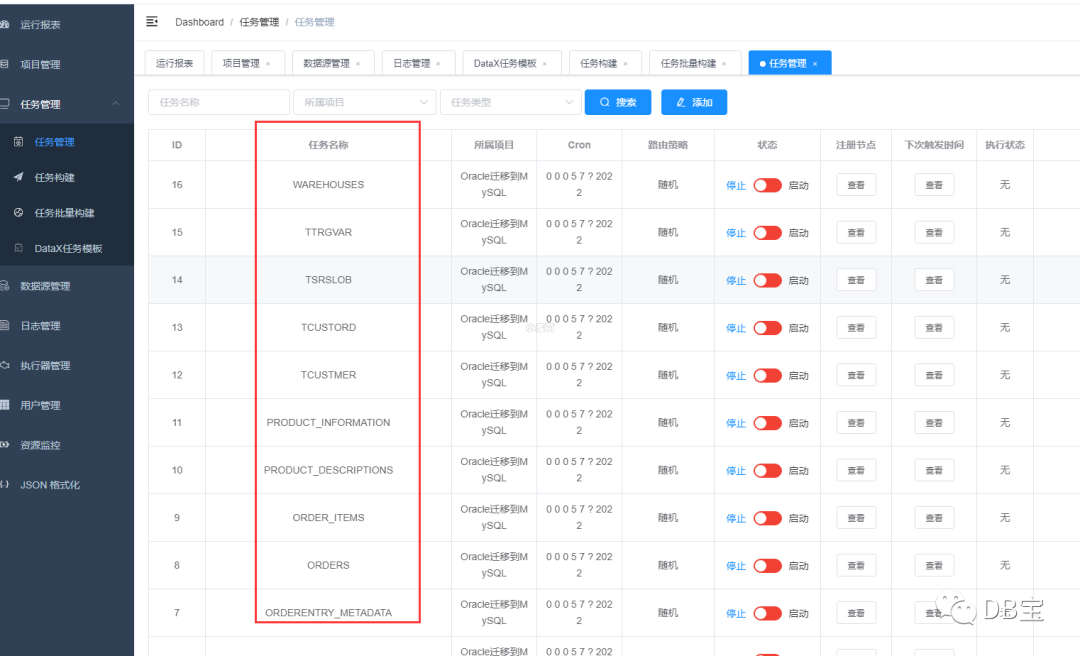

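After creation, click Task Management:

As you can see, there is essentially one task per table. After the tasks are built, scheduling is not enabled by default.

We can update the table in the backend database to enable them all at once:

mysql -uroot -plhr -D datax
update job_info t set t.job_cron='0 18 15 4 7 ? 2022';
update job_info t set t.trigger_status=1;

# Logs
tail -f /usr/local/datax-web-2.1.2/modules/datax-executor/bin/console.out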
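
The value `0 18 15 4 7 ? 2022` above follows the seven-field Quartz cron layout (second, minute, hour, day of month, month, day of week, year), so it fires once, at 15:18:00 on 4 July 2022. As a minimal sketch, the expression can be derived from a timestamp instead of being written by hand; `one_shot_cron` is a hypothetical helper, and it assumes the input is always formatted as `YYYY-MM-DD HH:MM:SS`:

```shell
#!/bin/sh
# Hypothetical helper: convert "YYYY-MM-DD HH:MM:SS" into a one-shot
# Quartz-style cron expression: second minute hour day-of-month month ? year
one_shot_cron() {
  ts="$1"
  date_part=${ts% *}      # "YYYY-MM-DD"
  time_part=${ts#* }      # "HH:MM:SS"
  year=${date_part%%-*}
  rest=${date_part#*-}    # "MM-DD"
  month=${rest%%-*}
  day=${rest#*-}
  hour=${time_part%%:*}
  rest=${time_part#*:}    # "MM:SS"
  minute=${rest%%:*}
  second=${rest#*:}
  # ${var#0} drops a single leading zero, so "07" becomes "7" and "00" becomes "0".
  printf '%s %s %s %s %s ? %s\n' \
    "${second#0}" "${minute#0}" "${hour#0}" "${day#0}" "${month#0}" "$year"
}
```

For example, `one_shot_cron '2022-07-04 15:18:00'` prints `0 18 15 4 7 ? 2022`. Note that the two `update` statements above have no `WHERE` clause, so they reschedule and enable every job in `job_info`.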

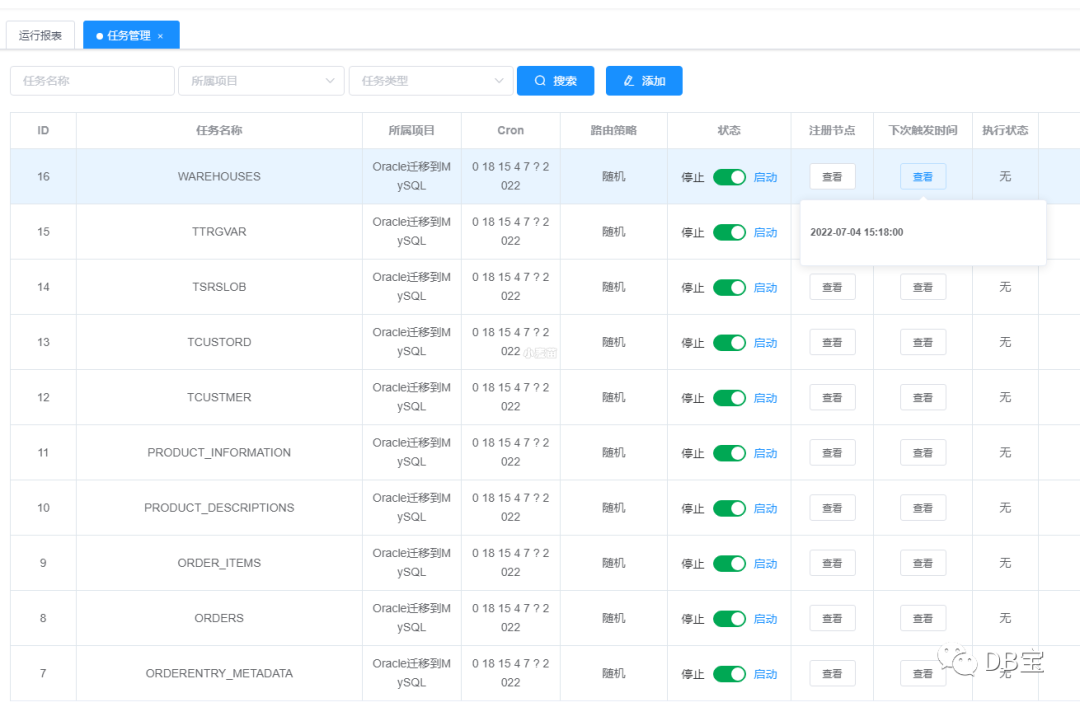

更新完成后,查看界面:

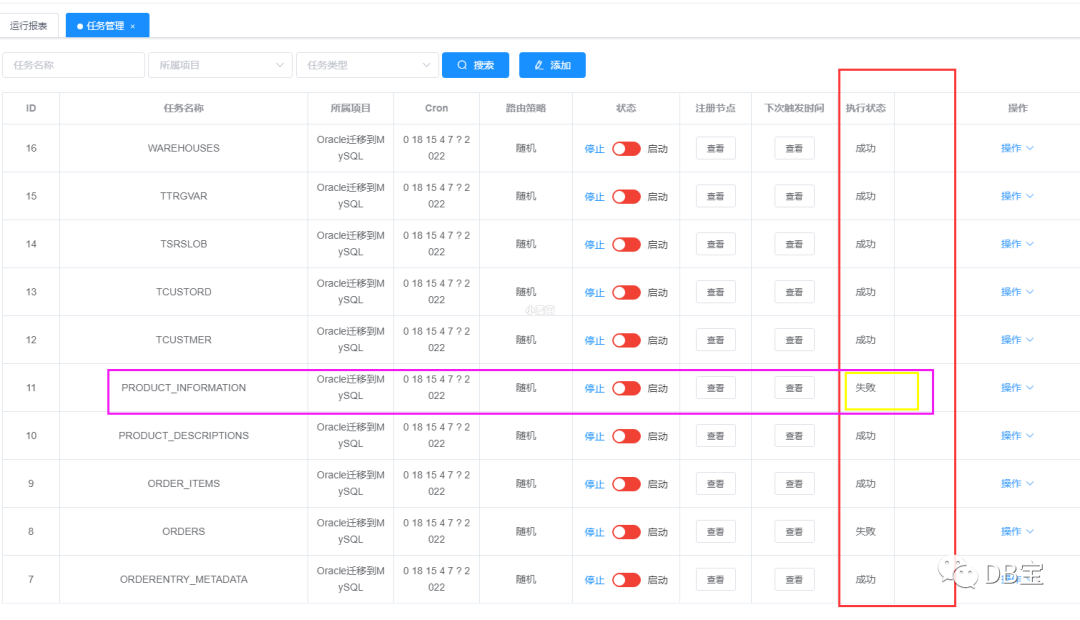

等到15:18分后,刷新一下界面,如下:

发现,除了ORDERS表和PRODUCT_INFORMATION表后,其它都迁移成功了。
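
When a job fails, DataX reports the cause as a `DataXException` whose message contains a `Code:[...]` tag. The sketch below lists the distinct error codes in a saved log file as a quick first check; `datax_error_codes` is a hypothetical helper, and the path of the log file to scan is an assumption you would adapt to your own installation:

```shell
#!/bin/sh
# Hypothetical helper: print each distinct DataX error code found in a log file.
# Usage: datax_error_codes /path/to/job.log
datax_error_codes() {
  # -o prints only the matching part, e.g. Code:[DBUtilErrorCode-12]
  grep -o 'Code:\[[^]]*]' "$1" | sort -u
}
```

An empty result means no `Code:[...]` tag was found in that file; the full exception message and stack trace around each code still need to be read in the log itself.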

PRODUCT_INFORMATION表错误解决

日志内容:

12022-07-04 15:18:00 [JobThread.run-130] <br>----------- datax-web job execute start -----------<br>----------- Param:

22022-07-04 15:18:00 [BuildCommand.buildDataXParam-100] ------------------Command parameters:

32022-07-04 15:18:01 [ExecutorJobHandler.execute-57] ------------------DataX process id: 4782

42022-07-04 15:18:01 [AnalysisStatistics.analysisStatisticsLog-53]

52022-07-04 15:18:01 [AnalysisStatistics.analysisStatisticsLog-53] DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

62022-07-04 15:18:01 [AnalysisStatistics.analysisStatisticsLog-53] Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

72022-07-04 15:18:01 [AnalysisStatistics.analysisStatisticsLog-53]

82022-07-04 15:18:01 [AnalysisStatistics.analysisStatisticsLog-53]

92022-07-04 15:18:01 [ProcessCallbackThread.callbackLog-186] <br>----------- datax-web job callback finish.

102022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.444 [main] INFO MessageSource - JVM TimeZone: GMT+08:00, Locale: zh_CN

112022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.449 [main] INFO MessageSource - use Locale: zh_CN timeZone: sun.util.calendar.ZoneInfo[id="GMT+08:00",offset=28800000,dstSavings=0,useDaylight=false,transitions=0,lastRule=null]

122022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.684 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

132022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.693 [main] INFO Engine - the machine info =>

142022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]

152022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] osInfo: Red Hat, Inc. 1.8 25.332-b09

162022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] jvmInfo: Linux amd64 3.10.0-1127.10.1.el7.x86_64

172022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] cpu num: 16

182022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]

192022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] totalPhysicalMemory: -0.00G

202022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] freePhysicalMemory: -0.00G

212022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] maxFileDescriptorCount: -1

222022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] currentOpenFileDescriptorCount: -1

232022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]

242022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] GC Names [PS MarkSweep, PS Scavenge]

252022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]

262022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] MEMORY_NAME | allocation_size | init_size

272022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] PS Eden Space | 256.00MB | 256.00MB

282022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] Code Cache | 240.00MB | 2.44MB

292022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] Compressed Class Space | 1,024.00MB | 0.00MB

302022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] PS Survivor Space | 42.50MB | 42.50MB

312022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] PS Old Gen | 683.00MB | 683.00MB

322022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] Metaspace | -0.00MB | 0.00MB

332022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]

342022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]

352022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.724 [main] INFO Engine -

362022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] {

372022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "content":[

382022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] {

392022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "reader":{

402022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "parameter":{

412022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "password":"***",

422022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "column":[

432022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"PRODUCT_ID\"",

442022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"PRODUCT_NAME\"",

452022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"PRODUCT_DESCRIPTION\"",

462022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"CATEGORY_ID\"",

472022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"WEIGHT_CLASS\"",

482022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"WARRANTY_PERIOD\"",

492022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"SUPPLIER_ID\"",

502022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"PRODUCT_STATUS\"",

512022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"LIST_PRICE\"",

522022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"MIN_PRICE\"",

532022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"CATALOG_URL\""

542022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ],

552022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "connection":[

562022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] {

572022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "jdbcUrl":[

582022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "jdbc:oracle:thin:@//172.72.7.34:1521/lhrsdb"

592022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ],

602022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "table":[

612022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "PRODUCT_INFORMATION"

622022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ]

632022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

642022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ],

652022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "splitPk":"",

662022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "username":"lhr"

672022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] },

682022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "name":"oraclereader"

692022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] },

702022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "writer":{

712022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "parameter":{

722022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "password":"***",

732022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "column":[

742022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`PRODUCT_ID`",

752022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`PRODUCT_NAME`",

762022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`PRODUCT_DESCRIPTION`",

772022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`CATEGORY_ID`",

782022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`WEIGHT_CLASS`",

792022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`WARRANTY_PERIOD`",

802022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`SUPPLIER_ID`",

812022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`PRODUCT_STATUS`",

822022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`LIST_PRICE`",

832022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`MIN_PRICE`",

842022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`CATALOG_URL`"

852022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ],

862022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "connection":[

872022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] {

882022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "jdbcUrl":"jdbc:mysql://172.72.7.35:3306/lhrdb",

892022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "table":[

902022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "PRODUCT_INFORMATION"

912022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ]

922022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

932022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ],

942022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "username":"root"

952022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] },

962022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "name":"mysqlwriter"

972022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

982022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

992022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ],

1002022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "setting":{

1012022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "errorLimit":{

1022022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "record":0,

1032022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "percentage":0.02

1042022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] },

1052022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "speed":{

1062022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "byte":1048576,

1072022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "channel":3

1082022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

1092022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

1102022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

1112022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]

1122022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.820 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

1132022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.823 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

1142022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.823 [main] INFO JobContainer - DataX jobContainer starts job.

1152022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.825 [main] INFO JobContainer - Set jobId = 0

1162022-07-04 15:18:03 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:03.705 [job-0] INFO OriginalConfPretreatmentUtil - Available jdbcUrl:jdbc:oracle:thin:@//172.72.7.34:1521/lhrsdb.

1172022-07-04 15:18:03 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:03.882 [job-0] INFO OriginalConfPretreatmentUtil - table:[PRODUCT_INFORMATION] has columns:[PRODUCT_ID,PRODUCT_NAME,PRODUCT_DESCRIPTION,CATEGORY_ID,WEIGHT_CLASS,WARRANTY_PERIOD,SUPPLIER_ID,PRODUCT_STATUS,LIST_PRICE,MIN_PRICE,CATALOG_URL].

1182022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.236 [job-0] INFO OriginalConfPretreatmentUtil - table:[PRODUCT_INFORMATION] all columns:[

1192022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] PRODUCT_ID,PRODUCT_NAME,PRODUCT_DESCRIPTION,CATEGORY_ID,WEIGHT_CLASS,WARRANTY_PERIOD,SUPPLIER_ID,PRODUCT_STATUS,LIST_PRICE,MIN_PRICE,CATALOG_URL

1202022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] ].

1212022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.372 [job-0] INFO OriginalConfPretreatmentUtil - Write data [

1222022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] INSERT INTO %s (`PRODUCT_ID`,`PRODUCT_NAME`,`PRODUCT_DESCRIPTION`,`CATEGORY_ID`,`WEIGHT_CLASS`,`WARRANTY_PERIOD`,`SUPPLIER_ID`,`PRODUCT_STATUS`,`LIST_PRICE`,`MIN_PRICE`,`CATALOG_URL`) VALUES(?,?,?,?,?,?,?,?,?,?,?)

1232022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] ], which jdbcUrl like:[jdbc:mysql://172.72.7.35:3306/lhrdb?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&rewriteBatchedStatements=true&tinyInt1isBit=false]

1242022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.373 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.373 [job-0] INFO JobContainer - DataX Reader.Job [oraclereader] do prepare work .

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.391 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do prepare work .

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.392 [job-0] INFO JobContainer - jobContainer starts to do split ...

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.392 [job-0] INFO JobContainer - Job set Max-Byte-Speed to 1048576 bytes.

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.414 [job-0] INFO JobContainer - DataX Reader.Job [oraclereader] splits to [1] tasks.

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.415 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] splits to [1] tasks.

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.450 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.457 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.460 [job-0] INFO JobContainer - Running by standalone Mode.

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.483 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.488 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to 1048576.

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.489 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.542 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.657 [0-0-0-reader] INFO CommonRdbmsReader$Task - Begin to read record by Sql: [select "PRODUCT_ID","PRODUCT_NAME","PRODUCT_DESCRIPTION","CATEGORY_ID","WEIGHT_CLASS","WARRANTY_PERIOD","SUPPLIER_ID","PRODUCT_STATUS","LIST_PRICE","MIN_PRICE","CATALOG_URL" from PRODUCT_INFORMATION

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] ] jdbcUrl:[jdbc:oracle:thin:@//172.72.7.34:1521/lhrsdb].

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.908 [0-0-0-reader] ERROR StdoutPluginCollector -

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] com.alibaba.datax.common.exception.DataXException: Code:[DBUtilErrorCode-12], Description:[不支持的数据库类型. 请注意查看 DataX 已经支持的数据库类型以及数据库版本.]. - 您的配置文件中的列配置信息有误. 因为DataX 不支持数据库读取这种字段类型. 字段名:[WARRANTY_PERIOD], 字段名称:[-103], 字段Java类型:[oracle.sql.INTERVALYM]. 请尝试使用数据库函数将其转换datax支持的类型 或者不同步该字段 .

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:30) ~[datax-common-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Task.buildRecord(CommonRdbmsReader.java:329) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Task.transportOneRecord(CommonRdbmsReader.java:237) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Task.startRead(CommonRdbmsReader.java:209) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.reader.oraclereader.OracleReader$Task.startRead(OracleReader.java:110) [oraclereader-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.taskgroup.runner.ReaderRunner.run(ReaderRunner.java:57) [datax-core-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at java.lang.Thread.run(Thread.java:750) [na:1.8.0_332]

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.913 [0-0-0-reader] ERROR StdoutPluginCollector - 脏数据:

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] {"exception":"Code:[DBUtilErrorCode-12], Description:[不支持的数据库类型. 请注意查看 DataX 已经支持的数据库类型以及数据库版本.]. - 您的配置文件中的列配置信息有误. 因为DataX 不支持数据库读取这种字段类型. 字段名:[WARRANTY_PERIOD], 字段名称:[-103], 字段Java类型:[oracle.sql.INTERVALYM]. 请尝试使用数据库函数将其转换datax支持的类型 或者不同步该字段 .","record":[{"byteSize":3,"index":0,"rawData":"116","type":"DOUBLE"},{"byteSize":33,"index":1,"rawData":"GI1UIwLTmiYIq58xRuA2R1zol 6 mAeDR","type":"STRING"},{"byteSize":43,"index":2,"rawData":"Qs qeVNnksYSRmWmyKAOttUaVoDMeM zFnyczzTnyME","type":"STRING"},{"byteSize":2,"index":3,"rawData":"53","type":"DOUBLE"},{"byteSize":1,"index":4,"rawData":"7","type":"DOUBLE"}],"type":"reader"}

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.918 [0-0-0-reader] ERROR ReaderRunner - Reader runner Received Exceptions:

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] com.alibaba.datax.common.exception.DataXException: Code:[DBUtilErrorCode-07], Description:[读取数据库数据失败. 请检查您的配置的 column/table/where/querySql或者向 DBA 寻求帮助.]. - 执行的SQL为: select "PRODUCT_ID","PRODUCT_NAME","PRODUCT_DESCRIPTION","CATEGORY_ID","WEIGHT_CLASS","WARRANTY_PERIOD","SUPPLIER_ID","PRODUCT_STATUS","LIST_PRICE","MIN_PRICE","CATALOG_URL" from PRODUCT_INFORMATION 具体错误信息为:com.alibaba.datax.common.exception.DataXException: Code:[DBUtilErrorCode-12], Description:[不支持的数据库类型. 请注意查看 DataX 已经支持的数据库类型以及数据库版本.]. - 您的配置文件中的列配置信息有误. 因为DataX 不支持数据库读取这种字段类型. 字段名:[WARRANTY_PERIOD], 字段名称:[-103], 字段Java类型:[oracle.sql.INTERVALYM]. 请尝试使用数据库函数将其转换datax支持的类型 或者不同步该字段 .

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:30) ~[datax-common-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.util.RdbmsException.asQueryException(RdbmsException.java:93) ~[plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Task.startRead(CommonRdbmsReader.java:220) ~[plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.reader.oraclereader.OracleReader$Task.startRead(OracleReader.java:110) ~[oraclereader-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.taskgroup.runner.ReaderRunner.run(ReaderRunner.java:57) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at java.lang.Thread.run(Thread.java:750) [na:1.8.0_332]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:16.497 [job-0] INFO StandAloneJobContainerCommunicator - Total 1 records, 82 bytes | Speed 8B/s, 0 records/s | Error 1 records, 82 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 0.00%

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:16.498 [job-0] ERROR JobContainer - 运行scheduler 模式[standalone]出错.

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:16.498 [job-0] ERROR JobContainer - Exception when job run

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] com.alibaba.datax.common.exception.DataXException: Code:[Framework-14], Description:[DataX传输脏数据超过用户预期,该错误通常是由于源端数据存在较多业务脏数据导致,请仔细检查DataX汇报的脏数据日志信息, 或者您可以适当调大脏数据阈值 .]. - 脏数据条数检查不通过,限制是[0]条,但实际上捕获了[1]条.

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:30) ~[datax-common-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.util.ErrorRecordChecker.checkRecordLimit(ErrorRecordChecker.java:58) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.job.scheduler.AbstractScheduler.schedule(AbstractScheduler.java:89) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.job.JobContainer.schedule(JobContainer.java:535) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.job.JobContainer.start(JobContainer.java:119) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.Engine.start(Engine.java:93) [datax-core-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.Engine.entry(Engine.java:175) [datax-core-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.Engine.main(Engine.java:208) [datax-core-0.0.1-SNAPSHOT.jar:na]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:16.499 [job-0] INFO StandAloneJobContainerCommunicator - Total 1 records, 82 bytes | Speed 82B/s, 1 records/s | Error 1 records, 82 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 0.00%

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:16.501 [job-0] ERROR Engine -

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] 经DataX智能分析,该任务最可能的错误原因是:

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] com.alibaba.datax.common.exception.DataXException: Code:[Framework-14], Description:[DataX传输脏数据超过用户预期,该错误通常是由于源端数据存在较多业务脏数据导致,请仔细检查DataX汇报的脏数据日志信息, 或者您可以适当调大脏数据阈值 .]. - 脏数据条数检查不通过,限制是[0]条,但实际上捕获了[1]条.

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:30)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.util.ErrorRecordChecker.checkRecordLimit(ErrorRecordChecker.java:58)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.job.scheduler.AbstractScheduler.schedule(AbstractScheduler.java:89)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.job.JobContainer.schedule(JobContainer.java:535)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.job.JobContainer.start(JobContainer.java:119)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.Engine.start(Engine.java:93)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.Engine.entry(Engine.java:175)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.Engine.main(Engine.java:208)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] Mon Jul 04 15:18:04 GMT+08:00 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] Mon Jul 04 15:18:06 GMT+08:00 2022 WARN: Caught while disconnecting...

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] EXCEPTION STACK TRACE:

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] ** BEGIN NESTED EXCEPTION **

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] javax.net.ssl.SSLException

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] MESSAGE: closing inbound before receiving peer's close_notify

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] STACKTRACE:

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] javax.net.ssl.SSLException: closing inbound before receiving peer's close_notify

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at sun.security.ssl.SSLSocketImpl.shutdownInput(SSLSocketImpl.java:740)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at sun.security.ssl.SSLSocketImpl.shutdownInput(SSLSocketImpl.java:719)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.MysqlIO.quit(MysqlIO.java:2249)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.ConnectionImpl.realClose(ConnectionImpl.java:4232)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.ConnectionImpl.close(ConnectionImpl.java:1472)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.util.DBUtil.closeDBResources(DBUtil.java:492)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.util.DBUtil.getTableColumnsByConn(DBUtil.java:526)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.writer.util.OriginalConfPretreatmentUtil.dealColumnConf(OriginalConfPretreatmentUtil.java:105)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.writer.util.OriginalConfPretreatmentUtil.dealColumnConf(OriginalConfPretreatmentUtil.java:140)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.writer.util.OriginalConfPretreatmentUtil.doPretreatment(OriginalConfPretreatmentUtil.java:35)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.writer.CommonRdbmsWriter$Job.init(CommonRdbmsWriter.java:41)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.writer.mysqlwriter.MysqlWriter$Job.init(MysqlWriter.java:31)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.job.JobContainer.initJobWriter(JobContainer.java:704)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.job.JobContainer.init(JobContainer.java:304)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.job.JobContainer.start(JobContainer.java:113)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.Engine.start(Engine.java:93)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.Engine.entry(Engine.java:175)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.Engine.main(Engine.java:208)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] ** END NESTED EXCEPTION **

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] Mon Jul 04 15:18:06 GMT+08:00 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] Mon Jul 04 15:18:06 GMT+08:00 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] Mon Jul 04 15:18:06 GMT+08:00 2022 WARN: Caught while disconnecting...

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] EXCEPTION STACK TRACE:

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] ** BEGIN NESTED EXCEPTION **

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] javax.net.ssl.SSLException

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] MESSAGE: closing inbound before receiving peer's close_notify

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] STACKTRACE:

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] javax.net.ssl.SSLException: closing inbound before receiving peer's close_notify

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at sun.security.ssl.SSLSocketImpl.shutdownInput(SSLSocketImpl.java:740)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at sun.security.ssl.SSLSocketImpl.shutdownInput(SSLSocketImpl.java:719)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.MysqlIO.quit(MysqlIO.java:2249)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.ConnectionImpl.realClose(ConnectionImpl.java:4232)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.ConnectionImpl.close(ConnectionImpl.java:1472)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.util.DBUtil.closeDBResources(DBUtil.java:492)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.writer.CommonRdbmsWriter$Task.prepare(CommonRdbmsWriter.java:259)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.writer.mysqlwriter.MysqlWriter$Task.prepare(MysqlWriter.java:73)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.taskgroup.runner.WriterRunner.run(WriterRunner.java:50)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at java.lang.Thread.run(Thread.java:750)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] ** END NESTED EXCEPTION **

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] Mon Jul 04 15:18:06 GMT+08:00 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] Exception in thread "taskGroup-0" com.alibaba.datax.common.exception.DataXException: Code:[DBUtilErrorCode-07], Description:[读取数据库数据失败. 请检查您的配置的 column/table/where/querySql或者向 DBA 寻求帮助.]. - 执行的SQL为: select "PRODUCT_ID","PRODUCT_NAME","PRODUCT_DESCRIPTION","CATEGORY_ID","WEIGHT_CLASS","WARRANTY_PERIOD","SUPPLIER_ID","PRODUCT_STATUS","LIST_PRICE","MIN_PRICE","CATALOG_URL" from PRODUCT_INFORMATION 具体错误信息为:com.alibaba.datax.common.exception.DataXException: Code:[DBUtilErrorCode-12], Description:[不支持的数据库类型. 请注意查看 DataX 已经支持的数据库类型以及数据库版本.]. - 您的配置文件中的列配置信息有误. 因为DataX 不支持数据库读取这种字段类型. 字段名:[WARRANTY_PERIOD], 字段名称:[-103], 字段Java类型:[oracle.sql.INTERVALYM]. 请尝试使用数据库函数将其转换datax支持的类型 或者不同步该字段 .

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:30)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.util.RdbmsException.asQueryException(RdbmsException.java:93)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Task.startRead(CommonRdbmsReader.java:220)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.reader.oraclereader.OracleReader$Task.startRead(OracleReader.java:110)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.taskgroup.runner.ReaderRunner.run(ReaderRunner.java:57)

2022-07-04 15:18:16 [AnalysisStatistics.analysisStatisticsLog-53] at java.lang.Thread.run(Thread.java:750)

2022-07-04 15:18:16 [JobThread.run-165] <br>----------- datax-web job execute end(finish) -----------<br>----------- ReturnT:ReturnT [code=500, msg=command exit value(1) is failed, content=null]

2022-07-04 15:18:16 [TriggerCallbackThread.callbackLog-186] <br>----------- datax-web job callback finish.

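Reading the log carefully, the core error is the following. DataX cannot read the column WARRANTY_PERIOD because its Java type, oracle.sql.INTERVALYM, is not supported; the message itself suggests converting the column with a database function or not syncing it: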

[AnalysisStatistics.analysisStatisticsLog-53] com.alibaba.datax.common.exception.DataXException: Code:[DBUtilErrorCode-12], Description:[不支持的数据库类型. 请注意查看 DataX 已经支持的数据库类型以及数据库版本.]. - 您的配置文件中的列配置信息有误. 因为DataX 不支持数据库读取这种字段类型. 字段名:[WARRANTY_PERIOD], 字段名称:[-103], 字段Java类型:[oracle.sql.INTERVALYM]. 请尝试使用数据库函数将其转换datax支持的类型 或者不同步该字段 .

Check the data type of this column on the Oracle side and on the MySQL side:

PRODUCT_INFORMATION.WARRANTY_PERIOD

Oracle: INTERVAL YEAR(2) TO MONTH

MySQL: longtext

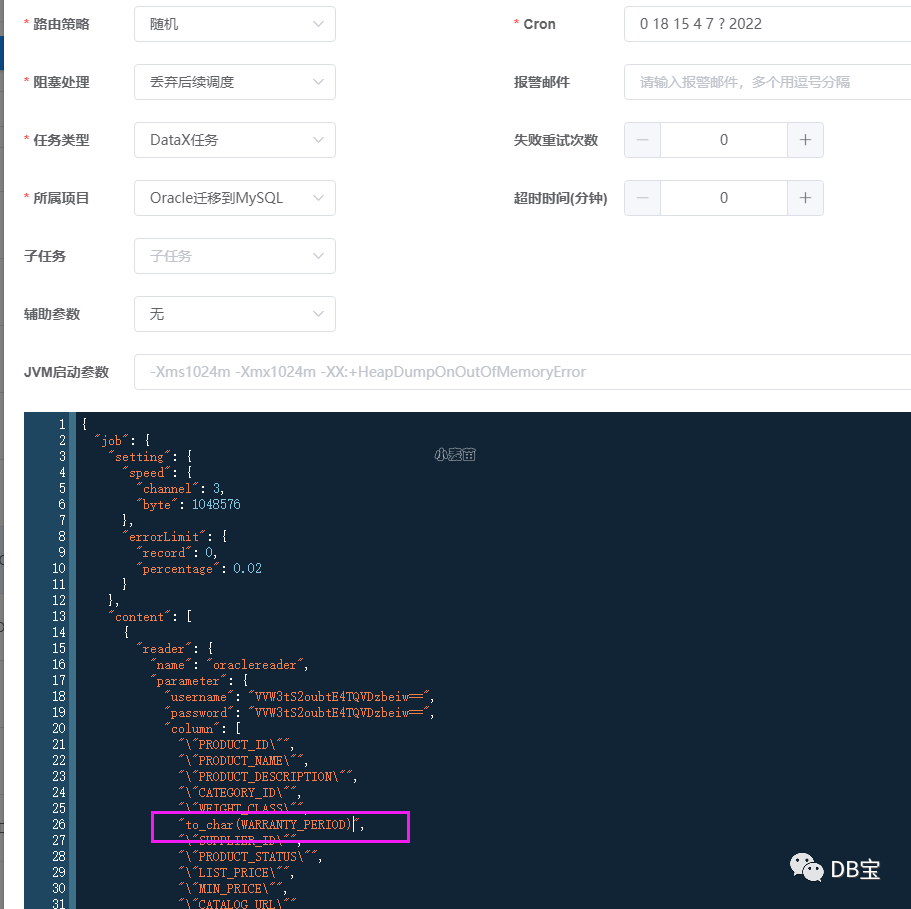

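Fix: in the reader's column list, replace the "WARRANTY_PERIOD" entry with "to_char(WARRANTY_PERIOD)", so that Oracle converts the interval to a string before DataX reads it.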
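A minimal sketch of that change, showing only the reader's column list of the DataX job JSON. The column names are taken from the SQL in the error log above; the escaped-quote style for the untouched columns is an assumption, and every other key of the job (connection, username, password, writer, setting) is assumed unchanged and omitted here:

```json
{
  "reader": {
    "name": "oraclereader",
    "parameter": {
      "column": [
        "\"PRODUCT_ID\"",
        "\"PRODUCT_NAME\"",
        "\"PRODUCT_DESCRIPTION\"",
        "\"CATEGORY_ID\"",
        "\"WEIGHT_CLASS\"",
        "to_char(WARRANTY_PERIOD)",
        "\"SUPPLIER_ID\"",
        "\"PRODUCT_STATUS\"",
        "\"LIST_PRICE\"",
        "\"MIN_PRICE\"",
        "\"CATALOG_URL\""
      ]
    }
  }
}
```

The writer's column list can stay as it is: the MySQL column is longtext, so it should accept the string that to_char produces.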

Then re-sync the table.

Fixing the ORDERS table error

Error log:

2022-07-04 15:18:00 [JobThread.run-130] <br>----------- datax-web job execute start -----------<br>----------- Param:

2022-07-04 15:18:00 [BuildCommand.buildDataXParam-100] ------------------Command parameters:

2022-07-04 15:18:00 [ExecutorJobHandler.execute-57] ------------------DataX process id: 4546

2022-07-04 15:18:00 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:00 [AnalysisStatistics.analysisStatisticsLog-53] DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

2022-07-04 15:18:00 [AnalysisStatistics.analysisStatisticsLog-53] Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

2022-07-04 15:18:00 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:00 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:01 [ProcessCallbackThread.callbackLog-186] <br>----------- datax-web job callback finish.

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.008 [main] INFO MessageSource - JVM TimeZone: GMT+08:00, Locale: zh_CN

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.059 [main] INFO MessageSource - use Locale: zh_CN timeZone: sun.util.calendar.ZoneInfo[id="GMT+08:00",offset=28800000,dstSavings=0,useDaylight=false,transitions=0,lastRule=null]

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.284 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.294 [main] INFO Engine - the machine info =>

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] osInfo: Red Hat, Inc. 1.8 25.332-b09

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] jvmInfo: Linux amd64 3.10.0-1127.10.1.el7.x86_64

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] cpu num: 16

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] totalPhysicalMemory: -0.00G

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] freePhysicalMemory: -0.00G

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] maxFileDescriptorCount: -1

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] currentOpenFileDescriptorCount: -1

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] GC Names [PS MarkSweep, PS Scavenge]

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] MEMORY_NAME | allocation_size | init_size

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] PS Eden Space | 256.00MB | 256.00MB

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] Code Cache | 240.00MB | 2.44MB

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] Compressed Class Space | 1,024.00MB | 0.00MB

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] PS Survivor Space | 42.50MB | 42.50MB

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] PS Old Gen | 683.00MB | 683.00MB

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] Metaspace | -0.00MB | 0.00MB

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.332 [main] INFO Engine -

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] {

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "content":[

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] {

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "reader":{

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "parameter":{

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "password":"***",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "column":[

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"ORDER_ID\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"ORDER_DATE\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"ORDER_MODE\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"CUSTOMER_ID\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"ORDER_STATUS\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"ORDER_TOTAL\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"SALES_REP_ID\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"PROMOTION_ID\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"WAREHOUSE_ID\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"DELIVERY_TYPE\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"COST_OF_DELIVERY\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"WAIT_TILL_ALL_AVAILABLE\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"DELIVERY_ADDRESS_ID\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"CUSTOMER_CLASS\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"CARD_ID\"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "\"INVOICE_ADDRESS_ID\""

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ],

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "connection":[

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] {

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "jdbcUrl":[

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "jdbc:oracle:thin:@//172.72.7.34:1521/lhrsdb"

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ],

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "table":[

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "ORDERS"

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ]

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ],

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "splitPk":"",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "username":"lhr"

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] },

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "name":"oraclereader"

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] },

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "writer":{

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "parameter":{

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "password":"***",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "column":[

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`ORDER_ID`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`ORDER_DATE`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`ORDER_MODE`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`CUSTOMER_ID`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`ORDER_STATUS`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`ORDER_TOTAL`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`SALES_REP_ID`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`PROMOTION_ID`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`WAREHOUSE_ID`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`DELIVERY_TYPE`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`COST_OF_DELIVERY`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`WAIT_TILL_ALL_AVAILABLE`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`DELIVERY_ADDRESS_ID`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`CUSTOMER_CLASS`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`CARD_ID`",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "`INVOICE_ADDRESS_ID`"

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ],

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "connection":[

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] {

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "jdbcUrl":"jdbc:mysql://172.72.7.35:3306/lhrdb",

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "table":[

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "ORDERS"

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ]

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ],

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "username":"root"

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] },

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "name":"mysqlwriter"

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] ],

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "setting":{

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "errorLimit":{

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "record":0,

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "percentage":0.02

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] },

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "speed":{

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "byte":1048576,

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] "channel":3

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] }

2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53]
2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.402 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null
2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.405 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0
2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.406 [main] INFO JobContainer - DataX jobContainer starts job.
2022-07-04 15:18:02 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:02.408 [main] INFO JobContainer - Set jobId = 0
2022-07-04 15:18:03 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:03.316 [job-0] INFO OriginalConfPretreatmentUtil - Available jdbcUrl:jdbc:oracle:thin:@//172.72.7.34:1521/lhrsdb.
2022-07-04 15:18:03 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:03.568 [job-0] INFO OriginalConfPretreatmentUtil - table:[ORDERS] has columns:[ORDER_ID,ORDER_DATE,ORDER_MODE,CUSTOMER_ID,ORDER_STATUS,ORDER_TOTAL,SALES_REP_ID,PROMOTION_ID,WAREHOUSE_ID,DELIVERY_TYPE,COST_OF_DELIVERY,WAIT_TILL_ALL_AVAILABLE,DELIVERY_ADDRESS_ID,CUSTOMER_CLASS,CARD_ID,INVOICE_ADDRESS_ID].
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.558 [job-0] INFO OriginalConfPretreatmentUtil - table:[ORDERS] all columns:[
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] ORDER_ID,ORDER_DATE,ORDER_MODE,CUSTOMER_ID,ORDER_STATUS,ORDER_TOTAL,SALES_REP_ID,PROMOTION_ID,WAREHOUSE_ID,DELIVERY_TYPE,COST_OF_DELIVERY,WAIT_TILL_ALL_AVAILABLE,DELIVERY_ADDRESS_ID,CUSTOMER_CLASS,CARD_ID,INVOICE_ADDRESS_ID
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] ].
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.651 [job-0] INFO OriginalConfPretreatmentUtil - Write data [
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] INSERT INTO %s (`ORDER_ID`,`ORDER_DATE`,`ORDER_MODE`,`CUSTOMER_ID`,`ORDER_STATUS`,`ORDER_TOTAL`,`SALES_REP_ID`,`PROMOTION_ID`,`WAREHOUSE_ID`,`DELIVERY_TYPE`,`COST_OF_DELIVERY`,`WAIT_TILL_ALL_AVAILABLE`,`DELIVERY_ADDRESS_ID`,`CUSTOMER_CLASS`,`CARD_ID`,`INVOICE_ADDRESS_ID`) VALUES(?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?)
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] ], which jdbcUrl like:[jdbc:mysql://172.72.7.35:3306/lhrdb?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&rewriteBatchedStatements=true&tinyInt1isBit=false]
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.651 [job-0] INFO JobContainer - jobContainer starts to do prepare ...
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.652 [job-0] INFO JobContainer - DataX Reader.Job [oraclereader] do prepare work .
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.652 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do prepare work .
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.653 [job-0] INFO JobContainer - jobContainer starts to do split ...
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.653 [job-0] INFO JobContainer - Job set Max-Byte-Speed to 1048576 bytes.
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.676 [job-0] INFO JobContainer - DataX Reader.Job [oraclereader] splits to [1] tasks.
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.677 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] splits to [1] tasks.
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.718 [job-0] INFO JobContainer - jobContainer starts to do schedule ...
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.746 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.755 [job-0] INFO JobContainer - Running by standalone Mode.
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.778 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.866 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to 1048576.
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.866 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.906 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:05.912 [0-0-0-reader] INFO CommonRdbmsReader$Task - Begin to read record by Sql: [select "ORDER_ID","ORDER_DATE","ORDER_MODE","CUSTOMER_ID","ORDER_STATUS","ORDER_TOTAL","SALES_REP_ID","PROMOTION_ID","WAREHOUSE_ID","DELIVERY_TYPE","COST_OF_DELIVERY","WAIT_TILL_ALL_AVAILABLE","DELIVERY_ADDRESS_ID","CUSTOMER_CLASS","CARD_ID","INVOICE_ADDRESS_ID" from ORDERS
2022-07-04 15:18:05 [AnalysisStatistics.analysisStatisticsLog-53] ] jdbcUrl:[jdbc:oracle:thin:@//172.72.7.34:1521/lhrsdb].
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.166 [0-0-0-reader] ERROR StdoutPluginCollector -
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] com.alibaba.datax.common.exception.DataXException: Code:[DBUtilErrorCode-12], Description:[不支持的数据库类型. 请注意查看 DataX 已经支持的数据库类型以及数据库版本.]. - 您的配置文件中的列配置信息有误. 因为DataX 不支持数据库读取这种字段类型. 字段名:[ORDER_DATE], 字段名称:[-102], 字段Java类型:[oracle.sql.TIMESTAMPLTZ]. 请尝试使用数据库函数将其转换datax支持的类型 或者不同步该字段 .
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:30) ~[datax-common-0.0.1-SNAPSHOT.jar:na]
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Task.buildRecord(CommonRdbmsReader.java:329) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Task.transportOneRecord(CommonRdbmsReader.java:237) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Task.startRead(CommonRdbmsReader.java:209) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.reader.oraclereader.OracleReader$Task.startRead(OracleReader.java:110) [oraclereader-0.0.1-SNAPSHOT.jar:na]
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.taskgroup.runner.ReaderRunner.run(ReaderRunner.java:57) [datax-core-0.0.1-SNAPSHOT.jar:na]
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at java.lang.Thread.run(Thread.java:750) [na:1.8.0_332]
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.201 [0-0-0-reader] ERROR StdoutPluginCollector - 脏数据:
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] {"exception":"Code:[DBUtilErrorCode-12], Description:[不支持的数据库类型. 请注意查看 DataX 已经支持的数据库类型以及数据库版本.]. - 您的配置文件中的列配置信息有误. 因为DataX 不支持数据库读取这种字段类型. 字段名:[ORDER_DATE], 字段名称:[-102], 字段Java类型:[oracle.sql.TIMESTAMPLTZ]. 请尝试使用数据库函数将其转换datax支持的类型 或者不同步该字段 .","record":[{"byteSize":3,"index":0,"rawData":"152","type":"DOUBLE"}],"type":"reader"}
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] 2022-07-04 15:18:06.228 [0-0-0-reader] ERROR ReaderRunner - Reader runner Received Exceptions:
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] com.alibaba.datax.common.exception.DataXException: Code:[DBUtilErrorCode-07], Description:[读取数据库数据失败. 请检查您的配置的 column/table/where/querySql或者向 DBA 寻求帮助.]. - 执行的SQL为: select "ORDER_ID","ORDER_DATE","ORDER_MODE","CUSTOMER_ID","ORDER_STATUS","ORDER_TOTAL","SALES_REP_ID","PROMOTION_ID","WAREHOUSE_ID","DELIVERY_TYPE","COST_OF_DELIVERY","WAIT_TILL_ALL_AVAILABLE","DELIVERY_ADDRESS_ID","CUSTOMER_CLASS","CARD_ID","INVOICE_ADDRESS_ID" from ORDERS 具体错误信息为:com.alibaba.datax.common.exception.DataXException: Code:[DBUtilErrorCode-12], Description:[不支持的数据库类型. 请注意查看 DataX 已经支持的数据库类型以及数据库版本.]. - 您的配置文件中的列配置信息有误. 因为DataX 不支持数据库读取这种字段类型. 字段名:[ORDER_DATE], 字段名称:[-102], 字段Java类型:[oracle.sql.TIMESTAMPLTZ]. 请尝试使用数据库函数将其转换datax支持的类型 或者不同步该字段 .
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:30) ~[datax-common-0.0.1-SNAPSHOT.jar:na]
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.util.RdbmsException.asQueryException(RdbmsException.java:93) ~[plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Task.startRead(CommonRdbmsReader.java:220) ~[plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.reader.oraclereader.OracleReader$Task.startRead(OracleReader.java:110) ~[oraclereader-0.0.1-SNAPSHOT.jar:na]
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.taskgroup.runner.ReaderRunner.run(ReaderRunner.java:57) ~[datax-core-0.0.1-SNAPSHOT.jar:na]
2022-07-04 15:18:06 [AnalysisStatistics.analysisStatisticsLog-53] at java.lang.Thread.run(Thread.java:750) [na:1.8.0_332]