11. Install CoreDNS

[root@k8s-master01 ~]# cat coredns.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
  - apiGroups:
    - ""
    resources:
    - endpoints
    - services
    - pods
    - namespaces
    verbs:
    - list
    - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                  - key: k8s-app
                    operator: In
                    values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: registry.aliyuncs.com/google_containers/coredns:1.7.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.96.0.10
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP

# If you changed the Kubernetes Service CIDR, change the CoreDNS Service IP to the tenth IP of that CIDR (replace the second 10.96.0.10 below with it)

[root@k8s-master01 ~]# sed -i "s#10.96.0.10#10.96.0.10#g" coredns.yaml
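The "tenth IP of the Service CIDR" rule can be computed rather than worked out by hand. The following is a minimal sketch in plain bash; the function name `nth_ip` is hypothetical and not part of the original walkthrough, and it assumes an IPv4 CIDR.

```bash
#!/bin/bash
# nth_ip CIDR N: print the N-th IPv4 address counted from the network address.
# Hypothetical helper for illustration; IPv4 only.
nth_ip() {
  local cidr=$1 n=$2
  local ip=${cidr%/*} prefix=${cidr#*/}
  local a b c d
  IFS=. read -r a b c d <<< "$ip"
  # Pack the dotted quad into one integer.
  local num=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # Build the netmask from the prefix length and clear the host bits.
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local host=$(( (num & mask) + n ))
  echo "$(( (host >> 24) & 255 )).$(( (host >> 16) & 255 )).$(( (host >> 8) & 255 )).$(( host & 255 ))"
}
```

For the default CIDR used here, `nth_ip 10.96.0.0/12 10` prints `10.96.0.10`. With a different Service CIDR, the result could be passed as the replacement value in the `sed` command above.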

Install CoreDNS

[root@k8s-master01 ~]# grep "image:" coredns.yaml

image: registry.aliyuncs.com/google_containers/coredns:1.7.0

[root@k8s-master01 ~]# cat download_coredns_images.sh

#!/bin/bash
#
#**********************************************************************************************
#Author:        Raymond
#QQ:            88563128
#Date:          2022-01-11
#FileName:      download_coredns_images.sh
#URL:           raymond.blog.csdn.net
#Description:   The test script
#Copyright (C): 2022 All rights reserved
#*********************************************************************************************
COLOR="echo -e \\033[01;31m"
END='\033[0m'

# Image names (last path component) referenced in coredns.yaml
images=$(awk -F "/" '/image:/{print $NF}' coredns.yaml)
HARBOR_DOMAIN=harbor.raymonds.cc

images_download(){
    ${COLOR}"Start downloading CoreDNS images"${END}
    for i in ${images};do
        # Pull from the Aliyun mirror, retag for Harbor, remove the original tag, then push
        docker pull registry.aliyuncs.com/google_containers/$i
        docker tag registry.aliyuncs.com/google_containers/$i ${HARBOR_DOMAIN}/google_containers/$i
        docker rmi registry.aliyuncs.com/google_containers/$i
        docker push ${HARBOR_DOMAIN}/google_containers/$i
    done
    ${COLOR}"CoreDNS images downloaded"${END}
}

images_download

[root@k8s-master01 ~]# bash download_coredns_images.sh

[root@k8s-master01 ~]# docker images |grep coredns

harbor.raymonds.cc/google_containers/coredns 1.7.0 bfe3a36ebd25 19 months ago 45.2MB

[root@k8s-master01 ~]# sed -ri 's@(.*image:) registry.aliyuncs.com(/.*)@\1 harbor.raymonds.cc\2@g' coredns.yaml

[root@k8s-master01 ~]# grep "image:" coredns.yaml

image: harbor.raymonds.cc/google_containers/coredns:1.7.0
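The registry rewrite above can be previewed on standard input before touching the manifest with `sed -i`. This is a small sketch; the function name `retarget_images` is hypothetical, and it assumes a `sed` that supports `-E` (GNU sed accepts both `-r` and `-E`).

```bash
#!/bin/bash
# retarget_images REGISTRY: read manifest text on stdin and point every
# "image: registry.aliyuncs.com/..." line at REGISTRY instead.
# Hypothetical helper for illustration; other lines pass through unchanged.
retarget_images() {
  local registry=$1
  sed -E "s@(.*image:) registry\.aliyuncs\.com(/.*)@\1 ${registry}\2@"
}
```

For example, `retarget_images harbor.raymonds.cc < coredns.yaml | grep "image:"` shows the rewritten image lines without modifying the file.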

[root@k8s-master01 ~]# kubectl create -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

# Check the status

[root@k8s-master01 ~]# kubectl get po -n kube-system -l k8s-app=kube-dns

NAME READY STATUS RESTARTS AGE

coredns-867d46bfc6-gmbcc 1/1 Running 0 41s

On Ubuntu, the following problem occurs:

root@k8s-master01:~# kubectl get pod -A -o wide|grep coredns

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-847c895554-9jqq5 0/1 CrashLoopBackOff 1 8s 192.171.30.65 k8s-master02.example.local <none> <none>

# Ubuntu runs a local DNS cache, which prevents CoreDNS from resolving names correctly

# For details, see the official documentation: https://coredns.io/plugins/loop/#troubleshooting

root@k8s-master01:~# kubectl edit -n kube-system cm coredns

...

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop # delete the loop plugin line entirely to avoid the internal forwarding loop
        reload
        loadbalance
    }

root@k8s-master01:~# kubectl get pod -A -o wide |grep coredns

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-847c895554-r9tsd 0/1 CrashLoopBackOff 4 3m4s 192.170.21.195 k8s-node03.example.local <none> <none>

root@k8s-master01:~# kubectl delete pod coredns-847c895554-r9tsd -n kube-system

pod "coredns-847c895554-r9tsd" deleted

root@k8s-master01:~# kubectl get pod -A -o wide |grep coredns

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-847c895554-cqwl5 1/1 Running 0 13s 192.167.195.130 k8s-node02.example.local <none> <none>

# CoreDNS is now running normally
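The CoreDNS loop troubleshooting page linked above attributes this crash to `forward` sending queries to a loopback resolver (for example `127.0.0.53`) listed in the node's `resolv.conf`. A quick way to check a node for that condition is sketched below; the function name `has_loopback_nameserver` is hypothetical.

```bash
#!/bin/bash
# has_loopback_nameserver [FILE]: succeed (exit 0) if FILE lists a loopback
# nameserver such as 127.0.0.1, 127.0.0.53 or ::1. Defaults to /etc/resolv.conf.
# Hypothetical helper for illustration.
has_loopback_nameserver() {
  local file=${1:-/etc/resolv.conf}
  grep -Eq '^[[:space:]]*nameserver[[:space:]]+(127\.|::1)' "$file"
}
```

Running `has_loopback_nameserver && echo "loopback resolver found"` on each node indicates whether the loop can occur there. The same troubleshooting page also describes pointing kubelet at the real upstream `resolv.conf` as an alternative to removing the `loop` plugin.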

12. Install Metrics Server

https://github.com/kubernetes-sigs/metrics-server

In recent versions of Kubernetes, system resource metrics are collected by Metrics Server, which reports CPU and memory usage for nodes and Pods.

Install Metrics Server

[root@k8s-master01 ~]# cat components.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --metric-resolution=30s
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        image: registry.aliyuncs.com/google_containers/metrics-server:v0.4.1
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          periodSeconds: 10
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

Modify the following sections:

[root@k8s-master01 ~]# vim components.yaml

...
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --metric-resolution=30s
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        # Add the following lines
        - --kubelet-insecure-tls
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem # note: in a binary installation the certificate file is front-proxy-ca.pem
        - --requestheader-username-headers=X-Remote-User
        - --requestheader-group-headers=X-Remote-Group
        - --requestheader-extra-headers-prefix=X-Remote-Extra-
...
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
        # Add the following lines
        - name: ca-ssl
          mountPath: /etc/kubernetes/pki
...
      volumes:
      - emptyDir: {}
        name: tmp-dir
      # Add the following lines
      - name: ca-ssl
        hostPath:
          path: /etc/kubernetes/pki
...

Download the image and change the image address

[root@k8s-master01 ~]# grep "image:" components.yaml

image: registry.aliyuncs.com/google_containers/metrics-server:v0.4.1

[root@k8s-master01 ~]# cat download_metrics_images.sh

#!/bin/bash
#
#**********************************************************************************************
#Author:        Raymond
#QQ:            88563128
#Date:          2022-01-11
#FileName:      download_metrics_images.sh
#URL:           raymond.blog.csdn.net
#Description:   The test script
#Copyright (C): 2022 All rights reserved
#*********************************************************************************************
COLOR="echo -e \\033[01;31m"
END='\033[0m'

# Image names (last path component) referenced in components.yaml
images=$(awk -F "/" '/image:/{print $NF}' components.yaml)
HARBOR_DOMAIN=harbor.raymonds.cc

images_download(){
    ${COLOR}"Start downloading Metrics images"${END}
    for i in ${images};do
        # Pull from the Aliyun mirror, retag for Harbor, remove the original tag, then push
        docker pull registry.aliyuncs.com/google_containers/$i
        docker tag registry.aliyuncs.com/google_containers/$i ${HARBOR_DOMAIN}/google_containers/$i
        docker rmi registry.aliyuncs.com/google_containers/$i
        docker push ${HARBOR_DOMAIN}/google_containers/$i
    done
    ${COLOR}"Metrics images downloaded"${END}
}

images_download

[root@k8s-master01 ~]# bash download_metrics_images.sh

[root@k8s-master01 ~]# docker images |grep metrics

harbor.raymonds.cc/google_containers/metrics-server v0.4.1 9759a41ccdf0 14 months ago 60.5MB

[root@k8s-master01 ~]# sed -ri 's@(.*image:) registry.aliyuncs.com(/.*)@\1 harbor.raymonds.cc\2@g' components.yaml

[root@k8s-master01 ~]# grep "image:" components.yaml

image: harbor.raymonds.cc/google_containers/metrics-server:v0.4.1

Install Metrics Server

[root@k8s-master01 ~]# kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

Check the status

[root@k8s-master01 ~]# kubectl get pod -n kube-system |grep metrics

metrics-server-5b7c76b46c-bv9g5 1/1 Running 0 24s

[root@k8s-master01 ~]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master01.example.local 204m 5% 1203Mi 31%

k8s-master02.example.local 133m 3% 880Mi 23%

k8s-master03.example.local 144m 3% 1038Mi 27%

k8s-node01.example.local 115m 2% 571Mi 9%

k8s-node02.example.local 94m 2% 579Mi 9%

k8s-node03.example.local 83m 2% 577Mi 9%
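Once `kubectl top nodes` returns data, its tabular output can be filtered with `awk`. The sketch below lists nodes whose MEMORY% column exceeds a threshold; the function name `nodes_over_mem` is hypothetical, and it assumes the five-column layout shown above.

```bash
#!/bin/bash
# nodes_over_mem THRESHOLD: read `kubectl top nodes` output on stdin and print
# the names of nodes whose MEMORY% (5th column) is above THRESHOLD.
# Hypothetical helper for illustration; the header row is skipped.
nodes_over_mem() {
  local threshold=$1
  awk -v t="$threshold" 'NR > 1 { pct = $5; sub(/%/, "", pct); if (pct + 0 > t) print $1 }'
}
```

For example, `kubectl top nodes | nodes_over_mem 25` would print the three master nodes for the output shown above.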

13. Install Dashboard

13.1 Deploy Dashboard

Dashboard displays the various resources in the cluster. It can also be used to view Pod logs in real time and to run commands inside containers.

[root@k8s-master01 ~]# cat recommended.yaml

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
    # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
    # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.4
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.4
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
          - mountPath: /tmp
            name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

[root@k8s-master01 ~]# vim recommended.yaml

...
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort # add this line
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30005 # add this line
  selector:
    k8s-app: kubernetes-dashboard
...

[root@k8s-master01 ~]# grep "image:" recommended.yaml

image: kubernetesui/dashboard:v2.0.4

image: kubernetesui/metrics-scraper:v1.0.4
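The `nodePort` value chosen above (30005) has to fall inside the API server's NodePort range, which is 30000-32767 unless `--service-node-port-range` was changed on kube-apiserver. A small sanity check, assuming the default range, might look like this; the function name `valid_node_port` is hypothetical.

```bash
#!/bin/bash
# valid_node_port PORT: succeed if PORT is an integer within the default
# Kubernetes NodePort range (30000-32767).
# Hypothetical helper; adjust the bounds if the cluster uses a custom range.
valid_node_port() {
  local port=$1
  [[ $port =~ ^[0-9]+$ ]] && (( port >= 30000 && port <= 32767 ))
}
```

`valid_node_port 30005 && echo ok` prints `ok`, while a value such as 443 is rejected.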

Download the images and push them to Harbor

[root@k8s-master01 ~]# cat download_dashboard_images.sh

#!/bin/bash
#
#**********************************************************************************************
#Author:        Raymond
#QQ:            88563128
#Date:          2022-01-11
#FileName:      download_dashboard_images.sh
#URL:           raymond.blog.csdn.net
#Description:   The test script
#Copyright (C): 2022 All rights reserved
#*********************************************************************************************
COLOR="echo -e \\033[01;31m"
END='\033[0m'

# Image names (last path component) referenced in recommended.yaml
images=$(awk -F "/" '/image:/{print $NF}' recommended.yaml)
HARBOR_DOMAIN=harbor.raymonds.cc

images_download(){
    ${COLOR}"Start downloading Dashboard images"${END}
    for i in ${images};do
        # Pull from the Aliyun mirror, retag for Harbor, remove the original tag, then push
        docker pull registry.aliyuncs.com/google_containers/$i
        docker tag registry.aliyuncs.com/google_containers/$i ${HARBOR_DOMAIN}/google_containers/$i
        docker rmi registry.aliyuncs.com/google_containers/$i
        docker push ${HARBOR_DOMAIN}/google_containers/$i
    done
    ${COLOR}"Dashboard images downloaded"${END}
}

images_download

[root@k8s-master01 ~]# bash download_dashboard_images.sh

[root@k8s-master01 ~]# sed -ri 's@(.*image:) kubernetesui(/.*)@\1 harbor.raymonds.cc/google_containers\2@g' recommended.yaml

[root@k8s-master01 ~]# grep "image:" recommended.yaml

image: harbor.raymonds.cc/google_containers/dashboard:v2.0.4

image: harbor.raymonds.cc/google_containers/metrics-scraper:v1.0.4

[root@k8s-master01 ~]# kubectl create -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

Create the administrator user with admin.yaml

[root@k8s-master01 ~]# vim admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

[root@k8s-master01 ~]# kubectl apply -f admin.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

13.2 登录dashboard

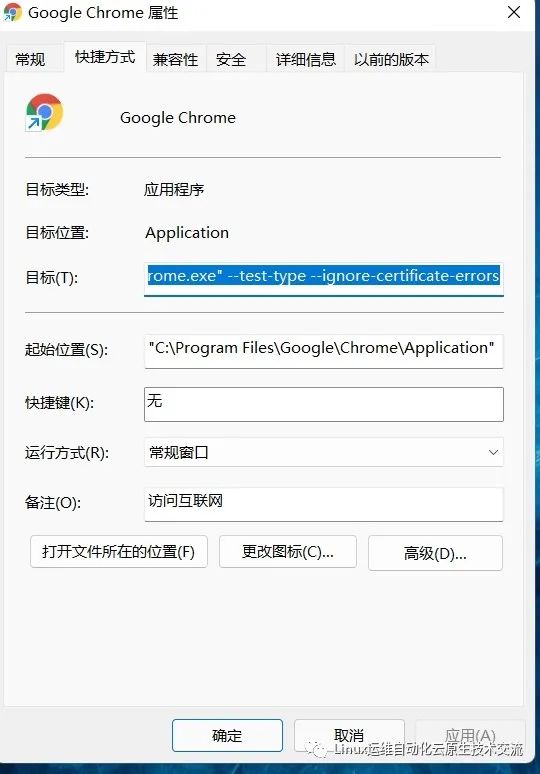

在谷歌浏览器(Chrome)启动文件中加入启动参数,用于解决无法访问Dashboard的问题,参考图1-1:

--test-type --ignore-certificate-errors复制

图1-1 谷歌浏览器 Chrome的配置

[root@k8s-master01 ~]# kubectl get svc kubernetes-dashboard -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.106.189.113 <none> 443:30005/TCP 18s

Access the Dashboard at https://172.31.3.101:30005; see Figure 1-2.

Figure 1-2 Dashboard login options

13.2.1 Token login

View the token value:

[root@k8s-master01 ~]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-9dmsd

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 7d0d71d5-8454-40c1-877f-63e56e4fceda

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1411 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InhpRVlPQzVJRER0TUR2OURobHI4Wnh0Z192eVo1SndMOGdfaEprNFg2RmMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTlkbXNkIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI3ZDBkNzFkNS04NDU0LTQwYzEtODc3Zi02M2U1NmU0ZmNlZGEiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.AfUDqSA8YtMo7UJdQ_NMMU5BylS_fbf7jb_vTuYKsqVlxVjWe_1AgnaicyG0pO4UnL6_vkzqY3MigTqwlyuYKXrDb58F4MwjzHjMKMLHlesjo9WkzMptdq83fIU_8FQ731TROGaZsXGuBu1zOppiWqag-43d0Lqv2BBVl70-6-F5BAJ_XM5NSbFz7slxIUjbWJ4szauNCnUhy8z89bH4JIwVCD_lqvsC0rvCM8kgEaHHv9qIYL1uFfK8Y5bFy7BMXWHhJo5VwRvQ6-8Nz4bXgfDKWeBgovrnkR71WrgGtK0LZHPYZZo-GrxkVn4ixb0AOdgYxruXgkjs1otwoNvoig复制

Paste the token value into the Token field and click Sign in to access the Dashboard; see Figure 1-3:
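The service account token is a JWT, so its middle segment can be decoded to confirm which account it belongs to before pasting it into the Dashboard. The sketch below is one way to do that; the function name `jwt_payload` is hypothetical, and it assumes GNU coreutils `base64` (the `-d` flag).

```bash
#!/bin/bash
# jwt_payload TOKEN: print the decoded payload (second dot-separated segment)
# of a JWT. The payload is base64url without padding, so it is mapped back to
# standard base64 and padded first. The signature is NOT verified.
# Hypothetical helper for illustration.
jwt_payload() {
  local payload
  payload=$(cut -d. -f2 <<< "$1" | tr '_-' '/+')
  # Restore the '=' padding that base64url encoding drops.
  while (( ${#payload} % 4 )); do payload+="="; done
  base64 -d <<< "$payload"
}
```

For a token issued to the `admin-user` account created above, the decoded payload should contain a `sub` claim of the form `system:serviceaccount:kube-system:admin-user`.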

13.2.2 Log in to the Dashboard with a kubeconfig file

[root@k8s-master01 ~]# cp /etc/kubernetes/admin.kubeconfig kubeconfig

[root@k8s-master01 ~]# vim kubeconfig

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQ1RENDQXN5Z0F3SUJBZ0lVTExMRWFmWUZtT256NFFZOHMzcWNOTTFFWUFFd0RRWUpLb1pJaHZjTkFRRUwKQlFBd2R6RUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEV6QVJCZ05WQkFvVENrdDFZbVZ5Ym1WMFpYTXhHakFZQmdOVkJBc1RFVXQxWW1WeWJtVjBaWE10CmJXRnVkV0ZzTVJNd0VRWURWUVFERXdwcmRXSmxjbTVsZEdWek1DQVhEVEl5TURFeE5qRXpORE13TUZvWUR6SXgKTWpFeE1qSXpNVE0wTXpBd1dqQjNNUXN3Q1FZRFZRUUdFd0pEVGpFUU1BNEdBMVVFQ0JNSFFtVnBhbWx1WnpFUQpNQTRHQTFVRUJ4TUhRbVZwYW1sdVp6RVRNQkVHQTFVRUNoTUtTM1ZpWlhKdVpYUmxjekVhTUJnR0ExVUVDeE1SClMzVmlaWEp1WlhSbGN5MXRZVzUxWVd3eEV6QVJCZ05WQkFNVENtdDFZbVZ5Ym1WMFpYTXdnZ0VpTUEwR0NTcUcKU0liM0RRRUJBUVVBQTRJQkR3QXdnZ0VLQW9JQkFRQzV1S3F2S1RsK3V3SHg3cGZLSGtHd0o0cFoxOXVkZ0xpZApjY0xBemFjSldoNTlIbnVJSzQ4SWQyVXQzenNNbmpWUktkMzR0NUNFQldkMmNQeVFveGl3ck5EdkNySnJBNmdoCkdTY0VMa0dpSldnYzNjN0lKSXlhM3d3akxITVBCbHp3RC80aitqWFFwTTltWElWeE5ndVk0dW1NeStXYzNBTGwKdE8yMEllUzVzTDlOWi9yc0F4MU8wOWtlc3ZYYXl4cWVXTXRJUStKQ1lqUzNETk95R1M1WERwTkRSaExRdUxJUApRUktHVGVvVm1vL0FvNHlIVFcyL0JJSXFJN1p6OGdMRUNWZlFPV3E0Q2JTMWRTbkJJYUZVc3RKRjNoMEd3UWRuCnc4NHBmV25DRlEzMkhFN0N2SVdMckcweFcyTmc3djhyWGIrdGZHQ2FSVEtLREVZQjNzU0RBZ01CQUFHalpqQmsKTUE0R0ExVWREd0VCL3dRRUF3SUJCakFTQmdOVkhSTUJBZjhFQ0RBR0FRSC9BZ0VDTUIwR0ExVWREZ1FXQkJRbApsNW1MUVlLdWw5SGJRNFplc1lKMGc5TDIrekFmQmdOVkhTTUVHREFXZ0JRbGw1bUxRWUt1bDlIYlE0WmVzWUowCmc5TDIrekFOQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBVHgyVUZSY0xFNzl1YXVpSTRGajBoNldSVndkTHhxMWsKZk90QWRuL0F5bmhHcjVoV09UVW5OS05OMG14NFM1WHZQTmptdVNHRGFCcjJtb3NjSU9pYmVDbHdUNWFSRG56YwpzS3loc2ZhNi9CcTVYNHhuMjdld0dvWjNuaXNSdExOQllSWHNjWTdHZ2U4c1V4eXlPdGdjNTRVbWRWYnJPN1VMCkRJV3VlYVdtT2FxOUxvNzlRWTdGQlFteEZab1lFeDY4ODMxNVZMNEY2bC83cVVKZ1FhOXBVV2Qwb0RDeExEaEwKUFhnZkEyakNBZmVpQVl6RFh3T1BwaURqN3lYSmZQVGlCSXFEQS9lYmYzOXFiTXhGMmtGdTdkOXNNaXNIYXZabgpsYUJqbHlCYTRBQ0d1eE9xTzlLaFc1cG04ZkE5NlBDRmh0eERPUmtURTVmNkcrZHBqWmpUNnc9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://172.31.3.188:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kubernetes-admin

name: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQvRENDQXVTZ0F3SUJBZ0lVTTJNRVRqTnFFRFVlVzkxQWVacUxJVE5rNTlRd0RRWUpLb1pJaHZjTkFRRUwKQlFBd2R6RUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEV6QVJCZ05WQkFvVENrdDFZbVZ5Ym1WMFpYTXhHakFZQmdOVkJBc1RFVXQxWW1WeWJtVjBaWE10CmJXRnVkV0ZzTVJNd0VRWURWUVFERXdwcmRXSmxjbTVsZEdWek1DQVhEVEl5TURFeE5qRXpOVEF3TUZvWUR6SXgKTWpFeE1qSXpNVE0xTURBd1dqQjJNUXN3Q1FZRFZRUUdFd0pEVGpFUU1BNEdBMVVFQ0JNSFFtVnBhbWx1WnpFUQpNQTRHQTFVRUJ4TUhRbVZwYW1sdVp6RVhNQlVHQTFVRUNoTU9jM2x6ZEdWdE9tMWhjM1JsY25NeEdqQVlCZ05WCkJBc1RFVXQxWW1WeWJtVjBaWE10YldGdWRXRnNNUTR3REFZRFZRUURFd1ZoWkcxcGJqQ0NBU0l3RFFZSktvWkkKaHZjTkFRRUJCUUFEZ2dFUEFEQ0NBUW9DZ2dFQkFNd3M0U0pjOXlxMGI5OTRoUUc4Sis3a2wwenQ4dmxVY3k5RwpZMnhGbWFqU3dsYmFEOG13YmtqU05BckdrSjF4TC9Pd1FkTWxYUTJMT3dnSERqTERRUzlLa2QwZ2FWY2M3RjdvCm8xZGE1TEJWQW5uSzVzWUFwSjJ5ZHpZcFFqc3IwZkFEdjNkS3d2OWIwaXZkZCt1cGQ0cWU2cFVmK0IxalozV1IKTVpSSnFmN2hCWTdoR3BUU09ZR2dlTGFDUXFNTDBhMzJmVVZHaHJ3WmFveWVQSzBab2dTSi9HVHRmQTltWnFEaQorOW4xa1pwQlBhN2xCd3h1eng4T1hweUpwWmZYSEh0Zis2MTNoVDV6RnkxZUpQQnZHQkhnMXhVZXNmT0xDazZ5Cm9penFOSjYxaVk1Y0plTWU5U2NvR2VXQ0xPNGV1eU14MVRjMzVvUUlsMzlqUEhkVzdkOENBd0VBQWFOL01IMHcKRGdZRFZSMFBBUUgvQkFRREFnV2dNQjBHQTFVZEpRUVdNQlFHQ0NzR0FRVUZCd01CQmdnckJnRUZCUWNEQWpBTQpCZ05WSFJNQkFmOEVBakFBTUIwR0ExVWREZ1FXQkJRSWdua25wWW5xRzFKNmpUY3ROZHhnYXRtZCt6QWZCZ05WCkhTTUVHREFXZ0JRbGw1bUxRWUt1bDlIYlE0WmVzWUowZzlMMit6QU5CZ2txaGtpRzl3MEJBUXNGQUFPQ0FRRUEKUUJXVCs0TmZNT3ZDRyt6ZTBzZjZTRWNSNGR5c2d6N2wrRUJHWGZRc015WWZ2V1IzdlVqSXFDbVhkdGdRVGVoQQpCenFjdXRnU0NvQWROM05oamF5eWZ2WWx5TGp1dFN4L1llSFM2N2IxMG5oY3FQV2ZRdiswWnB3dW1Tblp1R2JzCm8xdDF4aUhRRFFJeGxnNnZ6NjV2TXM0RDhYMGIrNkZlYVE2QVhJU0FFNENla0V6aTBGVjFFUUZuV2FOU24yT1AKNERoR2VsajJHRWpValNybDNQY0JnWG1Za1hMSHMvMFB5a3JjVnI0WWtudHJ4Wkp1WWd6cURTS1NJQk91WkpXVwpabkZXb0x1aWZEWXJZVjI1WXUzVXoyY2JYSUxDaVRvc1BRUDBhU3hMV25vMXJlV0VZWVFnNTdHbHBWVkxHcXVWCjNTRndWQjJwTE1NKy9WYi9JRGJWa0E9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBekN6aElsejNLclJ2MzNpRkFid243dVNYVE8zeStWUnpMMFpqYkVXWnFOTENWdG9QCnliQnVTTkkwQ3NhUW5YRXY4N0JCMHlWZERZczdDQWNPTXNOQkwwcVIzU0JwVnh6c1h1aWpWMXJrc0ZVQ2Vjcm0KeGdDa25iSjNOaWxDT3l2UjhBTy9kMHJDLzF2U0s5MTM2NmwzaXA3cWxSLzRIV05uZFpFeGxFbXAvdUVGanVFYQpsTkk1Z2FCNHRvSkNvd3ZScmZaOVJVYUd2Qmxxako0OHJSbWlCSW44Wk8xOEQyWm1vT0w3MmZXUm1rRTlydVVICkRHN1BIdzVlbkltbGw5Y2NlMS83clhlRlBuTVhMVjRrOEc4WUVlRFhGUjZ4ODRzS1RyS2lMT28wbnJXSmpsd2wKNHg3MUp5Z1o1WUlzN2g2N0l6SFZOemZtaEFpWGYyTThkMWJ0M3dJREFRQUJBb0lCQVFDSmZWOU5xSlM0cVREOApwMGZKMTA1OHpHb21YOFhTcUUrNGNnblppelRpUHFxbm1jZ3Y1U01lM280MUEybTIyOVdTb0FwemlTR1VVVUc3Ck1pVVpnZXFQVWdQUGlGZm5WWTdHaXBvVDVSMUNzTHd1RDdnL2RZZGt1aDBVMTh2RjFNaFdlKytmQVRVMmlEcUwKVjJPOXlpeTVxRElIb2JPTzlyVmdzaGxVNWhZWGozTzY0UHdhanltSlVCNjZkK25RYVNnVXdtNGFMNzdVOCtyeApTQlFkOG16Vy8xMGgxQ3RXMkozYVcxbGwyaDJvZTlEUGVmWHhwUElsamhWbkZBRzZQQkhvb1Bna0hDOXM3OWJnCkpPck1IcGxneVRmeGxRNi82VU9wcEd0ZjlzOElsdnVMQkd0bDQvMEt6UVo2L2VRcXJONCsyelFOSkhVUmM1YXgKNVBvOVd5YmhBb0dCQU5qVU1jeG03N0VnbHFKWk5KWDFtVHJ3STFrMDhLR1J6UlZDMU83ZzhIWUZ3ekgveWJuNgpVTlUraTFqMDJMdXB2SVhFQ1ZRblJIcy81Tk00TXFqOE9xQ0l5L0pkUWFCeCtwcUQ3TlhJcnZhaEkyMzI0WE1ICjRuQzRzZHc0Rm5oWlNJTTg1d0VnU3hkVG1wNzBCdlNPckFwOGVsT2wzbG4yOWljb0pGaE56OFY3QW9HQkFQRVAKZk8vME9yb3JrWjhzSk5YY2RvM0FNenRKVzZxMlorWXBXcVBlaTlDNnBxZE9GMmhFMERXdy9uSG4vaDRiL2hZZwpUVmJscUxkYUtTSUZGVE9FUWkxREFieDdSZ3U2SHdvL1ZnRlpaYWNwM016YUlkMDYwOXBnR2drYW5MLzJ4MkI1ClVoMjNrK0RsYmlZTEFLcU5WbmcrL1pBTFpTOGx2cWJLT0JHYWhPSHRBb0dBUzY2TkR6Wml0V1dWam1jcWxxa1oKNmR1Rnl3NVNhMkt6dlpjTk1hL3IzcFlXVXE1Z1gvekNHQnh6a1FJdFlCdFh4U3p1d0tQUUlHRGw0dCs3dHdZTApCSnVhN0NhbTBIVFlMdlNiUnVkOFFuTnVKV1RGdmx2aktzc2NzYXdXRTcyK05LaWVUT05Uc25tby81QlhtU2J2Clg5Mmc2Tzk5VTlPQ2lacFdUVWdqbkY4Q2dZQkx1RnU4Vy9FZWpaVCtkTFZWWUJ6MVJjeFI4U2NVSnB2WVZtRWMKWEVsNjFVYUlBeVdqSVFwdDh4eloxcytoMFpVc2loVUJHTDY0YVYvR1NlWncramgzVXpiMlo1cUhFSDJ6a0ZXSgpzdlVWWHpiMk9nYXRJVTl1cHdWR21zOW1GVFJuZjNSbDFVWmtQRzB2RWdHeGtSZjZTWDhJZ2l2VWRYeS9rNEd0Ck5lWkx1UUtCZ0NjNkdseE9TNDhhRWRlSmZ
tQVR2OXY5eVhHWW1Ta2w5YnRpV25iL2dud1RFOHlTaTFPdXY5a3EKNllaellwNmNQN0FUNUQvc29yb25pTFRsanlOUXJ3bUh1WjhKUkJFbzExc3dMZlRoTlB2R2ZHSEFBRTJ6eDZBMQpQZXhQd2lwczhOVFl6ZG5JczA0VkZ2YVI0V2lidUFXZmxCRUFiUUVtYnhnM1A2MmYwbnBvCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InhpRVlPQzVJRER0TUR2OURobHI4Wnh0Z192eVo1SndMOGdfaEprNFg2RmMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTlkbXNkIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI3ZDBkNzFkNS04NDU0LTQwYzEtODc3Zi02M2U1NmU0ZmNlZGEiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.AfUDqSA8YtMo7UJdQ_NMMU5BylS_fbf7jb_vTuYKsqVlxVjWe_1AgnaicyG0pO4UnL6_vkzqY3MigTqwlyuYKXrDb58F4MwjzHjMKMLHlesjo9WkzMptdq83fIU_8FQ731TROGaZsXGuBu1zOppiWqag-43d0Lqv2BBVl70-6-F5BAJ_XM5NSbFz7slxIUjbWJ4szauNCnUhy8z89bH4JIwVCD_lqvsC0rvCM8kgEaHHv9qIYL1uFfK8Y5bFy7BMXWHhJo5VwRvQ6-8Nz4bXgfDKWeBgovrnkR71WrgGtK0LZHPYZZo-GrxkVn4ixb0AOdgYxruXgkjs1otwoNvoig

14. Cluster verification

Install busybox

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: busybox:1.28
    command:
    - sleep
    - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
EOF

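Pods must be able to resolve Services

Pods must be able to resolve Services in other namespaces

Every node must be able to reach the kubernetes Service on port 443 and the kube-dns Service on port 53

Pods must be able to reach each other:

a) within the same namespace

b) across namespaces

c) across machines

Verify DNS resolution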

[root@k8s-master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h9m

# Pods must be able to resolve Services

[root@k8s-master01 ~]# kubectl exec busybox -n default -- nslookup kubernetes

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

# Pods must be able to resolve Services in other namespaces

[root@k8s-master01 ~]# kubectl exec busybox -n default -- nslookup kube-dns.kube-system

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kube-dns.kube-system

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local
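
Both lookups above rely on the cluster DNS naming scheme, in which a Service is published as <service>.<namespace>.svc.<cluster-domain>. The sketch below only builds that fully qualified name. The helper name svc_fqdn is made up for illustration, and the default domain cluster.local is taken from the nslookup output above; adjust it if your cluster uses a different domain.

```shell
# svc_fqdn is a hypothetical helper (not part of kubectl).
# Usage: svc_fqdn SERVICE [NAMESPACE] [CLUSTER_DOMAIN]
svc_fqdn() {
  local name="$1" namespace="${2:-default}" domain="${3:-cluster.local}"
  printf '%s.%s.svc.%s\n' "$name" "$namespace" "$domain"
}

svc_fqdn kubernetes default
svc_fqdn kube-dns kube-system
```

Inside the busybox Pod the short name kubernetes is enough because the Pod's DNS search list includes its own namespace; a Service in another namespace needs at least <service>.<namespace>, as in the second nslookup.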

Every node must be able to reach the kubernetes Service on port 443 and the kube-dns Service on port 53

[root@k8s-master01 ~]# telnet 10.96.0.1 443

Trying 10.96.0.1...

Connected to 10.96.0.1.

Escape character is '^]'.

[root@k8s-master02 ~]# telnet 10.96.0.1 443

[root@k8s-master03 ~]# telnet 10.96.0.1 443

[root@k8s-node01 ~]# telnet 10.96.0.1 443

[root@k8s-node02 ~]# telnet 10.96.0.1 443

[root@k8s-node03 ~]# telnet 10.96.0.1 443

[root@k8s-master01 ~]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 63m

metrics-server ClusterIP 10.100.238.136 <none> 443/TCP 41m

[root@k8s-master01 ~]# telnet 10.96.0.10 53

Trying 10.96.0.10...

Connected to 10.96.0.10.

Escape character is '^]'.

Connection closed by foreign host.

[root@k8s-master02 ~]# telnet 10.96.0.10 53

[root@k8s-master03 ~]# telnet 10.96.0.10 53

[root@k8s-node01 ~]# telnet 10.96.0.10 53

[root@k8s-node02 ~]# telnet 10.96.0.10 53

[root@k8s-node03 ~]# telnet 10.96.0.10 53

[root@k8s-master01 ~]# curl 10.96.0.10:53

curl: (52) Empty reply from server

[root@k8s-master02 ~]# curl 10.96.0.10:53

[root@k8s-master03 ~]# curl 10.96.0.10:53

[root@k8s-node01 ~]# curl 10.96.0.10:53

[root@k8s-node02 ~]# curl 10.96.0.10:53

[root@k8s-node03 ~]# curl 10.96.0.10:53
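
The telnet and curl checks above can also be scripted. The following is a minimal sketch, assuming bash (for its /dev/tcp pseudo-device) and the coreutils timeout command are available on each node; check_port is a hypothetical helper name. Like telnet, it tests TCP only, so it does not exercise kube-dns over UDP.

```shell
# check_port is a hypothetical helper. It tries to open a TCP connection
# to HOST:PORT within 3 seconds and reports the result.
check_port() {
  local host="$1" port="$2"
  if timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "OK   ${host}:${port}"
  else
    echo "FAIL ${host}:${port}"
    return 1
  fi
}

# Run on every node (ClusterIPs taken from the kubectl get svc output above):
#   check_port 10.96.0.1 443
#   check_port 10.96.0.10 53
```

A non-zero exit status makes the helper easy to use in a loop or a monitoring script.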

Pods must be able to reach each other

[root@k8s-master01 ~]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-6fdd497b59-mfc6t 1/1 Running 0 108m 172.31.3.102 k8s-master02.example.local <none> <none>

calico-node-8scp5 1/1 Running 0 108m 172.31.3.111 k8s-node01.example.local <none> <none>

calico-node-cj25g 1/1 Running 0 108m 172.31.3.113 k8s-node03.example.local <none> <none>

calico-node-g9gtn 1/1 Running 0 108m 172.31.3.103 k8s-master03.example.local <none> <none>

calico-node-thsfj 1/1 Running 0 108m 172.31.3.101 k8s-master01.example.local <none> <none>

calico-node-wl4lt 1/1 Running 0 108m 172.31.3.112 k8s-node02.example.local <none> <none>

calico-node-xm2cx 1/1 Running 0 108m 172.31.3.102 k8s-master02.example.local <none> <none>

coredns-847c895554-l9hpz 1/1 Running 0 69m 192.165.109.65 k8s-master03.example.local <none> <none>

metrics-server-6879599d55-mfrvm 1/1 Running 0 47m 192.167.195.129 k8s-node02.example.local <none> <none>

[root@k8s-master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox 1/1 Running 0 17m 192.162.55.65 k8s-master01.example.local <none> <none>

[root@k8s-master01 ~]# kubectl exec -it busybox

error: you must specify at least one command for the container

[root@k8s-master01 ~]# kubectl exec -it busybox -- sh

/ # ping 192.167.195.129

PING 192.167.195.129 (192.167.195.129): 56 data bytes

64 bytes from 192.167.195.129: seq=0 ttl=62 time=0.700 ms

64 bytes from 192.167.195.129: seq=1 ttl=62 time=0.474 ms

64 bytes from 192.167.195.129: seq=2 ttl=62 time=0.468 ms

64 bytes from 192.167.195.129: seq=3 ttl=62 time=0.464 ms

^C

--- 192.167.195.129 ping statistics ---

4 packets transmitted, 4 packets received, 0% packet loss

round-trip min/avg/max = 0.464/0.526/0.700 ms

/ # exit

[root@k8s-master01 ~]# kubectl create deploy nginx --image=nginx --replicas=3

deployment.apps/nginx created

[root@k8s-master01 ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 3/3 3 3 93s

[root@k8s-master01 ~]# kubectl get pod -o wide|grep nginx

nginx-6799fc88d8-9mb86 1/1 Running 0 95s 192.169.111.130 k8s-node01.example.local <none> <none>

nginx-6799fc88d8-jwlvm 1/1 Running 0 95s 192.167.195.130 k8s-node02.example.local <none> <none>

nginx-6799fc88d8-wjmw8 1/1 Running 0 95s 192.170.21.195 k8s-node03.example.local <none> <none>

[root@k8s-master01 ~]# kubectl delete deploy nginx

deployment.apps "nginx" deleted

[root@k8s-master01 ~]# kubectl delete pod busybox

pod "busybox" deleted

15. Key production settings

Docker daemon settings:

vim /etc/docker/daemon.json

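{
  "registry-mirrors": [
    "https://registry.docker-cn.com",
    "http://hub-mirror.c.163.com",
    "https://docker.mirrors.ustc.edu.cn"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "max-concurrent-downloads": 10,
  "max-concurrent-uploads": 5,
  "log-opts": {
    "max-size": "300m",
    "max-file": "2"
  },
  "live-restore": true
}

JSON does not allow comments, so the explanations are listed here instead of inside the file:

registry-mirrors: registry mirrors used to speed up image pulls

exec-opts: Kubernetes needs Docker to run with the systemd cgroup driver

max-concurrent-downloads: number of concurrent download threads

max-concurrent-uploads: number of concurrent upload threads

log-opts max-size: each Docker log file is limited to 300m

log-opts max-file: at most 2 log files are kept

live-restore: containers keep running when the Docker service restarts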

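controller-manager settings:

[root@k8s-master01 ~]# vim /lib/systemd/system/kube-controller-manager.service

# --feature-gates=RotateKubeletClientCertificate=true,RotateKubeletServerCertificate=true \ # automatic certificate issuance for bootstrap; already true by default in recent versions, so it does not need to be set

--cluster-signing-duration=876000h0m0s \ # controls the validity period of the certificates that are signed (the trailing # notes are annotations for this article; leave them out of the real unit file)

[root@k8s-master01 ~]# for NODE in k8s-master02 k8s-master03; do scp /lib/systemd/system/kube-controller-manager.service $NODE:/lib/systemd/system/; done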

kube-controller-manager.service 100% 1113 670.4KB/s 00:00

kube-controller-manager.service 100% 1113 1.0MB/s 00:00

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master02 ~]# systemctl daemon-reload

[root@k8s-master03 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl restart kube-controller-manager

[root@k8s-master02 ~]# systemctl restart kube-controller-manager

[root@k8s-master03 ~]# systemctl restart kube-controller-manager

10-kubelet.conf settings:

[root@k8s-master01 ~]# vim /etc/systemd/system/kubelet.service.d/10-kubelet.conf

Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node='' --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384 --image-pull-progress-deadline=30m"

# --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384 # changes the TLS cipher suites used by the kubelet

# --image-pull-progress-deadline=30m # if an image pull makes no progress before this deadline, the pull is cancelled. This Docker-specific flag only takes effect when the container runtime is Docker.

[root@k8s-master01 ~]# for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02 k8s-node03; do scp /etc/systemd/system/kubelet.service.d/10-kubelet.conf $NODE:/etc/systemd/system/kubelet.service.d/ ;done

kubelet-conf.yml settings:

[root@k8s-master01 ~]# vim /etc/kubernetes/kubelet-conf.yml

# Add the following settings

rotateServerCertificates: true
allowedUnsafeSysctls: # lets containers set these kernel parameters; this is a security risk, so enable only what you actually need
- "net.core*"
- "net.ipv4.*"
kubeReserved: # resources reserved for Kubernetes components
  cpu: "1"
  memory: 1Gi
  ephemeral-storage: 10Gi
systemReserved: # resources reserved for the operating system
  cpu: "1"
  memory: 1Gi
  ephemeral-storage: 10Gi

# rotateServerCertificates: true # when the certificate is close to expiring, the kubelet automatically requests a new one from kube-apiserver to rotate it. This requires the RotateKubeletServerCertificate feature gate to be enabled and the submitted CertificateSigningRequest objects to be approved.

[root@k8s-master01 ~]# for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02 k8s-node03; do scp /etc/kubernetes/kubelet-conf.yml $NODE:/etc/kubernetes/ ;done

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master02 ~]# systemctl daemon-reload

[root@k8s-master03 ~]# systemctl daemon-reload

[root@k8s-node01 ~]# systemctl daemon-reload

[root@k8s-node02 ~]# systemctl daemon-reload

[root@k8s-node03 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl restart kubelet

[root@k8s-master02 ~]# systemctl restart kubelet

[root@k8s-master03 ~]# systemctl restart kubelet

[root@k8s-node01 ~]# systemctl restart kubelet

[root@k8s-node02 ~]# systemctl restart kubelet

[root@k8s-node03 ~]# systemctl restart kubelet
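
Typing the same two commands on every node is error-prone, so the reload and restart can be driven from k8s-master01 in a loop. This is a sketch only: it assumes the passwordless root SSH that the scp loops above already depend on, and the names run_on_nodes and DRY_RUN are hypothetical. With DRY_RUN=1 the commands are printed instead of executed.

```shell
# Remote nodes, as used in the scp loops above. k8s-master01 is the local
# host, so run the same commands there directly.
NODES="k8s-master02 k8s-master03 k8s-node01 k8s-node02 k8s-node03"

# run_on_nodes is a hypothetical helper: runs a command on every node over SSH.
run_on_nodes() {
  local node
  for node in $NODES; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "ssh ${node} $*"   # only print what would be run
    else
      ssh "${node}" "$@"      # assumes passwordless root SSH
    fi
  done
}

# Preview the commands before running them for real.
DRY_RUN=1 run_on_nodes systemctl daemon-reload
DRY_RUN=1 run_on_nodes systemctl restart kubelet
```

Dropping DRY_RUN=1 runs the commands on the five remote nodes in sequence.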

Add labels:

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.example.local Ready <none> 19h v1.20.14

k8s-master02.example.local Ready <none> 19h v1.20.14

k8s-master03.example.local Ready <none> 19h v1.20.14

k8s-node01.example.local Ready <none> 19h v1.20.14

k8s-node02.example.local Ready <none> 19h v1.20.14

k8s-node03.example.local Ready <none> 19h v1.20.14

[root@k8s-master01 ~]# kubectl get node --show-labels

NAME STATUS ROLES AGE VERSION LABELS

k8s-master01.example.local Ready <none> 19h v1.20.14 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master01.example.local,kubernetes.io/os=linux,node.kubernetes.io/node=

k8s-master02.example.local Ready <none> 19h v1.20.14 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master02.example.local,kubernetes.io/os=linux,node.kubernetes.io/node=

k8s-master03.example.local Ready <none> 19h v1.20.14 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master03.example.local,kubernetes.io/os=linux,node.kubernetes.io/node=

k8s-node01.example.local Ready <none> 19h v1.20.14 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node01.example.local,kubernetes.io/os=linux,node.kubernetes.io/node=

k8s-node02.example.local Ready <none> 19h v1.20.14 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node02.example.local,kubernetes.io/os=linux,node.kubernetes.io/node=

k8s-node03.example.local Ready <none> 19h v1.20.14 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node03.example.local,kubernetes.io/os=linux,node.kubernetes.io/node=

[root@k8s-master01 ~]# kubectl label node k8s-master01.example.local node-role.kubernetes.io/control-plane='' node-role.kubernetes.io/master=''

node/k8s-master01.example.local labeled

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.example.local Ready control-plane,master 84m v1.20.14

k8s-master02.example.local Ready <none> 84m v1.20.14

k8s-master03.example.local Ready <none> 84m v1.20.14

k8s-node01.example.local Ready <none> 84m v1.20.14

k8s-node02.example.local Ready <none> 84m v1.20.14

k8s-node03.example.local Ready <none> 84m v1.20.14

[root@k8s-master01 ~]# kubectl label node k8s-master02.example.local node-role.kubernetes.io/control-plane='' node-role.kubernetes.io/master=''

node/k8s-master02.example.local labeled

[root@k8s-master01 ~]# kubectl label node k8s-master03.example.local node-role.kubernetes.io/control-plane='' node-role.kubernetes.io/master=''

node/k8s-master03.example.local labeled

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01.example.local Ready control-plane,master 85m v1.20.14

k8s-master02.example.local Ready control-plane,master 85m v1.20.14

k8s-master03.example.local Ready control-plane,master 85m v1.20.14

k8s-node01.example.local Ready <none> 85m v1.20.14

k8s-node02.example.local Ready <none> 85m v1.20.14

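k8s-node03.example.local Ready <none> 85m v1.20.14

Installation summary:

1. kubeadm

2. Binary installation

3. Automated installation

a) Ansible

i. Installing the master nodes does not need to be automated.

ii. Adding worker nodes: use a playbook.

4. Details to watch during installation

a) The detailed settings above

b) In production, etcd must be kept on a disk separate from the system disk, and that disk must be an SSD.

c) The Docker data disk should also be separate from the system disk; use an SSD if possible.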

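16. How TLS bootstrapping works

16.1 Kubelet startup process with bootstrapping

Official TLS bootstrapping documentation:

https://kubernetes.io/docs/reference/command-line-tools-reference/kubelet-tls-bootstrapping/#initialization-process

https://kubernetes.io/zh/docs/reference/command-line-tools-reference/kubelet-tls-bootstrapping/

TLS bootstrapping

In a Kubernetes cluster, the components on the worker nodes (kubelet and kube-proxy) need to communicate with the Kubernetes control plane components, specifically kube-apiserver. To ensure that this communication is kept private and not interfered with, and that each component of the cluster is talking to another trusted component, we strongly recommend using client TLS certificates on nodes.

The normal process of bootstrapping these components, especially worker nodes that need certificates to communicate safely with kube-apiserver, can be challenging, because it is often outside the scope of Kubernetes and requires significant additional work. This in turn makes it challenging to initialize or scale a cluster.

To simplify the process, Kubernetes introduced a certificate request and signing API starting in version 1.4. The proposal is linked from the official documentation above.

This document describes the node initialization process, how to set up TLS client certificate bootstrapping for kubelets, and how it works.

Initialization process

When a worker node starts up, the kubelet does the following:

1. Looks for its kubeconfig file, usually located at /etc/kubernetes/kubelet.kubeconfig

2. Retrieves the URL of the API server and credentials, normally a TLS key and signed certificate from the kubeconfig file

3. Attempts to communicate with the API server using those credentials

Assuming that kube-apiserver successfully validates the kubelet's credentials, it treats the kubelet as a valid node and begins to assign Pods to it.

Note that the process above depends on:

1. The existence of a key and certificate on the local host, in the kubeconfig

2. The certificate having been signed by a Certificate Authority (CA) trusted by kube-apiserver

Whoever deploys and manages the cluster is responsible for all of the following:

1. Creating the CA key and certificate

2. Distributing the CA certificate to the control plane nodes where kube-apiserver is running

3. Creating a key and certificate for each kubelet; a unique key and certificate with a unique CN for each kubelet is strongly recommended

4. Signing the kubelet certificate using the CA key

5. Distributing the kubelet key and signed certificate to the specific node on which the kubelet is running

The TLS bootstrapping described here is intended to simplify, and partially or even completely automate, steps 3 onwards, as these are the most common operations when initializing or scaling a cluster.

Check the validity period of the certificate in kubelet.kubeconfig:

First, base64-decode the certificate-authority-data field of kubelet.kubeconfig (this field holds the cluster CA certificate):

[root@k8s-node01 ~]# more /etc/kubernetes/kubelet.kubeconfig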

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQ1RENDQXN5Z0F3SUJBZ0lVSWJmT2dZVFVIekNaV0VYQWY3VUJ5dnREMHRJd0RRWUpLb1p

JaHZjTkFRRUwKQlFBd2R6RUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEV6QVJCZ05WQkFvVENrdDFZbVZ5Ym1WMF

pYTXhHakFZQmdOVkJBc1RFVXQxWW1WeWJtVjBaWE10CmJXRnVkV0ZzTVJNd0VRWURWUVFERXdwcmRXSmxjbTVsZEdWek1DQVhEVEl5TURFeU1qQTRNVGd3TUZvWUR6SXgKTWpFeE1qSTVNR

Gd4T0RBd1dqQjNNUXN3Q1FZRFZRUUdFd0pEVGpFUU1BNEdBMVVFQ0JNSFFtVnBhbWx1WnpFUQpNQTRHQTFVRUJ4TUhRbVZwYW1sdVp6RVRNQkVHQTFVRUNoTUtTM1ZpWlhKdVpYUmxjekVh

TUJnR0ExVUVDeE1SClMzVmlaWEp1WlhSbGN5MXRZVzUxWVd3eEV6QVJCZ05WQkFNVENtdDFZbVZ5Ym1WMFpYTXdnZ0VpTUEwR0NTcUcKU0liM0RRRUJBUVVBQTRJQkR3QXdnZ0VLQW9JQkF

RQzhYbUNkcExaVmpZSU9HbXI3OWpYZUNPSzFnb241V2QySgpkZGozLzI1Z0FWR2xWTm9tZzJtblc3eU0vSGtxK0pCZVpKcFFkaUt1cmlaaGZOTVJMZmQ1NEFuV3lTSmVnQlJXCnZuWUZEWk

d1MlkrTlBiOW9rYm16enMxUnBKYUxweG1nR2wvWnBCSTYxdHVza3JUWG8xNlh3Y2Vhdm1EMHpUcW8KdThEVzNRTFFCUDV1MVZFNjlGSWNiaEtxQ08xaWhnKytLTHo2dnBBbXFHOWlmN1JLM

jVvcVFzMzdueG5KQ3BMdgoxZEFmZmtxVmRLRVAwWEpIaC9neHBkbEVlL3VxbzdtZGZmMTY0MEhKRnRETk5EaWF3Tkh1cDlNWFdFTjY4QnVxCkd5ZE9oazZZSDdMd2thVW9VQXF6QmJNY1R6

QzljMG95SmVrQ21wZlVkcW4xVDNURWFUeEpBZ01CQUFHalpqQmsKTUE0R0ExVWREd0VCL3dRRUF3SUJCakFTQmdOVkhSTUJBZjhFQ0RBR0FRSC9BZ0VDTUIwR0ExVWREZ1FXQkJUcQpGY2x

pUlZZMW1Rby9DUXlkemlwSm5LcThQVEFmQmdOVkhTTUVHREFXZ0JUcUZjbGlSVlkxbVFvL0NReWR6aXBKCm5LcThQVEFOQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBcTR2d0kwanlrbmZabk

1EL1hGUDFtUVlQdGYwRitqa2sKQlUvWDRKb0JiN1V5dUFlbGQ3ckdBWEVJK016QWUvSmNRSGFIUXowY3gzUm9palpKL01wUWN1R2k2S1NKUVVIRgp0a29Eb2JIV3RuOW9SMGs2TXZYZEpPU

nRiRHJ1WjlNL0xKTVFFemhVQSt4NWlLSXN0Q2VhV3hBM25BbWNUZUVNCjMrY2tNeXg1aGNWbWM4MXppaG1Ed3phTW9xYmVoNklXSlR4TlIzbFE4dlFqdlhwNXdqTnpZZzc4VjZCVTNhcVgK

U2VvU0k3QnRqcjNLRG9NcWRoaldCalpnTlljK1FjQTNMQmVRM3NqYjY5Sk1Ud0wrY1hXMFMrVEwwdTJWSURUOQpwR1lReDhQM21paSt1eFpPaVRibFFmaGxjUGR4RUZZM0JZZDlmRzgrc2w

vUzg1dnU5RzVZM1E9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://172.31.3.188:6443

name: default-cluster

contexts:

- context:

cluster: default-cluster

namespace: default

user: default-auth

name: default-context

current-context: default-context

[root@k8s-node01 ~]# echo "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQ1RENDQXN5Z0F3SUJBZ0lVSWJmT2dZVFVIekNaV0VYQWY3VUJ5dnREMHRJd0RRWUpLb1p

JaHZjTkFRRUwKQlFBd2R6RUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEV6QVJCZ05WQkFvVENrdDFZbVZ5Ym1WMF

pYTXhHakFZQmdOVkJBc1RFVXQxWW1WeWJtVjBaWE10CmJXRnVkV0ZzTVJNd0VRWURWUVFERXdwcmRXSmxjbTVsZEdWek1DQVhEVEl5TURFeU1qQTRNVGd3TUZvWUR6SXgKTWpFeE1qSTVNR

Gd4T0RBd1dqQjNNUXN3Q1FZRFZRUUdFd0pEVGpFUU1BNEdBMVVFQ0JNSFFtVnBhbWx1WnpFUQpNQTRHQTFVRUJ4TUhRbVZwYW1sdVp6RVRNQkVHQTFVRUNoTUtTM1ZpWlhKdVpYUmxjekVh

TUJnR0ExVUVDeE1SClMzVmlaWEp1WlhSbGN5MXRZVzUxWVd3eEV6QVJCZ05WQkFNVENtdDFZbVZ5Ym1WMFpYTXdnZ0VpTUEwR0NTcUcKU0liM0RRRUJBUVVBQTRJQkR3QXdnZ0VLQW9JQkF

RQzhYbUNkcExaVmpZSU9HbXI3OWpYZUNPSzFnb241V2QySgpkZGozLzI1Z0FWR2xWTm9tZzJtblc3eU0vSGtxK0pCZVpKcFFkaUt1cmlaaGZOTVJMZmQ1NEFuV3lTSmVnQlJXCnZuWUZEWk

d1MlkrTlBiOW9rYm16enMxUnBKYUxweG1nR2wvWnBCSTYxdHVza3JUWG8xNlh3Y2Vhdm1EMHpUcW8KdThEVzNRTFFCUDV1MVZFNjlGSWNiaEtxQ08xaWhnKytLTHo2dnBBbXFHOWlmN1JLM

jVvcVFzMzdueG5KQ3BMdgoxZEFmZmtxVmRLRVAwWEpIaC9neHBkbEVlL3VxbzdtZGZmMTY0MEhKRnRETk5EaWF3Tkh1cDlNWFdFTjY4QnVxCkd5ZE9oazZZSDdMd2thVW9VQXF6QmJNY1R6

QzljMG95SmVrQ21wZlVkcW4xVDNURWFUeEpBZ01CQUFHalpqQmsKTUE0R0ExVWREd0VCL3dRRUF3SUJCakFTQmdOVkhSTUJBZjhFQ0RBR0FRSC9BZ0VDTUIwR0ExVWREZ1FXQkJUcQpGY2x

pUlZZMW1Rby9DUXlkemlwSm5LcThQVEFmQmdOVkhTTUVHREFXZ0JUcUZjbGlSVlkxbVFvL0NReWR6aXBKCm5LcThQVEFOQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBcTR2d0kwanlrbmZabk

1EL1hGUDFtUVlQdGYwRitqa2sKQlUvWDRKb0JiN1V5dUFlbGQ3ckdBWEVJK016QWUvSmNRSGFIUXowY3gzUm9palpKL01wUWN1R2k2S1NKUVVIRgp0a29Eb2JIV3RuOW9SMGs2TXZYZEpPU

nRiRHJ1WjlNL0xKTVFFemhVQSt4NWlLSXN0Q2VhV3hBM25BbWNUZUVNCjMrY2tNeXg1aGNWbWM4MXppaG1Ed3phTW9xYmVoNklXSlR4TlIzbFE4dlFqdlhwNXdqTnpZZzc4VjZCVTNhcVgK

U2VvU0k3QnRqcjNLRG9NcWRoaldCalpnTlljK1FjQTNMQmVRM3NqYjY5Sk1Ud0wrY1hXMFMrVEwwdTJWSURUOQpwR1lReDhQM21paSt1eFpPaVRibFFmaGxjUGR4RUZZM0JZZDlmRzgrc2w

vUzg1dnU5RzVZM1E9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==" |base64 --decode >/tmp/1

Then use OpenSSL to view the certificate's expiry date:

[root@k8s-node01 ~]# openssl x509 -in /tmp/1 -noout -dates

notBefore=Jan 22 08:18:00 2022 GMT

notAfter=Dec 29 08:18:00 2121 GMT
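
Copying the base64 string by hand is fiddly, so the decode step can be wrapped in a small function. The sketch below is one possible approach, assuming the field and its value sit on a single line in the file on disk (the line breaks in the output above come from terminal wrapping). kubeconfig_field is a hypothetical helper name.

```shell
# kubeconfig_field is a hypothetical helper: it prints the base64-decoded
# value of the first "<field>: <value>" line found in a kubeconfig file.
# Usage: kubeconfig_field FILE FIELD
kubeconfig_field() {
  local file="$1" field="$2"
  awk -v key="${field}:" '$1 == key { print $2; exit }' "$file" | base64 -d
}

# Example (run on a node):
#   kubeconfig_field /etc/kubernetes/kubelet.kubeconfig certificate-authority-data \
#     | openssl x509 -noout -dates
```

Because openssl x509 reads from standard input when -in is omitted, no temporary file such as /tmp/1 is needed.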

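16.2 TLS bootstrapping initialization flow

Bootstrap initialization

During bootstrap initialization, the following happens:

1. The kubelet starts

2. The kubelet sees that it does not have a kubeconfig file

3. The kubelet searches for and finds a bootstrap-kubeconfig file

4. The kubelet reads its bootstrap file, retrieving the URL of the API server and a limited-use "token"

5. The kubelet connects to the API server and authenticates using the token

a) The apiserver recognizes the token ID and looks up the bootstrap Secret that corresponds to it

[root@k8s-node01 ~]# more /etc/kubernetes/bootstrap-kubelet.kubeconfig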

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQ1RENDQXN5Z0F3SUJBZ0lVSWJmT2dZVFVIekNaV0VYQWY3VUJ5dnREMHRJd0RRWUpLb1p

JaHZjTkFRRUwKQlFBd2R6RUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEV6QVJCZ05WQkFvVENrdDFZbVZ5Ym1WMF

pYTXhHakFZQmdOVkJBc1RFVXQxWW1WeWJtVjBaWE10CmJXRnVkV0ZzTVJNd0VRWURWUVFERXdwcmRXSmxjbTVsZEdWek1DQVhEVEl5TURFeU1qQTRNVGd3TUZvWUR6SXgKTWpFeE1qSTVNR

Gd4T0RBd1dqQjNNUXN3Q1FZRFZRUUdFd0pEVGpFUU1BNEdBMVVFQ0JNSFFtVnBhbWx1WnpFUQpNQTRHQTFVRUJ4TUhRbVZwYW1sdVp6RVRNQkVHQTFVRUNoTUtTM1ZpWlhKdVpYUmxjekVh

TUJnR0ExVUVDeE1SClMzVmlaWEp1WlhSbGN5MXRZVzUxWVd3eEV6QVJCZ05WQkFNVENtdDFZbVZ5Ym1WMFpYTXdnZ0VpTUEwR0NTcUcKU0liM0RRRUJBUVVBQTRJQkR3QXdnZ0VLQW9JQkF

RQzhYbUNkcExaVmpZSU9HbXI3OWpYZUNPSzFnb241V2QySgpkZGozLzI1Z0FWR2xWTm9tZzJtblc3eU0vSGtxK0pCZVpKcFFkaUt1cmlaaGZOTVJMZmQ1NEFuV3lTSmVnQlJXCnZuWUZEWk

d1MlkrTlBiOW9rYm16enMxUnBKYUxweG1nR2wvWnBCSTYxdHVza3JUWG8xNlh3Y2Vhdm1EMHpUcW8KdThEVzNRTFFCUDV1MVZFNjlGSWNiaEtxQ08xaWhnKytLTHo2dnBBbXFHOWlmN1JLM

jVvcVFzMzdueG5KQ3BMdgoxZEFmZmtxVmRLRVAwWEpIaC9neHBkbEVlL3VxbzdtZGZmMTY0MEhKRnRETk5EaWF3Tkh1cDlNWFdFTjY4QnVxCkd5ZE9oazZZSDdMd2thVW9VQXF6QmJNY1R6

QzljMG95SmVrQ21wZlVkcW4xVDNURWFUeEpBZ01CQUFHalpqQmsKTUE0R0ExVWREd0VCL3dRRUF3SUJCakFTQmdOVkhSTUJBZjhFQ0RBR0FRSC9BZ0VDTUIwR0ExVWREZ1FXQkJUcQpGY2x

pUlZZMW1Rby9DUXlkemlwSm5LcThQVEFmQmdOVkhTTUVHREFXZ0JUcUZjbGlSVlkxbVFvL0NReWR6aXBKCm5LcThQVEFOQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBcTR2d0kwanlrbmZabk

1EL1hGUDFtUVlQdGYwRitqa2sKQlUvWDRKb0JiN1V5dUFlbGQ3ckdBWEVJK016QWUvSmNRSGFIUXowY3gzUm9palpKL01wUWN1R2k2S1NKUVVIRgp0a29Eb2JIV3RuOW9SMGs2TXZYZEpPU

nRiRHJ1WjlNL0xKTVFFemhVQSt4NWlLSXN0Q2VhV3hBM25BbWNUZUVNCjMrY2tNeXg1aGNWbWM4MXppaG1Ed3phTW9xYmVoNklXSlR4TlIzbFE4dlFqdlhwNXdqTnpZZzc4VjZCVTNhcVgK

U2VvU0k3QnRqcjNLRG9NcWRoaldCalpnTlljK1FjQTNMQmVRM3NqYjY5Sk1Ud0wrY1hXMFMrVEwwdTJWSURUOQpwR1lReDhQM21paSt1eFpPaVRibFFmaGxjUGR4RUZZM0JZZDlmRzgrc2w

vUzg1dnU5RzVZM1E9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://172.31.3.188:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: tls-bootstrap-token-user

name: tls-bootstrap-token-user@kubernetes

current-context: tls-bootstrap-token-user@kubernetes

kind: Config

preferences: {}

users:

- name: tls-bootstrap-token-user

user:

token: c8ad9c.2e4d610cf3e7426e

[root@k8s-master01 ~]# kubectl get secret -n kube-system |grep bootstrap-token

bootstrap-token-c8ad9c bootstrap.kubernetes.io/token 6 25h

[root@k8s-master01 ~]# kubectl get secret -n kube-system bootstrap-token-c8ad9c -n kube-system

NAME TYPE DATA AGE

bootstrap-token-c8ad9c bootstrap.kubernetes.io/token 6 25h

[root@k8s-master01 ~]# kubectl get secret -n kube-system bootstrap-token-c8ad9c -n kube-system -o yaml

apiVersion: v1

data:

auth-extra-groups: c3lzdGVtOmJvb3RzdHJhcHBlcnM6ZGVmYXVsdC1ub2RlLXRva2VuLHN5c3RlbTpib290c3RyYXBwZXJzOndvcmtlcixzeXN0ZW06Ym9vdHN0cmFwcGVyczppbmdyZXNz

description: VGhlIGRlZmF1bHQgYm9vdHN0cmFwIHRva2VuIGdlbmVyYXRlZCBieSAna3ViZWxldCAnLg==

token-id: YzhhZDlj

token-secret: MmU0ZDYxMGNmM2U3NDI2ZQ==

usage-bootstrap-authentication: dHJ1ZQ==

usage-bootstrap-signing: dHJ1ZQ==

kind: Secret

metadata:

creationTimestamp: "2022-01-22T10:15:34Z"

managedFields:

- apiVersion: v1

fieldsType: FieldsV1

fieldsV1:

f:data:

.: {}

f:auth-extra-groups: {}

f:description: {}

f:token-id: {}

f:token-secret: {}

f:usage-bootstrap-authentication: {}

f:usage-bootstrap-signing: {}

f:type: {}

manager: kubectl-create

operation: Update

time: "2022-01-22T10:15:34Z"

name: bootstrap-token-c8ad9c

namespace: kube-system

resourceVersion: "3179"

uid: 8eaae737-db38-45ec-81d8-2563b2b4db0b

type: bootstrap.kubernetes.io/token

[root@k8s-master01 ~]# echo "YzhhZDlj" |base64 -d

c8ad9c

[root@k8s-master01 ~]# echo "MmU0ZDYxMGNmM2U3NDI2ZQ==" |base64 -d

2e4d610cf3e7426e
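
The two decoded values fit together as follows: the token in the bootstrap kubeconfig has the form <token-id>.<token-secret>, and the Secret is named bootstrap-token-<token-id>. The sketch below rebuilds both strings from the base64 values shown in the Secret above; the variable names are chosen for illustration only.

```shell
# Base64 values copied from the Secret output above.
token_id_b64="YzhhZDlj"
token_secret_b64="MmU0ZDYxMGNmM2U3NDI2ZQ=="

token_id="$(printf '%s' "$token_id_b64" | base64 -d)"
token_secret="$(printf '%s' "$token_secret_b64" | base64 -d)"

# The kubelet presents "<token-id>.<token-secret>"; the apiserver uses the
# token ID to find the Secret "bootstrap-token-<token-id>" in kube-system.
bootstrap_token="${token_id}.${token_secret}"
secret_name="bootstrap-token-${token_id}"

echo "bootstrap token: ${bootstrap_token}"
echo "secret name:     ${secret_name}"
```

The result matches the token field in bootstrap-kubelet.kubeconfig and the Secret name listed by kubectl get secret.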

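b) Using the fields of this Secret, the apiserver treats the token as a user named system:bootstrap:<token-id> that belongs to the system:bootstrappers group, plus any groups listed in auth-extra-groups. The group is allowed to request CSRs because a ClusterRoleBinding ties it to the ClusterRole system:node-bootstrapper

i. # ClusterRole: a cluster-level permission set in Kubernetes; it applies across the whole cluster

ii. # ClusterRoleBinding: binds a ClusterRole to a user, a group, or a ServiceAccount

c) CSR: comparable to an application form that is submitted to request a certificate.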

[root@k8s-master01 ~]# echo "c3lzdGVtOmJvb3RzdHJhcHBlcnM6ZGVmYXVsdC1ub2RlLXRva2VuLHN5c3RlbTpib290c3RyYXBwZXJzOndvcmtlcixzeXN0ZW06Ym9vdHN0cmFwcGVyczppbmdyZXNz" |base64 -d

system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress

[root@k8s-master01 ~]# kubectl get clusterrole |grep system:node-bootstrapper

system:node-bootstrapper 2022-01-22T09:33:13Z

[root@k8s-master01 ~]# kubectl get clusterrole system:node-bootstrapper -o yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

creationTimestamp: "2022-01-22T09:33:13Z"

labels:

kubernetes.io/bootstrapping: rbac-defaults

managedFields:

- apiVersion: rbac.authorization.k8s.io/v1

fieldsType: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.: {}

f:rbac.authorization.kubernetes.io/autoupdate: {}

f:labels:

.: {}

f:kubernetes.io/bootstrapping: {}

f:rules: {}

manager: kube-apiserver

operation: Update

time: "2022-01-22T09:33:13Z"

name: system:node-bootstrapper

resourceVersion: "98"

uid: 02b0597e-7b48-4e42-b95c-451b2e2b027c

rules:

- apiGroups:

- certificates.k8s.io

resources:

- certificatesigningrequests

verbs:

- create

- get

- list

- watch

[root@k8s-master01 ~]# kubectl get clusterrolebinding |grep kubelet-bootstrap

kubelet-bootstrap ClusterRole/system:node-bootstrapper 25h

[root@k8s-master01 ~]# kubectl get clusterrolebinding kubelet-bootstrap -o yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

creationTimestamp: "2022-01-22T10:15:34Z"

managedFields:

- apiVersion: rbac.authorization.k8s.io/v1

fieldsType: FieldsV1

fieldsV1:

f:roleRef:

f:apiGroup: {}

f:kind: {}

f:name: {}

f:subjects: {}

manager: kubectl-create

operation: Update

time: "2022-01-22T10:15:34Z"

name: kubelet-bootstrap

resourceVersion: "3180"

uid: 7a0db396-e6de-4ceb-b9b4-0311e0c6d2eb

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:node-bootstrapper

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: Group

name: system:bootstrappers:default-node-token
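
The chain from token to permission can be checked by hand: the token's auth-extra-groups must contain the group named as the subject of the kubelet-bootstrap ClusterRoleBinding. The sketch below performs that comparison on the literal values from the output above; in_groups is a hypothetical helper, and nothing here queries the cluster.

```shell
# auth-extra-groups from the bootstrap Secret and the Group subject of the
# kubelet-bootstrap ClusterRoleBinding, both copied from the output above.
extra_groups_b64="c3lzdGVtOmJvb3RzdHJhcHBlcnM6ZGVmYXVsdC1ub2RlLXRva2VuLHN5c3RlbTpib290c3RyYXBwZXJzOndvcmtlcixzeXN0ZW06Ym9vdHN0cmFwcGVyczppbmdyZXNz"
binding_group="system:bootstrappers:default-node-token"

extra_groups="$(printf '%s' "$extra_groups_b64" | base64 -d)"

# in_groups is a hypothetical helper: succeeds if $1 is one of the
# comma-separated entries in $2.
in_groups() {
  case ",$2," in
    *",$1,"*) return 0 ;;
    *) return 1 ;;
  esac
}

if in_groups "$binding_group" "$extra_groups"; then
  echo "token group ${binding_group} is bound to system:node-bootstrapper"
else
  echo "token is not covered by the kubelet-bootstrap binding"
fi
```

If the group were missing from auth-extra-groups, the kubelet would authenticate successfully but would not be allowed to create its CSR.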

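6. The kubelet now has limited credentials to create and retrieve a certificate signing request (CSR)

7. The kubelet creates a CSR for itself with the signerName set to kubernetes.io/kube-apiserver-client-kubelet

8. The CSR is approved in one of two ways:

a) If configured, an outside process or a person (for example the cluster administrator) approves the CSR using the Kubernetes API or kubectl

b) If the required permissions are configured, kube-controller-manager approves the CSR automatically

i. kube-controller-manager contains a CSRApprovingController. It checks whether the username and group that submitted the kubelet's CSR are permitted to create CSRs, and also verifies that the signer is kubernetes.io/kube-apiserver-client-kubelet

ii. kube-controller-manager approves the CSR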

[root@k8s-master01 ~]# kubectl get clusterrolebinding |grep node-autoapprove-bootstrap

node-autoapprove-bootstrap ClusterRole/system:certificates.k8s.io:certificatesigningrequests:nodeclient 26h

[root@k8s-master01 ~]# kubectl get clusterrolebinding node-autoapprove-bootstrap -o yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

creationTimestamp: "2022-01-22T10:15:34Z"

managedFields:

- apiVersion: rbac.authorization.k8s.io/v1

fieldsType: FieldsV1

fieldsV1:

f:roleRef:

f:apiGroup: {}

f:kind: {}

f:name: {}

f:subjects: {}

manager: kubectl-create

operation: Update

time: "2022-01-22T10:15:34Z"

name: node-autoapprove-bootstrap

resourceVersion: "3181"

uid: 52405ef8-be75-420c-9269-6924fb2d9e42

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:certificates.k8s.io:certificatesigningrequests:nodeclient

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: Group

name: system:bootstrappers:default-node-token

[root@k8s-master01 ~]# kubectl get clusterrole system:certificates.k8s.io:certificatesigningrequests:nodeclient -o yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

creationTimestamp: "2022-01-22T09:33:13Z"

labels:

kubernetes.io/bootstrapping: rbac-defaults

managedFields:

- apiVersion: rbac.authorization.k8s.io/v1

fieldsType: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.: {}

f:rbac.authorization.kubernetes.io/autoupdate: {}

f:labels:

.: {}

f:kubernetes.io/bootstrapping: {}

f:rules: {}

manager: kube-apiserver

operation: Update

time: "2022-01-22T09:33:13Z"

name: system:certificates.k8s.io:certificatesigningrequests:nodeclient

resourceVersion: "104"

uid: 8ffed872-63e7-4583-a67c-059c7b25e246

rules:

- apiGroups:

- certificates.k8s.io

resources:

- certificatesigningrequests/nodeclient

verbs:

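- create

9. The certificate is created for the kubelet

10. The certificate is issued to the kubelet

11. The kubelet retrieves the certificate

12. The kubelet creates a proper kubeconfig containing the key and the signed certificate

13. The kubelet begins normal operation

14. Optional: if configured, the kubelet automatically requests renewal of the certificate when it is close to expiry

15. The renewed certificate is approved and issued, either automatically or manually, depending on configuration

a) The CSR created by the kubelet uses the organization O=system:nodes

b) and the common name CN=system:node:<hostname>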

[root@k8s-master01 ~]# kubectl get clusterrolebinding |grep node-autoapprove-certificate-rotation

node-autoapprove-certificate-rotation ClusterRole/system:certificates.k8s.io:certificatesigningrequests:selfnodeclient 26h

[root@k8s-master01 ~]# kubectl get clusterrolebinding node-autoapprove-certificate-rotation -o yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

creationTimestamp: "2022-01-22T10:15:34Z"

managedFields:

- apiVersion: rbac.authorization.k8s.io/v1

fieldsType: FieldsV1

fieldsV1:

f:roleRef:

f:apiGroup: {}

f:kind: {}

f:name: {}

f:subjects: {}

manager: kubectl-create

operation: Update

time: "2022-01-22T10:15:34Z"

name: node-autoapprove-certificate-rotation

resourceVersion: "3182"

uid: d82bb68b-49ff-496c-b559-f8bb5f603a46

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: Group

name: system:nodes

[root@k8s-master01 ~]# kubectl get clusterrole system:certificates.k8s.io:certificatesigningrequests:selfnodeclient -o yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

creationTimestamp: "2022-01-22T09:33:13Z"

labels:

kubernetes.io/bootstrapping: rbac-defaults

managedFields:

- apiVersion: rbac.authorization.k8s.io/v1

fieldsType: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.: {}

f:rbac.authorization.kubernetes.io/autoupdate: {}

f:labels:

.: {}

f:kubernetes.io/bootstrapping: {}

f:rules: {}

manager: kube-apiserver

operation: Update

time: "2022-01-22T09:33:13Z"

name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient

resourceVersion: "105"

uid: e8939fc0-49d2-4f47-938e-71ba6d5c9924

rules:

- apiGroups:

- certificates.k8s.io

resources:

- certificatesigningrequests/selfnodeclient

verbs:

- create

The rest of this document describes the steps needed to configure TLS bootstrapping, and its limitations.