8. Deploy the worker nodes

8.1 Install the node components

Copy the kubelet and kube-proxy binaries to the worker nodes:

[root@k8s-master01 ~]# for NODE in k8s-node01 k8s-node02 k8s-node03; do echo $NODE; scp -o StrictHostKeyChecking=no /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin/ ; done

Create the /opt/cni/bin directory on each worker node:

[root@k8s-node01 ~]# mkdir -p /opt/cni/bin

[root@k8s-node02 ~]# mkdir -p /opt/cni/bin

[root@k8s-node03 ~]# mkdir -p /opt/cni/bin

8.2 Copy the etcd certificates

Create the etcd certificate directory on each worker node:

[root@k8s-node01 ~]# mkdir /etc/etcd/ssl -p

[root@k8s-node02 ~]# mkdir /etc/etcd/ssl -p

[root@k8s-node03 ~]# mkdir /etc/etcd/ssl -p

Copy the etcd certificates from the etcd node to the worker nodes:

[root@k8s-etcd01 pki]# for NODE in k8s-node01 k8s-node02 k8s-node03; do

ssh -o StrictHostKeyChecking=no $NODE "mkdir -p /etc/etcd/ssl"

for FILE in etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem; do

scp -o StrictHostKeyChecking=no /etc/etcd/ssl/${FILE} $NODE:/etc/etcd/ssl/${FILE}

done

done

On every worker node, create the etcd certificate directory under /etc/kubernetes/pki and symlink the certificates into it:

[root@k8s-node01 ~]# mkdir /etc/kubernetes/pki/etcd -p

[root@k8s-node02 ~]# mkdir /etc/kubernetes/pki/etcd -p

[root@k8s-node03 ~]# mkdir /etc/kubernetes/pki/etcd -p

[root@k8s-node01 ~]# ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

[root@k8s-node02 ~]# ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

[root@k8s-node03 ~]# ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/
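The per-node commands in 8.1 and 8.2 are identical on every worker, so they can also be driven from one machine. The following is a minimal sketch, assuming passwordless SSH from the control host to the three workers; `run_on_nodes` and the `DRY_RUN` switch are hypothetical helpers introduced here for illustration, not part of the original procedure.

```shell
# Worker host names as used throughout this guide.
NODES="k8s-node01 k8s-node02 k8s-node03"

# Run one command on every worker node.
# With DRY_RUN=1 the ssh invocations are only printed, not executed.
run_on_nodes() {
  local cmd="$1" node
  for node in $NODES; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "ssh $node $cmd"
    else
      ssh -o StrictHostKeyChecking=no "$node" "$cmd"
    fi
  done
}

# Example (not executed here):
#   run_on_nodes "mkdir -p /opt/cni/bin /etc/etcd/ssl /etc/kubernetes/pki/etcd"
#   run_on_nodes "ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/"
```

Setting `DRY_RUN=1` first is a cheap way to review exactly what would be sent to each node before running it.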

8.3 Copy the Kubernetes certificates and configuration files

Create the Kubernetes directories on each worker node:

[root@k8s-node01 ~]# mkdir -p /etc/kubernetes/pki

[root@k8s-node02 ~]# mkdir -p /etc/kubernetes/pki

[root@k8s-node03 ~]# mkdir -p /etc/kubernetes/pki

Copy the certificates from Master01 to the worker nodes:

[root@k8s-master01 ~]# for NODE in k8s-node01 k8s-node02 k8s-node03; do

for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig; do

scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}

done

done

8.4 Configure kubelet

Create the required directories on each worker node:

[root@k8s-node01 ~]# mkdir -p /etc/kubernetes/manifests/ /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes

[root@k8s-node02 ~]# mkdir -p /etc/kubernetes/manifests/ /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes

[root@k8s-node03 ~]# mkdir -p /etc/kubernetes/manifests/ /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes

Copy the kubelet service unit file from Master01 to the worker nodes:

[root@k8s-master01 ~]# for NODE in k8s-node01 k8s-node02 k8s-node03; do scp /lib/systemd/system/kubelet.service $NODE:/lib/systemd/system/ ;done

Create the 10-kubelet.conf drop-in on each worker node (only the --hostname-override address differs between nodes):

[root@k8s-node01 ~]# cat > /etc/systemd/system/kubelet.service.d/10-kubelet.conf <<EOF

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"

Environment="KUBELET_SYSTEM_ARGS=--hostname-override=172.31.3.111"

Environment="KUBELET_RUNTIME=--container-runtime=remote --container-runtime-endpoint=unix:///run/containerd/containerd.sock"

Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml"

Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node=''"

ExecStart=

ExecStart=/usr/local/bin/kubelet \$KUBELET_KUBECONFIG_ARGS \$KUBELET_CONFIG_ARGS \$KUBELET_SYSTEM_ARGS \$KUBELET_EXTRA_ARGS \$KUBELET_RUNTIME

EOF

[root@k8s-node02 ~]# cat > /etc/systemd/system/kubelet.service.d/10-kubelet.conf <<EOF

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"

Environment="KUBELET_SYSTEM_ARGS=--hostname-override=172.31.3.112"

Environment="KUBELET_RUNTIME=--container-runtime=remote --container-runtime-endpoint=unix:///run/containerd/containerd.sock"

Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml"

Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node=''"

ExecStart=

ExecStart=/usr/local/bin/kubelet \$KUBELET_KUBECONFIG_ARGS \$KUBELET_CONFIG_ARGS \$KUBELET_SYSTEM_ARGS \$KUBELET_EXTRA_ARGS \$KUBELET_RUNTIME

EOF

[root@k8s-node03 ~]# cat > /etc/systemd/system/kubelet.service.d/10-kubelet.conf <<EOF

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"

Environment="KUBELET_SYSTEM_ARGS=--hostname-override=172.31.3.113"

Environment="KUBELET_RUNTIME=--container-runtime=remote --container-runtime-endpoint=unix:///run/containerd/containerd.sock"

Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml"

Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node=''"

ExecStart=

ExecStart=/usr/local/bin/kubelet \$KUBELET_KUBECONFIG_ARGS \$KUBELET_CONFIG_ARGS \$KUBELET_SYSTEM_ARGS \$KUBELET_EXTRA_ARGS \$KUBELET_RUNTIME

EOF
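Since the three drop-in files differ only in the --hostname-override address, they can be generated from one template. This is a sketch under that assumption; `render_kubelet_dropin` is a hypothetical helper name, and the variable name KUBELET_RUNTIME inside the template is an arbitrary choice (any name works as long as the Environment= line and ExecStart use the same one).

```shell
# Print a 10-kubelet.conf drop-in for the node IP given as $1.
# The \$ escapes keep the systemd variables literal in the output.
render_kubelet_dropin() {
  local node_ip="$1"
  cat <<EOF
[Service]
Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"
Environment="KUBELET_SYSTEM_ARGS=--hostname-override=${node_ip}"
Environment="KUBELET_RUNTIME=--container-runtime=remote --container-runtime-endpoint=unix:///run/containerd/containerd.sock"
Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml"
Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node=''"
ExecStart=
ExecStart=/usr/local/bin/kubelet \$KUBELET_KUBECONFIG_ARGS \$KUBELET_CONFIG_ARGS \$KUBELET_SYSTEM_ARGS \$KUBELET_EXTRA_ARGS \$KUBELET_RUNTIME
EOF
}

# Example (not executed here):
#   render_kubelet_dropin 172.31.3.111 | ssh k8s-node01 \
#     'cat > /etc/systemd/system/kubelet.service.d/10-kubelet.conf'
```

The empty `ExecStart=` line matters: in a systemd drop-in it clears the command inherited from kubelet.service before the new one is set.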

Copy the kubelet configuration file from Master01 to the worker nodes:

[root@k8s-master01 ~]# for NODE in k8s-node01 k8s-node02 k8s-node03; do scp /etc/kubernetes/kubelet-conf.yml $NODE:/etc/kubernetes/ ;done

Start kubelet on the worker nodes:

[root@k8s-node01 ~]# systemctl daemon-reload && systemctl enable --now kubelet

[root@k8s-node02 ~]# systemctl daemon-reload && systemctl enable --now kubelet

[root@k8s-node03 ~]# systemctl daemon-reload && systemctl enable --now kubelet

[root@k8s-node01 ~]# systemctl status kubelet

● kubelet.service - Kubernetes Kubelet

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubelet.conf

Active: active (running) since Sun 2022-09-25 17:26:52 CST; 13s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 5957 (kubelet)

Tasks: 14 (limit: 23474)

Memory: 34.9M

CGroup: system.slice/kubelet.service

└─5957 /usr/local/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kube>

Sep 25 17:26:53 k8s-node01.example.local kubelet[5957]: I0925 17:26:53.683391 5957 kubelet_network_linux.go:63] "Initialized iptables rules>

Sep 25 17:26:53 k8s-node01.example.local kubelet[5957]: I0925 17:26:53.766025 5957 kubelet_network_linux.go:63] "Initialized iptables rules>

Sep 25 17:26:53 k8s-node01.example.local kubelet[5957]: I0925 17:26:53.766047 5957 status_manager.go:161] "Starting to sync pod status with>

Sep 25 17:26:53 k8s-node01.example.local kubelet[5957]: I0925 17:26:53.766064 5957 kubelet.go:2010] "Starting kubelet main sync loop"

Sep 25 17:26:53 k8s-node01.example.local kubelet[5957]: E0925 17:26:53.766104 5957 kubelet.go:2034] "Skipping pod synchronization" err="PLE>

Sep 25 17:26:54 k8s-node01.example.local kubelet[5957]: I0925 17:26:54.279327 5957 apiserver.go:52] "Watching apiserver"

Sep 25 17:26:54 k8s-node01.example.local kubelet[5957]: I0925 17:26:54.305974 5957 reconciler.go:169] "Reconciler: start to sync state"

Sep 25 17:26:58 k8s-node01.example.local kubelet[5957]: E0925 17:26:58.602184 5957 kubelet.go:2373] "Container runtime network not ready" n>

Sep 25 17:27:03 k8s-node01.example.local kubelet[5957]: I0925 17:27:03.232179 5957 transport.go:135] "Certificate rotation detected, shutti>

Sep 25 17:27:03 k8s-node01.example.local kubelet[5957]: E0925 17:27:03.603901 5957 kubelet.go:2373] "Container runtime network not ready" n>

[root@k8s-node02 ~]# systemctl status kubelet

[root@k8s-node03 ~]# systemctl status kubelet

Check the cluster status:

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

172.31.3.101 NotReady <none> 9m8s v1.25.2

172.31.3.102 NotReady <none> 9m5s v1.25.2

172.31.3.103 NotReady <none> 9m3s v1.25.2

172.31.3.111 NotReady <none> 64s v1.25.2

172.31.3.112 NotReady <none> 48s v1.25.2

172.31.3.113 NotReady <none> 46s v1.25.2
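All six nodes report NotReady at this point. That matches the "Container runtime network not ready" messages in the kubelet log above: no CNI plugin is installed until Calico is deployed in section 9. A small filter such as the one below can list the nodes that are still not ready; `not_ready_nodes` is a hypothetical helper that reads `kubectl get nodes` output on stdin.

```shell
# Print the names of nodes whose STATUS column does not start with "Ready".
# Input: the plain-text table produced by `kubectl get nodes`.
not_ready_nodes() {
  awk 'NR > 1 && $2 !~ /^Ready/ { print $1 }'
}

# Example (not executed here):
#   kubectl get nodes | not_ready_nodes
```

An empty result after Calico is running means every node has become Ready.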

8.5 Configure kube-proxy

Copy the kube-proxy files from Master01 to the worker nodes:

[root@k8s-master01 ~]# for NODE in k8s-node01 k8s-node02 k8s-node03; do

scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig

scp /etc/kubernetes/kube-proxy.conf $NODE:/etc/kubernetes/kube-proxy.conf

scp /lib/systemd/system/kube-proxy.service $NODE:/lib/systemd/system/kube-proxy.service

done

Start kube-proxy on the worker nodes:

[root@k8s-node01 ~]# systemctl daemon-reload && systemctl enable --now kube-proxy

[root@k8s-node02 ~]# systemctl daemon-reload && systemctl enable --now kube-proxy

[root@k8s-node03 ~]# systemctl daemon-reload && systemctl enable --now kube-proxy

[root@k8s-node01 ~]# systemctl status kube-proxy

● kube-proxy.service - Kubernetes Kube Proxy

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)

Active: active (running) since Sun 2022-09-25 17:28:29 CST; 26s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 6162 (kube-proxy)

Tasks: 5 (limit: 23474)

Memory: 10.8M

CGroup: system.slice/kube-proxy.service

└─6162 /usr/local/bin/kube-proxy --config=/etc/kubernetes/kube-proxy.conf --v=2

Sep 25 17:28:29 k8s-node01.example.local kube-proxy[6162]: I0925 17:28:29.391982 6162 flags.go:64] FLAG: --write-config-to=""

Sep 25 17:28:29 k8s-node01.example.local kube-proxy[6162]: I0925 17:28:29.393147 6162 server.go:442] "Using lenient decoding as strict deco>

Sep 25 17:28:29 k8s-node01.example.local kube-proxy[6162]: I0925 17:28:29.393298 6162 feature_gate.go:245] feature gates: &{map[]}

Sep 25 17:28:29 k8s-node01.example.local kube-proxy[6162]: I0925 17:28:29.393365 6162 feature_gate.go:245] feature gates: &{map[]}

Sep 25 17:28:29 k8s-node01.example.local kube-proxy[6162]: I0925 17:28:29.407927 6162 proxier.go:666] "Failed to load kernel module with mo>

Sep 25 17:28:29 k8s-node01.example.local kube-proxy[6162]: E0925 17:28:29.427017 6162 node.go:152] Failed to retrieve node info: nodes "k8s>

Sep 25 17:28:30 k8s-node01.example.local kube-proxy[6162]: E0925 17:28:30.464338 6162 node.go:152] Failed to retrieve node info: nodes "k8s>

Sep 25 17:28:32 k8s-node01.example.local kube-proxy[6162]: E0925 17:28:32.508065 6162 node.go:152] Failed to retrieve node info: nodes "k8s>

Sep 25 17:28:36 k8s-node01.example.local kube-proxy[6162]: E0925 17:28:36.760898 6162 node.go:152] Failed to retrieve node info: nodes "k8s>

Sep 25 17:28:45 k8s-node01.example.local kube-proxy[6162]: E0925 17:28:45.067601 6162 node.go:152] Failed to retrieve node info: nodes "k8s>

[root@k8s-node02 ~]# systemctl status kube-proxy

[root@k8s-node03 ~]# systemctl status kube-proxy

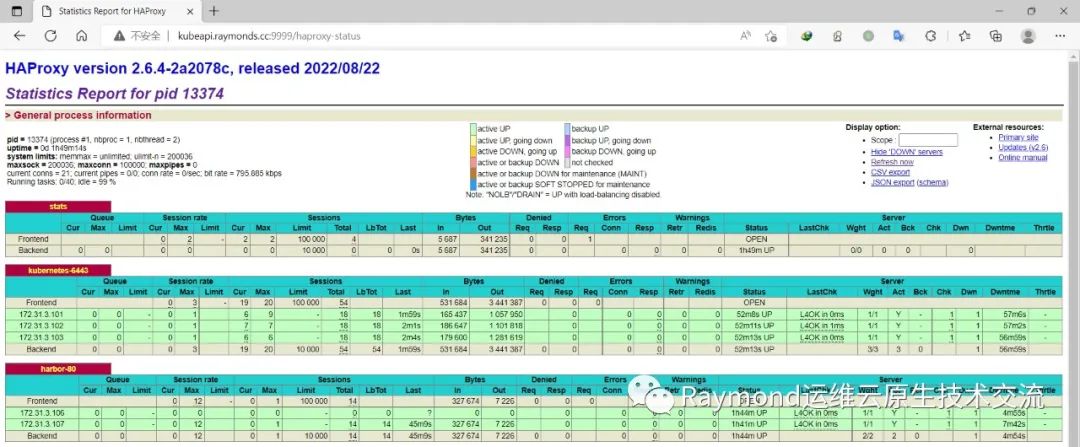

Check the HAProxy status page:

http://kubeapi.raymonds.cc:9999/haproxy-status

9. Install Calico

https://docs.projectcalico.org/maintenance/kubernetes-upgrade#upgrading-an-installation-that-uses-the-kubernetes-api-datastore

Calico installation guide: https://docs.projectcalico.org/getting-started/kubernetes/self-managed-onprem/onpremises

[root@k8s-master01 ~]# curl https://docs.projectcalico.org/manifests/calico-etcd.yaml -O

Edit the following parts of calico-etcd.yaml:

[root@k8s-master01 ~]# grep "etcd_endpoints:.*" calico-etcd.yaml

etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"

[root@k8s-master01 ~]# sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://172.31.3.108:2379,https://172.31.3.109:2379,https://172.31.3.110:2379"#g' calico-etcd.yaml

[root@k8s-master01 ~]# grep "etcd_endpoints:.*" calico-etcd.yaml

etcd_endpoints: "https://172.31.3.108:2379,https://172.31.3.109:2379,https://172.31.3.110:2379"

[root@k8s-master01 ~]# grep -E "(.*etcd-key:.*|.*etcd-cert:.*|.*etcd-ca:.*)" calico-etcd.yaml

# etcd-key: null

# etcd-cert: null

# etcd-ca: null

[root@k8s-master01 ~]# ETCD_KEY=`cat /etc/kubernetes/pki/etcd/etcd-key.pem | base64 | tr -d '\n'`

[root@k8s-master01 ~]# ETCD_CERT=`cat /etc/kubernetes/pki/etcd/etcd.pem | base64 | tr -d '\n'`

[root@k8s-master01 ~]# ETCD_CA=`cat /etc/kubernetes/pki/etcd/etcd-ca.pem | base64 | tr -d '\n'`

[root@k8s-master01 ~]# sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml

[root@k8s-master01 ~]# grep -E "(.*etcd-key:.*|.*etcd-cert:.*|.*etcd-ca:.*)" calico-etcd.yaml

etcd-key: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBdTBieEdrUE5pM3IyaGdNTlUvU0tjNitjZHVUNWk4N1FIU1Q1Tk9mY0ExTDlPcGxsCm1LTllPTXAzcSt1Wko1bHZ4Ui9QalIxZnV4QUtramQ2THBMQnNBRmxGTm1lNDh4RTJRUVlWQjFDRGFCU0lzcUMKRUxtR3BXdUhkR2RRbzE4YmUxK2hvKzFXMHpzdDV2VGNaTE4vRlhiNURQWFBLZEw4Mm5NZkRmcndGWHEyUCttWQp3M2p0RU1jc3BJcXlTZzhnQ2NpZHhySzNDYkkwYVdkZTE3OVpNU0I2bmtlbXd2Mi9wWDJ6OHZMOUNwZXFuSnR4ClFvcUd6ckpXc0w0K0xyYUVwL0FUQU9pbE42K2VsR2hWL2NHTm45LzJDNStPWTRPTWkxYXl0anVMUVgyWlpMbTIKWGdnWGdGczRHaTF4SWg5aFBGSWxnUW1zRzlxaTZKUW1hNHNuTlFJREFRQUJBb0lCQUErM2VHeDMzRHdrYWFQOQpoWlRTSlB4b2RIMFY0a3QxWThuT1hJdXdHYXE5d0RxMnZPdithVno2d09oUXNWMjlaci9vVjRiRVBGQjZuQ2lCCk4yUEpOVEFNTGV0K0IvT2VKUGtCZXZrMEsyTHhYWE5HQTN4YjFZejBaVDNEbmVUWUNucGtJRENkcm5lenM3cDYKT2pUSlM1VUZrd2tmWis5ZW9aSERyNHVBejcwOWxrSWpidHdxOURtVTFhR1F2QTMxWDFaY2dKUENGWVpOeFRCaQpRbEhGdVJ5d2hCM2g3bzBGUWs0ekEzblBkYlJrdkVXM202ak44NkdKV2oxUHlLYjZiK0c3UXR6cit5MnNQVlN3CnhjaHlSc2VJRkVWQ2lFQzNlMUFYdjRpdXp0Q2o4a3lEbVZmbkZaOGQvNXp1eittUVVWQjB3ZGFleVgyZmE3aFcKM05ZbkZORUNnWUVBeU1TZm9QaVBNVXpkdUFYSkZCM2xUdVlIVnRYYlNkRGZsc3Z2b01HT0NTU1crWUUzS3hQcwpMMERzVENyNVdvVmJqVVppRTRUQ0pHVmdLNVNFemhjOWZrRmFTM29ZR2FJMGdqamk4RStrNWJ3RU8xdkN0eFZOCnpsbjR3RUZMbFFFUFppWjV4SWl0eExuREYrT1VHVEErZVJFMytnL0ZjK3NiNmhMUkU1YjV1UWNDZ1lFQTdzd3oKQldjSS9tUzVSV2VKVU1nZUJ2Vm5OdXJDZzd0ZWRqc0ExU3Y1VXVwajI2d2traE1PbmRLNTZrR1o5Y0FrRU85RgpaMmRSeEM1QmJDWmV4Tnl1Z3lKN2dBWTNmOGNJU0hCMk00cGN2UWZZTTNUbUZHaDVVMWdpOGVEUVZabzZUZ2dyCk1TaU9ZaUJhQUEyMldUc0ppL2pzUXgzVDhscG9SV25YcjF5cnV1TUNnWUJSL3V2ckJGa0hHNHVhTXRLeTRwcmEKcEZ2dS9SeTRneFF1TkZCRDZZa205c2lxVWpuRDREa2YrM1lHamE2Vlo4M0NYektESWo5Z09mOFREVzlIOUhucQo3S29DRlhWdVVxNzdXRnhuSlVBRmk4cDJxNzFVcE9ESUhEcloybEVTSkFLMEI1Ykh5OEtjaS9tLzhmUjBiUjIyCnVHK1NNNHJERXd5dGhzM1pJRm9SVlFLQmdRRGFVQThaRWxDVG1td1Mrb3Y4TVdmYVBzS2szMDBEZCtudE54WVUKelVYOE90TWVRcXVRYkNIQndhUThlTXNUZEJ6RTZxck4xUlJZd1YwSVRhLzRWRFNyS0h3MTEva25OVVBxVGY2UwpNaDJFcDhaTmpNTEh4NWViellqdER2WUlQSjZ2TmlLZXA1QThQNDFvWFNEblJPVCtkWTB4OHZRUXJmeUQ4VGJCCldIeWJ0d0tCZ0MrNlN6YzBpR0ZyM1VoSVc1ZHFOOE
RJYkl0eHZ5REh5WWtrWlB5UytueVo5OEw4ZlZnNGNYa3UKU0p4WENKNlZwOGZJY09NR0VtakIwRFp5SlRxUTUweFpRKzhkS3F3akV0SjFwNGVNRmIxamxickhHczhGRnJhOAorWkR6dTN1RGZIZXBFZzFub1dBZEo5RWV6VWI1RWdVOWtaeHdoWHNFZzJFMDZGYjhYbm42Ci0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

etcd-cert: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUVCVENDQXUyZ0F3SUJBZ0lVYkw0ckFPcVk0UmQ3WWhrY3h2VGxpNkxZMDBzd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1p6RUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeERUQUxCZ05WQkFvVEJHVjBZMlF4RmpBVUJnTlZCQXNURFVWMFkyUWdVMlZqZFhKcGRIa3hEVEFMCkJnTlZCQU1UQkdWMFkyUXdJQmNOTWpJd09USTFNRGd4T0RBd1doZ1BNakV5TWpBNU1ERXdPREU0TURCYU1HY3gKQ3pBSkJnTlZCQVlUQWtOT01SQXdEZ1lEVlFRSUV3ZENaV2xxYVc1bk1SQXdEZ1lEVlFRSEV3ZENaV2xxYVc1bgpNUTB3Q3dZRFZRUUtFd1JsZEdOa01SWXdGQVlEVlFRTEV3MUZkR05rSUZObFkzVnlhWFI1TVEwd0N3WURWUVFECkV3UmxkR05rTUlJQklqQU5CZ2txaGtpRzl3MEJBUUVGQUFPQ0FROEFNSUlCQ2dLQ0FRRUF1MGJ4R2tQTmkzcjIKaGdNTlUvU0tjNitjZHVUNWk4N1FIU1Q1Tk9mY0ExTDlPcGxsbUtOWU9NcDNxK3VaSjVsdnhSL1BqUjFmdXhBSwpramQ2THBMQnNBRmxGTm1lNDh4RTJRUVlWQjFDRGFCU0lzcUNFTG1HcFd1SGRHZFFvMThiZTEraG8rMVcwenN0CjV2VGNaTE4vRlhiNURQWFBLZEw4Mm5NZkRmcndGWHEyUCttWXczanRFTWNzcElxeVNnOGdDY2lkeHJLM0NiSTAKYVdkZTE3OVpNU0I2bmtlbXd2Mi9wWDJ6OHZMOUNwZXFuSnR4UW9xR3pySldzTDQrTHJhRXAvQVRBT2lsTjYrZQpsR2hWL2NHTm45LzJDNStPWTRPTWkxYXl0anVMUVgyWlpMbTJYZ2dYZ0ZzNEdpMXhJaDloUEZJbGdRbXNHOXFpCjZKUW1hNHNuTlFJREFRQUJvNEdtTUlHak1BNEdBMVVkRHdFQi93UUVBd0lGb0RBZEJnTlZIU1VFRmpBVUJnZ3IKQmdFRkJRY0RBUVlJS3dZQkJRVUhBd0l3REFZRFZSMFRBUUgvQkFJd0FEQWRCZ05WSFE0RUZnUVU5VmxoYTRpaApjUzVIV3hCUEU2WkhBYldRbExnd1JRWURWUjBSQkQ0d1BJSUthemh6TFdWMFkyUXdNWUlLYXpoekxXVjBZMlF3Ck1vSUthemh6TFdWMFkyUXdNNGNFZndBQUFZY0VyQjhEYkljRXJCOERiWWNFckI4RGJqQU5CZ2txaGtpRzl3MEIKQVFzRkFBT0NBUUVBTXdEbjhldlZEWU9Jc1lyUnJhVGVTdmRPbTZjVGJNd1VaVFVJWDE5L0FPeVRVUzZMUWwzZApGZnpPWGUrV1YwOGhsOUhVd0NueWQ2RW5kVXlWTHVaVTBETGkxazhwcXV2b3pkWDZkajNHRDRGM0lINXU2ZytOClVnTUFuc1lKLzBzNElJcG9ZVWVvNFgrd3drU3RMR2ZuVlZqZnF3OS9VbGE4WEZMWTRGSm9EUGw3VzJuWTFncUUKUlpJbnRFWHFOeVZlSGQzRDNvalVJWTVtTGZiRkdHOGVmSmIwbjIwNEpBTjdBbzZhUkw4cmdiS2tLbTRIWjArYgp3bENBRmc0d2FZdlV3UEp0S3NyN2NFN1l6d0RwbGdBZG1lbzVaZ0RkeVU3WmFPUHJ5SE5YUE5LNEtMUDNFZmVHCis3bGhzc0t4T1Y3WDNxNTJBM1o2eWpab2hEZ1o4Y1RxMWc9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

etcd-ca: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURvRENDQW9pZ0F3SUJBZ0lVWVQ4Z0ZmSHFHRi9aYlljS2dPbTVUakVtdlowd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1p6RUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeERUQUxCZ05WQkFvVEJHVjBZMlF4RmpBVUJnTlZCQXNURFVWMFkyUWdVMlZqZFhKcGRIa3hEVEFMCkJnTlZCQU1UQkdWMFkyUXdJQmNOTWpJd09USTFNRGd4TnpBd1doZ1BNakV5TWpBNU1ERXdPREUzTURCYU1HY3gKQ3pBSkJnTlZCQVlUQWtOT01SQXdEZ1lEVlFRSUV3ZENaV2xxYVc1bk1SQXdEZ1lEVlFRSEV3ZENaV2xxYVc1bgpNUTB3Q3dZRFZRUUtFd1JsZEdOa01SWXdGQVlEVlFRTEV3MUZkR05rSUZObFkzVnlhWFI1TVEwd0N3WURWUVFECkV3UmxkR05rTUlJQklqQU5CZ2txaGtpRzl3MEJBUUVGQUFPQ0FROEFNSUlCQ2dLQ0FRRUF0VzFEOWZuQ1NYM2cKZitLVlJFb0JJV2RGWitlVkpwZWpWK2Nhckk3WERLWTdiNnpuTjJtMlN1ZDVvck05Q1lDWTBHenlkRk1kMHVabQpIckZ5aE5KKzhuNlRBSW9VUkVxc3d4aXRVanA3SXI2blZQTG52aTNKZ0o5WGpnZmtQbDF4cGNrRnFtbzFwd0FkCk0xQzdORlhldlVkaVF6aHhSYUh4dEo0VDhNQ256dVJ6blNTYUdjbkFWT0xQdjNJdXJkSk9NaDNta00vQXNIN0cKajZKRHNHZEdhSFk2VU1BbEQzOG9kcVFObEpDNHBLelZIbTFWV3BSREx0dFdMcFJHNDdSdjlHUmsrRUdocEVrbwpKNVl4aXpBSngrRDI5ZE5kSm16UkxLMDJrNjhTUTNSRkdaOWVpUTA0amhPWFJMNUVEVVRDQkNON1hvRjFwU0dHClBZcm9WV0czZndJREFRQUJvMEl3UURBT0JnTlZIUThCQWY4RUJBTUNBUVl3RHdZRFZSMFRBUUgvQkFVd0F3RUIKL3pBZEJnTlZIUTRFRmdRVWlVRk1Bb3Z5RlBLaWd0bE12YlUyVk56NEF4Z3dEUVlKS29aSWh2Y05BUUVMQlFBRApnZ0VCQUZ4d29tMHgyeDZWNUhVaE9IVkgyVGcyUGdacFFYMTFDS2hGM2JBQUw2M0preCtKK3pUZmJJTGRoM29HCmU3bEJPT2RpVXF5dWI4bmQxY2pIa2VqS3R5RFlOOE5MNnVRSXFOQ2M2SFB1NjZ6TEpVU0JwaFZ4YktVSEFxWGYKTWRuSkQzekoyYkM2Sml5Mi9IRW5heXM5MnllRjA1YUxOYjQ2aXB5Slh5bEk0MmNSbm11cjY0L1ZJdVQ2dkZISgp3bEZDb1FvWmVWT0hYRXJYcVA4V0ozWUpOdGpkWktsMHRQdWVYTG5Ob1VYSmlLYkE1TEFESHJaS1ptUUxWNGpHCkc1T05EV3VCNjIvY1NVMGtRbFFJeU5kMVBxVHFpdlI5Wm9iWnhoZGJIa2g1MGVxU1V3YmN1V2hzQytRelA2ZVgKRFBueUJxaThaVitSV25kR1doMXdQbVdjd2I4PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

[root@k8s-master01 ~]# grep -E "(.*etcd_ca:.*|.*etcd_cert:.*|.*etcd_key:.*)" calico-etcd.yaml

etcd_ca: "" # "/calico-secrets/etcd-ca"

etcd_cert: "" # "/calico-secrets/etcd-cert"

etcd_key: "" # "/calico-secrets/etcd-key"

[root@k8s-master01 ~]# sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml

[root@k8s-master01 ~]# grep -E "(.*etcd_ca:.*|.*etcd_cert:.*|.*etcd_key:.*)" calico-etcd.yaml

etcd_ca: "/calico-secrets/etcd-ca" # "/calico-secrets/etcd-ca"

etcd_cert: "/calico-secrets/etcd-cert" # "/calico-secrets/etcd-cert"

etcd_key: "/calico-secrets/etcd-key" # "/calico-secrets/etcd-key"

# Change this to your own Pod CIDR

[root@k8s-master01 ~]# POD_SUBNET="192.168.0.0/12"

# The next step sets CALICO_IPV4POOL_CIDR in calico-etcd.yaml to your own Pod CIDR, i.e. it replaces the default 192.168.0.0/16 with the cluster's Pod network and uncomments both lines:

[root@k8s-master01 ~]# grep -E "(.*CALICO_IPV4POOL_CIDR.*|.*192.168.0.0.*)" calico-etcd.yaml

# - name: CALICO_IPV4POOL_CIDR

# value: "192.168.0.0/16"

[root@k8s-master01 ~]# sed -i 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@# value: "192.168.0.0/16"@ value: '"${POD_SUBNET}"'@g' calico-etcd.yaml

[root@k8s-master01 ~]# grep -E "(.*CALICO_IPV4POOL_CIDR.*|.*192.168.0.0.*)" calico-etcd.yaml

- name: CALICO_IPV4POOL_CIDR

value: 192.168.0.0/12

[root@k8s-master01 ~]# grep "image:" calico-etcd.yaml

image: docker.io/calico/cni:v3.24.1

image: docker.io/calico/node:v3.24.1

image: docker.io/calico/node:v3.24.1

image: docker.io/calico/kube-controllers:v3.24.1
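A note on the Pod CIDR used above: with a /12 prefix only the first 12 bits identify the network, so 192.168.0.0/12 actually denotes the block that starts at 192.160.0.0. This is consistent with the Pod addresses that appear later in this guide (for example 192.171.30.65 and 192.167.195.130). The arithmetic can be checked with a few lines of shell; `ip_to_int`, `int_to_ip` and `cidr_range` are hypothetical helper names used only for this illustration.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Convert a 32-bit integer back to dotted-quad notation.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Print "first-address last-address" of the block a CIDR really covers.
cidr_range() {
  local ip="${1%/*}" prefix="${1#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local net=$(( $(ip_to_int "$ip") & mask ))
  local last=$(( net | (mask ^ 0xFFFFFFFF) ))
  echo "$(int_to_ip "$net") $(int_to_ip "$last")"
}

# Example:
#   cidr_range 192.168.0.0/12
```

When choosing your own Pod CIDR, it is worth running the same check to make sure the resulting range does not overlap the node network or the Service network.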

Download the Calico images and push them to Harbor:

[root@k8s-master01 ~]# cat download_calico_images.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: Raymond

#QQ: 88563128

#Date: 2022-01-11

#FileName: download_calico_images.sh

#URL: raymond.blog.csdn.net

#Description: The test script

#Copyright (C): 2022 All rights reserved

#*********************************************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

images=$(awk -F "/" '/image:/{print $NF}' calico-etcd.yaml | uniq)

HARBOR_DOMAIN=harbor.raymonds.cc

images_download(){

${COLOR}"Downloading Calico images"${END}

for i in ${images};do

nerdctl pull registry.cn-beijing.aliyuncs.com/raymond9/$i

nerdctl tag registry.cn-beijing.aliyuncs.com/raymond9/$i ${HARBOR_DOMAIN}/google_containers/$i

nerdctl rmi registry.cn-beijing.aliyuncs.com/raymond9/$i

nerdctl push ${HARBOR_DOMAIN}/google_containers/$i

done

${COLOR}"Calico images downloaded"${END}

}

images_download
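The script derives the image list from the manifest with awk and then pulls, retags and pushes each image. Note that `uniq` only collapses adjacent duplicates; it works here because the two calico/node lines are next to each other. The sketch below uses `sort -u` instead and only prints the nerdctl commands so they can be reviewed first; `list_images` and `mirror_plan` are hypothetical helper names, and the registry paths in the example are the ones used by the script above.

```shell
# Print the unique "name:tag" of every image referenced in a manifest.
list_images() {
  awk -F "/" '/image:/ { print $NF }' "$1" | sort -u
}

# Print (do not run) the nerdctl commands needed to mirror the images
# of manifest $1 from registry path $2 to registry path $3.
mirror_plan() {
  local manifest="$1" src="$2" dst="$3" img
  for img in $(list_images "$manifest"); do
    echo "nerdctl pull ${src}/${img}"
    echo "nerdctl tag ${src}/${img} ${dst}/${img}"
    echo "nerdctl push ${dst}/${img}"
  done
}

# Example (prints the plan only):
#   mirror_plan calico-etcd.yaml \
#     registry.cn-beijing.aliyuncs.com/raymond9 \
#     harbor.raymonds.cc/google_containers
```

Piping the output of `mirror_plan` to `bash` would execute the plan once it looks right.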

[root@k8s-master01 ~]# bash download_calico_images.sh

[root@k8s-master01 ~]# nerdctl images | grep v3.24.1

harbor.raymonds.cc/google_containers/cni v3.24.1 21df750b80ba About a minute ago linux/amd64 188.3 MiB 83.3 MiB

harbor.raymonds.cc/google_containers/kube-controllers v3.24.1 b65317537174 26 seconds ago linux/amd64 68.0 MiB 29.7 MiB

harbor.raymonds.cc/google_containers/node v3.24.1 135054e0bc90 37 seconds ago linux/amd64 218.7 MiB 76.5 MiB

[root@k8s-master01 ~]# sed -ri 's@(.*image:) docker.io/calico(/.*)@\1 harbor.raymonds.cc/google_containers\2@g' calico-etcd.yaml

[root@k8s-master01 ~]# grep "image:" calico-etcd.yaml

image: harbor.raymonds.cc/google_containers/cni:v3.24.1

image: harbor.raymonds.cc/google_containers/node:v3.24.1

image: harbor.raymonds.cc/google_containers/node:v3.24.1

image: harbor.raymonds.cc/google_containers/kube-controllers:v3.24.1

[root@k8s-master01 ~]# kubectl apply -f calico-etcd.yaml

# Check the Pod status

[root@k8s-master01 ~]# kubectl get pod -n kube-system |grep calico

calico-kube-controllers-67bd695c56-zt5k8 1/1 Running 0 6m44s

calico-node-fm5x2 1/1 Running 0 6m44s

calico-node-gbhd2 1/1 Running 0 6m44s

calico-node-m7gcc 1/1 Running 0 6m44s

calico-node-nnbjq 1/1 Running 0 6m44s

calico-node-v9cgw 1/1 Running 0 6m44s

calico-node-zlmx6 1/1 Running 0 6m44s

# Check the cluster status

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

172.31.3.101 Ready <none> 28m v1.25.2

172.31.3.102 Ready <none> 28m v1.25.2

172.31.3.103 Ready <none> 28m v1.25.2

172.31.3.111 Ready <none> 20m v1.25.2

172.31.3.112 Ready <none> 19m v1.25.2

172.31.3.113 Ready <none> 19m v1.25.2

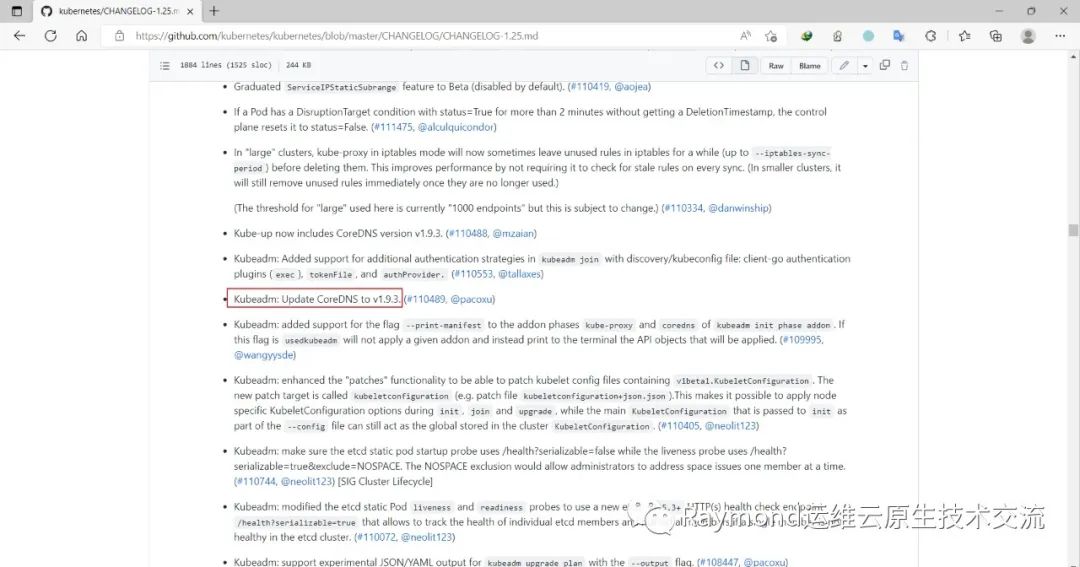

10. Install CoreDNS

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.25.md

According to the official changelog, the CoreDNS version for Kubernetes v1.25 is v1.9.3.

https://github.com/coredns/deployment

[root@k8s-master01 ~]# wget https://dl.k8s.io/v1.25.2/kubernetes.tar.gz

[root@k8s-master01 ~]# tar xf kubernetes.tar.gz

[root@k8s-master01 ~]# cp kubernetes/cluster/addons/dns/coredns/coredns.yaml.base /root/coredns.yaml

[root@k8s-master01 ~]# vim coredns.yaml

...

data:

Corefile: |

.:53 {

errors

health {

lameduck 5s

}

ready

kubernetes cluster.local in-addr.arpa ip6.arpa { # set the cluster DNS domain here

pods insecure

fallthrough in-addr.arpa ip6.arpa

ttl 30

}

prometheus :9153

forward . /etc/resolv.conf {

max_concurrent 1000

}

cache 30

loop

reload

loadbalance

}

...

containers:

- name: coredns

image: registry.k8s.io/coredns/coredns:v1.9.3

imagePullPolicy: IfNotPresent

resources:

limits:

memory: 256Mi # memory limit; in production this is typically 2-3 GiB with 2-4 CPU cores, and beyond that add replicas instead

requests:

cpu: 100m

memory: 70Mi

...

spec:

selector:

k8s-app: kube-dns

clusterIP: 10.96.0.10 # if you changed the Kubernetes Service CIDR, set this to the tenth IP of that CIDR

ports:

- name: dns

port: 53

protocol: UDP

- name: dns-tcp

port: 53

protocol: TCP

- name: metrics

port: 9153

protocol: TCP
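The clusterIP of the CoreDNS Service is conventionally the tenth address of the Service CIDR. As a worked example, the sketch below computes that address; it assumes the Service CIDR is 10.96.0.0/12 (an assumption inferred from the 10.96.0.10 value above, not stated in this section), and `nth_service_ip` is a hypothetical helper name.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Convert a 32-bit integer back to dotted-quad notation.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Print the n-th address (counting from the network address) of a CIDR.
nth_service_ip() {
  local base="${1%/*}" prefix="${1#*/}" n="$2"
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  int_to_ip $(( ($(ip_to_int "$base") & mask) + n ))
}

# Example:
#   nth_service_ip 10.96.0.0/12 10
```

Whatever address is used here must match the clusterDNS value in the kubelet configuration, otherwise Pods will query a DNS address that no Service answers on.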

Install CoreDNS:

[root@k8s-master01 ~]# grep "image:" coredns.yaml

image: registry.k8s.io/coredns/coredns:v1.9.3

[root@k8s-master01 ~]# cat download_coredns_images.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: Raymond

#QQ: 88563128

#Date: 2022-01-11

#FileName: download_coredns_images.sh

#URL: raymond.blog.csdn.net

#Description: The test script

#Copyright (C): 2022 All rights reserved

#*********************************************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

images=$(awk -F "/" '/image:/{print $NF}' coredns.yaml)

HARBOR_DOMAIN=harbor.raymonds.cc

images_download(){

${COLOR}"Downloading CoreDNS image"${END}

for i in ${images};do

nerdctl pull registry.aliyuncs.com/google_containers/$i

nerdctl tag registry.aliyuncs.com/google_containers/$i ${HARBOR_DOMAIN}/google_containers/$i

nerdctl rmi registry.aliyuncs.com/google_containers/$i

nerdctl push ${HARBOR_DOMAIN}/google_containers/$i

done

${COLOR}"CoreDNS image downloaded"${END}

}

images_download

[root@k8s-master01 ~]# bash download_coredns_images.sh

[root@k8s-master01 ~]# nerdctl images |grep coredns

harbor.raymonds.cc/google_containers/coredns v1.9.3 8e352a029d30 15 seconds ago linux/amd64 46.5 MiB 14.2 MiB

[root@k8s-master01 ~]# sed -ri 's@(.*image:) .*coredns(/.*)@\1 harbor.raymonds.cc/google_containers\2@g' coredns.yaml

[root@k8s-master01 ~]# grep "image:" coredns.yaml

image: harbor.raymonds.cc/google_containers/coredns:v1.9.3

[root@k8s-master01 ~]# kubectl apply -f coredns.yaml

# Check the status

[root@k8s-master01 ~]# kubectl get pod -n kube-system |grep coredns

coredns-8668f8476d-njcl2 1/1 Running 0 7s

On Ubuntu the following problem can occur:

root@k8s-master01:~# kubectl get pod -A -o wide|grep coredns

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-847c895554-9jqq5 0/1 CrashLoopBackOff 1 8s 192.171.30.65 k8s-master02.example.local <none> <none>

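# Ubuntu runs a local DNS cache, which creates a forwarding loop so CoreDNS cannot resolve names

# For details see the official troubleshooting notes: https://coredns.io/plugins/loop/#troubleshooting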

root@k8s-master01:~# kubectl edit -n kube-system cm coredns

...

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

.:53 {

errors

health {

lameduck 5s

}

ready

kubernetes cluster.local in-addr.arpa ip6.arpa {

fallthrough in-addr.arpa ip6.arpa

}

prometheus :9153

forward . /etc/resolv.conf {

max_concurrent 1000

}

cache 30

loop # delete this loop plugin line to avoid the internal loop

reload

loadbalance

}

root@k8s-master01:~# kubectl get pod -A -o wide |grep coredns

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-847c895554-r9tsd 0/1 CrashLoopBackOff 4 3m4s 192.170.21.195 k8s-node03.example.local <none> <none>

root@k8s-master01:~# kubectl delete pod coredns-847c895554-r9tsd -n kube-system

pod "coredns-847c895554-r9tsd" deleted

root@k8s-master01:~# kubectl get pod -A -o wide |grep coredns

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-847c895554-cqwl5 1/1 Running 0 13s 192.167.195.130 k8s-node02.example.local <none> <none>

# CoreDNS is now running normally
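Before removing the loop plugin it can help to confirm that the node really forwards to a loopback resolver. On Ubuntu, /etc/resolv.conf commonly points at the local stub address 127.0.0.53, which is the situation the linked CoreDNS troubleshooting page describes. The check below is a minimal sketch; `has_loopback_resolver` is a hypothetical helper name.

```shell
# Return success if the given resolv.conf lists a loopback nameserver
# (127.x.x.x or ::1), which would make CoreDNS forward to itself.
has_loopback_resolver() {
  grep -Eq '^[[:space:]]*nameserver[[:space:]]+(127\.|::1)' "$1"
}

# Example (run on a node):
#   if has_loopback_resolver /etc/resolv.conf; then
#     echo "loopback resolver found: CoreDNS may detect a forwarding loop"
#   fi
```

The same troubleshooting page also describes an alternative to deleting the plugin: pointing kubelet's `resolvConf` setting at the real upstream file (on systemd-resolved hosts typically /run/systemd/resolve/resolv.conf). Whether that fits depends on how kubelet-conf.yml is set up in your environment.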

11. Install Metrics Server

https://github.com/kubernetes-sigs/metrics-server

Recent Kubernetes versions collect system resource metrics through Metrics Server, which reports CPU and memory usage for nodes and Pods.

[root@k8s-master01 ~]# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Make the following changes:

[root@k8s-master01 ~]# vim components.yaml

...

spec:

containers:

- args:

- --cert-dir=/tmp

- --secure-port=4443

- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

- --kubelet-use-node-status-port

- --metric-resolution=15s

# add the following lines

- --kubelet-insecure-tls

- --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem # note: in a binary install the certificate file is front-proxy-ca.pem

- --requestheader-username-headers=X-Remote-User

- --requestheader-group-headers=X-Remote-Group

- --requestheader-extra-headers-prefix=X-Remote-Extra-

...

volumeMounts:

- mountPath: /tmp

name: tmp-dir

# add the following lines

- name: ca-ssl

mountPath: /etc/kubernetes/pki

...

volumes:

- emptyDir: {}

name: tmp-dir

# add the following lines

- name: ca-ssl

hostPath:

path: /etc/kubernetes/pki
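Because these edits are made by hand in vim, it is easy to miss one of the added flags. The following sketch lists any of the flags from this section that are absent from the manifest; `missing_flags` and `REQUIRED_FLAGS` are hypothetical names introduced for illustration.

```shell
# Flags this section adds to the metrics-server container args.
REQUIRED_FLAGS="--kubelet-insecure-tls --requestheader-client-ca-file --requestheader-username-headers --requestheader-group-headers --requestheader-extra-headers-prefix"

# Print every required flag that does not occur in manifest $1.
missing_flags() {
  local manifest="$1" flag
  for flag in $REQUIRED_FLAGS; do
    grep -qF -- "$flag" "$manifest" || echo "$flag"
  done
}

# Example:
#   missing_flags components.yaml
```

No output means all five flags are present; it does not validate the volume and volumeMount additions.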

Download the image and update the image address:

[root@k8s-master01 ~]# grep "image:" components.yaml

image: k8s.gcr.io/metrics-server/metrics-server:v0.6.1

[root@k8s-master01 ~]# cat download_metrics_images.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: Raymond

#QQ: 88563128

#Date: 2022-01-11

#FileName: download_metrics_images.sh

#URL: raymond.blog.csdn.net

#Description: The test script

#Copyright (C): 2022 All rights reserved

#*********************************************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

images=$(awk -F "/" '/image:/{print $NF}' components.yaml)

HARBOR_DOMAIN=harbor.raymonds.cc

images_download(){

${COLOR}"Downloading Metrics Server image"${END}

for i in ${images};do

nerdctl pull registry.aliyuncs.com/google_containers/$i

nerdctl tag registry.aliyuncs.com/google_containers/$i ${HARBOR_DOMAIN}/google_containers/$i

nerdctl rmi registry.aliyuncs.com/google_containers/$i

nerdctl push ${HARBOR_DOMAIN}/google_containers/$i

done

${COLOR}"Metrics Server image downloaded"${END}

}

images_download

[root@k8s-master01 ~]# bash download_metrics_images.sh

[root@k8s-master01 ~]# nerdctl images |grep metric

harbor.raymonds.cc/google_containers/metrics-server v0.6.1 5ddc6458eb95 8 seconds ago linux/amd64 68.9 MiB 26.8 MiB

[root@k8s-master01 ~]# sed -ri 's@(.*image:) .*metrics-server(/.*)@\1 harbor.raymonds.cc/google_containers\2@g' components.yaml

[root@k8s-master01 ~]# grep "image:" components.yaml

image: harbor.raymonds.cc/google_containers/metrics-server:v0.6.1

Install Metrics Server:

[root@k8s-master01 ~]# kubectl apply -f components.yaml

Check the status:

[root@k8s-master01 ~]# kubectl get pod -n kube-system |grep metrics

metrics-server-785dd7cc54-48xhq 1/1 Running 0 31s

[root@k8s-master01 ~]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

172.31.3.101 173m 8% 1732Mi 48%

172.31.3.102 111m 5% 964Mi 26%

172.31.3.103 85m 4% 1348Mi 37%

172.31.3.111 49m 2% 588Mi 16%

172.31.3.112 60m 3% 611Mi 16%

172.31.3.113 80m 4% 634Mi 17%

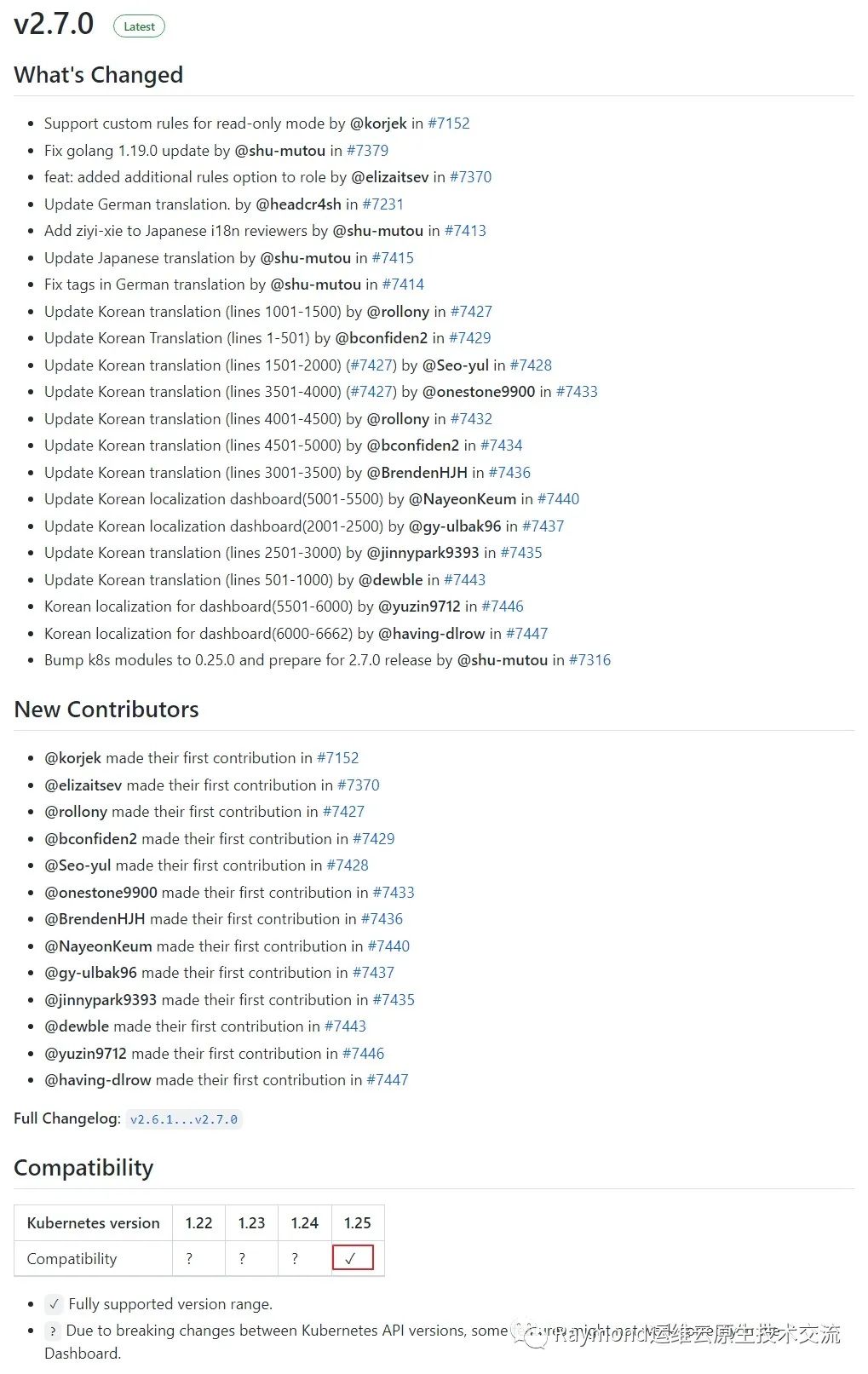

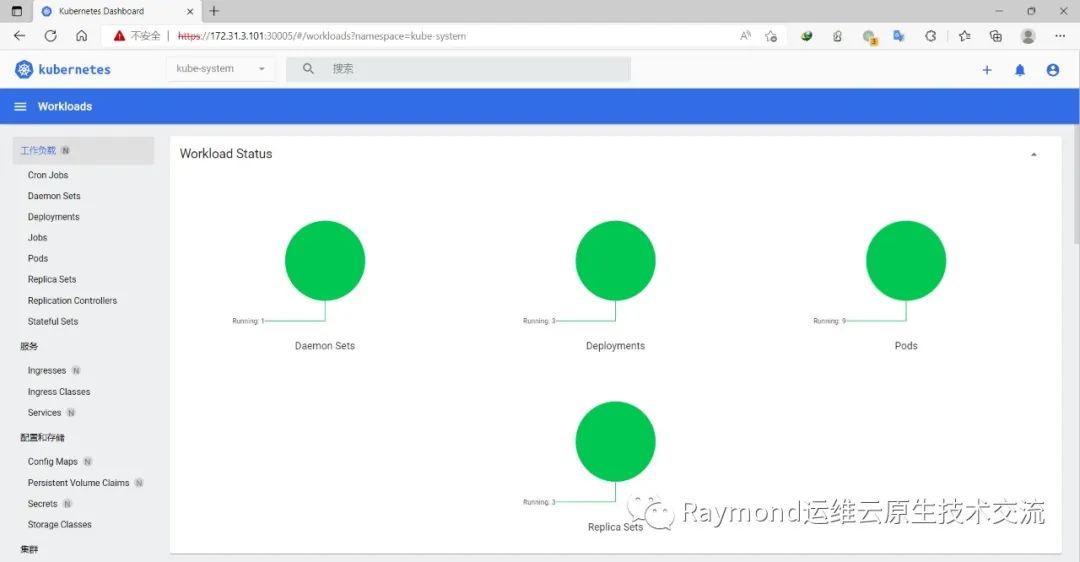

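12. Install Dashboard

12.1 Deploy Dashboard

Dashboard displays the resources in the cluster; it can also show Pod logs in real time and run commands inside containers.

https://github.com/kubernetes/dashboard/releases

Check which Kubernetes versions each Dashboard release is compatible with.

As the screenshot above shows, Dashboard v2.7.0 supports Kubernetes 1.25.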

[root@k8s-master01 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

[root@k8s-master01 ~]# vim recommended.yaml

...

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

type: NodePort # add this line

ports:

- port: 443

targetPort: 8443

nodePort: 30005 # add this line

selector:

k8s-app: kubernetes-dashboard

...

[root@k8s-master01 ~]# grep "image:" recommended.yaml

image: kubernetesui/dashboard:v2.7.0

image: kubernetesui/metrics-scraper:v1.0.8
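The nodePort chosen above (30005) has to fall inside the NodePort range accepted by kube-apiserver, which defaults to 30000-32767 and can be changed with --service-node-port-range. A quick check, assuming the default range unless other bounds are passed; `valid_nodeport` is a hypothetical helper name.

```shell
# Return success if port $1 lies within [min, max].
# min and max default to the kube-apiserver default NodePort range.
valid_nodeport() {
  local port="$1" min="${2:-30000}" max="${3:-32767}"
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}

# Example:
#   valid_nodeport 30005 && echo "30005 is usable as a nodePort"
```

If the API server rejects the Service with a port range error, either pick a port inside the range or check which range your kube-apiserver was started with.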

Download the images and push them to Harbor:

[root@k8s-master01 ~]# cat download_dashboard_images.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: Raymond

#QQ: 88563128

#Date: 2022-01-11

#FileName: download_dashboard_images.sh

#URL: raymond.blog.csdn.net

#Description: The test script

#Copyright (C): 2022 All rights reserved

#*********************************************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

images=$(awk -F "/" '/image:/{print $NF}' recommended.yaml)

HARBOR_DOMAIN=harbor.raymonds.cc

images_download(){

${COLOR}"Downloading Dashboard images"${END}

for i in ${images};do

nerdctl pull registry.aliyuncs.com/google_containers/$i

nerdctl tag registry.aliyuncs.com/google_containers/$i ${HARBOR_DOMAIN}/google_containers/$i

nerdctl rmi registry.aliyuncs.com/google_containers/$i

nerdctl push ${HARBOR_DOMAIN}/google_containers/$i

done

${COLOR}"Dashboard images downloaded"${END}

}

images_download

[root@k8s-master01 ~]# bash download_dashboard_images.sh

[root@k8s-master01 ~]# nerdctl images |grep -E "(dashboard|metrics-scraper)"

harbor.raymonds.cc/google_containers/dashboard v2.7.0 2e500d29e9d5 11 seconds ago linux/amd64 245.8 MiB 72.3 MiB

harbor.raymonds.cc/google_containers/metrics-scraper v1.0.8 76049887f07a 2 seconds ago linux/amd64 41.8 MiB 18.8 MiB

[root@k8s-master01 ~]# sed -ri 's@(.*image:) kubernetesui(/.*)@\1 harbor.raymonds.cc/google_containers\2@g' recommended.yaml

[root@k8s-master01 ~]# grep "image:" recommended.yaml

image: harbor.raymonds.cc/google_containers/dashboard:v2.7.0

image: harbor.raymonds.cc/google_containers/metrics-scraper:v1.0.8

[root@k8s-master01 ~]# kubectl create -f recommended.yaml
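The sed rewrite used above edits recommended.yaml in place, so it can be worth previewing the result first. The following is a minimal sketch that applies the same substitution to lines read from standard input; the function name rewrite_images is made up for illustration, and -E is used instead of -r only because it is accepted by both GNU and BSD sed.

```shell
# Preview the registry rewrite without modifying any file.
# Reads manifest lines on stdin and prints them with the kubernetesui
# prefix replaced by the Harbor project used in this guide.
rewrite_images() {
    sed -E 's@(.*image:) kubernetesui(/.*)@\1 harbor.raymonds.cc/google_containers\2@g'
}
```

For example, grep "image:" recommended.yaml | rewrite_images prints the image lines as they would look after the rewrite, while lines that do not match the pattern pass through unchanged.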

Create the administrator user with admin.yaml

[root@k8s-master01 ~]# cat > admin.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

[root@k8s-master01 ~]# kubectl apply -f admin.yaml
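To confirm that the ClusterRoleBinding took effect, one option is to ask the API server on behalf of the new ServiceAccount. This is only a sketch: the wrapper name check_admin_user is hypothetical, and it assumes the kubeconfig you run it with is allowed to impersonate ServiceAccounts.

```shell
# Ask the API server whether the admin-user ServiceAccount may perform
# any verb on any resource, by impersonating it with --as.
check_admin_user() {
    kubectl auth can-i '*' '*' \
        --as=system:serviceaccount:kubernetes-dashboard:admin-user
}
```

With the cluster-admin binding in place, calling check_admin_user would be expected to answer yes.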

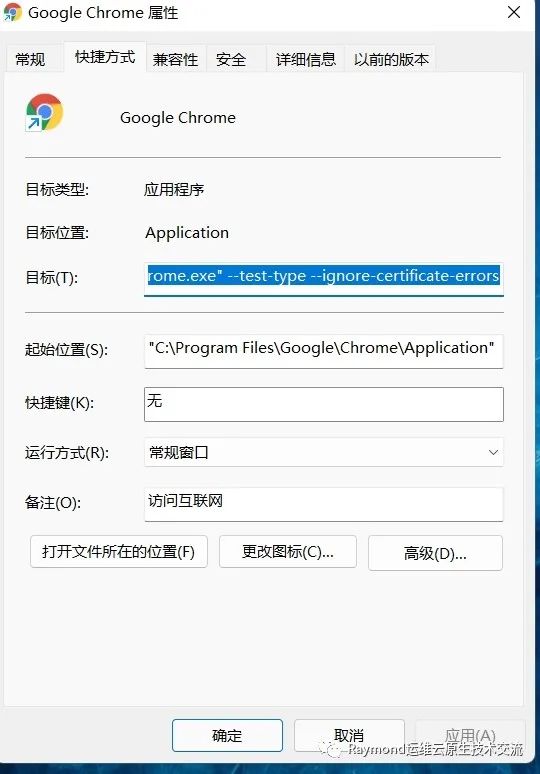

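12.2 Log in to the Dashboard

Add the following startup parameters to the Google Chrome launcher so that Chrome ignores certificate errors; this works around being unable to open the Dashboard (see Figure 1-1):

--test-type --ignore-certificate-errors

Figure 1-1 Google Chrome configuration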

[root@k8s-master01 ~]# kubectl get svc kubernetes-dashboard -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.108.134.101 <none> 443:30005/TCP 51s

Access the Dashboard at https://172.31.3.101:30005 (see Figure 1-2).

Figure 1-2 Dashboard login options

12.2.1 Token login

Create a token:

[root@k8s-master01 ~]# kubectl -n kubernetes-dashboard create token admin-user

eyJhbGciOiJSUzI1NiIsImtpZCI6IjdtYlhqaVhELW5yNHBKbmplcHNlQjYzWDZtNU1VWlJSV0NwWDFCQk41MkUifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjY0MTAzNTc0LCJpYXQiOjE2NjQwOTk5NzQsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiN2U3ZWU0YWYtMjhkNS00MzdkLWI0OTgtY2FkZmNkNGE3M2JjIn19LCJuYmYiOjE2NjQwOTk5NzQsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbi11c2VyIn0.VoCfYjT6yWvol_66yiFWkM2ryufknCHuay4t_oKF4G54aI6GdeHLe6OFp5EljvM0-gWcR7SJfZbimrT9sPYdOuyo0QZKqfSD0I73UTKMbWsAfusyf0eeF1PrKDMUb9337J22jhqDIbqYB_e2Yf77Le38mKcS7Cc_Bm1cBKeL15vLLTDwwn_uCsE0YGXWHAsEzrYAbzC7MnPyw2AfuzSvWVuk0DC7j7zlGtjMys07pq84oh2vU4sYV5TUo1lQ5Rug75bkWFjljuJ3wmN1WcNQoN6DGlOSMDGo_LN_wVOHdom7_5RRM7YR0td88FCGTDWsgmkdeNWrhVB7X0-MHLewEA

Paste the token value into the token field and click Sign in to access the Dashboard (see Figure 1-3).
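Tokens created this way are short-lived, so a login that worked earlier can start failing. A small helper for inspecting the claims inside a token might look like the sketch below; the function name jwt_payload is made up for illustration, and it assumes a base64 command that accepts -d (as GNU coreutils does).

```shell
# Print the JSON payload of a JWT (the second dot-separated field).
# The body runs in a subshell so the helper variable does not leak.
jwt_payload() (
    payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
    # base64url drops the trailing '=' padding; restore it for base64 -d
    while [ $(( ${#payload} % 4 )) -ne 0 ]; do
        payload="${payload}="
    done
    printf '%s' "$payload" | base64 -d
)
```

Calling jwt_payload with the token printed by kubectl create token shows the exp and iat claims as Unix timestamps. In the sample token above they are 3600 seconds apart, that is, the token is valid for one hour. kubectl create token also accepts a --duration flag to request a different lifetime, which the API server may shorten.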

12.2.2 Log in to the Dashboard with a kubeconfig file

[root@k8s-master01 ~]# cp /etc/kubernetes/admin.kubeconfig kubeconfig

[root@k8s-master01 ~]# vim kubeconfig

...

# Add the token at the bottom

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IjdtYlhqaVhELW5yNHBKbmplcHNlQjYzWDZtNU1VWlJSV0NwWDFCQk41MkUifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjY0MTAzNTc0LCJpYXQiOjE2NjQwOTk5NzQsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiN2U3ZWU0YWYtMjhkNS00MzdkLWI0OTgtY2FkZmNkNGE3M2JjIn19LCJuYmYiOjE2NjQwOTk5NzQsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbi11c2VyIn0.VoCfYjT6yWvol_66yiFWkM2ryufknCHuay4t_oKF4G54aI6GdeHLe6OFp5EljvM0-gWcR7SJfZbimrT9sPYdOuyo0QZKqfSD0I73UTKMbWsAfusyf0eeF1PrKDMUb9337J22jhqDIbqYB_e2Yf77Le38mKcS7Cc_Bm1cBKeL15vLLTDwwn_uCsE0YGXWHAsEzrYAbzC7MnPyw2AfuzSvWVuk0DC7j7zlGtjMys07pq84oh2vU4sYV5TUo1lQ5Rug75bkWFjljuJ3wmN1WcNQoN6DGlOSMDGo_LN_wVOHdom7_5RRM7YR0td88FCGTDWsgmkdeNWrhVB7X0-MHLewEA
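The same edit can be made without opening the file in vim. The sketch below assumes that the copied kubeconfig has a single user entry and writes the token into it with kubectl config set-credentials; the function name add_token_to_kubeconfig is hypothetical.

```shell
# Store a bearer token in the first user entry of a kubeconfig file.
# $1 = path to the kubeconfig copy, $2 = token
# The body runs in a subshell so the helper variable does not leak.
add_token_to_kubeconfig() (
    user=$(kubectl config view --kubeconfig="$1" -o jsonpath='{.users[0].name}')
    kubectl config set-credentials "$user" --token="$2" --kubeconfig="$1"
)
```

A possible invocation is add_token_to_kubeconfig kubeconfig "$(kubectl -n kubernetes-dashboard create token admin-user)", after which the kubeconfig file can be uploaded on the Dashboard login page.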

13. Cluster verification

Install busybox

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: busybox:1.28
    command:
      - sleep
      - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
EOF

[root@k8s-master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

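busybox 1/1 Running 0 2m20s

Pods must be able to resolve Services

Pods must be able to resolve Services in other namespaces

Every node must be able to reach the kubernetes Service on port 443 and the kube-dns Service on port 53

Pods must be able to communicate with each other

a) within the same namespace

b) across namespaces

c) across machines

Verify name resolution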

[root@k8s-master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h9m

# Pods must be able to resolve Services

[root@k8s-master01 ~]# kubectl exec busybox -n default -- nslookup kubernetes

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

# Pods must be able to resolve Services in other namespaces

[root@k8s-master01 ~]# kubectl exec busybox -n default -- nslookup kube-dns.kube-system

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kube-dns.kube-system

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Every node must be able to reach the kubernetes Service on port 443 and the kube-dns Service on port 53

[root@k8s-master01 ~]# telnet 10.96.0.1 443

Trying 10.96.0.1...

Connected to 10.96.0.1.

Escape character is '^]'.

^CConnection closed by foreign host.

[root@k8s-master02 ~]# telnet 10.96.0.1 443

[root@k8s-master03 ~]# telnet 10.96.0.1 443

[root@k8s-node01 ~]# telnet 10.96.0.1 443

[root@k8s-node02 ~]# telnet 10.96.0.1 443

[root@k8s-node03 ~]# telnet 10.96.0.1 443

[root@k8s-master01 ~]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 19m

metrics-server ClusterIP 10.110.185.15 <none> 443/TCP 16m

[root@k8s-master01 ~]# telnet 10.96.0.10 53

Trying 10.96.0.10...

Connected to 10.96.0.10.

Escape character is '^]'.

^CConnection closed by foreign host.

[root@k8s-master02 ~]# telnet 10.96.0.10 53

[root@k8s-master03 ~]# telnet 10.96.0.10 53

[root@k8s-node01 ~]# telnet 10.96.0.10 53

[root@k8s-node02 ~]# telnet 10.96.0.10 53

[root@k8s-node03 ~]# telnet 10.96.0.10 53

[root@k8s-master01 ~]# curl 10.96.0.10:53

curl: (52) Empty reply from server

[root@k8s-master02 ~]# curl 10.96.0.10:53

[root@k8s-master03 ~]# curl 10.96.0.10:53

[root@k8s-node01 ~]# curl 10.96.0.10:53

[root@k8s-node02 ~]# curl 10.96.0.10:53

[root@k8s-node03 ~]# curl 10.96.0.10:53
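The telnet and curl checks above are interactive and have to be interrupted by hand. A non-interactive alternative is sketched below using bash's built-in /dev/tcp redirection; the function name check_tcp and the 3-second timeout are arbitrary choices, and like telnet it only covers TCP, not the UDP side of kube-dns.

```shell
# Return success if a TCP connection to host:port opens within 3 seconds.
# Relies on bash's /dev/tcp redirection and on timeout(1) from coreutils.
check_tcp() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Example, to be run on each master and node:
#   for target in 10.96.0.1:443 10.96.0.10:53; do
#       check_tcp "${target%:*}" "${target#*:}" && echo "$target ok" || echo "$target FAILED"
#   done
```

Because the function returns an exit status instead of opening a session, it can be dropped into a loop or a monitoring script.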

Pods must be able to communicate with each other

[root@k8s-master01 ~]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-67bd695c56-zt5k8 1/1 Running 0 32m 172.31.3.101 172.31.3.101 <none> <none>

calico-node-fm5x2 1/1 Running 0 32m 172.31.3.111 172.31.3.111 <none> <none>

calico-node-gbhd2 1/1 Running 0 32m 172.31.3.103 172.31.3.103 <none> <none>

calico-node-m7gcc 1/1 Running 0 32m 172.31.3.101 172.31.3.101 <none> <none>

calico-node-nnbjq 1/1 Running 0 32m 172.31.3.112 172.31.3.112 <none> <none>

calico-node-v9cgw 1/1 Running 0 32m 172.31.3.102 172.31.3.102 <none> <none>

calico-node-zlmx6 1/1 Running 0 32m 172.31.3.113 172.31.3.113 <none> <none>

coredns-8668f8476d-njcl2 1/1 Running 0 21m 192.171.30.65 172.31.3.102 <none> <none>

metrics-server-785dd7cc54-48xhq 1/1 Running 0 18m 192.170.21.193 172.31.3.113 <none> <none>

[root@k8s-master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox 1/1 Running 0 5m35s 192.167.195.129 172.31.3.112 <none> <none>

[root@k8s-master01 ~]# kubectl exec -it busybox -- sh

/ # ping 192.170.21.193

PING 192.170.21.193 (192.170.21.193): 56 data bytes

64 bytes from 192.170.21.193: seq=0 ttl=62 time=1.323 ms

64 bytes from 192.170.21.193: seq=1 ttl=62 time=0.643 ms

^C

--- 192.170.21.193 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.643/0.983/1.323 ms

/ # exit

[root@k8s-master01 ~]# kubectl create deploy nginx --image=nginx --replicas=3

deployment.apps/nginx created

[root@k8s-master01 ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 3/3 3 3 93s

[root@k8s-master01 ~]# kubectl get pod -o wide |grep nginx

nginx-76d6c9b8c-4kcv7 1/1 Running 0 3m29s 192.165.109.66 172.31.3.103 <none> <none>

nginx-76d6c9b8c-b87sx 1/1 Running 0 3m29s 192.170.21.194 172.31.3.113 <none> <none>

nginx-76d6c9b8c-p5vzc 1/1 Running 0 3m29s 192.169.111.130 172.31.3.111 <none> <none>

[root@k8s-master01 ~]# kubectl delete deploy nginx

deployment.apps "nginx" deleted

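[root@k8s-master01 ~]# kubectl delete pod busybox

pod "busybox" deleted

14. Key production settings

Docker daemon configuration (the # annotations below are explanatory only; JSON does not allow comments, so leave them out of the real file):

vim /etc/docker/daemon.json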

{
  "registry-mirrors": [ # Docker registry mirrors
    "https://registry.docker-cn.com",
    "http://hub-mirror.c.163.com",
    "https://docker.mirrors.ustc.edu.cn"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"], # Kubernetes expects Docker to use the systemd cgroup driver
  "max-concurrent-downloads": 10, # number of concurrent download threads
  "max-concurrent-uploads": 5, # number of concurrent upload threads
  "log-opts": {
    "max-size": "300m", # each Docker log file is at most 300 MB
    "max-file": "2" # keep at most 2 log files
  },
  "live-restore": true # containers keep running when the Docker service restarts
}
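Since daemon.json must be plain JSON, the annotated listing cannot be saved as it is. A minimal sketch for stripping the trailing annotations follows; the function name strip_annotations is made up, and it assumes that no string value in the file contains whitespace followed by a # character.

```shell
# Remove trailing " #..." annotations from each line read on stdin.
# Assumption: no JSON string value contains whitespace followed by '#'.
strip_annotations() {
    sed -E 's/[[:space:]]+#.*$//'
}
```

For example, if the annotated text was saved to a scratch file (annotated.json is a hypothetical name), strip_annotations < annotated.json > /etc/docker/daemon.json writes the cleaned version. If Python is installed, python3 -m json.tool /etc/docker/daemon.json is one way to confirm that the result parses.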

controller-manager settings:

[root@k8s-master01 ~]# vim /lib/systemd/system/kube-controller-manager.service

# --feature-gates=RotateKubeletClientCertificate=true,RotateKubeletServerCertificate=true \ # enables automatic certificate issuance through bootstrap; recent versions default to true, so it does not need to be set

--cluster-signing-duration=876000h0m0s \ # controls the validity period of the certificates that are issued

[root@k8s-master01 ~]# for NODE in k8s-master02 k8s-master03; do scp -o StrictHostKeyChecking=no /lib/systemd/system/kube-controller-manager.service $NODE:/lib/systemd/system/; done

kube-controller-manager.service 100% 1113 670.4KB/s 00:00

kube-controller-manager.service 100% 1113 1.0MB/s 00:00

[root@k8s-master01 ~]# systemctl daemon-reload && systemctl restart kube-controller-manager

[root@k8s-master02 ~]# systemctl daemon-reload && systemctl restart kube-controller-manager

[root@k8s-master03 ~]# systemctl daemon-reload && systemctl restart kube-controller-manager
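The value 876000h is easier to judge once it is converted to years. A tiny sketch of the arithmetic, with a made-up function name and assuming 365-day years:

```shell
# Convert a duration in hours to whole years of 365 days.
hours_to_years() {
    echo $(( $1 / (24 * 365) ))
}
```

hours_to_years 876000 gives 100, so the setting above asks for certificates that are valid for roughly a century.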

10-kubelet.conf settings:

[root@k8s-master01 ~]# vim /etc/systemd/system/kubelet.service.d/10-kubelet.conf

Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node='' --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384 --image-pull-progress-deadline=30m"

# --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384 # restricts the TLS cipher suites used by the kubelet

# --image-pull-progress-deadline=30m # if an image pull makes no progress before this deadline, the pull is cancelled. This Docker-specific flag only takes effect when the container runtime is docker.

[root@k8s-master01 ~]# for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02 k8s-node03; do scp -o StrictHostKeyChecking=no /etc/systemd/system/kubelet.service.d/10-kubelet.conf $NODE:/etc/systemd/system/kubelet.service.d/ ;done

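kubelet-conf.yml settings:

[root@k8s-master01 ~]# vim /etc/kubernetes/kubelet-conf.yml

# Add the following settings
rotateServerCertificates: true
allowedUnsafeSysctls: # lets containers set these kernel parameters; this is a security risk, so enable only what you actually need
- "net.core*"
- "net.ipv4.*"
kubeReserved: # resources reserved for Kubernetes components
  cpu: "1"
  memory: 1Gi
  ephemeral-storage: 10Gi
systemReserved: # resources reserved for the operating system
  cpu: "1"
  memory: 1Gi
  ephemeral-storage: 10Gi

# rotateServerCertificates: true # automatically requests a new certificate from kube-apiserver when the current one is about to expire. Requires the RotateKubeletServerCertificate feature gate to be enabled and the submitted CertificateSigningRequest objects to be approved.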

[root@k8s-master01 ~]# for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02 k8s-node03; do scp /etc/kubernetes/kubelet-conf.yml $NODE:/etc/kubernetes/ ;done

[root@k8s-master01 ~]# systemctl daemon-reload && systemctl restart kubelet

[root@k8s-master02 ~]# systemctl daemon-reload && systemctl restart kubelet

[root@k8s-master03 ~]# systemctl daemon-reload && systemctl restart kubelet

[root@k8s-node01 ~]# systemctl daemon-reload && systemctl restart kubelet

[root@k8s-node02 ~]# systemctl daemon-reload && systemctl restart kubelet

[root@k8s-node03 ~]# systemctl daemon-reload && systemctl restart kubelet
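The kubeReserved and systemReserved values reduce what the scheduler can hand out to Pods: allocatable is the node capacity minus both reservations minus the hard eviction threshold. A rough sketch of the memory arithmetic follows; the function name is made up, and the 8 GiB node in the example is hypothetical.

```shell
# Node Allocatable = capacity - kubeReserved - systemReserved - hard eviction threshold
# All four arguments are memory amounts in MiB.
allocatable_mib() {
    echo $(( $1 - $2 - $3 - $4 ))
}
```

For a node with 8192 MiB of memory, the two 1Gi reservations above and the kubelet's default hard eviction threshold of 100Mi for memory.available, allocatable_mib 8192 1024 1024 100 gives 6044. The actual figure for a node appears under Allocatable in the output of kubectl describe node.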

Add labels:

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

172.31.3.101 Ready <none> 151m v1.25.2

172.31.3.102 Ready <none> 150m v1.25.2

172.31.3.103 Ready <none> 150m v1.25.2

172.31.3.111 Ready <none> 124m v1.25.2

172.31.3.112 Ready <none> 124m v1.25.2

172.31.3.113 Ready <none> 124m v1.25.2

[root@k8s-master01 ~]# kubectl get node --show-labels

NAME STATUS ROLES AGE VERSION LABELS

172.31.3.101 NotReady <none> 63m v1.25.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=172.31.3.101,kubernetes.io/os=linux,node.kubernetes.io/node=

172.31.3.102 Ready <none> 63m v1.25.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=172.31.3.102,kubernetes.io/os=linux,node.kubernetes.io/node=

172.31.3.103 Ready <none> 63m v1.25.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=172.31.3.103,kubernetes.io/os=linux,node.kubernetes.io/node=

172.31.3.111 Ready <none> 55m v1.25.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=172.31.3.111,kubernetes.io/os=linux,node.kubernetes.io/node=

172.31.3.112 Ready <none> 54m v1.25.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=172.31.3.112,kubernetes.io/os=linux,node.kubernetes.io/node=

172.31.3.113 Ready <none> 54m v1.25.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=172.31.3.113,kubernetes.io/os=linux,node.kubernetes.io/node=

[root@k8s-master01 ~]# kubectl label node 172.31.3.101 node-role.kubernetes.io/control-plane='' node-role.kubernetes.io/master=''

node/172.31.3.101 labeled

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

172.31.3.101 Ready control-plane,master 152m v1.25.2

172.31.3.102 Ready <none> 151m v1.25.2

172.31.3.103 Ready <none> 151m v1.25.2

172.31.3.111 Ready <none> 125m v1.25.2

172.31.3.112 Ready <none> 125m v1.25.2

172.31.3.113 Ready <none> 125m v1.25.2

[root@k8s-master01 ~]# kubectl label node 172.31.3.102 node-role.kubernetes.io/control-plane='' node-role.kubernetes.io/master=''

node/172.31.3.102 labeled

[root@k8s-master01 ~]# kubectl label node 172.31.3.103 node-role.kubernetes.io/control-plane='' node-role.kubernetes.io/master=''

node/172.31.3.103 labeled

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

172.31.3.101 Ready control-plane,master 152m v1.25.2

172.31.3.102 Ready control-plane,master 152m v1.25.2

172.31.3.103 Ready control-plane,master 151m v1.25.2

172.31.3.111 Ready <none> 125m v1.25.2

172.31.3.112 Ready <none> 125m v1.25.2

172.31.3.113 Ready <none> 125m v1.25.2
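The three kubectl label commands above differ only in the node name, so they can be folded into a loop. A minimal sketch, with a hypothetical function name:

```shell
# Apply the control-plane and master role labels to every node name
# passed as an argument; stop at the first failure.
label_control_plane() {
    for node in "$@"; do
        kubectl label node "$node" \
            node-role.kubernetes.io/control-plane='' \
            node-role.kubernetes.io/master='' || return 1
    done
}
```

For the cluster in this guide the call would be label_control_plane 172.31.3.101 172.31.3.102 172.31.3.103.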

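Installation summary:

1. kubeadm

2. Binary installation

3. Automated installation

a) Ansible

i. Master node installation does not need to be automated.

ii. Use a playbook to add Node nodes.

4. Installation details to watch

a) The detailed settings above

b) In production, etcd must be kept off the system disk and must run on SSD storage.

c) The Docker data disk should also be separate from the system disk; use an SSD if possible.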