unnest和no_unnest

Oracle中,子查询非嵌套(Subquery Unnesting):当where子查询中有in,not in,exists,not exists等,CBO会尝试将子查询展开(unnest),从而消除FILTER,这个过程就叫做子查询非嵌套。

LightDB从23.1开始支持此优化器特性

创建测试表emp和dept,默认的执行计划如下,两个表走hash join

test@test=> explain analyze

test@test-> select ename, deptno

test@test-> from emp

test@test-> where deptno in (select deptno from dept where dname = 'CHICAGO');

QUERY PLAN

------------------------------------------------------------------------------------------------------------

Hash Join (cost=19.80..35.11 rows=2 width=44) (actual time=0.047..0.053 rows=0 loops=1)

Hash Cond: (emp.deptno = dept.deptno)

-> Seq Scan on emp (cost=0.00..14.20 rows=420 width=44) (actual time=0.012..0.013 rows=1 loops=1)

-> Hash (cost=19.75..19.75 rows=4 width=12) (actual time=0.013..0.015 rows=0 loops=1)

Buckets: 1024 Batches: 1 Memory Usage: 8kB

-> Seq Scan on dept (cost=0.00..19.75 rows=4 width=12) (actual time=0.011..0.012 rows=0 loops=1)

Filter: ((dname)::text = 'CHICAGO'::text)

Rows Removed by Filter: 4

Planning Time: 0.210 ms

Execution Time: 0.115 ms

(10 rows)

复制指定hint no_unnest 让执行计划走filter

test@test-> where deptno in (select /*+ no_unnest */ deptno from dept where dname = 'CHICAGO');

QUERY PLAN

----------------------------------------------------------------------------------------------------------------

Seq Scan on emp @"lt#1" (cost=19.76..35.01 rows=210 width=44) (actual time=0.033..0.035 rows=0 loops=1)

Filter: (hashed SubPlan 1)

Rows Removed by Filter: 14

SubPlan 1

-> Seq Scan on dept @"lt#0" (cost=0.00..19.75 rows=4 width=12) (actual time=0.006..0.007 rows=0 loops=1)

Filter: ((dname)::text = 'CHICAGO'::text)

Rows Removed by Filter: 4

Planning Time: 0.153 ms

Execution Time: 0.087 ms

(9 rows)

复制同样只用unnest hint,可以实现非filter的执行计划

test@test=> explain analyze

test@test-> select ename, deptno

test@test-> from emp

test@test-> where deptno in (select /*+ unnest */ deptno from dept where dname = 'CHICAGO');

QUERY PLAN

--------------------------------------------------------------------------------------------------------------------

Hash Join (cost=19.80..35.11 rows=2 width=44) (actual time=0.027..0.032 rows=0 loops=1)

Hash Cond: (emp.deptno = dept.deptno)

-> Seq Scan on emp @"lt#1" (cost=0.00..14.20 rows=420 width=44) (actual time=0.009..0.010 rows=1 loops=1)

-> Hash (cost=19.75..19.75 rows=4 width=12) (actual time=0.010..0.011 rows=0 loops=1)

Buckets: 1024 Batches: 1 Memory Usage: 8kB

-> Seq Scan on dept @"lt#0" (cost=0.00..19.75 rows=4 width=12) (actual time=0.008..0.009 rows=0 loops=1)

Filter: ((dname)::text = 'CHICAGO'::text)

Rows Removed by Filter: 4

Planning Time: 0.217 ms

Execution Time: 0.065 ms

(10 rows)

复制push_pred

谓词推入(Pushing Predicate):当SQL语句中包含有不能合并的视图,并且视图有谓词过滤(也就是where过滤条件),CBO会将谓词过滤条件推入视图中,这个过程就叫做谓词推入。谓词推入的主要目的就是让Oracle尽可能早的过滤掉无用的数据,从而提升查询性能。

create table test as select * from pg_class;

create table test1 as select * from pg_class;

create or replace view v_pushpredicate as

select * from test

union all

select * from test1;

create index i_test_id on test(oid);

create index i_test1_id on test1(oid);

复制执行下面语句

test@test=> explain analyze select /*+push_pred(v)*/* from v_pushpredicate v where oid = 19787;

QUERY PLAN

--------------------------------------------------------------------------------------------------------------------------

Append (cost=0.28..4.59 rows=2 width=265) (actual time=0.051..0.091 rows=2 loops=1)

-> Index Scan using i_test_id on test (cost=0.28..2.29 rows=1 width=265) (actual time=0.049..0.051 rows=1 loops=1)

Index Cond: (oid = '19787'::oid)

-> Index Scan using i_test1_id on test1 (cost=0.28..2.29 rows=1 width=265) (actual time=0.031..0.032 rows=1 loops=1)

Index Cond: (oid = '19787'::oid)

Planning Time: 1.080 ms

Execution Time: 0.137 ms

(7 rows)

复制并行相关hint

Oracle并行的生产者和消费者模型中,Table Queue: 生产者和消费者之间的数据分发. 常见的数据并行分发方式有broadcast, hash. 12c引入多种新的数据分发, 比如replicate, 更加智能的adaptive分发.

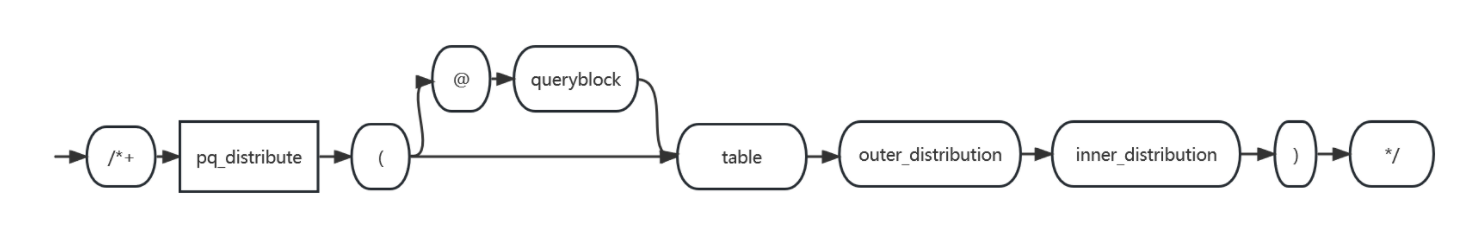

在 oracle 中 pq_distribute 用来控制并行连接的方式,LightDB 的语法与oracle相同, 具体如下所示:

但是 LightDB 只支持 none,broadcast 和 hash,hash 组合,其中 hash,hash 只支持语法(因为LightDB 不支持此模式)。其他模式待后续支持。

test@test=> explain analyze select

test@test-> /*+ use_hash(a,b) pq_distribute(a none,broadcast) leading(b a)*/

test@test-> * from test a, test1 b where a.oid = b.oid;

QUERY PLAN

-------------------------------------------------------------------------------------------------------------------------------------

Gather (cost=37.05..62.87 rows=758 width=530) (actual time=1.942..12.191 rows=758 loops=1)

Workers Planned: 3

Workers Launched: 2

-> Hash Join (cost=37.05..62.87 rows=245 width=530) (actual time=0.477..1.342 rows=253 loops=3)

Hash Cond: (b.oid = a.oid)

-> Parallel Seq Scan on test1 b @"lt#0" (cost=0.00..22.45 rows=245 width=265) (actual time=0.005..0.218 rows=254 loops=3)

-> Hash (cost=27.58..27.58 rows=758 width=265) (actual time=1.387..1.390 rows=758 loops=1)

Buckets: 1024 Batches: 1 Memory Usage: 163kB

-> Seq Scan on test a @"lt#0" (cost=0.00..27.58 rows=758 width=265) (actual time=0.005..0.595 rows=758 loops=1)

Planning Time: 0.282 ms

Execution Time: 12.724 ms

(11 rows)

复制使用pq_distribute(b hash,hash)执行计划如下

LightDB 只支持 none,broadcast 和 hash,hash 组合,其中 hash,hash 只支持语法。其他模式待后续支持

test@test=> explain analyze select

test@test-> /*+ use_hash(a,b) pq_distribute(a none,broadcast) */

test@test-> * from test a, test1 b where a.oid = b.oid;

QUERY PLAN

-------------------------------------------------------------------------------------------------------------------------------------------

Gather (cost=25.52..49.67 rows=758 width=530) (actual time=2.451..12.746 rows=758 loops=1)

Workers Planned: 3

Workers Launched: 2

-> Parallel Hash Join (cost=25.52..49.67 rows=245 width=530) (actual time=0.766..1.678 rows=253 loops=3)

Hash Cond: (a.oid = b.oid)

-> Parallel Seq Scan on test a @"lt#0" (cost=0.00..22.45 rows=245 width=265) (actual time=0.005..0.665 rows=758 loops=1)

-> Parallel Hash (cost=22.45..22.45 rows=245 width=265) (actual time=0.502..0.503 rows=254 loops=3)

Buckets: 1024 Batches: 1 Memory Usage: 200kB

-> Parallel Seq Scan on test1 b @"lt#0" (cost=0.00..22.45 rows=245 width=265) (actual time=0.013..0.635 rows=761 loops=1)

Planning Time: 0.273 ms

Execution Time: 13.294 ms

(11 rows)

复制