Towards End-to-End Generative Modeling of Long Videos.pdf

50墨值下载

Towards End-to-End Generative Modeling of Long Videos

with Memory-Efficient Bidirectional Transformers

Jaehoon Yoo

1

, Semin Kim

1

, Doyup Lee

2

, Chiheon Kim

2

, Seunghoon Hong

1

1

KAIST,

2

Kakao Brain

{wogns98, kimsm1121, seunghoon.hong}@kaist.ac.kr, {damien.re, frost.conv}@kakaobrain.com

Abstract

Autoregressive transformers have shown remarkable

success in video generation. However, the transformers are

prohibited from directly learning the long-term dependency

in videos due to the quadratic complexity of self-attention,

and inherently suffering from slow inference time and er-

ror propagation due to the autoregressive process. In this

paper, we propose Memory-efficient Bidirectional Trans-

former (MeBT) for end-to-end learning of long-term depen-

dency in videos and fast inference. Based on recent ad-

vances in bidirectional transformers, our method learns to

decode the entire spatio-temporal volume of a video in par-

allel from partially observed patches. The proposed trans-

former achieves a linear time complexity in both encoding

and decoding, by projecting observable context tokens into

a fixed number of latent tokens and conditioning them to

decode the masked tokens through the cross-attention. Em-

powered by linear complexity and bidirectional modeling,

our method demonstrates significant improvement over the

autoregressive transformers for generating moderately long

videos in both quality and speed. Videos and code are avail-

able at https://sites.google.com/view/mebt-cvpr2023.

1. Introduction

Modeling the generative process of videos is an impor-

tant yet challenging problem. Compared to images, gener-

ating convincing videos requires not only producing high-

quality frames but also maintaining their semantic struc-

tures and dynamics coherently over long timescale [11, 16,

27, 36,38, 54].

Recently, autoregressive transformers on discrete repre-

sentation of videos have shown promising generative mod-

eling performances [11,32,51,53]. Such methods generally

involve two stages, where the video frames are first turned

into discrete tokens through vector quantization, and then

their sequential dynamics are modeled by an autoregressive

transformer. Powered by the flexibility of discrete distri-

butions and the expressiveness of transformer architecture,

these methods demonstrate impressive results in learning

and synthesizing high-fidelity videos.

However, autoregressive transformers for videos suffer

from critical scaling issues in both training and inference.

During training, due to the quadratic cost of self-attention,

the transformers are forced to learn the joint distribution of

frames entirely from short videos (e.g., 16 frames [11, 53])

and cannot directly learn the statistical dependencies of

frames over long timescales. During inference, the mod-

els are challenged by two issues of autoregressive gener-

ative process – its serial process significantly slows down

the inference speed, and perhaps more importantly, autore-

gressive prediction is prone to error propagation where the

prediction error of the frames accumulates over time.

To (partially) address the issues, prior works proposed

improved transformers for generative modeling of videos,

which are categorized as the following: (a) Employing

sparse attention to improve scaling during training [12, 16,

51], (b) Hierarchical approaches that employ separate mod-

els in different frame rates to generate long videos with

a smaller computation budget [11, 16], and (c) Remov-

ing autoregression by formulating the generative process as

masked token prediction and training a bidirectional trans-

former [12, 13]. While each approach is effective in ad-

dressing specific limitations in autoregressive transformers,

none of them provides comprehensive solutions to afore-

mentioned problems – (a, b) still inherits the problems in

autoregressive inference and cannot leverage the long-term

dependency by design due to the local attention window,

and (c) is not appropriate to learn long-range dependency

due to the quadratic computation cost. We believe that an

alternative that jointly resolves all the issues would provide

a promising approach towards powerful and efficient video

generative modeling with transformers.

In this work, we propose an efficient transformer for

video synthesis that can fully leverage the long-range de-

pendency of video frames during training, while being able

to achieve fast generation and robustness to error propaga-

tion. We achieve the former with a linear complexity ar-

1

arXiv:2303.11251v2 [cs.CV] 27 Mar 2023

chitecture that still imposes dense dependencies across all

timesteps, and the latter by removing autoregressive seri-

alization through masked generation with a bidirectional

transformer. While conceptually simple, we show that ef-

ficient dense architecture and masked generation are highly

complementary, and when combined together, lead to sub-

stantial improvements in modeling longer videos compared

to previous works both in training and inference. The con-

tributions of this paper are as follows:

• We propose Memory-efficient Bidirectional Trans-

former (MeBT) for generative modeling of video. Un-

like prior methods, MeBT can directly learn long-

range dependency from training videos while enjoying

fast inference and robustness in error propagation.

• To train MeBT for moderately long videos, we propose

a simple yet effective curriculum learning that guides

the model to learn short- to long-term dependencies

gradually over training.

• We evaluate MeBT on three challenging real-world

video datasets. MeBT achieves a performance compet-

itive to state-of-the-arts for short videos of 16 frames,

and outperforms all for long videos of 128 frames

while being considerably more efficient in memory

and computation during training and inference.

2. Background

This section introduces generative transformers for

videos that utilize discrete token representation, which can

be categorized into autoregressive and bidirectional models.

Let x ∈ R

T ×H×W ×3

be a video. To model its genera-

tive distribution p(x), prior works on transformers employ

discrete latent representation of frames y ∈ R

t×h×w×d

and

model the prior distribution on the latent space p(y).

Vector Quantization To map a video x into discrete to-

kens y, previous works [11, 13, 32, 48, 51] utilize vector

quantization with an encoder E that maps x onto a learn-

able codebook F = {e

i

}

U

i=1

[45]. Specifically, given

a video x, the encoder produces continuous embeddings

h = E(x) ∈ R

t×h×w×d

and searches for the nearest code

e

u

∈ F .

The encoder E is trained through an autoencoding

framework by introducing a decoder D that takes discrete

tokens y and produces reconstruction

ˆ

x = D(y). The en-

coder E, codebook F , and the decoder D are optimized

with the following training objective:

L

q

= ||x −

ˆ

x||

2

2

+ ||sg(h) − y||

2

2

+ β||sg(y) − h||

2

2

,

(1)

where sg denotes stop-gradient operator. In practice, to

improve the quality of discrete representations, additional

perceptual loss and adversarial loss are often introduced [9].

For the choice of the encoder E, we utilize 3D convo-

lutional networks that compress a video in both spatial and

temporal dimensions following prior works [11, 53].

Autoregressive Transformers Given the discrete latent

representations y ∈ R

t×h×w×d

, generative modeling of

videos boils down to modeling the prior p(y). Prior work

based on autoregressive transformers employ a sequential

factorization p(y) = Π

i≤N

p(y

i

|y

<i

) where N = thw, and

use a transformer to model the conditional distribution of

each token p(y

i

|y

<i

). The transformer is trained to mini-

mize the following negative log-likelihood of training data:

L

a

=

X

i≤N

− log p(y

i

|y

<i

). (2)

During inference, the transformer generates a video by

sequentially sampling each token y

i

from the conditional

p(y

i

|y

<i

) based on context y

<i

. The sampled tokens y are

then mapped back to a video using the decoder D.

While simple and powerful, autoregressive transform-

ers for videos suffer from critical scaling issues. First,

each conditional p(y

n

|y

<n

) involves O(n

2

) computational

cost due to the quadratic complexity of self-attention. This

forces the model to only utilize short-term context in both

training and inference, making it inappropriate in modeling

spatio-temporal long-term coherence. Furthermore, during

inference, the sequential decoding requires N model pre-

dictions that recursively depend on the previous one. This

leads to a slow inference, and more notably, a potential error

propagation over space and time since the prediction error

at a certain token accumulates over the remaining decod-

ing steps. This is particularly problematic for videos since

N is often very large as tokenization spans both spatial and

temporal dimensions.

Bidirectional Transformers To improve the decoding ef-

ficiency of autoregressive transformers, bidirectional gener-

ative transformers have been proposed [5, 13, 56]. Contrary

to autoregressive models that predict a single consecutive

token at each step, a bidirectional transformer learns to pre-

dict multiple masked tokens at once based on the previously

generated context. Specifically, given the random masking

indices m ⊆ {1, ..., N}, it models the joint distribution over

masked tokens y

M

= {y

i

|i ∈ m} conditioned on the visi-

ble context y

C

= {y

i

|i /∈ m}, and is trained with the below

objective:

L

b

= − log p(y

M

|y

C

, z

M

) ≈ −

X

i∈m

− log p(y

i

|y

C

, z

M

),

(3)

where mask embeddings z

M

encode positions of the mask

tokens with learnable vectors. Each conditional in Eq. (3)

is modeled by a transformer, but contrary to autoregressive

2

y

C

z

M

z

L

y

M

s

s + 1

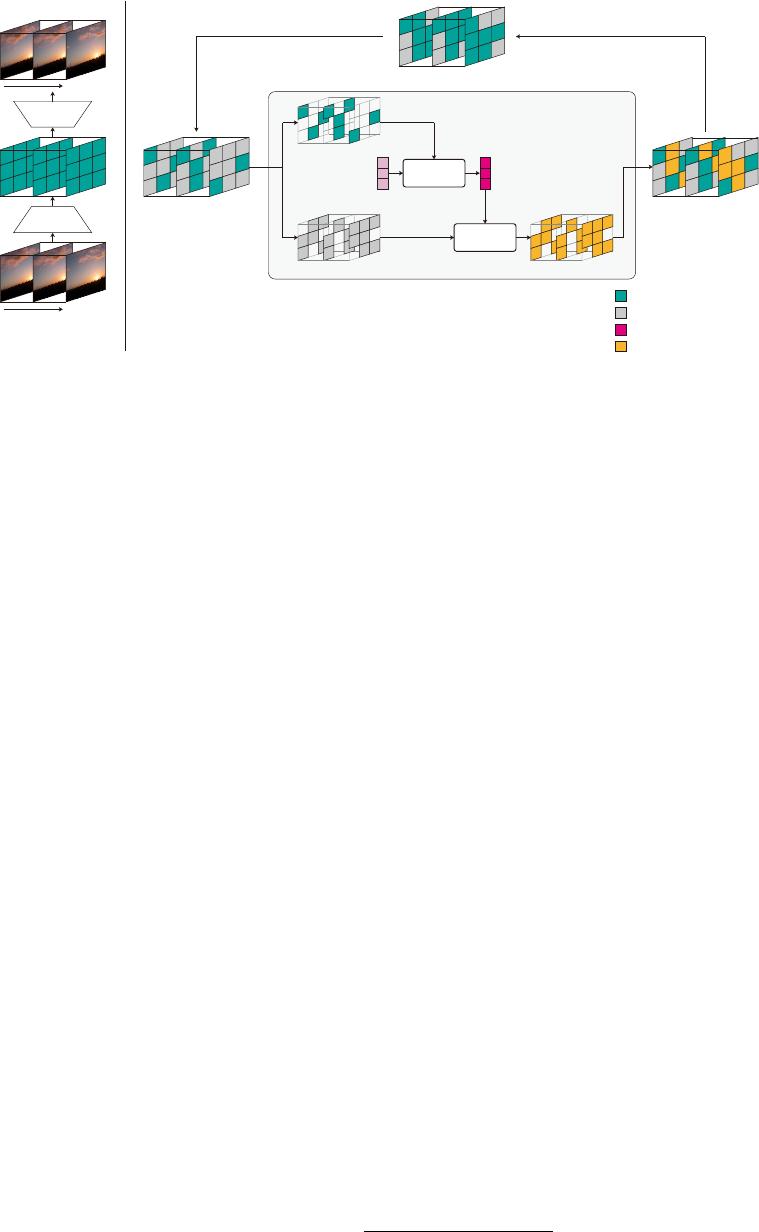

Figure 1. Overview of our method. Our model learns to predict masked tokens from the context tokens with linear complexity encoder and

decoder. The encoder and decoder utilize latent bottlenecks to achieve linear complexity while performing full dependency modeling.

models that apply causal masking, bidirectional transform-

ers operate on entire tokens y = y

C

∪ y

M

. During in-

ference, the model is initialized with empty context (i.e.,

y

C

= ∅), and performs iterative decoding by predicting the

probability over all masked tokens y

M

by Eq. (3) and sam-

pling their subset as the context for the next iteration.

Adopting bidirectional transformers to model videos has

two advantages compared to autoregressive transformers.

First, by decoding multiple tokens at once, bidirectional

transformers enjoy a better parallelization and smaller de-

coding steps than autoregressive transformers which results

in a faster sampling. Second, bidirectional transformers are

more robust to error propagation than autoregressive coun-

terparts since the decoding of the masked token is indepen-

dent of the temporal order, allowing consistent prediction

quality over time. However, complexity of the bidirectional

transformers is still quadratic to the number of tokens, lim-

iting the length of training videos to only short sequences

thus hindering learning long-term dependencies.

3. Memory-efficient Bidirectional Transformer

Our goal is to design a generative transformer for videos

that can perform fast inference with a robustness to error

propagation, while being able to fully leverage the long-

range statistical dependency of video frames in training.

For inference speed and robustness, we adopt the bidi-

rectional approach and parameterize the joint distribution

of masked tokens p(y

M

|y

C

, z

M

) ≈ Π

i∈m

p(y

i

|y

C

, z

M

)

(Eq. (3)) with a transformer. For learning long-range depen-

dency, we take a simple approach of employing an efficient

transformer architecture [40] of sub-quadratic complexity

and directly training it with longer videos. While sparse at-

tention (e.g., local, axial, strided) is dominant in autoregres-

sive transformers to reduce complexity [11, 12, 16, 51], we

find it potentially problematic for bidirectional transformers

since the context y

C

can be provided for an arbitrary sub-

set of token positions that often cannot be covered by the

fixed sparse attention patterns

1

. Thus, unlike prior work,

we design an efficient bidirectional transformer based on

low-rank latent bottleneck [17,18,21,25,30,49] that always

enables a dense attention dependency over all token posi-

tions while guaranteeing linear complexity.

To this end, we propose Memory-efficient Bidirectional

Transformer (MeBT) for generative modeling of videos.

The overall framework of MeBT is illustrated in Fig. 1. To

predict the masked tokens y

M

from the context tokens y

C

,

MeBT employs an encoder-decoder architecture based on

a fixed number of latent bottleneck tokens z

L

∈ R

N

L

×d

with N

L

N . The encoder projects the context tokens y

C

to the latent tokens z

L

, and the decoder utilizes the latent

tokens z

L

to predict the masked tokens y

M

. Overall, the

encoder and decoder exploit the latent bottleneck to achieve

a linear complexity O(N) while performing a full depen-

dency modeling across the token positions. In the following

sections, we describe the details of the encoder, decoder,

and the training and inference procedures of MeBT.

3.1. Encoder Architecture

The encoder aims to project all previously generated

context tokens y

C

to fixed-size latent bottleneck z

L

with

a memory and time cost linear to context size O(N

C

).

Following prior work on transformers with latent bottle-

necks [17, 18, 21, 25, 30], we construct the encoder as an

alternating stack of two types of attention layers that pro-

gressively update the latent tokens based on the provided

context tokens. Specifically, as illustrated in Fig. 2, the first

layer updates the latent tokens by cross-attending to context

tokens, and the second one updates the latent tokens by per-

1

Note that, in autoregressive transformers, the context y

<i

(Eq. (2)) is

always the entire past, allowing sparse attention to robustly see the context.

3

of 15

50墨值下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

北信源 DBA

最新上传

下载排行榜

1

2

9-数据库人的进阶之路:从PG分区、SQL优化到拥抱AI未来(罗敏).pptx

3

1-PG版本兼容性案例(彭冲).pptx

4

2-TDSQL PG在复杂查询场景中的挑战与实践-opensource.pdf

5

6-PostgreSQL 哈希索引原理浅析(文一).pdf

6

8-基于PG向量和RAG技术的开源知识库问答系统MaxKB.pptx

7

3-AI时代的变革者-面向机器的接口语言(MOQL)_吕海波.pptx

8

4-IvorySQL V4:双解析器架构下的兼容性创新实践.pptx

9

7-拉起PG好伙伴DifySupaOdoo.pdf

10

《云原生安全攻防启示录》李帅臻.pdf

相关文档

评论