大家好, 今天和大家分享一个真实生产案例: 异地数据库同步延时导致的主库关闭失败问题。

简单的说一下背景:

我们要在计划内部署维护窗口做2件事:

1.ORACLE 迁移到PG的项目,数据同步, 大致几十个GB的数据(包含索引)。

2.PG 端要做一个小版本的升级,从版本15.2升级到15.3 , 需要执行停库操作,进行 make install-world , 再重启数据库

生产的数据库架构是: 武汉PDC (1 Primary DB + 2 standby DB) + 天津DRC灾备中心 (1 standby DB)

预计窗口停机: 23:00- 00:00 1个小时

部署当天晚上:

1.执行 ORACLE 迁移到PG的项目,数据同步 – 比较顺利,和期望是一致的

2.PG实例在关机的时候 出现了命令 pg_ctl stop -D $PG_DATA hang 住,长时间等待的情况。。。

后台日志出现了大量的关闭失败的信息

2023-07-17 23:45:05.033 CST [109594] repmgr@repmgr-10.25.14.149/[unknown]FATAL: the database system is shutting down 2023-07-17 23:45:05.035 CST [109595] repmgr@repmgr-10.25.14.149/[unknown]FATAL: the database system is shutting down 2023-07-17 23:45:05.613 CST [109603] capp_lm@cappcore-10.26.212.19/[unknown]FATAL: the database system is shutting down 2023-07-17 23:45:05.822 CST [109605] capp_lm@cappcore-10.26.212.49/[unknown]FATAL: the database system is shutting down复制

而此时 ps -ef | grep postgres , 后台进程还是存在的, 但是这个时候尝试连接 PSQL 也是失败的

由于维护窗口只有一个小时,时间紧迫,采取了 -m immediate 参数 立刻关闭的方式。 (生产环境不建议这种方式)

pg_ctl stop -D $PG_DATA -m immediate

复制这下算是顺利关机, 然后给PG 软件小版本升级,然后启动实例一切顺利!

作为运维DBA来说,如果生产如果出现问题,恢复生产的可用性永远是第一位的, 然后才是调查 root cause.

初步怀疑原因: 和主从延时有关,异地网络的传输效率比较差, wal sender 进程 blocking the 主库关闭.

我们可以找个测试环境来复盘这个问题:

测试环境模拟 1 主 : pref170+ 2从: pref171,pref173 +1从: pgdr(异地)

postgres=# select pid,usename,application_name,replay_lag,reply_time from pg_stat_replication;

pid | usename | application_name | replay_lag | reply_time

--------+---------+------------------+-----------------+-------------------------------

22250 | repmgr | pref173 | 00:00:00.000816 | 2023-08-19 10:43:49.650063+08

22252 | repmgr | pref171 | 00:00:00.000923 | 2023-08-19 10:43:49.650243+08

126245 | repmgr | pgdr | 00:00:00.020848 | 2023-08-19 10:43:49.661431+08

(3 rows)

复制登录主库 pref170: 模拟数据大量数据导入8000W数据,造成主从延时。

postgres=# create table t_load (id bigint, context text);

CREATE TABLE

postgres=# insert into t_load select generate_series(1,80000000),md5('a')||md5('b')||md5('c')||md5('d')||md5('e');

INSERT 0 80000000

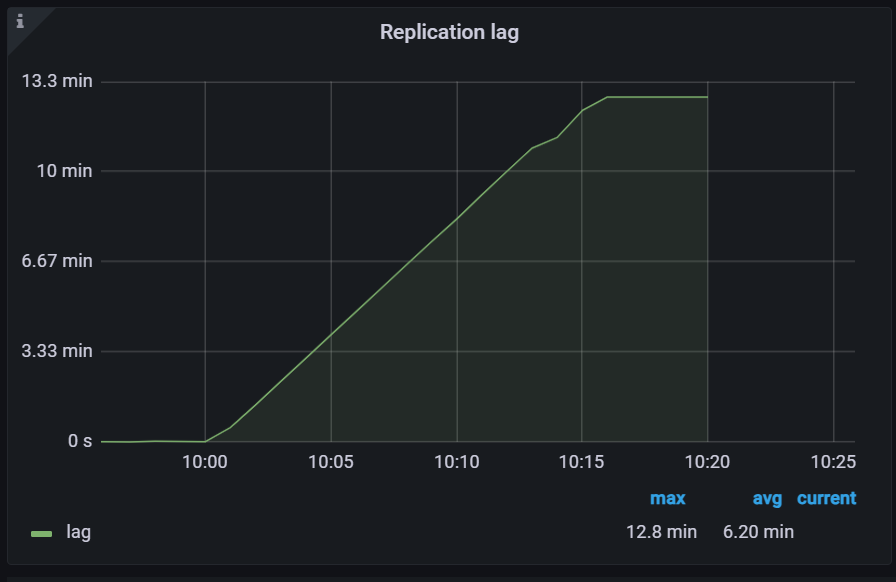

复制查看延时: 异地节点延时grafana 监控图 同步lag 已经达到了 10分钟以上

我们尝试关闭主库:

INFRA [postgres@ljzdccapp006 ~]# /opt/pgsql-15/bin/pg_ctl stop -D /data/pref6005/data

waiting for server to shut down............................................................... failed

pg_ctl: server does not shut down

复制查看主库后台进程:果然是walsender 进程健在,指向的同步节点是异地DC的机器

INFRA [postgres@ljzdccapp006 ~]# ps -ef | grep postgres

postgres 13444 1 0 Aug19 ? 00:01:07 /opt/pgsql-15/bin/postgres -D /data/pref6005/data

postgres 17151 13444 0 Aug19 ? 00:00:40 postgres: pref170: walsender repmgr 10.25.15.85(54434) streaming C/F5F06000

复制我们尝试再次登陆关闭未成功的主库:数据库的状态是 shutting down ,不允许建立任何连接

INFRA [postgres@ljzdccapp006 ~]# psql

psql: error: connection to server on socket "/tmp/.s.PGSQL.6005" failed: FATAL: the database system is shutting down

复制查看主库日志: 也全部都是 the database system is shutting down , 数据库连接失败的信息

2023-08-20 10:21:40.162 CST [96140] repmgr@[unknown]-10.67.38.171/[unknown]FATAL: the database system is shutting down 2023-08-20 10:21:40.211 CST [96141] [unknown]@[unknown]-10.67.38.173/[unknown]LOG: connection received: host=10.67.38.173 port=56246 2023-08-20 10:21:40.212 CST [96141] repmgr@[unknown]-10.67.38.173/[unknown]FATAL: the database system is shutting down 2023-08-20 10:21:40.282 CST [96143] [unknown]@[unknown]-10.67.38.173/[unknown]LOG: connection received: host=10.67.38.173 port=56248 2023-08-20 10:21:40.283 CST [96143] repmgr@repmgr-10.67.38.173/[unknown]FATAL: the database system is shutting down 2023-08-20 10:21:40.284 CST [96144] [unknown]@[unknown]-10.67.38.173/[unknown]LOG: connection received: host=10.67.38.173 port=56250 2023-08-20 10:21:40.284 CST [96144] repmgr@repmgr-10.67.38.173/[unknown]FATAL: the database system is shutting down复制

至此我们完全模拟了生产由于跨DC延时,关闭主库hang 住关闭失败的情况, 果然是后台进程walsender blocking了实例的关闭。

我们更近一步,从源码的角度看一下为什么 walsender 进程会阻碍了 shutdown process.

我们执行的关闭命令: /opt/pgsql-15/bin/pg_ctl stop -D /data/pref6005/data, 对应的后台代码是 pg_ctl.c 的 do_stop(void)

kill((pid_t) pid, sig) 向后台linux 系统发送 kill 的信号量: #define SIGINT 2 /* Interrupt (ANSI). */

static void

do_stop(void)

{

pgpid_t pid;

pid = get_pgpid(false);

if (pid == 0) /* no pid file */

{

write_stderr(_("%s: PID file \"%s\" does not exist\n"), progname, pid_file);

write_stderr(_("Is server running?\n"));

exit(1);

}

else if (pid < 0) /* standalone backend, not postmaster */

{

pid = -pid;

write_stderr(_("%s: cannot stop server; "

"single-user server is running (PID: %ld)\n"),

progname, pid);

exit(1);

}

if (kill((pid_t) pid, sig) != 0)

{

write_stderr(_("%s: could not send stop signal (PID: %ld): %s\n"), progname, pid,

strerror(errno));

exit(1);

}

if (!do_wait)

{

print_msg(_("server shutting down\n"));

return;

}

else

{

print_msg(_("waiting for server to shut down..."));

if (!wait_for_postmaster_stop())

{

print_msg(_(" failed\n"));

write_stderr(_("%s: server does not shut down\n"), progname);

if (shutdown_mode == SMART_MODE)

write_stderr(_("HINT: The \"-m fast\" option immediately disconnects sessions rather than\n"

"waiting for session-initiated disconnection.\n"));

exit(1);

}

print_msg(_(" done\n"));

print_msg(_("server stopped\n"));

}

}

复制我们看看 后台主进程 postmaster 怎么处理这个信号量: postmaster.c -> pmdie(SIGNAL_ARGS)

/*

* pmdie -- signal handler for processing various postmaster signals.

*/

static void

pmdie(SIGNAL_ARGS)

{

case SIGINT:

/*

* Fast Shutdown:

*

* Abort all children with SIGTERM (rollback active transactions

* and exit) and shut down when they are gone.

*/

if (Shutdown >= FastShutdown)

break;

Shutdown = FastShutdown;

ereport(LOG,

(errmsg("received fast shutdown request")));

/* Report status */

AddToDataDirLockFile(LOCK_FILE_LINE_PM_STATUS, PM_STATUS_STOPPING);

#ifdef USE_SYSTEMD

sd_notify(0, "STOPPING=1");

#endif

if (pmState == PM_STARTUP || pmState == PM_RECOVERY)

{

/* Just shut down background processes silently */

pmState = PM_STOP_BACKENDS;

}

else if (pmState == PM_RUN ||

pmState == PM_HOT_STANDBY)

{

/* Report that we're about to zap live client sessions */

ereport(LOG,

(errmsg("aborting any active transactions")));

pmState = PM_STOP_BACKENDS;

}

/*

* PostmasterStateMachine will issue any necessary signals, or

* take the next step if no child processes need to be killed.

*/

PostmasterStateMachine();

break;

复制关闭postmaster 主进程下所有子进程函数: postmaster.c -> static void PostmasterStateMachine(void)

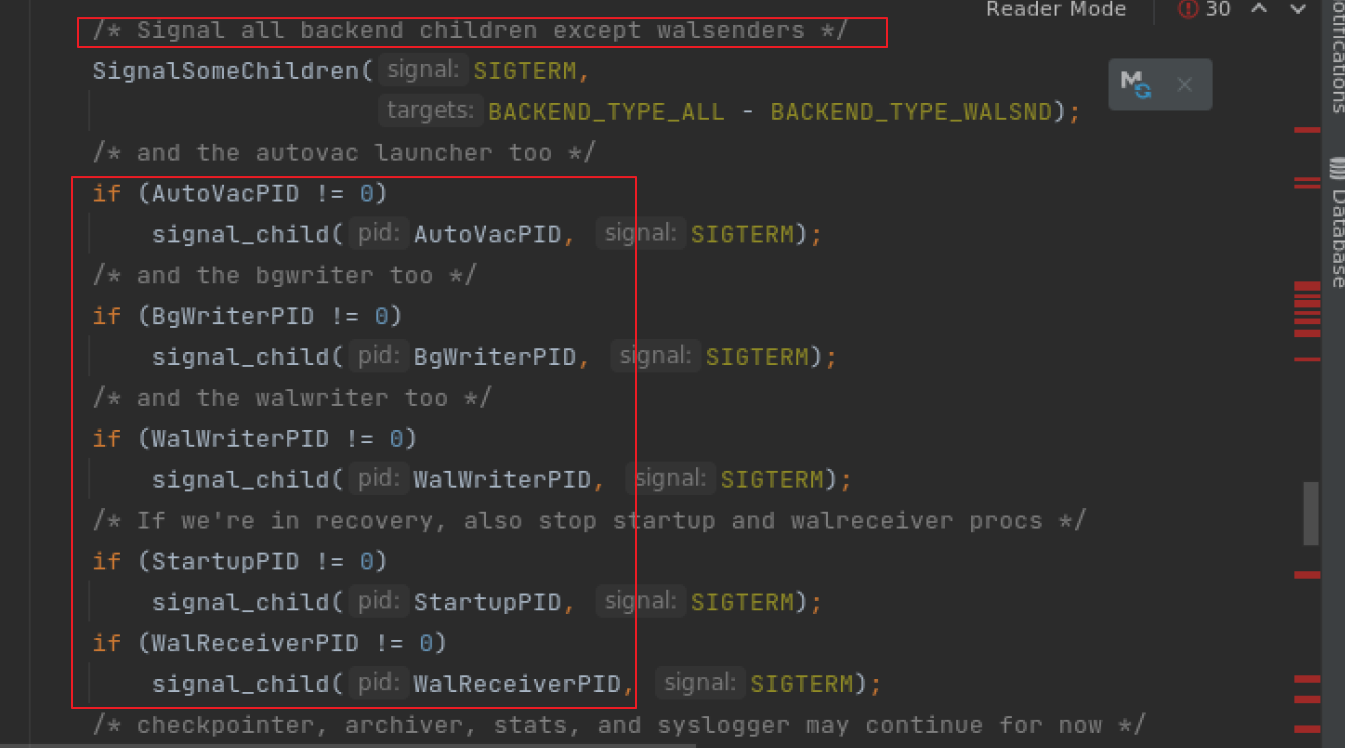

我们可以看到 /* Signal all backend children except walsenders */ , 一幕了然, 也从源码的角度证实了walsenders 进程号是不会被发送信号量 kill掉的

写到最后总结:

1)如果数据库存在异地部署且网络不佳的状况,关闭主库前需要检查数据库延时的lag : select pid,usename,application_name,replay_lag,reply_time from pg_stat_replication;

2)遇到数据库维护重启+数据迁移任务: 可以调整顺序1. 数据库维护重启 2. 执行大量数据任务

3)如果上诉1,2步骤不小心忽略了, 可以尝试直接关闭有延时的异地从库,这样主库进程 walsender 自然会关闭, postmaster 主进程也随之关闭。

Have a fun 🙂 !