目录

- 前言

- 在RHEL7上安装单节点的Vertica数据库

- 用Tableau可视化Vertica数据库之“DB Time”篇

- 用Tableau可视化Vertica数据库之“LPS&TPS”篇

- 用Tableau可视化Vertica数据库之“MBPS&IOPS By Function”篇

- 参考内容

前言

在我的上一篇墨天轮博客文章中描述了什么是Vertica数据库,相信朋友们已经对Vertica数据库有了一定的了解。今天,我首先给大家带来快速安装Vertica数据库和结合具体的业务场景来用Tableau可视化Vertica数据库中的“DB Time”表。说到这里,也许您可能会问,什么是DB Time?因为我是一名Oracle DBA工程师,需要时刻关注Oracle的性能情况,而DB Time是衡量Oracle性能的一个非常重要的指标。为了能够快速了解到Oracle数据库最近一段时间内的DB Time,我们可以通过相关的性能查询SQL语句来得到一段时间内的DB Time取值。但是在Oracle数据库当中DB Time的保留时间是由AWR报告的保留策略决定的,根据实际的业务诉求,我们通常只保留一个月的AWR报告,所以DB Time的数据也只能保留一个月。为了能够尽可能地将DB Time的数据保留的时间长一些,我们可以把DB Time的SQL查询导出到CSV(各个值用逗号分开)文件,然后将这个CSV文件导入到Vertica数据库(Vertica数据库是一种列式存储,支持大规模并行处理,非常适合海量数据的分析处理)然后用Tableau这个数据库可视化工具连接到Vertica数据库就能将DB Time表进行可视化,生成直观形象的折线图,让我们能够快速地了解什么时间段的DB Time值高,什么时间段的DB Time值会相对低一些,进而快速了解业务高峰期是什么时候和数据库的总体运行情况。

其次,我们要介绍将Oracle数据库的LPS(Logons Per Sec,每秒登录数)和TPS(User Transaction Per Sec,每秒用户事务数)的性能查询数据导入Vertica数据库并用Tableau工具连接Vertica进行LPS和TPS的可视化操作。

最后,我们要介绍的是将Oracle数据库的MBPS&IOPS By Function性能查询数据导入Vertica数据库,然后用Tableau工具连接到Vertica,并对导入的性能表进行可视化操作。另外,我们知道Oracle数据库的动态性能视图V$IOSTAT_FUNCTION里有下面的一些数据列和对应的数据类型:

DESC v$iostat_function

Name Null? Type

----------------------------------------------------------------- -------- --------------------------------------------

FUNCTION_ID NUMBER

FUNCTION_NAME VARCHAR2(18)

SMALL_READ_MEGABYTES NUMBER

SMALL_WRITE_MEGABYTES NUMBER

LARGE_READ_MEGABYTES NUMBER

LARGE_WRITE_MEGABYTES NUMBER

SMALL_READ_REQS NUMBER

SMALL_WRITE_REQS NUMBER

LARGE_READ_REQS NUMBER

LARGE_WRITE_REQS NUMBER

NUMBER_OF_WAITS NUMBER

WAIT_TIME NUMBER复制其中,我们使用SQL语句查询V$IOSTAT_FUNCTION中的FUNCTION_ID和FUNCTION_NAME后发现Oracle的IO按FUNCTION分类总共有14个不同的类别,请看下面的查询语句和结果:

SET PAGESIZE 30

COLUMN function_name FOR a18

SELECT function_id, function_name FROM v$iostat_function ORDER BY 1;

FUNCTION_ID FUNCTION_NAME

----------- ------------------------------------

0 RMAN

1 DBWR

2 LGWR

3 ARCH

4 XDB

5 Streams AQ

6 Data Pump

7 Recovery

8 Buffer Cache Reads

9 Direct Reads

10 Direct Writes

11 Smart Scan

12 Archive Manager

13 Others

14 rows selected.复制因此,我们需要从两个维度(最近1分钟和最近1小时)可视化我们所提到的14个类别(在图表中也可将其称作“图例”)的IO(IO分为MBPS和IOPS两类,即每秒的IO读写容量和每秒的IO读写请求)情况。其中,最近1分钟的数据保存在动态性能视图V$IOFUNCMETRIC里,最近1小时的数据保存在动态性能视图V$IOFUNCMETRIC_HISTORY上。所以两个维度和两个IO类别的互相组合,这里将要使用四个SQL查询来实现我们的业务需求。

在RHEL7上安装单节点的Vertica数据库

安装CE(社区免费)版本的RPM包 vertica-11.0.1-2.x86_64.RHEL6.rpm

[root@test ~]# ls -lrht vertica-11.0.1-2.x86_64.RHEL6.rpm

-rw-r--r-- 1 root root 514M Dec 6 16:07 vertica-11.0.1-2.x86_64.RHEL6.rpm

[root@test ~]# rpm -ivh --nodeps vertica-11.0.1-2.x86_64.RHEL6.rpm

warning: vertica-11.0.1-2.x86_64.RHEL6.rpm: Header V3 RSA/SHA256 Signature, key ID f54b82a0: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:vertica-11.0.1-2 ################################# [100%]

Vertica Analytic Database v11.0.1-2 successfully installed on host test

To complete your NEW installation and configure the cluster, run:

/opt/vertica/sbin/install_vertica

To complete your Vertica UPGRADE, run:

/opt/vertica/sbin/update_vertica

----------------------------------------------------------------------------------

Important

----------------------------------------------------------------------------------

Before upgrading Vertica, you must backup your database. After you restart your

database after upgrading, you cannot revert to a previous Vertica software version.

----------------------------------------------------------------------------------

View the latest Vertica documentation at https://www.vertica.com/documentation/vertica/复制此时,可以看到/opt目录下生成了vertica目录,其大小为1.9G。

[root@test opt]# cd vertica/

[root@test vertica]# ls -lrht

total 3.5M

drwxrwxr-x 2 root root 6 Nov 20 08:04 log

-rw-r--r-- 1 root root 3.4M Nov 20 08:11 LICENSES

drwxr-xr-x 2 root root 22 Dec 15 08:53 agent

drwxr-xr-x 2 root root 4.0K Dec 15 08:53 bin

drwxr-xr-x 4 root root 101 Dec 15 08:53 config

drwxr-xr-x 3 root root 26 Dec 15 08:53 examples

drwxr-xr-x 2 root root 57 Dec 15 08:53 en-US

drwxr-xr-x 2 root root 45 Dec 15 08:53 include

drwxr-xr-x 3 root root 101 Dec 15 08:53 java

drwxr-xr-x 2 root root 4.0K Dec 15 08:53 lib

drwxr-xr-x 2 root root 31 Dec 15 08:53 lib64

drwxrwxr-x 4 root root 36 Dec 15 08:53 oss

drwxr-xr-x 19 root root 4.0K Dec 15 08:54 packages

drwxr-xr-x 2 root root 4.0K Dec 15 08:54 sbin

drwxr-xr-x 2 root root 4.0K Dec 15 08:54 scripts

drwxrwxr-x 5 root root 91 Dec 15 08:54 sdk

drwxr-xr-x 9 root root 132 Dec 15 08:54 share

drwxrwxr-x 8 root root 80 Dec 15 08:54 spread

[root@test vertica]# du -sh .

1.9G .复制用install_vertica脚本配置Vertica数据库集群

接下来,我们用install_vertica脚本配置Vertica集群。详见如下操作:

[root@test ~]# /opt/vertica/sbin/install_vertica --hosts 127.0.0.1 --rpm ~/vertica-11.0.1-2.x86_64.RHEL6.rpm --dba-user dbadmin

Vertica Analytic Database 11.0.1-2 Installation Tool

>> Validating options...

Mapping hostnames in --hosts (-s) to addresses...

>> Starting installation tasks.

>> Getting system information for cluster (this may take a while)...

Default shell on nodes:

127.0.0.1 /bin/bash

>> Validating software versions (rpm or deb)...

warning: /root/vertica-11.0.1-2.x86_64.RHEL6.rpm: Header V3 RSA/SHA256 Signature, key ID f54b82a0: NOKEY

>> Beginning new cluster creation...

successfully backed up admintools.conf on 127.0.0.1

>> Creating or validating DB Admin user/group...

Password for new dbadmin user (empty = disabled) <<== 输入 密码 回车 (注意: 不回显)

Successful on hosts (1): 127.0.0.1

Provided DB Admin account details: user = dbadmin, group = verticadba, home = /home/dbadmin

Creating group... Adding group

Validating group... Okay

Creating user... Adding user, Setting credentials

Validating user... Okay

>> Validating node and cluster prerequisites...

Prerequisites not fully met during local (OS) configuration for

verify-127.0.0.1.xml:

HINT (S0305): https://www.vertica.com/docs/11.0.x/HTML/index.htm#cshid=S0305

HINT(eS0305): TZ is unset for dbadmin. Consider updating .profile or

.bashrc

WARN (N0010): https://www.vertica.com/docs/11.0.x/HTML/index.htm#cshid=N0010

WARN(eN0010): Linux iptables (firewall) has some non-trivial rules in

tables: filter, mangle

WARN (S0112): https://www.vertica.com/docs/11.0.x/HTML/index.htm#cshid=S0112

WARN(eS0112): vm.swappiness is higher than recommended: your 30 > 1

FAIL (S0312): https://www.vertica.com/docs/11.0.x/HTML/index.htm#cshid=S0312

FAIL(eS0312): Transparent hugepages is set to 'never'. Must be 'always'.

System prerequisites failed. Threshold = WARN

Hint: Fix above failures or use --failure-threshold

Installation FAILED with errors.

****

AdminTools and your existing Vertica databases may be unavailable.

Investigate the above warnings/errors and re-run installation.

****复制根据提示信息排错并重新配置集群

从上面的安装中,我们可以看到有四个重要的消息提示,它们依次为:HINT (S0305)、WARN (N0010)、WARN (S0112)和FAIL (S0312),顺便还告知了网址和采取的解决办法。我们接着进行如下操作:

-- https://www.vertica.com/docs/11.0.x/HTML/Content/Authoring/InstallationGuide/BeforeYouInstall/TZenvironmentVar.htm?cshid=S0305

[root@test ~]# echo 'export TZ="Asia/Shanghai"' >> /home/dbadmin/.bash_profile

-- https://www.vertica.com/docs/11.0.x/HTML/Content/Authoring/InstallationGuide/BeforeYouInstall/CheckforSwappiness.htm?cshid=S0112

[root@test ~]# echo 0 > /proc/sys/vm/swappiness

[root@test ~]# echo 'vm.swappiness = 0' >> /etc/sysctl.conf

-- https://www.vertica.com/docs/11.0.x/HTML/Content/Authoring/InstallationGuide/BeforeYouInstall/transparenthugepages.htm?cshid=S0312

[root@test ~]# echo always > /sys/kernel/mm/transparent_hugepage/enabled

[root@test ~]# vi /etc/rc.local

......

if test -f /sys/kernel/mm/transparent_hugepage/enabled; then

echo always > /sys/kernel/mm/transparent_hugepage/enabled

fi

......

"/etc/rc.local" 34L, 1357C written

[root@test ~]# chmod +x /etc/rc.d/rc.local复制经过上述操作以后,我们接着用install_vertica脚本进行配置,注意:最后加上参数和值“--failure-threshold FAIL”。因为WARN (N0010)的警告非常奇怪,而且我们已经关闭防火墙,Vertica官网上说,只能加这个参数来回避这个问题。

[root@test ~]# /opt/vertica/sbin/install_vertica --hosts 127.0.0.1 --rpm ~/vertica-11.0.1-2.x86_64.RHEL6.rpm --dba-user dbadmin --failure-threshold FAIL

Vertica Analytic Database 11.0.1-2 Installation Tool

>> Validating options...

Mapping hostnames in --hosts (-s) to addresses...

>> Starting installation tasks.

>> Getting system information for cluster (this may take a while)...

Default shell on nodes:

127.0.0.1 /bin/bash

>> Validating software versions (rpm or deb)...

warning: /root/vertica-11.0.1-2.x86_64.RHEL6.rpm: Header V3 RSA/SHA256 Signature, key ID f54b82a0: NOKEY

>> Beginning new cluster creation...

successfully backed up admintools.conf on 127.0.0.1

>> Creating or validating DB Admin user/group...

Successful on hosts (1): 127.0.0.1

Provided DB Admin account details: user = dbadmin, group = verticadba, home = /home/dbadmin

Creating group... Group already exists

Validating group... Okay

Creating user... User already exists

Validating user... Okay

>> Validating node and cluster prerequisites...

Prerequisites not fully met during local (OS) configuration for

verify-127.0.0.1.xml:

WARN (N0010): https://www.vertica.com/docs/11.0.x/HTML/index.htm#cshid=N0010

WARN(eN0010): Linux iptables (firewall) has some non-trivial rules in

tables: filter, mangle

System prerequisites passed. Threshold = FAIL

>> Establishing DB Admin SSH connectivity...

Installing/Repairing SSH keys for dbadmin

>> Setting up each node and modifying cluster...

Creating Vertica Data Directory...

Updating agent...

Creating node node0001 definition for host 127.0.0.1

... Done

>> Sending new cluster configuration to all nodes...

Starting or restarting agent...

>> Completing installation...

Running upgrade logic

Installation complete.

Please evaluate your hardware using Vertica's validation tools:

https://www.vertica.com/docs/11.0.x/HTML/index.htm#cshid=VALSCRIPT

To create a database:

1. Logout and login as dbadmin. (see note below)

2. Run /opt/vertica/bin/adminTools as dbadmin

3. Select Create Database from the Configuration Menu

Note: Installation may have made configuration changes to dbadmin

that do not take effect until the next session (logout and login).

To add or remove hosts, select Cluster Management from the Advanced Menu.复制在Vertica集群上创建一个新的数据库

用Vertica官网上的11.0.x帮助文档中的Creating a Database and Users来创建一个新的数据库vdb_oracle_perf。具体操作如下所示:

1. 用su命令将从操作系统的root用户切换到dbadmin用户,查看集群状态,它应该返回空值。注:因为返回行很冗长,所以在此省略了屏幕上提示的17条条款内容。

[root@test ~]# su - dbadmin

[dbadmin@test ~]$

[dbadmin@test ~]$ /opt/vertica/bin/admintools -t view_cluster

Micro Focus End User License Agreement - Enterprise Version

......

5200-0949 v1.0, 2017

? Copyright 2015-2017 EntIT Software LLC

Enter ACCEPT to accept license terms & conditions, or REJECT to not accept the license and quit: ACCEPT <<== 输入 ACCEPT

DB | Host | State

----+------+-------复制2. 创建数据库“vdb_oracle_perf”,并设置密码为“oracle_perf”。

[dbadmin@test ~]$ /opt/vertica/bin/admintools -t create_db --data_path=/home/dbadmin --catalog_path=/home/dbadmin --database=vdb_oracle_perf --password=oracle_perf --hosts=localhost复制3. 用vsql命令登录到客户端,密码为前面设置的密码,最终出现提示符“dbadmin=> ”。

[dbadmin@test ~]$ /opt/vertica/bin/vsql

Password: <<== 输入密码(不回显)

Welcome to vsql, the Vertica Analytic Database interactive terminal.

Type: \h or \? for help with vsql commands

\g or terminate with semicolon to execute query

\q to quit

dbadmin=> 复制创建连接用户并授权

创建数据库的连接用户为“Qwz”并设置密码,然后授予公共schema给该用户。其具体的操作命令,如下所示:

dbadmin=> CREATE USER Qwz IDENTIFIED BY 'oracle';

dbadmin=> GRANT USAGE ON SCHEMA PUBLIC TO Qwz;复制用Tableau可视化Vertica数据库之“DB Time”篇

将Oracle数据库的DB Time查询导入Vertica数据库

将Oracle数据库的DB Time的两个SQL查询分别保存为CSV文件

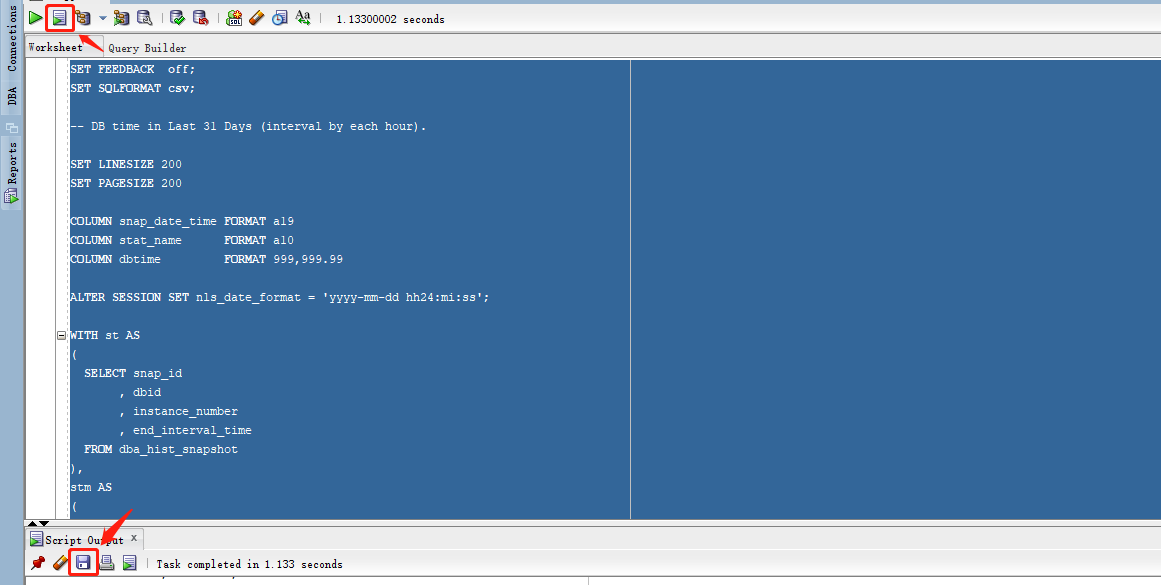

用Oracle SQL Developer将下面的两个DB Time的SQL查询以脚本方式运行并保存为CSV文件,详见具体的SQL代码和对应的屏幕截图。

SET FEEDBACK off;

SET SQLFORMAT csv;

-- DB time in Last 31 Days (interval by each hour).

SET LINESIZE 200

SET PAGESIZE 200

COLUMN snap_date_time FORMAT a19

COLUMN stat_name FORMAT a10

COLUMN dbtime FORMAT 999,999.99

ALTER SESSION SET nls_date_format = 'yyyy-mm-dd hh24:mi:ss';

WITH st AS

(

SELECT snap_id

, dbid

, instance_number

, end_interval_time

FROM dba_hist_snapshot

),

stm AS

(

SELECT snap_id

, dbid

, instance_number

, stat_name

, value

FROM dba_hist_sys_time_model

WHERE stat_name = 'DB time'

),

dbtime_per_hour AS

(

SELECT CAST(st.end_interval_time AS DATE) snap_date_time

, stm.stat_name

, ROUND((stm.value - LAG(stm.value, 1, 0) OVER (PARTITION BY stm.dbid, stm.instance_number ORDER BY stm.snap_id))/1e6/6e1, 2) dbtime

FROM st

, stm

WHERE st.snap_id = stm.snap_id

AND st.instance_number = stm.instance_number

AND st.dbid = stm.dbid

AND CAST(st.end_interval_time AS DATE) >= SYSDATE - 31

ORDER BY snap_date_time

)

SELECT snap_date_time -- the group column

, stat_name -- the series column

, dbtime -- the value column

FROM dbtime_per_hour

WHERE dbtime NOT IN (SELECT MAX(dbtime) FROM dbtime_per_hour)

;复制

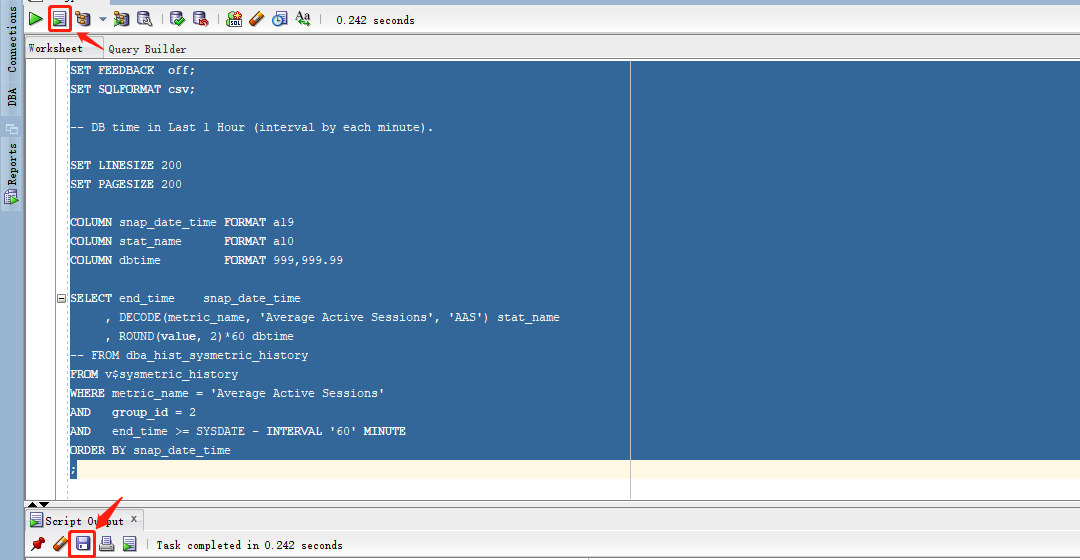

SET FEEDBACK off;

SET SQLFORMAT csv;

-- DB time in Last 1 Hour (interval by each minute).

SET LINESIZE 200

SET PAGESIZE 200

COLUMN snap_date_time FORMAT a19

COLUMN stat_name FORMAT a10

COLUMN dbtime FORMAT 999,999.99

SELECT end_time snap_date_time

, DECODE(metric_name, 'Average Active Sessions', 'AAS') stat_name

, ROUND(value, 2)*60 dbtime

-- FROM dba_hist_sysmetric_history

FROM v$sysmetric_history

WHERE metric_name = 'Average Active Sessions'

AND group_id = 2

AND end_time >= SYSDATE - INTERVAL '60' MINUTE

ORDER BY snap_date_time

;复制

将两个CSV文件上传到服务器/home/dbadmin目录下

[dbadmin@test ~]$ ls -lrht

total 40K

drwxr-xr-x 5 dbadmin verticadba 134 Dec 15 14:06 vdb_oracle_perf

-rw-r--r-- 1 dbadmin verticadba 27K Dec 16 18:37 oracle_dbtime.csv <<==

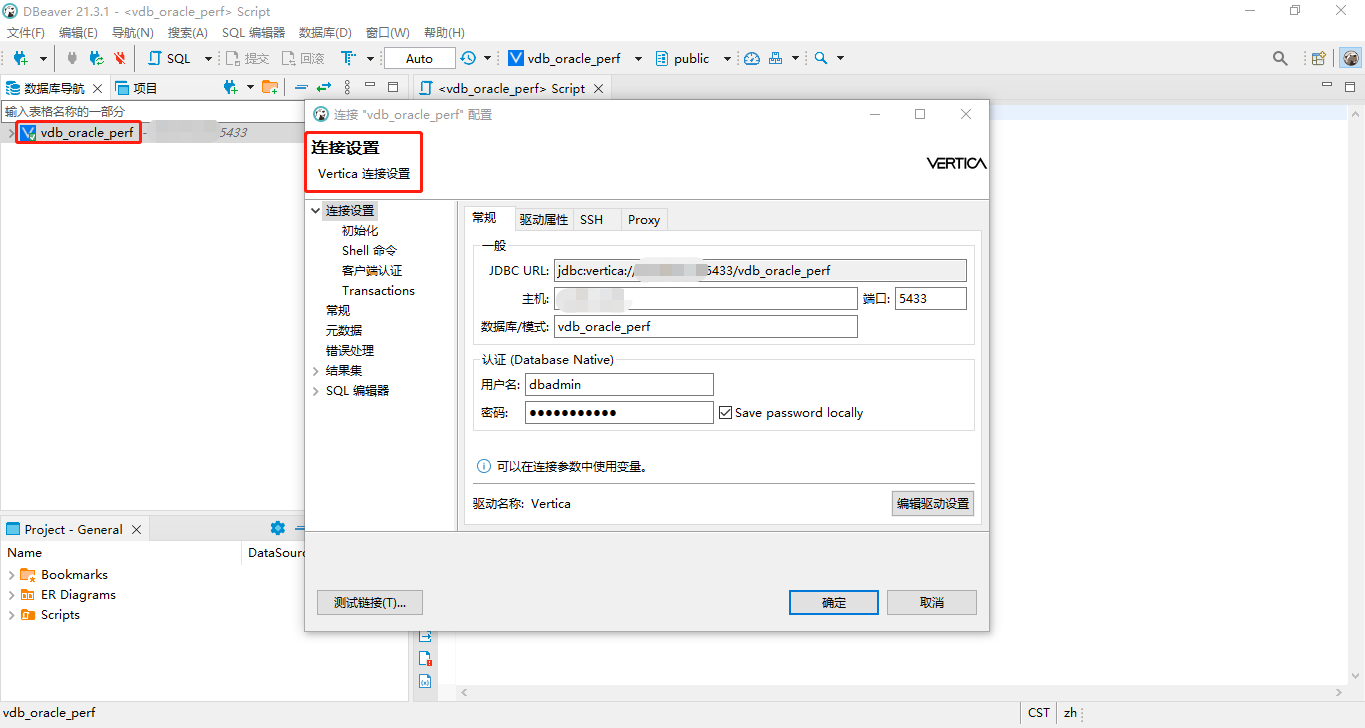

-rw-r--r-- 1 dbadmin verticadba 1.9K Dec 16 18:37 oracle_dbtime_2.csv <<==复制用DBeaver 21.3.1客户端工具连接到Vertica数据库

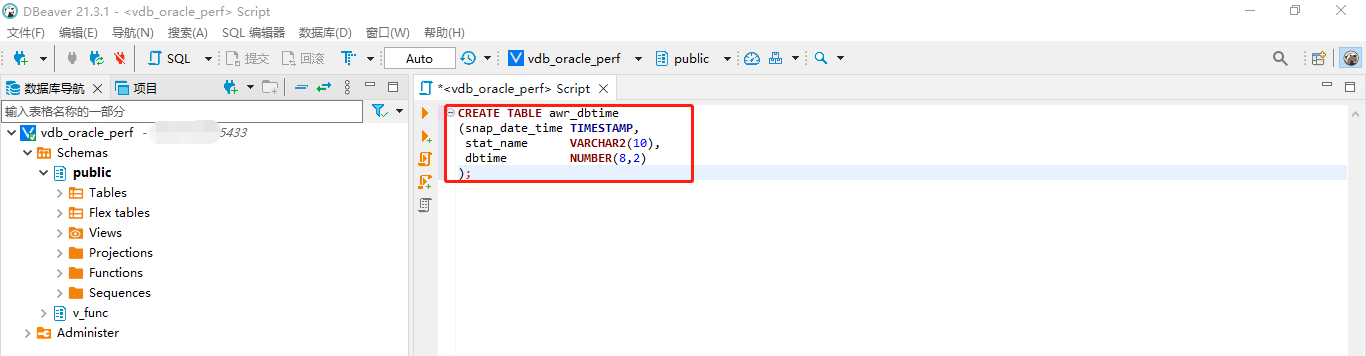

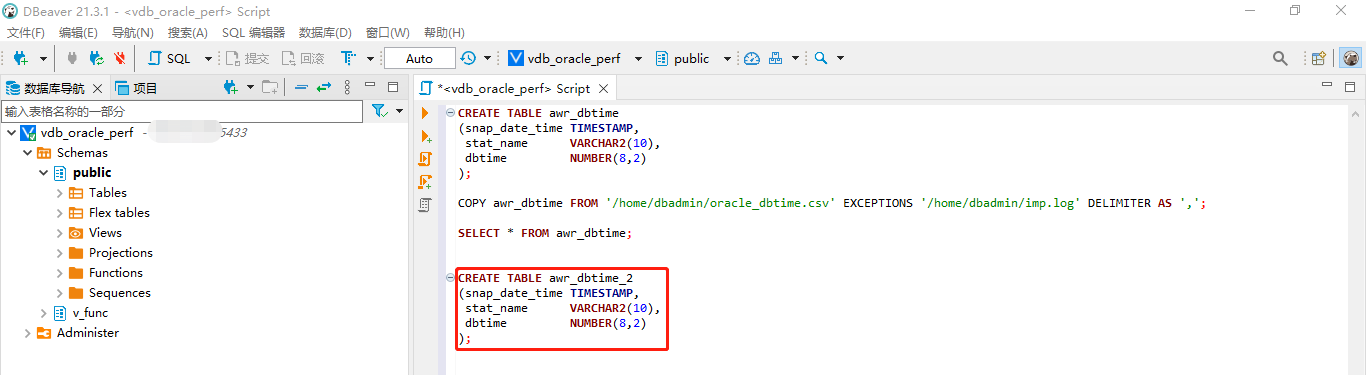

在DBeaver 21.3.1上创建两张表awr_dbtime和awr_dbtime_2

创建步骤分别详见如下两图:

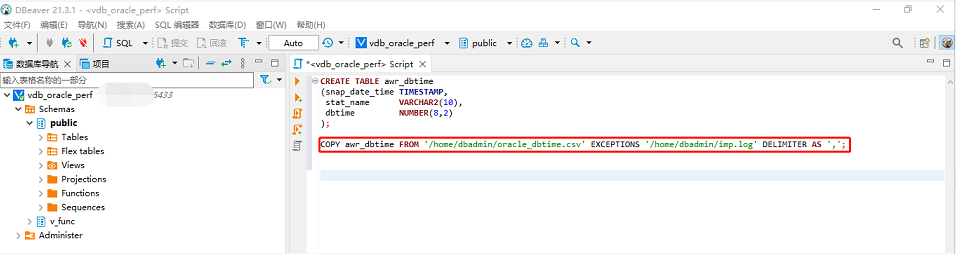

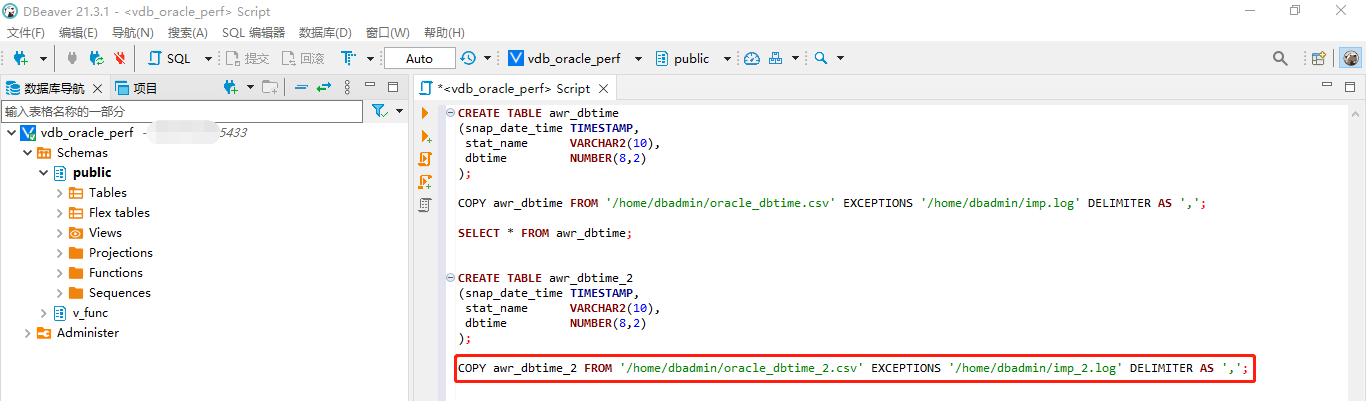

将两个CSV文件分别导入刚创建的两张表中

相应的屏幕截图如下所示:

用Tableau可视化Vertica数据库的表

用Tableau连接Vertica数据库

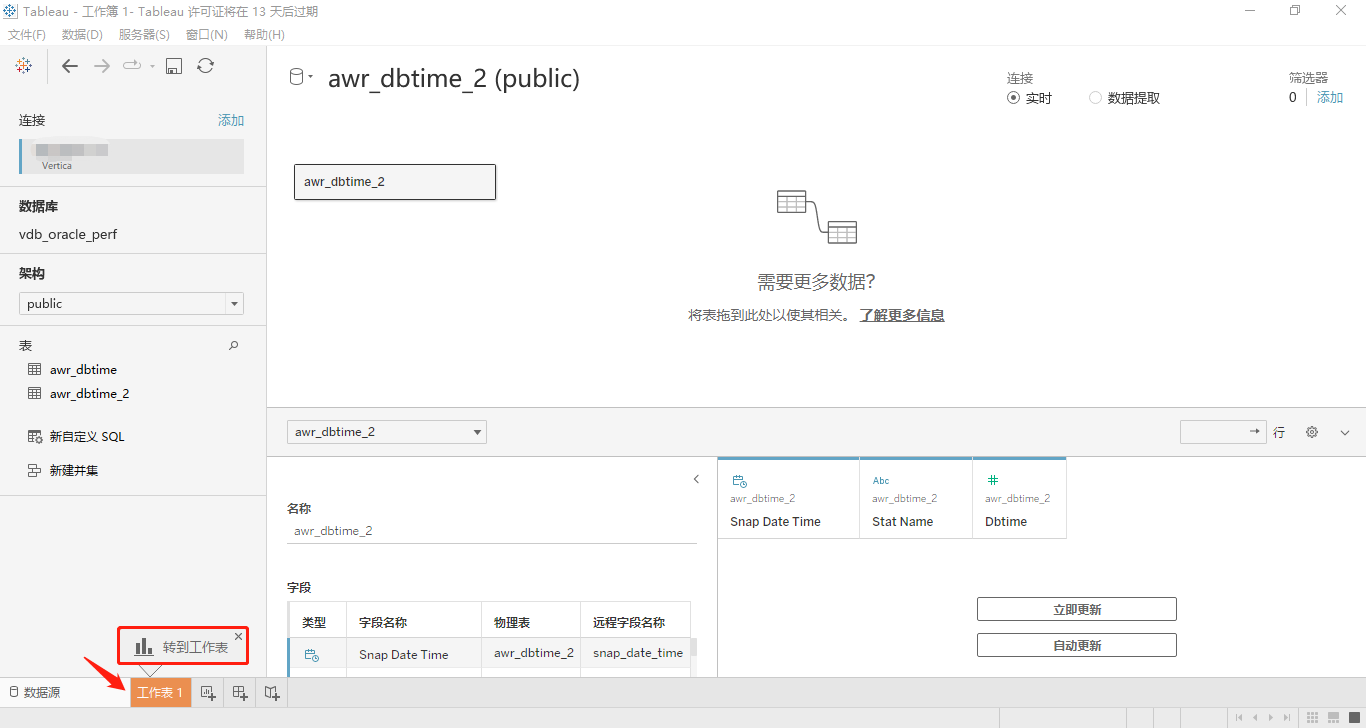

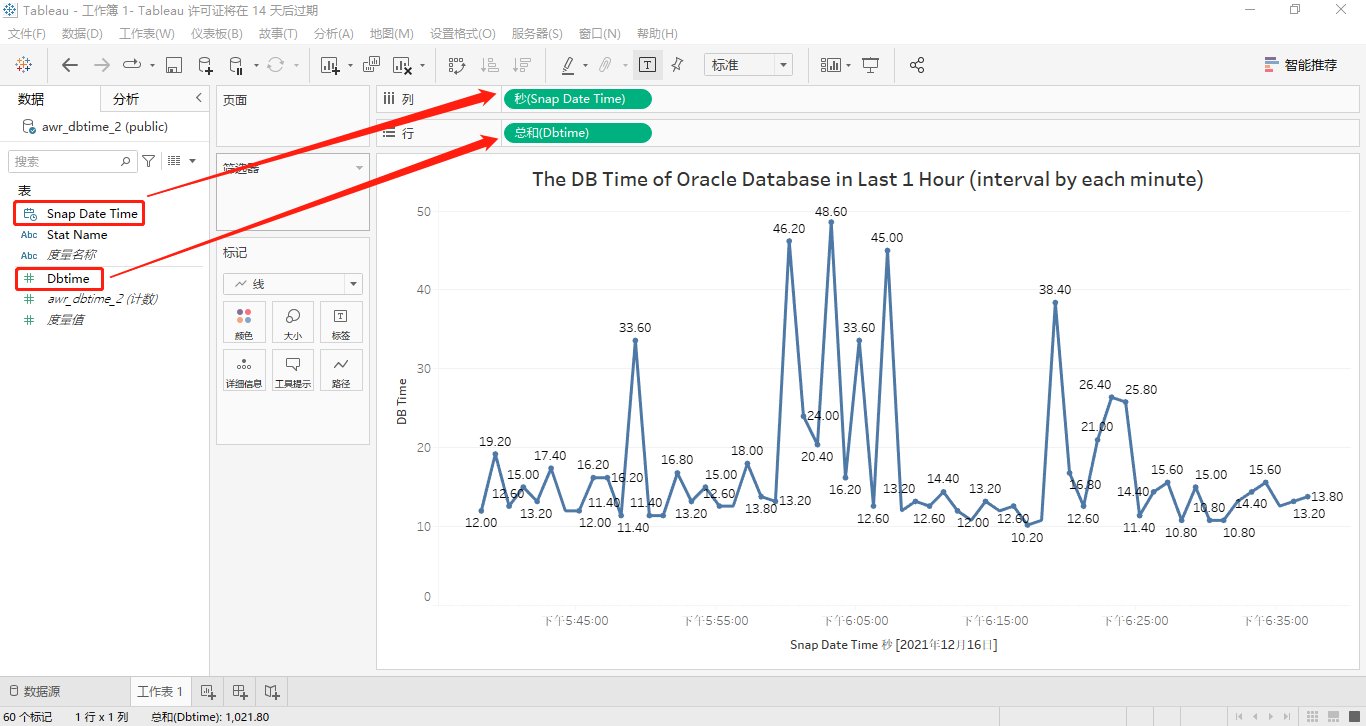

用Tableau可视化表awr_dbtime_2(最近1小时,按每分钟间隔)

在连接成功Vertica数据库以后,首先选择架构(这里翻译得可能有误,应该叫schema)public,然后将表awr_dbtime_2拖动到指定的位置,单击底部的“工作表1”,顺次见如下两图:

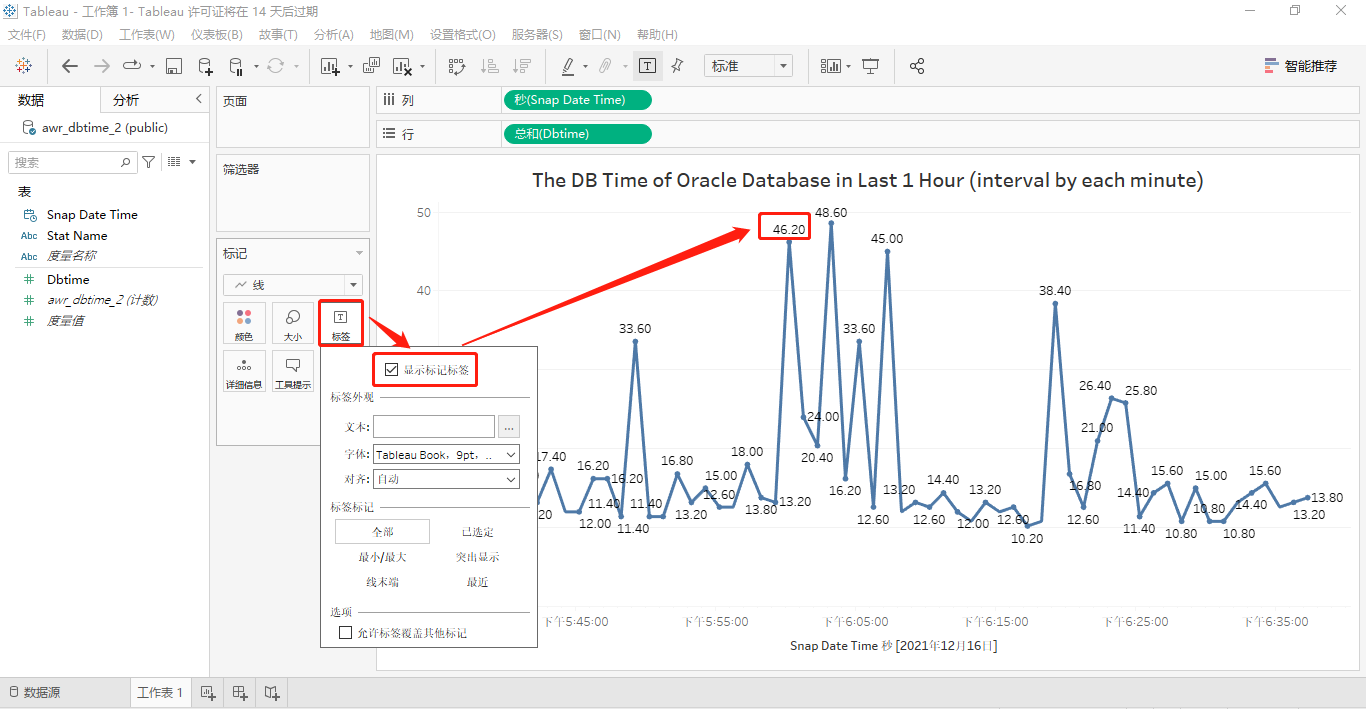

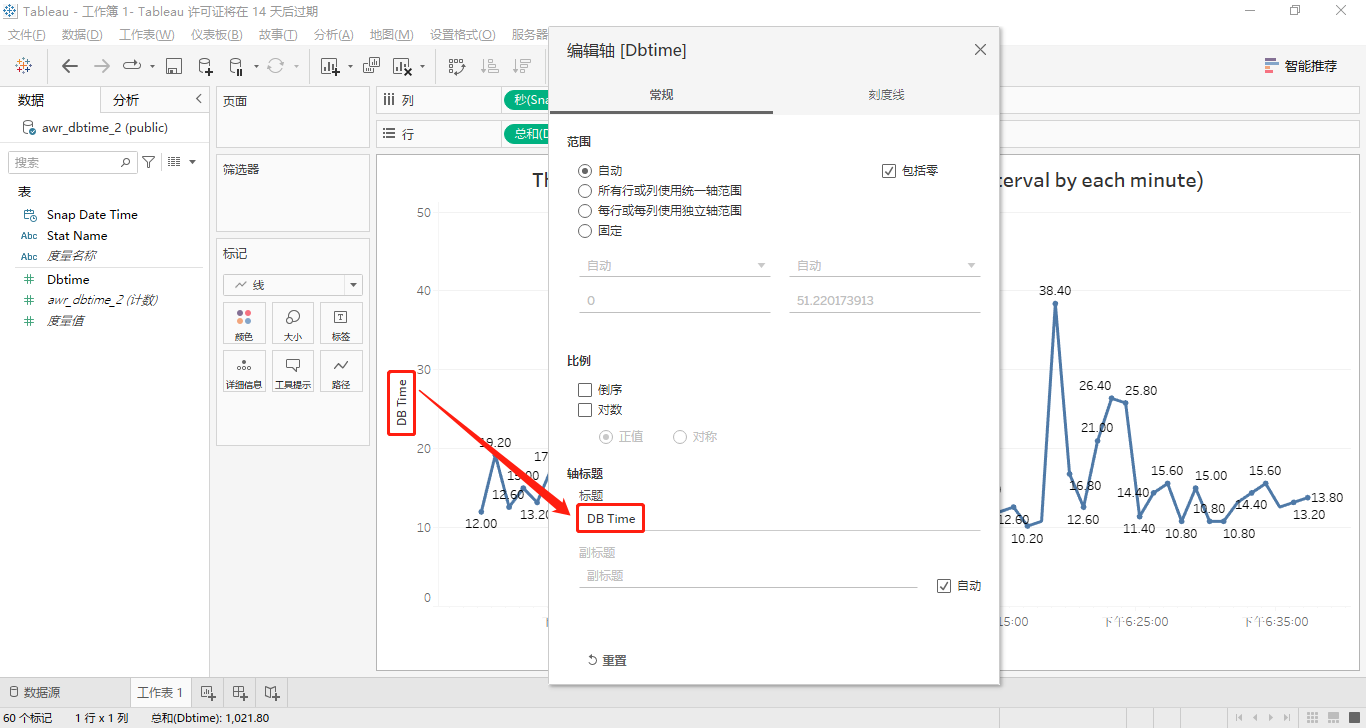

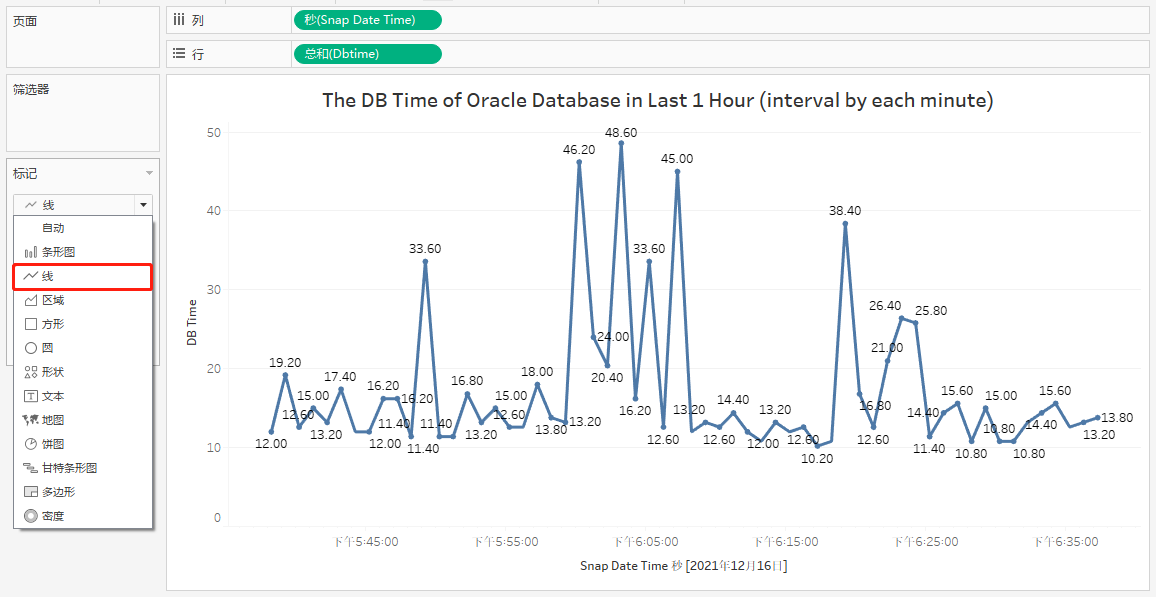

接着,我们按照简单的五个步骤来进行可视化表awr_dbtime_2,这个表里包含按每分钟间隔的最近1小时的DB Time取值。每个步骤的屏幕截图依次如下所示:

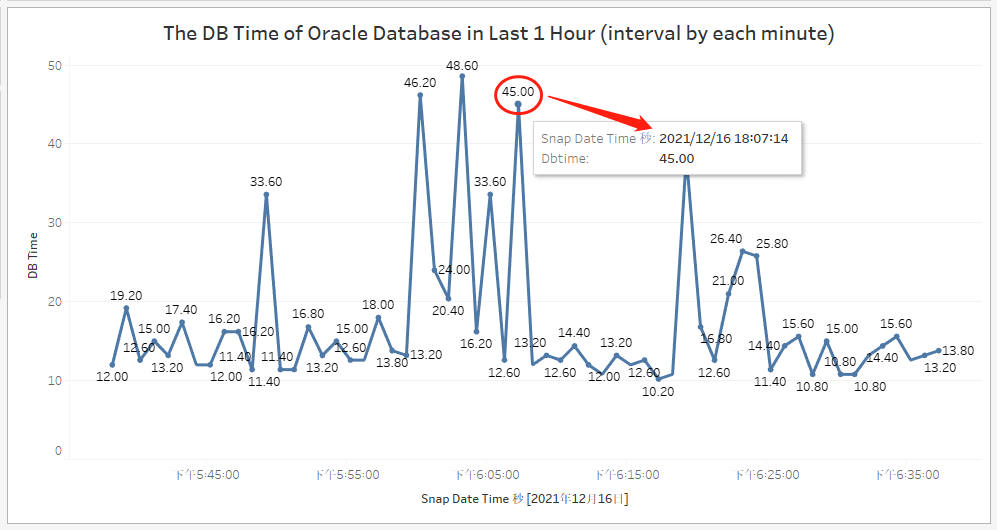

最后,我们用鼠标移动到一个对应的标签上面,它会显示具体的快照时间和DB Time取值,效果如下图:

至此,最近1小时的DB Time(按每分钟间隔)的折线图已经制作完成。另外,Tableau可以将其导出并保存为PDF或PowerPoint格式的文件。

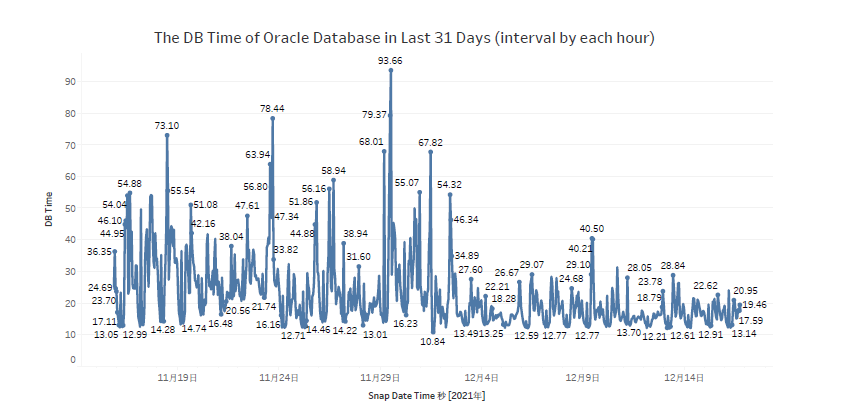

用Tableau可视化表awr_dbtime(最近31天,按每小时间隔)

和前面的可视化表awr_dbtime_2的方法相同,在此省略一些设置步骤,直接附上最终的折线图。

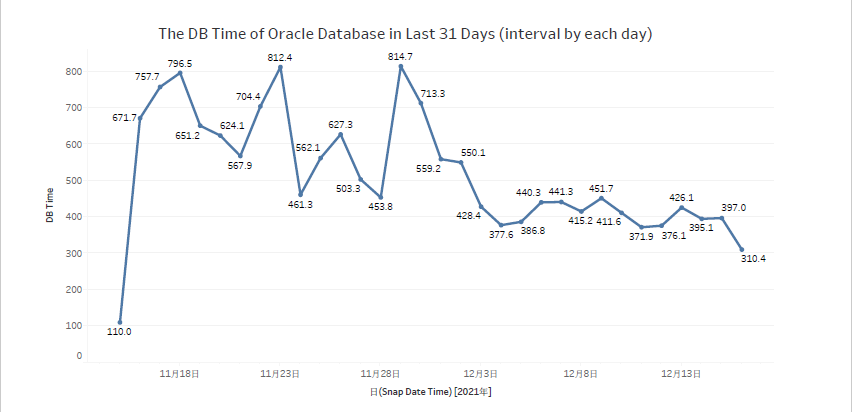

用Tableau可视化表awr_dbtime(最近31天,按每天间隔)

同样的思路和方法,最终的效果图如下所示:

以上就是用Tableau可视化Vertica数据库之“DB Time”篇的全部内容,欢迎朋友们在评论区提出您的宝贵意见或建议,我将认真阅您的反馈信息,并尽快逐一回复,谢谢!

另外,您也可以在我的GitHub上找到如何查询Oracle数据库最近31天的DB Time(按每小时间隔)和最近1小时的DB Time(按每分钟间隔)。

用Tableau可视化Vertica数据库之“LPS&TPS”篇

将Oracle数据库的LPS&TPS查询导入Vertica数据库

将Oracle数据库的LPS&TPS查询保存为CSV文件

这里需要说明一下,LPS和TPS分别是Oracle度量的两个指标,我们使用一个SQL查询即可完成,但是我们需要从三个维度才能更详细地阐述LPS&TPS。这三个维度分别是:最近1小时(按每分钟间隔),最近24小时(按每小时间隔)和最近31天(按每小时间隔)。和上一篇博客文章类似的操作方法,我们在Oracle SQL Developer中以脚本方式查询出LPS&TPS,然后将其保存为CSV文件。由于文件中的数据行过长,所以不便在这里贴出,现已上传到我的GitHub,您可以从这里查看,它们分别是:oracle_lps_tps_in_last_1_hour.csv,oracle_lps_tps_in_last_24_hours.csv和oracle_lps_tps_in_last_31_days.csv。三个维度的SQL代码分别如下所示:

-- Logons Per Sec & User Transaction Per Sec in Last 1 Hour (interval by each minute).

SET FEEDBACK off;

SET SQLFORMAT csv;

SET LINESIZE 200

SET PAGESIZE 200

COLUMN metric_name FORMAT a12

COLUMN snap_date_time FORMAT a20

COLUMN psn FORMAT 999,999.99

ALTER SESSION SET nls_date_format = 'yyyy-mm-dd hh24:mi:ss';

SELECT TO_CHAR(end_time, 'yyyy-mm-dd hh24:mi:ss') snap_date_time -- the group column

, DECODE(metric_name, 'User Transaction Per Sec', 'Transactions', 'Logons Per Sec', 'Logons') metric_name -- the series column

, ROUND(value, 2) psn -- the value column

FROM v$sysmetric_history

WHERE metric_name IN ('User Transaction Per Sec', 'Logons Per Sec')

AND group_id = 2 -- just retrieve the name with "System Metrics Long Duration" in v$metricgroup

-- ORDER BY snap_date_time

-- , metric_name

ORDER BY metric_name

, snap_date_time复制-- Logons Per Sec & User Transaction Per Sec in Last 24 Hours (interval by each hour).

SET FEEDBACK off;

SET SQLFORMAT csv;

SET LINESIZE 200

SET PAGESIZE 200

COLUMN metric_name FORMAT a12

COLUMN snap_date_time FORMAT a20

COLUMN psn FORMAT 999,999.99

ALTER SESSION SET nls_date_format = 'yyyy-mm-dd hh24:mi:ss';

SELECT TO_CHAR(end_time, 'yyyy-mm-dd hh24:mi:ss') snap_date_time -- the group column

, DECODE(metric_name, 'User Transaction Per Sec', 'Transactions', 'Logons Per Sec', 'Logons') metric_name -- the series column

, ROUND(average, 2) psn -- the value column

FROM dba_hist_sysmetric_summary

WHERE metric_name IN ('User Transaction Per Sec', 'Logons Per Sec')

AND end_time >= SYSDATE - 1

-- ORDER BY snap_date_time

-- , metric_name

ORDER BY metric_name

, snap_date_time

;复制-- Logons Per Sec & User Transaction Per Sec in Last 31 Days (interval by each hour).

SET FEEDBACK off;

SET SQLFORMAT csv;

SET LINESIZE 200

SET PAGESIZE 200

COLUMN metric_name FORMAT a12

COLUMN snap_date_time FORMAT a20

COLUMN psn FORMAT 999,999.99

ALTER SESSION SET nls_date_format = 'yyyy-mm-dd hh24:mi:ss';

SELECT TO_CHAR(end_time, 'yyyy-mm-dd hh24:mi:ss') snap_date_time -- the group column

, DECODE(metric_name, 'User Transaction Per Sec', 'Transactions', 'Logons Per Sec', 'Logons') metric_name -- the series column

, ROUND(average, 2) psn -- the value column

FROM dba_hist_sysmetric_summary

WHERE metric_name IN ('User Transaction Per Sec', 'Logons Per Sec')

AND end_time >= SYSDATE - 30

-- ORDER BY snap_date_time

-- , metric_name

ORDER BY metric_name

, snap_date_time

;复制将所有CSV文件上传到Vertica服务器的/home/dbadmin目录下

这里,省略了上传的过程和一些授权相关的步骤,最后的上传结果详见“<<==”所指向的三个CSV文件。

[dbadmin@test ~]$ ls -lrht

total 112K

drwxr-xr-x 5 dbadmin verticadba 134 Dec 15 14:06 vdb_oracle_perf

-rw-r--r-- 1 dbadmin verticadba 27K Dec 16 18:37 oracle_dbtime.csv

-rw-r--r-- 1 dbadmin verticadba 1.9K Dec 16 18:37 oracle_dbtime_2.csv

-rw-rw-r-- 1 dbadmin verticadba 390 Dec 16 18:44 imp.log

-rw-rw-r-- 1 dbadmin verticadba 393 Dec 16 18:45 imp_2.log

-rw-r--r-- 1 dbadmin verticadba 4.7K Dec 20 09:42 oracle_lps_tps_in_last_1_hour.csv <<==

-rw-r--r-- 1 dbadmin verticadba 2.0K Dec 20 09:45 oracle_lps_tps_in_last_24_hours.csv <<==

-rw-r--r-- 1 dbadmin verticadba 57K Dec 20 09:47 oracle_lps_tps_in_last_31_days.csv <<==复制用vsql客户端命令连接到Vertica数据库

这次我们不用DBeaver客户端工具去连接Vertica数据库了,哈哈!因为在Vertica中自带了一个客户端,vsql的Linux命令行工具(类似于Oracle的SQL*Plus),而且使用它在命令行接口上更加方便和快捷。连接过程详见下面的操作步骤:

[root@test ~]# su - dbadmin

[dbadmin@test ~]$ /opt/vertica/bin/vsql -h 127.0.0.1 vdb_oracle_perf dbadmin

Password:

Welcome to vsql, the Vertica Analytic Database interactive terminal.

Type: \h or \? for help with vsql commands

\g or terminate with semicolon to execute query

\q to quit

vdb_oracle_perf=> 复制在Vertica数据库中创建相关的LPS&TPS表

在vsql下分别创建三个表,表名依次为:oracle_lps_tps_in_last_1_hour,oracle_lps_tps_in_last_24_hours和oracle_lps_tps_in_last_31_days。其操作步骤分别如下所示:

vdb_oracle_perf=> CREATE TABLE public.oracle_lps_tps_in_last_1_hour

vdb_oracle_perf=> (snap_date_time TIMESTAMP,

vdb_oracle_perf=> metric_name VARCHAR2(20),

vdb_oracle_perf=> psn NUMBER(8,2)

vdb_oracle_perf=> );

CREATE TABLE

vdb_oracle_perf=>

vdb_oracle_perf=> CREATE TABLE public.oracle_lps_tps_in_last_24_hours

vdb_oracle_perf=> (snap_date_time TIMESTAMP,

vdb_oracle_perf=> metric_name VARCHAR2(20),

vdb_oracle_perf=> psn NUMBER(8,2)

vdb_oracle_perf=> );

CREATE TABLE

vdb_oracle_perf=>

vdb_oracle_perf=> CREATE TABLE public.oracle_lps_tps_in_last_31_days

vdb_oracle_perf=> (snap_date_time TIMESTAMP,

vdb_oracle_perf=> metric_name VARCHAR2(20),

vdb_oracle_perf=> psn NUMBER(8,2)

vdb_oracle_perf=> );

CREATE TABLE

vdb_oracle_perf=> 复制使用COPY命令将CSV文件导入刚创建的表中

导入过程请看下面的具体步骤:

vdb_oracle_perf=>

vdb_oracle_perf=> COPY public.oracle_lps_tps_in_last_1_hour FROM '/home/dbadmin/oracle_lps_tps_in_last_1_hour.csv' EXCEPTIONS '/home/dbadmin/imp_lps_tps_1.log' DELIMITER AS ',';

NOTICE 7850: In a multi-threaded load, rejected record data may be written to additional files

HINT: Exceptions may be written to files [/home/dbadmin/imp_lps_tps_1.log], [/home/dbadmin/imp_lps_tps_1.log.1], etc

Rows Loaded

-------------

122

(1 row)

vdb_oracle_perf=> COPY public.oracle_lps_tps_in_last_24_hours FROM '/home/dbadmin/oracle_lps_tps_in_last_24_hours.csv' EXCEPTIONS '/home/dbadmin/imp_lps_tps_2.log' DELIMITER AS ',';

NOTICE 7850: In a multi-threaded load, rejected record data may be written to additional files

HINT: Exceptions may be written to files [/home/dbadmin/imp_lps_tps_2.log], [/home/dbadmin/imp_lps_tps_2.log.1], etc

Rows Loaded

-------------

48

(1 row)

vdb_oracle_perf=> COPY public.oracle_lps_tps_in_last_31_days FROM '/home/dbadmin/oracle_lps_tps_in_last_31_days.csv' EXCEPTIONS '/home/dbadmin/imp_lps_tps_3.log' DELIMITER AS ',';

NOTICE 7850: In a multi-threaded load, rejected record data may be written to additional files

HINT: Exceptions may be written to files [/home/dbadmin/imp_lps_tps_3.log], [/home/dbadmin/imp_lps_tps_3.log.1], etc

Rows Loaded

-------------

1440

(1 row)

vdb_oracle_perf=> 复制用Tableau可视化Vertica数据库的表

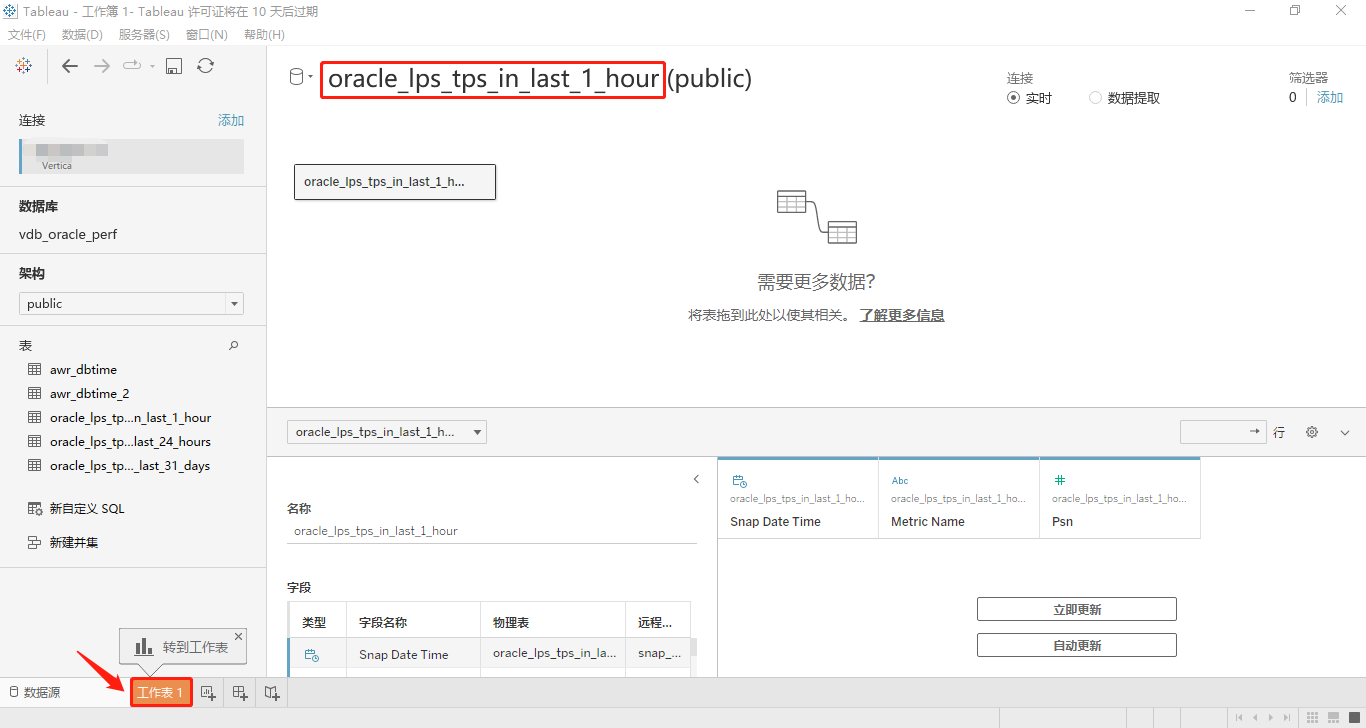

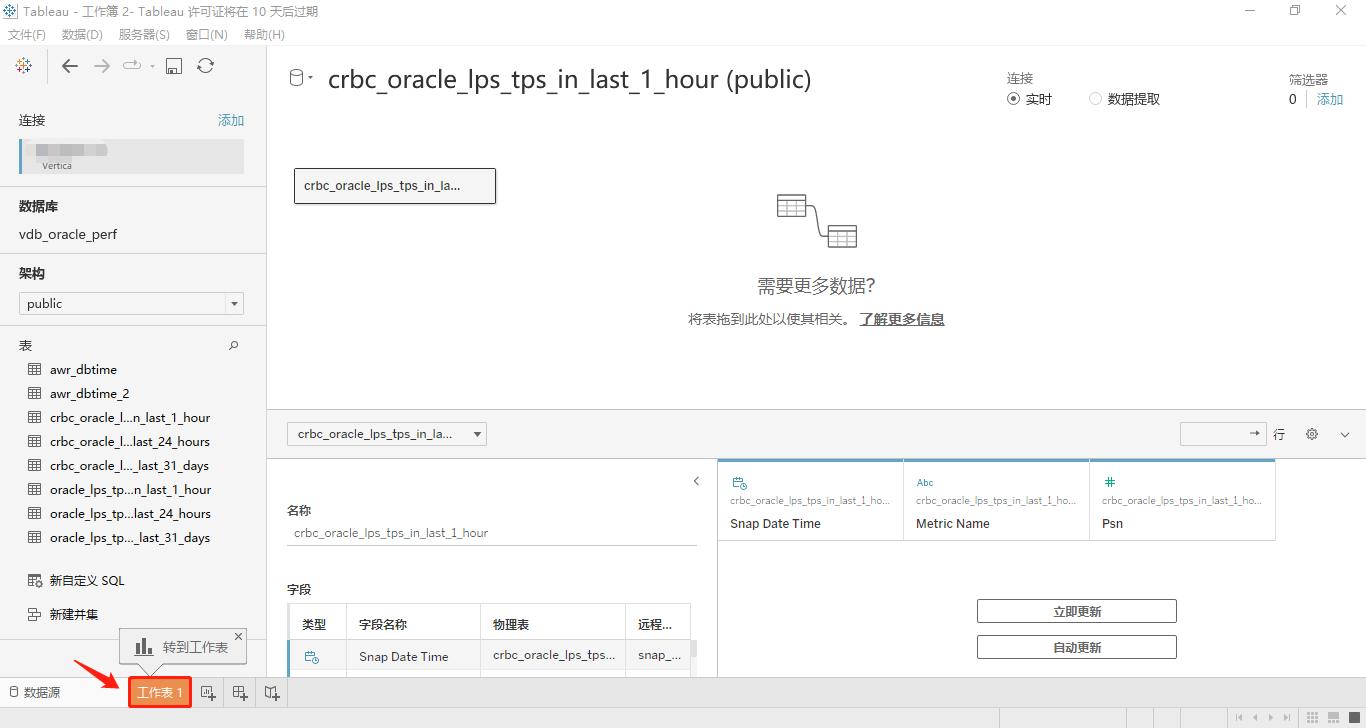

在Tableau中连接Vertica数据库并选择schema为public,将表oracle_lps_tps_in_last_1_hour用鼠标拖动到指定位置,然后转到工作表,准备下一步的可视化操作。对应的两个屏幕截图分别是:

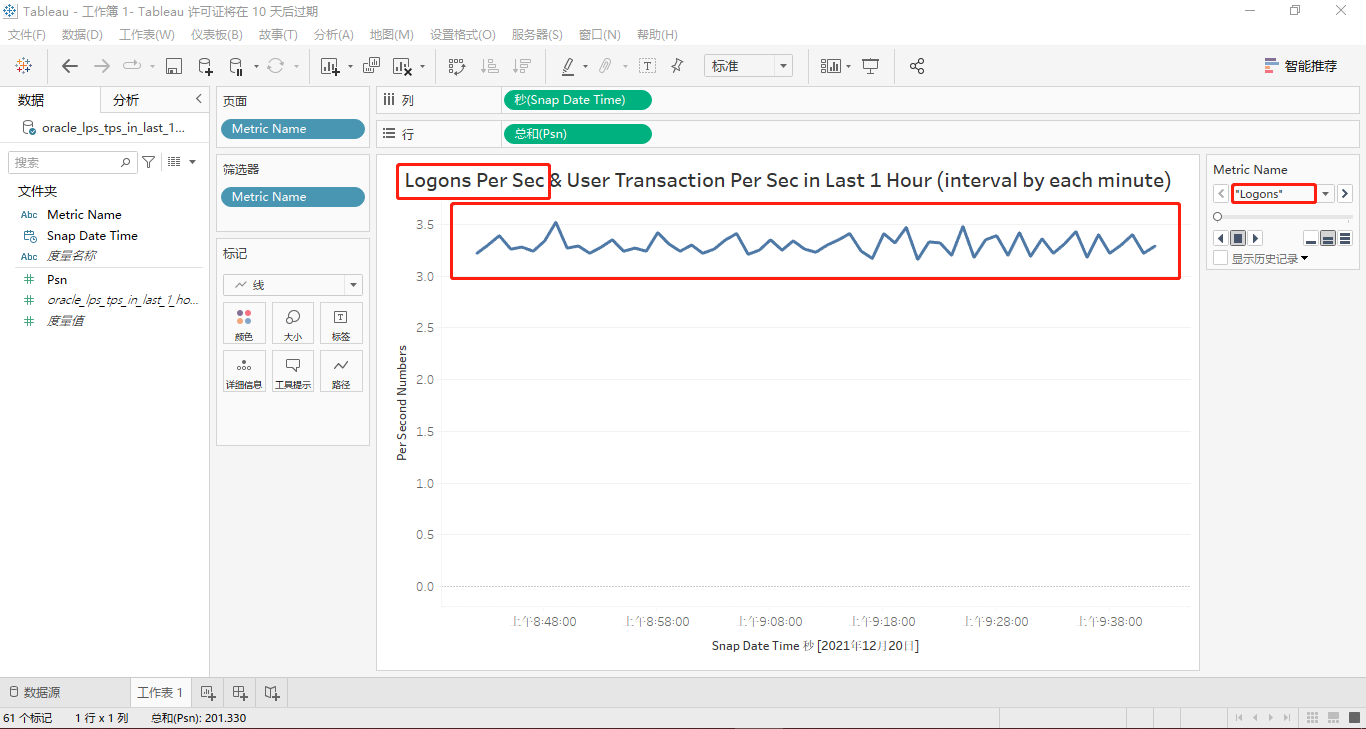

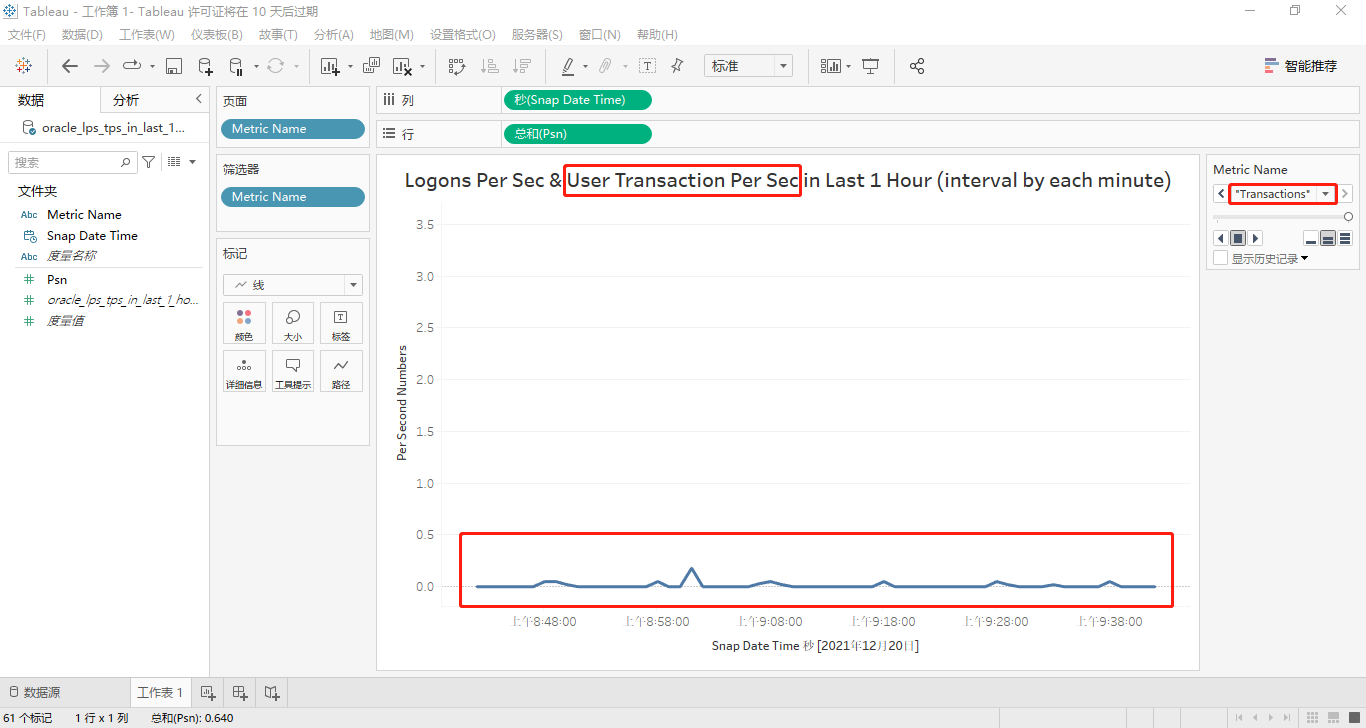

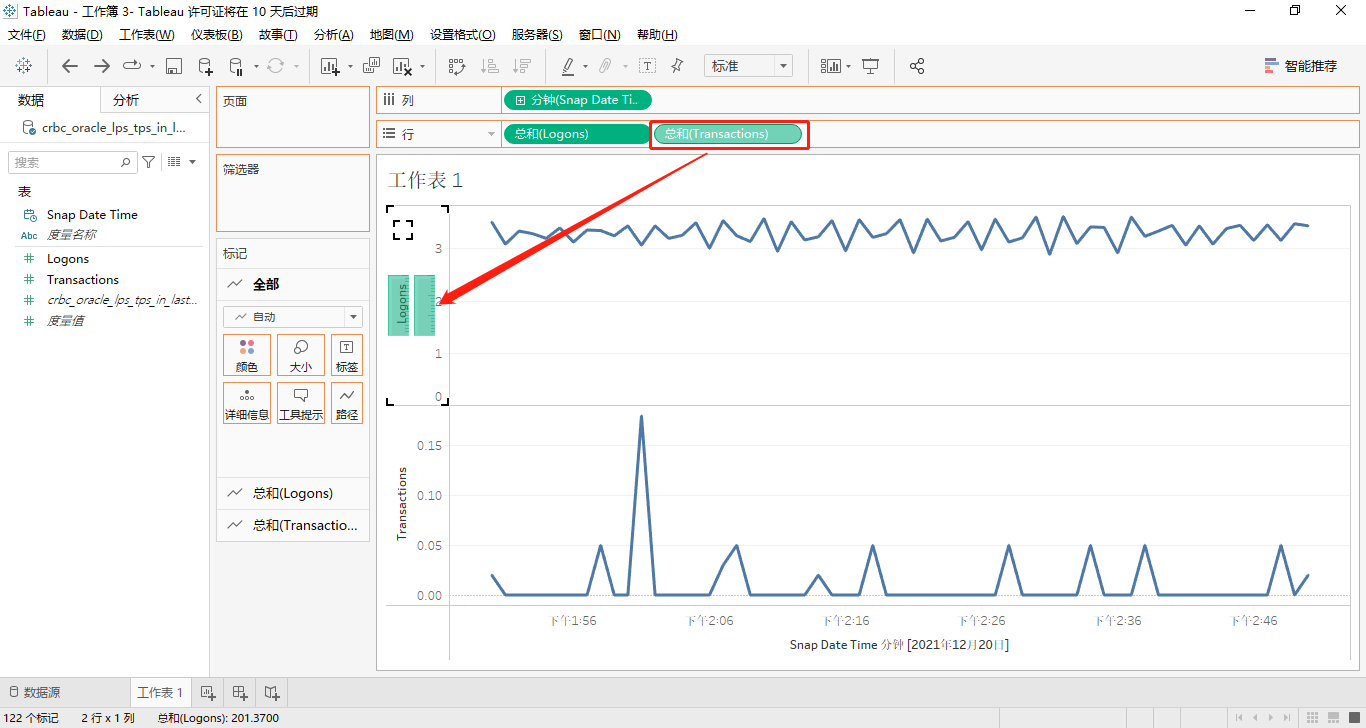

在工作表区域上,我们把度量名称Snap Date Time用鼠标拖动到标签名为“列”的标签框里(默认是年,将其展开到秒),把度量值Psn拖动到标签名为“行”的标签框中,再将度量名称Metric Name拖动到标签名为“页面”的标签里。与此同时,我们会看到在整个页面的右边区域上新增了一个Metric Name的标签。不幸的是,这两个度量“Logons”和“Transactions”不能同时出现在一个“折线图”中。我们需要在标签Metric Name上选择Logons或Transactions才能出现与其对应的折线图。详见如下两图的红框标明位置:

回头想想,造成这个问题的原因是表oracle_lps_tps_in_last_1_hour的列metric name的值Logons和Transactions在同一列,也就是其对应的度量值psn均在同一列,所以这两个度量各称无法出现在同一个折线图上。因此我们需要把metric name的两个值所在的行转变为两个列,在这两个列中分别保存各自的度量值psn,这个需求我们称之为行转列操作。接着在Tableau中的Vertica数据库表oracle_lps_tps_in_last_1_hour才会有两个不同的度量。到这里,我们就暂先放弃了对表oracle_lps_tps_in_last_24_hours和oracle_lps_tps_in_last_31_days的可视化操作。

对原始SQL进行行转列改造,再重复执行前两步

1. 三个维度的行转列部分SQL代码如下所示(由于代码过多,这里只贴出行转列的关键部分,其余内容和前面展示的SQL代码完全一致):

-- Converting rows TO columns Based on Logons Per Sec & User Transaction Per Sec in Last 1 Hour (interval by each minute).

......

WITH lps_tps_in_last_1_hour AS

(

......

)

SELECT *

FROM lps_tps_in_last_1_hour

PIVOT ( MAX(psn)

FOR metric_name IN

( 'Logons' AS "Logons"

, 'Transactions' AS "Transactions"

)

)

ORDER BY snap_date_time

;复制-- Converting rows TO columns Based on Logons Per Sec & User Transaction Per Sec in Last 24 Hours (interval by each hour).

......

WITH lps_tps_in_last_24_hours AS

(

......

)

SELECT *

FROM lps_tps_in_last_24_hours

PIVOT ( MAX(psn)

FOR metric_name IN

( 'Logons' AS "Logons"

, 'Transactions' AS "Transactions"

)

)

ORDER BY snap_date_time

;复制-- Converting rows TO columsn Based on Logons Per Sec & User Transaction Per Sec in Last 31 Days (interval by each hour).

......

WITH lps_tps_in_last_31_days AS

(

......

)

SELECT *

FROM lps_tps_in_last_31_days

PIVOT ( MAX(psn)

FOR metric_name IN

( 'Logons' AS "Logons"

, 'Transactions' AS "Transactions"

)

)

ORDER BY snap_date_time

;复制2. 同样,将上面的SQL代码分别在Oracle SQL Developer里以脚本方式运行并保存为CSV文件,您可以从这里查看,它们分别是:crbc_oracle_lps_tps_in_last_1_hour.csv,crbc_oracle_lps_tps_in_last_24_hours.csv和crbc_oracle_lps_tps_in_last_31_days.csv。

3. 将三个CSV文件上传到Vertica数据库服务器的/home/dbadmin/目录下,见“<<==”标明的位置。

[dbadmin@test ~]$ ls -lrht

total 156K

drwxr-xr-x 5 dbadmin verticadba 134 Dec 15 14:06 vdb_oracle_perf

-rw-r--r-- 1 dbadmin verticadba 27K Dec 16 18:37 oracle_dbtime.csv

-rw-r--r-- 1 dbadmin verticadba 1.9K Dec 16 18:37 oracle_dbtime_2.csv

-rw-rw-r-- 1 dbadmin verticadba 390 Dec 16 18:44 imp.log

-rw-rw-r-- 1 dbadmin verticadba 393 Dec 16 18:45 imp_2.log

-rw-r--r-- 1 dbadmin verticadba 4.7K Dec 20 09:42 oracle_lps_tps_in_last_1_hour.csv

-rw-r--r-- 1 dbadmin verticadba 2.0K Dec 20 09:45 oracle_lps_tps_in_last_24_hours.csv

-rw-r--r-- 1 dbadmin verticadba 57K Dec 20 09:47 oracle_lps_tps_in_last_31_days.csv

-rw-rw-r-- 1 dbadmin verticadba 425 Dec 20 10:06 imp_lps_tps_1.log

-rw-rw-r-- 1 dbadmin verticadba 428 Dec 20 10:06 imp_lps_tps_2.log

-rw-rw-r-- 1 dbadmin verticadba 428 Dec 20 10:07 imp_lps_tps_3.log

-rw-r--r-- 1 dbadmin verticadba 1.9K Dec 20 14:51 crbc_oracle_lps_tps_in_last_1_hour.csv <<==

-rw-r--r-- 1 dbadmin verticadba 831 Dec 20 14:52 crbc_oracle_lps_tps_in_last_24_hours.csv <<==

-rw-r--r-- 1 dbadmin verticadba 24K Dec 20 14:53 crbc_oracle_lps_tps_in_last_31_days.csv <<==复制4. 用vsql连接到Vertica数据库创建新表(表名在原表的基础上增加crbc前缀)并用COPY命令导入CSV文件中的数据。

vdb_oracle_perf=>

vdb_oracle_perf=> CREATE TABLE public.crbc_oracle_lps_tps_in_last_1_hour

vdb_oracle_perf=> (snap_date_time TIMESTAMP,

vdb_oracle_perf=> logons NUMBER(8,2),

vdb_oracle_perf=> transactions NUMBER(8,2)

vdb_oracle_perf=> );

CREATE TABLE

vdb_oracle_perf=>

vdb_oracle_perf=> CREATE TABLE public.crbc_oracle_lps_tps_in_last_24_hours

vdb_oracle_perf=> (snap_date_time TIMESTAMP,

vdb_oracle_perf=> logons NUMBER(8,2),

vdb_oracle_perf=> transactions NUMBER(8,2)

vdb_oracle_perf=> );

CREATE TABLE

vdb_oracle_perf=>

vdb_oracle_perf=> CREATE TABLE public.crbc_oracle_lps_tps_in_last_31_days

vdb_oracle_perf=> (snap_date_time TIMESTAMP,

vdb_oracle_perf=> logons NUMBER(8,2),

vdb_oracle_perf=> transactions NUMBER(8,2)

vdb_oracle_perf=> );

CREATE TABLE

vdb_oracle_perf=>

vdb_oracle_perf=> COPY public.crbc_oracle_lps_tps_in_last_1_hour FROM '/home/dbadmin/crbc_oracle_lps_tps_in_last_1_hour.csv' EXCEPTIONS '/home/dbadmin/imp_crbc_lps_tps_1.log' DELIMITER AS ',';

NOTICE 7850: In a multi-threaded load, rejected record data may be written to additional files

HINT: Exceptions may be written to files [/home/dbadmin/imp_crbc_lps_tps_1.log], [/home/dbadmin/imp_crbc_lps_tps_1.log.1], etc

Rows Loaded

-------------

61

(1 row)

vdb_oracle_perf=>

vdb_oracle_perf=> COPY public.crbc_oracle_lps_tps_in_last_24_hours FROM '/home/dbadmin/crbc_oracle_lps_tps_in_last_24_hours.csv' EXCEPTIONS '/home/dbadmin/imp_crbc_lps_tps_2.log' DELIMITER AS ',';

NOTICE 7850: In a multi-threaded load, rejected record data may be written to additional files

HINT: Exceptions may be written to files [/home/dbadmin/imp_crbc_lps_tps_2.log], [/home/dbadmin/imp_crbc_lps_tps_2.log.1], etc

Rows Loaded

-------------

24

(1 row)

vdb_oracle_perf=>

vdb_oracle_perf=> COPY public.crbc_oracle_lps_tps_in_last_31_days FROM '/home/dbadmin/crbc_oracle_lps_tps_in_last_31_days.csv' EXCEPTIONS '/home/dbadmin/imp_crbc_lps_tps_3.log' DELIMITER AS ',';

NOTICE 7850: In a multi-threaded load, rejected record data may be written to additional files

HINT: Exceptions may be written to files [/home/dbadmin/imp_crbc_lps_tps_3.log], [/home/dbadmin/imp_crbc_lps_tps_3.log.1], etc

Rows Loaded

-------------

720

(1 row)

vdb_oracle_perf=> 复制5. 在Tableau中连接Vertica数据库并可视化新表

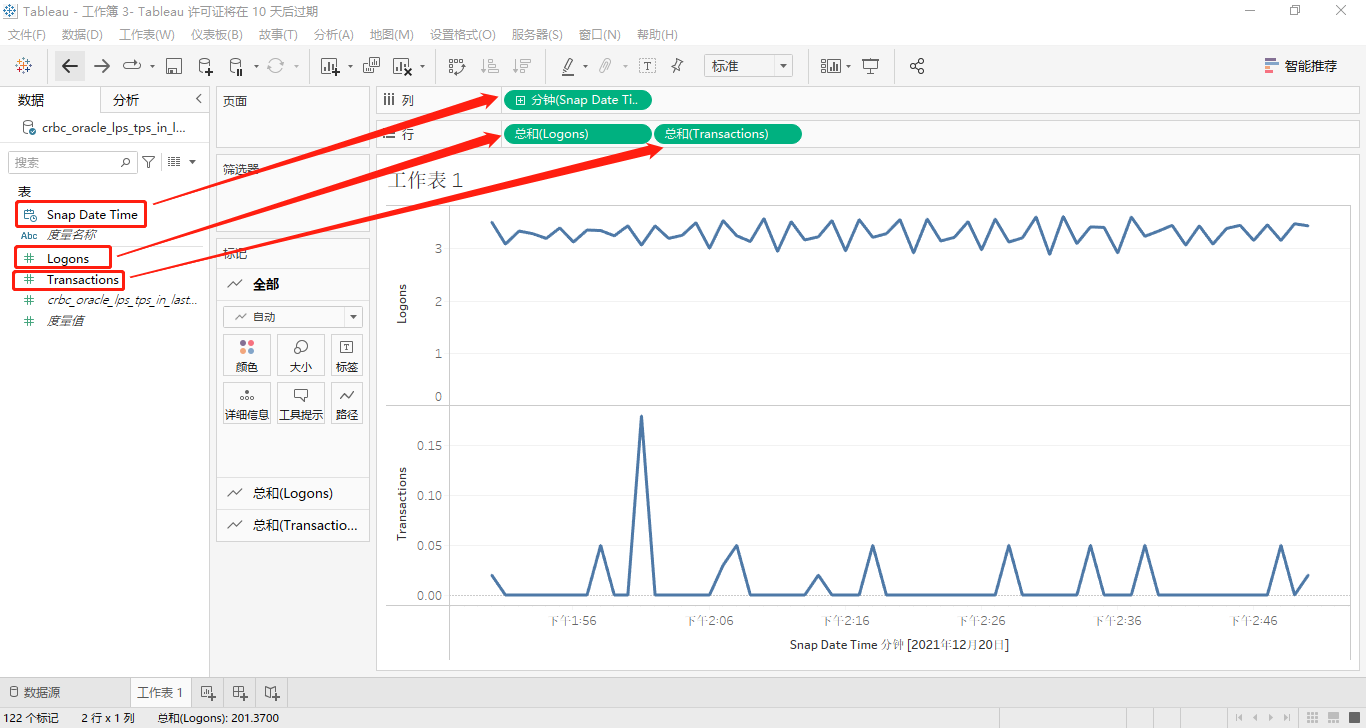

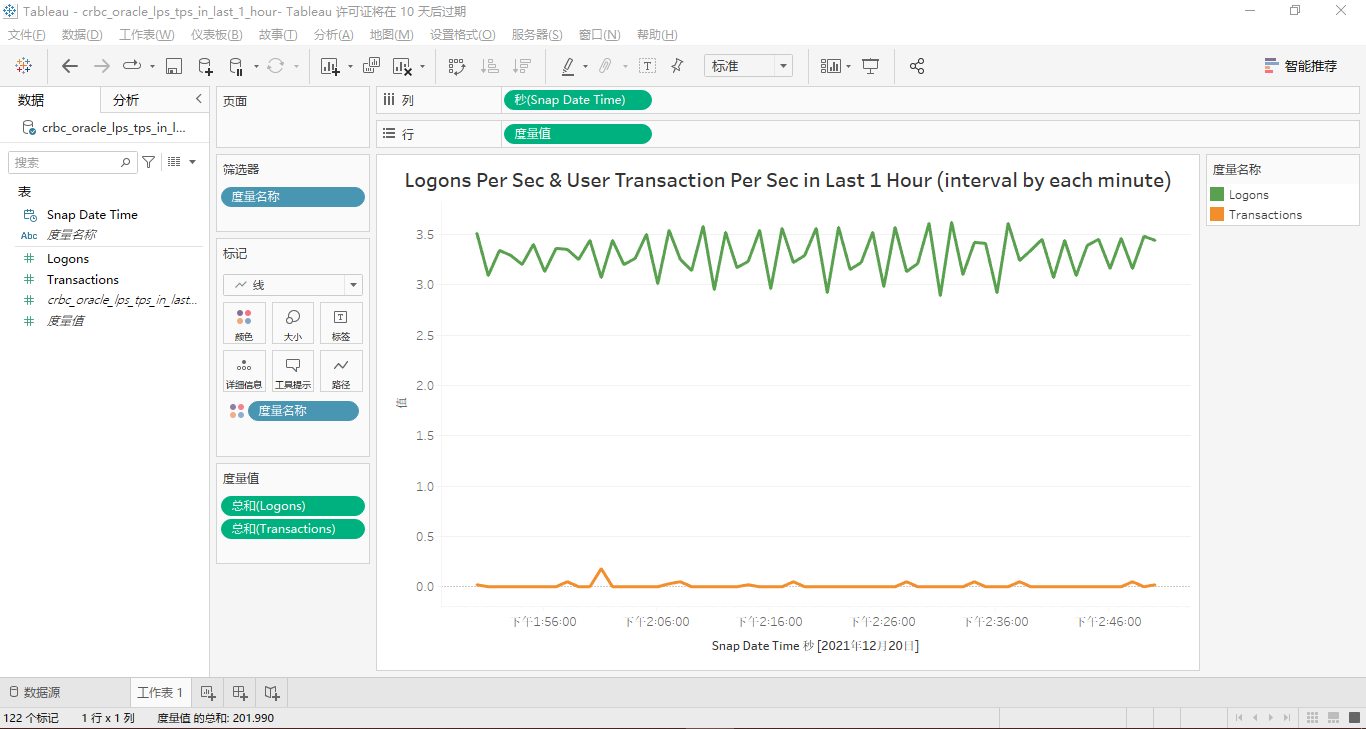

这次,我们终于可以将两个度量Logons和Transactions在同一个折线图中展示出来了,其中对新表crbc_oracle_lps_tps_in_last_1_hour的可视化操作依次见如下五个屏幕截图:

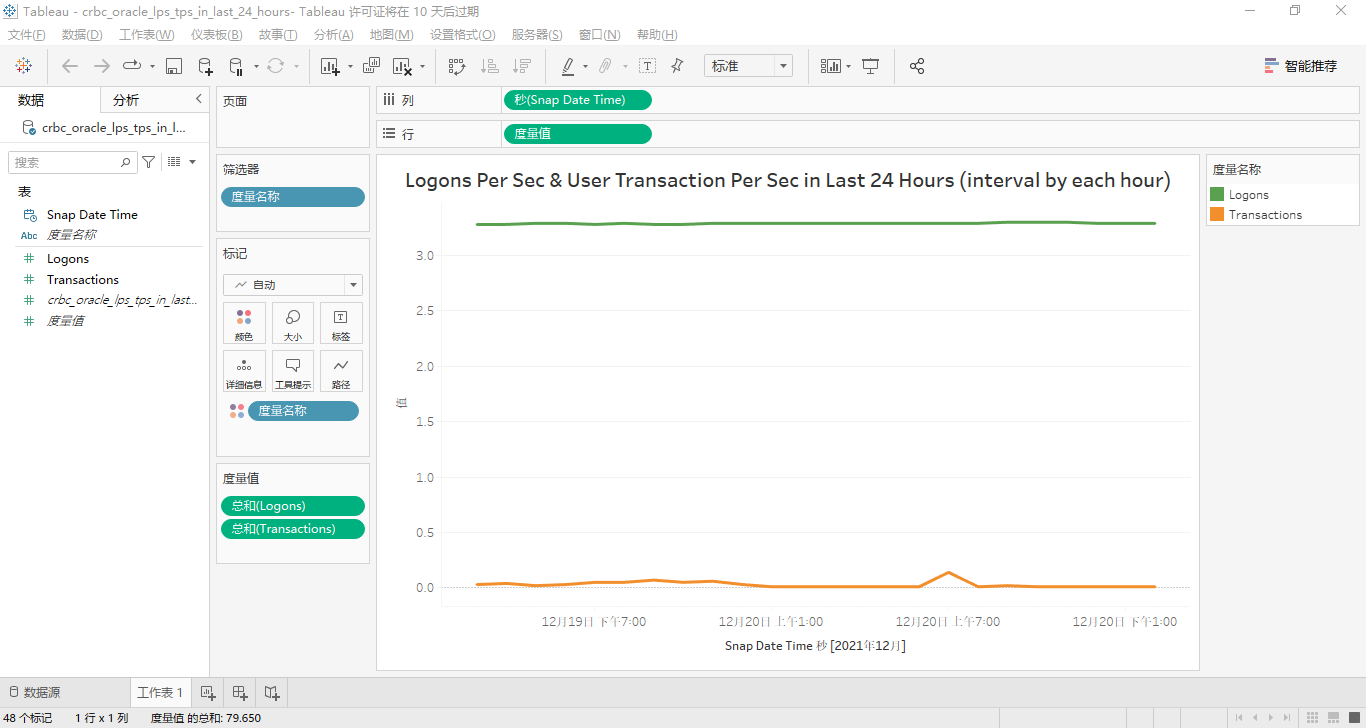

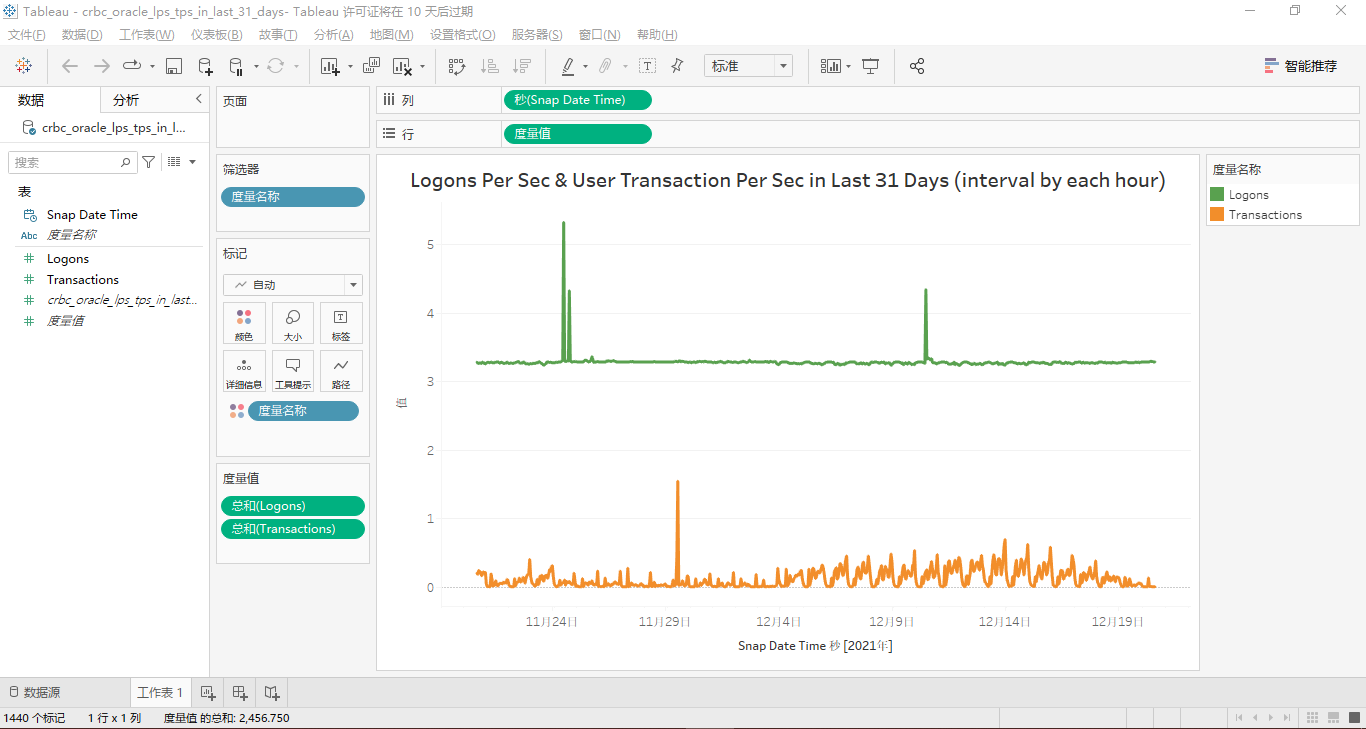

表crbc_oracle_lps_tps_in_last_24_hours和crbc_oracle_lps_tps_in_last_31_days的可视化结果分别如下所示:

以上就是用Tableau可视化Vertica数据库之“LPS&TPS”篇的所有内容。另外,您也可以在acquire_lps_union_tps.sql的第32-64行、第66-98行、第100-132行、第217-238行、第344-365行和第367-388行找到我前面提到的所有SQL源代码。欢迎业界各位朋友在文章底部的评论区提出您的反馈意见。

用Tableau可视化Vertica数据库之“MBPS&IOPS By Function”篇

将Oracle数据库的MBPS&IOPS By Function查询导入Vertica数据库

将Oracle数据库的MBPS&IOPS By Function查询保存为CSV文件

和前两篇文章的方法相同,我们在SQL Develooper中分别以脚本方式运行下面的四个SQL查询并将其保存为CSV文件。具体的操作步骤有些繁琐,所以这里只贴出SQL代码,依次(首先,最近1分钟和最近1小时的MBPS;其次,最近1分钟和最近1小时的IOPS)如下所示:

-- Converting rows to columns Based on I/O Megabytes per Second in Last 1 Minute.

-- Vertical Axis Name: MB Per Sec

SET FEEDBACK off;

SET SQLFORMAT csv;

SET LINESIZE 200

SET PAGESIZE 10

COLUMN sample_time FORMAT a11

COLUMN function_name FORMAT a18

COLUMN io_mbps FORMAT 999,999,999.999

ALTER SESSION SET nls_date_format = 'yyyy-mm-dd hh24:mi:ss';

WITH ifm AS

(

SELECT TO_CHAR(end_time, 'yyyy-mm-dd hh24:mi:ss') sample_time

, function_name

, ROUND((small_read_mbps+small_write_mbps+large_read_mbps+large_write_mbps), 3) io_mbps

FROM v$iofuncmetric

)

SELECT * FROM ifm

PIVOT ( MAX(io_mbps)

FOR function_name IN

( 'Buffer Cache Reads' AS "Buffer Cache Reads"

, 'Direct Reads' AS "Direct Reads"

, 'Direct Writes' AS "Direct Writes"

, 'DBWR' AS "DBWR"

, 'LGWR' AS "LGWR"

, 'ARCH' AS "ARCH"

, 'RMAN' AS "RMAN"

, 'Recovery' AS "Recovery"

, 'Data Pump' AS "Data Pump"

, 'Streams AQ' AS "Streams AQ"

, 'XDB' AS "XDB"

, 'Others' AS "Others"

, 'Archive Manager' AS "Archive Manager"

, 'Smart Scan' AS "Smart Scan"

)

)

ORDER BY sample_time

;复制-- Converting rows to columns Based on I/O Megabytes per Second in Last 1 Hour (interval by each minute).

-- Vertical Axis Name: MB Per Sec

SET FEEDBACK off;

SET SQLFORMAT csv;

SET LINESIZE 200

SET PAGESIZE 80

COLUMN sample_time FORMAT a11

COLUMN function_name FORMAT a18

COLUMN io_mbps FORMAT 999,999,999.999

ALTER SESSION SET nls_date_format = 'yyyy-mm-dd hh24:mi:ss';

WITH ifmh AS

(

SELECT TO_CHAR(end_time, 'yyyy-mm-dd hh24:mi:ss') sample_time

, function_name

, ROUND((small_read_mbps+small_write_mbps+large_read_mbps+large_write_mbps), 3) io_mbps

FROM v$iofuncmetric_history

)

SELECT * FROM ifmh

PIVOT ( MAX(io_mbps)

FOR function_name IN

( 'Buffer Cache Reads' AS "Buffer Cache Reads"

, 'Direct Reads' AS "Direct Reads"

, 'Direct Writes' AS "Direct Writes"

, 'DBWR' AS "DBWR"

, 'LGWR' AS "LGWR"

, 'ARCH' AS "ARCH"

, 'RMAN' AS "RMAN"

, 'Recovery' AS "Recovery"

, 'Data Pump' AS "Data Pump"

, 'Streams AQ' AS "Streams AQ"

, 'XDB' AS "XDB"

, 'Others' AS "Others"

, 'Archive Manager' AS "Archive Manager"

, 'Smart Scan' AS "Smart Scan"

)

)

ORDER BY sample_time

;复制-- Converting rows to columns Based on I/O Requests per Second in Last 1 Minute.

-- Horizontal Axis Name: I/O Per Sec

SET FEEDBACK off;

SET SQLFORMAT csv;

SET LINESIZE 200

SET PAGESIZE 10

COLUMN sample_time FORMAT a11

COLUMN function_name FORMAT a18

COLUMN iops FORMAT 999,999,999.999

ALTER SESSION SET nls_date_format = 'yyyy-mm-dd hh24:mi:ss';

WITH ifm AS

(

SELECT TO_CHAR(end_time, 'yyyy-mm-dd hh24:mi:ss') sample_time

, function_name

, ROUND((small_read_iops+small_write_iops+large_read_iops+large_write_iops), 3) iops

FROM v$iofuncmetric

)

SELECT * FROM ifm

PIVOT ( MAX(iops)

FOR function_name IN

( 'Buffer Cache Reads' AS "Buffer Cache Reads"

, 'Direct Reads' AS "Direct Reads"

, 'Direct Writes' AS "Direct Writes"

, 'DBWR' AS "DBWR"

, 'LGWR' AS "LGWR"

, 'ARCH' AS "ARCH"

, 'RMAN' AS "RMAN"

, 'Recovery' AS "Recovery"

, 'Data Pump' AS "Data Pump"

, 'Streams AQ' AS "Streams AQ"

, 'XDB' AS "XDB"

, 'Others' AS "Others"

, 'Archive Manager' AS "Archive Manager"

, 'Smart Scan' AS "Smart Scan"

)

)

ORDER BY sample_time

;复制-- Converting rows to columns Based on I/O Requests per Second in Last 1 Hour (interval by each minute).

-- Horizontal Axis Name: I/O Per Sec

SET FEEDBACK off;

SET SQLFORMAT csv;

SET LINESIZE 200

SET PAGESIZE 80

COLUMN sample_time FORMAT a11

COLUMN function_name FORMAT a18

COLUMN iops FORMAT 999,999,999.999

ALTER SESSION SET nls_date_format = 'yyyy-mm-dd hh24:mi:ss';

WITH ifmh AS

(

SELECT TO_CHAR(end_time, 'yyyy-mm-dd hh24:mi:ss') sample_time

, function_name

, ROUND((small_read_iops+small_write_iops+large_read_iops+large_write_iops), 3) iops

FROM v$iofuncmetric_history

)

SELECT * FROM ifmh

PIVOT ( MAX(iops)

FOR function_name IN

( 'Buffer Cache Reads' AS "Buffer Cache Reads"

, 'Direct Reads' AS "Direct Reads"

, 'Direct Writes' AS "Direct Writes"

, 'DBWR' AS "DBWR"

, 'LGWR' AS "LGWR"

, 'ARCH' AS "ARCH"

, 'RMAN' AS "RMAN"

, 'Recovery' AS "Recovery"

, 'Data Pump' AS "Data Pump"

, 'Streams AQ' AS "Streams AQ"

, 'XDB' AS "XDB"

, 'Others' AS "Others"

, 'Archive Manager' AS "Archive Manager"

, 'Smart Scan' AS "Smart Scan"

)

)

ORDER BY sample_time

;复制由于CSV文件的内容过多,所以我把它们分别上传到了我的GitHub,您可以查看这4个文件:crtc_oracle_io_mbps_in_last_1_minute.csv,crtc_oracle_io_mbps_in_last_1_hour.csv,crtc_oracle_iops_in_last_1_minute.csv和crtc_oracle_iops_in_last_1_hour.csv。

将所有CSV文件上传到Vertica服务器的/home/dbadmin目录下

这里,省略具体的上传步骤和相关授权操作,最终的上传结果如下所示(用“<<==”标明):

[dbadmin@test ~]$ ls -lrht

total 184K

drwxr-xr-x 5 dbadmin verticadba 134 Dec 15 14:06 vdb_oracle_perf

......

-rw-r--r-- 1 dbadmin verticadba 225 Dec 23 10:54 crtc_oracle_io_mbps_in_last_1_minute.csv <<==

-rw-r--r-- 1 dbadmin verticadba 3.0K Dec 23 10:56 crtc_oracle_io_mbps_in_last_1_hour.csv <<==

-rw-r--r-- 1 dbadmin verticadba 233 Dec 23 10:57 crtc_oracle_iops_in_last_1_minute.csv <<==

-rw-r--r-- 1 dbadmin verticadba 3.6K Dec 23 10:59 crtc_oracle_iops_in_last_1_hour.csv <<==复制用vsql客户端命令连接到Vertica数据库

用Linux命令su切换Vertica数据库服务器的root用户到dbadmin用户,然后用vsql命令进行连接,下面是具体的操作过程:

[root@test ~]# su - dbadmin

[dbadmin@test ~]$

[dbadmin@test ~]$ /opt/vertica/bin/vsql -h 127.0.0.1 vdb_oracle_perf dbadmin

Password:

Welcome to vsql, the Vertica Analytic Database interactive terminal.

Type: \h or \? for help with vsql commands

\g or terminate with semicolon to execute query

\q to quit

vdb_oracle_perf=> 复制在Vertica数据库中创建相关的MBPS&IOPS By Function表

在public的schema下,分别创建表crtc_oracle_io_mbps_in_last_1_minute,crtc_oracle_io_mbps_in_last_1_hour,crtc_oracle_iops_in_last_1_minute和crtc_oracle_iops_in_last_1_hour。

vdb_oracle_perf=>

vdb_oracle_perf=> CREATE TABLE public.crtc_oracle_io_mbps_in_last_1_minute

vdb_oracle_perf-> (sample_time TIMESTAMP,

vdb_oracle_perf(> buffer_cache_reads NUMBER(12,3),

vdb_oracle_perf(> direct_reads NUMBER(12,3),

vdb_oracle_perf(> direct_writes NUMBER(12,3),

vdb_oracle_perf(> dbwr NUMBER(12,3),

vdb_oracle_perf(> lgwr NUMBER(12,3),

vdb_oracle_perf(> arch NUMBER(12,3),

vdb_oracle_perf(> rman NUMBER(12,3),

vdb_oracle_perf(> recovery NUMBER(12,3),

vdb_oracle_perf(> data_pump NUMBER(12,3),

vdb_oracle_perf(> streams_aq NUMBER(12,3),

vdb_oracle_perf(> xdb NUMBER(12,3),

vdb_oracle_perf(> others NUMBER(12,3),

vdb_oracle_perf(> archive_manager NUMBER(12,3),

vdb_oracle_perf(> smart_scan NUMBER(12,3)

vdb_oracle_perf(> );

CREATE TABLE

vdb_oracle_perf=> 复制vdb_oracle_perf=>

vdb_oracle_perf=> CREATE TABLE public.crtc_oracle_io_mbps_in_last_1_hour

vdb_oracle_perf-> (sample_time TIMESTAMP,

vdb_oracle_perf(> buffer_cache_reads NUMBER(12,3),

vdb_oracle_perf(> direct_reads NUMBER(12,3),

vdb_oracle_perf(> direct_writes NUMBER(12,3),

vdb_oracle_perf(> dbwr NUMBER(12,3),

vdb_oracle_perf(> lgwr NUMBER(12,3),

vdb_oracle_perf(> arch NUMBER(12,3),

vdb_oracle_perf(> rman NUMBER(12,3),

vdb_oracle_perf(> recovery NUMBER(12,3),

vdb_oracle_perf(> data_pump NUMBER(12,3),

vdb_oracle_perf(> streams_aq NUMBER(12,3),

vdb_oracle_perf(> xdb NUMBER(12,3),

vdb_oracle_perf(> others NUMBER(12,3),

vdb_oracle_perf(> archive_manager NUMBER(12,3),

vdb_oracle_perf(> smart_scan NUMBER(12,3)

vdb_oracle_perf(> );

CREATE TABLE

vdb_oracle_perf=> 复制vdb_oracle_perf=>

vdb_oracle_perf=> CREATE TABLE public.crtc_oracle_iops_in_last_1_minute

vdb_oracle_perf-> (sample_time TIMESTAMP,

vdb_oracle_perf(> buffer_cache_reads NUMBER(12,3),

vdb_oracle_perf(> direct_reads NUMBER(12,3),

vdb_oracle_perf(> direct_writes NUMBER(12,3),

vdb_oracle_perf(> dbwr NUMBER(12,3),

vdb_oracle_perf(> lgwr NUMBER(12,3),

vdb_oracle_perf(> arch NUMBER(12,3),

vdb_oracle_perf(> rman NUMBER(12,3),

vdb_oracle_perf(> recovery NUMBER(12,3),

vdb_oracle_perf(> data_pump NUMBER(12,3),

vdb_oracle_perf(> streams_aq NUMBER(12,3),

vdb_oracle_perf(> xdb NUMBER(12,3),

vdb_oracle_perf(> others NUMBER(12,3),

vdb_oracle_perf(> archive_manager NUMBER(12,3),

vdb_oracle_perf(> smart_scan NUMBER(12,3)

vdb_oracle_perf(> );

CREATE TABLE

vdb_oracle_perf=> 复制vdb_oracle_perf=>

vdb_oracle_perf=> CREATE TABLE public.crtc_oracle_iops_in_last_1_hour

vdb_oracle_perf-> (sample_time TIMESTAMP,

vdb_oracle_perf(> buffer_cache_reads NUMBER(12,3),

vdb_oracle_perf(> direct_reads NUMBER(12,3),

vdb_oracle_perf(> direct_writes NUMBER(12,3),

vdb_oracle_perf(> dbwr NUMBER(12,3),

vdb_oracle_perf(> lgwr NUMBER(12,3),

vdb_oracle_perf(> arch NUMBER(12,3),

vdb_oracle_perf(> rman NUMBER(12,3),

vdb_oracle_perf(> recovery NUMBER(12,3),

vdb_oracle_perf(> data_pump NUMBER(12,3),

vdb_oracle_perf(> streams_aq NUMBER(12,3),

vdb_oracle_perf(> xdb NUMBER(12,3),

vdb_oracle_perf(> others NUMBER(12,3),

vdb_oracle_perf(> archive_manager NUMBER(12,3),

vdb_oracle_perf(> smart_scan NUMBER(12,3)

vdb_oracle_perf(> );

CREATE TABLE

vdb_oracle_perf=> 复制使用COPY命令将CSV文件导入刚创建的表中

在上一步操作中,我们已经创建成功了4个表。现在我们用COPY命令将上传到Vertica数据库服务器的4个CSV文件分别导入到那4个表中。操作步骤依次为:

vdb_oracle_perf=>

vdb_oracle_perf=> COPY public.crtc_oracle_io_mbps_in_last_1_minute FROM '/home/dbadmin/crtc_oracle_io_mbps_in_last_1_minute.csv' EXCEPTIONS '/home/dbadmin/imp_io_mbps_1.log' DELIMITER AS ',';

NOTICE 7850: In a multi-threaded load, rejected record data may be written to additional files

HINT: Exceptions may be written to files [/home/dbadmin/imp_io_mbps_1.log], [/home/dbadmin/imp_io_mbps_1.log.1], etc

Rows Loaded

-------------

1

(1 row)复制vdb_oracle_perf=>

vdb_oracle_perf=> COPY public.crtc_oracle_io_mbps_in_last_1_hour FROM '/home/dbadmin/crtc_oracle_io_mbps_in_last_1_hour.csv' EXCEPTIONS '/home/dbadmin/imp_io_mbps_2.log' DELIMITER AS ',';

NOTICE 7850: In a multi-threaded load, rejected record data may be written to additional files

HINT: Exceptions may be written to files [/home/dbadmin/imp_io_mbps_2.log], [/home/dbadmin/imp_io_mbps_2.log.1], etc

Rows Loaded

-------------

61

(1 row)复制vdb_oracle_perf=>

vdb_oracle_perf=> COPY public.crtc_oracle_iops_in_last_1_minute FROM '/home/dbadmin/crtc_oracle_iops_in_last_1_minute.csv' EXCEPTIONS '/home/dbadmin/imp_iops_1.log' DELIMITER AS ',';

NOTICE 7850: In a multi-threaded load, rejected record data may be written to additional files

HINT: Exceptions may be written to files [/home/dbadmin/imp_iops_1.log], [/home/dbadmin/imp_iops_1.log.1], etc

Rows Loaded

-------------

1

(1 row)复制vdb_oracle_perf=>

vdb_oracle_perf=> COPY public.crtc_oracle_iops_in_last_1_hour FROM '/home/dbadmin/crtc_oracle_iops_in_last_1_hour.csv' EXCEPTIONS '/home/dbadmin/imp_iops_2.log' DELIMITER AS ',';

NOTICE 7850: In a multi-threaded load, rejected record data may be written to additional files

HINT: Exceptions may be written to files [/home/dbadmin/imp_iops_2.log], [/home/dbadmin/imp_iops_2.log.1], etc

Rows Loaded

-------------

61

(1 row)

vdb_oracle_perf=> 复制用Tableau可视化Vertica数据库的表

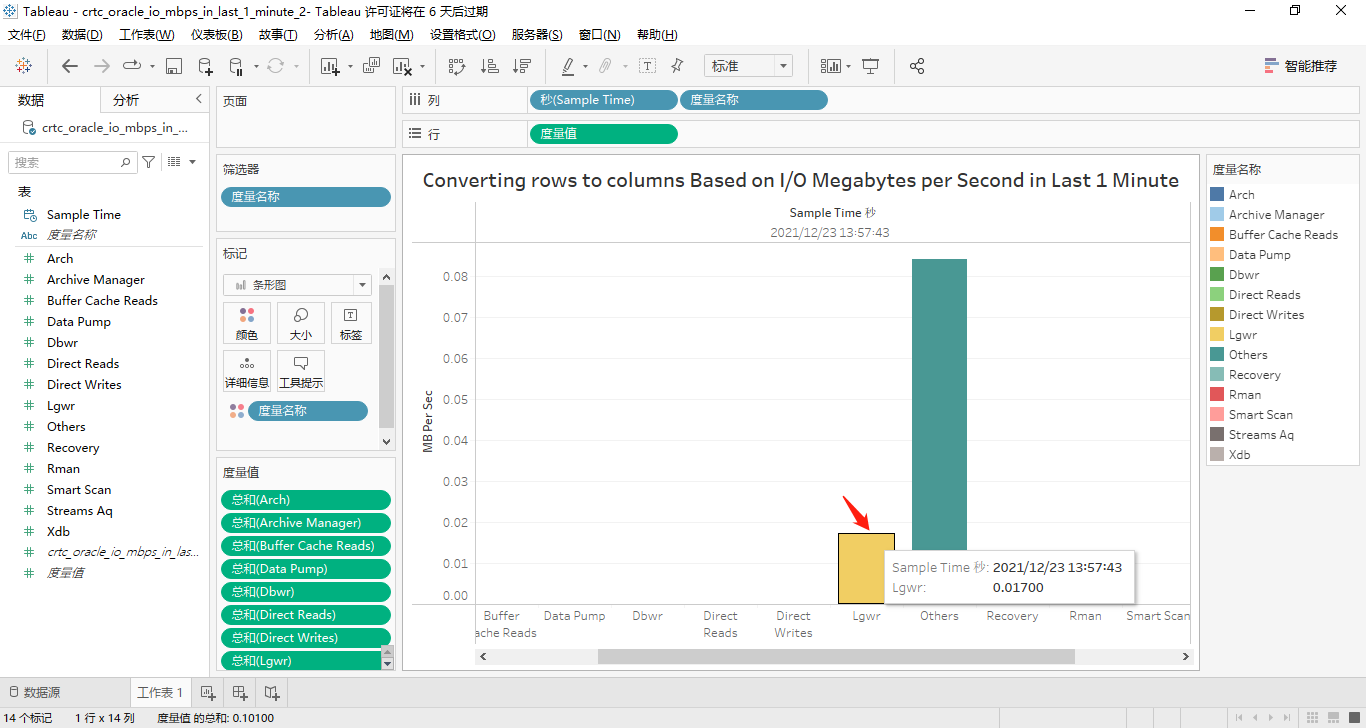

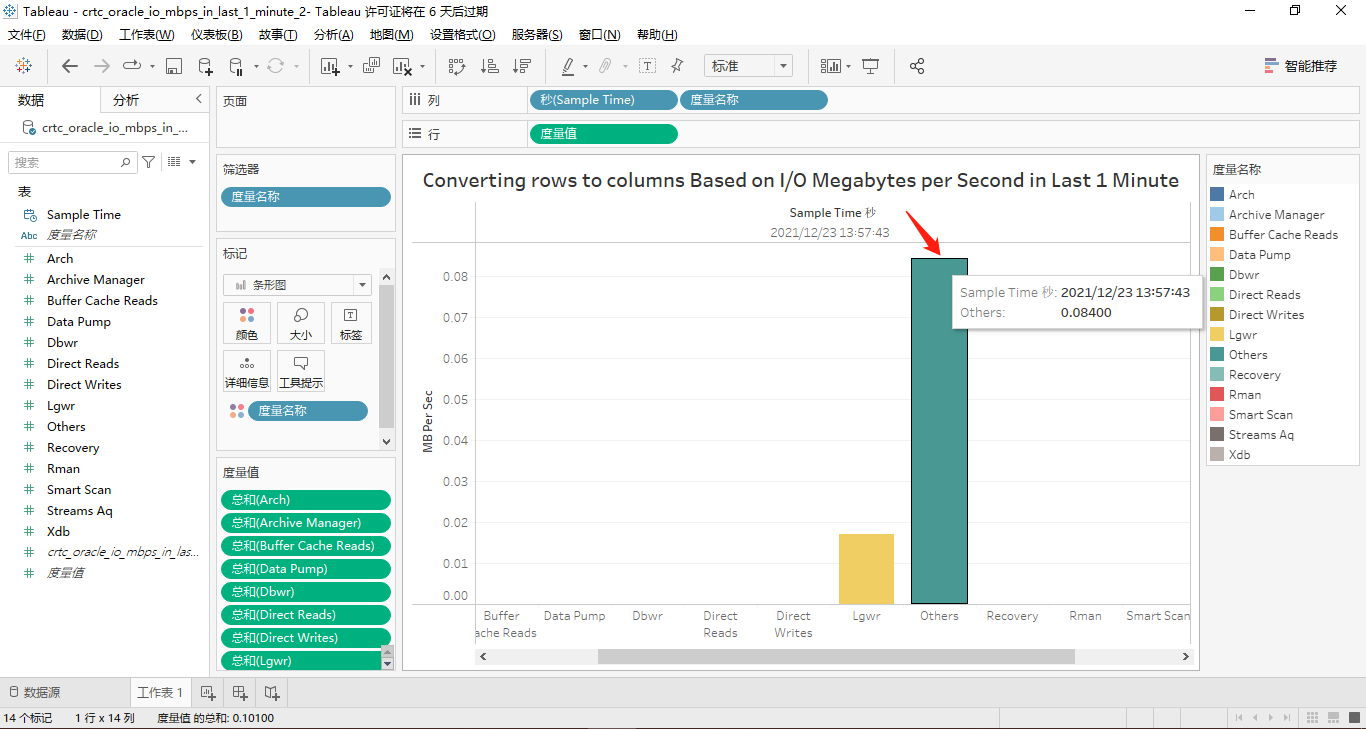

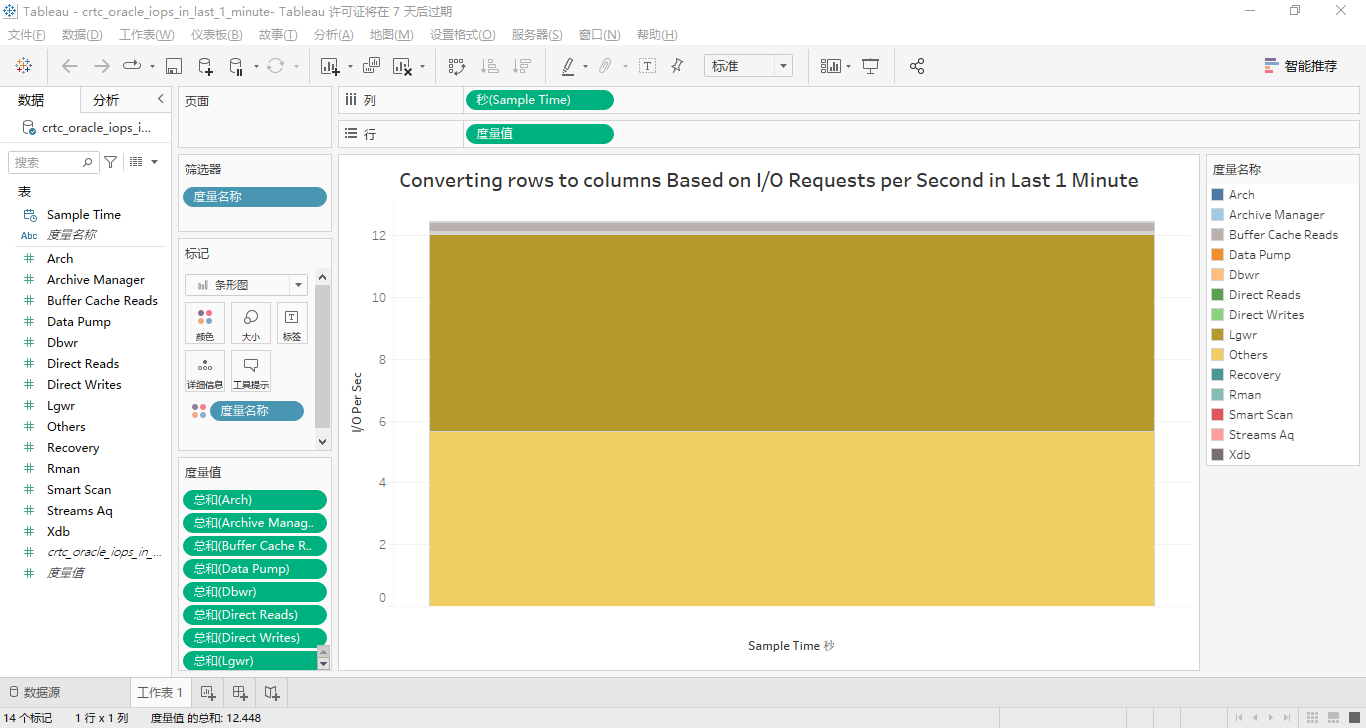

按Function Name分类的最近1分钟的IO MBPS

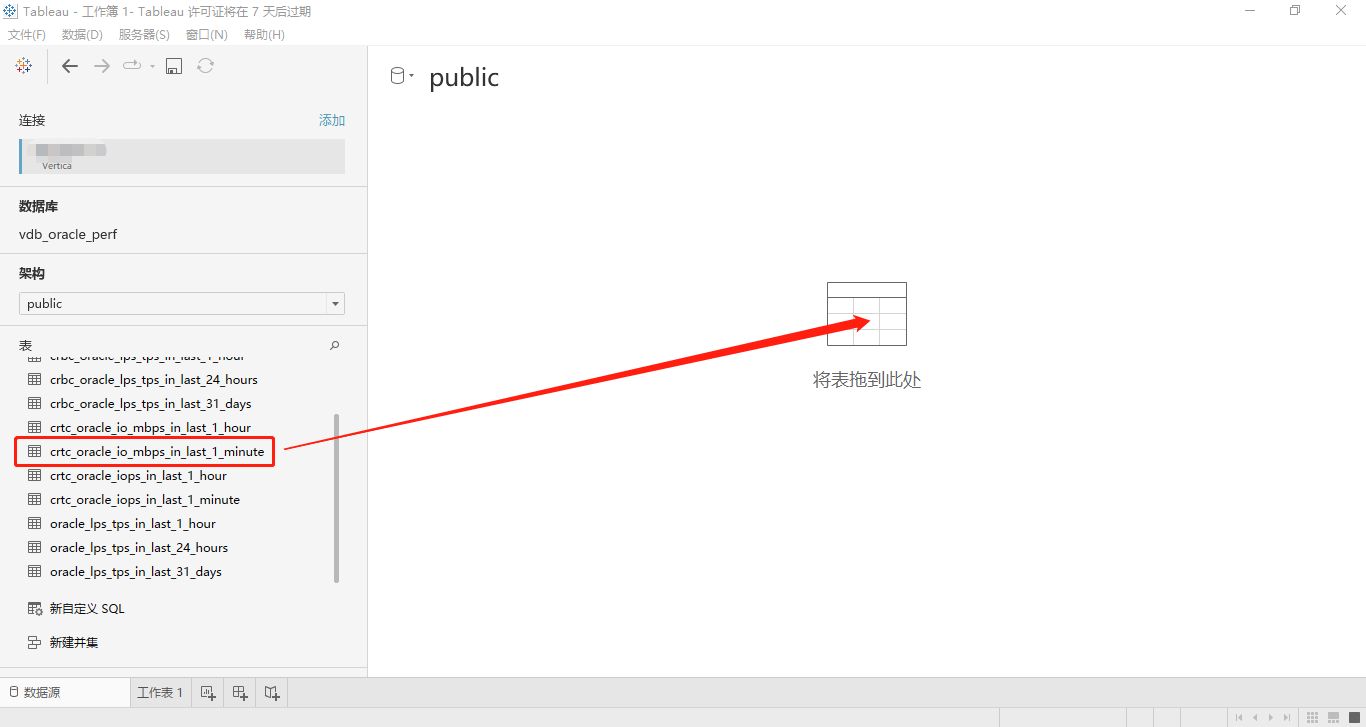

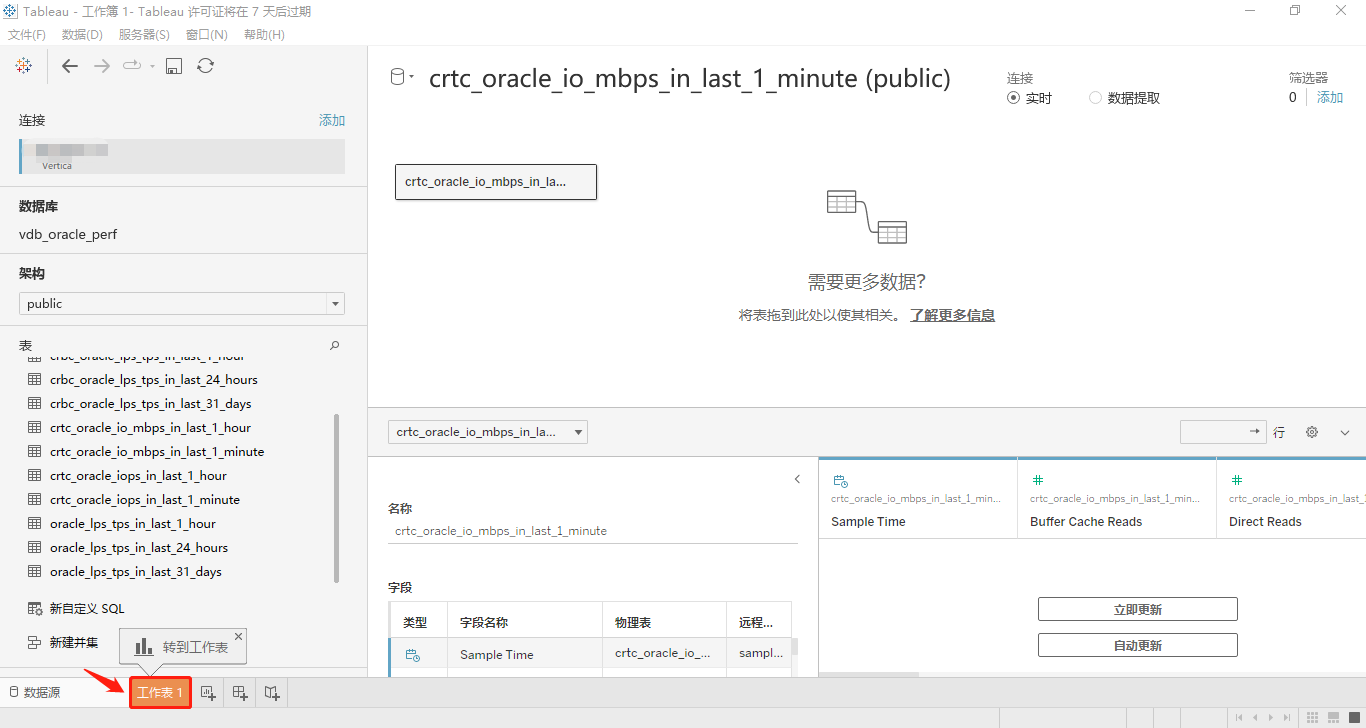

打开Tableau Desktop工具,然后连接到Vertica数据库,选择schema为public,然后将表crtc_oracle_io_mbps_in_last_1_minute拖动到指定位置,点击底部的工作表,进入工作表编辑区。详见下面两个屏幕截图:

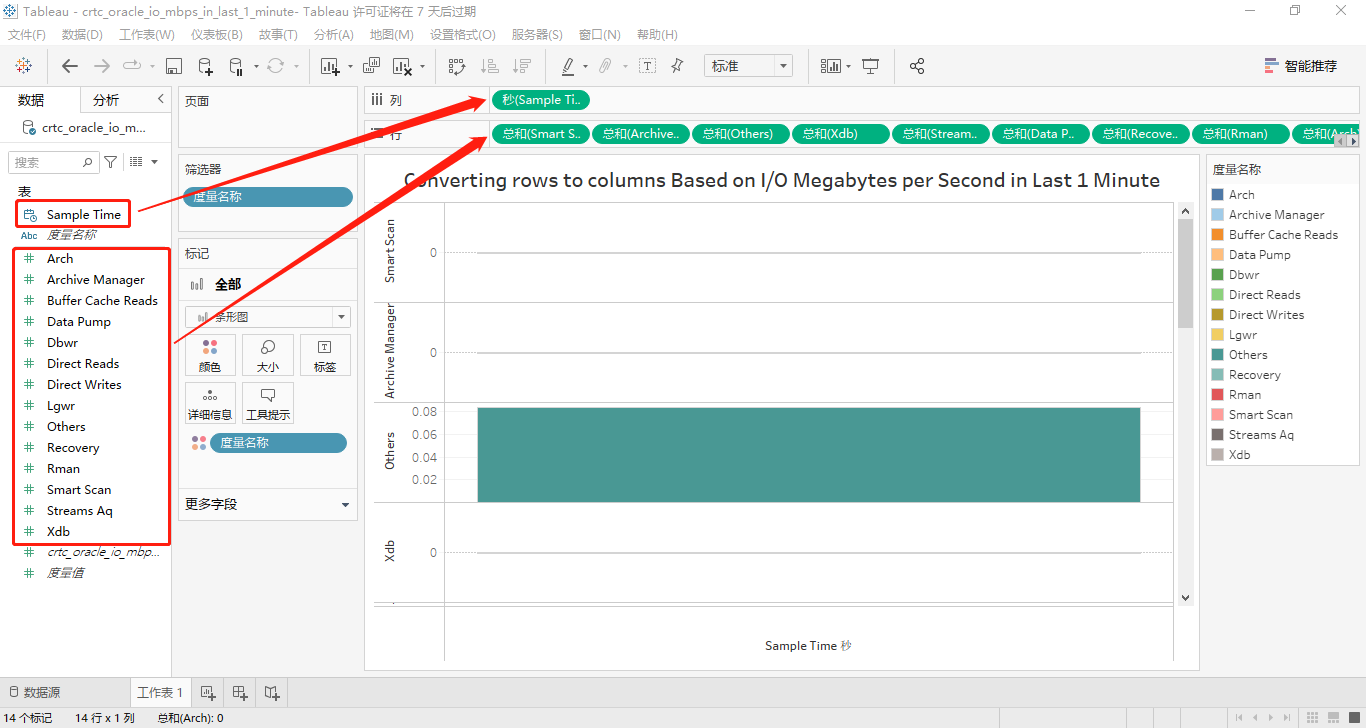

接着,将工作区中左侧“数据”标签卡内“表”的度量名称Sample Time用鼠标拖到位于工作区上方标签名为“列”的右侧“标签框”中,同样的方法,将“表”的14个度量值分别拖到位于工作区上方标签名为“行”的右侧“标签框”中,屏幕截图如下所示:

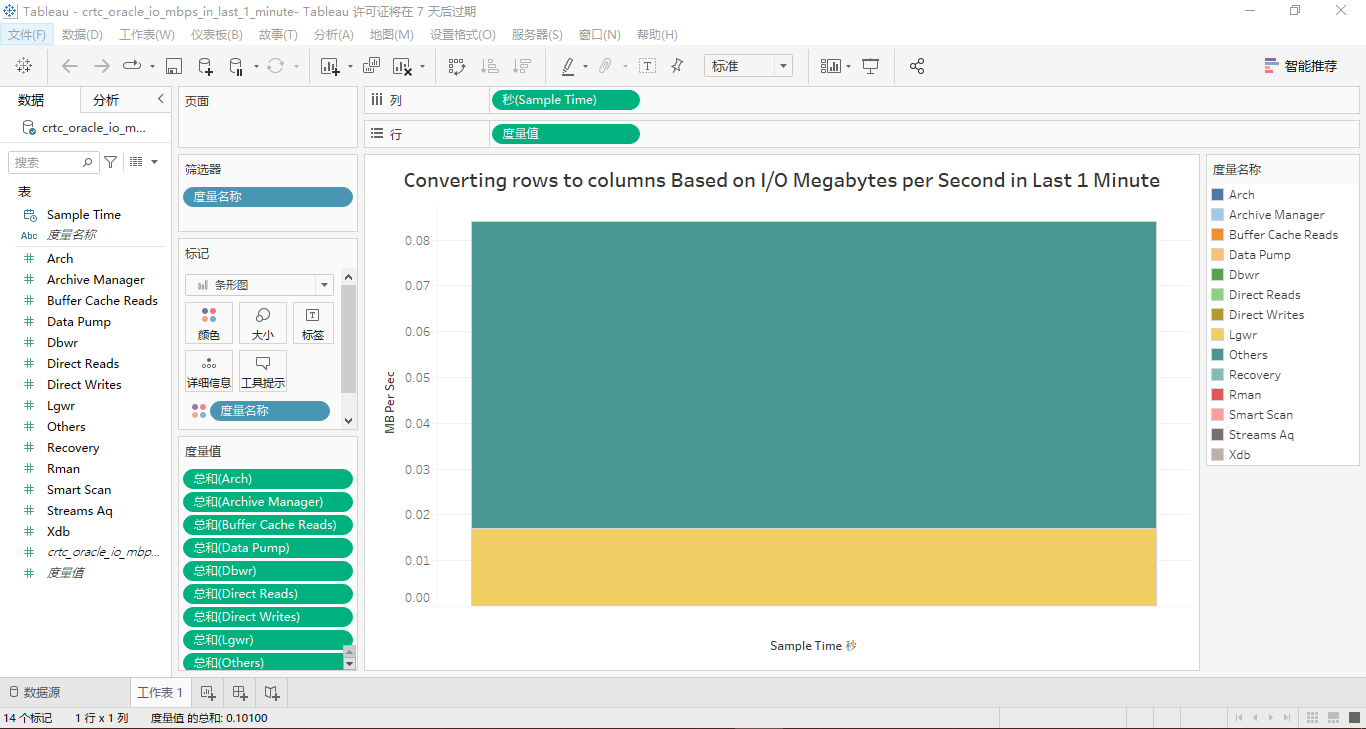

然后将标签名为“行”内的其余13个度量依次用鼠标拖动到工作区中部的图表纵坐标轴名称为“Smart Scan”的区域,也就是将这14个度量都合并到一个纵坐标轴上,顺便修改图表的名称和纵坐标轴名称,最终的效果如图所示:

因为每个度量在最近1分钟的数据只有一个值显示,显然,所有度量在纵坐标轴上显示的话,这个柱状条形图看起来很臃肿!因此,将这个14个度量换到横坐标轴上,详见下面的两个屏幕截图:

正如我们所看到的,只有Lgwr和Others这两个度量有取值。

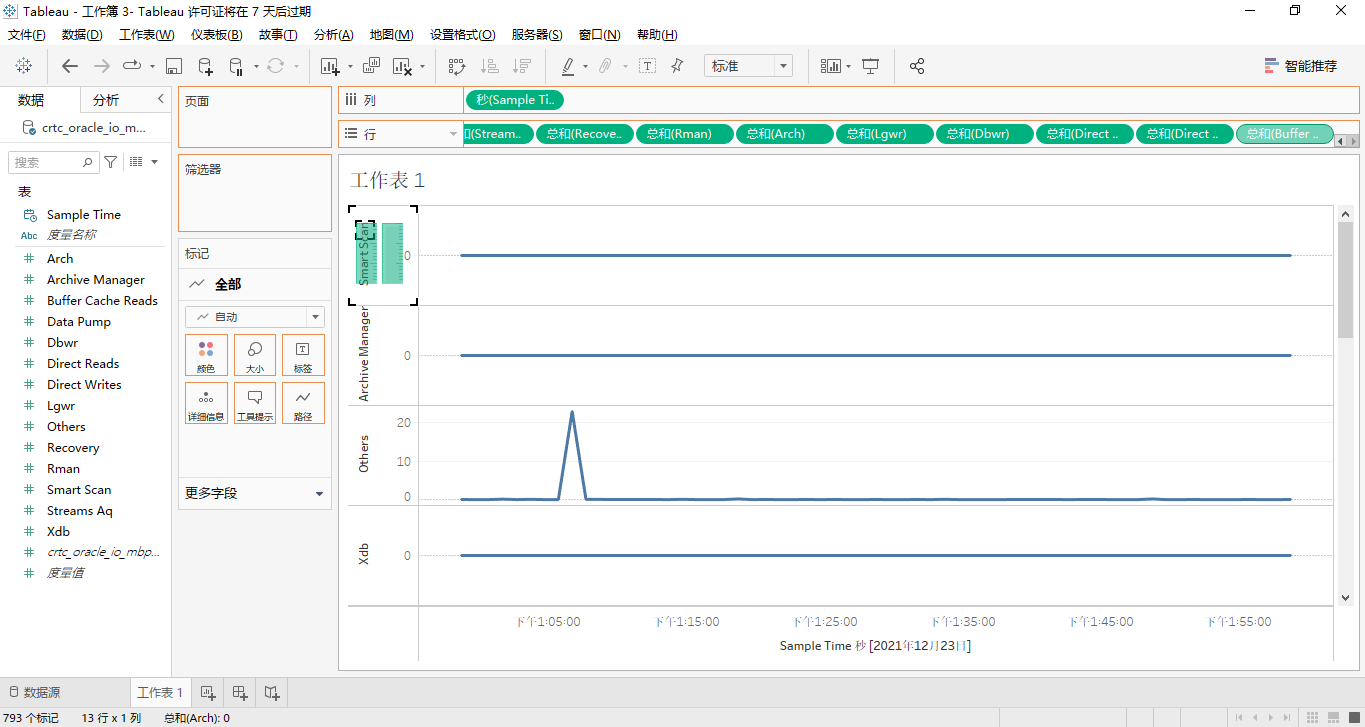

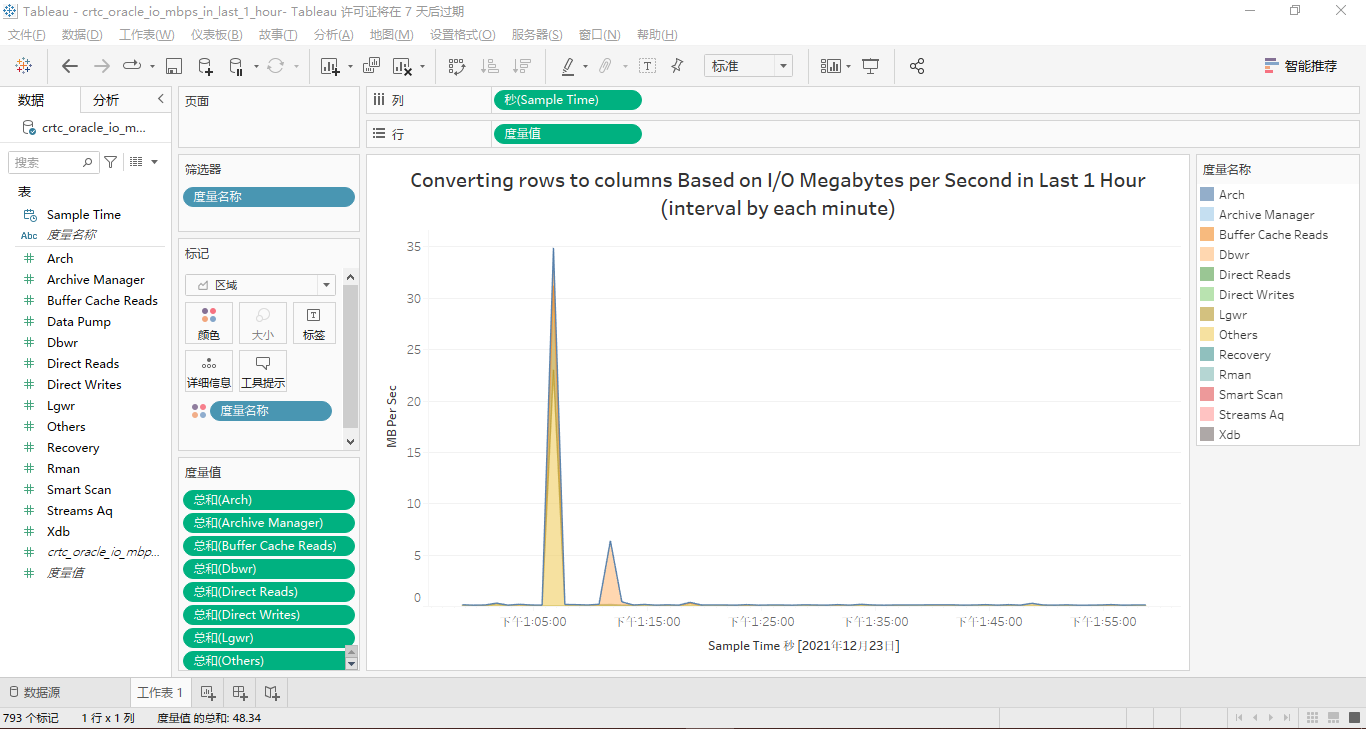

按Function Name分类的最近1小时的IO MBPS

因为上一环节我们已经非常详细地说明了在Tablesau Desktop中可视化表crtc_oracle_io_mbps_in_last_1_minute的每一步骤,所以在这里,我们进行快速地操作,见如下屏幕截图。

最终,按Function Name分类的最近1小时的IO MBPS的面积堆叠图是这样的:

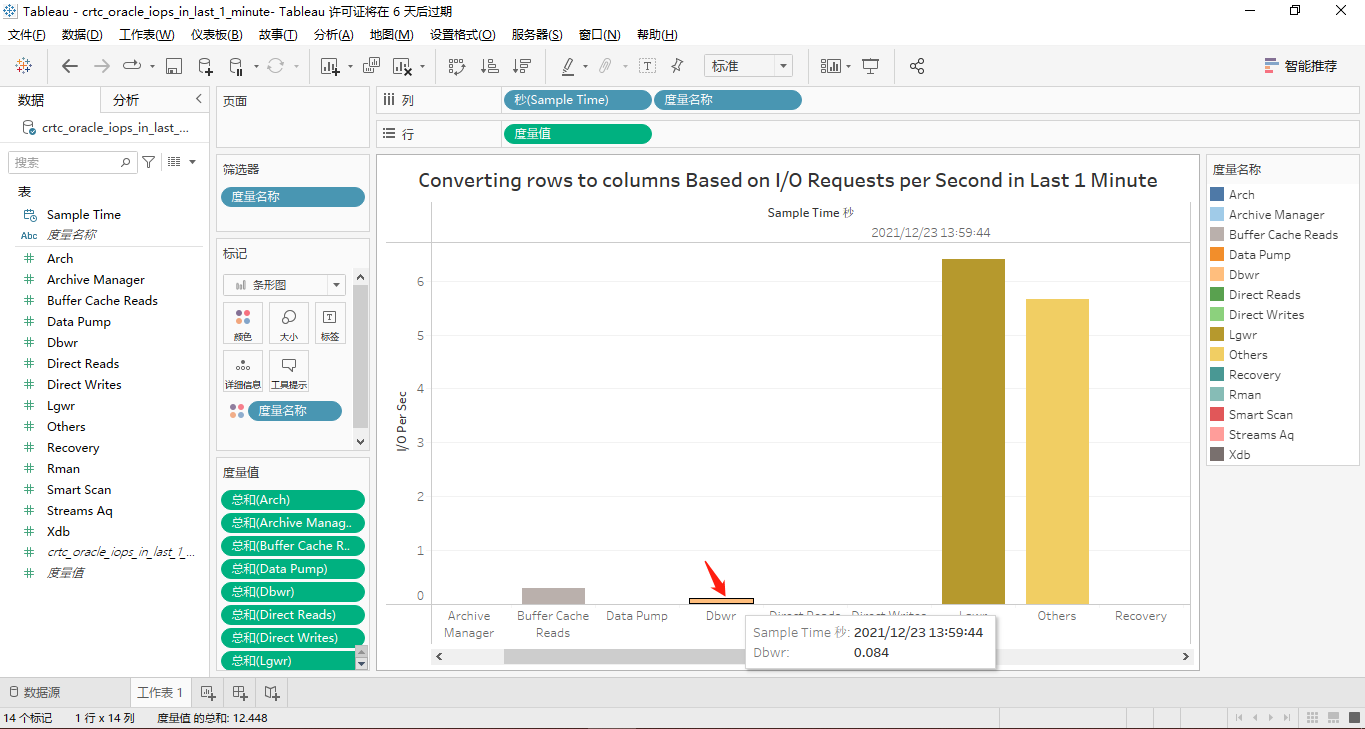

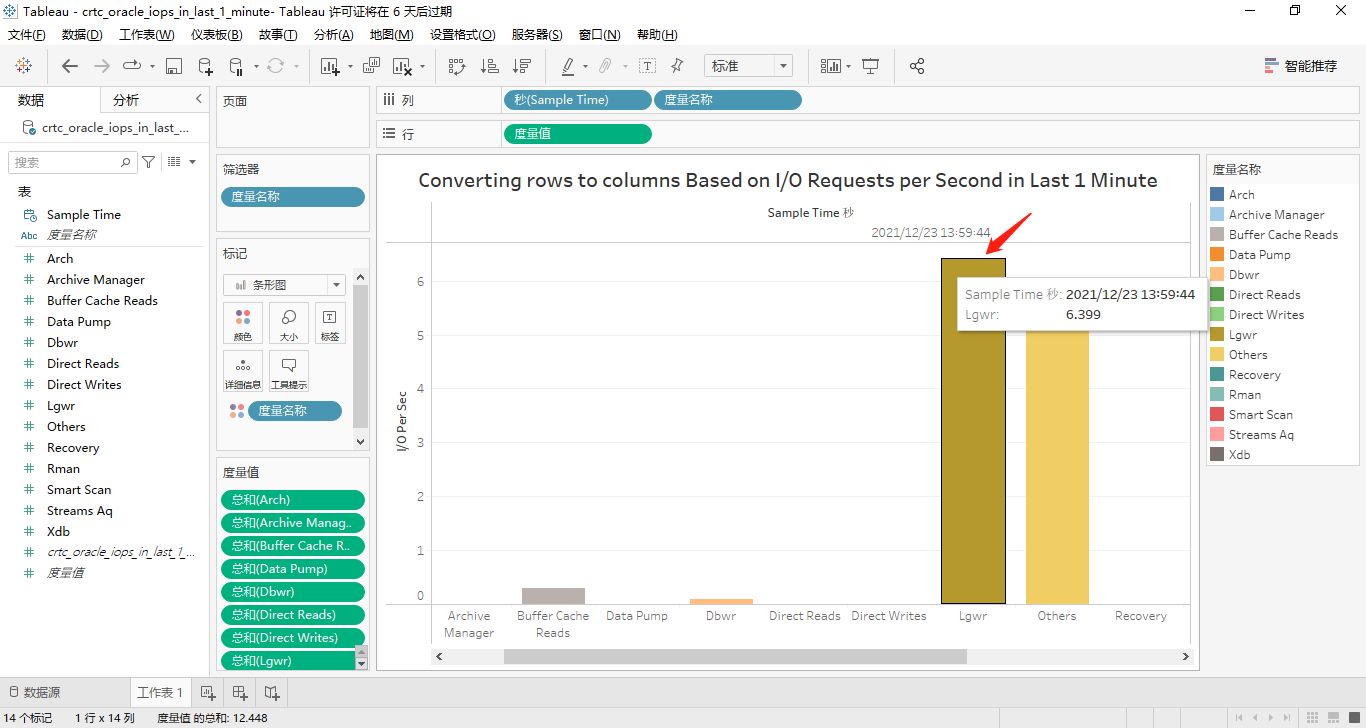

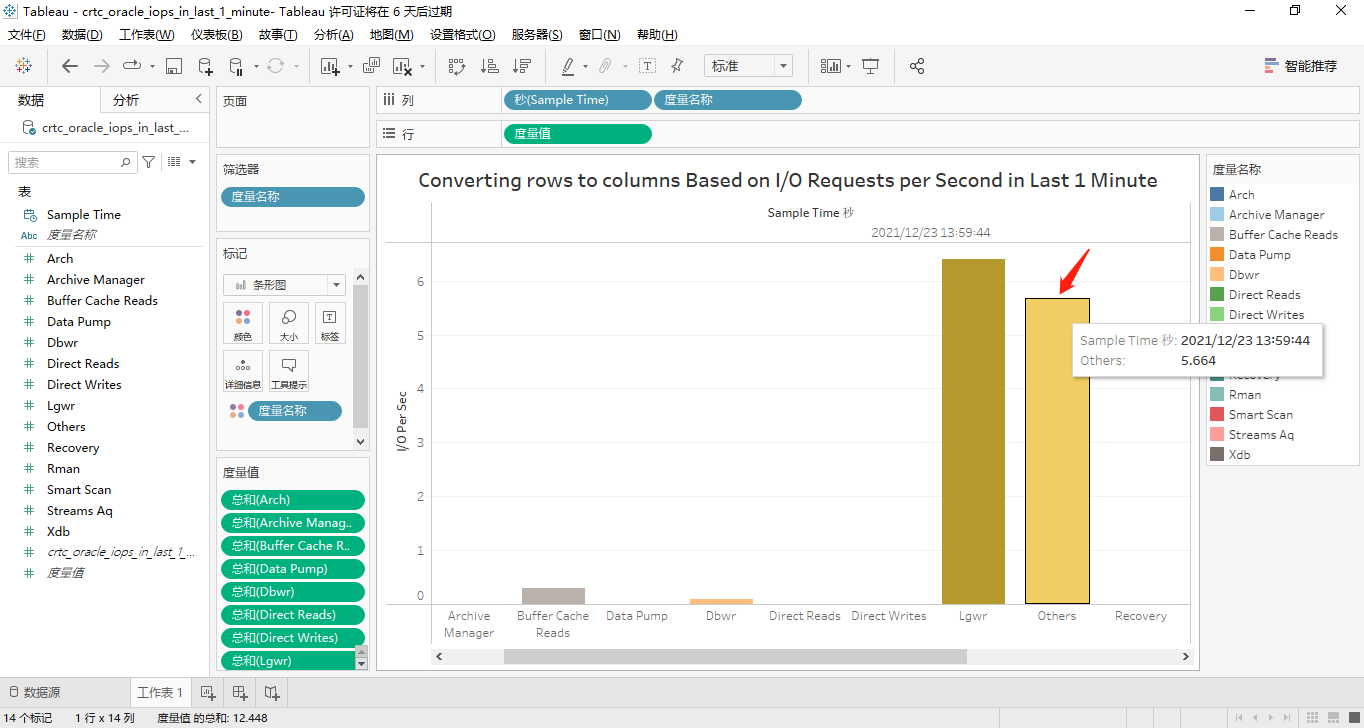

按Function Name分类的最近1分钟的IOPS

这14个度量均在纵坐标轴上显示的条形柱状图为:

接着,我们将那14个度量都转换到横坐标轴上显示。其中,有取值的4个度量对应的屏幕截图分别如下所示:

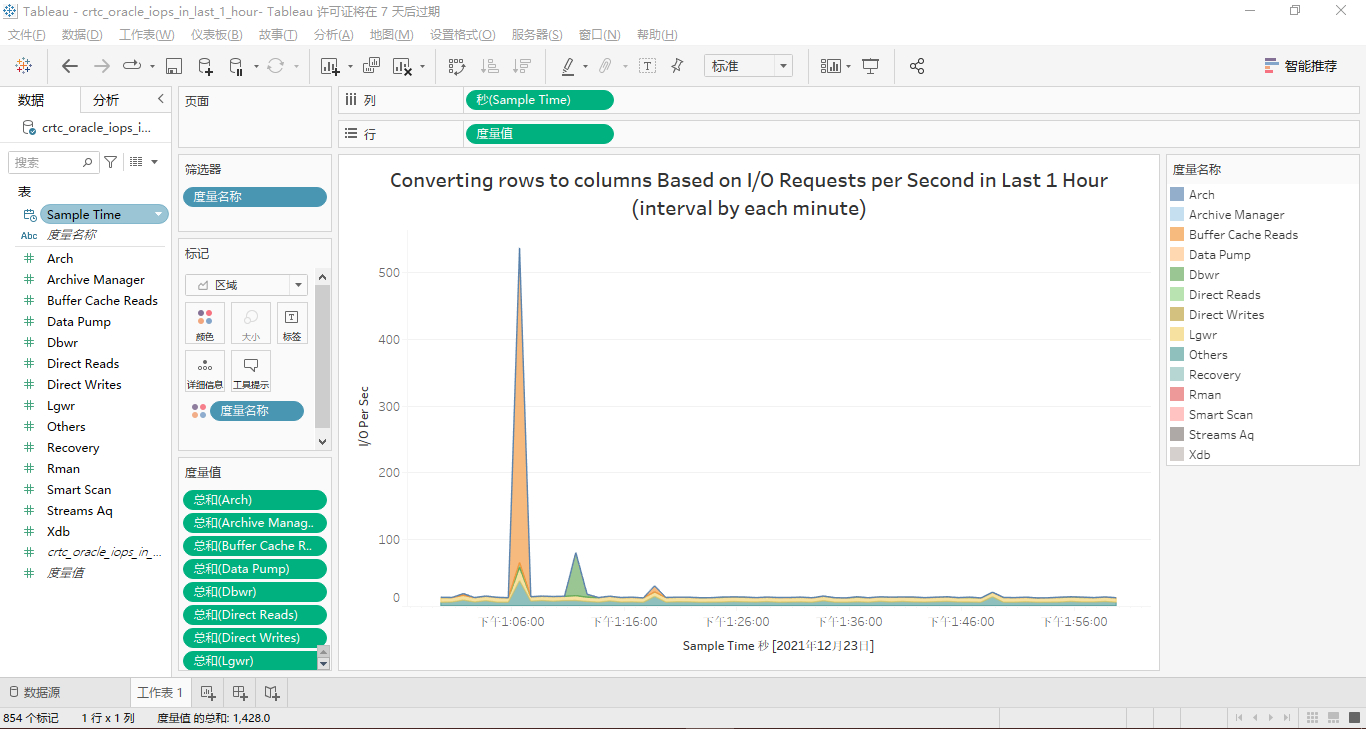

按Function Name分类的最近1小时的IOPS

最近1小时的IOPS的面积堆叠图设置相对简单,最终效果见下图:

以上就是用Tableau可视化Vertica数据库之“MBPS&IOPS By Function”篇的所有内容。另外,您也可以从acquire_io_mbps_by_function.sql和acquire_iops_by_function.sql查看我前面提到的所有SQL源代码。如果您有好的建议或意见,欢迎在文章底部的评论区提出,我将逐条阅读,并在最快时间内回复。

参考内容

- 用Tableau可视化Vertica数据库之“DB Time”篇

- 什么是Vertica数据库?

- Quickstart Guide from the official documentation of Vertica 11.0.x

- HINT (S0305)

- WARN (N0010)

- WARN (S0112)

- FAIL (S0312)

- DBeaver Community Edition 21.3.1

- Using This Guide from the official documentation of Vertica 11.0.x

- Tableau Desktop 2021.4.1

- Oracle导入csv文本文件到Vertica

- Visual output in Vertica

- Tableau生成折线图

- 用Tableau可视化Vertica数据库之“LPS&TPS”篇

- 用Tableau可视化Vertica数据库之“MBPS&IOPS By Function”篇

评论

点赞

点赞