MGR组复制是MySQL的一种高可用复制技术,用于构建高可用、高扩展和高容错的拓扑架构中。本文简要介绍MGR复制技术的原理,并配置多主模式进行测试。

一、组复制 (MGR)介绍

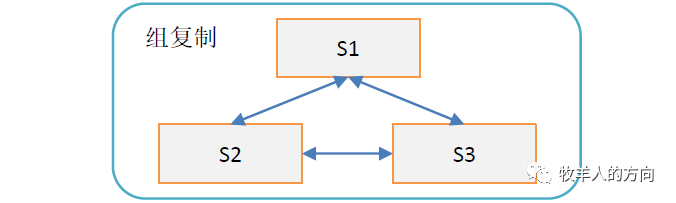

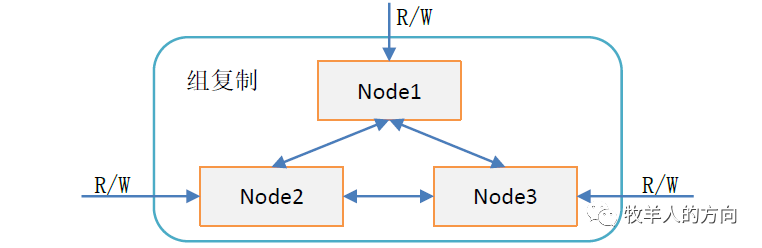

这3个节点互相通信,每当有事件发生,都会向其他节点传播该事件,然后协商,如果大多数节点都同意这次的事件,那么该事件将通过,否则该事件将失败或回滚。这些节点可以是单主模式(single-primary),也可以是多主模式(multi-primary)。单主模式只有一个主节点可以接受写操作,主节点故障时可以自动选举主节点。多主模型下,所有节点都可以接受写操作,所以没有master-slave的概念。

1.1 MGR复制原理

1.1.1 MGR插件体系结构

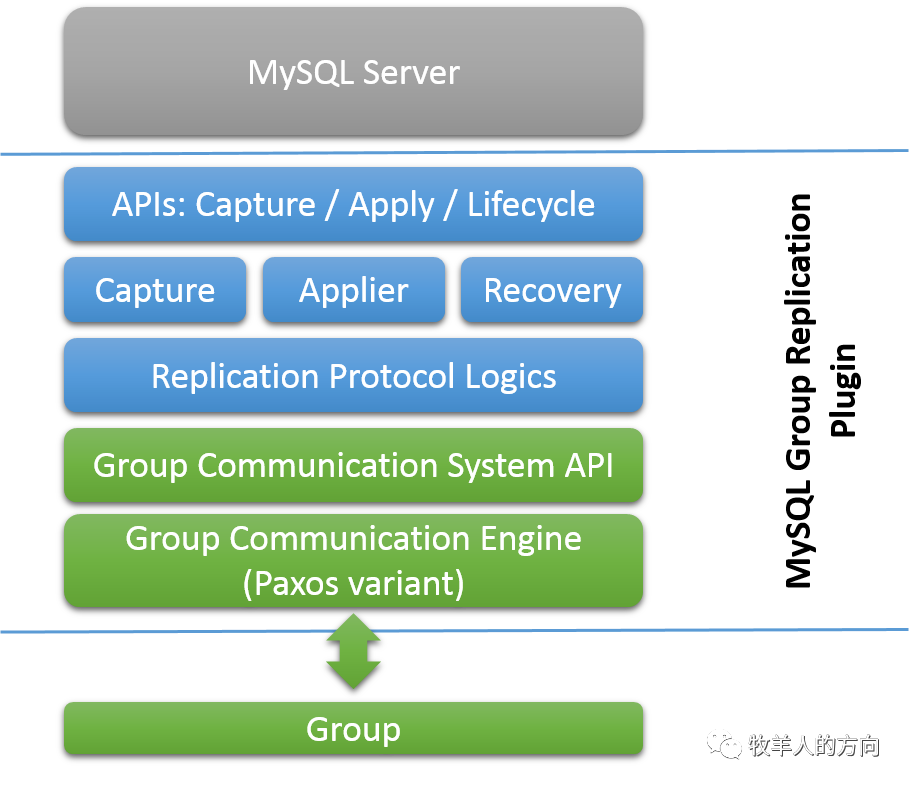

Capture/Apply/Lifecycle APIs:MGR插件包含一组捕获、应用和生命周期API,用于控制插件与MySQL服务器的交互方式。这些接口将MySQL服务器核心与MGR插件隔离。服务器向插件通知启动、恢复、准备接收连接、即将提交事务等消息。插件指示服务器执行诸如提交事务、中止正在进行的事务、事务在中继日志中排队等动作。

Capture/Apply/Recovery组件:

捕获组件Capture负责跟踪与正在执行的事务相关的上下文;

应用组件Apply负责在数据库上执行远程事务;

恢复组件Recovery管理分布式恢复,负责选择捐赠者,对故障做出反应,执行追赶程序,使加入该组的服务器获得更新。

Replication Protocol Logics:复制协议模块包含复制协议的特定逻辑。它处理冲突检测,接收事务并将其传播到组。

组复制插件体系结构的最后两层是组通信系统(GCS)API,以及基于Paxos的组通信引擎(XCom)的实现。GCS API将消息传递层的实现与插件上层分离,组通信引擎处理与复制组成员的通信。

1.1.2 MGR复制原理

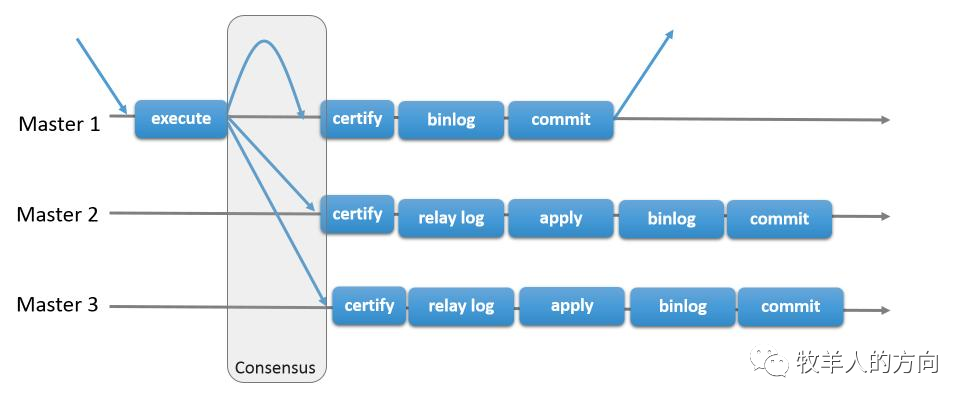

组复制MGR技术是一种高可用系统的技术,其中复制组是由能够相互通信的多个服务器节点组成,在通信层则提供了原子消息和完全信息交互等保障机制,实现基于复制协议的多主更新。复制组由多个服务器组成,每个server成员可以独立的执行事务,但所有的读写RW事务只有在冲突检测成功后才会提交,只读RO事务则不需要冲突检测。因此,当一个读写事务准备提交的时候,会自动在组内进行原子性的广播,告知其它节点变更了什么内容、执行了什么事务。这种原子广播的方式,使得这个事务在每一个节点上都保持着同样顺序。这意味着每一个节点都以同样的顺序,接收到了同样的事务日志,所以每一个节点以同样的顺序重演了这些事务日志,最终整个组内保持了完全一致的状态。

对于在不同的节点上执行的事务之间有可能存在资源争用,特别是在两个不同的并发事务上。假设在不同的节点上有两个并发事务,更新了同一行数据,那么就会发生资源争用。面对这种情况,组复制会判定先提交的事务为有效事务,会在整个组内重演,后提交的事务会直接中断,或者回滚,最后丢弃掉。因此,组复制MGR是一个无共享的复制方案,每一个节点都保存了完整的数据副本。

1.2 MGR复制模式

MGR有两种复制模式:单主模式和多主模式。在单主模式下,组复制具有自动选主功能,每次只有一个节点负责写入,读可以从任意一个节点读取,组内数据保持最终一致。多主模式下,所有的节点都可以同时接受读写,也能够保证组内数据最终一致性。

1)单写模式

首先运行主节点(即那个可写可读的节点,read_only = 0)

运行其他的节点,并把这些节点一一加进group。其他的节点就会自动同步主节点上面的变化,然后将自己设置为只读模式(read_only = 1)。

当主节点意外宕机或者下线,在满足大多数节点存活的情况下,group内部发起选举,选出下一个可用的读节点,提升为主节点。

主选举根据group内剩下存活节点的UUID按字典序升序来选择,即剩余存活的节点按UUID字典序排列,然后选择排在最前的节点作为新的主节点。

2)多写模式

首先关闭单主模式开关loose-group_replication_single_primary_mode=OFF

运行第一个节点,设置loose-group_replication_bootstrap_group=ON

运行其它节点,并START GROUP_REPLICATION加入到group组中

当组内的某个节点发生故障时,会自动从将该节点从组内踢出,与其他节点隔离。剩余的节点之间保持主从复制的正常同步关系。当该节点的故障恢复后,只需手动激活组复制即可(即执行"START GROUP_REPLICATION;");

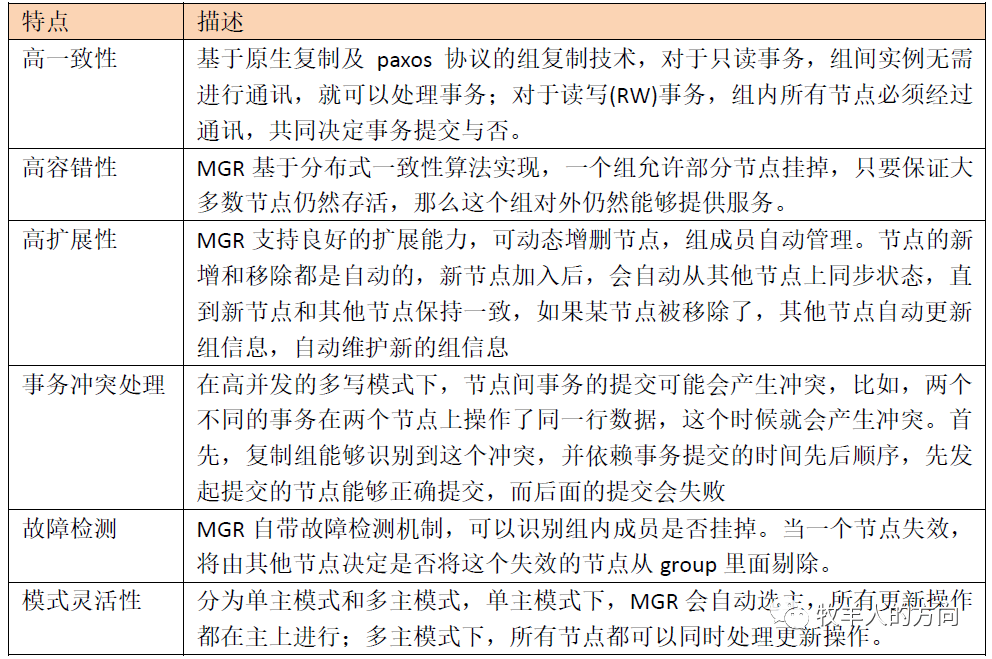

1.3 MGR复制特点

1.4 MGR复制的限制

存储引擎必须为Innodb,即仅支持InnoDB表

每个表必须提供主键,用于做write set的冲突检测

只支持ipv4,网络需求较高

必须打开GTID特性,二进制日志格式必须设置为ROW,用于选主与write set

COMMIT可能会导致失败,类似于快照事务隔离级别的失败场景

目前一个MGR集群组最多支持9个节点

不支持外键于save point特性,无法做全局间的约束检测与部分部分回滚

二进制日志binlog不支持Replication event checksums

多主模式(也就是多写模式) 不支持SERIALIZABLE事务隔离级别

多主模式不能完全支持级联外键约束

多主模式不支持在不同节点上对同一个数据库对象并发执行DDL(在不同节点上对同一行并发进行RW事务,后发起的事务会失败)

二、MGR组复制测试

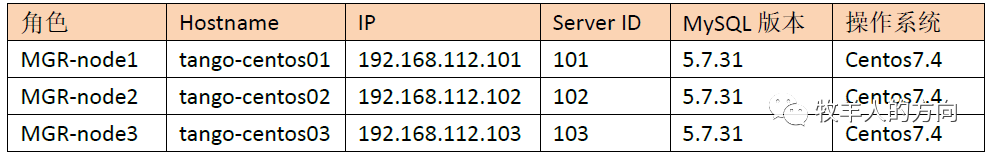

2.1 环境准备

2)安装配置好MySQL数据库

[root@tango-centos01 ~]# service mysql status SUCCESS! MySQL running (2081)[root@tango-centos02 ~]# service mysql status SUCCESS! MySQL running (2014)[root@tango-centos03 ~]# service mysql status SUCCESS! MySQL running (1812)复制

3)配置/etc/hosts信息

192.168.112.101 tango-centos01192.168.112.102 tango-centos02192.168.112.103 tango-centos03复制

2.2 MGR组复制配置 (本案例采用MGR多写模式)

2.2.1 节点centos01的配置

1)配置MGR的组名,组名可以随便起,但不能使用主机的GTID,比如使用节点mysql中的uuid作为组名:

mysql> select uuid();+--------------------------------------+| uuid() |+--------------------------------------+| 61e1dc92-c60f-11eb-983b-000c2937f917 |+--------------------------------------+1 row in set (0.00 sec)复制

将该值配置到loose-group_replication_group_name中

2)修改配置文件/etc/my.cnf

[root@tango-centos01 ~]# vim etc/my.cnf[mysqld]basedir=/usr/local/mysqldatadir=/usr/local/mysql/data/mysqlsocket=/tmp/mysql.socklog-error=/usr/local/mysql/data/mysql/mysql.errpid-file=/usr/local/mysql/data/mysql/mysql.pidlog_timestamps=SYSTEM# Disabling symbolic-links is recommended to prevent assorted security riskssymbolic-links=0#GTID:server_id = 101gtid_mode = onenforce_gtid_consistency = on#binloglog_bin = mysql-binlog-slave-updates = 1binlog_format = rowsync-master-info = 1sync_binlog = 1master_info_repository=TABLErelay_log_info_repository=TABLEbinlog_checksum=NONE#relay logskip_slave_start = 1#MGRtransaction_write_set_extraction=XXHASH64 #以便在server收集写集合的同时将其记录到二进制日志。写集合基于每行的主键,并且是行更改后的唯一标识此标识将用于检测冲突。loose-group_replication_group_name="61e1dc92-c60f-11eb-983b-000c2937f917" #组的名字可以随便起,但不能用主机的GTID! 所有节点的这个组名必须保持一致!loose-group_replication_start_on_boot=off #为了避免每次启动自动引导具有相同名称的第二个组,所以设置为OFF。loose-group_replication_local_address= "192.168.112.101:6606"loose-group_replication_group_seeds= "192.168.112.101:6606,192.168.112.102:6606,192.168.112.103:6606"loose-group_replication_bootstrap_group=offloose-group_replication_single_primary_mode=off #关闭单主模式的参数(本例测试时多主模式,所以关闭该项)loose-group_replication_enforce_update_everywhere_checks=on #开启多主模式的参数loose-group_replication_ip_whitelist="192.168.112.0/24,127.0.0.1/8" # 允许加入组复制的客户机来源的ip白名单复制

3)重启mysqld服务

[root@tango-centos01 mysql]# service mysql restartShutting down MySQL.. SUCCESS! Starting MySQL.. SUCCESS!复制

4)安装MGR插件

mysql> INSTALL PLUGIN group_replication SONAME 'group_replication.so'; #安装复制组插件Query OK, 0 rows affected (0.04 sec)mysql> SHOW PLUGINS;+----------------------------+----------+--------------------+----------------------+---------+| Name | Status | Type | Library | License |+----------------------------+----------+--------------------+----------------------+---------+| group_replication | ACTIVE | GROUP REPLICATION | group_replication.so | GPL |+----------------------------+----------+--------------------+----------------------+---------+47 rows in set (0.00 sec)复制

5)MGR复制配置

mysql> SET SQL_LOG_BIN=0; #即不记录二进制日志Query OK, 0 rows affected (0.00 sec)mysql> GRANT REPLICATION SLAVE ON *.* TO repl@'%' IDENTIFIED BY '1qaz@WSX';Query OK, 0 rows affected, 1 warning (0.00 sec)mysql> FLUSH PRIVILEGES;Query OK, 0 rows affected (0.00 sec) mysql> reset master;Query OK, 0 rows affected (0.11 sec) mysql> SET SQL_LOG_BIN=1;Query OK, 0 rows affected (0.00 sec) mysql> CHANGE MASTER TO MASTER_USER='repl', MASTER_PASSWORD='1qaz@WSX' FOR CHANNEL 'group_replication_recovery';Query OK, 0 rows affected, 2 warnings (0.29 sec)mysql> SET GLOBAL group_replication_bootstrap_group=ON; #只在第一个节点执行这个步骤Query OK, 0 rows affected (0.00 sec)mysql> START GROUP_REPLICATION;ERROR 3096 (HY000): The START GROUP_REPLICATION command failed as there was an error when initializing the group communication layer.mysql> SELECT * FROM performance_schema.replication_group_members;+---------------------------+--------------------------------------+----------------+-------------+--------------+| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |+---------------------------+--------------------------------------+----------------+-------------+--------------+| group_replication_applier | e5fb2194-eb27-11ea-b838-000c2992e812 | tango-centos01 | 3306 | ONLINE |+---------------------------+--------------------------------------+----------------+-------------+--------------+1 row in set (0.00 sec)复制

要保证上面的group_replication_applier的状态为"ONLINE"。

2.2.2 其它节点centos01/02配置

1)配置centos02和centos03节点的my.cnf文件

只需要修改server_id和group_replication_local_address的配置即可。

[root@tango-centos02 mysql]# vi etc/my.cnfserver-id=102loose-group_replication_local_address= "192.168.112.102:6606"[root@tango-centos03 ~]# vi etc/my.cnfserver-id=103loose-group_replication_local_address= "192.168.112.103:6606"复制

2)配置完成后,要记得重启mysqld服务

[root@tango-centos02 mysql]# service mysql restartShutting down MySQL.. SUCCESS! Starting MySQL.. SUCCESS![root@tango-centos03 ~]# service mysql restartShutting down MySQL.. SUCCESS! Starting MySQL.. SUCCESS!复制

3)登录mysql进行相关设置操作

mysql> SET SQL_LOG_BIN=0;Query OK, 0 rows affected (0.03 sec)mysql> GRANT REPLICATION SLAVE ON *.* TO repl@'%' IDENTIFIED BY '1qaz@WSX';Query OK, 0 rows affected, 1 warning (0.00 sec)mysql> FLUSH PRIVILEGES;Query OK, 0 rows affected (0.01 sec)mysql> reset master;Query OK, 0 rows affected (0.01 sec)mysql> SET SQL_LOG_BIN=1;Query OK, 0 rows affected (0.00 sec)mysql> CHANGE MASTER TO MASTER_USER='repl', MASTER_PASSWORD='1qaz@WSX' FOR CHANNEL 'group_replication_recovery';Query OK, 0 rows affected, 2 warnings (0.03 sec) mysql> INSTALL PLUGIN group_replication SONAME 'group_replication.so';Query OK, 0 rows affected (0.04 sec) mysql> SHOW PLUGINS;+----------------------------+----------+--------------------+----------------------+---------+| Name | Status | Type | Library | License |+----------------------------+----------+--------------------+----------------------+---------+| group_replication | ACTIVE | GROUP REPLICATION | group_replication.so | GPL |+----------------------------+----------+--------------------+----------------------+---------+47 rows in set (0.00 sec) 这里只需要执行这一步即可!mysql> START GROUP_REPLICATION;Query OK, 0 rows affected (5.87 sec)复制

4)查看组内情况,发现centos02和centos03已经成功加入这个组内了。

注意:这里一定要将三个节点的/etc/hosts文件里绑定主机名,否则这里添加组的时候会一直报错:RECOVERING (必须要是ONLINE才行)

mysql> SELECT * FROM performance_schema.replication_group_members;+---------------------------+--------------------------------------+----------------+-------------+--------------+| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |+---------------------------+--------------------------------------+----------------+-------------+--------------+| group_replication_applier | be42da32-eb35-11ea-9505-000c2937f917 | tango-centos02 | 3306 | RECOVERING || group_replication_applier | de623c4b-eb35-11ea-b1e4-000c2959d3e3 | tango-centos03 | 3306 | RECOVERING || group_replication_applier | e5fb2194-eb27-11ea-b838-000c2992e812 | tango-centos01 | 3306 | ONLINE |+---------------------------+--------------------------------------+----------------+-------------+--------------+3 rows in set (0.00 sec)复制

在/etc/hosts绑定主机名后重启GROUP_REPLICATION

mysql> SELECT * FROM performance_schema.replication_group_members;+---------------------------+--------------------------------------+----------------+-------------+--------------+| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |+---------------------------+--------------------------------------+----------------+-------------+--------------+| group_replication_applier | be42da32-eb35-11ea-9505-000c2937f917 | tango-centos02 | 3306 | ONLINE || group_replication_applier | de623c4b-eb35-11ea-b1e4-000c2959d3e3 | tango-centos03 | 3306 | ONLINE || group_replication_applier | e5fb2194-eb27-11ea-b838-000c2992e812 | tango-centos01 | 3306 | ONLINE |+---------------------------+--------------------------------------+----------------+-------------+--------------+3 rows in set (0.00 sec)复制

2.2.3 组复制数据同步测试

1)在任意一个节点上执行

mysql> SELECT * FROM performance_schema.replication_group_members;+---------------------------+--------------------------------------+----------------+-------------+--------------+| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |+---------------------------+--------------------------------------+----------------+-------------+--------------+| group_replication_applier | be42da32-eb35-11ea-9505-000c2937f917 | tango-centos02 | 3306 | ONLINE || group_replication_applier | de623c4b-eb35-11ea-b1e4-000c2959d3e3 | tango-centos03 | 3306 | ONLINE || group_replication_applier | e5fb2194-eb27-11ea-b838-000c2992e812 | tango-centos01 | 3306 | ONLINE |+---------------------------+--------------------------------------+----------------+-------------+--------------+3 rows in set (0.00 sec)复制

如上,说明已经在centos01、centos02、centos03 三个节点上成功部署了基于GTID的组复制同步环境。现在在三个节点中的任意一个上面更新数据,那么其他两个节点的数据库都会将新数据同步过去。

2)在centos01节点数据库更新数据

mysql> select * from tango.tb01;+------+------+| id | name |+------+------+| 1 | bj || 2 | sz || 3 | gz || 4 | sh |+------+------+4 rows in set (0.00 sec)mysql> insert into tango.tb01 values(5,'fs');Query OK, 1 row affected (0.01 sec)复制

接着在centos02、centos03节点数据库查看,发现更新后数据已经同步过来

mysql> select * from tango.tb01;+----+------+| id | name |+----+------+| 1 | bj || 2 | sz || 3 | gz || 4 | sh || 5 | fs |+----+------+5 rows in set (0.00 sec)复制

3)在centos02节点数据库更新数据

mysql> delete from tango.tb01 where id=2;Query OK, 1 row affected (0.00 sec)复制

接着在centos01、centos03节点数据库查看,发现更新后数据已经同步过来

mysql> select * from tango.tb01;+----+------+| id | name |+----+------+| 1 | bj || 3 | gz || 4 | sh || 5 | fs |+----+------+4 rows in set (0.00 sec)复制

3)在centos03节点数据库更新数据

mysql> update tango.tb01 set name='nh' where id=5;Query OK, 1 row affected (0.01 sec)Rows matched: 1 Changed: 1 Warnings: 0复制

接着在centos01、centos02节点数据库查看,发现更新后数据已经同步过来

mysql> select * from tango.tb01;+----+------+| id | name |+----+------+| 1 | bj || 3 | gz || 4 | sh || 5 | nh |+----+------+4 rows in set (0.00 sec)复制

2.2.4 组复制故障测试

当组内的某个节点发生故障时,会自动从将该节点从组内踢出,与其他节点隔离。剩余的节点之间保持主从复制的正常同步关系。当该节点的故障恢复后,只需手动激活组复制即可(即执行"START GROUP_REPLICATION;");

场景一:单个节点发生故障

1)模拟centos01的mysql发生故障,比如关闭mysql服务(或者该节点网络故障,其他节点与之通信失败等)

[root@tango-centos01 ~]# service mysql stopShutting down MySQL............ SUCCESS! [root@tango-centos01 ~]# service mysql status ERROR! MySQL is not running复制

在剩余的两个节点中的任意一个查看

mysql> SELECT * FROM performance_schema.replication_group_members;+---------------------------+--------------------------------------+----------------+-------------+--------------+| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |+---------------------------+--------------------------------------+----------------+-------------+--------------+| group_replication_applier | be42da32-eb35-11ea-9505-000c2937f917 | tango-centos02 | 3306 | ONLINE || group_replication_applier | de623c4b-eb35-11ea-b1e4-000c2959d3e3 | tango-centos03 | 3306 | ONLINE |+---------------------------+--------------------------------------+----------------+-------------+--------------+2 rows in set (0.00 sec)复制

如上,在centos01节点的mysql发生故障后,会自动从这个组内踢出,剩余的两个节点的组复制同步关系正常。

2)在centos02节点更新数据

mysql> select * from tango.tb01;+----+------+| id | name |+----+------+| 1 | bj || 3 | gz || 4 | sh || 5 | nh |+----+------+4 rows in set (0.03 sec)mysql> insert into tango.tb01 values(6,'ah');Query OK, 1 row affected (0.18 sec)复制

在另一个节点centos03上查看,发现更新数据已同步过来

mysql> select * from tango.tb01;+----+------+| id | name |+----+------+| 1 | bj || 3 | gz || 4 | sh || 5 | nh || 6 | ah |+----+------+5 rows in set (0.00 sec)复制

3)当centos01节点的mysql服务恢复后,是什么情况

[root@tango-centos01 ~]# service mysql startStarting MySQL.. SUCCESS! [root@tango-centos01 ~]# service mysql status SUCCESS! MySQL running (3025)复制

在剩余的两个节点中的任意一个查看

mysql> SELECT * FROM performance_schema.replication_group_members;+---------------------------+--------------------------------------+----------------+-------------+--------------+| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |+---------------------------+--------------------------------------+----------------+-------------+--------------+| group_replication_applier | be42da32-eb35-11ea-9505-000c2937f917 | tango-centos02 | 3306 | ONLINE || group_replication_applier | de623c4b-eb35-11ea-b1e4-000c2959d3e3 | tango-centos03 | 3306 | ONLINE |+---------------------------+--------------------------------------+----------------+-------------+--------------+2 rows in set (0.00 sec)复制

如上发现,centos01节点恢复后,不会自动添加到组内,需要手动激活下该节点的组复制功能

[root@tango-centos01 mysql]# ./bin/mysql -uroot –ppassword...........mysql> START GROUP_REPLICATION;Query OK, 0 rows affected (2.89 sec)复制

再次查看,发现centos01节点已经重新添加到组内了

mysql> SELECT * FROM performance_schema.replication_group_members;+---------------------------+--------------------------------------+----------------+-------------+--------------+| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |+---------------------------+--------------------------------------+----------------+-------------+--------------+| group_replication_applier | be42da32-eb35-11ea-9505-000c2937f917 | tango-centos02 | 3306 | ONLINE || group_replication_applier | de623c4b-eb35-11ea-b1e4-000c2959d3e3 | tango-centos03 | 3306 | ONLINE || group_replication_applier | e5fb2194-eb27-11ea-b838-000c2992e812 | tango-centos01 | 3306 | ONLINE |+---------------------------+--------------------------------------+----------------+-------------+--------------+3 rows in set (0.00 sec)复制

centos01节点恢复后,并重新添加到组内后,其他节点更新的数据也会及时同步过来

mysql> select * from tango.tb01;+----+------+| id | name |+----+------+| 1 | bj || 3 | gz || 4 | sh || 5 | nh || 6 | ah |+----+------+5 rows in set (0.00 sec)复制

场景二:所有节点发生故障

如果三个节点都发生故障的话,在节点的故障都恢复后,需要手动重新做组复制,操作流程如下:

1)查看MGR状态

mysql> SELECT * FROM performance_schema.replication_group_members;+---------------------------+--------------------------------------+----------------+-------------+--------------+| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |+---------------------------+--------------------------------------+----------------+-------------+--------------+| group_replication_applier | de623c4b-eb35-11ea-b1e4-000c2959d3e3 | tango-centos03 | 3306 | OFFLINE |+---------------------------+--------------------------------------+----------------+-------------+--------------+1 row in set (0.06 sec)复制

启动组复制GROUP_REPLICATION失败:

mysql> START GROUP_REPLICATION;ERROR 3092 (HY000): The server is not configured properly to be an active member of the group. Please see more details on error log.复制

查看错误日志:

2021-06-06T09:13:10.661868+08:00 0 [Note] Plugin group_replication reported: 'XCom initialized and ready to accept incoming connections on port 6606'2021-06-06T09:13:10.663418+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.101:6606 on local port: 6606.'2021-06-06T09:13:10.664027+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.103:6606 on local port: 6606.'2021-06-06T09:13:10.664641+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.101:6606 on local port: 6606.'2021-06-06T09:13:10.665000+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.103:6606 on local port: 6606.'2021-06-06T09:13:10.665280+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.101:6606 on local port: 6606.'2021-06-06T09:13:10.665629+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.103:6606 on local port: 6606.'2021-06-06T09:13:10.666179+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.101:6606 on local port: 6606.'2021-06-06T09:13:10.666506+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.103:6606 on local port: 6606.'2021-06-06T09:13:10.666820+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.101:6606 on local port: 6606.'2021-06-06T09:13:10.667107+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.103:6606 on local port: 6606.'2021-06-06T09:13:10.667387+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.101:6606 on local port: 6606.'2021-06-06T09:13:10.667996+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.103:6606 on local port: 6606.'2021-06-06T09:13:10.668959+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.101:6606 on local port: 6606.'2021-06-06T09:13:10.670214+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.103:6606 on local port: 6606.'2021-06-06T09:13:10.670903+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.101:6606 on local port: 6606.'2021-06-06T09:13:10.671453+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.103:6606 on local port: 6606.'2021-06-06T09:13:10.672301+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.101:6606 on local port: 6606.'2021-06-06T09:13:10.673110+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.103:6606 on local port: 6606.'2021-06-06T09:13:10.673373+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.101:6606 on local port: 6606.'2021-06-06T09:13:10.674168+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error on opening a connection to 192.168.112.103:6606 on local port: 6606.'2021-06-06T09:13:10.674209+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] Error connecting to all peers. Member join failed. Local port: 6606'2021-06-06T09:13:10.677983+08:00 0 [Warning] Plugin group_replication reported: 'read failed'2021-06-06T09:13:10.706774+08:00 0 [ERROR] Plugin group_replication reported: '[GCS] The member was unable to join the group. Local port: 6606'复制

2)第一个节点centos01执行如下操作

mysql> reset master;mysql> SET SQL_LOG_BIN=1;mysql> CHANGE MASTER TO MASTER_USER='repl', MASTER_PASSWORD='1qaz@WSX' FOR CHANNEL 'group_replication_recovery';mysql> STOP GROUP_REPLICATION;mysql> SET GLOBAL group_replication_bootstrap_group=ON;mysql> START GROUP_REPLICATION;mysql> SET GLOBAL group_replication_bootstrap_group=OFF;mysql> SELECT * FROM performance_schema.replication_group_members;复制

3)第二个节点centos02执行如下操作

mysql> reset master;mysql> SET SQL_LOG_BIN=1;mysql> CHANGE MASTER TO MASTER_USER='repl', MASTER_PASSWORD='1qaz@WSX' FOR CHANNEL 'group_replication_recovery';mysql> START GROUP_REPLICATION;mysql> SELECT * FROM performance_schema.replication_group_members;复制

4)第三个节点centos03执行如下操作

mysql> reset master;mysql> SET SQL_LOG_BIN=1;mysql> CHANGE MASTER TO MASTER_USER='repl', MASTER_PASSWORD='1qaz@WSX' FOR CHANNEL 'group_replication_recovery';mysql> START GROUP_REPLICATION;mysql> SELECT * FROM performance_schema.replication_group_members;复制

5)查看MGR状态已经恢复

mysql> SELECT * FROM performance_schema.replication_group_members;+---------------------------+--------------------------------------+----------------+-------------+--------------+| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |+---------------------------+--------------------------------------+----------------+-------------+--------------+| group_replication_applier | be42da32-eb35-11ea-9505-000c2937f917 | tango-centos02 | 3306 | ONLINE || group_replication_applier | de623c4b-eb35-11ea-b1e4-000c2959d3e3 | tango-centos03 | 3306 | ONLINE || group_replication_applier | e5fb2194-eb27-11ea-b838-000c2992e812 | tango-centos01 | 3306 | ONLINE |

+---------------------------+--------------------------------------+----------------+-------------+--------------+

3 rows in set (0.00 sec)复制

以上完成了MySQL组复制MGR多主模式的配置和测试。

参考资料

https://www.cnblogs.com/kevingrace/p/10260685.html

https://blog.csdn.net/wzy0623/article/details/95195028

https://blog.csdn.net/wzy0623/article/details/95619837