1. What is a Service

2. Using a Service

3. The four Service types

4. What is an Ingress

5. Installing an Ingress controller

6. Using an Ingress

1. What is a Service

Service: official documentation

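We run our applications with the Deployment covered earlier. At any given moment we cannot know how healthy each Pod is, because Pods are ephemeral: a Deployment creates and destroys Pods dynamically to match its desired state.

Each Pod also has its own IP address. If Pods are created and destroyed frequently, how can other services discover this service's Pods and keep track of which IP addresses to connect to, so that they can load-balance across them?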

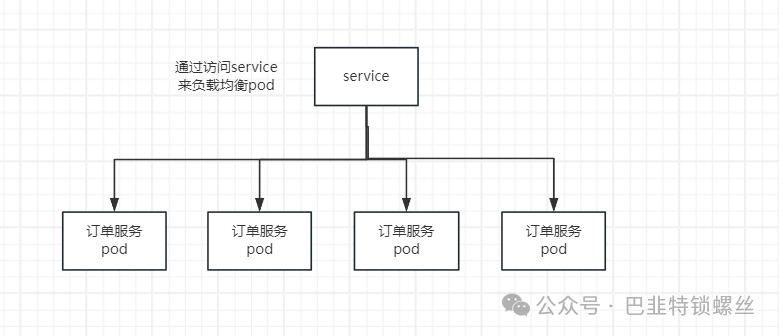

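Suppose we have an order service running with 3 replicas. We want other services to discover these 3 Pods as a single service, but because Pods are ephemeral their IPs change often. This is what a Service is for: in Kubernetes, a Service is a way to expose a set of Pods as a network service.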
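As a minimal sketch of that idea, a Service for the order service could look like the manifest below. The name order-service, the label app: order and the container port 8080 are hypothetical placeholders, not values from the original setup. The selector matches whichever Pods currently carry the label, so callers only need the stable Service name, however often the Pod IPs change.

```yaml
# Hypothetical Service for the order-service example; names, label and ports are placeholders.
apiVersion: v1
kind: Service
metadata:
  name: order-service        # stable name other services use, e.g. http://order-service
spec:
  selector:
    app: order               # matches every Pod currently labeled app: order
  ports:
    - protocol: TCP
      port: 80               # port clients use on the Service
      targetPort: 8080       # assumption: the order app listens on 8080 inside its container
```
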

2. Using a Service

A Service is usually used together with a Deployment, so we first create a Deployment.

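```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
  labels:
    app: nginx
spec:
  replicas: 3              # run three nginx Pods
  selector:
    matchLabels:
      app: nginx           # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: nginx         # the Service selects Pods by this label
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
```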

Then we use a Service to load-balance access to this set of Pods:

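```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nginx
  namespace: default
  labels:
    app: nginx
spec:
  ports:
    - name: nginx
      protocol: TCP
      port: 80          # port the Service listens on
      targetPort: 80    # containerPort on the selected Pods
  selector:
    app: nginx          # routes traffic to Pods carrying this label
  type: ClusterIP       # there are several types; they are explained in section 3
```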

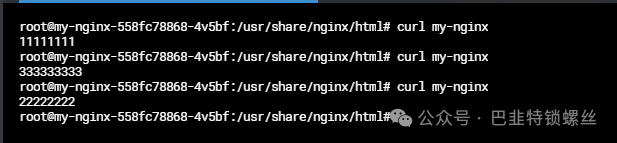

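The Service is now created. From inside a Pod in the same namespace, `curl my-nginx` reaches the nginx Pods through the Service, and requests are load-balanced across them.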
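If no suitable Pod is at hand, a throwaway Pod like the sketch below can send a single request through the Service. The Pod name curl-test is hypothetical, and curlimages/curl is just one image that ships curl. The fully qualified name assumes the default cluster domain cluster.local; inside the default namespace the short name my-nginx works as well.

```yaml
# Hypothetical one-off Pod used only to test the my-nginx Service from inside the cluster.
apiVersion: v1
kind: Pod
metadata:
  name: curl-test              # hypothetical name
  namespace: default           # same namespace as the my-nginx Service
spec:
  restartPolicy: Never         # send the request once, then stop
  containers:
    - name: curl
      image: curlimages/curl   # assumption: any image that provides curl will do
      # <service>.<namespace>.svc.<cluster domain>; assumes the default domain cluster.local
      command: ["curl", "-s", "http://my-nginx.default.svc.cluster.local"]
```

After `kubectl apply -f curl-test.yaml`, `kubectl logs curl-test` should print the HTML returned by one of the nginx Pods; remove the Pod afterwards with `kubectl delete pod curl-test`.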

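Additional note: the call chain of the whole service is now Service -> Pod -> application (for example a jar). The Deployment is not on the request path; it creates and maintains the Pods, while the Service selects those Pods directly by label.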

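3. The four Service types

Above we created the Service with type ClusterIP. The type field of a Service accepts the following values, which represent four different ways of exposing a service.

ClusterIP (default):

Exposes the service on a cluster-internal IP; with this value the service is reachable only from inside the cluster. This is the default ServiceType.

Setting clusterIP to None creates a headless Service that has no cluster IP. Pods then reach it by the Service's DNS name, and a DNS lookup of that name returns the IPs of the selected Pods directly.

```yaml
spec:
  type: ClusterIP
  clusterIP: None   # headless; alternatively a manually chosen IP, or omit the field to get one assigned automatically
```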
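A complete headless Service for the nginx Pods from section 2 might look like this sketch; the name my-nginx-headless is hypothetical. Because clusterIP is None, there is no virtual IP and no load balancing by kube-proxy: the Service name resolves to one DNS record per ready Pod, and the client chooses which address to use.

```yaml
# Hypothetical headless Service selecting the same Pods as the my-nginx Deployment.
apiVersion: v1
kind: Service
metadata:
  name: my-nginx-headless     # hypothetical name
  namespace: default
spec:
  clusterIP: None             # headless: DNS returns the Pod IPs themselves
  selector:
    app: nginx                # Pods created by the my-nginx Deployment
  ports:
    - name: nginx
      protocol: TCP
      port: 80
      targetPort: 80
```

Running `nslookup my-nginx-headless` from a Pod in the default namespace should list one address per ready nginx Pod instead of a single cluster IP.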

NodePort:

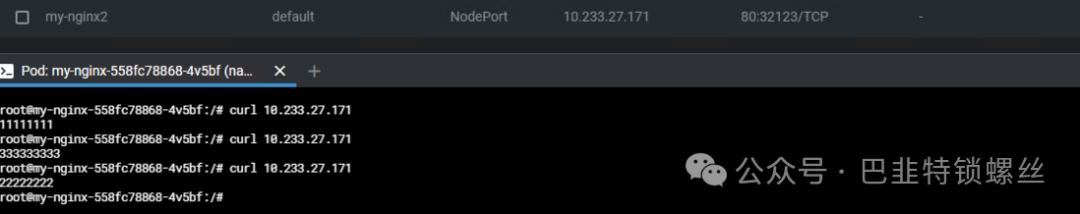

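Exposes the service on each node's IP at a static port. A NodePort Service routes to a ClusterIP that is created for it automatically, and by requesting <NodeIP>:<NodePort> we can reach a NodePort service from outside the cluster.

If the type field is set to NodePort, Kubernetes allocates a port from the range specified by kube-apiserver's --service-node-port-range flag (default: 30000-32767).

Every machine in the K8s cluster listens on this port, so a request to any node reaches the Pods behind this Service.

Use nodePort to specify a custom port: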

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nginx2
  namespace: default
  labels:
    app: nginx
spec:
  ports:
    - name: nginx
      protocol: TCP
      port: 80
      targetPort: 80
      nodePort: 32123   # must lie within the node port range (default 30000-32767)
  selector:
    app: nginx
  type: NodePort
```

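LoadBalancer: an external load-balancing device is placed in front of the cluster. This device must be provided by the external environment (typically a cloud provider); requests that external clients send to it are load-balanced and forwarded into the cluster.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-service
spec:
  type: LoadBalancer
  selector:
    app: nginx   # selects the Pods labeled app: nginx
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
```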

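ExternalName maps the Service to the domain name in the externalName field by returning a DNS CNAME record. Watch out for cross-domain issues: for HTTP and HTTPS, the client still sends the Service name as the Host header and TLS server name, which the external server may reject. It is rarely used.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nginx3
  namespace: default
  labels:
    app: nginx
spec:
  type: ExternalName
  externalName: www.baidu.com   # DNS lookups of my-nginx3 return a CNAME to this host
  # An ExternalName Service does no proxying: no selector is needed, and the
  # ports below are informational only (no port remapping takes place).
  ports:
    - name: nginx
      protocol: TCP
      port: 80
      targetPort: 80
```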

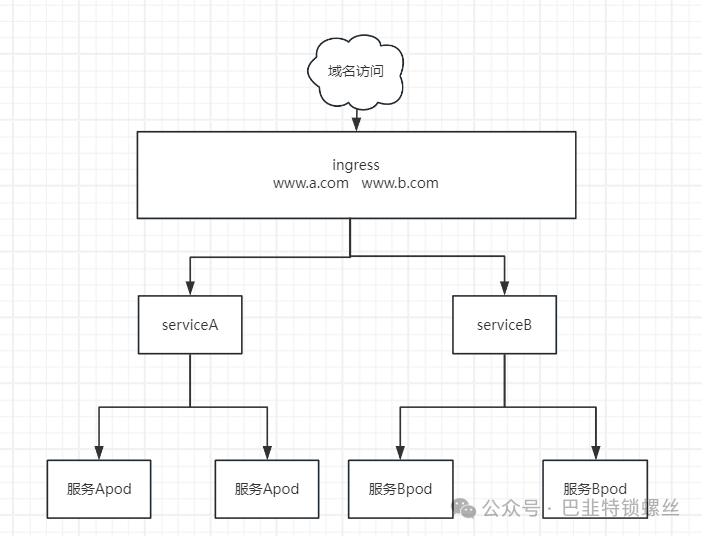

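4. What is an Ingress

What is Ingress: official documentation

We can expose services with a Service, but exposing access ports directly through Services brings the following problems:

A Service can open the cluster to external access through NodePort, but performance suffers and it is not secure: every node opens the port, and a request may take an extra forwarding hop to a Pod on another node.

There is no unified entry point where load balancing, rate limiting and similar policies can be applied.

These shortcomings are why Ingress was introduced.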

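Ingress is a Kubernetes resource object that manages HTTP and HTTPS routes from outside the cluster to services inside it. Traffic routing is controlled by the rules defined on the Ingress resource.

Through configuration, an Ingress can give Services externally reachable URLs and load-balance their traffic.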
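To make "rules" concrete, here is a hedged sketch of one Ingress that routes by host and path to two Services. Everything named in it is hypothetical: the host shop.example.com, the Services order-svc and user-svc, and the IngressClass name nginx. It is written against the networking.k8s.io/v1 API (Kubernetes 1.19 or later); the older v1beta1 form has the same structure but uses serviceName and servicePort in the backend.

```yaml
# Hypothetical fan-out Ingress: one host, two paths, two backend Services.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: shop-ingress
  namespace: default
spec:
  ingressClassName: nginx          # assumption: the installed controller uses this IngressClass
  rules:
    - host: shop.example.com       # placeholder host name
      http:
        paths:
          - path: /order           # /order and /order/... go to order-svc:80
            pathType: Prefix
            backend:
              service:
                name: order-svc
                port:
                  number: 80
          - path: /user            # /user and /user/... go to user-svc:80
            pathType: Prefix
            backend:
              service:
                name: user-svc
                port:
                  number: 80
```

A single entry point thus serves several Services, which is exactly what per-Service NodePorts cannot offer.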

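5. Installing an Ingress controller

An Ingress controller is required for Ingress resources to take effect; creating an Ingress resource on its own has no effect. Save the content below as ingress.yaml and create everything with `kubectl apply -f ingress.yaml`. Note that the DaemonSet in this manifest only schedules onto nodes labeled boge/ingress-controller-ready=true (see its nodeSelector), so first label the nodes that should run the controller, for example `kubectl label node <node-name> boge/ingress-controller-ready=true`. Because it uses hostNetwork, the controller then listens directly on ports 80 and 443 of those nodes.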

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app: ingress-nginx

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nginx-ingress-controller
  labels:
    app: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
      - namespaces
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-controller
  labels:
    app: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-controller
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-controller
    namespace: ingress-nginx

---

apiVersion: v1
kind: Service
metadata:
  labels:
    app: ingress-nginx
  name: nginx-ingress-lb
  namespace: ingress-nginx
spec:
  # DaemonSet need:
  # ----------------
  type: ClusterIP
  # ----------------
  # Deployment need:
  # ----------------
  # type: NodePort
  # ----------------
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
    - name: metrics
      port: 10254
      protocol: TCP
      targetPort: 10254
  selector:
    app: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app: ingress-nginx
data:
  keep-alive: "75"
  keep-alive-requests: "100"
  upstream-keepalive-connections: "10000"
  upstream-keepalive-requests: "100"
  upstream-keepalive-timeout: "60"
  allow-backend-server-header: "true"
  enable-underscores-in-headers: "true"
  generate-request-id: "true"
  http-redirect-code: "301"
  ignore-invalid-headers: "true"
  log-format-upstream: '{"@timestamp": "$time_iso8601","remote_addr": "$remote_addr","x-forward-for": "$proxy_add_x_forwarded_for","request_id": "$req_id","remote_user": "$remote_user","bytes_sent": $bytes_sent,"request_time": $request_time,"status": $status,"vhost": "$host","request_proto": "$server_protocol","path": "$uri","request_query": "$args","request_length": $request_length,"duration": $request_time,"method": "$request_method","http_referrer": "$http_referer","http_user_agent": "$http_user_agent","upstream-sever":"$proxy_upstream_name","proxy_alternative_upstream_name":"$proxy_alternative_upstream_name","upstream_addr":"$upstream_addr","upstream_response_length":$upstream_response_length,"upstream_response_time":$upstream_response_time,"upstream_status":$upstream_status}'
  max-worker-connections: "65536"
  worker-processes: "2"
  proxy-body-size: 20m
  proxy-connect-timeout: "10"
  proxy-next-upstream: "error timeout http_502"   # ingress-nginx ConfigMap keys use hyphens
  reuse-port: "true"
  server-tokens: "false"
  ssl-ciphers: ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:AES:CAMELLIA:DES-CBC3-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!MD5:!PSK:!aECDH:!EDH-DSS-DES-CBC3-SHA:!EDH-RSA-DES-CBC3-SHA:!KRB5-DES-CBC3-SHA
  ssl-protocols: "TLSv1 TLSv1.1 TLSv1.2"
  ssl-redirect: "false"
  worker-cpu-affinity: auto

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app: ingress-nginx

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app: ingress-nginx
  annotations:
    component.version: "v0.30.0"
    component.revision: "v1"
spec:
  # Deployment need:
  # ----------------
  # replicas: 1
  # ----------------
  selector:
    matchLabels:
      app: ingress-nginx
  template:
    metadata:
      labels:
        app: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
        scheduler.alpha.kubernetes.io/critical-pod: ""
    spec:
      # DaemonSet need:
      # ----------------
      hostNetwork: true          # nginx binds directly to ports 80/443 on the node
      # ----------------
      serviceAccountName: nginx-ingress-controller
      priorityClassName: system-node-critical
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - ingress-nginx
                topologyKey: kubernetes.io/hostname
              weight: 100
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: type
                    operator: NotIn
                    values:
                      - virtual-kubelet
      containers:
        - name: nginx-ingress-controller
          image: registry.cn-beijing.aliyuncs.com/acs/aliyun-ingress-controller:v0.30.0.2-9597b3685-aliyun
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/nginx-ingress-lb
            - --annotations-prefix=nginx.ingress.kubernetes.io
            - --enable-dynamic-certificates=true
            - --v=2
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          # resources:
          #   limits:
          #     cpu: "1"
          #     memory: 2Gi
          #   requests:
          #     cpu: "1"
          #     memory: 2Gi
          volumeMounts:
            - mountPath: /etc/localtime
              name: localtime
              readOnly: true
      volumes:
        - name: localtime
          hostPath:
            path: /etc/localtime
            type: File
      nodeSelector:
        boge/ingress-controller-ready: "true"   # only nodes carrying this label run the controller
      tolerations:
        - operator: Exists
      initContainers:
        - command:
            - /bin/sh
            - -c
            - |
              mount -o remount rw /proc/sys
              sysctl -w net.core.somaxconn=65535
              sysctl -w net.ipv4.ip_local_port_range="1024 65535"
              sysctl -w fs.file-max=1048576
              sysctl -w fs.inotify.max_user_instances=16384
              sysctl -w fs.inotify.max_user_watches=524288
              sysctl -w fs.inotify.max_queued_events=16384
          image: registry.cn-beijing.aliyuncs.com/acs/busybox:v1.29.2
          imagePullPolicy: Always
          name: init-sysctl
          securityContext:
            privileged: true
            procMount: Default

---

## Deployment need for aliyun'k8s:
#apiVersion: v1
#kind: Service
#metadata:
#  annotations:
#    service.beta.kubernetes.io/alibaba-cloud-loadbalancer-id: "lb-xxxxxxxxxxxxxxxxxxx"
#    service.beta.kubernetes.io/alibaba-cloud-loadbalancer-force-override-listeners: "true"
#  labels:
#    app: nginx-ingress-lb
#  name: nginx-ingress-lb-local
#  namespace: ingress-nginx
#spec:
#  externalTrafficPolicy: Local
#  ports:
#    - name: http
#      port: 80
#      protocol: TCP
#      targetPort: 80
#    - name: https
#      port: 443
#      protocol: TCP
#      targetPort: 443
#  selector:
#    app: ingress-nginx
#  type: LoadBalancer
```
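The manifest above creates tcp-services as an empty ConfigMap and hands it to the controller through --tcp-services-configmap. As a hedged sketch, filling it in as below would make the controller forward a raw TCP port to a Service. The Service my-redis and port 6379 are hypothetical. Each key is the port the controller opens, and each value has the form <namespace>/<service name>:<service port>. Because the DaemonSet uses hostNetwork, the port would be opened on the nodes running the controller.

```yaml
# Hypothetical tcp-services entry; assumes a Service my-redis in the default namespace on port 6379.
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app: ingress-nginx
data:
  # "<port opened by the controller>": "<namespace>/<service name>:<service port>"
  "6379": "default/my-redis:6379"
```

udp-services follows the same key/value format for UDP Services.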

6. Using an Ingress

```yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: my-nginx-ingress
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
    nginx.ingress.kubernetes.io/client-max-body-size: 10M
    nginx.ingress.kubernetes.io/proxy-body-size: 10M
    nginx.ingress.kubernetes.io/proxy-connect-timeout: '300'
    nginx.ingress.kubernetes.io/proxy-read-timeout: '300'
    nginx.ingress.kubernetes.io/proxy-send-timeout: '300'
spec:
  rules:
    - host: nginx.local        # edit the local hosts file to point nginx.local at a node running the ingress controller
      http:
        paths:
          - path: /
            backend:           # the backend Service that should respond
              serviceName: my-nginx   # name of the Service (svc) in the Kubernetes cluster
              servicePort: 80         # port of that Service
```
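The networking.k8s.io/v1beta1 Ingress API used above was removed in Kubernetes 1.22. On a newer cluster the same rule would be written against networking.k8s.io/v1, roughly as in this sketch. It assumes Kubernetes 1.19 or later and an ingress-nginx controller release that supports the v1 API (the v0.30.0 controller installed in section 5 predates it); the IngressClass name nginx is also an assumption about how that controller was installed. Only one annotation is carried over to keep the example short.

```yaml
# Sketch: the my-nginx-ingress rule rewritten for the networking.k8s.io/v1 API.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-nginx-ingress
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/proxy-body-size: 10m
spec:
  ingressClassName: nginx        # assumption: name of the controller's IngressClass
  rules:
    - host: nginx.local
      http:
        paths:
          - path: /
            pathType: Prefix     # pathType is mandatory in v1; Prefix matches every path under /
            backend:
              service:           # serviceName/servicePort became service.name/service.port
                name: my-nginx
                port:
                  number: 80
```

The main structural change is the backend block: v1 nests the Service reference under service and requires an explicit pathType on each path.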

When we access nginx.local, the full call chain is:

domain name ---> Ingress ---> Service ---> Pod ---> application

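Copyright notice: this article comes from the author's personal blog on segmentfault (苏凌峰) and follows the CC 4.0 BY-SA license; please include the original link and this notice when reposting. This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivs 2.5 China Mainland License. Original link: https://segmentfault.com/a/1190000045041280. If any infringement is involved, please get in touch and the content will be removed immediately. Special thanks to the original author for this work. All rights to this article belong to the original author and have nothing to do with this official account; for commercial reposting please contact the original author, and for non-commercial reposting please credit the source.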