Big Data System Testing Method Based on Chaos Engineering

Guo Chen, Guotao Bai, Chun Zhang and Juan Wang

China Mobile Information Technology Co., Ltd.

Beijing, China

guochen_cnmobile@163.com

Kang Ni and Zhi Chen

School of Computer, Nanjing University of Posts and Telecommunications

Nanjing, Jiangsu Province, China

tznikang@163.com, chenz@njupt.edu.cn

Abstract—Big data systems usually consist of multiple interdependent network elements and processes. As the number of network elements and the complexity of networking grow, system abnormalities become more likely, and the influence of faults on the system becomes difficult to assess. To discover possible defects in big data system products effectively and to address the problem of evaluating system stability, chaos engineering is applied to big data systems, and a big data system testing method based on chaos engineering is proposed. The proposed method, which targets fault tolerance, resilience and reliability, mainly includes the following processes: exception injection, exception recovery, system correctness verification, observable real-time data statistics, and result reporting. The experimental results show that the proposed method shortens the development cycle of a version by 20% and uncovers 21 hidden faults caused by improper handling of abnormal scenarios, which effectively improves the robustness and stability of the distributed system.

Keywords- big data system; database system; chaos engineering; chaos testing; reliability

I. INTRODUCTION

As the scale and complexity of big data systems grow [1], system architectures are becoming increasingly distributed. To guarantee the reliable operation of software systems, high-availability deployment has become a research hotspot in software architecture design [2-3]. When a single-point or cluster failure occurs within the system, its self-recovery and fault-tolerance capabilities are degraded. Although researchers have recently made progress in advanced architectures, high-quality code and thorough testing methods, distributed systems still cannot fully meet the requirements of high availability and flexibility [4-5]. Chaos engineering has emerged as a way to discover defects in distributed systems in a timely manner.

Chaos engineering [6-8] refers to continuously simulating various unknown faults that may occur in the production environment of a distributed system and testing the system's response to these faults, in order to verify that the system can operate in a turbulent environment and to improve its stability. The main difference between chaos engineering and non-chaotic methods such as fault injection is that chaos engineering [9] is a practice of generating new information, whereas fault injection is a fixed method of testing one specific case. Injecting failures, e.g., communication delays and errors, is an effective way to explore the possible undesirable behaviors of complex systems. Additionally, the exploratory testing forms of chaos engineering can generate new knowledge about the system: they not only test known properties but also verify the system's behavior as an integrated whole.

The application of chaos engineering to software systems is of value in both theoretical research and practice. Ali Basiri et al. built a platform named ChAP for running chaos experiments in Netflix's microservice architecture. It mainly focuses on two failure modes, service degradation and service failure, and can automatically generate and execute chaos experiments [10]. Reference [11] proposed the ChaosOrca framework, which performs fault injection for container systems in a microservice environment. Reference [9] proposed the ChaosMachine framework, which injects exception-throwing behavior to verify how Java services handle exceptions. In addition, several practical tools can be deployed, e.g., Chaos Monkey, ChaosBlade, and Chaos Toolkit [12-14]. Most of the existing tools target cloud-native conditions and are integrated into the Kubernetes environment [15-16]. In contrast, the tool presented here provides chaos experiment functions designed for physical machines and supports injecting exceptions directly on them. It is lightweight and simple to install and deploy, and it is tailored to the application scenarios of big data systems so that exceptions can be injected and verified against real business workloads.

Recently, China Mobile and ZTE (Zhongxing Telecom Equipment) have jointly developed a domestic big data system product. During development, unit tests with high line coverage ensure that the logic code is exercised, and numerous integration tests ensure that the system works with other components. Nevertheless, testing such a distributed software system still faces the following difficulties: 1) There are various types of network elements. The big data system includes four types of network-element nodes: management nodes, distributed transaction control nodes, computing nodes, and storage nodes; 2) The physical network is complex. In the telecommunications field, where distributed data systems are commonly used, a system is usually deployed across data centers, computer rooms, and cities, so the total number of servers ranges from dozens to hundreds or thousands; 3) There are various types of exceptions. In the field of distributed systems, common exceptions include process-level exceptions, network exceptions, hardware exceptions, third-party input exceptions, and resource load exceptions. Each exception type can be further subdivided into several or even dozens of sub-scenarios.

To address the above problems, this paper proposes a big data system testing method based on chaos engineering. The method mainly covers four aspects: the testing process, the exception injection strategy, the exception model, and test planning. Verification experiments on test data show that the proposed method not only shortens the research and development cycle of distributed software systems but also improves research and development efficiency. Meanwhile, it greatly improves the robustness, stability, and emergency fault-location capability of the distributed system.

II. BIG DATA SYSTEM TESTING METHOD BASED ON CHAOS TESTING

The big data system testing method based on chaos engineering can be divided into the following four parts:

1. Exception injection: According to established rules, one or more exceptions are continuously injected into the running distributed system in a planned or random manner.

2. Exception recovery: After exception injection, the system is expected to recover from the abnormality automatically, and repeated injection exercises this ability.

3. Correctness verification: After exception injection, evaluation criteria are used to judge whether the performance of the system meets expectations, and an interface is established between the development interface and the evaluation system. If the system fails the check, it is troubleshot.

4. Observability through real-time data statistics and result reporting: The system records detailed operation logs while running, collects relevant information, and outputs an operation report for the tester.

A. Testing Process

The basic principle of chaos testing is to start from the known and probe the unknown. Knowledge about the system falls into four states: noticed and understood, noticed but not understood, understood but not noticed, and neither noticed nor understood. Figure 1 shows the flow chart of chaos testing. After the big data system environment is deployed and online services have started normally, various exceptions are injected into the big data system according to planned rules or random policies, and the business status and system status are then checked. If the status check passes, exception injection continues and the cycle repeats. If the status check fails, exception injection stops, a running error is raised, and the fault is located.

Figure 1. The flow chart of chaos testing
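The inject-check-repeat cycle of Figure 1 can be sketched as a short loop. This is a minimal illustration rather than the tool described in this paper: the callbacks `inject` and `check_status`, the exception names, and the `max_rounds` limit are hypothetical stand-ins for the real injection interface and the business and system status checks.

```python
import random
from typing import Callable, Optional, Sequence


def run_chaos_loop(
    exceptions: Sequence[str],
    inject: Callable[[str], None],
    check_status: Callable[[], bool],
    max_rounds: int = 100,
    rng: Optional[random.Random] = None,
) -> Optional[str]:
    """Inject exceptions until a status check fails or max_rounds is reached.

    Returns the exception injected just before the first failed check,
    or None if every check passed.
    """
    rng = rng or random.Random()
    for _ in range(max_rounds):
        # Random policy; a planned order could be used here instead.
        fault = rng.choice(list(exceptions))
        inject(fault)
        if not check_status():
            # Stop injecting and hand the fault over for localization.
            return fault
    return None
```

The loop returns the last injected exception when a check fails, which gives the tester a starting point for fault location. A real status check would cover both the business status and the system status.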

B. The Strategy of Exception Injection

Chaos engineering focuses on the problem of uncertainty, but the testing process should proceed from certainty to uncertainty in order to cover as many abnormal scenarios as possible. The order of exception testing in a big data system is as follows:

1. One-dimensional exception traversal: each applicable exception type is injected into each network element in turn, so that every network element is traversed with single exceptions.

2. Two-dimensional exception traversal: combinations of one or two network elements with one or two exceptions are traversed. The combined scenarios are: two different exceptions injected into a single network element, one exception injected into two network elements, and two different exceptions injected into two network elements separately.

3. Gradual increase of the degree of chaos based on the actual situation: anomalies are investigated through continuous drills and reviews, which continuously drives the improvement of systems, tools and processes.

4. Random anomaly testing: M network elements are selected at random, and N random anomalies are injected to conduct chaos testing.
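The one-dimensional, two-dimensional, and random stages of the order above can be sketched as plan generators. This is an illustrative sketch under assumptions: a plan is represented as a tuple of (network element, exception) pairs, the function names are hypothetical, and the random stage reads "N anomalies" as N anomalies per selected element, which is one possible interpretation.

```python
import random
from itertools import combinations, permutations, product


def one_dimensional(elements, exceptions):
    """Single-exception traversal: every exception on every element."""
    return [((el, ex),) for el, ex in product(elements, exceptions)]


def two_dimensional(elements, exceptions):
    """Combined traversal of one or two elements with one or two exceptions."""
    plans = []
    # One element receives two different exceptions.
    for el in elements:
        for ex1, ex2 in combinations(exceptions, 2):
            plans.append(((el, ex1), (el, ex2)))
    for el1, el2 in combinations(elements, 2):
        # The same exception is injected into two elements.
        for ex in exceptions:
            plans.append(((el1, ex), (el2, ex)))
        # Two elements each receive a different exception.
        for ex1, ex2 in permutations(exceptions, 2):
            plans.append(((el1, ex1), (el2, ex2)))
    return plans


def random_plan(elements, exceptions, m, n, rng):
    """Random stage: pick M elements, then N random exceptions for each."""
    chosen = rng.sample(list(elements), m)
    return tuple(
        (el, ex) for el in chosen for ex in rng.choices(list(exceptions), k=n)
    )
```

With the four node types named in the introduction and three exception types, `one_dimensional` yields 12 plans and `two_dimensional` yields 66, which shows how quickly the scenario space grows and why the deterministic stages are run before the random one.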

Figure 2. Exception injection for chaos testing tools

Figure 2 illustrates the exception injection procedure of the chaos testing tool:

1. Through the chaos exception injection interface, the tool obtains information on M randomly selected modules into which exceptions will be injected.

2. M concurrent processes are started, and a rand_sleep() is set in each process.

3. In each process function, n (n ≥ 1) exception messages are generated at random, and each exception message is executed by a thread.

4. A rand_sleep() is set in each thread so that system exceptions are created at intervals.
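The four steps above can be sketched as follows. This is a simplified illustration, not the tool's implementation: worker threads stand in for the M concurrent processes so that the example stays self-contained, and `chaos_inject`, `module_worker`, `MAX_SLEEP_SECONDS`, `max_n`, and the `inject` callback are hypothetical names. Only `rand_sleep()` is taken from the description.

```python
import random
import threading
import time

MAX_SLEEP_SECONDS = 0.01  # hypothetical upper bound, kept small for a demo


def rand_sleep(rng):
    """Sleep for a random interval so that injections are staggered."""
    time.sleep(rng.uniform(0, MAX_SLEEP_SECONDS))


def module_worker(module, exceptions, max_n, inject, seed):
    """Steps 2-4 for one module: sleep, pick n exceptions, one thread each."""
    rng = random.Random(seed)
    rand_sleep(rng)                       # step 2: stagger the workers
    n = rng.randint(1, max_n)             # step 3: n >= 1 exception messages
    picks = [(rng.choice(exceptions), rng.getrandbits(32)) for _ in range(n)]

    def fire(exception, thread_seed):
        rand_sleep(random.Random(thread_seed))  # step 4: stagger the threads
        inject(module, exception)

    threads = [threading.Thread(target=fire, args=pick) for pick in picks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


def chaos_inject(modules, exceptions, m, max_n, inject, seed=None):
    """Step 1: choose M modules at random, then run one worker per module."""
    rng = random.Random(seed)
    chosen = rng.sample(list(modules), m)
    workers = [
        threading.Thread(
            target=module_worker,
            args=(mod, list(exceptions), max_n, inject, rng.getrandbits(32)),
        )
        for mod in chosen
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return chosen
```

The `inject` callback is called from several threads at once, so it must be thread-safe. Each worker and thread gets its own seeded random generator, which keeps the choice of exceptions reproducible for a given seed even though the timing is not.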

C. Exception Model

1) Exception Type

First, this subsection presents common exception types. Process-level exceptions and network exceptions can be simulated automatically in different ways. For example, process-level exceptions can be simulated with normal stop commands and with kill, kill -9, pkill -u, pkill -9 -u, gdb attach, and kill -STOP pid commands. The tc tool can simulate various types of network packet loss, reordering, delay, and packet corruption. The iptables command can simulate transient network interruptions and disconnections, and IP address conflicts and modifications can be used to simulate other network anomalies.
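As an illustration of how such commands might be assembled, the sketch below builds argument lists for kill, tc (using its netem queueing discipline), and iptables without executing them. The helper names, the device name `eth0`, the process ID, the IP address, and the parameter values are assumptions chosen for the example. Running the tc and iptables commands requires root privileges and should only be done on a test machine.

```python
from typing import List


def kill_cmd(pid: int, signal: str = "TERM") -> List[str]:
    """Process-level exception: send a signal such as TERM, KILL or STOP."""
    return ["kill", f"-{signal}", str(pid)]


def netem_cmd(dev: str, *netem_args: str) -> List[str]:
    """Network exception: attach a netem qdisc to a device."""
    return ["tc", "qdisc", "add", "dev", dev, "root", "netem", *netem_args]


def netem_clear_cmd(dev: str) -> List[str]:
    """Recovery: remove the root qdisc added by netem_cmd."""
    return ["tc", "qdisc", "del", "dev", dev, "root"]


def drop_from_cmd(source_ip: str) -> List[str]:
    """Network disconnection: drop all incoming packets from one address."""
    return ["iptables", "-A", "INPUT", "-s", source_ip, "-j", "DROP"]


def undrop_from_cmd(source_ip: str) -> List[str]:
    """Recovery: delete the rule added by drop_from_cmd."""
    return ["iptables", "-D", "INPUT", "-s", source_ip, "-j", "DROP"]


# Example scenarios (values are illustrative only).
SCENARIOS = {
    "suspend_process": kill_cmd(12345, "STOP"),
    "packet_delay": netem_cmd("eth0", "delay", "100ms"),
    "packet_loss": netem_cmd("eth0", "loss", "10%"),
    "packet_corruption": netem_cmd("eth0", "corrupt", "1%"),
    "packet_reorder": netem_cmd("eth0", "delay", "10ms", "reorder", "25%", "50%"),
    "disconnect_peer": drop_from_cmd("192.0.2.10"),
}
```

Pairing every injection command with its recovery command, as with `netem_clear_cmd` and `undrop_from_cmd`, makes it possible to restore the environment after each round. A command list can be passed to `subprocess.run` once the target machine is confirmed to be a test host.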

In addition, server-system resource exceptions, OS-level exceptions, file-system exceptions, and other exceptions are listed in Table I.

TABLE I. EXCEPTION TYPE

| Server-system resource exception | OS-level exception | File-system exception | Other exceptions |
| --- | --- | --- | --- |
| Insufficient CPU | OS restart, graceful shutdown | File-permission exceptions, e.g., read-only, write-only | Switch power-off and restart |
| IO bottleneck | OS abnormal crash | File does not exist | Network storm |
| Not enough storage | Hard-disk failure, file-system damage | File-format error, file corruption | NTP-service failure |
| Disk full | Various service exceptions: ftp, sftp | File-function call errors | DNS server broken |
| Inode exhausted | Dynamic password modification | \ | \ |

Together with the process-level and network exceptions described above, Table I covers only six typical exception types in big data system products. Different distributed products have their own characteristics, and these characteristics define the type and scope of exceptions. The test objectives for this product are clearly defined: 1) the transfer business; 2) data consistency verification.

2) Anomaly Model Mechanism

For network exceptions, OS exceptions, and various

dependency exceptions, big data system component exceptions

occur at different levels. The occurrence of all exceptions will

indirectly or directly influent the service of systems. The

ultimate goal is to observe the service's ability to recover while

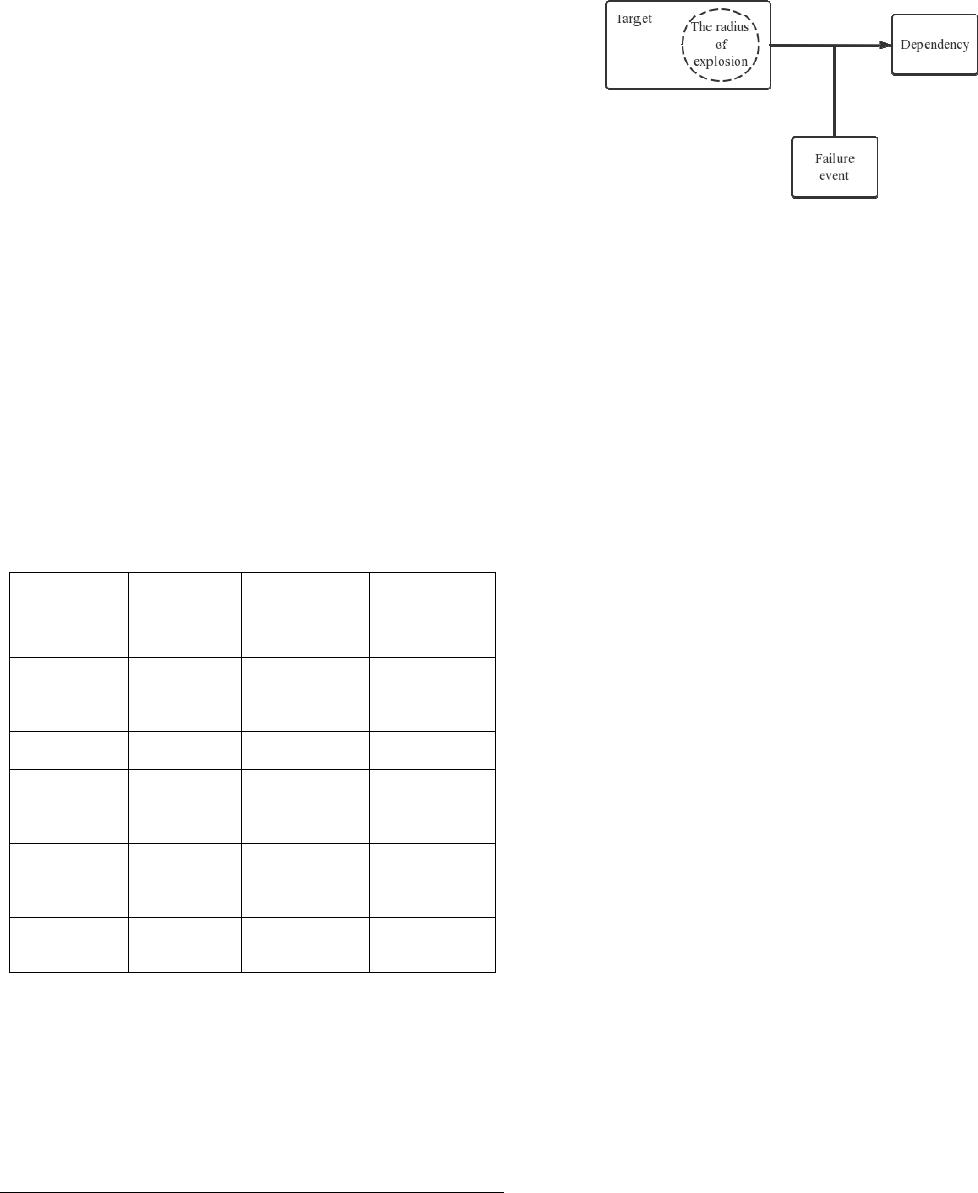

the external dependencies are abnormal. Figure 3 shows the

schematic diagram of abnormal model.

Figure 3. Schematic diagram of abnormal model

Target: Before a chaos experiment starts, it is necessary to clarify which service the anomalies will be injected into. This target service is the main object of observation.

Blast radius: In keeping with the principles of chaos engineering, the experiment should not affect the full traffic of the service, which reduces the risk of the experiment. Usually a single deployment unit, either a computer room or a cluster, is selected.

Dependency: The service is affected by the failure, but it is actually its dependencies that are abnormal. The exception may come from middleware, from a downstream service, or from the central processing unit (CPU), disk, or network.

Failure event: This describes what kind of failure has occurred in the external dependencies of the service, e.g., a downstream service returning a rejection, packet loss, or delay; or a disk write exception, busy reads, a disk that has been written full, and other unexpected situations.
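The four elements of the abnormal model map naturally onto a small record type. The sketch below is one possible representation, assuming hypothetical names (`ChaosExperiment`, `DependencyKind`) and example values that do not come from the paper.

```python
from dataclasses import dataclass
from enum import Enum


class DependencyKind(Enum):
    """Where the abnormality originates."""
    MIDDLEWARE = "middleware"
    DOWNSTREAM_SERVICE = "downstream service"
    CPU = "CPU"
    DISK = "disk"
    NETWORK = "network"


@dataclass(frozen=True)
class ChaosExperiment:
    target: str                 # service under observation
    blast_radius: str           # deployment unit the experiment is limited to
    dependency: DependencyKind  # abnormal dependency of the target
    failure_event: str          # what goes wrong in that dependency

    def describe(self) -> str:
        return (
            f"Inject '{self.failure_event}' into the {self.dependency.value} "
            f"dependency of '{self.target}', limited to {self.blast_radius}."
        )


experiment = ChaosExperiment(
    target="transfer-service",
    blast_radius="cluster-a",
    dependency=DependencyKind.DISK,
    failure_event="disk full",
)
```

Making the record immutable (`frozen=True`) keeps an experiment definition stable once it has been logged, which helps when the result report is later matched against what was injected.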

D. Testing Planning

1) Exception Traversal

The business tool, which simulates production business, continuously delivers various Structured Query Language (SQL) workloads to the big data system through the load balancer, while the exception injection tool randomly injects exceptions into the business network. During idle periods of the business, the business data is checked for consistency: if the data is consistent, the next round of cyclic testing begins; if the data is inconsistent, the test stops immediately and the cause of the inconsistency is investigated manually. Figure 4 gives the exception traversal flow chart of the chaos testing tool. The tests can be divided into three categories: function, performance, and high availability. By comparing the test indicators in the normal running state with those in the running state after fault injection, the processing ability and robustness of the database in various abnormal scenarios are verified. The test cases include an OLTP (On-Line Transaction Processing) benchmark test, concurrent transfers followed by verification of the total account balance, concurrent execution of five transaction models, and a test of the database isolation level.
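The concurrent-transfer check relies on a simple invariant: transfers move money between accounts, so the total balance must be the same before and after a test round. The sketch below illustrates the check with an in-memory stand-in for the database. The `Bank` class, the account names, and the worker counts are assumptions made for the example, and a real test would issue SQL transactions through the load balancer instead.

```python
import random
import threading


class Bank:
    """In-memory stand-in for the accounts table of the system under test."""

    def __init__(self, balances):
        self._balances = dict(balances)
        self._lock = threading.Lock()

    def transfer(self, src, dst, amount):
        """Move amount from src to dst atomically; refuse overdrafts."""
        with self._lock:
            if self._balances[src] < amount:
                return False
            self._balances[src] -= amount
            self._balances[dst] += amount
            return True

    def total(self):
        with self._lock:
            return sum(self._balances.values())


def run_transfer_round(bank, accounts, workers=8, transfers_per_worker=200, seed=0):
    """Run concurrent random transfers, then verify the total balance."""
    expected = bank.total()

    def worker(worker_seed):
        rng = random.Random(worker_seed)
        for _ in range(transfers_per_worker):
            src, dst = rng.sample(accounts, 2)
            bank.transfer(src, dst, rng.randint(1, 50))

    threads = [
        threading.Thread(target=worker, args=(seed + i,)) for i in range(workers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # Consistent only if no money was created or lost during the round.
    return bank.total() == expected
```

In the test flow described above, a `False` result corresponds to the branch where the test stops immediately and the cause of the inconsistency is investigated manually.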
