SIGMOD2022_HiEngine_ How to Architect a Cloud-Native Memory-Optimized database engine_华为云.pdf

免费下载

HiEngine: How to Architect a Cloud-Native Memory-Optimized

Database Engine

Yunus Ma, Siphrey Xie, Henry Zhong, Leon Lee, King Lv

Cloud Database Innovation Lab of Cloud BU, Huawei Research Center

hiengine@huaweicloud.com

ABSTRACT

Fast database engines have become an essential building block in

many systems and applications. Yet most of them are designed

based on on-premise solutions and do not directly work in the

cloud. Existing cloud-native database systems are mostly disk resi-

dent databases that follow a storage-centric design and exploit the

potential of modern cloud infrastructure, such as manycore pro-

cessors, large main memory and persistent memory. However, in-

memory databases are infrequent and untapped.

is paper presents HiEngine, Huawei’s cloud-native memory-

optimized in-memory database engine that endows hierarchical

database architecture and lls this gap. HiEngine simultaneously

(1) leverages the cloud infrastructure with reliable storage services

on the compute-side (in addition to the storage tier) for fast persis-

tence and reliability, (2) achieves main-memory database engines’

high performance, and (3) retains backward compatibility with ex-

isting cloud-native database systems. HiEngine is integrated with

Huawei GaussDB(for MySQL), it brings the benets of main-memory

database engines to the cloud and co-exists with disk-based en-

gines. Compared to conventional systems, HiEngine outperforms

prior storage-centric solutions by up to 7.5× and provides com-

parable performance to on-premise memory-optimized database

engines.

CCS CONCEPTS

• Information systems → DBMS engine architectures.

KEYWORDS

Cloud-Native, Memory-Optimized, ARM, Log is Database

ACM Reference Format:

Yunus Ma, Siphrey Xie, Henry Zhong, Leon Lee, King Lv. 2022. HiEngine:

How to Architect a Cloud-Native Memory-Optimized Database Engine. In

Proceedings of the 2022 International Conference on Management of Data

(SIGMOD ’22), June 12–17, 2022, Philadelphia, PA, USA. ACM, New York,

NY, USA, 14 pages. https://doi.org/10.1145/3514221.3526043

1 INTRODUCTION

Future data processing workloads and systems will be cloud-centric.

Many customers are migrating or have already switched to the

Permission to make digital or hard copies of all or part of this work for personal or

classroom use is granted without fee provided that copies are not made or distributed

for prot or commercial advantage and that copies bear this notice and the full cita-

tion on the rst page. Copyrights for components of this work owned by others than

ACM must be honored. Abstracting with credit is permied. To copy otherwise, or re-

publish, to post on servers or to redistribute to lists, requires prior specic permission

and/or a fee. Request permissions from permissions@acm.org.

SIGMOD ’22, June 12–17, 2022, Philadelphia, PA, USA

© 2022 Association for Computing Machinery.

ACM ISBN 978-1-4503-9249-5/22/06…$15.00

https://doi.org/10.1145/3514221.3526043

cloud, for beer reliability, security, and lower personnel and hard-

ware cost. To cope with this trend, a number of cloud-native data-

base systems have been developed, such as Amazon Aurora [46],

Alibaba PolarDB [2], Huawei GaussDB(for MySQL) (Taurus) [13]

and Microso Hyperscale (Socrates) [5]. ese systems are typi-

cally built on top of on-premise open-source (e.g., MySQL) or com-

mercial products (e.g., SQL Server) to allow easy migration and

adoption. By ooading much functionality to the storage layer and

heavily optimizing components such as the storage engine, they

exhibit magnitudes faster and/or cheaper than their on-premise

counterparts [46]. However, these systems are mostly disk resident

databases that follow a storage-centric design. ey have a buer

pools that in-memory databases do not, which directly aects how

to architect the engine and exploit hardware performance for in-

memory databases in cloud platform. Full disaggregated in-memory

databases can reduce the access latency but they behave expensive

storage cost [42, 50].

In particular, the availability of multicore CPUs and large main

memory has led to a plethora of memory-optimized database en-

gines for online transaction processing (OLTP) in both academia

and industry [7, 14, 15, 18, 23–25, 30, 33, 43, 45]. Contrary to con-

ventional engines that optimize for storage accesses, memory-optimized

engines adopt memory-ecient data structures and optimizations

for multi-socket multicore architectures to leverage high parallelism

in processors; I/O happens quietly in the background without in-

terrupting forward processing. is allows memory-optimized en-

gines to support up to millions of transactions per second. Many

of today’s businesses and applications depend on them, ranging

from retail, fraud detection, to telecom and scenarios that were

dominated by conventional disk-based systems. As customers that

require high OLTP performance migrate their applications to the

cloud, it becomes necessary for vendors to oer cloud-native, memory-

optimized OLTP solutions.

Existing memory-optimized database engines are mostly mono-

lithic solutions designed for on-premise environments. At a rst

glance, it may seem trivial to directly deploy an on-premise engine

in the cloud, by processing transactions using compute nodes and

persisting data and log records in some reliable storage service,

such as Amazon S3 or Azure Storage, which transparently repli-

cate data under the hood. However, modern cloud environments

exhibit a few idiosyncrasies that make this approach far from the

ideal.

First, storage services are typically hosted in separate nodes

and so have to be accessed via the network; persisting data di-

rectly there on the critical path can add intolerable latency to in-

dividual transactions. Especially, this model does not work well

in Huawei Cloud where inter-layer networking latency (between

Industrial Track Paper

SIGMOD ’22, June 12–17, 2022, Philadelphia, PA, USA

2177

SIGMOD ’22, June 12–17, 2022, Philadelphia, PA, USA Y. Ma, S. Xie, H. Zhong, L. Lee, K.Lv

compute and storage nodes) is much higher than that of intra-

layer networking (from one compute node to another, or from one

storage node to another). Although throughput can remain ele-

vated with techniques such as pipelined and group commit [22]

adopted by existing cloud-native systems [13, 46], this largely de-

feats the goal of achieving low-latency transactions, a main pur-

pose of using a memory-optimized database engine. In terms of

reliability and availability, the direct deployment approach would

again waste much space by storing redundant copies as the stor-

age service already replicates data, similar to the cases that were

made against deploying directly a traditional database system [2,

5, 13, 46] in the cloud. Finally, the directly deployment approach

is also ill-suited in today’s cloud which is becoming more hetero-

geneous as non-x86 (notably ARM

1

) processors make inroads into

data centers. Most memory-optimized database systems were de-

signed around the x86 architecture to leverages its fast inter-core

communication and total store ordering. It was unclear how well

or whether they would work in ARM-based environments. ese

challenges and issues call for a new design that retains main-memory

performance, yet fully adapts to the cloud architecture.

We present HiEngine, Huawei’s cloud-native, memory-optimized

in-memory database engine built from scratch to tackle these chal-

lenges. A key design in HiEngine is (re)introducing persistent states

to the compute layer, to enable fast persistence and low-latency

transactions directly in the compute layer without going through

the inter-layer network on the critical path. Instead, transactions

are considered commied as soon as their log records are persisted

and replicated in the compute layer. HiEngine uses a highly paral-

lel design to store log in persistent memory on the compute side.

e log is batched and ushed periodically to the storage layer in

background to achieve high availability and reliability. is is en-

abled by SRSS [13], Huawei’s unied, shared reliable storage ser-

vice that replicates data transparently with strong consistency. In

the compute layer, SRSS leverages persistent memory such as In-

tel Optane DCPMM [12] or baery-backed DRAM [1, 47] to store

and replicate the log tail in three compute nodes. e replication

is also done in the storage layer by SRSS, but in the background

without aecting transaction latency. is way, HiEngine retains

main-memory performance in the cloud, while continuing to lever-

age what reliable storage services oers in terms of reliability and

high availability.

HiEngine also uniquely optimizes for ARM-based manycore pro-

cessors, which have been making inroads to cloud data centers as a

cost-eective and energy-ecient option. e main dierentiating

feature is that these ARM processors oer even higher core counts

their popular x86 counterparts and exhibit worse NUMA eect

in multi-socket seings. HiEngine proposes a few optimizations

to improve transaction throughput for ARM-based manycore pro-

cessors, in particular timestamp allocation and memory manage-

ment. Although there has been numerous work on main-memory

database engines that leverage modern hardware, most of them

are piecewise solutions and/or focused on particular components

(e.g., concurrency control or garbage collection). In this paper, by

1

For example, both Huawei Cloud [19] and Amazon Web Services [3] have deployed

ARM processors in their cloud oerings.

building HiEngine we share our experience and highlight the im-

portant design decisions that were rarely discussed in the research

literature for practitioners to bring and integrate ideas together to

a realize a system that is production-ready in a cloud environment.

It is also desirable to maintain backward compatibility with storage-

centric engines. Traditional cloud-native systems are gaining mo-

mentum in the market, with established ecosystem based on previ-

ous on-premise oerings. For example, Aurora, GaussDB(for MySQL)

and PolarDB all oer MySQL-compatible interfaces to allow ap-

plications to migrate easily to the cloud; Microso Socrates re-

tains the familiar SQL Server T-SQL interfaces for applications. It

is unlikely that all applications and workloads would migrate to

main-memory engines: some may not even need the high perfor-

mance provided by these engines. Similar to the adoption of main-

memory engines in on-premise environments, we believe that the

new main-memory engine needs to co-exist with existing disk-

based engines in the existing ecosystem. In later sections, we de-

scribe our approach for two database engines (HiEngine and Inn-

oDB) to co-exist in GaussDB(for MySQL) [13], Huawei’s cloud-

native, MySQL-compatible database systems oering in produc-

tion.

Finally, HiEngine introduces a few new techniques in core data-

base engine design to bridge the gap between academic prototypes

and real products in the cloud. HiEngine adopts the concept of in-

direction arrays [24] that ease data access processes, but were lim-

ited by issues such as xed static array sizes. We extend this idea

by partitioned indirection arrays to support dynamically growing

and shrinking indirection arrays, while maintaining the benets

brought by them. HiEngine also adds support for other notable fea-

tures such as index persistence and partial memory support, both

of which were oen ignored by prior research prototypes.

Next, we begin in Section 2 with recent hardware trends in mod-

ern cloud infrastructure and Huawei SRSS, which is a critical build-

ing block that enables the making of HiEngine. Section 3 rst gives

an overview of HiEngine architecture and design principles. We

then describe the detailed design of HiEngine in Sections 4–5, fol-

lowed by evaluation results in Section 6. We cover related work in

Section 7 and conclude in Section 8.

2 MODERN CLOUD INFRASTRUCTURE

is section gives an overview of recent cloud infrastructure (hard-

ware adoption and architecture) trends at Huawei Cloud which

HiEngine is built around.

2.1 Hardware Trends and Challenges

Modern cloud computing infrastructure decouples compute and

storage elements to achieve high scalability: Conceptually, one set

of nodes are dedicated to compute-intensive tasks (“compute” nodes

or instances), and another set of nodes are dedicated to storage

(“storage” nodes or instances). Most servers in cloud data centers

are x86-based and soware is thus designed around the x86 archi-

tecture which features stronger but relatively fewer cores per chip

(e.g., a couple of dozens).

Represented by products such as Amazon Graviton [3], Ampere

Altra [4] and Huawei Kunpeng [19], ARM-based manycore proces-

sors are making inroads into cloud data centers for beer price

Industrial Track Paper

SIGMOD ’22, June 12–17, 2022, Philadelphia, PA, USA

2178

HiEngine: How to Architect a Cloud-Native Memory-Optimized Database Engine SIGMOD ’22, June 12–17, 2022, Philadelphia, PA, USA

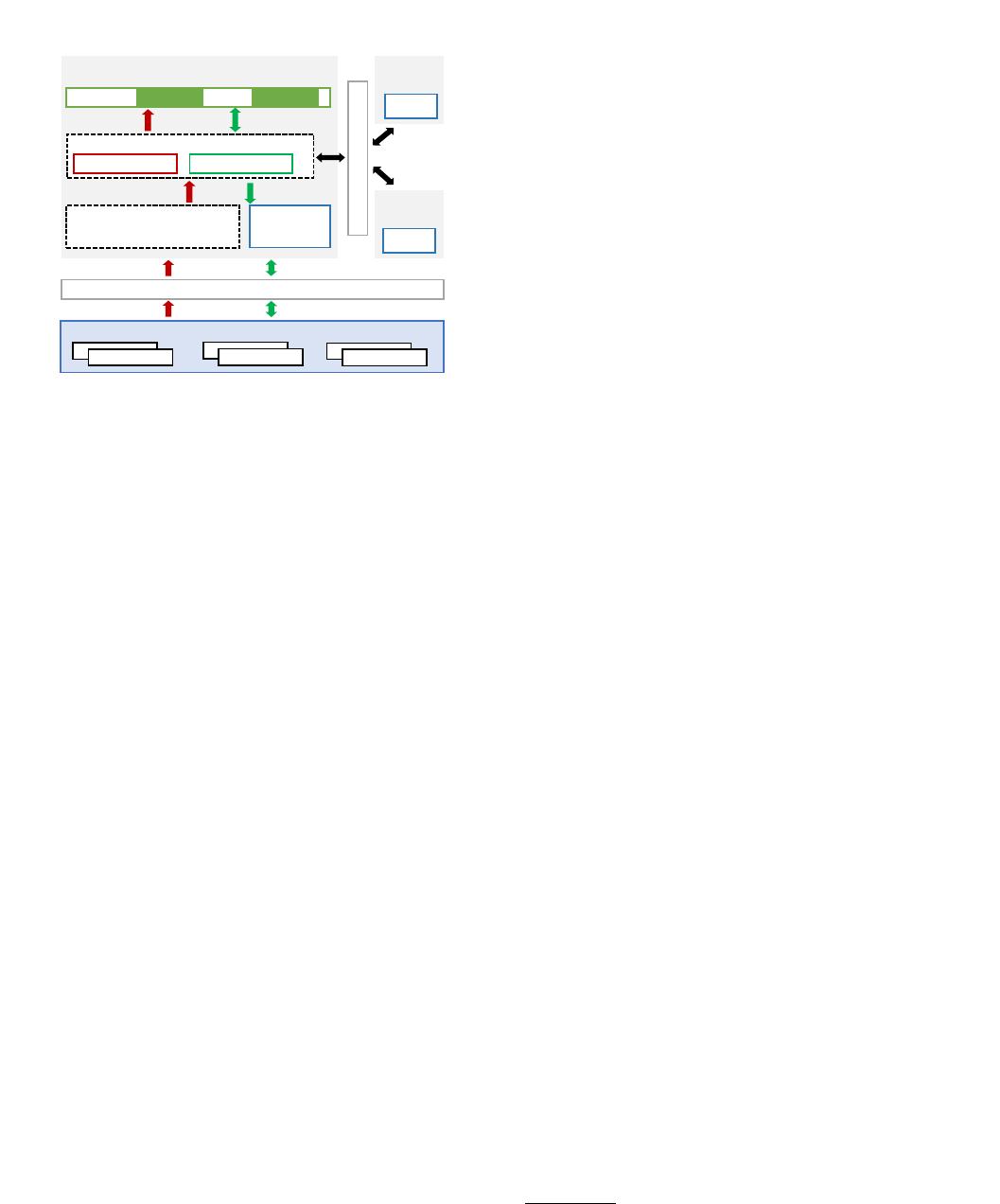

Compute Node 1

Inter-Layer (Compute-Storage) Network

Shared Reliable Storage Service (SRSS)

PMem

Local

Persistent

Memory

Application Address Space:

SRSS Client Library

PLog interfacemmap interface

SRSS Kernel Module

/dev/plogdev + page tables

mmap read

mmap(/dev/plogdev)

Append/read

PMem append

Intra-Layer RDMA Network

Compute

Node 2

Compute

Node 3

PMem

Local

PM

Local

PM

PLogs

PLogs

PLogs

Figure 1: Memory-semantic and compute-side persistent

storage support in SRSS. Data is replicated three ways in the

compute and storage layers. A specialized mmap kernel dri-

ver supports consistent read from storage layer and local or

remote persistent memory via low-latency storage network.

per performance, customizability and energy proles. Compared

to their x86 counterparts, these ARM processors typically feature

many more cores. For example, Huawei Kunpeng 920 and Ampere

Altra oer up to 64 and 80 cores. But typically these cores oer

weaker compute power and when integrated into a multi-socket

server, exhibit more severe NUMA eect. e high parallelism and

NUMA eect necessitate further optimizations and tuning of data-

base systems to extract the full potential of highly parallel ARM

processors; we describe related optimizations in HiEngine in later

sections.

In the decoupled architecture, compute nodes typically features

large main memory (enough for keeping at least the working set,

if not the whole database), but limited and ephemeral local stor-

age that will not get persisted across instance instantiations. Stor-

age nodes are the only permanent home of data and focus more

on providing high capacity and high bandwidth. Data is typically

replicated multiple times under the hood by the reliable storage ser-

vice running in the storage layer for high reliability and availability

guarantees. Applications running on the compute nodes must go

through the network to reach the storage tier to access data. Reduc-

ing such network roundtrips has been a major focus in prior cloud-

native systems, as it oen dominates the total runtime [2, 5, 13, 46].

Typically, storage and compute nodes are maintained in sepa-

rate pods, incurring higher cross-layer network communication

cost between the compute and storage layers. Although fast net-

works (e.g., 40-200Gbps Ethernet and Inniband) with remote di-

rect memory access (RDMA) capabilities are becoming a norm in

data centers for communications within and across compute/stor-

age layers (i.e., intra-layer communication), there is still a notice-

able gap between the compute and storage layers. is poses non-

trivial challenges for reducing end-to-end commit latency, espe-

cially for main-memory database systems. For example, Huawei

Cloud already leverages RDMA within its storage service to pro-

vide fast and strong consistency guarantees, but the inter-layer

network latency (between the compute and storage layers) is about

3–5× longer than intra-layer latency on the compute side.

Meanwhile, persistent memory products such as Intel Optane

DCPMM [12] and ash-backed NVDIMMs [1, 47] are blurring the

boundary between memory and storage with byte-addressability

and persistence on the memory bus. ey present both opportuni-

ties and challenges to the decoupled architecture. On the one hand,

the application would require byte-addressability to realize the full

potential of persistent memory, making it desirable to equip persis-

tent memory on compute nodes, instead of hiding them behind the

networked I/O interface in the storage tier. Main-memory OLTP

engines can potentially benet from this setup to achieve high

throughput and reduce transaction commit latency in the cloud.

On the other hand, deploying persistent memory on the compute

side naturally goes against the stateless nature of compute nodes,

make them another permanent home of data. We describe in the

next section how SRSS, a core component of Huawei Cloud that

bridges this gap and provides the necessary persistence primitives

for HiEngine in the cloud.

2.2 SRSS: Huawei’s Reliable Storage Service

Now we introduce the shared reliable storage service that is cur-

rently in production in Huawei Cloud. Detailed discussions of them

are out of the scope of this paper; we focus on the aspects that

would impact how we architect HiEngine.

SRSS is Huawei’s next-generation distributed storage service

built on top of modern SSDs using RDMA over fast data center

networks to oer replication, strong consistency guarantees and

high performance across availability zones and data centers. It is

designed as a log-structured, append-only store that replicates data

3-ways under the hood. e basic unit of data storage is a persis-

tent log (PLog), which is a contiguous, xed-size chunk (64MB or

larger) in SSD. SRSS provides applications typical interfaces that

one can nd in log-structured storage: create/open/close PLog, ap-

pend and read; in-place update is disallowed by its nature [13]. Ap-

plications communicate through the SRSS client, which is a thin

layer deployed on compute nodes to provide the aforementioned

interfaces, and communicate with the storage backend. SRSS uses

a pool of SSDs and storage nodes to provide reliability with strong

consistency. Upon receiving write (append) requests, the storage

backend synchronously replicates data to three storage nodes in

parallel, and returns to client signaling success only when data

is persisted across all three nodes. In case of failures during the

(replicated) write operation, SRSS “seals” (i.e., permanently closes)

a PLog which would disallow any further writes to it; the applica-

tion then needs to retry its write request while the storage backend

switches to use another node. Read can be served by any replica,

as determined by SRSS’s routing layer and partitioning scheme.

2.3 Compute-Side Persistence in SRSS

With persistent memory (PM)

2

and low-latency RDMA network,

SRSS extends persistence to the compute layer with the aforemen-

tioned PLog abstraction to provide persistence, high availability

and strong consistency in the compute layer. In addition to the

open, close, append and read interfaces, on the compute side,

SRSS uniquely provides memory semantics via memory map (mmap) [21]

2

Not limited by particular PM technologies, which can be products such as Intel Op-

tane DCPMM or NVDIMMs based on DRAM and ash memory with supercapacitors.

Industrial Track Paper

SIGMOD ’22, June 12–17, 2022, Philadelphia, PA, USA

2179

of 14

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

最新上传

下载排行榜

1

2

centos7下oracle11.2.0.4 rac安装详细图文(虚拟机模拟多路径).docx

3

白鳝-DBAIOPS:国产化替换浪潮进行时,信创数据库该如何选型?.pdf

4

PostgreSQL 缓存命中率低?可以这么做.doc

5

达梦数据2024年年度报告.pdf

6

李飞-AI 引领的企业级智能分析架构演进与行业实践.pdf

7

刘杰-江苏广电:从Oracle+Hadoop到TiDB,数据中台、实时数仓运维0负担.pdf

8

刘晓国-基于 Elasticsearch 创建企业 AI 搜索应用实践.pdf

9

晋鑫宇_AI Agent 赋能社交媒体-构建未来社交生态的核心驱动力.pdf

10

Sunny duan-大模型安全挑战与实践:构建 AI 时代的安全防线.pdf

相关文档

评论