Spitfire: A Three-Tier Buffer Manager for Volatile and Non-Volatile Memory (Preview Version)

Xinjing Zhou

mortyzhou@tencent.com

Tencent Inc.

Joy Arulraj

arulraj@gatech.edu

Georgia Institute of Technology

Andrew Pavlo

pavlo@cs.cmu.edu

Carnegie Mellon University

David Cohen

david.e.cohen@intel.com

Intel

Abstract

The design of the buffer manager in database management systems (DBMSs) is influenced by the performance characteristics of volatile memory (i.e., DRAM) and non-volatile storage (e.g., SSD). The key design assumptions have been that the data must be migrated to DRAM for the DBMS to operate on it and that storage is orders of magnitude slower than DRAM. But the arrival of new non-volatile memory (NVM) technologies that are nearly as fast as DRAM invalidates these previous assumptions.

Researchers have recently designed Hymem, a novel buffer manager for a three-tier storage hierarchy comprising DRAM, NVM, and SSD. Hymem supports cache-line-grained loading and an NVM-aware data migration policy. While these optimizations improve its throughput, Hymem suffers from two limitations. First, it is a single-threaded buffer manager. Second, it is evaluated on an NVM emulation platform. These limitations constrain the utility of the insights obtained using Hymem.

In this paper, we present Spitfire, a multi-threaded, three-tier buffer manager that is evaluated on Optane Persistent Memory Modules, an NVM technology that is now being shipped by Intel. We introduce a general framework for reasoning about data migration in a multi-tier storage hierarchy. We illustrate the limitations of the optimizations used in Hymem on Optane and then discuss how Spitfire circumvents them. We demonstrate that the data migration policy has to be tailored based on the characteristics of the devices and the workload. Given this, we present a machine learning technique for automatically adapting the policy for an arbitrary workload and storage hierarchy. Our experiments show that Spitfire works well across different workloads and storage hierarchies. We present a set of guidelines for picking the storage hierarchy and migration policy based on the workload.

1 Introduction

The techniques for buffer management in a canonical DRAM-SSD hierarchy are predicated on two assumptions: (1) the data must be copied from SSD to DRAM for the DBMS to operate on it, and (2) storage is orders of magnitude slower than DRAM [4, 16].

But emerging non-volatile memory (NVM) technologies upend these design assumptions. They support low-latency reads and writes similar to DRAM, but with persistent writes and large storage capacity like an SSD. In a DRAM-SSD hierarchy, the buffer manager decides what pages to move between disk and memory and when to move them. However, with a DRAM-NVM-SSD hierarchy, in addition to these decisions, it must also decide where to move them (i.e., which storage tier).

Prior Work. Recently, researchers have designed Hymem, a novel three-tier buffer manager for a DRAM-NVM-SSD hierarchy [37]. Hymem employs a set of optimizations tailored for NVM. It adopts a data migration policy consisting of four decisions: DRAM admission, DRAM eviction, NVM admission, and NVM eviction.

❶ Initially, a newly-allocated 16 KB page resides on SSD. When a transaction requests that page, Hymem eagerly admits the entire page to DRAM.

❷ DRAM eviction is the next decision that it takes to reclaim space. It uses the CLOCK algorithm for picking the victim page [34].

❸ Next, it must decide whether that page should be admitted to the NVM buffer (if it is not already present there). Hymem seeks to identify warm pages, so it admits pages that were recently denied admission. To make this decision, it maintains a queue of recently considered pages. Each time a page is considered for admission, Hymem checks whether the page is in the admission queue. If so, it is removed from the queue and admitted into the NVM buffer. Otherwise, it is added to the queue and moved directly to SSD, thereby bypassing the NVM buffer.

❹ Lastly, it uses the CLOCK algorithm for evicting a page from the NVM buffer.
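The NVM admission step (❸) can be sketched as a small bounded queue of recently denied pages. This is a minimal C++ sketch under stated assumptions, not Hymem's code: the class name `NvmAdmissionQueue`, the `PageId` type, and the fixed `capacity` after which the oldest denied page is forgotten are all hypothetical, since the text does not say how large the queue is or how entries age out.

```cpp
#include <cstddef>
#include <cstdint>
#include <iterator>
#include <list>
#include <unordered_map>

using PageId = std::uint64_t;  // hypothetical page identifier type

// Hypothetical sketch of Hymem-style NVM admission (decision 3):
// a page leaving DRAM is admitted to NVM only if it was recently denied.
class NvmAdmissionQueue {
 public:
  // capacity: assumed bound on how many denied pages are remembered.
  explicit NvmAdmissionQueue(std::size_t capacity) : capacity_(capacity) {}

  // Returns true if the page should be admitted to the NVM buffer, or
  // false if it should bypass NVM and be written directly to SSD.
  bool ShouldAdmit(PageId pid) {
    auto it = index_.find(pid);
    if (it != index_.end()) {
      // Page was recently denied: remove it from the queue and admit it.
      fifo_.erase(it->second);
      index_.erase(it);
      return true;
    }
    // First consideration: remember the page and deny admission.
    fifo_.push_back(pid);
    index_[pid] = std::prev(fifo_.end());
    if (fifo_.size() > capacity_) {
      // Forget the oldest denied page to keep the queue bounded.
      index_.erase(fifo_.front());
      fifo_.pop_front();
    }
    return false;
  }

  std::size_t size() const { return fifo_.size(); }

 private:
  std::size_t capacity_;
  std::list<PageId> fifo_;  // oldest denied page at the front
  std::unordered_map<PageId, std::list<PageId>::iterator> index_;
};
```

A page therefore reaches NVM only when it is considered for admission a second time while it is still in the queue, which filters out pages that are touched once and never again.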

Hymem supports cache-line-grained loading to improve the utilization of NVM bandwidth. Unlike SSD, NVM supports low-latency access to 256 B blocks. Hymem uses cache-line-grained loading to extract only the hot data objects from an otherwise cold 16 KB page. By loading only the cache lines that are needed, Hymem lowers its bandwidth consumption.
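The savings can be quantified with the sizes used in this paper (16 KB pages, 64 B cache lines, and the 256 B NVM media access granularity from Table 1). The choice of three accessed cache lines is a hypothetical example, not a measurement.

```latex
\begin{aligned}
\text{cache lines per page} &= \frac{16\,\text{KB}}{64\,\text{B}} = \frac{16384}{64} = 256 \\
\text{bytes requested for } k = 3 \text{ lines} &= 3 \times 64\,\text{B} = 192\,\text{B} \approx 1.2\% \text{ of } 16384\,\text{B} \\
\text{NVM media traffic (at most 3 blocks)} &\le 3 \times 256\,\text{B} = 768\,\text{B} \approx 4.7\% \text{ of } 16384\,\text{B}
\end{aligned}
```

Even when each requested line falls in a different 256 B media block, the device-level traffic stays well below that of loading the full page.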

Hymem supports a mini page layout for reducing the DRAM footprint of cache-line-grained pages. This layout allows it to efficiently keep track of which cache lines are loaded. When the mini page overflows (i.e., all sixteen of its slots are occupied and another cache line must be loaded), Hymem transparently promotes it to a full page.

Limitations. These optimizations enable Hymem to work well across different workloads on a DRAM-NVM-SSD storage hierarchy. However, it suffers from two limitations. First, it is a single-threaded buffer manager. Second, it is evaluated on an NVM emulation platform. These limitations constrain the utility of the insights obtained using Hymem (§6.5). In particular, the data migration policy employed in Hymem is not optimal for certain workloads. We show that the cache-line-grained loading and mini-page optimizations must be tailored for a real NVM device. We also illustrate that the choice of the data migration policy is significantly more important than these auxiliary optimizations.


                                     DRAM       NVM         SSD
Latency
  Idle Sequential Read Latency       75 ns      170 ns      10 µs
  Idle Random Read Latency           80 ns      320 ns      12 µs
Bandwidth (6 DRAM and 6 NVM modules)
  Sequential Read                    180 GB/s   91.2 GB/s   2.6 GB/s
  Random Read                        180 GB/s   28.8 GB/s   2.4 GB/s
  Sequential Write                   180 GB/s   27.6 GB/s   2.4 GB/s
  Random Write                       180 GB/s   6 GB/s      2.3 GB/s
Other Key Attributes
  Price ($/GB)                       10         4.5         2.8
  Addressability                     Byte       Byte        Block
  Media Access Granularity           64 B       256 B       16 KB
  Persistent                         No         Yes         Yes
  Endurance (cycles)                 10^16      10^10       10^12

Table 1: Device Characteristics – Comparison of key characteristics of DRAM, NVM (Optane DC PMMs), and SSD (Optane DC P4800X).

Our Approach. In this paper, we present Spitfire, a multi-threaded, three-tier buffer manager that is evaluated on Optane Data Center (DC) Persistent Memory Modules (PMMs), an NVM technology that is being shipped by Intel [7]. As summarized in Table 1, Optane DC PMMs bridge the performance and cost differentials between DRAM and an enterprise-grade SSD [26, 32]. Unlike SSD, they deliver higher bandwidth and lower latency to CPUs. Unlike DRAM, they support persistent writes and large storage capacity.
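The ratios below are computed only from the values in Table 1. They show where NVM sits between its neighbors: about 4× DRAM's random read latency and about half its sequential read bandwidth, yet more than an order of magnitude faster than SSD on both metrics, with a price per GB that falls between the two.

```latex
\begin{aligned}
\text{random read latency:} &\quad \frac{320\,\text{ns}}{80\,\text{ns}} = 4 \ (\text{NVM vs. DRAM}), \qquad \frac{12\,\mu\text{s}}{320\,\text{ns}} = 37.5 \ (\text{SSD vs. NVM}) \\
\text{sequential read bandwidth:} &\quad \frac{180}{91.2} \approx 2.0 \ (\text{DRAM vs. NVM}), \qquad \frac{91.2}{2.6} \approx 35 \ (\text{NVM vs. SSD}) \\
\text{price per GB:} &\quad \frac{10}{4.5} \approx 2.2 \ (\text{DRAM vs. NVM}), \qquad \frac{4.5}{2.8} \approx 1.6 \ (\text{NVM vs. SSD})
\end{aligned}
```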

We begin by introducing a taxonomy for data migration policies that subsumes the specific policies employed in previous three-tier buffer managers [22, 37]. Since the CPU is capable of directly operating on NVM-resident data, Spitfire does not eagerly move data from NVM to DRAM. We show that lazy data migration from NVM to DRAM ensures that only hot data is promoted to DRAM.

An optimal migration policy maximizes the utility of moving data between different devices in the storage hierarchy. We empirically demonstrate that the policy must be tailored based on the characteristics of the devices and the workload. Given this, we present a machine learning technique for automatically adapting the policy for an arbitrary workload and storage hierarchy. This adaptive data migration scheme eliminates the need for manual tuning of the policy.

Contributions. We make the following contributions:

• We introduce a taxonomy for reasoning about data migration in a multi-tier storage hierarchy (§3).
• We introduce an adaptation mechanism that converges to a near-optimal policy for an arbitrary workload and storage hierarchy without requiring any manual tuning (§4).
• We implement our techniques in Spitfire, a multi-threaded, three-tier buffer manager (§5).
• We evaluate Spitfire on Optane DC PMMs and demonstrate that it works well across diverse workloads and storage hierarchies (§6).
• We present a set of guidelines for choosing the storage hierarchy and migration policy based on the workload (§6.6, §6.7).
• We demonstrate that an NVM-SSD hierarchy works well on write-intensive workloads (§6.6).

2

NVM

SSD

Database Write-Ahead Log

Buffer Pool

DRAM

Buffer Pool

5

87

61

10

9

Cache-line-grained

page (NVM-backed)

Entire page

(SSD-backed)

2

9

3 4

DRAM Admission

DRAM Eviction 10

NVM Admission

5

CPU Cache

2 9

NVM Eviction

6

CPU Cache

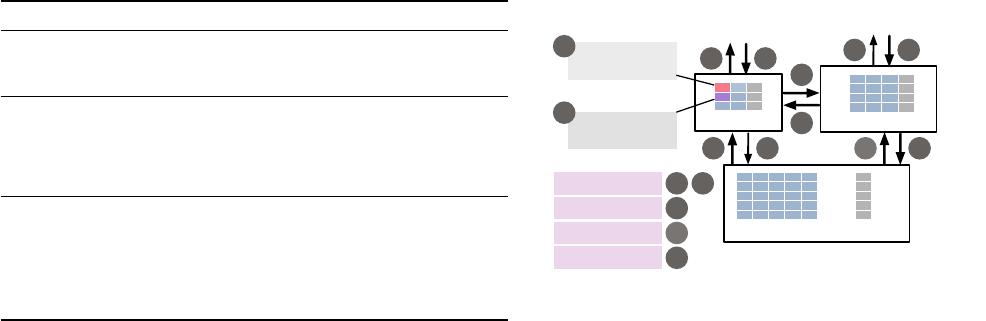

Figure 1: Data Migration Policy in Hymem

– The four critical decisions

in the data migration policy adopted by Hymem.

2 Background

We first provide an overview of the NVM-aware optimizations adopted by Hymem in §2.1. We then discuss how Spitfire makes use of Optane DC PMMs in §2.2.

2.1 NVM-aware Optimizations in Hymem

The traditional approaches for buffer management in DBMSs are ill-suited to byte-addressable NVM. The reasons for this are twofold. First, to process SSD-resident data, the buffer manager must copy it to DRAM before the DBMS can perform any operations. In contrast, the CPU can directly operate on NVM-resident data (256 B blocks) [7]. Second, NVM bridges the performance gap between DRAM and SSD. Given these observations, researchers have designed Hymem, a novel buffer manager for a DRAM-NVM-SSD hierarchy [37]. The key NVM-centric optimizations in Hymem include:

Data Migration Policy. Figure 1 presents the data flow paths in the multi-tier storage hierarchy. NVM introduces new data flow paths in the storage hierarchy (❶, ❷, ❺, ❻, ❼, ❽). By leveraging these additional paths, Hymem reduces data movement between different tiers and minimizes the number of writes to NVM. The former improves the DBMS's performance, while the latter extends the lifetime of NVM devices with limited write endurance.

The default read path comprises three steps: moving data from SSD to NVM (❶), then to DRAM (❷), and lastly to the CPU cache (❸). Similarly, the default write path consists of three steps: moving data from the CPU cache to DRAM (❹), then to NVM (❺), and finally to SSD (❻).

As we discussed in §1, the four critical decisions in the data

migration policy adopted by Hymem include: DRAM admission,

DRAM eviction, NVM admission, and NVM eviction. When a trans-

action requests a page, Hymem checks if the page is in the DRAM

buer. If it is not present in the DRAM buer, it checks if the page

is present in the NVM buer or not. In the former case, it directly

accesses the page residing on NVM (

❷

). In the latter case, it eagerly

admits the entire page residing on SSD into the DRAM buer (

❾

). It

does not leverage the path from SSD to NVM (

❶

). DRAM eviction

is the second decision that Hymem takes to reclaim space. It must

decide whether that page must be admitted to the NVM buer. Each

time a page is considered for admission, Hymem checks whether

the page is in the admission queue. If so, it is removed from the

Spitfire: A Three-Tier Buer Manager for Volatile and Non-Volatile Memory(Preview Version)

!"#$%&'()%*+

!"#$%&'()%*,

!"#$%&'()%*-

!"#$%&'()%*.

!"#$%&'()%*.//

01*-)231* 41*-5641*,

4(0781*--+-9:-

.

0%;(4%)71*+-+-9:+

.

!"#$%&'()%*-

<54"7%4*!"#$%&'()%*.

=">%*!"5"#(781*./?*#"#$%*@()%;

=">%*A%"4%01*.*#"#$%*@()%;

!"#$%&'()%*.//

NVM

DRAM

(a) Cache-line-grained Page

Cache-Line 1

Cache-Line 3

Cache-Line 0

Cache-Line 2

Cache-Line 255

NVM

slots: [0, 2, 255]nvm: pId: 3

full:

dirty: 0100AB0

2

count: 3

Updated Cache-Line 2

Cache-Line 0

Cache-Line 255

Page Capacity: 16 cache lines

Page Header: 1 cache line

DRAM

(b) Mini Page

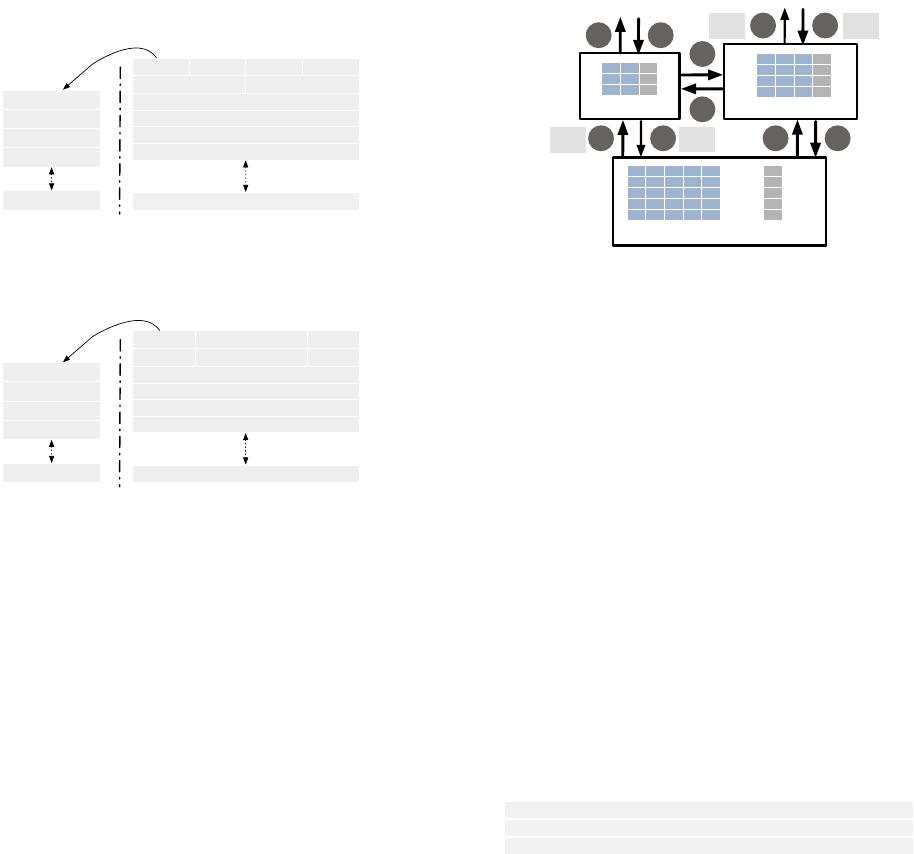

Figure 2: Page Layouts in Hymem–

The page layouts that Hymem uses

to lower the NVM bandwidth consumption and the DRAM footprint, re-

spectively [37].

queue and admitted into the NVM buer (

❺

). Otherwise, the page’s

identier is added to the queue, and it is directly moved from DRAM

to SSD (

❿

). Lastly, it evicts pages from the NVM buer when it

needs to reclaim space (❻).
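The lookup order described above can be sketched as follows. This is a deliberately simplified model, assumed for illustration only: each buffer is a plain set of page identifiers, DRAM capacity and eviction are ignored, and the names `HymemBuffers`, `Fix`, and `Access` are hypothetical.

```cpp
#include <cstdint>
#include <unordered_set>

using PageId = std::uint64_t;  // hypothetical page identifier type

// Where a requested page was served from in this simplified model.
enum class Access { kDramHit, kNvmDirect, kSsdToDram };

// Hypothetical model of Hymem's read path: each buffer is just the set of
// resident page ids (capacities and evictions are ignored).
struct HymemBuffers {
  std::unordered_set<PageId> dram;
  std::unordered_set<PageId> nvm;

  Access Fix(PageId pid) {
    if (dram.count(pid) != 0) return Access::kDramHit;   // already in DRAM
    if (nvm.count(pid) != 0) return Access::kNvmDirect;  // use NVM copy (path 2)
    // Not buffered anywhere: eagerly load the entire page from SSD into
    // DRAM (path 9); the SSD-to-NVM path (1) is never used on reads.
    dram.insert(pid);
    return Access::kSsdToDram;
  }
};
```

The key property the model captures is that an SSD-resident page never lands in NVM on a read; it can only reach NVM later, through the admission decision made when it leaves DRAM.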

Cache-line-grained Loading. Hymem supports cache-line-grained loading to extract only the hot data objects from an otherwise cold 16 KB page residing on NVM (❷). This lowers the NVM bandwidth consumption by loading only the 64 B cache lines that are needed. As shown in Figure 2a, to support cache-line-grained pages, Hymem maintains two bitmasks (labeled resident and dirty) to keep track of the cache lines that are already loaded and those that are dirtied. The r and d bits indicate whether the entire page is resident and dirty, respectively. In this example, the first, third, and last cache lines are loaded, as indicated by the corresponding set bits in resident. To support on-demand loading of cache lines, each cache-line-grained page in DRAM contains a pointer to the underlying NVM page. The header fits within two cache lines.
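A minimal sketch of a cache-line-grained page, assuming 16 KB pages and 64 B lines (256 lines per page) as stated above. The struct name, its methods, and the use of `std::bitset` for the resident and dirty masks are assumptions for illustration; Hymem's actual header packs this state into two cache lines, which this sketch does not attempt.

```cpp
#include <bitset>
#include <cstddef>
#include <cstring>

constexpr std::size_t kPageSize = 16 * 1024;                  // 16 KB page
constexpr std::size_t kLineSize = 64;                         // 64 B cache line
constexpr std::size_t kLinesPerPage = kPageSize / kLineSize;  // 256 lines

// Hypothetical DRAM copy of an NVM page that is filled one cache line at a time.
struct CacheLineGrainedPage {
  std::bitset<kLinesPerPage> resident;  // lines already copied from NVM
  std::bitset<kLinesPerPage> dirty;     // lines modified in DRAM
  const unsigned char* nvm_page;        // pointer to the backing NVM page
  unsigned char data[kPageSize];        // DRAM frame (only resident lines valid)

  explicit CacheLineGrainedPage(const unsigned char* nvm) : nvm_page(nvm) {}

  // Makes bytes [offset, offset + len) available in DRAM by copying only the
  // missing lines. Assumes len > 0 and offset + len <= kPageSize.
  // Returns the number of lines fetched from NVM.
  std::size_t Load(std::size_t offset, std::size_t len) {
    std::size_t fetched = 0;
    const std::size_t last = (offset + len - 1) / kLineSize;
    for (std::size_t line = offset / kLineSize; line <= last; ++line) {
      if (!resident.test(line)) {
        std::memcpy(data + line * kLineSize, nvm_page + line * kLineSize, kLineSize);
        resident.set(line);
        ++fetched;
      }
    }
    return fetched;
  }

  // Writes into the DRAM copy and marks the touched lines dirty, so that only
  // those lines need to be written back to NVM on eviction.
  void Write(std::size_t offset, const void* src, std::size_t len) {
    Load(offset, len);
    std::memcpy(data + offset, src, len);
    const std::size_t last = (offset + len - 1) / kLineSize;
    for (std::size_t line = offset / kLineSize; line <= last; ++line) dirty.set(line);
  }

  // Analogous to the "r" bit: true once every line has been loaded.
  bool FullyResident() const { return resident.all(); }
};
```

Only missing lines are copied, so repeated accesses to a hot object inside an otherwise cold page cost one 64 B copy per line rather than a full 16 KB page load.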

[Figure 3 diagram: CPU cache, DRAM buffer pool, NVM buffer pool, and SSD (database and write-ahead log), connected by numbered data flow paths; the paths are annotated with the labels D_r, D_w, N_r, and N_w.]

Figure 3: Data Flow Paths – The different data flow paths in a multi-tier storage hierarchy consisting of DRAM, NVM, and SSD.

Mini Page. Hymem supports a mini page layout for reducing the DRAM footprint of cache-line-grained pages. This layout allows it to efficiently keep track of the loaded cache lines. A mini page stores up to sixteen cache lines. As shown in Figure 2b, it contains a slots array for directing accesses. Each slot stores the logical identifier of the cache line residing in it. For instance, cache line 255 of the underlying NVM page is loaded into slot 2 (i.e., the third slot) of the DRAM-resident mini page. Hymem uses the count field to keep track of the number of occupied slots (i.e., loaded cache lines). When the mini page overflows, Hymem transparently promotes it to a full page. The dirty bitmask determines the cache lines that must be written back to NVM when the page is evicted from the DRAM buffer. In this example, the cache line in the second slot (cache line 2) must be written back. The header of a mini page fits within a cache line.
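The slot directory of a mini page can be sketched as follows. The type `MiniPage`, its 8-bit line identifiers (enough for lines 0 to 255), the 16-bit `dirty` mask, and the linear slot search are assumptions made for this illustration; promotion to a full page is only signalled to the caller, not implemented.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kMiniPageSlots = 16;  // a mini page holds up to 16 lines

// Hypothetical mini page directory: slots[i] is the logical id (0..255) of the
// NVM cache line stored in slot i; only the first `count` slots are in use.
struct MiniPage {
  std::array<std::uint8_t, kMiniPageSlots> slots{};
  std::uint16_t dirty = 0;  // bit i set => slot i must be written back to NVM
  std::size_t count = 0;    // number of occupied slots

  // Returns the slot holding cache line `line`, or -1 if it is not loaded.
  int Find(std::uint8_t line) const {
    for (std::size_t i = 0; i < count; ++i)
      if (slots[i] == line) return static_cast<int>(i);
    return -1;
  }

  // Records that `line` was loaded into the next free slot. Returns false on
  // overflow, which is where the caller would promote to a full page.
  bool Insert(std::uint8_t line) {
    if (Find(line) >= 0) return true;  // already resident
    if (count == kMiniPageSlots) return false;
    slots[count++] = line;
    return true;
  }

  void MarkDirty(std::size_t slot) { dirty |= static_cast<std::uint16_t>(1u << slot); }
};
```

Loading lines 0, 2, and 255 in that order yields slots [0, 2, 255], with cache line 2 in slot 1 and cache line 255 in slot 2; updating cache line 2 then sets only bit 1 of the dirty mask.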

2.2 Leveraging Optane DC PMMs

Optane DC PMMs can be configured in one of two modes: (1) memory mode and (2) app direct mode. In memory mode, the DRAM DIMMs serve as a hardware-managed cache (i.e., a direct-mapped, write-back L4 cache) for frequently accessed data residing on the slower PMMs. Memory mode enables legacy software to leverage PMMs as a high-capacity volatile main memory device without extensive modifications. However, it does not allow the DBMS to utilize the non-volatility property of PMMs. In app direct mode, the PMMs are directly exposed to the processor, and the DBMS directly manages both DRAM and NVM. In this paper, we configure the PMMs in app direct mode to ensure the durability of NVM-resident data.

Evaluation Platform. We evaluate Spitfire on a platform containing Optane DC PMMs. We create a namespace on top of the NVM regions in fsdax mode. This allows Spitfire to directly access NVM using the load/store interface. It maps a file residing on the NVM device using the following commands:

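    #include <fcntl.h>     // open
    #include <sys/mman.h>  // mmap
    #include <unistd.h>    // ftruncate

    int fd = open("/mnt/pmem0/file", O_RDWR | O_CREAT, 0666);  // create the file if absent
    int res = ftruncate(fd, SIZE);                              // size the file to SIZE bytes
    void* ptr = mmap(nullptr, SIZE, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    // Error checks (fd < 0, res != 0, ptr == MAP_FAILED) are omitted for brevity.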

Spitfire uses the resulting pointer to directly manage the NVM-resident buffer. To ensure the persistence of NVM-resident data, it first writes back the cache lines using the clwb instruction and then issues an sfence instruction so that the data reaches NVM.

The clwb instruction writes back the cache lines without evicting them. Since this instruction is non-blocking (weakly ordered), Spitfire must issue the sfence instruction to ensure that the cache write-back has completed and the data is persistent.
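The flush-then-fence sequence can be sketched as a helper that writes back every cache line overlapping a byte range. This is a minimal sketch, assuming an x86-64 target and GCC or Clang (for `<cpuid.h>` and the `target` attribute); the function names `persist`, `CpuHasClwb`, and `WriteBackLine` are hypothetical. `_mm_clwb`, `_mm_clflush`, and `_mm_sfence` are the standard intrinsics for these instructions. Because executing `clwb` on a CPU without it faults, the sketch checks CPUID and falls back to `clflush`, which also writes the line back but evicts it.

```cpp
#include <cpuid.h>      // __get_cpuid_count (GCC/Clang, x86 only)
#include <immintrin.h>  // _mm_clwb, _mm_clflush, _mm_sfence
#include <cstddef>
#include <cstdint>

constexpr std::uintptr_t kCacheLine = 64;

// CPUID leaf 7, subleaf 0: EBX bit 24 reports CLWB support.
static bool CpuHasClwb() {
  unsigned eax = 0, ebx = 0, ecx = 0, edx = 0;
  if (!__get_cpuid_count(7, 0, &eax, &ebx, &ecx, &edx)) return false;
  return ((ebx >> 24) & 1u) != 0;
}

// Compiled with CLWB enabled so the intrinsic is usable without -mclwb.
__attribute__((target("clwb")))
static void WriteBackLine(void* p) { _mm_clwb(p); }

// Writes back every cache line overlapping [addr, addr + len), then fences.
void persist(void* addr, std::size_t len) {
  static const bool has_clwb = CpuHasClwb();
  const auto begin = reinterpret_cast<std::uintptr_t>(addr);
  for (std::uintptr_t line = begin & ~(kCacheLine - 1); line < begin + len;
       line += kCacheLine) {
    void* p = reinterpret_cast<void*>(line);
    if (has_clwb) {
      WriteBackLine(p);  // write back, keep the line cached
    } else {
      _mm_clflush(p);    // fallback: write back and evict
    }
  }
  _mm_sfence();  // order the write-backs before any later stores
}
```

In Spitfire's setting, the range would lie inside the region returned by `mmap` above; on ordinary DRAM the helper still runs but provides no durability.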

We next describe how Spitfire leverages the additional data flow paths introduced by NVM.

3 NVM-Aware Data Migration

We propose a probabilistic technique for deciding where to move data. This multi-tier data migration policy works in tandem with the page replacement policy used in the DRAM and NVM buffers. Similar to Hymem, Spitfire uses the CLOCK page replacement policy for deciding what data should be evicted from a buffer [34].
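CLOCK approximates LRU with one reference bit per frame and a rotating hand. The following is a minimal, textbook-style sketch; the class name `ClockReplacer` is hypothetical, and it omits details a real buffer manager needs, such as skipping pinned frames and thread safety.

```cpp
#include <cstddef>
#include <vector>

// Minimal CLOCK (second-chance) victim selection over a fixed set of frames.
class ClockReplacer {
 public:
  // Assumes num_frames > 0.
  explicit ClockReplacer(std::size_t num_frames) : ref_(num_frames, false) {}

  // Called on every access to a frame: grants it a "second chance".
  void Touch(std::size_t frame) { ref_[frame] = true; }

  // Sweeps the hand, clearing reference bits, until it reaches a frame whose
  // bit is already clear; that frame is the victim.
  std::size_t PickVictim() {
    while (true) {
      const std::size_t frame = hand_;
      hand_ = (hand_ + 1) % ref_.size();
      if (!ref_[frame]) return frame;
      ref_[frame] = false;
    }
  }

 private:
  std::vector<bool> ref_;  // one reference bit per frame
  std::size_t hand_ = 0;   // current position of the clock hand
};
```

A frame that is touched between sweeps survives one pass of the hand, so recently used pages tend to stay buffered without the bookkeeping cost of a full LRU list.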

Once a page is selected for eviction or promotion, Spitfire uses
